From Wikipedia, the free encyclopedia

A multilayer perceptron (MLP) is a fully connected class of feedforward artificial neural network (ANN). The term MLP is used ambiguously, sometimes loosely to mean any feedforward ANN, sometimes strictly to refer to networks composed of multiple layers of perceptrons (with threshold activation)[citation needed]; see § Terminology.

Multilayer perceptrons are sometimes colloquially referred to as "vanilla" neural networks, especially when they have a single hidden layer.[1]

An MLP consists of at least three layers

of nodes: an input layer, a hidden layer and an output layer. Except

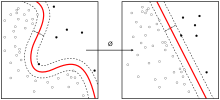

for the input nodes, each node is a neuron that uses a nonlinear activation function. MLP utilizes a chain rule[2] based supervised learning technique called backpropagation or reverse mode of automatic differentiation for training.[3][4][5][6][7] Its multiple layers and non-linear activation distinguish MLP from a linear perceptron. It can distinguish data that is not linearly separable.[8]
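The structure described above can be made concrete with a small sketch. The following pure-Python example is illustrative only and makes several assumptions that are not in the article: a 2-2-1 network, a sigmoid activation, a squared-error loss, and hand-picked (not learned) weights. The first part shows an MLP with one hidden layer computing XOR, a standard example of data that is not linearly separable. The second part applies the chain rule backward through the layers and compares one resulting gradient with a finite-difference estimate. All function and variable names are hypothetical.

```python
import math

def sigmoid(z):
    # Nonlinear activation (an assumed choice; other activations are possible).
    return 1.0 / (1.0 + math.exp(-z))

def forward(x, W1, b1, W2, b2):
    # Hidden layer: one sigmoid neuron per row of W1.
    h = [sigmoid(sum(w * xi for w, xi in zip(row, x)) + b)
         for row, b in zip(W1, b1)]
    # Output layer: a single sigmoid neuron over the hidden activations.
    y = sigmoid(sum(w * hi for w, hi in zip(W2, h)) + b2)
    return h, y

def backward(x, t, W2, h, y):
    # Chain rule for the assumed loss 0.5 * (y - t)**2, from output to input.
    delta_out = (y - t) * y * (1.0 - y)  # dL/dz at the output neuron
    grad_W2 = [delta_out * hj for hj in h]
    # Propagate the error signal back through each hidden neuron.
    delta_hidden = [delta_out * w * hj * (1.0 - hj) for w, hj in zip(W2, h)]
    grad_W1 = [[d * xi for xi in x] for d in delta_hidden]
    return grad_W1, grad_W2

# Part 1: hand-picked weights (an assumption, not trained) that compute XOR.
# Hidden neuron 0 behaves like OR, hidden neuron 1 like AND,
# and the output fires for "OR and not AND".
XOR_W1 = [[20.0, 20.0], [20.0, 20.0]]
XOR_b1 = [-10.0, -30.0]
XOR_W2 = [20.0, -20.0]
XOR_b2 = -10.0

def xor_mlp(a, b):
    _, y = forward([a, b], XOR_W1, XOR_b1, XOR_W2, XOR_b2)
    return round(y)

xor_table = [xor_mlp(a, b) for a in (0, 1) for b in (0, 1)]
print(xor_table)  # inputs (0,0), (0,1), (1,0), (1,1) -> [0, 1, 1, 0]

# Part 2: check one backpropagated gradient against a central difference.
W1 = [[0.5, -0.3], [0.8, 0.2]]  # arbitrary small illustrative weights
b1 = [0.1, -0.1]
W2 = [0.7, -0.4]
b2 = 0.05
x, t = [1.0, 0.0], 1.0          # one training example and its target

def loss(W1_):
    _, y_ = forward(x, W1_, b1, W2, b2)
    return 0.5 * (y_ - t) ** 2

h, y = forward(x, W1, b1, W2, b2)
grad_W1, grad_W2 = backward(x, t, W2, h, y)

eps = 1e-6
W1_plus = [row[:] for row in W1]
W1_plus[0][0] += eps
W1_minus = [row[:] for row in W1]
W1_minus[0][0] -= eps
numeric = (loss(W1_plus) - loss(W1_minus)) / (2 * eps)
gradient_error = abs(numeric - grad_W1[0][0])
print(gradient_error)  # expected to be very small if the chain rule is applied correctly
```

No single linear threshold unit can reproduce the XOR table, which is why the hidden layer and its nonlinear activation are needed here. In practice the weights would be learned by repeatedly applying gradients like those from `backward` rather than set by hand.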

https://en.wikipedia.org/wiki/Multilayer_perceptron
