Billy the Kid (1911 film)

| Billy the Kid | |

|---|---|

| Directed by | Laurence Trimble |

| Written by | Edward J. Montagne |

| Produced by | Vitagraph Company of America |

| Starring | Tefft Johnson, Edith Storey |

| Distributed by | The General Film Company, Incorporated |

| Release date | |

| Running time | One reel / 305 metres |

| Country | United States |

| Languages | Silent, English intertitles |

Billy the Kid is a 1911 American silent Western film directed by Laurence Trimble for Vitagraph Studios. It is very loosely based on the life of Billy the Kid. It is believed to be a lost film.

Plot

The following synopsis from The Moving Picture World is one of the few reviews of the 1911 short film:[2]

The duties of the sheriff as performed by "Uncle Billy" were not a matter of pleasure. His son-in-law is a member of the posse; he and "Uncle Billy" leave the house hurriedly to join their comrades in pursuit of the outlaws. Two hours later the son-in-law is carried back to his home, the victim of the gang. A little daughter is born to the widow and left an orphan. "Uncle Billy" is anxious that the child should be a boy, but through the secrecy of the Spanish servant, "Uncle Billy" never knew that "Billy, the Kid," was a girl. The sheriff brought her up as a young cowboy, although he noticed there was a certain timidity in the "Kid" that was not at all becoming a boy. When "Billy, the Kid," is sixteen years of age, her grandfather sends her to a town school. Lee Curtis, the foreman of "Uncle Billy's" ranch, was the "Kid's" pal; they were very fond of each other, and it was hard for Lee to part with his young associate, when he accompanied her to the cross roads where they meet the stage. The stage is held up by the outlaws. The "Kid" is taken captive and held as a ransom; they send a note to "Uncle Billy" saying: "If he will grant them immunity, they will restore the 'Kid.'" When the sheriff gets this message, he is furious, and the Spanish servant who well knows that the "Kid" is a girl, is almost frantic with apprehension lest the "Kid's" captors discover this fact. She tells "Uncle Billy" why she has kept him in ignorance of the truth. Lee Curtis overhears the servant's statement, and with the sheriff, rushes out to get the posse in action. "Billy the Kid" has managed to escape from the outlaws and meets the sheriff and his posse. Her grandfather loses no time in getting her back home and into female attire. This time "Uncle Billy" is going to send the "Kid" to a female seminary and he is going to take her there himself. She tells her grandfather she just wants to be "Billy the Kid" and have Lee for her life companion. "Uncle Billy" has nothing more to say, and it is not long before she changes her name to Mrs. Lee Curtis.

Cast

- Tefft Johnson as Lee Curtis

- Edith Storey as Billy the Kid

- Ralph Ince as Billy's Uncle

- Julia Swayne Gordon as Billy's Mother

- William R. Dunn

- Harry T. Morey

Production

- Directed by Laurence Trimble, this film was shot in a standard 35mm spherical 1.33:1 format.

- Edith Storey, who also played male characters in such films as Oliver Twist and A Florida Enchantment, portrays the "first 'cowgirl' film star" as Billy the Kid.[2]

Preservation status

This is presumed a lost film.

References

- "When Billy the Kid Was Billie the Kid". October 9, 2014. Archived from the original on October 16, 2016. Retrieved July 6, 2017.

https://en.wikipedia.org/wiki/Billy_the_Kid_(1911_film)

https://en.wikipedia.org/wiki/Category:1911_lost_films

| The Vote That Counted | |

|---|---|

| Produced by | Thanhouser Company |

| Distributed by | Motion Picture Distributing and Sales Company |

| Release date | January 13, 1911 |

| Country | United States |

| Languages | Silent film, English intertitles |

The Vote That Counted is a 1911 American silent short drama film produced by the Thanhouser Company. The film focuses on a state senator who disappears from a train and on detective Violet Gray, who investigates the case. Gray discovers that he was kidnapped because he opposed a powerful lobby. She frees the state senator in time for him to cast the deciding vote to defeat the lobby. Released on January 13, 1911, it was the second of four films in the "Violet Gray, Detective" series. The film received favorable reviews from Billboard and The New York Dramatic Mirror. The film is presumed lost.

Plot

The Moving Picture World synopsis states, "State Senator Jack Dare, one of the reform members of the legislature, starts to the state capitol to attend an important session of that body. That he took the midnight train from his home city is clearly proven, for his aged mother was a passenger on it, and besides the conductor and porter are certain that he retired for the night. In the morning, however, his berth is empty, although some of his garments are found there. The case puzzles the railroad officials and the police, and Violet Gray is given a chance to distinguish herself. She learns from the conductor and porter, who had happened to spend the night awake at opposite ends of the car, that the senator did not go by them. Consequently this leaves only the window as his means of egress, and she knows that he must have gone that way. Violet discovers that Dare is a hearty supporter of a bill that a powerful lobby is trying to defeat. The fight is so close that his is the deciding vote. Dare cannot be bribed, so his opponents spirited him away in a novel fashion. But the girl finds where he is hidden and brings him back, although he is much injured. He reaches his seat in time to cast the needed vote, and to astound and defeat the lobby."[1]

Cast and production

The only known credit in the cast is Julia M. Taylor as Violet Gray.[1] Film historian Q. David Bowers does not cite any scenario or directorial credits.[1] At this time the Thanhouser Company operated out of its studio in New Rochelle, New York. In October 1910, an article in The Moving Picture World described improvements to the studio, including the permanent installation of a lighting arrangement that had previously been experimental. The studio had amassed a collection of props for its productions, and dressing rooms had been constructed for the actors. The studio had also installed new equipment in the laboratories to improve the quality of the films.[2] By 1911, the Thanhouser Company was recognized as one of the leading Independent film makers, but Carl Laemmle's Independent Moving Picture Company (IMP) captured most of the publicity. The company maintained two production units, the first under Barry O'Neil and the second under Lucius J. Henderson and John Noble, an assistant director to Henderson. Though the company had at least two Bianchi cameras from the Columbia Phonograph Company, it is believed that imported cameras were also used. The Bianchi camera was unreliable and inferior to its competitors, and only with "rare fortune" could it shoot up to 200 feet of film before requiring repairs, but it was believed to be non-infringing.[3]

Release and reception

The single-reel drama, approximately 1,000 feet long, was released on January 13, 1911.[1] It was billed as the second film in the series, following the successful Love And Law. The two later releases, The Norwood Necklace and The Court's Decree, would conclude the "Violet Gray, Detective" series.[4] The series apparently made an impact, because the Lubin Manufacturing Company released a film under the title Violet Dare, Detective in June 1913.[4] The film received a positive review from The Billboard for its interesting plot, good acting and photography. The New York Dramatic Mirror found the film to be a good melodrama, but faulted the use of a woman detective, arguing that the part would have had more dignity and realism had the case been resolved by a man.[1] The film was shown in Pennsylvania and was still being advertised by theaters a year after its release.[5][6]

References

- "New Photoplay". The Gettysburg Times (Gettysburg, Pennsylvania). February 8, 1912. p. 1. Retrieved May 28, 2015.

https://en.wikipedia.org/wiki/The_Vote_That_Counted

| Temperate season | |

|---|---|

Forest covered in snow during winter | |

| Northern temperate zone | |

| Astronomical season | 22 December – 21 March |

| Meteorological season | 1 December – 28/29 February |

| Solar (Celtic) season | 1 November – 31 January |

| Southern temperate zone | |

| Astronomical season | 21 June – 23 September |

| Meteorological season | 1 June – 31 August |

| Solar (Celtic) season | 1 May – 31 July |

Winter is the coldest season of the year in polar and temperate climates. It occurs after autumn and before spring. The tilt of Earth's axis causes seasons; winter occurs when a hemisphere is oriented away from the Sun. Different cultures define different dates as the start of winter, and some use a definition based on weather.

When it is winter in the Northern Hemisphere, it is summer in the Southern Hemisphere, and vice versa. In many regions, winter brings snow and freezing temperatures. The moment of winter solstice is when the Sun's elevation with respect to the North or South Pole is at its most negative value; that is, the Sun is at its farthest below the horizon as measured from the pole. The day on which this occurs has the shortest day and the longest night, with day length increasing and night length decreasing as the season progresses after the solstice.

The earliest sunset and latest sunrise dates outside the polar regions differ from the date of the winter solstice and depend on latitude. They differ due to the variation in the solar day throughout the year caused by the Earth's elliptical orbit (see: earliest and latest sunrise and sunset).

Etymology

The English word winter comes from the Proto-Germanic noun *wintru-, whose origin is unclear. Several proposals exist, a commonly mentioned one connecting it to the Proto-Indo-European root *wed- 'water' or a nasal infix variant *wend-.[1]

Cause

The tilt of the Earth's axis relative to its orbital plane plays a large role in the formation of weather. The Earth is tilted at an angle of 23.44° to the plane of its orbit, causing different latitudes to directly face the Sun as the Earth moves through its orbit. This variation brings about seasons. When it is winter in the Northern Hemisphere, the Southern Hemisphere faces the Sun more directly and thus experiences warmer temperatures than the Northern Hemisphere. Conversely, winter in the Southern Hemisphere occurs when the Northern Hemisphere is tilted more toward the Sun. From the perspective of an observer on the Earth, the winter Sun has a lower maximum altitude in the sky than the summer Sun.

During winter in either hemisphere, the lower altitude of the Sun causes the sunlight to hit the Earth at an oblique angle. Thus a lower amount of solar radiation strikes the Earth per unit of surface area. Furthermore, the light must travel a longer distance through the atmosphere, allowing the atmosphere to dissipate more heat. Compared with these effects, the effect of the changes in the distance of the Earth from the Sun (due to the Earth's elliptical orbit) is negligible.
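The geometric part of this argument can be sketched numerically. The following is a minimal illustration, not a climate model: it assumes local solar noon on the solstices, a flat horizontal surface, and no atmosphere, so it captures only the oblique-angle effect and not the longer atmospheric path. The function names are hypothetical, and the latitude of 49°N is chosen only because it appears elsewhere in this article.

```python
import math

AXIAL_TILT_DEG = 23.44  # Earth's axial tilt, as given in the text


def noon_sun_altitude(latitude_deg, declination_deg):
    """Altitude of the Sun above the horizon at local solar noon, in degrees."""
    return 90.0 - abs(latitude_deg - declination_deg)


def relative_insolation(altitude_deg):
    """Direct-beam energy per unit of horizontal area, relative to an overhead Sun.

    Purely geometric (sine of the altitude); atmospheric losses are ignored.
    """
    if altitude_deg <= 0:
        return 0.0
    return math.sin(math.radians(altitude_deg))


latitude = 49.0  # degrees north (assumed example latitude)

# At the northern winter solstice the Sun's declination is -23.44 degrees;
# at the northern summer solstice it is +23.44 degrees.
winter_alt = noon_sun_altitude(latitude, -AXIAL_TILT_DEG)
summer_alt = noon_sun_altitude(latitude, AXIAL_TILT_DEG)

print(f"Winter noon altitude: {winter_alt:.2f} deg, "
      f"relative insolation {relative_insolation(winter_alt):.2f}")
print(f"Summer noon altitude: {summer_alt:.2f} deg, "
      f"relative insolation {relative_insolation(summer_alt):.2f}")
```

Under these assumptions, the noon Sun at 49°N delivers roughly a third as much energy per unit of ground area at the winter solstice as at the summer solstice, before any atmospheric effect is counted.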

The manifestation of the meteorological winter (freezing temperatures) in the northerly snow-prone latitudes is highly variable depending on elevation, position relative to marine winds and the amount of precipitation. For instance, within Canada (a country of cold winters), Winnipeg on the Great Plains, a long way from the ocean, has a January high of −11.3 °C (11.7 °F) and a low of −21.4 °C (−6.5 °F).[2]

In comparison, Vancouver on the west coast with a marine influence from moderating Pacific winds has a January low of 1.4 °C (34.5 °F) with days well above freezing at 6.9 °C (44.4 °F).[3] Both places are at 49°N latitude, and in the same western half of the continent. A similar but less extreme effect is found in Europe: in spite of their northerly latitude, the British Isles have not a single non-mountain weather station with a below-freezing mean January temperature.[4]

Meteorological reckoning

Meteorological reckoning is the method of measuring the winter season used by meteorologists based on "sensible weather patterns" for record keeping purposes,[5] so the start of meteorological winter varies with latitude.[6] Winter is often defined by meteorologists to be the three calendar months with the lowest average temperatures. This corresponds to the months of December, January and February in the Northern Hemisphere, and June, July and August in the Southern Hemisphere.

The coldest average temperatures of the season are typically experienced in January or February in the Northern Hemisphere and in June, July or August in the Southern Hemisphere. Nighttime predominates in the winter season, and in some regions winter has the highest rate of precipitation as well as prolonged dampness because of permanent snow cover or high precipitation rates coupled with low temperatures, precluding evaporation. Blizzards often develop and cause many transportation delays. Diamond dust, also known as ice needles or ice crystals, forms at temperatures approaching −40 °C (−40 °F) due to air with slightly higher moisture from above mixing with colder, surface-based air.[7] It is made of simple hexagonal ice crystals.[8]

The Swedish meteorological institute (SMHI) defines thermal winter as when the daily mean temperatures are below 0 °C (32 °F) for five consecutive days.[9] According to the SMHI, winter in Scandinavia is more pronounced when Atlantic low-pressure systems take more southerly and northerly routes, leaving the path open for high-pressure systems to come in and cold temperatures to occur. As a result, the coldest January on record in Stockholm, in 1987, was also the sunniest.[10][11]
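The five-day rule can be expressed as a short search over a series of daily mean temperatures. The sketch below is an illustration only: the function name is hypothetical, the temperature values are made up, and treating the first day of the qualifying run as the start of winter is an assumption, since the text does not say which day of the run counts as the onset.

```python
def thermal_winter_start(daily_means_c, run_length=5, threshold_c=0.0):
    """Return the index of the first day of the first run of `run_length`
    consecutive days whose mean temperature is below `threshold_c`.

    Returns None if no such run exists in the series.
    """
    run = 0
    for i, temp in enumerate(daily_means_c):
        if temp < threshold_c:
            run += 1
            if run == run_length:
                # Assumption: winter is dated from the first day of the run.
                return i - run_length + 1
        else:
            run = 0  # a day at or above the threshold breaks the run
    return None


# Made-up daily mean temperatures in degrees Celsius, for illustration only.
temps = [1.2, -0.5, -1.0, 0.3, -2.0, -3.1, -0.4, -1.5, -0.2, 2.0]
print(thermal_winter_start(temps))
```

In this sample the two cold days at the start do not count, because the run is broken by a day above freezing; the qualifying run begins at index 4.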

Accumulations of snow and ice are commonly associated with winter in the Northern Hemisphere, due to the large land masses there. In the Southern Hemisphere, the more maritime climate and the relative lack of land south of 40°S make the winters milder; thus, snow and ice are less common in inhabited regions of the Southern Hemisphere. In this region, snow occurs every year in elevated regions such as the Andes, the Great Dividing Range in Australia, and the mountains of New Zealand, and also occurs in the southerly Patagonia region of southern Argentina. Snow occurs year-round in Antarctica.

Astronomical and other calendar-based reckoning

In the Northern Hemisphere, some authorities define the period of winter based on astronomical fixed points (i.e. based solely on the position of the Earth in its orbit around the Sun), regardless of weather conditions. In one version of this definition, winter begins at the winter solstice and ends at the March equinox.[12] These dates are somewhat later than those used to define the beginning and end of the meteorological winter – usually considered to span the entirety of December, January, and February in the Northern Hemisphere and June, July, and August in the Southern.[12][13]

Astronomically, the winter solstice, being the day of the year which has fewest hours of daylight, ought to be in the middle of the season,[14][15] but seasonal lag means that the coldest period normally follows the solstice by a few weeks. In some cultures, the season is regarded as beginning at the solstice and ending on the following equinox[16][17] – in the Northern Hemisphere, depending on the year, this corresponds to the period between 20, 21 or 22 December and 19, 20 or 21 March.[12]

In an old Norwegian tradition winter begins on 14 October and ends on the last day of February.[18]

In many countries in the Southern Hemisphere, including Australia,[19][20] New Zealand,[21] and South Africa, winter begins on 1 June and ends on 31 August.

In Celtic nations such as Ireland (using the Irish calendar) and in Scandinavia, the winter solstice is traditionally considered as midwinter, with the winter season beginning 1 November, on All Hallows, or Samhain. Winter ends and spring begins on Imbolc, or Candlemas, which is 1 or 2 February.[citation needed] In Chinese astronomy and other East Asian calendars, winter is taken to commence on or around 7 November, on Lìdōng, and end with the arrival of spring on 3 or 4 February, on Lìchūn.[22] Late Roman Republic scholar Marcus Terentius Varro defined winter as lasting from the fourth day before the Ides of November (10 November) to the eighth day before the Ides of Februarius (6 February).[23]

This system of seasons is based on the length of days exclusively. The three-month period of the shortest days and weakest solar radiation occurs during November, December and January in the Northern Hemisphere and May, June and July in the Southern Hemisphere.

Many mainland European countries tended to recognize Martinmas or St. Martin's Day (11 November), as the first calendar day of winter.[24] The day falls at the midpoint between the old Julian equinox and solstice dates. Also, Valentine's Day (14 February) is recognized by some countries as heralding the first rites of spring, such as flowers blooming.[25]

The three-month period associated with the coldest average temperatures typically begins somewhere in late November or early December in the Northern Hemisphere and lasts through late February or early March. This "thermological winter" begins earlier than the solstice-delimited definition, but later than the daylight (Celtic or Chinese) definition. Depending on seasonal lag, this period will vary between climatic regions.

Since by almost all definitions valid for the Northern Hemisphere, winter spans 31 December and 1 January, the season is split across years, just like summer in the Southern Hemisphere. Each calendar year includes parts of two winters. This causes ambiguity in associating a winter with a particular year, e.g. "Winter 2018". Solutions for this problem include naming both years, e.g. "Winter 18/19", or settling on the year the season starts in or on the year most of its days belong to, which is the later year for most definitions.
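The "naming both years" convention is easy to apply mechanically. The following sketch assumes the Northern Hemisphere meteorological definition (December through February); the function name and label format are illustrative choices, not an established standard.

```python
from datetime import date


def meteorological_winter_label(d):
    """Label the Northern Hemisphere meteorological winter (Dec-Feb) containing `d`.

    Returns a two-year label such as "Winter 18/19", or None if `d` falls
    outside December, January and February.
    """
    if d.month == 12:
        start_year = d.year        # winter starts in this calendar year
    elif d.month in (1, 2):
        start_year = d.year - 1    # winter started in the previous December
    else:
        return None
    return f"Winter {start_year % 100:02d}/{(start_year + 1) % 100:02d}"


print(meteorological_winter_label(date(2018, 12, 25)))
print(meteorological_winter_label(date(2019, 2, 1)))
print(meteorological_winter_label(date(2019, 7, 1)))
```

Both the December 2018 and February 2019 dates map to the same label, which removes the ambiguity of a bare "Winter 2018".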

Ecological reckoning and activity

Ecological reckoning of winter differs from calendar-based by avoiding the use of fixed dates. It is one of six seasons recognized by most ecologists who customarily use the term hibernal for this period of the year (the other ecological seasons being prevernal, vernal, estival, serotinal, and autumnal).[26] The hibernal season coincides with the main period of biological dormancy each year whose dates vary according to local and regional climates in temperate zones of the Earth. The appearance of flowering plants like the crocus can mark the change from ecological winter to the prevernal season as early as late January in mild temperate climates.

To survive the harshness of winter, many animals have developed different behavioral and morphological adaptations for overwintering:

- Migration is a common effect of winter upon animals such as migratory birds. Some butterflies also migrate seasonally.

- Hibernation is a state of reduced metabolic activity during the winter. Some animals "sleep" during winter and only come out when the warm weather returns; e.g., gophers, frogs, snakes, and bats.

- Some animals store food for the winter and live on it instead of hibernating completely. This is the case for squirrels, beavers, skunks, badgers, and raccoons.

- Resistance is observed when an animal endures winter but changes in ways such as color and musculature. The color of the fur or plumage changes to white (in order to be confused with snow) and thus retains its cryptic coloration year-round. Examples are the rock ptarmigan, Arctic fox, weasel, white-tailed jackrabbit, and mountain hare.

- Some fur-coated mammals grow a heavier coat during the winter; this improves the heat-retention qualities of the fur. The coat is then shed following the winter season to allow better cooling. The heavier coat in winter made it a favorite season for trappers, who sought more profitable skins.

- Snow also affects the ways animals behave; many take advantage of the insulating properties of snow by burrowing in it. Mice and voles typically live under the snow layer.

Some annual plants never survive the winter. Other annual plants require winter cold to complete their life cycle; this is known as vernalization. As for perennials, many small ones profit from the insulating effects of snow by being buried in it. Larger plants, particularly deciduous trees, usually let their upper part go dormant, but their roots are still protected by the snow layer. Few plants bloom in the winter, one exception being the flowering plum, which flowers in time for Chinese New Year. The process by which plants become acclimated to cold weather is called hardening.

Examples

Exceptionally cold

- 1683–1684, "The Great Frost", when the Thames, hosting the River Thames frost fairs, was frozen all the way up to London Bridge and remained frozen for about two months. Ice was about 27 cm (11 in) thick in London and about 120 cm (47 in) thick in Somerset. The sea froze up to 2 miles (3.2 km) out around the coast of the southern North Sea, causing severe problems for shipping and preventing use of many harbours.

- 1739–1740, one of the most severe winters in the UK on record. The Thames remained frozen over for about 8 weeks. The Irish famine of 1740–1741 claimed the lives of at least 300,000 people.[27]

- 1816 was the Year Without a Summer in the Northern Hemisphere. The unusual coolness of the winter of 1815–1816 and of the following summer was primarily due to the eruption of Mount Tambora in Indonesia, in April 1815. There were secondary effects from an unknown eruption or eruptions around 1810, and several smaller eruptions around the world between 1812 and 1814. The cumulative effects were worldwide but were especially strong in the Eastern United States, Atlantic Canada, and Northern Europe. Frost formed in May in New England, killing many newly planted crops, and the summer never recovered. Snow fell in New York and Maine in June, and ice formed in lakes and rivers in July and August. In the UK, snow drifts remained on hills until late July, and the Thames froze in September. Agricultural crops failed and livestock died in much of the Northern Hemisphere, resulting in food shortages and the worst famine of the 19th century.

- 1887–1888, there were record cold temperatures in the Upper Midwest, heavy snowfalls worldwide, and amazing storms, including the Schoolhouse Blizzard of 1888 (in the Midwest in January), and the Great Blizzard of 1888 (in the Eastern US and Canada in March).

- In Europe, the winters of early 1947,[28] February 1956, 1962–1963, 1981–1982 and 2009–2010 were abnormally cold. The UK winter of 1946–1947 started out relatively normal, but became one of the snowiest UK winters to date, with nearly continuous snowfall from late January until March.

- In South America, the winter of 1975 was one of the strongest, with record snow occurring at 25°S in cities of low altitude, with the registration of −17 °C (1.4 °F) in some parts of southern Brazil.

- In the eastern United States and Canada, the winter of 2013–2014 and the second half of February 2015 were abnormally cold.

Historically significant

- 1310–1330, many severe winters and cold, wet summers in Europe – the first clear manifestation of the unpredictable weather of the Little Ice Age that lasted for several centuries (from about 1300 to 1900). The persistently cold, wet weather caused great hardship, was primarily responsible for the Great Famine of 1315–1317, and strongly contributed to the weakened immunity and malnutrition leading up to the Black Death (1348–1350).

- 1600–1602, extremely cold winters in Switzerland and Baltic region after eruption of Huaynaputina in Peru in 1600.

- 1607–1608, in North America, ice persisted on Lake Superior until June. Londoners held their first frost fair on the frozen-over River Thames.

- 1622, in Turkey, the Golden Horn and southern section of Bosphorus froze over.

- 1690s, extremely cold, snowy, severe winters. Ice surrounded Iceland for miles in every direction.

- 1779–1780, Scotland's coldest winter on record, and ice surrounded Iceland in every direction (like in the 1690s). In the United States, a record five-week cold spell bottomed out at −20 °F (−29 °C) at Hartford, Connecticut, and −16 °F (−27 °C) in New York City. Hudson River and New York's harbor froze over.

- 1783–1786, the Thames partially froze, and snow remained on the ground for months. In February 1784, the North Carolina was frozen in Chesapeake Bay.

- 1794–1795, severe winter, with the coldest January in the UK and lowest temperature ever recorded in London: −21 °C (−6 °F) on 25 January. The cold began on Christmas Eve and lasted until late March, with a few temporary warm-ups. The Severn and Thames froze, and frost fairs started up again. The French army tried to invade the Netherlands over its frozen rivers, while the Dutch fleet was stuck in its harbor. The winter had easterlies (from Siberia) as its dominant feature.

- 1813–1814, severe cold, last freeze-over of Thames, and last frost fair. (Removal of old London Bridge and changes to river's banks made freeze-overs less likely.)

- 1883–1888, colder temperatures worldwide, including an unbroken string of abnormally cold and brutal winters in the Upper Midwest, related to the explosion of Krakatoa in August 1883. There was snow recorded in the UK as early as October and as late as July during this time period.

- 1976–1977, one of the coldest winters in the US in decades.

- 1985, Arctic outbreak in US resulting from shift in polar vortex, with many cold temperature records broken.

- 2002–2003 was an unusually cold winter in the Northern and Eastern US.

- 2010–2011, persistent bitter cold in the entire eastern half of the US from December onward, with few or no mid-winter warm-ups, and with cool conditions continuing into spring. La Niña and negative Arctic oscillation were strong factors. Heavy and persistent precipitation contributed to almost constant snow cover in the Northeastern US which finally receded in early May.

- 2011 was one of the coldest on record in New Zealand with sea level snow falling in Wellington in July for the first time in 35 years and a much heavier snowstorm for 3 days in a row in August.

Effect on humans

Humans are sensitive to winter cold, which compromises the body's ability to maintain both core and surface heat of the body.[29] Slipping on icy surfaces is a common cause of winter injury.[30] Other cold injuries include:[31]

- Hypothermia – Shivering, leading to uncoordinated movements and death.

- Frostbite – Freezing of skin, leading to loss of feeling and damaged tissue.

- Trench foot – Numbness, leading to damaged tissue and gangrene.

- Chilblains – Capillary damage in digits can lead to more severe cold injuries.

Rates of influenza, COVID-19 and other respiratory diseases also increase during the winter.[32][33]

Mythology

In Persian culture, the winter solstice is called Yaldā (meaning: birth) and it has been celebrated for thousands of years. It is referred to as the eve of the birth of Mithra, who symbolised light, goodness and strength on earth.

In Greek mythology, Hades kidnapped Persephone to be his wife. Zeus ordered Hades to return her to Demeter, the goddess of the Earth and her mother. Hades tricked Persephone into eating the food of the dead, so Zeus decreed that she spend six months with Demeter and six months with Hades. During the time her daughter was with Hades, Demeter became depressed and caused winter.

In Welsh mythology, Gwyn ap Nudd abducted a maiden named Creiddylad. On May Day, her lover, Gwythyr ap Greidawl, fought Gwyn to win her back. The battle between them represented the contest between summer and winter.

References

On St. Martin's day (11 November) winter begins, summer takes its end, harvest is completed. ...This text is one of many that preserves vestiges of the ancient Indo-European system of two seasons, winter and summer.

- "Why cold winter weather makes it harder for the body to fight respiratory infections". Science. 15 December 2020. Retrieved 24 April 2022.

Further reading

- Rosenthal, Norman E. (1998). Winter Blues. New York: The Guilford Press. ISBN 1-57230-395-6.

External links

- Media related to Winter (category) at Wikimedia Commons

- Quotations related to Winter at Wikiquote

- Cold weather travel guide from Wikivoyage

- The dictionary definition of winter at Wiktionary

https://en.wikipedia.org/wiki/Winter

https://en.wikipedia.org/wiki/Post_hoc_ergo_propter_hoc

See also

- Apophenia – Tendency to perceive connections between unrelated things

- Affirming the consequent – Type of fallacious argument (logical fallacy)

- Association fallacy – Informal inductive fallacy

- Cargo cult – New religious movement

- Causal inference – Branch of statistics concerned with inferring causal relationships between variables

- Coincidence – Concurrence of events with no connection

- Confirmation bias – Bias confirming existing attitudes

- Correlation does not imply causation – Refutation of a logical fallacy

- Jumping to conclusions – Psychological term

- Magical thinking – Belief in the connection of unrelated events

- Superstition – Belief or behavior that is considered irrational or supernatural

- Surrogate endpoint

https://en.wikipedia.org/wiki/Post_hoc_ergo_propter_hoc

https://en.wikipedia.org/wiki/Questionable_cause

https://en.wikipedia.org/wiki/Informal_fallacy

A simple example is "The rooster crows immediately before sunrise; therefore the rooster causes the sun to rise."[3]

https://en.wikipedia.org/wiki/Post_hoc_ergo_propter_hoc

A false dilemma, also referred to as false dichotomy or false binary, is an informal fallacy based on a premise that erroneously limits what options are available. The source of the fallacy lies not in an invalid form of inference but in a false premise. This premise has the form of a disjunctive claim: it asserts that one among a number of alternatives must be true. This disjunction is problematic because it oversimplifies the choice by excluding viable alternatives, presenting the viewer with only two absolute choices when, in fact, there could be many.

https://en.wikipedia.org/wiki/False_dilemma

https://en.wikipedia.org/wiki/Destructive_dilemma

https://en.wikipedia.org/wiki/Defeasible_reasoning

https://en.wikipedia.org/wiki/Disjunctive_syllogism

https://en.wikipedia.org/wiki/Double_negative

https://en.wikipedia.org/wiki/Morphology_(linguistics)

https://en.wikipedia.org/wiki/Morphological_typology

https://en.wikipedia.org/wiki/Monarchy

https://en.wikipedia.org/wiki/no-contendre

https://en.wikipedia.org/wiki/Rule_of_inference

https://en.wikipedia.org/wiki/Biconditional_introduction

https://en.wikipedia.org/wiki/Propositional_calculus

https://en.wikipedia.org/wiki/Undecidable_problem

https://en.wikipedia.org/wiki/Paradox

https://en.wikipedia.org/wiki/Markov_chain_central_limit_theorem

https://en.wikipedia.org/wiki/Asymptotic_distribution#Central_limit_theorem

https://en.wikipedia.org/wiki/Limiting_density_of_discrete_points

https://en.wikipedia.org/wiki/Central_limit_theorem?wprov=srpw1_0

https://en.wikipedia.org/wiki/Independent_and_identically_distributed_random_variables

https://en.wikipedia.org/wiki/Cumulative_distribution_functions

https://en.wikipedia.org/wiki/Right-continuous

https://en.wikipedia.org/wiki/Semi-continuity

https://en.wikipedia.org/wiki/Hemicontinuity

https://en.wikipedia.org/wiki/Transfinite_number

https://en.wikipedia.org/wiki/Cardinal_number

https://en.wikipedia.org/wiki/Bijection

https://en.wikipedia.org/wiki/Surjective_function

https://en.wikipedia.org/wiki/Codomain

https://en.wikipedia.org/wiki/Image_(mathematics)

https://en.wikipedia.org/wiki/Binary_relation#Operations

https://en.wikipedia.org/wiki/Duality_(order_theory)

https://en.wikipedia.org/wiki/If_and_only_if

https://en.wikipedia.org/wiki/Necessity_and_sufficiency#Simultaneous_necessity_and_sufficiency

https://en.wikipedia.org/wiki/Extension_(semantics)

https://en.wikipedia.org/wiki/Extension_(predicate_logic)

https://en.wikipedia.org/wiki/Function_(mathematics)

https://en.wikipedia.org/wiki/Calculus

https://en.wikipedia.org/wiki/Limit_of_a_function#(%CE%B5,_%CE%B4)-definition_of_limit

https://en.wikipedia.org/wiki/Arity

In logic, mathematics, and computer science, arity (/ˈærɪti/) is the number of arguments or operands taken by a function, operation or relation. In mathematics, arity may also be called rank,[1][2] but this word can have many other meanings. In logic and philosophy, arity may also be called adicity and degree.[3][4] In linguistics, it is usually named valency.[5]
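As a rough illustration of arity in a programming setting, the sketch below counts the parameters a Python function declares, using the standard-library `inspect.signature`. The helper name `arity` and the three sample functions are hypothetical, and variadic parameters (`*args`, `**kwargs`) are deliberately excluded because they have no fixed count.

```python
import inspect

def arity(func):
    """Return the number of fixed (non-variadic) parameters declared by func."""
    variadic = (inspect.Parameter.VAR_POSITIONAL, inspect.Parameter.VAR_KEYWORD)
    params = inspect.signature(func).parameters.values()
    return sum(1 for p in params if p.kind not in variadic)

def negate(x):           # unary: one operand
    return -x

def add(x, y):           # binary: two operands
    return x + y

def clamp(x, lo, hi):    # ternary: three operands
    return max(lo, min(x, hi))

for f in (negate, add, clamp):
    print(f.__name__, arity(f))
```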

https://en.wikipedia.org/wiki/Arity

https://en.wikipedia.org/wiki/Hexadecimal

https://en.wikipedia.org/wiki/Hex_file

https://en.wikipedia.org/wiki/Intel_HEX#MT

https://en.wikipedia.org/wiki/Programmable_ROM

https://en.wikipedia.org/wiki/Punched_tape

https://en.wikipedia.org/wiki/Teleprinter

https://en.wikipedia.org/wiki/Telex

https://en.wikipedia.org/wiki/Digital_signal

https://en.wikipedia.org/wiki/Mark_and_space

https://en.wikipedia.org/wiki/Modem

https://en.wikipedia.org/wiki/Subcarrier

https://en.wikipedia.org/wiki/Radio_broadcasting

https://en.wikipedia.org/wiki/Simulcast

https://en.wikipedia.org/wiki/Mass_media

Simulcast (a portmanteau of simultaneous broadcast) is the broadcasting of programmes/programs or events across more than one resolution, bitrate or medium, or more than one service on the same medium, at exactly the same time (that is, simultaneously). For example, Absolute Radio is simulcast on both AM and on satellite radio.[1][2] Likewise, the BBC's Prom concerts were formerly simulcast on both BBC Radio 3 and BBC Television. Another application is the transmission of the original-language soundtrack of movies or TV series over local or Internet radio, with the television broadcast having been dubbed into a local language.

https://en.wikipedia.org/wiki/Simulcast

https://en.wikipedia.org/wiki/Frequency-shift_keying#Audio_FSK

Speeds

Modems are frequently classified by the maximum amount of data they can send in a given unit of time, usually expressed in bits per second (symbol bit/s, sometimes abbreviated "bps") or rarely in bytes per second (symbol B/s). Modern broadband modem speeds are typically expressed in megabits per second (Mbit/s).

Historically, modems were often classified by their symbol rate, measured in baud. The baud unit denotes symbols per second, or the number of times per second the modem sends a new signal. For example, the ITU V.21 standard used audio frequency-shift keying with two possible frequencies, corresponding to two distinct symbols (or one bit per symbol), to carry 300 bits per second using 300 baud. By contrast, the original ITU V.22 standard, which could transmit and receive four distinct symbols (two bits per symbol), transmitted 1,200 bits by sending 600 symbols per second (600 baud) using phase-shift keying.
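The relationship between symbol rate and bit rate in the two examples above can be sketched as follows. The function name `bit_rate` is hypothetical, and the calculation assumes every symbol carries the same whole number of bits, which holds for the V.21 and V.22 figures quoted here.

```python
import math

def bit_rate(baud, distinct_symbols):
    """Gross bit rate in bit/s for a symbol rate (baud) and an alphabet size."""
    bits_per_symbol = math.log2(distinct_symbols)
    return baud * bits_per_symbol

v21 = bit_rate(300, 2)   # two FSK tones, one bit per symbol
v22 = bit_rate(600, 4)   # four PSK symbols, two bits per symbol
print(v21, v22)
```

Doubling the bits per symbol lets V.22 quadruple the bit rate of V.21 while only doubling the symbol rate.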

https://en.wikipedia.org/wiki/Modem

Overall history

Modems grew out of the need to connect teleprinters over ordinary phone lines instead of the more expensive leased lines which had previously been used for current loop–based teleprinters and automated telegraphs. The earliest devices that satisfy the definition of a modem may be the multiplexers used by news wire services in the 1920s.[1]

In 1941, the Allies developed a voice encryption system called SIGSALY, which used a vocoder to digitize speech, encrypted the speech with a one-time pad, and encoded the digital data as tones using frequency-shift keying. This was also a digital modulation technique, making this an early modem.[2]

Commercial modems largely did not become available until the late 1950s, when the rapid development of computer technology created demand for a method of connecting computers together over long distances, resulting in the Bell Company and then other businesses producing an increasing number of computer modems for use over both switched and leased telephone lines.

Later developments would produce modems that operated over cable television lines, power lines, and various radio technologies, as well as modems that achieved much higher speeds over telephone lines.

https://en.wikipedia.org/wiki/Modem

In electrical signalling an analog current loop is used where a device must be monitored or controlled remotely over a pair of conductors. Only one current level can be present at any time.

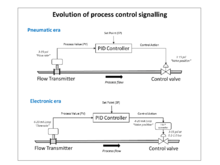

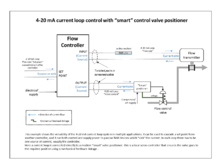

A major application of current loops is the industry de facto standard 4–20 mA current loop for process control applications, where they are extensively used to carry signals from process instrumentation to PID controllers, SCADA systems, and programmable logic controllers (PLCs). They are also used to transmit controller outputs to the modulating field devices such as control valves. These loops have the advantages of simplicity and noise immunity, and have a large international user and equipment supplier base. Some 4–20 mA field devices can be powered by the current loop itself, removing the need for separate power supplies, and the "smart" HART protocol uses the loop for communications between field devices and controllers. Various automation protocols may replace analog current loops, but 4–20 mA is still a principal industrial standard.

https://en.wikipedia.org/wiki/Current_loop

A control valve is a valve used to control fluid flow by varying the size of the flow passage as directed by a signal from a controller.[1] This enables the direct control of flow rate and the consequential control of process quantities such as pressure, temperature, and liquid level.

In automatic control terminology, a control valve is termed a "final control element".

https://en.wikipedia.org/wiki/Control_valve

The opening or closing of automatic control valves is usually done by electrical, hydraulic or pneumatic actuators. Normally with a modulating valve, which can be set to any position between fully open and fully closed, valve positioners are used to ensure the valve attains the desired degree of opening.[2]

Air-actuated valves are commonly used because of their simplicity, as they only require a compressed air supply, whereas electrically operated valves require additional cabling and switch gear, and hydraulically actuated valves require high-pressure supply and return lines for the hydraulic fluid.

Control signals were traditionally pneumatic, based on a pressure range of 3–15 psi (0.2–1.0 bar); more commonly now they are electrical, using 4–20 mA in industry or 0–10 V in HVAC systems. Electrical control now often includes a "smart" communication signal superimposed on the 4–20 mA control current, such that the health and verification of the valve position can be signalled back to the controller. HART, Foundation Fieldbus, and Profibus are the most common protocols.

An automatic control valve consists of three main parts, each of which exists in several types and designs:

- Valve actuator – which moves the valve's modulating element, such as ball or butterfly.

- Valve positioner – which ensures the valve has reached the desired degree of opening. This overcomes the problems of friction and wear.

- Valve body – in which the modulating element, a plug, globe, ball or butterfly, is contained.

Control action

Taking the example of an air-operated valve, there are two control actions possible:

- "Air or current to open" – The flow restriction decreases with increased control signal value.

- "Air or current to close" – The flow restriction increases with increased control signal value.

There can also be failure to safety modes:

- "Air or control signal failure to close" – On failure of compressed air to the actuator, the valve closes under spring pressure or by backup power.

- "Air or control signal failure to open" – On failure of compressed air to the actuator, the valve opens under spring pressure or by backup power.

The modes of failure operation are requirements of the failure to safety process control specification of the plant. In the case of cooling water it may be to fail open, and in the case of delivering a chemical it may be to fail closed.
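The two control actions and the two failure modes described above can be combined in a small model. This is only a sketch: the function and mode names are hypothetical, and the valve is assumed to respond linearly to the control signal, which real valve characteristics often do not.

```python
def opening_under_control(signal_pct, action):
    """Valve opening (0-100 %) for a 0-100 % control signal (linear response assumed)."""
    signal_pct = max(0.0, min(100.0, signal_pct))
    if action == "air_to_open":      # restriction decreases as the signal rises
        return signal_pct
    if action == "air_to_close":     # restriction increases as the signal rises
        return 100.0 - signal_pct
    raise ValueError(f"unknown control action: {action}")

def opening_on_failure(fail_mode):
    """Position the spring (or backup power) drives the valve to when air is lost."""
    if fail_mode == "fail_closed":
        return 0.0
    if fail_mode == "fail_open":
        return 100.0
    raise ValueError(f"unknown failure mode: {fail_mode}")

def valve_opening(signal_pct, action, fail_mode, supply_ok=True):
    """Opening under normal control, or the fail-safe position if the supply fails."""
    if not supply_ok:
        return opening_on_failure(fail_mode)
    return opening_under_control(signal_pct, action)

# Cooling-water valve from the text: fails open when the air supply is lost.
print(valve_opening(30.0, "air_to_close", "fail_open"))
print(valve_opening(30.0, "air_to_close", "fail_open", supply_ok=False))
```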

Valve positioners

The fundamental function of a positioner is to deliver pressurized air to the valve actuator, such that the position of the valve stem or shaft corresponds to the set point from the control system. Positioners are typically used when a valve requires throttling action. A positioner requires position feedback from the valve stem or shaft and delivers pneumatic pressure to the actuator to open and close the valve. The positioner must be mounted on or near the control valve assembly. There are three main categories of positioners, depending on the type of control signal, the diagnostic capability, and the communication protocol: pneumatic, analog, and digital.[3]

Pneumatic positioners

Processing units may use pneumatic pressure signaling as the control set point to the control valves. Pressure is typically modulated between 20.7 and 103 kPa (3 to 15 psig) to move the valve from 0 to 100% position. In a common pneumatic positioner, the position of the valve stem or shaft is compared with the position of a bellows that receives the pneumatic control signal. When the input signal increases, the bellows expands and moves a beam. The beam pivots about an input axis, which moves a flapper closer to the nozzle. The nozzle pressure increases, which increases the output pressure to the actuator through a pneumatic amplifier relay. The increased output pressure to the actuator causes the valve stem to move.

Stem movement is fed back to the beam by means of a cam. As the cam rotates, the beam pivots about the feedback axis to move the flapper slightly away from the nozzle. The nozzle pressure decreases and reduces the output pressure to the actuator. Stem movement continues, backing the flapper away from the nozzle until equilibrium is reached. When the input signal decreases, the bellows contracts (aided by an internal range spring) and the beam pivots about the input axis to move the flapper away from the nozzle. Nozzle pressure decreases and the relay permits the release of diaphragm casing pressure to the atmosphere, which allows the actuator stem to move upward.

Through the cam, stem movement is fed back to the beam to reposition the flapper closer to the nozzle. When equilibrium conditions are obtained, stem movement stops and the flapper is positioned to prevent any further decrease in actuator pressure.[3]

Analog positioners

The second type of positioner is an analog I/P positioner. Most modern processing units use a 4 to 20 mA DC signal to modulate the control valves. This introduces electronics into the positioner design and requires that the positioner convert the electronic current signal into a pneumatic pressure signal (current-to-pneumatic or I/P). In a typical analog I/P positioner, the converter receives a DC input signal and provides a proportional pneumatic output signal through a nozzle/flapper arrangement. The pneumatic output signal provides the input signal to the pneumatic positioner. Otherwise, the design is the same as the pneumatic positioner.[3]
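The signal ranges quoted for pneumatic and analog positioners (3 to 15 psig and 4 to 20 mA for 0 to 100% travel) suggest a simple scaling, sketched below. The function names are hypothetical, and an ideal, perfectly linear I/P converter with a 3 to 15 psig output is assumed.

```python
def to_percent(value, low, high):
    """Linearly map a live-zero signal in [low, high] onto 0-100 %."""
    return (value - low) / (high - low) * 100.0

def current_to_percent(milliamps):
    """4-20 mA control current to percent of valve travel."""
    return to_percent(milliamps, 4.0, 20.0)

def pressure_to_percent(psig):
    """3-15 psig pneumatic signal to percent of valve travel."""
    return to_percent(psig, 3.0, 15.0)

def current_to_pressure(milliamps):
    """Ideal I/P conversion: 4-20 mA in, proportional 3-15 psig out (assumed)."""
    return 3.0 + (milliamps - 4.0) / 16.0 * 12.0

mid_current = 12.0
print(current_to_percent(mid_current))    # mid-scale current
print(current_to_pressure(mid_current))   # equivalent pneumatic signal
```

Both ranges start above zero, so a reading of 0 mA or 0 psig can be told apart from a legitimate 0% command.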

Digital positioners

While pneumatic positioners and analog I/P positioners provide basic valve position control, digital valve controllers add another dimension to positioner capabilities. This type of positioner is a microprocessor-based instrument. The microprocessor enables diagnostics and two-way communication to simplify setup and troubleshooting.

In a typical digital valve controller, the control signal is read by the microprocessor, processed by a digital algorithm, and converted into a drive current signal to the I/P converter. The microprocessor performs the position control algorithm rather than a mechanical beam, cam, and flapper assembly. As the control signal increases, the drive signal to the I/P converter increases, increasing the output pressure from the I/P converter. This pressure is routed to a pneumatic amplifier relay and provides two output pressures to the actuator. With increasing control signal, one output pressure always increases and the other output pressure decreases.

Double-acting actuators use both outputs, whereas single-acting actuators use only one output. The changing output pressure causes the actuator stem or shaft to move. Valve position is fed back to the microprocessor. The stem continues to move until the correct position is attained. At this point, the microprocessor stabilizes the drive signal to the I/P converter until equilibrium is obtained.
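The feedback behaviour described above (the stem moves until the fed-back position matches the set point) can be illustrated with a toy simulation. This is not the algorithm of any real digital valve controller: the proportional update, the `gain` and `tolerance` values, and the function name are all assumptions chosen only to show the loop settling.

```python
def simulate_positioner(setpoint_pct, start_pct=0.0, gain=0.4,
                        tolerance=0.05, max_steps=200):
    """Return the stem positions visited while the loop drives toward setpoint_pct."""
    position = start_pct
    history = [position]
    for _ in range(max_steps):
        error = setpoint_pct - position        # compare set point with position feedback
        if abs(error) <= tolerance:            # equilibrium: hold the drive signal
            break
        position += gain * error               # actuator moves part of the way each cycle
        position = max(0.0, min(100.0, position))
        history.append(position)
    return history

trace = simulate_positioner(60.0)
print(f"settled at {trace[-1]:.2f} % after {len(trace) - 1} updates")
```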

In addition to the function of controlling the position of the valve, a digital valve controller has two additional capabilities: diagnostics and two-way digital communication.[3]

Widely used communication protocols include HART, FOUNDATION fieldbus, and PROFIBUS.

Advantages of placing a smart positioner on a control valve:

- Automatic calibration and configuration of positioner.

- Real time diagnostics.

- Reduced cost of loop commissioning, including installation and calibration.

- Use of diagnostics to maintain loop performance levels.

- Improved process control accuracy that reduces process variability.

Types of control valve

Control valves are classified by attributes and features.

Based on the pressure drop profile

- High recovery valve: These valves typically regain most of the static pressure drop from the inlet to vena contracta at the outlet. They are characterised by a lower recovery coefficient. Examples: butterfly valve, ball valve, plug valve, gate valve

- Low recovery valve: These valves typically regain little of the static pressure drop from the inlet to vena contracta at the outlet. They are characterised by a higher recovery coefficient. Examples: globe valve, angle valve

Based on the movement profile of the controlling element

- Sliding stem: The valve stem / plug moves in a linear, or straight line motion. Examples: Globe valve,[4] angle valve, wedge type gate valve

- Rotary valve: The valve disc rotates. Examples: Butterfly valve, ball valve

Based on the functionality

- Control valve: Controls flow parameters proportional to an input signal received from the central control system. Examples: Globe valve, angle valve, ball valve

- Shut-off / On-off valve: These valves are either completely open or closed. Examples: Gate valve, ball valve, globe valve, angle valve, pinch valve, diaphragm valve

- Check valve: Allows flow only in a single direction

- Steam conditioning valve: Regulates the pressure and temperature of inlet media to required parameters at outlet. Examples: Turbine bypass valve, process steam letdown station

- Spring-loaded safety valve: Closed by the force of a spring, which retracts to open when the inlet pressure is equal to the spring force

Based on the actuating medium

- Manual valve: Actuated by hand wheel

- Pneumatic valve: Actuated using a compressible medium like air, hydrocarbon, or nitrogen, with a spring diaphragm, piston cylinder or piston-spring type actuator

- Hydraulic valve: Actuated by a non-compressible medium such as water or oil

- Electric valve: Actuated by an electric motor

A wide variety of valve types and control operations exist. However, there are two main forms of action: the sliding stem and the rotary.

The most common and versatile types of control valves are sliding-stem globe, V-notch ball, butterfly and angle types. Their popularity derives from rugged construction and the many options available that make them suitable for a variety of process applications.[5] Control valve bodies may be categorized as below:[3]

List of common types of control valve

- Sliding stem

  - Globe valve – Flow control device

  - Angle body valve

  - Angle seat piston valve

  - Axial flow valve

- Rotary

  - Butterfly valve – Flow control device

  - Ball valve – Flow control device

- Other

  - Pinch valve – Pressure-controlled shut-off valve for applications in industrial automation

  - Diaphragm valve – Flow control device

See also

- Check valve – Flow control device

- Control engineering – Engineering discipline that deals with control systems

- Control system – System that manages the behavior of other systems

- Distributed control system – Computerized control systems with distributed decision-making

- Fieldbus Foundation

- Flow control valve – Valve that regulates the flow or pressure of a fluid

- Highway Addressable Remote Transducer Protocol, also known as HART Protocol – hybrid analog+digital industrial automation protocol; can communicate over legacy 4–20 mA analog instrumentation current loops, sharing the pair of wires used by the analog only host systems

- Instrumentation – Measuring instruments which monitor and control a process

- PID controller – Control loop feedback mechanism

- Process control – Discipline that uses industrial control to achieve a production level of consistency

- Profibus – Communications protocol

- SCADA, also known as Supervisory control and data acquisition system – Control system architecture for supervision of machines and processes

External links

- Process Instrumentation (Lecture 8): Control valves Article from a University of South Australia website.

- Control Valve Sizing Calculator Control Valve Sizing Calculator to determine Cv for a valve.

https://en.wikipedia.org/wiki/Control_valve

Denying the antecedent, sometimes also called inverse error or fallacy of the inverse, is a formal fallacy of inferring the inverse from the original statement. It is committed by reasoning in the form:[1]

- If P, then Q.

- Therefore, if not P, then not Q.

which may also be phrased as

(P implies Q)

(therefore, not-P implies not-Q)[1]

Arguments of this form are invalid. Informally, this means that arguments of this form do not give good reason to establish their conclusions, even if their premises are true. In this example, a valid conclusion would be: ~P or Q.

The name denying the antecedent derives from the premise "not P", which denies the "if" clause of the conditional premise.

One way to demonstrate the invalidity of this argument form is with an example that has true premises but an obviously false conclusion. For example:

- If you are a ski instructor, then you have a job.

- You are not a ski instructor.

- Therefore, you have no job.[1]
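The invalidity can also be read off a truth table. The table below is a standard two-variable truth table written out for this form; the third row, where P is false and Q is true, corresponds to the person who is not a ski instructor but still has a job. Both premises ($P \to Q$ and $\neg P$) are true there, while the conclusion $\neg Q$ is false.

```latex
\begin{array}{cc|cc|c}
P & Q & P \to Q & \neg P & \neg Q \\ \hline
T & T & T & F & F \\
T & F & F & F & T \\
F & T & T & T & F \\
F & F & T & T & T
\end{array}
```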

That argument is intentionally bad, but arguments of the same form can sometimes seem superficially convincing, as in the following example offered by Alan Turing in the article "Computing Machinery and Intelligence":

If each man had a definite set of rules of conduct by which he regulated his life he would be no better than a machine. But there are no such rules, so men cannot be machines.[2]

However, men could still be machines that do not follow a definite set of rules. Thus, this argument (as Turing intends) is invalid.

It is possible that an argument that denies the antecedent could be valid if the argument instantiates some other valid form. For example, if the claims P and Q express the same proposition, then the argument would be trivially valid, as it would beg the question. In everyday discourse, however, such cases are rare, typically only occurring when the "if-then" premise is actually an "if and only if" claim (i.e., a biconditional/equality). The following argument is not valid, but would be if the first premise were "If I can veto Congress, then I am the US President." The argument would then be an instance of modus tollens, and thus valid.

- If I am President of the United States, then I can veto Congress.

- I am not President.

- Therefore, I cannot veto Congress.

References

- Turing, Alan (October 1950), "Computing Machinery and Intelligence", Mind, LIX (236): 433–460, doi:10.1093/mind/LIX.236.433, ISSN 0026-4423

https://en.wikipedia.org/wiki/Denying_the_antecedent

In propositional logic, affirming the consequent, sometimes called converse error, fallacy of the converse, or confusion of necessity and sufficiency, is a formal fallacy of taking a true conditional statement (e.g., "if the lamp were broken, then the room would be dark"), and invalidly inferring its converse ("the room is dark, so the lamp must be broken"), even though that statement may not be true. This arises when the consequent ("the room would be dark") has other possible antecedents (for example, "the lamp is in working order, but is switched off" or "there is no lamp in the room").

Converse errors are common in everyday thinking and communication and can result from, among other causes, communication issues, misconceptions about logic, and failure to consider other causes.

The opposite statement, denying the consequent, is called modus tollens and is a valid form of argument.[1]

Formal description

Affirming the consequent is the action of taking a true statement $P \to Q$ and invalidly concluding its converse $Q \to P$.

Affirming the consequent can also result from overgeneralizing the experience of many statements having true converses. If P and Q are "equivalent" statements, i.e. $P \leftrightarrow Q$, it is possible to infer P under the condition Q.

Of the possible forms of "mixed hypothetical syllogisms," two are valid and two are invalid. Affirming the antecedent (modus ponens) and denying the consequent (modus tollens) are valid. Affirming the consequent and denying the antecedent are invalid (see table).[6]
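The claim that two of the four forms are valid and two are invalid can be checked by brute force over all truth assignments. The sketch below is one way to do that; the helper names (`implies`, `is_valid`, `FORMS`) are hypothetical, and an argument is treated as valid exactly when no assignment makes every premise true and the conclusion false.

```python
from itertools import product

def implies(a, b):
    """Material conditional: false only when a is true and b is false."""
    return (not a) or b

def is_valid(premises, conclusion):
    """Valid iff no assignment makes all premises true and the conclusion false."""
    for p, q in product([True, False], repeat=2):
        if all(prem(p, q) for prem in premises) and not conclusion(p, q):
            return False
    return True

conditional = lambda p, q: implies(p, q)   # shared first premise: if P, then Q

# Each entry: (list of premises, conclusion)
FORMS = {
    "affirming the antecedent (modus ponens)": ([conditional, lambda p, q: p], lambda p, q: q),
    "denying the consequent (modus tollens)": ([conditional, lambda p, q: not q], lambda p, q: not p),
    "affirming the consequent": ([conditional, lambda p, q: q], lambda p, q: p),
    "denying the antecedent": ([conditional, lambda p, q: not p], lambda p, q: not q),
}

results = {name: is_valid(prems, concl) for name, (prems, concl) in FORMS.items()}
for name, valid in results.items():
    print(f"{name}: {'valid' if valid else 'invalid'}")
```

Both invalid forms fail on the same assignment, P false and Q true, which is the situation where the consequent holds for some reason other than the antecedent.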

Additional examples

Example 1

One way to demonstrate the invalidity of this argument form is with a counterexample with true premises but an obviously false conclusion. For example:

- If someone lives in San Diego, then they live in California.

- Joe lives in California.

- Therefore, Joe lives in San Diego.

There are many places to live in California other than San Diego; however, one can affirm with certainty that "if someone does not live in California" (non-Q), then "this person does not live in San Diego" (non-P). This is the contrapositive of the first statement, and it must be true if and only if the original statement is true.

Example 2

Here is another useful, obviously fallacious example.

- If an animal is a dog, then it has four legs.

- My cat has four legs.

- Therefore, my cat is a dog.

Here, it is immediately intuitive that any number of other antecedents ("If an animal is a deer...", "If an animal is an elephant...", "If an animal is a moose...", etc.) can give rise to the consequent ("then it has four legs"), and that it is preposterous to suppose that having four legs must imply that the animal is a dog and nothing else. This is useful as a teaching example since most people can immediately recognize that the conclusion reached must be wrong (intuitively, a cat cannot be a dog), and that the method by which it was reached must therefore be fallacious.

Example 3

Arguments of the same form can sometimes seem superficially convincing, as in the following example:

- If Brian had been thrown off the top of the Eiffel Tower, then he would be dead.

- Brian is dead.

- Therefore, Brian was thrown off the top of the Eiffel Tower.

Being thrown off the top of the Eiffel Tower is not the only cause of death, since there exist numerous different causes of death.

Example 4

In Catch-22,[7] the chaplain is interrogated for supposedly being "Washington Irving"/"Irving Washington", who has been blocking out large portions of soldiers' letters home. The colonel has found such a letter, but with the Chaplain's name signed.

- "You can read, though, can't you?" the colonel persevered sarcastically. "The author signed his name."

- "That's my name there."

- "Then you wrote it. Q.E.D."

P in this case is 'The chaplain signs his own name', and Q 'The chaplain's name is written'. The chaplain's name may be written, but he did not necessarily write it, as the colonel falsely concludes.[7]

Example 5

When teaching the scientific method, the following example is used to illustrate why, via the fallacy of affirming the consequent, no scientific theory is ever proven true but rather simply fails to be falsified.

- If this theory is correct, we will observe X.

- We observe X.

- Therefore, this theory is correct.

Concluding or assuming that a theory is true because a prediction it makes is observed is invalid. This is one of the challenges of applying the scientific method, though it is rarely brought up in academic contexts, as it is unlikely to be of consequence to the results of a study. Much more common is questioning the validity of the theory, the validity of expecting the theory to have predicted the observation, and/or the validity of the observation itself.

References

- Heller, Joseph (1994). Catch-22. Vintage. pp. 438, 8. ISBN 0-09-947731-9.

https://en.wikipedia.org/wiki/Affirming_the_consequent

Appeal to consequences, also known as argumentum ad consequentiam (Latin for "argument to the consequence"), is an argument that concludes a hypothesis (typically a belief) to be either true or false based on whether the premise leads to desirable or undesirable consequences.[1] This is based on an appeal to emotion and is a type of informal fallacy, since the desirability of a premise's consequence does not make the premise true. Moreover, in categorizing consequences as either desirable or undesirable, such arguments inherently contain subjective points of view.

In logic, appeal to consequences refers only to arguments that assert a conclusion's truth value (true or false) without regard to the formal preservation of the truth from the premises; appeal to consequences does not refer to arguments that address a premise's consequential desirability (good or bad, or right or wrong) instead of its truth value. Therefore, an argument based on appeal to consequences is valid in long-term decision making (which discusses possibilities that do not exist yet in the present) and abstract ethics, and in fact such arguments are the cornerstones of many moral theories, particularly related to consequentialism. Appeal to consequences also should not be confused with argumentum ad baculum, which is the bringing up of 'artificial' consequences (i.e. punishments) to argue that an action is wrong.

https://en.wikipedia.org/wiki/Appeal_to_consequences

Ad hominem (Latin for 'to the person'), short for argumentum ad hominem, is a term that refers to several types of arguments, most of which are fallacious. Typically this term refers to a rhetorical strategy where the speaker attacks the character, motive, or some other attribute of the person making an argument rather than attacking the substance of the argument itself. This avoids genuine debate by creating a diversion to some irrelevant but often highly charged issue. The most common form of this fallacy is "A makes a claim x, B asserts that A holds a property that is unwelcome, and hence B concludes that argument x is wrong".

The valid types of ad hominem arguments are generally only encountered in specialized philosophical usage. These typically refer to the dialectical strategy of using the target's own beliefs and arguments against them, while not agreeing with the validity of those beliefs and arguments. Ad hominem arguments were first studied in ancient Greece; John Locke revived the examination of ad hominem arguments in the 17th century. Many contemporary politicians routinely use ad hominem attacks, which can be encapsulated in a derogatory nickname for a political opponent.

https://en.wikipedia.org/wiki/Ad_hominem

Wishful thinking is the formation of beliefs based on what might be pleasing to imagine, rather than on evidence, rationality, or reality. It is a product of resolving conflicts between belief and desire.[1] Methodologies to examine wishful thinking are diverse. Various disciplines and schools of thought examine related mechanisms such as neural circuitry, human cognition and emotion, types of bias, procrastination, motivation, optimism, attention and environment. This concept has been examined as a fallacy. It is related to the concept of wishful seeing.

https://en.wikipedia.org/wiki/Wishful_thinking

The fallacy of the undistributed middle (Latin: non distributio medii) is a formal fallacy that is committed when the middle term in a categorical syllogism is not distributed in either the minor premise or the major premise. It is thus a syllogistic fallacy.

https://en.wikipedia.org/wiki/Fallacy_of_the_undistributed_middle

| Type | Deductive argument form; rule of inference |
|---|---|
| Field | Propositional logic |
| Statement | P implies Q. P is true. Therefore Q must also be true. |
| Symbolic statement | $P \to Q,\ P \vdash Q$ |

In propositional logic, modus ponens (/ˈmoʊdəs ˈpoʊnɛnz/; MP), also known as modus ponendo ponens (Latin for "method of putting by placing"),[1] implication elimination, or affirming the antecedent,[2] is a deductive argument form and rule of inference.[3] It can be summarized as "P implies Q. P is true. Therefore Q must also be true."

Modus ponens is closely related to another valid form of argument, modus tollens. Both have apparently similar but invalid forms such as affirming the consequent, denying the antecedent, and evidence of absence. Constructive dilemma is the disjunctive version of modus ponens. Hypothetical syllogism is closely related to modus ponens and sometimes thought of as "double modus ponens."

The history of modus ponens goes back to antiquity.[4] The first to explicitly describe the argument form modus ponens was Theophrastus.[5] It, along with modus tollens, is one of the standard patterns of inference that can be applied to derive chains of conclusions that lead to the desired goal.

https://en.wikipedia.org/wiki/Modus_ponens

| Type | Deductive argument form; rule of inference |
|---|---|
| Field | Propositional logic |
| Statement | P implies Q. Q is false. Therefore P must also be false. |
| Symbolic statement | $P \to Q,\ \neg Q \vdash \neg P$ [1] |

In propositional logic, modus tollens (/ˈmoʊdəs ˈtɒlɛnz/) (MT), also known as modus tollendo tollens (Latin for "method of removing by taking away")[2] and denying the consequent,[3] is a deductive argument form and a rule of inference. Modus tollens takes the form of "If P, then Q. Not Q. Therefore, not P." It is an application of the general truth that if a statement is true, then so is its contrapositive. The form shows that inference from P implies Q to the negation of Q implies the negation of P is a valid argument.
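Both rules are short enough to state and prove in a proof assistant. The Lean 4 sketch below is illustrative only; the theorem names are hypothetical. It shows that modus ponens is just function application, and that modus tollens follows by assuming P, applying the conditional to obtain Q, and contradicting the premise that Q is false.

```lean
-- Modus ponens: from P → Q and P, conclude Q.
theorem modus_ponens (P Q : Prop) (hpq : P → Q) (hp : P) : Q :=
  hpq hp

-- Modus tollens: from P → Q and ¬Q, conclude ¬P.
-- Assume P; then hpq gives Q, which contradicts hnq.
theorem modus_tollens (P Q : Prop) (hpq : P → Q) (hnq : ¬Q) : ¬P :=
  fun hp => hnq (hpq hp)
```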

The history of the inference rule modus tollens goes back to antiquity.[4] The first to explicitly describe the argument form modus tollens was Theophrastus.[5]

Modus tollens is closely related to modus ponens. There are two similar, but invalid, forms of argument: affirming the consequent and denying the antecedent. See also contraposition and proof by contrapositive.
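The difference between modus tollens and the two invalid forms can be checked mechanically with a truth table. The sketch below assumes the classical (material) reading of "if P, then Q"; the helper names `implies` and `is_valid` are chosen for this example only.

```python
from itertools import product


def implies(p, q):
    """Material conditional: false only when p is true and q is false."""
    return (not p) or q


def is_valid(premises, conclusion, n_vars=2):
    """An argument form is valid if no truth assignment makes every
    premise true while making the conclusion false."""
    for values in product([True, False], repeat=n_vars):
        if all(prem(*values) for prem in premises) and not conclusion(*values):
            return False
    return True


# If P, then Q. Not Q. Therefore, not P.
modus_tollens = is_valid([implies, lambda p, q: not q], lambda p, q: not p)
# If P, then Q. Q. Therefore, P.
affirming_consequent = is_valid([implies, lambda p, q: q], lambda p, q: p)
# If P, then Q. Not P. Therefore, not Q.
denying_antecedent = is_valid([implies, lambda p, q: not p], lambda p, q: not q)

print(modus_tollens, affirming_consequent, denying_antecedent)
# prints: True False False
```

Both invalid forms fail on the same assignment, P false and Q true, where the conditional and the second premise hold but the conclusion does not.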

https://en.wikipedia.org/wiki/Modus_tollens

In logic and mathematics, contraposition refers to the inference of going from a conditional statement into its logically equivalent contrapositive, and an associated proof method known as proof by contraposition. The contrapositive of a statement has its antecedent and consequent inverted and flipped.

Conditional statement

If P, Then Q. — If not Q, Then not P. "If it is raining, then I wear my coat" — "If I don't wear my coat, then it isn't raining."

The law of contraposition says that a conditional statement is true if, and only if, its contrapositive is true.[2]
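In classical logic, the law can be derived in a few steps by rewriting the conditional as a disjunction. The derivation below is a sketch that assumes the material reading of the conditional and uses P and Q as in the example above.

```latex
\begin{align*}
P \to Q &\equiv \neg P \lor Q            && \text{(material conditional)} \\
        &\equiv Q \lor \neg P            && \text{(commutativity of } \lor \text{)} \\
        &\equiv \neg(\neg Q) \lor \neg P && \text{(double negation)} \\
        &\equiv \neg Q \to \neg P        && \text{(material conditional)}
\end{align*}
```

The double-negation step is the one that depends on classical logic.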

The contrapositive (¬Q → ¬P) can be compared with three other statements:

- Inversion (the inverse), ¬P → ¬Q

- "If it is not raining, then I don't wear my coat." Unlike the contrapositive, the inverse's truth value is not at all dependent on whether or not the original proposition was true, as evidenced here.

- Conversion (the converse), Q → P

- "If I wear my coat, then it is raining." The converse is actually the contrapositive of the inverse, and so always has the same truth value as the inverse (which as stated earlier does not always share the same truth value as that of the original proposition).

- Negation (the logical complement), ¬(P → Q)

- "It is not the case that if it is raining then I wear my coat.", or equivalently, "Sometimes, when it is raining, I don't wear my coat." If the negation is true, then the original proposition (and by extension the contrapositive) is false.

Note that if

https://en.wikipedia.org/wiki/Contraposition

Evidence of absence is evidence of any kind that suggests something is missing or that it does not exist. What counts as evidence of absence has been a subject of debate between scientists and philosophers. It is often distinguished from absence of evidence.

Overview

Evidence of absence and absence of evidence are similar but distinct concepts. This distinction is captured in the aphorism "Absence of evidence is not evidence of absence." This antimetabole is often attributed to Martin Rees or Carl Sagan, but a version appeared as early as 1888 in a writing by William Wright.[1] In Sagan's words, the expression is a critique of the "impatience with ambiguity" exhibited by appeals to ignorance.[2] Despite what the expression may seem to imply, a lack of evidence can be informative. For example, when testing a new drug, if no harmful effects are observed then this suggests that the drug is safe.[3] This is because, if the drug were harmful, evidence of that fact can be expected to turn up during testing. The expectation of evidence makes its absence significant.[4]

As the previous example shows, the difference between evidence that something is absent (e.g., an observation that suggests there were no dragons here today) and simple absence of evidence (e.g., no careful research has been done) can be nuanced. Indeed, scientists will often debate whether an experiment's result should be considered evidence of absence, or if it remains absence of evidence. The debate regards whether the experiment would have detected the phenomenon of interest if it were there.[5]
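The role of expected evidence can be made concrete with Bayes' theorem. The following sketch is an illustration under stated assumptions, not a method taken from the cited sources: the function name, its parameters, and the numbers are hypothetical. It computes the probability that a phenomenon is present after a search has found nothing.

```python
def posterior_after_no_evidence(prior, p_detect_if_present, p_false_alarm=0.0):
    """Probability that the phenomenon is present after a search finds nothing.

    prior: P(present) before the search.
    p_detect_if_present: P(evidence found | present), the search's power.
    p_false_alarm: P(evidence found | absent).
    """
    p_nothing_if_present = 1.0 - p_detect_if_present
    p_nothing_if_absent = 1.0 - p_false_alarm
    numerator = p_nothing_if_present * prior
    denominator = numerator + p_nothing_if_absent * (1.0 - prior)
    return numerator / denominator


# A sensitive search: finding nothing lowers the probability sharply.
careful = posterior_after_no_evidence(prior=0.5, p_detect_if_present=0.95)
# A search that could not have detected anything: the prior is unchanged.
careless = posterior_after_no_evidence(prior=0.5, p_detect_if_present=0.0)
```

With these assumed numbers, `careful` is about 0.048 while `careless` stays at 0.5: the null result counts as evidence of absence only to the extent that the search would have found the phenomenon had it been there.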

An argument from ignorance that appeals to "absence of evidence" is not necessarily fallacious; an example is the argument that a potentially life-saving new drug poses no long-term health risk unless proved otherwise. Such an argument can be a persuasive way to shift the burden of proof in an argument or debate. If, on the other hand, it relied imprudently on the lack of research to promote its conclusion, it would be considered an informal fallacy.[6]

Science

In carefully designed scientific experiments, even null results can be evidence of absence.[7] For instance, a hypothesis may be falsified if a vital predicted observation is not found empirically. At this point, the underlying hypothesis may be rejected or revised and sometimes, additional ad hoc explanations may even be warranted. Whether the scientific community will accept a null result as evidence of absence depends on many factors, including the detection power of the applied methods, the confidence of the inference, as well as confirmation bias within the community.

Law

In many legal systems, a lack of evidence for a defendant's guilt is sufficient for acquittal. This is because of the presumption of innocence and the belief that it is worse to convict an innocent person than to let a guilty one go free.[3]

On the other hand, the absence of evidence in the defendant's favor (e.g. an alibi) can make their guilt seem more likely. A jury can be persuaded to convict because of "evidentiary lacunae", or a lack of evidence they expect to hear.[8]

Proving a negative

A negative claim is a colloquialism for an affirmative claim that asserts the non-existence or exclusion of something.[9] Proofs of negative claims are common in mathematics. Such claims include Euclid's theorem that there is no largest prime number, and Arrow's impossibility theorem. There can be multiple claims within a debate. Nevertheless, whoever makes a claim usually carries the burden of proof, regardless of whether the content of the claim is positive or negative.

A negative claim may or may not exist as a counterpoint to a previous claim. A proof of impossibility or an evidence of absence argument are typical methods to fulfill the burden of proof for a negative claim.[9][10]

Philosopher Steven Hales argues that one can typically be as confident in the negation of an affirmation as in the affirmation itself. Hales says that if one's standards of certainty lead one to say "there is never 'proof' of non-existence", then one must also say that "there is never 'proof' of existence either". Hales argues that there are many cases where we may be able to prove something does not exist with as much certainty as proving something does exist.[9]: 109–112 A similar position is taken by philosopher Stephen Law, who argues that rather than focusing on the existence of "proof", a better question is whether there is any reasonable doubt about existence or non-existence.[11]

References

Appeal to ignorance—the claim that whatever has not been proved false must be true, and vice versa (e.g., There is no compelling evidence that UFOs are not visiting the Earth; therefore UFOs exist—and there is intelligent life elsewhere in the Universe. Or: There may be seventy kazillion other worlds, but not one is known to have the moral advancement of the Earth, so we're still central to the Universe.) This impatience with ambiguity can be criticized in the phrase: absence of evidence is not evidence of absence.

[Advocates] of the presumption of atheism... insist that it is precisely the absence of evidence for theism that justifies their claim that God does not exist. The problem with such a position is captured neatly by the aphorism, beloved of forensic scientists, that "absence of evidence is not evidence of absence." The absence of evidence is evidence of absence only in case in which, were the postulated entity to exist, we should expect to have more evidence of its existence than we do.

- "You Can Prove a Negative | Psychology Today". www.psychologytoday.com. Retrieved 2022-11-28.

In classical logic, a hypothetical syllogism is a valid argument form, a syllogism with a conditional statement for one or both of its premises.

An example in English:

- If I do not wake up, then I cannot go to work.

- If I cannot go to work, then I will not get paid.

- Therefore, if I do not wake up, then I will not get paid.

The term originated with Theophrastus.[2]

A pure hypothetical syllogism is a syllogism in which both premises and the conclusion are conditionals. For the argument to be valid, the antecedent of one premise must match the consequent of the other. The conclusion then takes the remaining antecedent as its antecedent and the remaining consequent as its consequent.

- If p, then q.

- If q, then r.

- ∴ If p, then r.
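The chaining step can be sketched as a small procedure that keeps combining conditionals until nothing new follows. The function name `chain_conditionals` and the representation of each conditional as an `(antecedent, consequent)` pair are assumptions made for this illustration; the premises reuse the English example above.

```python
def chain_conditionals(conditionals):
    """Close a set of conditionals under hypothetical syllogism.

    conditionals: iterable of (antecedent, consequent) pairs.
    Returns every conditional derivable by repeatedly combining
    "if p then q" with "if q then r" into "if p then r".
    """
    derived = set(conditionals)
    changed = True
    while changed:
        changed = False
        for p, q in list(derived):
            for q2, r in list(derived):
                # The consequent of one premise must match the
                # antecedent of the other.
                if q == q2 and (p, r) not in derived:
                    derived.add((p, r))
                    changed = True
    return derived


premises = {
    ("do not wake up", "cannot go to work"),
    ("cannot go to work", "will not get paid"),
}
conclusions = chain_conditionals(premises) - premises
```

Here `conclusions` contains the single new conditional `("do not wake up", "will not get paid")`.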

A mixed hypothetical syllogism consists of one conditional statement and one statement that either affirms or denies the antecedent or the consequent of that conditional. Such a mixed hypothetical syllogism therefore has four possible forms, of which two are valid and two are invalid (see table). A valid mixed hypothetical syllogism either affirms the antecedent (modus ponens) or denies the consequent (modus tollens).[1]

https://en.wikipedia.org/wiki/Hypothetical_syllogism

Confusion of the inverse, also called the conditional probability fallacy or the inverse fallacy, is a logical fallacy in which a conditional probability is equated with its inverse; that is, given two events A and B, the probability of A happening given that B has happened is assumed to be about the same as the probability of B given A, when there is actually no evidence for this assumption.[1][2] More formally, P(A|B) is assumed to be approximately equal to P(B|A).
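A small numerical example shows how far apart the two conditional probabilities can be. The counts below are hypothetical, chosen only for illustration: A stands for "has a condition" and B for "tests positive" in an imagined population of 10,000 people.

```python
def conditional(joint_count, given_count):
    """P(X | Y) computed from counts: count(X and Y) / count(Y)."""
    return joint_count / given_count


# Hypothetical counts (not taken from any study).
population = 10_000
sick = 100                    # A: has the condition
sick_and_positive = 95        # A and B
healthy_and_positive = 495    # not A, but B
positive = sick_and_positive + healthy_and_positive  # B

base_rate = sick / population                                      # P(A)
p_positive_given_sick = conditional(sick_and_positive, sick)       # P(B|A)
p_sick_given_positive = conditional(sick_and_positive, positive)   # P(A|B)
```

With these numbers P(B|A) is 0.95 but P(A|B) is only about 0.16, because the condition is rare and most positive results come from the much larger healthy group.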

https://en.wikipedia.org/wiki/Confusion_of_the_inverse

A prior probability distribution of an uncertain quantity, often simply called the prior, is its assumed probability distribution before some evidence is taken into account. For example, the prior could be the probability distribution representing the relative proportions of voters who will vote for a particular politician in a future election. The unknown quantity may be a parameter of the model or a latent variable rather than an observable variable.
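As a rough illustration of the voter example, the sketch below represents the prior over a politician's share of support as a Beta distribution and updates it with poll data. The choice of a Beta prior, its parameters, the poll numbers, and the function names are all assumptions made for this example; the text above does not prescribe any particular distribution.

```python
def beta_mean(alpha, beta):
    """Mean of a Beta(alpha, beta) distribution."""
    return alpha / (alpha + beta)


def update_beta(alpha, beta, supporters, others):
    """Conjugate update of a Beta prior with binomial poll data."""
    return alpha + supporters, beta + others


# Assumed prior: Beta(2, 2), a mild belief that support is near 50%.
prior = (2, 2)
# Hypothetical poll: 30 of 40 respondents support the politician.
posterior = update_beta(*prior, supporters=30, others=10)

prior_mean = beta_mean(*prior)
posterior_mean = beta_mean(*posterior)
```

Before the poll the expected share of support is 0.5; after it, the posterior is Beta(32, 12) with mean 32/44, or about 0.73.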

https://en.wikipedia.org/wiki/Prior_probability

https://en.wikipedia.org/wiki/Conditional_probability

Abductive reasoning (also called abduction,[1] abductive inference,[1] or retroduction[2]) is a form of logical inference that seeks the simplest and most likely conclusion from a set of observations. It was formulated and advanced by American philosopher Charles Sanders Peirce beginning in the last third of the 19th century.

Abductive reasoning, unlike deductive reasoning, yields a plausible conclusion but does not definitively verify it. Abductive conclusions do not eliminate uncertainty or doubt, which is expressed in retreat terms such as "best available" or "most likely". One can understand abductive reasoning as inference to the best explanation,[3] although not all usages of the terms abduction and inference to the best explanation are equivalent.[4][5]

In the 1990s, as computing power grew, the fields of law,[6] computer science, and artificial intelligence research[7] spurred renewed interest in the subject of abduction.[8] Diagnostic expert systems frequently employ abduction.[9]

https://en.wikipedia.org/wiki/Abductive_reasoning

Backward chaining (or backward reasoning) is an inference method described colloquially as working backward from the goal. It is used in automated theorem provers, inference engines, proof assistants, and other artificial intelligence applications.[1]

In game theory, researchers apply it to (simpler) subgames to find a solution to the game, in a process called backward induction. In chess, it is called retrograde analysis, and it is used to generate table bases for chess endgames for computer chess.

Backward chaining is implemented in logic programming by SLD resolution. Both backward chaining and forward chaining are based on the modus ponens inference rule. Backward chaining is one of the two most commonly used methods of reasoning with inference rules and logical implications; the other is forward chaining. Backward chaining systems, such as Prolog, usually employ a depth-first search strategy.[2]
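A minimal backward chainer over propositional rules can be sketched as follows. This is a simplified illustration, not SLD resolution as used by Prolog: it has no variables or unification, and the function name, the rule format, and the example rule base are assumptions made for the example.

```python
def backward_chain(goal, facts, rules, _visiting=None):
    """Return True if `goal` can be proved from `facts` using `rules`.

    facts: set of atoms known to be true.
    rules: list of (body, head) pairs, read as "if every atom in body
           holds, then head holds".
    The search is depth-first: each subgoal is explored fully before
    the next alternative is tried.
    """
    if _visiting is None:
        _visiting = set()
    if goal in facts:
        return True
    if goal in _visiting:
        # Already trying to prove this goal higher up: avoid looping.
        return False
    _visiting.add(goal)
    try:
        for body, head in rules:
            # Work backward: a rule is relevant only if it concludes the goal.
            if head == goal and all(
                backward_chain(sub, facts, rules, _visiting) for sub in body
            ):
                return True
        return False
    finally:
        _visiting.discard(goal)


# Hypothetical rule base, chosen only for illustration.
rules = [
    (("croaks", "eats_flies"), "frog"),
    (("chirps", "sings"), "canary"),
    (("frog",), "green"),
    (("canary",), "yellow"),
]
facts = {"croaks", "eats_flies"}

is_green = backward_chain("green", facts, rules)
is_yellow = backward_chain("yellow", facts, rules)
```

To prove `green`, the search looks for a rule whose head is `green`, which turns the goal into the subgoal `frog`, which in turn reduces to the two known facts. The goal `yellow` fails because `chirps` is neither a fact nor the head of any rule.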

https://en.wikipedia.org/wiki/Backward_chaining

In chess and other similar games, the endgame (or end game or ending) is the stage of the game when few pieces are left on the board.