Bunsen detected previously unknown blue spectral emission lines in samples of mineral water from Dürkheim.

https://en.wikipedia.org/wiki/Robert_Bunsen

Cadet's fuming liquid was a red-brown oily liquid prepared in 1760 by the French chemist Louis Claude Cadet de Gassicourt (1731-1799) by the reaction of potassium acetate with arsenic trioxide.[1] It consisted mostly of dicacodyl (((CH3)2As)2) and cacodyl oxide (((CH3)2As)2O). The reaction for forming the oxide was something like:

- 4 KCH3COO + As2O3 → ((CH3)2As)2O + 2 K2CO3 + 2 CO2
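The stoichiometry above can be checked mechanically. The sketch below is a hypothetical helper, not taken from any source. It counts atoms in formulas written the way the text writes them, including the nested parentheses of ((CH3)2As)2O, and compares both sides of the equation. It assumes plain element symbols and integer subscripts only; charges, hydrates and leading coefficients inside a formula string are not handled.

```python
import re
from collections import Counter

# Element symbols, parentheses, or integer subscripts.
TOKEN = re.compile(r"[A-Z][a-z]?|\(|\)|\d+")


def count_atoms(formula: str) -> Counter:
    """Count atoms in a formula such as "((CH3)2As)2O"; nested groups are allowed."""
    tokens = TOKEN.findall(formula)
    stack = [Counter()]  # one Counter per open parenthesis level
    i = 0
    while i < len(tokens):
        tok = tokens[i]
        i += 1
        if tok == "(":
            stack.append(Counter())
            continue
        # An element symbol or ")" may be followed by an integer multiplier.
        mult = 1
        if i < len(tokens) and tokens[i].isdigit():
            mult = int(tokens[i])
            i += 1
        if tok == ")":
            group = stack.pop()
            for element, n in group.items():
                stack[-1][element] += n * mult
        else:
            stack[-1][tok] += mult
    return stack[0]


def side_total(side: list[tuple[int, str]]) -> Counter:
    """Sum atoms over (coefficient, formula) pairs on one side of an equation."""
    total = Counter()
    for coeff, formula in side:
        for element, n in count_atoms(formula).items():
            total[element] += coeff * n
    return total


# 4 KCH3COO + As2O3 -> ((CH3)2As)2O + 2 K2CO3 + 2 CO2
reactants = [(4, "KCH3COO"), (1, "As2O3")]
products = [(1, "((CH3)2As)2O"), (2, "K2CO3"), (2, "CO2")]

if __name__ == "__main__":
    print("balanced:", side_total(reactants) == side_total(products))
```

Running it prints `balanced: True`. Both sides contain 4 K, 8 C, 12 H, 11 O and 2 As.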

These were the first organometallic substances prepared; as such, Cadet has been regarded as the father of organometallic chemistry.[2]

This liquid gives off white fumes when exposed to air and burns with a pale flame, producing carbon dioxide, water, and arsenic trioxide. It has a nauseating and very disagreeable garlic-like odor.

Around 1840, Robert Bunsen did much work on characterizing the compounds in the liquid and its derivatives. His research was important in the development of radical theory.

https://en.wikipedia.org/wiki/Cadet%27s_fuming_liquid

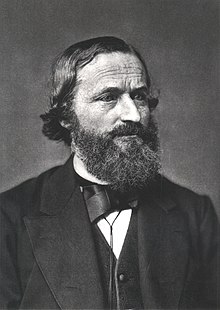

Gustav Robert Kirchhoff (German: [ˈkɪʁçhɔf]; 12 March 1824 – 17 October 1887) was a German physicist who contributed to the fundamental understanding of electrical circuits, spectroscopy, and the emission of black-body radiation by heated objects.[1][2]

He coined the term black-body radiation in 1862. Several different sets of concepts are named "Kirchhoff's laws" after him, concerning such diverse subjects as black-body radiation and spectroscopy, electrical circuits, and thermochemistry. The Bunsen–Kirchhoff Award for spectroscopy is named after him and his colleague, Robert Bunsen.

| Gustav Kirchhoff | |
|---|---|
| Born | Gustav Robert Kirchhoff, 12 March 1824 |
| Died | 17 October 1887 (aged 63) |
| Nationality | Prussian (1824–1871), German (1871–1887) |
| Alma mater | University of Königsberg |
| Known for | Kirchhoff's circuit laws, Kirchhoff's law of thermal radiation, Kirchhoff's laws of spectroscopy, Kirchhoff's law of thermochemistry |
| Awards | Rumford Medal (1862), Davy Medal (1877), Matteucci Medal (1877), Janssen Medal (1887) |
| Scientific career | |
| Fields | Physics, Chemistry |
| Institutions | University of Berlin, University of Breslau, University of Heidelberg |
| Doctoral advisor | Franz Ernst Neumann[citation needed] |
| Notable students | Loránd Eötvös, Edward Nichols, Gabriel Lippmann[citation needed], Dmitri Ivanovich Mendeleev, Max Planck, Jules Piccard, Max Noether, Heike Kamerlingh Onnes, Ernst Schröder |

https://en.wikipedia.org/wiki/Gustav_Kirchhoff

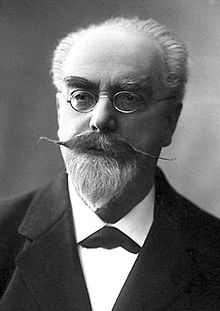

Jonas Ferdinand Gabriel Lippmann[2] (16 August 1845 – 13 July 1921) was a Franco-Luxembourgish physicist and inventor, and Nobel laureate in physics for his method of reproducing colours photographically based on the phenomenon of interference.[3]

| Gabriel Lippmann | |
|---|---|
| Born | Jonas Ferdinand Gabriel Lippmann, 16 August 1845, Bonnevoie/Bouneweg, Luxembourg (since 1921 part of Luxembourg City) |
| Died | 13 July 1921 (aged 75), SS France, Atlantic Ocean |
| Nationality | France |
| Alma mater | École Normale Supérieure |
| Known for | Lippmann colour photography, Integral 3-D photography, Lippmann electrometer |
| Awards | Nobel Prize for Physics (1908) |
| Scientific career | |
| Fields | Physics |
| Institutions | Sorbonne |
| Doctoral advisor | Gustav Kirchhoff |
| Other academic advisors | Hermann von Helmholtz[1] |
| Doctoral students | Marie Curie |

Career

Lippmann made several important contributions to various branches of physics over the years.

The capillary electrometer

One of Lippmann's early discoveries was the relationship between electrical and capillary phenomena. This allowed him to develop a sensitive capillary electrometer, subsequently known as the Lippmann electrometer, which was used in the first ECG machine. In a paper delivered to the Philosophical Society of Glasgow on 17 January 1883, John G. M'Kendrick described the apparatus as follows:

- Lippmann's electrometer consists of a tube of ordinary glass, 1 metre long and 7 millimetres in diameter, open at both ends, and kept in the vertical position by a stout support. The lower end is drawn into a capillary point, until the diameter of the capillary is .005 of a millimetre. The tube is filled with mercury, and the capillary point is immersed in dilute sulphuric acid (1 to 6 of water in volume), and in the bottom of the vessel containing the acid there is a little more mercury. A platinum wire is put into connection with the mercury in each tube, and, finally, arrangements are made by which the capillary point can be seen with a microscope magnifying 250 diameters. Such an instrument is very sensitive; and Lippmann states that it is possible to determine a difference of potential so small as that of one 10,080th of a Daniell. It is thus a very delicate means of observing and (as it can be graduated by a compensation-method) of measuring minute electromotive forces.[9][10]
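The quoted sensitivity can be converted from a fraction of a Daniell cell into volts. This is a rough sketch. It assumes a Daniell cell EMF of about 1.1 V, which is a typical value that the quotation does not give and that varies with electrolyte concentration.

```python
# Assumed EMF of a Daniell cell in volts (typical value; not stated in the quote).
DANIELL_EMF_V = 1.1

# Fraction of a Daniell that Lippmann reportedly could detect, per the quote above.
DETECTABLE_FRACTION = 1 / 10_080


def smallest_detectable_volts(fraction: float = DETECTABLE_FRACTION,
                              daniell_emf_v: float = DANIELL_EMF_V) -> float:
    """Convert a fraction of a Daniell cell's EMF into volts."""
    return fraction * daniell_emf_v


if __name__ == "__main__":
    print(f"about {smallest_detectable_volts() * 1e3:.2f} mV")
```

Under this assumption the result is about 0.11 mV, roughly a tenth of a millivolt.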

Lippmann's PhD thesis, presented to the Sorbonne on 24 July 1875, was on electrocapillarity.[11]

Piezoelectricity

In 1881, Lippmann predicted the converse piezoelectric effect, in which an applied electric field produces a mechanical deformation of a piezoelectric crystal.[12]

Colour photography

Above all, Lippmann is remembered as the inventor of a method for reproducing colours by photography, based on the interference phenomenon, which earned him the Nobel Prize in Physics for 1908.[7]

In 1886, Lippmann's interest turned to a method of fixing the colours of the solar spectrum on a photographic plate. On 2 February 1891, he announced to the Academy of Sciences: "I have succeeded in obtaining the image of the spectrum with its colours on a photographic plate whereby the image remains fixed and can remain in daylight without deterioration." By April 1892, he was able to report that he had succeeded in producing colour images of a stained glass window, a group of flags, a bowl of oranges topped by a red poppy and a multicoloured parrot. He presented his theory of colour photography using the interference method in two papers to the Academy, one in 1894, the other in 1906.[5]

The interference phenomenon in optics occurs as a result of the wave propagation of light. When light of a given wavelength is reflected back upon itself by a mirror, standing waves are generated, much as the ripples resulting from a stone dropped into still water create standing waves when reflected back by a surface such as the wall of a pool. In the case of ordinary incoherent light, the standing waves are distinct only within a microscopically thin volume of space next to the reflecting surface.

Lippmann made use of this phenomenon by projecting an image onto a special photographic plate capable of recording detail smaller than the wavelengths of visible light. The light passed through the supporting glass sheet into a very thin and nearly transparent photographic emulsion containing submicroscopic silver halide grains. A temporary mirror of liquid mercury in intimate contact with the emulsion reflected the light back through it, creating standing waves whose nodes had little effect while their antinodes created a latent image. After development, the result was a structure of laminae, distinct parallel layers composed of submicroscopic metallic silver grains, which was a permanent record of the standing waves. In each part of the image, the spacing of the laminae corresponded to half the wavelength, measured inside the emulsion, of the light photographed.
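A short calculation makes the relation between colour and lamina spacing concrete. The sketch assumes an emulsion refractive index of about 1.5, a typical value for gelatin that the text does not give. Light of vacuum wavelength λ therefore has wavelength λ/n inside the emulsion, and the laminae lie λ/(2n) apart. The emulsion thickness in the example is hypothetical. The count also ignores the limited coherence of ordinary light, which in practice confines distinct standing waves to a thin region near the mirror.

```python
# Assumed refractive index of the gelatin emulsion (not stated in the text).
EMULSION_INDEX = 1.5


def lamina_spacing_nm(vacuum_wavelength_nm: float, n: float = EMULSION_INDEX) -> float:
    """Distance between adjacent laminae: half the wavelength inside the emulsion."""
    return vacuum_wavelength_nm / (2.0 * n)


def laminae_in_emulsion(vacuum_wavelength_nm: float, thickness_nm: float,
                        n: float = EMULSION_INDEX) -> int:
    """Rough number of laminae that fit in an emulsion layer of the given thickness."""
    return int(thickness_nm // lamina_spacing_nm(vacuum_wavelength_nm, n))


if __name__ == "__main__":
    for colour, wavelength in [("violet", 420.0), ("green", 540.0), ("red", 660.0)]:
        print(f"{colour:>6}: {wavelength:.0f} nm -> laminae {lamina_spacing_nm(wavelength):.0f} nm apart")
    # Hypothetical 5 µm (5,000 nm) emulsion recording green light:
    print(laminae_in_emulsion(540.0, 5_000.0), "laminae")
```

With these assumptions the spacings are 140, 180 and 220 nm. This fits the text's point that the plate had to record detail smaller than a wavelength of visible light.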

The finished plate was illuminated from the front at a nearly perpendicular angle, using daylight or another source of white light containing the full range of wavelengths in the visible spectrum. At each point on the plate, light of approximately the same wavelength as the light which had generated the laminae was strongly reflected back toward the viewer. Light of other wavelengths which was not absorbed or scattered by the silver grains simply passed through the emulsion, usually to be absorbed by a black anti-reflection coating applied to the back of the plate after it had been developed. The wavelengths, and therefore the colours, of the light which had formed the original image were thus reconstituted and a full-colour image was seen.[13][14][15]
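A deliberately simplified model shows why white light reconstructs the recorded colour. Treat each lamina as a weak, equal reflector and add the reflected waves. The round-trip optical path between neighbouring laminae equals the recorded wavelength, so the reflections add in phase only for light of that wavelength. This sketch ignores absorption, dispersion, multiple reflections and the surface reflections that the prism was used to suppress. The layer count is hypothetical.

```python
import cmath
import math


def relative_reflectance(recorded_nm: float, probe_nm: float, layers: int = 20) -> float:
    """Reflectance of `layers` equal weak reflectors, normalised to 1 at the peak.

    Neighbouring laminae are half a recorded wavelength apart inside the
    emulsion, so the round-trip phase step for probe light is
    2*pi*recorded/probe (the refractive index cancels).
    """
    step = 2.0 * math.pi * recorded_nm / probe_nm
    amplitude = sum(cmath.exp(1j * k * step) for k in range(layers))
    return abs(amplitude) ** 2 / layers ** 2


def brightest_wavelength(recorded_nm: float, layers: int = 20) -> int:
    """Scan the visible range in 1 nm steps and return the most strongly reflected wavelength."""
    return max(range(400, 701),
               key=lambda wl: relative_reflectance(recorded_nm, wl, layers))


if __name__ == "__main__":
    for probe in (450, 500, 550, 600, 650):
        print(probe, round(relative_reflectance(550.0, probe), 3))
    print("peak at", brightest_wavelength(550.0), "nm")
```

In this model, increasing `layers` narrows the reflection peak around the recorded wavelength, so a deeper stack of distinct laminae reflects a purer colour.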

In practice, the Lippmann process was not easy to use. Extremely fine-grained high-resolution photographic emulsions are inherently much less light-sensitive than ordinary emulsions, so long exposure times were required. With a lens of large aperture and a very brightly sunlit subject, a camera exposure of less than one minute was sometimes possible, but exposures measured in minutes were typical. Pure spectral colours reproduced brilliantly, but the ill-defined broad bands of wavelengths reflected by real-world objects could be problematic. The process did not produce colour prints on paper and it proved impossible to make a good duplicate of a Lippmann colour photograph by rephotographing it, so each image was unique. A very shallow-angled prism was usually cemented to the front of the finished plate to deflect unwanted surface reflections, and this made plates of any substantial size impractical. The lighting and viewing arrangement required to see the colours to best effect precluded casual use. Although the special plates and a plate holder with a built-in mercury reservoir were commercially available for a few years circa 1900, even expert users found consistent good results elusive and the process never graduated from being a scientifically elegant laboratory curiosity. It did, however, stimulate interest in the further development of colour photography.[15]

Lippmann's process foreshadowed laser holography, which is also based on recording standing waves in a photographic medium. Denisyuk reflection holograms, often referred to as Lippmann-Bragg holograms, have similar laminar structures that preferentially reflect certain wavelengths. In the case of actual multiple-wavelength colour holograms of this type, the colour information is recorded and reproduced just as in the Lippmann process, except that the highly coherent laser light passing through the recording medium and reflected back from the subject generates the required distinct standing waves throughout a relatively large volume of space, eliminating the need for reflection to occur immediately adjacent to the recording medium. Unlike Lippmann colour photography, however, the lasers, the subject and the recording medium must all be kept stable to within one quarter of a wavelength during the exposure in order for the standing waves to be recorded adequately or at all.

Integral photography

In 1908, Lippmann introduced what he called "integral photography", in which a plane array of closely spaced, small, spherical lenses is used to photograph a scene, recording images of the scene as it appears from many slightly different horizontal and vertical locations. When the resulting images are rectified and viewed through a similar array of lenses, a single integrated image, composed of small portions of all the images, is seen by each eye. The position of the eye determines which parts of the small images it sees. The effect is that the visual geometry of the original scene is reconstructed, so that the limits of the array seem to be the edges of a window through which the scene appears life-size and in three dimensions, realistically exhibiting parallax and perspective shift with any change in the position of the observer.[16] This principle of using numerous lenses or imaging apertures to record what was later termed a light field underlies the evolving technology of light-field cameras and microscopes.
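A two-dimensional pinhole model can sketch the parallax that a lens array records. Each lenslet is treated as an ideal pinhole with the plate a distance f behind it, which simplifies Lippmann's spherical lenses, and only one transverse axis is modelled. A scene point at depth z images at a different offset under each lenslet. That offset changes by f·p/z between neighbouring lenslets of pitch p, so depth can be recovered from the disparity. This is one of the ideas behind light-field cameras. All numbers are hypothetical.

```python
def image_offset(point_x: float, point_z: float, lens_x: float, f: float) -> float:
    """Image position under one lenslet, measured from that lenslet's axis.

    Pinhole model: the ray from the scene point (point_x, point_z) through the
    lenslet centre (lens_x, 0) hits the plate at distance f behind the lens.
    """
    return -f * (point_x - lens_x) / point_z


def depth_from_disparity(disparity: float, pitch: float, f: float) -> float:
    """Invert disparity = f * pitch / z to recover the depth z."""
    return f * pitch / disparity


if __name__ == "__main__":
    f, pitch = 2.0, 1.0              # hypothetical lenslet-to-plate distance and pitch, mm
    point_x, point_z = 3.0, 500.0    # hypothetical scene point, mm
    offsets = [image_offset(point_x, point_z, k * pitch, f) for k in range(5)]
    print("offsets (mm):", [round(o, 4) for o in offsets])
    disparity = offsets[1] - offsets[0]
    print("recovered depth (mm):", round(depth_from_disparity(disparity, pitch, f), 6))
```

The offsets shift steadily from lenslet to lenslet. A nearer point would shift faster, and that rate of shift is the depth cue that viewing through a matching lens array turns back into parallax.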

When Lippmann presented the theoretical foundations of his "integral photography" in March 1908, it was impossible to accompany them with concrete results. At the time, the materials necessary for producing a lenticular screen with the proper optical qualities were lacking. In the 1920s, promising trials were made by Eugène Estanave, using glass Stanhope lenses, and by Louis Lumière, using celluloid.[17] Lippmann's integral photography was the foundation of research on 3D and animated lenticular imagery and also on colour lenticular processes.

Measurement of time

In 1895, Lippmann developed a method of eliminating the personal equation (the systematic timing error specific to an individual observer) in measurements of time by using photographic registration. He also studied how to eliminate irregularities in pendulum clocks, devising a method of comparing the times of oscillation of two pendulums of nearly equal period.[4]
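The text does not describe Lippmann's comparison method in detail. One classic way to compare two pendulums of nearly equal period is the method of coincidences, shown here only as an illustration and not as Lippmann's own procedure. The two pendulums drift in and out of phase, and the time between successive in-phase passages is T1·T2/|T2 − T1|. That interval is long enough to measure precisely, and it in turn gives the small period difference.

```python
def coincidence_interval(t1: float, t2: float) -> float:
    """Time between successive in-phase passages of pendulums with periods t1 and t2 (s)."""
    if t1 == t2:
        raise ValueError("equal periods never drift out of phase")
    return t1 * t2 / abs(t2 - t1)


def slower_period(t_fast: float, interval: float) -> float:
    """Period of the slower pendulum, given the faster one's period and the observed interval.

    Rearranges interval = t_fast * t_slow / (t_slow - t_fast).
    """
    return t_fast * interval / (interval - t_fast)


if __name__ == "__main__":
    t_ref, t_test = 1.000, 1.001          # hypothetical periods in seconds
    interval = coincidence_interval(t_ref, t_test)
    print(f"coincidences every {interval:.1f} s")
    print(f"recovered period {slower_period(t_ref, interval):.6f} s")
```

Here a 1 ms difference in period stretches to about 1,000 s between coincidences, which is what makes the comparison sensitive.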

The coelostat

Lippmann also invented the coelostat, an astronomical tool that compensated for the Earth's rotation and allowed a region of the sky to be photographed without apparent movement.[4]
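The compensation a coelostat provides can be quantified. The text does not state it, but a standard property of the instrument is that its mirror turns about an axis parallel to the Earth's axis at half the sky's apparent rate. The reason is that rotating a mirror by an angle deflects the reflected beam by twice that angle. The sketch computes that rate from the length of the sidereal day.

```python
SIDEREAL_DAY_H = 23.9344696  # length of one sidereal day in hours


def sky_rate_deg_per_hour() -> float:
    """Apparent rotation rate of the sky about the celestial pole."""
    return 360.0 / SIDEREAL_DAY_H


def coelostat_mirror_rate_deg_per_hour() -> float:
    """Mirror rate needed to hold the reflected sky still: half the sky rate,
    since turning a mirror by an angle turns the reflected beam by twice it."""
    return sky_rate_deg_per_hour() / 2.0


if __name__ == "__main__":
    print(f"sky: {sky_rate_deg_per_hour():.3f} deg/h")
    print(f"mirror: {coelostat_mirror_rate_deg_per_hour():.3f} deg/h "
          f"(one turn every {2 * SIDEREAL_DAY_H:.2f} h)")
```

The mirror therefore turns at about 7.52° per hour, completing one rotation every two sidereal days.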

https://en.wikipedia.org/wiki/Gabriel_Lippmann

Baron Loránd Eötvös de Vásárosnamény (or Loránd Eötvös, pronounced [ˈloraːnd ˈøtvøʃ], Hungarian: vásárosnaményi báró Eötvös Loránd Ágoston; 27 July 1848 – 8 April 1919), also called Baron Roland von Eötvös in English literature,[2] was a Hungarian physicist. He is remembered today largely for his work on gravitation and surface tension, and for his invention of the torsion pendulum now known as the Eötvös pendulum.

In addition to Eötvös Loránd University[3] and the Eötvös Loránd Institute of Geophysics in Hungary, the Eötvös crater on the Moon,[4] the asteroid 12301 Eötvös, and the mineral lorándite also bear his name.

| Loránd Eötvös | |
|---|---|
| Eötvös in 1912 | |
| Born | 27 July 1848 |
| Died | 8 April 1919 (aged 70) |
| Nationality | Hungarian |
| Alma mater | University of Heidelberg |
| Known for | Eötvös experiment, Eötvös rule, Eötvös pendulum |
| Spouse(s) | Gizella Horvát |
| Children | Jolán Rolanda Ilona |
| Parent(s) | József Eötvös, Agnes Rosty de Barkócz |
| Scientific career | |
| Fields | Physics |
| Institutions | University of Budapest |
| Doctoral advisor | Hermann Helmholtz[1] |

Life

Born in 1848, the year of the Hungarian revolution, Eötvös was the son of Baron József Eötvös de Vásárosnamény (1813–1871), a well-known poet, writer, and liberal politician who was a cabinet minister at the time and played an important part in 19th-century Hungarian intellectual and political life. His mother was the Hungarian noblewoman Agnes Rosty de Barkócz (1825–1913), a member of the illustrious Rosty de Barkócz family, which originally hailed from Vas County; through her, he descended from the ancient medieval Hungarian noble Perneszy family, which died out in the 18th century. Loránd's uncle, Pál Rosty de Barkócz (1830–1874), was a Hungarian nobleman, photographer, and explorer who visited Texas, New Mexico, Mexico, Cuba, and Venezuela between 1857 and 1859.

Loránd Eötvös first studied law, but soon switched to physics and went abroad to study in Heidelberg and Königsberg. After earning his doctorate, he became a university professor in Budapest and played a leading part in Hungarian science for almost half a century. He gained international recognition first by his innovative work on capillarity, then by his refined experimental methods and extensive field studies in gravity.

Eötvös is remembered today for his experimental work on gravity, in particular his study of the equivalence of gravitational and inertial mass (the so-called weak equivalence principle) and his study of the gravitational gradient on the Earth's surface. The weak equivalence principle plays a prominent role in relativity theory, and the Eötvös experiment was cited by Albert Einstein in his 1916 paper The Foundation of the General Theory of Relativity. Measurements of the gravitational gradient are important in applied geophysics, for example in locating petroleum deposits. The CGS unit for gravitational gradient is named the eotvos in his honor.
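Eötvös-type experiments are commonly summarised by the Eötvös parameter η. It compares the ratio of gravitational to inertial mass for two test bodies A and B of different composition: η = 2|rA − rB| / (rA + rB). The weak equivalence principle predicts η = 0. The sketch below only evaluates that definition. The input ratios in the example are hypothetical and are not Eötvös's measured values.

```python
def eotvos_parameter(ratio_a: float, ratio_b: float) -> float:
    """Eötvös parameter for two bodies with gravitational/inertial mass ratios ratio_a, ratio_b.

    eta = 2 * |ratio_a - ratio_b| / (ratio_a + ratio_b); zero if the
    weak equivalence principle holds exactly.
    """
    return 2.0 * abs(ratio_a - ratio_b) / (ratio_a + ratio_b)


if __name__ == "__main__":
    # Hypothetical: body A's ratio exceeds body B's by one part in a billion.
    print(f"{eotvos_parameter(1.0 + 1e-9, 1.0):.2e}")
```

An experiment that finds no difference at a given precision places an upper bound on η at that level.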

From 1886 until his death, Loránd Eötvös researched and taught in the University of Budapest, which in 1950 was renamed after him (Eötvös Loránd University).

Eötvös is buried in the Kerepesi Cemetery in Budapest, Hungary.[5]

Torsion balance

The Eötvös pendulum, a variation of the earlier torsion balance designed by Baron Loránd Eötvös, is a sensitive instrument for measuring the density of underlying rock strata. The device measures not only the direction of the gravitational force but also how its magnitude changes across the horizontal plane, which allows it to determine the distribution of masses in the Earth's crust. The Eötvös torsion balance became an important instrument of geodesy and geophysics worldwide for studying the Earth's physical properties. It is used in mine exploration and in the search for mineral resources such as oil, coal, and ores. The Eötvös pendulum was never patented, but after its accuracy had been demonstrated and following numerous visits to Hungary from abroad, several instruments were exported worldwide, and the richest oilfields in the United States were discovered by using it. In response to the offer of a prize, the Eötvös pendulum was also used to test the equivalence of inertial and gravitational mass with high accuracy. This equivalence was later used by Albert Einstein in setting out the theory of general relativity.

This is how Eötvös describes his balance:

One of Eötvös' assistants who later became a noted scientist was Radó von Kövesligethy.

Honors

To honor Eötvös, Hungary issued a postage stamp on 1 July 1932.[6] Another stamp was issued on 27 July 1948 to commemorate the centenary of his birth,[7] and a further stamp on 31 January 1991.[8]

See also

- Eotvos, a unit of gravitational gradient

- Eötvös effect, a concept in geodesy

- Eötvös number, a concept in fluid dynamics

- Eötvös rule, for predicting the temperature dependence of surface tension

- List of geophysicists

- Lorándite, a mineral named after Loránd Eötvös

References

- [1] Physics Tree – Hermann von Helmholtz Family Tree.

- [2] Bod, L., Fischbach, E., Marx, G., and Náray-Ziegler, M. (1991), "One hundred years of the Eötvös experiment", Acta Physica Hungarica 69 (3–4), pp. 335–355.

- [3] Brief History of ELTE, Eötvös Loránd University (archived from the original on 7 May 2016; retrieved 7 May 2016).

- [4] Pickover, C. (2008), Archimedes to Hawking: Laws of Science and the Great Minds Behind Them, Oxford University Press, p. 383, ISBN 9780199792689.

- [5] See this site for a photograph of his gravesite.

- [6] colnect.com/en/stamps/stamp/141647-Baron_Loránd_Eötvös_1848-1919_physicist-Personalities-Hungary

- [7] colnect.com/en/stamps/stamp/179845-Baron_Lóránd_Eötvös_1848-1919_physicist-Lóránd_Eötvös-Hungary

- [8] colnect.com/en/stamps/stamp/181792-Lóránd_Eötvös-People-Hungary

https://en.wikipedia.org/wiki/Loránd_Eötvös

The eotvos is a unit of acceleration divided by distance that was used in conjunction with the older centimetre–gram–second system of units (cgs). The eotvos is defined as 10⁻⁹ galileos per centimetre. The symbol of the eotvos unit is E.[1][2]

In SI units or in cgs units, 1 eotvos = 10⁻⁹ s⁻².[3]

The gravitational gradient of the Earth, that is, the change in the gravitational acceleration vector from one point on the Earth's surface to another, is customarily measured in units of eotvos. The Earth's gravity gradient is dominated by the component due to Earth's near-spherical shape, which results in a vertical tensile gravity gradient of 3,080 E (an elevation increase of 1 m gives a decrease of gravity of about 0.3 mGal), and horizontal compressive gravity gradients of one half that, or 1,540 E. Earth's rotation perturbs this in a direction-dependent manner by about 5 E. Gravity gradient anomalies in mountainous areas can be as large as several hundred eotvos.
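A few lines of arithmetic check the figures in the paragraph above. With 1 E = 10⁻⁹ s⁻² and 1 mGal = 10⁻⁵ m/s², a 3,080 E vertical gradient over 1 m gives about 0.31 mGal. The sketch also reproduces the 3,080 E and 1,540 E values from a spherical, non-rotating Earth, which is a simplifying assumption. Since g = GM/r², the vertical gradient is 2g/R and each horizontal one is g/R. Standard gravity and the mean Earth radius are used as inputs.

```python
EOTVOS = 1e-9             # 1 E in s^-2
MILLIGAL = 1e-5           # 1 mGal in m/s^2 (1 Gal = 1 cm/s^2)
G_STANDARD = 9.80665      # standard gravity, m/s^2
EARTH_RADIUS_M = 6.371e6  # mean Earth radius, m


def gravity_change_mgal(gradient_e: float, height_m: float) -> float:
    """Change in gravity (mGal) over height_m for a uniform gradient given in eotvos."""
    return gradient_e * EOTVOS * height_m / MILLIGAL


def spherical_earth_gradients_e(g: float = G_STANDARD, radius_m: float = EARTH_RADIUS_M):
    """(vertical, horizontal) gradient magnitudes in eotvos for a spherical, non-rotating Earth."""
    vertical = 2.0 * g / radius_m / EOTVOS   # |dg/dr| = 2 g / R
    horizontal = g / radius_m / EOTVOS       # each horizontal component: g / R
    return vertical, horizontal


if __name__ == "__main__":
    print(f"{gravity_change_mgal(3080, 1.0):.3f} mGal per metre")
    v, h = spherical_earth_gradients_e()
    print(f"vertical ~ {v:.0f} E, horizontal ~ {h:.0f} E")
```

The spherical model gives about 3,079 E and 1,539 E, close to the rounded values quoted above.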

The eotvos unit is named for the physicist Loránd Eötvös, who made pioneering studies of the gradient of the Earth's gravitational field.[4]

| eotvos | |
|---|---|
| Unit system | Non-SI metric unit |
| Unit of | Linear acceleration density |
| Symbol | E |
| Named after | Loránd Eötvös |
| Derivation | 10⁻⁹ Gal/cm |
| Conversions | |
| 1 E in ... | ... is equal to ... |
| CGS base units | 10⁻⁹ s⁻² |
| SI base units | 10⁻⁹ s⁻² |

https://en.wikipedia.org/wiki/Eotvos_(unit)

Gravity gradiometry is the study and measurement of variations (anomalies) in the Earth's gravitational field. The gravity gradient is the spatial rate of change of gravitational acceleration. As acceleration is a vector quantity, with magnitude and three-dimensional direction, the full gravity gradient is a 3x3 tensor.
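The simplest possible source, a point mass, illustrates the 3x3 tensor. Take Tij = ∂gi/∂xj with g pointing toward the mass; then Tij = GM(3·xi·xj − r²·δij)/r⁵. The sketch evaluates this for an Earth-sized point mass. It shows that the tensor is symmetric and, outside the mass, has zero trace, so only five of its nine components are independent. Treating the Earth as a point mass is an assumption, so no subsurface anomalies appear by construction.

```python
GM_EARTH = 3.986004418e14  # Earth's gravitational parameter, m^3/s^2
EOTVOS = 1e-9              # 1 E in s^-2


def point_mass_gradient(x: float, y: float, z: float, gm: float = GM_EARTH) -> list[list[float]]:
    """Gravity gradient tensor T[i][j] = d g_i / d x_j (s^-2) of a point mass at the origin."""
    pos = (x, y, z)
    r2 = x * x + y * y + z * z
    r5 = r2 ** 2.5
    return [[gm * (3.0 * pos[i] * pos[j] - (r2 if i == j else 0.0)) / r5
             for j in range(3)]
            for i in range(3)]


if __name__ == "__main__":
    radius = 6.371e6  # observation point on the surface, straight up the z axis
    tensor = point_mass_gradient(0.0, 0.0, radius)
    for row in tensor:
        print(["%8.1f" % (t / EOTVOS) for t in row])
    trace = sum(tensor[i][i] for i in range(3))
    print("trace (E):", trace / EOTVOS)
```

At the surface this gives roughly −1,541 E for each horizontal diagonal component and +3,083 E vertically. These are close to the figures quoted for the eotvos unit.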

Gravity gradiometry is used by oil and mineral prospectors to measure the density of the subsurface, effectively by measuring the rate of change of gravitational acceleration due to underlying rock properties. From this information it is possible to build a picture of subsurface anomalies, which can then be used to target oil, gas, and mineral deposits more accurately. It is also used to image water column density, to locate submerged objects, and to determine water depth (bathymetry). Physical scientists use gravimeters to determine the exact size and shape of the Earth, and gravity measurements contribute to the gravity compensations applied to inertial navigation systems.

https://en.wikipedia.org/wiki/Gravity_gradiometry

Friedrich Wilhelm Karl Ernst Schröder (25 November 1841 in Mannheim, Baden, Germany – 16 June 1902 in Karlsruhe, Germany) was a German mathematician mainly known for his work on algebraic logic. He is a major figure in the history of mathematical logic, by virtue of summarizing and extending the work of George Boole, Augustus De Morgan, Hugh MacColl, and especially Charles Peirce. He is best known for his monumental Vorlesungen über die Algebra der Logik (Lectures on the Algebra of Logic, 1890–1905), in three volumes, which prepared the way for the emergence of mathematical logic as a separate discipline in the twentieth century by systematizing the various systems of formal logic of the day.

| Ernst Schröder | |
|---|---|
| Born | 25 November 1841 |
| Died | 16 June 1902 (aged 60) |
| Nationality | German |
| Scientific career | |
| Fields | Mathematics |

https://en.wikipedia.org/wiki/Ernst_Schröder

Heike Kamerlingh Onnes (21 September 1853 – 21 February 1926) was a Dutch physicist and Nobel laureate. He exploited the Hampson–Linde cycle to investigate how materials behave when cooled to nearly absolute zero and later to liquefy helium for the first time, in 1908. He also discovered superconductivity in 1911.[1][2][3]

| Heike Kamerlingh Onnes | |
|---|---|
| Born | Heike Kamerlingh Onnes, 21 September 1853, Groningen, Netherlands |
| Died | 21 February 1926 (aged 72), Leiden, Netherlands |
| Nationality | Netherlands |
| Alma mater | Heidelberg University, University of Groningen |
| Known for | Liquid helium, Onnes effect, Superconductivity, Virial equation of state, Coining the term “enthalpy”, Kamerlingh Onnes Award |
| Awards | Matteucci Medal (1910), Rumford Medal (1912), Nobel Prize in Physics (1913), Franklin Medal (1915) |
| Scientific career | |
| Fields | Physics |
| Institutions | University of Leiden, Delft Polytechnic |
| Doctoral advisor | Rudolf Adriaan Mees |
| Other academic advisors | Robert Bunsen, Gustav Kirchhoff, Johannes Bosscha |
| Doctoral students | Jacob Clay, Wander de Haas, Gilles Holst, Johannes Kuenen, Pieter Zeeman |
| Influences | Johannes Diderik van der Waals |
| Influenced | Willem Hendrik Keesom, Cryogenics |

University of Leiden

From 1882 to 1923 Kamerlingh Onnes served as professor of experimental physics at the University of Leiden. In 1904 he founded a very large cryogenics laboratory and invited other researchers to work there, which made him highly regarded in the scientific community. The laboratory is now known as the Kamerlingh Onnes Laboratory.[4] Only one year after his appointment as professor, he became a member of the Royal Netherlands Academy of Arts and Sciences.[5]

Liquefaction of helium

On 10 July 1908, he was the first to liquefy helium, using several precooling stages and the Hampson–Linde cycle based on the Joule–Thomson effect. In this way he lowered the temperature to the boiling point of helium (−269 °C, 4.2 K). By reducing the pressure of the liquid helium, he achieved a temperature near 1.5 K. These were the coldest temperatures achieved on Earth at the time. The equipment he used is now held at the Museum Boerhaave in Leiden.[4]

Superconductivity

In 1911 Kamerlingh Onnes measured the electrical conductivity of pure metals (mercury, and later tin and lead) at very low temperatures. Some scientists, such as William Thomson (Lord Kelvin), believed that electrons flowing through a conductor would come to a complete halt; in other words, metal resistivity would become infinitely large at absolute zero. Others, including Kamerlingh Onnes, felt that a conductor's electrical resistance would steadily decrease and drop to nil. Augustus Matthiessen said that as the temperature decreases, the conductivity of a metal usually improves; in other words, its electrical resistivity usually decreases with decreasing temperature.[6][7]

On 8 April 1911, Kamerlingh Onnes found that at 4.2 K the resistance in a solid mercury wire immersed in liquid helium suddenly vanished. He immediately realized the significance of the discovery (as became clear when his notebook was deciphered a century later).[8] He reported that "Mercury has passed into a new state, which on account of its extraordinary electrical properties may be called the superconductive state". He published more articles about the phenomenon, initially referring to it as "supraconductivity" and only later adopting the term "superconductivity".

Kamerlingh Onnes received widespread recognition for his work, including the 1913 Nobel Prize in Physics for (in the words of the committee) "his investigations on the properties of matter at low temperatures which led, inter alia, to the production of liquid helium".

https://en.wikipedia.org/wiki/Heike_Kamerlingh_Onnes

https://en.wikipedia.org/wiki/Superfluidity

https://en.wikipedia.org/wiki/Superfluid_helium-4

https://en.wikipedia.org/wiki/Hydrogen_fuel

https://en.wikipedia.org/wiki/Vapor-compression_refrigeration

https://en.wikipedia.org/wiki/Lossless_compression

https://en.wikipedia.org/wiki/Infinite_divisibility

https://en.wikipedia.org/wiki/Shear_force

https://en.wikipedia.org/wiki/Compression_(physics)

https://en.wikipedia.org/wiki/Tension_(physics)

https://en.wikipedia.org/wiki/Trapped_ion_quantum_computer

https://en.wikipedia.org/wiki/Quantum_computing

https://en.wikipedia.org/wiki/Coupling_(physics)

https://en.wikipedia.org/wiki/Oscillation

https://en.wikipedia.org/wiki/Hooke%27s_law

https://en.wikipedia.org/wiki/Christiaan_Huygens

https://en.wikipedia.org/wiki/Aromatic_ring_current

https://en.wikipedia.org/wiki/Magnetic_susceptibility

https://en.wikipedia.org/wiki/Ferromagnetism

https://en.wikipedia.org/wiki/scalar

https://en.wikipedia.org/wiki/surface

https://en.wikipedia.org/wiki/tension

https://en.wikipedia.org/wiki/tensor

https://en.wikipedia.org/wiki/dimension

https://en.wikipedia.org/wiki/plane

https://en.wikipedia.org/wiki/vertical

https://en.wikipedia.org/wiki/pressure

https://en.wikipedia.org/wiki/acceleration

https://en.wikipedia.org/wiki/angular

https://en.wikipedia.org/wiki/dihedral_angle

https://en.wikipedia.org/wiki/Ferromagnetic_resonance

https://en.wikipedia.org/wiki/Eddy_current

https://en.wikipedia.org/wiki/Antiferromagnetism#antiferromagnetic_materials

https://en.wikipedia.org/wiki/Geometrical_frustration

https://en.wikipedia.org/wiki/Saturation_(magnetic)

https://en.wikipedia.org/wiki/Coercivity

https://en.wikipedia.org/wiki/Tensor

https://en.wikipedia.org/wiki/Superconductivity

https://en.wikipedia.org/wiki/Gaussian_units

https://en.wikipedia.org/wiki/Electric_susceptibility

https://en.wikipedia.org/wiki/Magnetic_field

https://en.wikipedia.org/wiki/Permeability_(electromagnetism)#Relative_permeability_and_magnetic_susceptibility

https://en.wikipedia.org/wiki/Dimensionless_quantity

https://en.wikipedia.org/wiki/Kinetic_theory_of_gases

https://en.wikipedia.org/wiki/Cyclophane

https://en.wikipedia.org/wiki/Proton_nuclear_magnetic_resonance

https://en.wikipedia.org/wiki/Non-coordinating_anion

https://en.wikipedia.org/wiki/Tetrakis(3,5-bis(trifluoromethyl)phenyl)borate

https://en.wikipedia.org/wiki/Faraday_effect

https://en.wikipedia.org/wiki/Axial_chirality

Karl Johann Freudenberg ForMemRS[1] (29 January 1886, Weinheim, Baden – 3 April 1983, Weinheim) was a German chemist who did early seminal work on the absolute configurations of carbohydrates, terpenes, and steroids; on the structure of cellulose (first correct formula published, 1928) and other polysaccharides; and on the nature, structure, and biosynthesis of lignin. The Research Institute for the Chemistry of Wood and Polysaccharides at the University of Heidelberg was created for him in the mid-to-late 1930s, and he led it until 1969.[2]

Life

Freudenberg studied at Bonn University in 1904 and at the University of Berlin from 1907 to 1910, where he studied with Emil Fischer. In July 1910 he married Doris Nieden; they had five children.[3] His grandfather Carl Johann Freudenberg was a tanner and businessman who in 1849, with Heinrich Christian Heintze, founded the Freudenberg Group.

Freudenberg was a professor at the University of Freiburg in 1921, at Karlsruhe University in 1922, and at Heidelberg University from 1926 to 1956, and he was director of the Research Institute at the University of Heidelberg, noted above, from 1936 to 1969.[4]

Works

- Chemie der natürlichen Gerbstoffe (1920) [The Chemistry of Natural Tanning Agents; studies on tannins and their relations to catechins]

- Stereochemie (1933) [Stereochemistry]

- Tannin, Cellulose, Lignin (1933)

https://en.wikipedia.org/wiki/Karl_Freudenberg

https://en.wikipedia.org/wiki/CRC_Handbook_of_Chemistry_and_Physics

Johannes Diderik van der Waals (Dutch pronunciation: [joːˈɦɑnəz ˈdidərɪk fɑn dər ˈʋaːls][note 1]; 23 November 1837 – 8 March 1923) was a Dutch theoretical physicist and thermodynamicist famous for his pioneering work on the equation of state for gases and liquids. Van der Waals started his career as a school teacher. He became the first physics professor of the University of Amsterdam when in 1877 the old Athenaeum was upgraded to the Municipal University. Van der Waals won the 1910 Nobel Prize in Physics for his work on the equation of state for gases and liquids.[1]

His name is primarily associated with the Van der Waals equation of state that describes the behavior of gases and their condensation to the liquid phase. His name is also associated with Van der Waals forces (forces between stable molecules),[2] with Van der Waals molecules (small molecular clusters bound by Van der Waals forces), and with Van der Waals radii (sizes of molecules). As James Clerk Maxwell said, "there can be no doubt that the name of Van der Waals will soon be among the foremost in molecular science."[3]

In his 1873 thesis, Van der Waals noted the non-ideality of real gases and attributed it to the existence of intermolecular interactions. He introduced the first equation of state derived from the assumption of a finite volume occupied by the constituent molecules.[4] Spearheaded by Ernst Mach and Wilhelm Ostwald, a strong philosophical current that denied the existence of molecules arose towards the end of the 19th century. The existence of molecules was considered unproven and the molecular hypothesis unnecessary. At the time Van der Waals's thesis was written (1873), the molecular structure of fluids had not been accepted by most physicists, and liquid and vapor were often considered as chemically distinct. But Van der Waals's work affirmed the reality of molecules and allowed an assessment of their size and attractive strength. His new formula revolutionized the study of equations of state. By comparing his equation of state with experimental data, Van der Waals was able to obtain estimates for the actual size of molecules and the strength of their mutual attraction.[5]
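A minimal sketch of the van der Waals equation, (P + an²/V²)(V − nb) = nRT, solved for the pressure and compared with the ideal-gas law. The constant a corrects for intermolecular attraction and b for the finite volume of the molecules. The CO2 constants are an assumption: commonly tabulated values (a ≈ 3.640 L²·bar/mol², b ≈ 0.04267 L/mol) converted to SI units. The function names are illustrative.

```python
R = 8.314462618  # molar gas constant, J/(mol*K)


def ideal_gas_pressure(n: float, V: float, T: float) -> float:
    """Ideal-gas pressure in Pa for n mol in V m^3 at T K."""
    return n * R * T / V


def van_der_waals_pressure(n: float, V: float, T: float, a: float, b: float) -> float:
    """Solve (P + a n^2 / V^2)(V - n b) = n R T for P.

    a (Pa*m^6/mol^2) models intermolecular attraction; b (m^3/mol) is the
    volume excluded by the finite size of one mole of molecules.
    """
    if V <= n * b:
        raise ValueError("V must exceed the excluded volume n*b")
    return n * R * T / (V - n * b) - a * n**2 / V**2


# Assumed CO2 constants (typical tabulated values, converted to SI units)
A_CO2 = 0.3640     # Pa*m^6/mol^2
B_CO2 = 4.267e-5   # m^3/mol

# 1 mol of CO2 in 1 L at 300 K
p_ideal = ideal_gas_pressure(1.0, 1.0e-3, 300.0)
p_vdw = van_der_waals_pressure(1.0, 1.0e-3, 300.0, A_CO2, B_CO2)
print(f"ideal: {p_ideal / 1e5:.1f} bar, van der Waals: {p_vdw / 1e5:.1f} bar")
```

Under these conditions the attraction term outweighs the excluded-volume correction. The van der Waals pressure therefore comes out roughly 10% below the ideal-gas value.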

The effect of Van der Waals's work on molecular physics in the 20th century was direct and fundamental.[6] By introducing parameters characterizing molecular size and attraction in constructing his equation of state, Van der Waals set the tone for modern molecular science. That molecular aspects such as size, shape, attraction, and multipolar interactions should form the basis for mathematical formulations of the thermodynamic and transport properties of fluids is presently considered an axiom.[7] With the help of the Van der Waals equation of state, the critical-point parameters of gases could be accurately predicted from thermodynamic measurements made at much higher temperatures. Nitrogen, oxygen, hydrogen, and helium subsequently succumbed to liquefaction. Heike Kamerlingh Onnes was significantly influenced by the pioneering work of Van der Waals. In 1908, Onnes became the first to make liquid helium; this led directly to his 1911 discovery of superconductivity.[8]
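Setting ∂P/∂V = 0 and ∂²P/∂V² = 0 in the van der Waals equation gives the critical point in terms of a and b alone (per mole): V_c = 3b, T_c = 8a/(27Rb), P_c = a/(27b²). This is how fitted constants can predict where a gas liquefies. The sketch below evaluates these relations and their inverse.

The CO2 constants are assumed, commonly tabulated values. Such tables are often derived from measured critical data, so close agreement with the observed CO2 critical point (about 304 K and 73.8 bar) is partly circular.

```python
R = 8.314462618  # molar gas constant, J/(mol*K)


def vdw_critical_point(a: float, b: float) -> tuple[float, float, float]:
    """Return (P_c in Pa, V_c in m^3/mol, T_c in K) for van der Waals constants a, b.

    Follows from dP/dV = 0 and d2P/dV2 = 0 at the inflection point of the critical isotherm.
    """
    P_c = a / (27.0 * b**2)
    V_c = 3.0 * b
    T_c = 8.0 * a / (27.0 * R * b)
    return P_c, V_c, T_c


def vdw_constants_from_critical(P_c: float, T_c: float) -> tuple[float, float]:
    """Inverse relations: a = 27 R^2 T_c^2 / (64 P_c), b = R T_c / (8 P_c)."""
    a = 27.0 * R**2 * T_c**2 / (64.0 * P_c)
    b = R * T_c / (8.0 * P_c)
    return a, b


# Assumed CO2 constants (typical tabulated values in SI units)
P_c, V_c, T_c = vdw_critical_point(a=0.3640, b=4.267e-5)
print(f"T_c = {T_c:.1f} K, P_c = {P_c / 1e5:.1f} bar, V_c = {V_c * 1e6:.1f} cm^3/mol")
```

The predicted critical volume is a known weak point of the equation. For every gas it implies a critical compressibility factor P_cV_c/(RT_c) = 3/8, which is larger than the values observed for real gases.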

https://en.wikipedia.org/wiki/Johannes_Diderik_van_der_Waals

Max Noether (24 September 1844 – 13 December 1921) was a German mathematician who worked on algebraic geometry and the theory of algebraic functions. He has been called "one of the finest mathematicians of the nineteenth century".[1] He was the father of Emmy Noether.

https://en.wikipedia.org/wiki/Max_Noether

Jules Piccard, also known as Julius Piccard (20 September 1840, in Lausanne – 11 April 1933, in Lausanne) was a Swiss chemist. He was the father of twins Auguste Piccard (1884–1962) and Jean Felix Piccard (1884–1963), both renowned balloonists.

He studied chemistry at the University of Heidelberg as a student of Robert Bunsen, receiving his doctorate in 1862. Shortly afterwards, he obtained his habilitation at the polytechnical institute in Zürich. From 1869 to 1903 he was a professor of chemistry at the University of Basel.[1][2]

He made contributions in the field of food chemistry and in his research of cantharidin, dinitrocresol, chrysin and resorcinol.[2][3] He is also known for his studies involving the atomic weight of rubidium.[4]

https://en.wikipedia.org/wiki/Jules_Piccard

Justus Freiherr von Liebig[2] (12 May 1803 – 18 April 1873)[3] was a German scientist who made major contributions to agricultural and biological chemistry, and is considered one of the principal founders of organic chemistry.[4] As a professor at the University of Giessen, he devised the modern laboratory-oriented teaching method, and for such innovations, he is regarded as one of the greatest chemistry teachers of all time.[5] He has been described as the "father of the fertilizer industry" for his emphasis on nitrogen and trace minerals as essential plant nutrients, and his formulation of the law of the minimum, which described how plant growth relied on the scarcest nutrient resource, rather than the total amount of resources available.[6] He also developed a manufacturing process for beef extracts,[7] and with his consent a company, called Liebig Extract of Meat Company, was founded to exploit the concept; it later introduced the Oxo brand beef bouillon cube. He popularized an earlier invention for condensing vapors, which came to be known as the Liebig condenser.[8]
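The law of the minimum can be stated compactly. Relative growth is set by the nutrient whose supply is smallest relative to the plant's requirement, not by the total supply. The sketch below is a simplified, textbook-style formalization, offered as an assumption rather than Liebig's own formulation. The nutrient names and amounts are hypothetical.

```python
def liebig_growth(available: dict[str, float], required: dict[str, float]) -> float:
    """Relative growth (0..1) under the law of the minimum.

    Each nutrient's supply is expressed as a fraction of the requirement;
    the scarcest one caps growth, and a surplus of others cannot compensate.
    """
    sufficiency = [available.get(nutrient, 0.0) / need for nutrient, need in required.items()]
    return min(1.0, min(sufficiency))


def total_supply_ratio(available: dict[str, float], required: dict[str, float]) -> float:
    """The naive alternative the law rejects: compare total amounts only."""
    return sum(available.values()) / sum(required.values())


# Hypothetical requirements and supplies (arbitrary units)
required = {"N": 100.0, "P": 20.0, "K": 50.0}
available = {"N": 100.0, "P": 5.0, "K": 200.0}

print(liebig_growth(available, required))       # 0.25: phosphorus is limiting
print(total_supply_ratio(available, required))  # above 1, misleadingly suggests no shortage
```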

https://en.wikipedia.org/wiki/Justus_von_Liebig

Eilhard Mitscherlich (German pronunciation: [ˈaɪ̯lhaʁt ˈmɪtʃɐlɪç];[1][2] 7 January 1794 – 28 August 1863) was a German chemist, who is perhaps best remembered today for his discovery of the phenomenon of crystallographic isomorphism in 1819.

https://en.wikipedia.org/wiki/Eilhard_Mitscherlich

Friedlieb Ferdinand Runge (8 February 1794 – 25 March 1867) was a German analytical chemist. Runge identified the mydriatic (pupil dilating) effects of belladonna (deadly nightshade) extract, identified caffeine, and discovered the first coal tar dye (aniline blue).

https://en.wikipedia.org/wiki/Friedlieb_Ferdinand_Runge

Johann Friedrich Ludwig Hausmann (22 February 1782, Hannover – 26 December 1859, Göttingen) was a German mineralogist.[1]

https://en.wikipedia.org/wiki/Johann_Friedrich_Ludwig_Hausmann

Johann Carl Friedrich Gauss (/ɡaʊs/; German: Gauß [kaʁl ˈfʁiːdʁɪç ˈɡaʊs];[1][2] Latin: Carolus Fridericus Gauss; 30 April 1777 – 23 February 1855) was a German mathematician and physicist who made significant contributions to many fields in mathematics and science.[3] Sometimes referred to as the Princeps mathematicorum[4] (Latin for "the foremost of mathematicians") and "the greatest mathematician since antiquity", Gauss had an exceptional influence in many fields of mathematics and science, and is ranked among history's most influential mathematicians.[5]

https://en.wikipedia.org/wiki/Carl_Friedrich_Gauss

Planar chirality, also known as 2D chirality, is the special case of chirality for two dimensions.

Most fundamentally, planar chirality is a mathematical term, finding use in chemistry, physics and related physical sciences, for example, in astronomy, optics and metamaterials. Recent occurrences in the latter two fields are dominated by microwave and terahertz applications as well as micro- and nanostructured planar interfaces for infrared and visible light.

https://en.wikipedia.org/wiki/Planar_chirality#Planar_chirality_in_chemistry

A cyclophane is a hydrocarbon consisting of an aromatic unit (typically a benzene ring) and an aliphatic chain that forms a bridge between two non-adjacent positions of the aromatic ring. More complex derivatives with multiple aromatic units and bridges forming cagelike structures are also known. Cyclophanes are well-studied in organic chemistry because they adopt unusual chemical conformations due to build-up of strain.

Basic cyclophane types are [n]metacyclophanes (I) in scheme 1, [n]paracyclophanes (II) and [n.n']cyclophanes (III). The prefixes meta and para correspond to the usual arene substitution patterns, and n refers to the number of carbon atoms making up the bridge.

Structure

Paracyclophanes adopt the boat conformation normally observed in cyclohexanes but are still able to retain aromaticity. The smaller the value of n, the larger the deviation from aromatic planarity. In [6]paracyclophane, one of the smallest stable cyclophanes, X-ray crystallography shows that the aromatic bridgehead carbon atom makes an angle of 20.5° with the plane. The benzylic carbons deviate by another 20.2°. The carbon–carbon bond length alternation increases from 0 pm in benzene to 39 pm.[1][2]

In organic reactions [6]cyclophane tends to react as a diene derivative and not as an arene. With bromine it gives 1,4-addition and with chlorine the 1,2-addition product forms.

Yet the proton NMR spectrum shows the aromatic protons at their usual deshielded positions, around 7.2 ppm. The central methylene protons of the aliphatic bridge, by contrast, are strongly shielded and appear at around −0.5 ppm, upfield even of the internal reference tetramethylsilane. By the diamagnetic ring current criterion for aromaticity, this cyclophane is therefore still aromatic.

One particular research field in cyclophanes probes how close atoms can get above the center of an aromatic nucleus.[3] In so-called in-cyclophanes, where part of the molecule is forced to point inwards, one of the shortest hydrogen-to-arene distances determined experimentally is just 168 picometers (pm).

A non-bonding nitrogen-to-arene distance of 244 pm has been recorded for a pyridinophane, and in the unusual superphane the two benzene rings are separated by a mere 262 pm. Other representatives of this group are in-methylcyclophanes,[4] in-ketocyclophanes[5] and in,in-bis(hydrosilane).[6]

Synthetic methods

[6]paracyclophane can be synthesized[7][8] in the laboratory by a Bamford–Stevens reaction of spiro ketone 1 (scheme 3), which rearranges in a pyrolysis reaction through carbene intermediate 4. The cyclophane can be converted photochemically to the Dewar benzene 6 and back again by heating. A separate route to the Dewar form is a cationic rearrangement of the bicyclopropenyl compound 7, induced by silver perchlorate.

Metaparacyclophanes constitute another class of cyclophanes. One example is the [14][14]metaparacyclophane[9] in scheme 4,[10] whose synthesis features an in situ Ramberg–Bäcklund reaction converting sulfone 3 to alkene 4.

Naturally occurring cyclophanes

Despite carrying strain, the cyclophane motif does exist in nature. One example of a metacyclophane is cavicularin.

Haouamine A is a paracyclophane found in a certain species of tunicate. Because of its potential application as an anticancer drug, it has also been prepared by total synthesis. The crucial step is an alkyne–pyrone Diels–Alder reaction with expulsion of carbon dioxide (scheme 5).[11]

In this compound the deviation from planarity is 13° for the benzene ring and 17° for the bridgehead carbons.[12] An alternative cyclophane formation strategy in scheme 6[13] was developed based on aromatization of the ring well after the formation of the bridge.

Two additional types of cyclophanes were discovered in nature when they were isolated from two species of cyanobacteria of the family Nostocaceae.[14] Both classes are [7.7]paracyclophanes and were named after the species from which they were extracted: cylindrocyclophanes from Cylindrospermum licheniforme and nostocyclophanes from Nostoc linckia.

[n.n]Paracyclophanes

A well-exploited member of the [n.n]paracyclophane family is [2.2]paracyclophane.[15][16] One method for its preparation is a 1,6-Hofmann elimination.[17]

[2.2]Paracyclophane-1,9-diene has been used in ring-opening metathesis polymerization (ROMP) with Grubbs' second-generation catalyst. The product is a poly(p-phenylene vinylene) with alternating cis- and trans-alkene bonds.[18]

The driving force for ring-opening and polymerization is strain relief. The reaction is believed to be a living polymerization due to the lack of competing reactions.

Because its two benzene rings are in close proximity, this cyclophane type also serves as a test bed for photochemical dimerization reactions, as illustrated by one reported example.[19]

The product formed has an octahedrane skeleton. When the amine group is replaced by a methylene group, no reaction takes place. The dimerization requires through-bond overlap between the aromatic π electrons and the σ electrons of the C–N bond in the reactant's LUMO.

Cycloparaphenylenes

[n]Cycloparaphenylenes ([n]CPPs) consist of cyclic all-para-linked phenylene groups.[20] This compound class is of some interest as a potential building block for nanotubes. Members have been reported with 18, 12, 10, 9, 8, 7, 6 and 5 phenylenes. These molecules are unusual in that they contain no aliphatic linker that places strain on the aromatic unit. Instead, the entire molecule is a strained aromatic unit.

Phanes

Generalization of cyclophanes led to the concept of phanes in the IUPAC nomenclature.

For example, the systematic phane name of [14]metacyclophane is 1(1,3)-benzenacyclopentadecaphane, and that of [2.2']paracyclophane (or [2.2]paracyclophane) is 1,4(1,4)-dibenzenacyclohexaphane.
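The two names above follow a simple counting rule. Each benzene ring is collapsed into a single "superatom", so the simplified ring has one member per arene plus one per bridge carbon. The hypothetical helpers below reproduce these two names under that rule.

They cover only two simple families: unsubstituted [n]metacyclophanes and [m.n]paracyclophanes. The table of numerical prefixes is limited, and the helpers do not implement the full IUPAC phane rules.

```python
# Numerical prefixes for ring sizes 5-20 (limited table; larger rings are not handled)
PREFIXES = {
    5: "penta", 6: "hexa", 7: "hepta", 8: "octa", 9: "nona", 10: "deca",
    11: "undeca", 12: "dodeca", 13: "trideca", 14: "tetradeca", 15: "pentadeca",
    16: "hexadeca", 17: "heptadeca", 18: "octadeca", 19: "nonadeca", 20: "icosa",
}


def metacyclophane_to_phane(n: int) -> str:
    """[n]metacyclophane: 1 benzene superatom + n bridge carbons -> (n + 1)-membered ring."""
    ring_size = n + 1
    return f"1(1,3)-benzenacyclo{PREFIXES[ring_size]}phane"


def paracyclophane_to_phane(m: int, n: int) -> str:
    """[m.n]paracyclophane: 2 benzene superatoms + (m + n) bridge carbons.

    The first benzene sits at position 1; the second follows the shorter
    bridge, at position m + 2, to keep locants low.
    """
    m, n = sorted((m, n))
    ring_size = m + n + 2
    return f"1,{m + 2}(1,4)-dibenzenacyclo{PREFIXES[ring_size]}phane"


print(metacyclophane_to_phane(14))    # 1(1,3)-benzenacyclopentadecaphane
print(paracyclophane_to_phane(2, 2))  # 1,4(1,4)-dibenzenacyclohexaphane
```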

https://en.wikipedia.org/wiki/Cyclophane

An aromatic ring current is an effect observed in aromatic molecules such as benzene and naphthalene. If a magnetic field is directed perpendicular to the plane of the aromatic system, a ring current is induced in the delocalized π electrons of the aromatic ring.[1] This is a direct consequence of Ampère's law; since the electrons involved are free to circulate, rather than being localized in bonds as they would be in most non-aromatic molecules, they respond much more strongly to the magnetic field.

The ring current creates its own magnetic field. Outside the ring, this field is in the same direction as the externally applied magnetic field; inside the ring, the field counteracts the externally applied field. As a result, the net magnetic field outside the ring is greater than the externally applied field alone, and is less inside the ring.

Aromatic ring currents are relevant to NMR spectroscopy, as they dramatically influence the chemical shifts of 1H nuclei in aromatic molecules.[2] The effect helps distinguish these nuclear environments and is therefore of great use in molecular structure determination. In benzene, the ring protons experience deshielding because the induced magnetic field has the same direction outside the ring as the external field. Their chemical shift is 7.3 ppm, compared to 5.6 ppm for the vinylic proton in cyclohexene. In contrast, any proton inside the aromatic ring experiences shielding because the two fields point in opposite directions. This effect can be observed in cyclooctadecanonaene ([18]annulene), whose 6 inner protons appear at −3 ppm.

The situation is reversed in antiaromatic compounds. In the dianion of [18]annulene the inner protons are strongly deshielded at 20.8 ppm and 29.5 ppm with the outer protons significantly shielded (with respect to the reference) at −1.1 ppm. Hence a diamagnetic ring current or diatropic ring current is associated with aromaticity whereas a paratropic ring current signals antiaromaticity.

A similar effect is observed in three-dimensional fullerenes; in this case it is called a sphere current.[3]

https://en.wikipedia.org/wiki/Aromatic_ring_current

Proton nuclear magnetic resonance (proton NMR, hydrogen-1 NMR, or 1H NMR) is the application of nuclear magnetic resonance in NMR spectroscopy with respect to hydrogen-1 nuclei within the molecules of a substance, in order to determine the structure of its molecules.[1] In samples where natural hydrogen (H) is used, practically all the hydrogen consists of the isotope 1H (hydrogen-1; i.e. having a proton for a nucleus).

Simple NMR spectra are recorded in solution, and solvent protons must not be allowed to interfere. Deuterated (deuterium = 2H, often symbolized as D) solvents made especially for NMR are preferred, e.g. deuterated water, D2O, deuterated acetone, (CD3)2CO, deuterated methanol, CD3OD, deuterated dimethyl sulfoxide, (CD3)2SO, and deuterated chloroform, CDCl3. However, a solvent without hydrogen, such as carbon tetrachloride, CCl4 or carbon disulfide, CS2, may also be used.

Historically, deuterated solvents were supplied with a small amount (typically 0.1%) of tetramethylsilane (TMS) as an internal standard for calibrating the chemical shifts of each analyte proton. TMS is a tetrahedral molecule in which all protons are chemically equivalent. It therefore gives a single signal, which is used to define a chemical shift of 0 ppm.[2] It is also volatile, which makes sample recovery easy. Modern spectrometers can reference spectra to the residual protons in the solvent (e.g. the CHCl3 present at about 0.01% in 99.99% CDCl3). Deuterated solvents are now commonly supplied without TMS.
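A chemical shift is the signal's frequency offset from the reference, divided by the spectrometer frequency: δ = (ν − ν_ref)/ν₀ × 10⁶. Because Hz divided by MHz is already parts per million, the conversion is a single division.

The minimal sketch below shows referencing both to TMS and to a residual solvent peak. The function names are hypothetical. The 7.26 ppm value for residual CHCl3 in CDCl3 is the commonly used reference value, taken here as an assumption.

```python
def shift_ppm(offset_from_tms_hz: float, spectrometer_mhz: float) -> float:
    """delta (ppm) = (nu - nu_TMS) / nu_0 * 1e6; Hz divided by MHz is already ppm."""
    return offset_from_tms_hz / spectrometer_mhz


def shift_from_residual_solvent(signal_hz: float, solvent_hz: float,
                                solvent_ppm: float, spectrometer_mhz: float) -> float:
    """Reference to a residual solvent peak of known shift instead of TMS."""
    return solvent_ppm + (signal_hz - solvent_hz) / spectrometer_mhz


# A signal 2180 Hz downfield of TMS on a 300 MHz instrument
print(round(shift_ppm(2180.0, 300.0), 2))  # 7.27

# 400 MHz: residual CHCl3 (assumed 7.26 ppm) observed at 2904 Hz, analyte at 1304 Hz
print(round(shift_from_residual_solvent(1304.0, 2904.0, 7.26, 400.0), 2))  # 3.26
```

Expressing shifts in ppm is what makes spectra comparable across instruments. The same proton has the same δ at any field strength, while its offset in Hz scales with the spectrometer frequency.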

Deuterated solvents permit the use of a deuterium frequency-field lock (also known as deuterium lock or field lock) to offset the natural drift of the NMR magnet's field. To provide the lock, the spectrometer constantly monitors the resonance frequency of the solvent's deuterium signal and adjusts the magnetic field to keep that frequency constant.[3] The deuterium signal may also be used to define 0 ppm accurately, since the difference between the resonant frequency of the lock solvent and 0 ppm (TMS) is well known.

Proton NMR spectra of most organic compounds are characterized by chemical shifts in the range +14 to -4 ppm and by spin-spin coupling between protons. The integrated area of each signal is proportional to the number of protons that give rise to it.

Simple molecules have simple spectra. The spectrum of ethyl chloride consists of a triplet at 1.5 ppm and a quartet at 3.5 ppm in a 3:2 ratio. The spectrum of benzene consists of a single peak at 7.2 ppm due to the diamagnetic ring current.
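The ethyl chloride pattern follows the first-order n + 1 rule. Protons coupled to n equivalent neighbors split into n + 1 lines whose relative intensities are binomial coefficients, while the integrals count the protons in each group. The short sketch below assumes first-order coupling to the vicinal protons only; the names are illustrative.

```python
from math import comb

MULTIPLICITY = {1: "singlet", 2: "doublet", 3: "triplet", 4: "quartet", 5: "quintet"}


def multiplet(n_neighbors: int) -> list[int]:
    """n equivalent neighbors -> n + 1 lines with binomial (Pascal's triangle) intensities."""
    return [comb(n_neighbors, k) for k in range(n_neighbors + 1)]


# Ethyl chloride, CH3-CH2-Cl: (group, protons in group, vicinal neighbors)
groups = [("CH3", 3, 2), ("CH2", 2, 3)]

for name, n_protons, n_neighbors in groups:
    lines = multiplet(n_neighbors)
    print(f"{name}: {MULTIPLICITY[len(lines)]} {lines}, integral {n_protons}")
# CH3: triplet [1, 2, 1], integral 3
# CH2: quartet [1, 3, 3, 1], integral 2
```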

Together with carbon-13 NMR, proton NMR is a powerful tool for molecular structure characterization.

https://en.wikipedia.org/wiki/Proton_nuclear_magnetic_resonance

Pyrones or pyranones are a class of heterocyclic chemical compounds. They contain an unsaturated six-membered ring containing one oxygen atom and a ketone functional group.[1] There are two isomers, denoted 2-pyrone and 4-pyrone. The 2-pyrone (or α-pyrone) structure is found in nature as part of the coumarin ring system. 4-Pyrone (or γ-pyrone) is found in some natural chemical compounds such as chromone, maltol and kojic acid.

See also

- Furanone, which has one fewer carbon atom in the ring.

A nanostructure is a structure of intermediate size between microscopic and molecular structures. Nanostructural detail is microstructure at nanoscale.

In describing nanostructures, it is necessary to distinguish how many of an object's dimensions lie on the nanoscale. Nanotextured surfaces have one dimension on the nanoscale, i.e., only the thickness of the surface of an object is between 0.1 and 100 nm. Nanotubes have two dimensions on the nanoscale, i.e., the diameter of the tube is between 0.1 and 100 nm; its length can be far more. Finally, spherical nanoparticles have three dimensions on the nanoscale, i.e., the particle is between 0.1 and 100 nm in each spatial dimension. The terms nanoparticles and ultrafine particles (UFP) are often used synonymously, although UFP can reach into the micrometre range. The term nanostructure is often used when referring to magnetic technology.
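The classification above amounts to counting how many of an object's extents fall within the 0.1 to 100 nm window. The minimal sketch below uses hypothetical example dimensions. It covers only the three cases named in the text and ignores other criteria, such as shape, that a real classification would consider.

```python
NANO_RANGE_NM = (0.1, 100.0)

LABELS = {
    1: "nanotextured surface (thickness only)",
    2: "nanotube-like (diameter only)",
    3: "nanoparticle (all three dimensions)",
}


def nanoscale_dimension_count(extents_nm: tuple[float, float, float]) -> int:
    """Number of spatial extents lying within the nanoscale range."""
    lo, hi = NANO_RANGE_NM
    return sum(lo <= d <= hi for d in extents_nm)


# Hypothetical objects: (x, y, z) extents in nm
examples = {
    "5 nm coating on a 1 mm x 1 mm surface": (1e6, 1e6, 5.0),
    "2 nm wide, 5 um long tube": (2.0, 2.0, 5000.0),
    "20 nm sphere": (20.0, 20.0, 20.0),
}

for name, extents in examples.items():
    count = nanoscale_dimension_count(extents)
    print(f"{name}: {count} -> {LABELS.get(count, 'not nanostructured')}")
```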

Nanoscale structure in biology is often called ultrastructure.

Properties of nanoscale objects and ensembles of these objects are widely studied in physics.[2]

List of nanostructures

- Gradient multilayer nanofilm (GML nanofilm)

- Icosahedral twins

- Nanocages

- Magnetic nanochains

- Nanocomposite

- Nanofabrics

- Nanofiber

- Nanoflower

- Nanofoam

- Nanohole

- Nanomesh

- Box-shaped

- Nanoparticle

- Nanopillar

- Nanopin film

- Nanoplatelet

- Nanoribbon

- Nanoring

- Nanorod

- Nanosheet

- Nanoshell

- Nanotip

- Nanowire

- Nanostructured film

- Self-assembled

- Quantum dot

- Quantum heterostructure

- Sculptured thin film

- Nano WaterCube

https://en.wikipedia.org/wiki/Nanostructure

Phanes are abstractions of highly complex organic molecules, introduced to simplify the naming of such molecules.

Systematic nomenclature of organic chemistry builds the name of an organic compound from the names of its component parts, while also describing their relative positions within the structure. The naming rules are summarised by IUPAC.[1][2][3]

The name cyclophane covers only a limited set of structures: benzene rings interconnected by individual atoms or chains. 'Phane', by contrast, is a class name that also includes other ring types, such as heterocyclic rings. The various cyclophanes are therefore members of the general class of phanes, keeping in mind that the cyclic structures in phanes can be far more diverse.

References

- [1] International Union of Pure and Applied Chemistry. Recommendations on Organic & Biochemical Nomenclature, Symbols & Terminology etc. http://www.chem.qmul.ac.uk/iupac/ (World Wide Web material prepared by G. P. Moss, Department of Chemistry, Queen Mary University of London, Mile End Road, London, E1 4NS, UK; g.p.moss@qmul.ac.uk).

- [2] International Union of Pure and Applied Chemistry, Organic Chemistry Division, Commission on Nomenclature of Organic Chemistry. Phane Nomenclature Part I: Phane Parent Names (IUPAC Recommendations 1998). Prepared for publication by W. H. Powell, 1436 Havencrest Ct, Columbus, OH 43220-3841, USA. http://www.chem.qmul.ac.uk/iupac/phane/ (archived 2006-07-03 at the Wayback Machine).

- [3] Phane Nomenclature Part II: Modification of the Degree of Hydrogenation and Substitution Derivatives of Phane Parent Hydrides.

https://en.wikipedia.org/wiki/Phanes_(organic_chemistry)

https://en.wikipedia.org/wiki/Charles_Blagden

https://en.wikipedia.org/wiki/Antoine_Lavoisier

https://en.wikipedia.org/wiki/Category:Independent_scientists

https://en.wikipedia.org/wiki/Category:Executed_scientists

https://en.wikipedia.org/wiki/Rose_Center_for_Earth_and_Space

https://en.wikipedia.org/wiki/Nazis_at_the_Center_of_the_Earth

https://en.wikipedia.org/wiki/Earth%27s_inner_core

https://en.wikipedia.org/wiki/Geographical_centre_of_Earth

https://en.wikipedia.org/wiki/Equirectangular_projection

https://en.wikipedia.org/wiki/Earth

Spontaneous; Center; Symmetry

In geometry, to translate a geometric figure is to move it from one place to another without rotating it. A translation "slides" a thing by $\mathbf{a}$: $T_{\mathbf{a}}(\mathbf{p}) = \mathbf{p} + \mathbf{a}$.

In physics and mathematics, continuous translational symmetry is the invariance of a system of equations under any translation. Discrete translational symmetry is invariance under a discrete set of translations only.

Analogously, an operator $A$ on functions is said to be translationally invariant with respect to a translation operator $T_\delta$ if the result after applying $A$ doesn't change when the argument function is translated. More precisely, it must hold that

$$\forall \delta:\quad A f = A(T_\delta f).$$
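The condition that A f = A(T_δ f) for every shift δ can be checked numerically on a periodic grid, where a translation becomes a circular shift of the samples. In this sketch, which uses hypothetical names, the operator "sum over all points" is translationally invariant. The operator "value at the origin" is not, because it singles out one point in space.

```python
import math
from typing import Callable, Sequence


def translate(f: Sequence[float], delta: int) -> tuple[float, ...]:
    """Circular translation: (T_delta f)[i] = f[i - delta], indices taken mod N."""
    n = len(f)
    return tuple(f[(i - delta) % n] for i in range(n))


def is_translation_invariant(A: Callable[[Sequence[float]], float], f: Sequence[float]) -> bool:
    """True if A(T_delta f) equals A(f) for every shift delta of the grid."""
    reference = A(f)
    return all(math.isclose(A(translate(f, d)), reference) for d in range(len(f)))


def total(f: Sequence[float]) -> float:
    return sum(f)   # treats all points alike


def value_at_origin(f: Sequence[float]) -> float:
    return f[0]     # picks out a preferred point


f = (0.0, 1.0, 4.0, 9.0, 16.0)
print(is_translation_invariant(total, f))            # True
print(is_translation_invariant(value_at_origin, f))  # False
```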

Laws of physics are translationally invariant under a spatial translation if they do not distinguish different points in space. According to Noether's theorem, space translational symmetry of a physical system is equivalent to the momentum conservation law.
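The following small numerical illustration of the Noether correspondence rests on stated assumptions. Two masses on a line interact through a spring whose energy depends only on their separation, so translating both by the same amount changes nothing. Their total momentum therefore stays constant up to rounding.

Adding a hypothetical external spring that ties one mass to the origin breaks the translational symmetry, and momentum is no longer conserved. The masses, spring constants and symplectic Euler integrator are illustrative choices.

```python
def max_momentum_drift(external_k: float = 0.0, steps: int = 10_000, dt: float = 1e-3) -> float:
    """Largest |p(t) - p(0)| for two masses on a line.

    Pair force: spring k between the masses (depends only on x1 - x2).
    Optional external force: spring external_k pulling mass 1 toward x = 0,
    which singles out a point in space and breaks translational symmetry.
    """
    m1, m2, k = 1.0, 2.0, 3.0
    x1, x2, v1, v2 = 0.0, 1.5, 0.4, -0.1
    p0 = m1 * v1 + m2 * v2
    drift = 0.0
    for _ in range(steps):
        f_pair = -k * (x1 - x2)           # force on mass 1; mass 2 feels -f_pair
        f_ext = -external_k * x1
        v1 += (f_pair + f_ext) / m1 * dt  # symplectic Euler: update velocities first...
        v2 += -f_pair / m2 * dt
        x1 += v1 * dt                     # ...then positions with the new velocities
        x2 += v2 * dt
        drift = max(drift, abs(m1 * v1 + m2 * v2 - p0))
    return drift


print(max_momentum_drift())                # tiny: rounding error only
print(max_momentum_drift(external_k=5.0))  # clearly nonzero: momentum changes
```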

Translational symmetry of an object means that a particular translation does not change the object. For a given object, the translations for which this applies form a group, the symmetry group of the object, or, if the object has more kinds of symmetry, a subgroup of the symmetry group.
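The sketch below, which uses hypothetical names, illustrates both ideas. First, it applies T_a(p) = p + a to the vertices of a figure. Second, it finds the translational symmetries of an infinite one-dimensional pattern that repeats "ABC". Only shifts by multiples of the period leave the pattern unchanged, and those shifts form a group: the set is closed under addition and contains 0 and inverses. The pattern is checked over a finite window, which suffices here because it is periodic.

```python
def translate(point: tuple[float, ...], a: tuple[float, ...]) -> tuple[float, ...]:
    """T_a(p) = p + a, componentwise."""
    return tuple(p_i + a_i for p_i, a_i in zip(point, a))


def pattern(n: int) -> str:
    """Hypothetical infinite frieze ...ABCABC... indexed by integer position n."""
    return "ABC"[n % 3]


def is_symmetry(shift: int, window: range = range(-30, 30)) -> bool:
    """Does translating by `shift` leave the pattern unchanged on the window?"""
    return all(pattern(n + shift) == pattern(n) for n in window)


triangle = [(0.0, 0.0), (1.0, 0.0), (0.0, 1.0)]
print([translate(p, (2.0, 3.0)) for p in triangle])  # [(2.0, 3.0), (3.0, 3.0), (2.0, 4.0)]

symmetries = [s for s in range(-9, 10) if is_symmetry(s)]
print(symmetries)  # [-9, -6, -3, 0, 3, 6, 9]

# Group property: composing two symmetry translations gives another symmetry.
assert all(is_symmetry(s + t) for s in symmetries for t in symmetries)
```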

https://en.wikipedia.org/wiki/Translational_symmetry

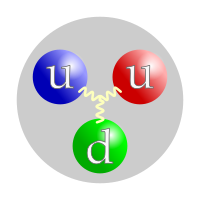

Many powerful theories in physics are described by Lagrangians that are invariant under some symmetry transformation groups.

Gauge theories are important as the successful field theories explaining the dynamics of elementary particles. Quantum electrodynamics is an abelian gauge theory with the symmetry group U(1) and has one gauge field, the electromagnetic four-potential, with the photon being the gauge boson. The Standard Model is a non-abelian gauge theory with the symmetry group U(1) × SU(2) × SU(3) and has a total of twelve gauge bosons: the photon, three weak bosons and eight gluons.
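The boson counts quoted above follow from the dimension of each gauge group, which is its number of generators: 1 for U(1) and n² − 1 for SU(n). For the Standard Model this gives 1 + 3 + 8 = 12. The minimal sketch below uses a hypothetical helper that only parses group names of the form "U(1)" and "SU(n)". The four electroweak generators of U(1) × SU(2) mix into the photon, W⁺, W⁻ and Z, so the total count is unchanged.

```python
def group_dimension(group: str) -> int:
    """Number of generators (gauge bosons) of U(1) or SU(n)."""
    if group == "U(1)":
        return 1
    if group.startswith("SU(") and group.endswith(")"):
        n = int(group[3:-1])
        return n * n - 1
    raise ValueError(f"unsupported group name: {group!r}")


def gauge_boson_count(groups: list[str]) -> int:
    """A product group has one gauge boson per generator of each factor."""
    return sum(group_dimension(g) for g in groups)


print(gauge_boson_count(["U(1)"]))                    # 1: QED's photon
print(gauge_boson_count(["U(1)", "SU(2)", "SU(3)"]))  # 12 = 1 + 3 + 8
```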

Gauge theories are also important in explaining gravitation in the theory of general relativity. Its case is somewhat unusual in that the gauge field is a tensor, the Lanczos tensor. Theories of quantum gravity, beginning with gauge gravitation theory, also postulate the existence of a gauge boson known as the graviton. Gauge symmetries can be viewed as analogues of the principle of general covariance of general relativity in which the coordinate system can be chosen freely under arbitrary diffeomorphisms of spacetime. Both gauge invariance and diffeomorphism invariance reflect a redundancy in the description of the system. An alternative theory of gravitation, gauge theory gravity, replaces the principle of general covariance with a true gauge principle with new gauge fields.

Historically, these ideas were first stated in the context of classical electromagnetism and later in general relativity. However, the modern importance of gauge symmetries appeared first in the relativistic quantum mechanics of electrons, quantum electrodynamics, elaborated on below. Today, gauge theories are useful in condensed matter, nuclear and high-energy physics, among other subfields.

https://en.wikipedia.org/wiki/Gauge_theory

https://en.wikipedia.org/wiki/Mathematical_formulation_of_the_Standard_Model

https://en.wikipedia.org/wiki/Higgs_mechanism

Gluon radiation

rotation symmetry

electroweak in baryon v. electroweak in exotics v. scale

memory time on chip (signal infinite w/ maintenance by human)

gravity graviton pressure material surface range tensor step scale friction air intermo intramo force attraction repulsion channel vacuum space matter void vortex differential gradient

integral net force

slip points topology turbulence (oxygen electron slip point; scale of hydrogen and proton; protonium)

symmetry spontaneous symmetry center spinor

atomic subatomic; baryonic v. dark matter; tension torque torsion axial plane vertical pressuron preon dust particle syst large expanding particle nuclear

voltage pressure non ideal force v mass and charge hydroelectric thermodynamics

levels time supersymmetry charge mass transitivity

supramolecular chemistry nuclear neutron mirror multilayer particle space division multiplexing hyperfine quantum computing ion scale quantum interface eigenstate

skew asymmetry spiral

molecular gyroscope flywheel maglev linear induction motor rotation energy no activation energy with non covalent bond quantum energy nuclear hydrogen trihydrogen cation universe dark matter universe baryonic matter chains hydroelectric nuclear fusion fission transmutation molecular inversion bond rotation energy

phase change water hydrogen microwave matrix glycerine cellulose point particle or particle space division multiplexing cascade change time space quantum ion quantum ion computing oscillation frequency measure hydrogen emission spectra

Bunsen Lippmann (physics, 1908)

https://www.nobelprize.org/prizes/physics/1908/summary/

In a supersymmetric theory the equations for force and the equations for matter are identical. In theoretical and mathematical physics, any theory with this property has the principle of supersymmetry (SUSY). Dozens of supersymmetric theories exist.[1] Supersymmetry is a spacetime symmetry between two basic classes of particles: bosons, which have an integer-valued spin and follow Bose–Einstein statistics, and fermions, which have a half-integer-valued spin and follow Fermi–Dirac statistics.[2][3] In supersymmetry, each particle from one class would have an associated particle in the other, known as its superpartner, the spin of which differs by a half-integer. For example, if the electron exists in a supersymmetric theory, then there would be a particle called a "selectron" (superpartner electron), a bosonic partner of the electron. In the simplest supersymmetry theories, with perfectly "unbroken" supersymmetry, each pair of superpartners would share the same mass and internal quantum numbers besides spin. More complex supersymmetry theories have a spontaneously broken symmetry, allowing superpartners to differ in mass.[4][5][6]

Supersymmetry has various applications to different areas of physics, such as quantum mechanics, statistical mechanics, quantum field theory, condensed matter physics, nuclear physics, optics, stochastic dynamics, particle physics, astrophysics, quantum gravity, string theory, and cosmology. Supersymmetry has also been applied outside of physics, such as in finance. In particle physics, a supersymmetric extension of the Standard Model is a possible candidate for physics beyond the Standard Model, and in cosmology, supersymmetry could explain the issue of cosmological inflation.

In quantum field theory, supersymmetry is motivated by the solutions it offers to several theoretical problems, by its generally desirable mathematical properties, and by its ensuring sensible behavior at high energies. Supersymmetric quantum field theory is often much easier to analyze, as many more problems become mathematically tractable. When supersymmetry is imposed as a local symmetry, Einstein's theory of general relativity is included automatically, and the result is said to be a theory of supergravity. Another theoretically appealing property of supersymmetry is that it offers the only "loophole" to the Coleman–Mandula theorem, which prohibits spacetime and internal symmetries from being combined in any nontrivial way, for quantum field theories with very general assumptions. The Haag–Łopuszański–Sohnius theorem demonstrates that supersymmetry is the only way spacetime and internal symmetries can be combined consistently.[7]

https://en.wikipedia.org/wiki/Supersymmetry

Supersymmetric quantum mechanics

Main article: Supersymmetric quantum mechanics

Supersymmetric quantum mechanics adds the SUSY superalgebra to quantum mechanics as opposed to quantum field theory. Supersymmetric quantum mechanics often becomes relevant when studying the dynamics of supersymmetric solitons, and due to the simplified nature of having fields which are only functions of time (rather than space-time), a great deal of progress has been made in this subject and it is now studied in its own right.

SUSY quantum mechanics involves pairs of Hamiltonians that share a particular mathematical relationship; these are called partner Hamiltonians. (The potential energy terms which occur in the Hamiltonians are then known as partner potentials.) An introductory theorem shows that for every eigenstate of one Hamiltonian, its partner Hamiltonian has a corresponding eigenstate with the same energy (except possibly for zero-energy eigenstates). This fact can be exploited to deduce many properties of the eigenstate spectrum. It is analogous to the original description of SUSY, which referred to bosons and fermions. We can imagine a "bosonic Hamiltonian", whose eigenstates are the various bosons of our theory. The SUSY partner of this Hamiltonian would be "fermionic", and its eigenstates would be the theory's fermions. Each boson would have a fermionic partner of equal energy.
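The partner-spectrum theorem can be checked numerically. This sketch is our own illustration and does not come from the source. It assumes units with ħ = 2m = 1, a hypothetical superpotential W(x), and the usual partner pair H1 = −d²/dx² + W² − W′ and H2 = −d²/dx² + W² + W′, discretized by finite differences with hard walls. For W(x) = x (a shifted harmonic oscillator), H1 should give levels near 0, 2, 4, … and H2 levels near 2, 4, 6, …. The two spectra then coincide except for the zero-energy ground state of H1.

```python
import numpy as np

def partner_spectra(W, dW, L=8.0, n=1000, num_levels=5):
    """Lowest eigenvalues of the partner Hamiltonians
    H1 = -d^2/dx^2 + W^2 - W'  and  H2 = -d^2/dx^2 + W^2 + W'
    (units with hbar = 2m = 1), discretized by finite differences on [-L, L]
    with hard walls just outside the grid ends."""
    x = np.linspace(-L, L, n)
    h = x[1] - x[0]
    # Three-point finite-difference approximation of -d^2/dx^2.
    kinetic = (2.0 * np.eye(n) - np.eye(n, k=1) - np.eye(n, k=-1)) / h**2
    w, dw = W(x), dW(x)
    e1 = np.linalg.eigvalsh(kinetic + np.diag(w**2 - dw))[:num_levels]
    e2 = np.linalg.eigvalsh(kinetic + np.diag(w**2 + dw))[:num_levels]
    return e1, e2

# Hypothetical superpotential W(x) = x, so W'(x) = 1.
e1, e2 = partner_spectra(lambda x: x, lambda x: np.ones_like(x))
print(np.round(e1, 3))  # expect values close to 0, 2, 4, 6, 8
print(np.round(e2, 3))  # expect values close to 2, 4, 6, 8, 10
```

A dense eigensolver keeps the sketch short. For finer grids, a tridiagonal solver would scale better.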

In finance

In 2021, supersymmetric quantum mechanics was applied to option pricing and the analysis of markets in finance,[19] and to financial networks.[20]

Supersymmetry in condensed matter physics

SUSY concepts have provided useful extensions to the WKB approximation. Additionally, SUSY has been applied to disorder-averaged systems, both quantum and non-quantum (through statistical mechanics), the Fokker–Planck equation being an example of a non-quantum theory. The 'supersymmetry' in all these systems arises from the fact that one is modelling one particle, and as such the 'statistics' do not matter. The supersymmetry method provides a mathematically rigorous alternative to the replica trick, which attempts to address the so-called 'problem of the denominator' under disorder averaging; however, the supersymmetry method applies only to non-interacting systems. For more on the applications of supersymmetry in condensed matter physics see Efetov (1997).[21]
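One such extension is the SUSY WKB (SWKB) quantization condition. The source does not spell it out, so it appears here only as an illustration. In units with ħ = 2m = 1 it reads ∫ √(E_n − W(x)²) dx = nπ, integrated over the region where W² < E_n. It is known to be exact for some potentials, including the harmonic oscillator. The sketch below solves the condition by bisection for a hypothetical superpotential W(x) = x. The function names `swkb_integral` and `swkb_level` are our own.

```python
import numpy as np

def swkb_integral(W, E, L=10.0, n=100_000):
    """Midpoint-rule estimate of the SWKB phase integral: the integral of
    sqrt(E - W(x)^2) over the region where W(x)^2 < E (units hbar = 2m = 1).
    The region is picked out by clipping E - W^2 at zero on [-L, L]."""
    edges = np.linspace(-L, L, n + 1)
    mid = 0.5 * (edges[:-1] + edges[1:])
    h = edges[1] - edges[0]
    return h * np.sum(np.sqrt(np.clip(E - W(mid) ** 2, 0.0, None)))

def swkb_level(W, n, e_max=100.0, tol=1e-8):
    """Solve swkb_integral(W, E) = n * pi for E by bisection; the integral
    grows monotonically with E, so the root is unique."""
    lo, hi = 0.0, e_max
    while hi - lo > tol:
        mid = 0.5 * (lo + hi)
        if swkb_integral(W, mid) < n * np.pi:
            lo = mid
        else:
            hi = mid
    return 0.5 * (lo + hi)

# Hypothetical superpotential W(x) = x (harmonic oscillator).
levels = [swkb_level(lambda x: x, n) for n in range(5)]
print(np.round(levels, 4))  # expect values close to 0, 2, 4, 6, 8
```

For this W the integral equals πE/2, so the condition gives E_n = 2n. That is the exact spectrum of H1 = −d²/dx² + x² − 1.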

In 2021, a group of researchers showed that, in theory, N = (0,1) SUSY could be realised at the edge of a Moore-Read quantum Hall state.[22] However, no experiment has yet realised it at the edge of a Moore-Read state.

Supersymmetry in optics

In 2013, integrated optics was found[23] to provide a fertile ground on which certain ramifications of SUSY can be explored in readily accessible laboratory settings. Making use of the analogous mathematical structure of the quantum-mechanical Schrödinger equation and the wave equation governing the evolution of light in one-dimensional settings, one may interpret the refractive index distribution of a structure as a potential landscape in which optical wave packets propagate. In this manner, a new class of functional optical structures with possible applications in phase matching, mode conversion[24] and space-division multiplexing becomes possible. SUSY transformations have also been proposed as a way to address inverse scattering problems in optics and as a form of one-dimensional transformation optics.[25]
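The analogy can be made concrete for the one-dimensional case. This sketch uses the scalar wave equation for TE modes of a planar waveguide, ψ″ + (k0²n(x)² − β²)ψ = 0. Rearranged as −ψ″ + V(x)ψ = Eψ, with V(x) = −k0²n(x)² and E = −β², the index profile acts as a potential and guided modes act as bound states. The step-index slab and its numbers are hypothetical. The sketch only illustrates this mapping and does not construct a SUSY partner structure.

```python
import numpy as np

def guided_modes(n_of_x, wavelength, half_width=15.0, h=0.02):
    """Effective indices of the guided TE modes of a 1D index profile n(x).

    The scalar equation psi'' + (k0^2 n(x)^2 - beta^2) psi = 0 is treated as
    -psi'' + V(x) psi = E psi with V(x) = -k0^2 n(x)^2 and E = -beta^2.
    Guided modes are the 'bound states' with E below the cladding level
    -k0^2 n_clad^2. Hard walls at +/- half_width truncate the cladding.
    """
    k0 = 2 * np.pi / wavelength
    x = np.arange(-half_width, half_width + h / 2, h)
    n = n_of_x(x)
    V = -(k0 * n) ** 2
    size = x.size
    # Three-point finite-difference -d^2/dx^2 plus the 'potential' V(x).
    H = (np.diag(2.0 / h**2 + V)
         - np.eye(size, k=1) / h**2
         - np.eye(size, k=-1) / h**2)
    E = np.linalg.eigvalsh(H)            # ascending, i.e. largest beta first
    n_clad = min(n[0], n[-1])
    beta = np.sqrt(-E[E < -(k0 * n_clad) ** 2])
    return beta / k0                      # effective indices, highest first

# Hypothetical step-index slab: core index 1.50, width 3, cladding index 1.45
# (lengths in the same units as the wavelength).
slab = lambda x: np.where(np.abs(x) <= 1.5, 1.50, 1.45)
n_eff = guided_modes(slab, wavelength=1.0)
print(len(n_eff), np.round(n_eff, 4))
```

For this slab, the standard mode count ⌈2V/π⌉, with V = k0(d/2)√(n_core² − n_clad²) ≈ 3.62, predicts three guided TE modes. Every effective index lies between the cladding and core indices.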

Supersymmetry in dynamical systems

Main article: Supersymmetric theory of stochastic dynamics

All stochastic (partial) differential equations, which model all types of continuous-time dynamical systems, possess topological supersymmetry.[26][27] In the operator representation of stochastic evolution, the topological supersymmetry is the exterior derivative, which commutes with the stochastic evolution operator. That operator is defined as the stochastically averaged pullback induced on differential forms by SDE-defined diffeomorphisms of the phase space. The topological sector of the resulting supersymmetric theory of stochastic dynamics can be recognized as a Witten-type topological field theory.
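The commuting property can be illustrated in its simplest case: 0-forms in one dimension. There, the pullback of a function f by a map φ is f∘φ, and the pullback of the 1-form df = f′dx is f′(φ(x))φ′(x)dx. This is a hypothetical illustration, not the construction used in the cited works. Here φ is one Euler–Maruyama step of an SDE with an assumed drift F(x) = x − x³, averaged over a fixed set of noise samples. The check compares applying d after averaging with averaging after applying d.

```python
import numpy as np

rng = np.random.default_rng(0)
xi = rng.standard_normal(10_000)      # fixed noise samples, shared by both sides

def phi(x, dt=0.01, sigma=0.5):
    """One Euler-Maruyama step x -> x + F(x) dt + sigma sqrt(dt) xi with a
    hypothetical drift F(x) = x - x^3; one output per noise sample."""
    return x + (x - x**3) * dt + sigma * np.sqrt(dt) * xi

def dphi(x, dt=0.01):
    """Derivative of phi with respect to x (the same for every noise sample)."""
    return 1.0 + (1.0 - 3.0 * x**2) * dt

f, df = np.sin, np.cos                # a 0-form f and the coefficient of df = f'(x) dx

def avg_pullback_f(x):
    # Averaged pullback of the 0-form: E[f(phi(x))]
    return np.mean(f(phi(x)))

def avg_pullback_df(x):
    # Averaged pullback of the 1-form df: E[f'(phi(x)) phi'(x)], coefficient of dx
    return np.mean(df(phi(x)) * dphi(x))

x0, eps = 0.3, 1e-5
d_after_avg = (avg_pullback_f(x0 + eps) - avg_pullback_f(x0 - eps)) / (2 * eps)
avg_after_d = avg_pullback_df(x0)
print(d_after_avg, avg_after_d)        # equal up to finite-difference error
```

For a single noise sample the agreement is just the chain rule. Because d is linear, it survives the averaging over noise, which is the property the paragraph attributes to the stochastic evolution operator.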

The meaning of the topological supersymmetry in dynamical systems is the preservation of phase space continuity: infinitely close points will remain close during continuous time evolution even in the presence of noise. When the topological supersymmetry is broken spontaneously, this property is violated in the limit of infinitely long temporal evolution, and the model can be said to exhibit (the stochastic generalization of) the butterfly effect. From a more general perspective, spontaneous breakdown of the topological supersymmetry is the theoretical essence of the ubiquitous dynamical phenomenon variously known as chaos, turbulence, self-organized criticality, etc. The Goldstone theorem explains the associated emergence of long-range dynamical behavior that manifests itself as 1/f noise, the butterfly effect, and the scale-free statistics of sudden (instantonic) processes such as earthquakes, neuroavalanches, and solar flares, known as Zipf's law and the Richter scale.

https://en.wikipedia.org/wiki/Supersymmetry

https://en.wikipedia.org/wiki/Refractive_index

https://en.wikipedia.org/wiki/Topology

https://en.wikipedia.org/wiki/Symmetry

https://en.wikipedia.org/wiki/Equilateral_Triangle

https://en.wikipedia.org/wiki/Perception_Sensation_Cognition

https://en.wikipedia.org/wiki/Goldstone_boson

https://en.wikipedia.org/wiki/Space-division_multiple_access

https://en.wikipedia.org/wiki/Richter_magnitude_scale

https://en.wikipedia.org/wiki/Zipf%27s_law

https://en.wikipedia.org/wiki/Butterfly_effect

https://en.wikipedia.org/wiki/Topological_quantum_field_theory

https://en.wikipedia.org/wiki/Supersymmetric_theory_of_stochastic_dynamics

https://en.wikipedia.org/wiki/Quantum_Hall_effect

https://en.wikipedia.org/wiki/Quantum_state#Pure_states

https://en.wikipedia.org/wiki/WKB_approximation

https://en.wikipedia.org/wiki/Lambda-CDM_model

https://en.wikipedia.org/wiki/Hyperfine_structure

https://en.wikipedia.org/wiki/Electron_paramagnetic_resonance#Hyperfine_coupling

https://en.wikipedia.org/wiki/Hyperfinite_type_II_factor

https://en.wikipedia.org/wiki/Hypertext_Transfer_Protocol

Friday, September 17, 2021

09-17-2021-0229 - Space-division multiple access (SDMA)

Thermodynamics

Supramolecular chemistry deals with subtle interactions, and consequently control over the processes involved can require great precision. In particular, non-covalent bonds have low energies and often no activation energy for formation. As the Arrhenius equation shows, this means that, unlike in covalent bond-forming chemistry, the rate of bond formation is not increased at higher temperatures. In fact, chemical equilibrium equations show that the low bond energy results in a shift towards the breaking of supramolecular complexes at higher temperatures.
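The two temperature effects can be compared side by side. The rate effect follows the Arrhenius equation, k = A·exp(−Ea/RT). The equilibrium effect follows K = exp(−ΔG°/RT), with ΔG° = ΔH° − TΔS°. Every number in this sketch (pre-exponential factor, barrier, ΔH°, ΔS°) is hypothetical. With Ea = 0, the rate constant does not change with temperature. For an exothermic association (ΔH° < 0), K falls as T rises, shifting the equilibrium toward the free components.

```python
import math

R = 8.314  # gas constant, J/(mol K)

def arrhenius_rate(A, Ea, T):
    """Arrhenius rate constant k = A exp(-Ea / (R T)); Ea in J/mol, T in K."""
    return A * math.exp(-Ea / (R * T))

def binding_constant(dH, dS, T):
    """Association constant K = exp(-(dH - T dS) / (R T)) for forming a complex
    with enthalpy dH (J/mol) and entropy dS (J/(mol K))."""
    return math.exp(-(dH - T * dS) / (R * T))

# Hypothetical numbers, for illustration only.
for T in (280.0, 300.0, 320.0):
    k_cov = arrhenius_rate(1e10, 80e3, T)    # covalent step with an 80 kJ/mol barrier
    k_nc = arrhenius_rate(1e10, 0.0, T)      # barrier-free non-covalent association
    K = binding_constant(-40e3, -80.0, T)    # exothermic, entropically unfavourable binding
    print(f"T={T:.0f} K  k_cov={k_cov:.3e}  k_nc={k_nc:.3e}  K={K:.3e}")
```

In the output, k_cov rises steeply with T, k_nc stays at A, and K decreases.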

However, low temperatures can also be problematic to supramolecular processes. Supramolecular chemistry can require molecules to distort into thermodynamically disfavored conformations (e.g. during the "slipping" synthesis of rotaxanes), and may include some covalent chemistry that goes along with the supramolecular. In addition, the dynamic nature of supramolecular chemistry is utilized in many systems (e.g. molecular mechanics), and cooling the system would slow these processes.

Saturday, August 14, 2021

08-13-2021-2226 - Time Reversal Symmetry

T-symmetry or time reversal symmetry is the theoretical symmetry of physical laws under the transformation of time reversal, T: t ↦ −t.
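A time-reversible law can be illustrated numerically. Integrate Newton's equations forward, flip the velocity (the action of T on a classical state), and integrate forward again with the same law; the system then retraces its path. This sketch assumes a unit-mass pendulum as a hypothetical example. It uses velocity Verlet, an integrator that is itself exactly time-reversible in exact arithmetic, so only floating-point round-off remains.

```python
import math

def velocity_verlet(x, v, force, dt, steps):
    """Integrate dx/dt = v, dv/dt = force(x) (unit mass) with velocity Verlet."""
    a = force(x)
    for _ in range(steps):
        x = x + v * dt + 0.5 * a * dt * dt
        a_new = force(x)
        v = v + 0.5 * (a + a_new) * dt
        a = a_new
    return x, v

pendulum = lambda x: -math.sin(x)   # hypothetical example force

x0, v0 = 1.0, 0.0
x1, v1 = velocity_verlet(x0, v0, pendulum, dt=0.01, steps=1000)
# Time reversal T: keep the position, flip the velocity, run the same law again.
x2, v2 = velocity_verlet(x1, -v1, pendulum, dt=0.01, steps=1000)
print(x2, -v2)   # recovers (x0, v0) up to floating-point round-off
```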

Since the second law of thermodynamics states that entropy increases as time flows toward the future, in general, the macroscopic universe does not show symmetry under time reversal. In other words, time is said to be non-symmetric, or asymmetric, except for special equilibrium states when the second law of thermodynamics predicts the time symmetry to hold. However, quantum noninvasive measurements are predicted to violate time symmetry even in equilibrium,[1] contrary to their classical counterparts, although this has not yet been experimentally confirmed.

Time asymmetries generally are caused by one of three categories:

- intrinsic to the dynamic physical law (e.g., for the weak force)

- due to the initial conditions of the universe (e.g., for the second law of thermodynamics)

- due to measurements (e.g., for the noninvasive measurements)

https://en.wikipedia.org/wiki/Molecular_recognition

Phthalocyanine (H2Pc) is a large, aromatic, macrocyclic, organic compound with the formula (C8H4N2)4H2 and is of theoretical or specialized interest in chemical dyes and photoelectricity.
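The condensed formula (C8H4N2)4H2 expands to C32H18N8. The short parser below is a hypothetical helper, not from the source. It performs this expansion for simple formulas and sums standard atomic weights. It handles only element symbols, integer counts, and (nested) parentheses, not charges or hydrates.

```python
import re
from collections import Counter

# Standard atomic weights (g/mol) for the elements needed here.
ATOMIC_MASS = {"C": 12.011, "H": 1.008, "N": 14.007}

def parse_formula(formula):
    """Expand a condensed formula such as '(C8H4N2)4H2' into element counts.
    Handles element symbols, integer counts and (nested) parentheses only."""
    tokens = re.findall(r"[A-Z][a-z]?|\d+|[()]", formula)
    stack = [Counter()]
    i = 0
    while i < len(tokens):
        tok = tokens[i]
        i += 1
        if tok == "(":
            stack.append(Counter())
            continue
        # An element symbol or a closing parenthesis may carry a count.
        count = 1
        if i < len(tokens) and tokens[i].isdigit():
            count = int(tokens[i])
            i += 1
        if tok == ")":
            group = stack.pop()
            for element, n in group.items():
                stack[-1][element] += n * count
        else:
            stack[-1][tok] += count
    return stack[0]

def molar_mass(counts):
    """Sum of atomic weights times element counts, in g/mol."""
    return sum(ATOMIC_MASS[element] * n for element, n in counts.items())

h2pc = parse_formula("(C8H4N2)4H2")
print(dict(h2pc))                   # {'C': 32, 'H': 18, 'N': 8}
print(round(molar_mass(h2pc), 2))   # 514.55
```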

It is composed of four isoindole units[a] linked by a ring of nitrogen atoms. (C8H4N2)4H2 = H2Pc has a two-dimensional geometry and a ring system consisting of 18 π-electrons. The extensive delocalization of the π-electrons affords the molecule useful properties, lending itself to applications in dyes and pigments. Metal complexes derived from Pc2−, the conjugate base of H2Pc, are valuable in catalysis, organic solar cells, and photodynamic therapy.

https://en.wikipedia.org/wiki/Electrochemistry

https://en.wikipedia.org/wiki/Sarcosine

https://en.wikipedia.org/wiki/Hydrazone

Redox properties

Viologens, in their dicationic form, typically undergo two one-electron reductions. The first reduction affords the deeply colored radical cation:[2]

- [V]2+ + e− ⇌ [V]+

The radical cations are blue for 4,4'-viologens and green for 2,2'-derivatives. The second reduction yields a yellow quinoid compound:

- [V]+ + e− ⇌ [V]0

The electron transfer is fast because the redox process induces little structural change.
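The Nernst equation, applied to each one-electron couple, gives the balance of the three oxidation states at a given electrode potential: [V]+/[V]2+ = exp(−F(E − E1)/RT) and [V]0/[V]+ = exp(−F(E − E2)/RT). This sketch assumes ideal behavior. The formal potentials E1 and E2 are placeholders chosen only for illustration, not measured values for any particular viologen.

```python
import math

F = 96485.332   # Faraday constant, C/mol
R = 8.314462    # gas constant, J/(mol K)

def viologen_speciation(E, E1, E2, T=298.15):
    """Equilibrium fractions of [V]2+, [V]+ and [V]0 at electrode potential E (V),
    from the Nernst equation for the couples [V]2+/[V]+ (formal potential E1)
    and [V]+/[V]0 (formal potential E2)."""
    f = F / (R * T)
    r1 = math.exp(-f * (E - E1))   # [V]+ / [V]2+
    r2 = math.exp(-f * (E - E2))   # [V]0 / [V]+
    weights = (1.0, r1, r1 * r2)   # relative amounts of [V]2+, [V]+, [V]0
    total = sum(weights)
    return tuple(w / total for w in weights)

# Placeholder formal potentials, for illustration only.
for E in (-0.2, -0.45, -0.65, -0.85, -1.1):
    print(E, [round(p, 3) for p in viologen_speciation(E, E1=-0.45, E2=-0.85)])
```

When E equals E1, the dication and the radical cation are present in equal amounts. Well below E2, the neutral form dominates.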

Diquat is an isomer of viologens, being derived from 2,2'-bipyridine (instead of the 4,4'-isomer). It is also a potent herbicide that functions by disrupting electron transfer.

Extended viologens have been developed in which conjugated oligomers, such as those based on aryl, ethylene, and thiophene units, are inserted between the pyridine units.[7] The bipolaron di-octyl bis(4-pyridyl)biphenyl viologen 2 in scheme 2 can be reduced by sodium amalgam in DMF to the neutral viologen 3.

The resonance structures of the quinoid 3a and the biradical 3b contribute equally to the hybrid structure. The driving force for the contribution of 3b is the restoration of aromaticity within the biphenyl unit. X-ray crystallography has established that the molecule is, in effect, coplanar with slight nitrogen pyramidalization, and that the central carbon bonds are longer (144 pm) than would be expected for a double bond (136 pm). Further research shows that the diradical exists as a mixture of triplets and singlets, although an ESR signal is absent. In this sense, the molecule resembles Tschischibabin's hydrocarbon, discovered in 1907. It also shares with this molecule a blue color in solution and a metallic-green color as crystals.

Compound 3 is a very strong reducing agent, with a redox potential of −1.48 V.

Sodium amalgam, commonly denoted Na(Hg), is an alloy of mercury and sodium. The term amalgam is used for alloys, intermetallic compounds, and solutions (both solid solutions and liquid solutions) involving mercury as a major component. Sodium amalgams are often used in reactions as strong reducing agents with better handling properties compared to solid sodium. They are less dangerously reactive toward water and in fact are often used as an aqueous suspension.

Sodium amalgam was used as a reagent as early as 1862.[1] A synthesis method was described by J. Alfred Wanklyn in 1866.[2]

Uses

Sodium amalgam has been used in organic chemistry as a powerful reducing agent that is safer to handle than sodium itself. It is used in the Emde degradation and also for the reduction of aromatic ketones to hydrols.[9]

Sodium amalgam is used in high-pressure sodium lamps, where the sodium produces the proper color and the mercury tailors the electrical characteristics of the lamp.

Mercury cell electrolysis

Sodium amalgam is a by-product of chlorine manufactured by mercury cell electrolysis. In this cell, brine (concentrated sodium chloride solution) is electrolysed between a liquid mercury cathode and a titanium or graphite anode. Chlorine is formed at the anode, while sodium formed at the cathode dissolves into the mercury, making sodium amalgam. Normally this sodium amalgam is drawn off and reacted with water in a "decomposer cell" to produce hydrogen gas, concentrated sodium hydroxide solution, and mercury to be recycled through the process. In principle, all the mercury should be completely recycled, but inevitably a small portion goes missing. Because of concerns about this mercury escaping into the environment, the mercury cell process is generally being replaced by plants which use a less toxic cathode.
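The mass balance of the process follows from three reactions:

- anode: 2 Cl− → Cl2 + 2 e−
- cathode: Na+ + e− → Na, dissolved in Hg
- decomposer: 2 Na(Hg) + 2 H2O → 2 NaOH + H2 + 2 Hg

This sketch assumes 100 % current efficiency and complete recycling of the mercury, which the text notes is not achieved in practice. The function name is hypothetical.

```python
F = 96485.332   # Faraday constant, C/mol
M = {"Cl2": 70.906, "NaOH": 39.997, "H2": 2.016, "Na": 22.990}  # molar masses, g/mol

def mercury_cell_balance(cl2_kg):
    """Ideal mass balance of the mercury-cell route for a given mass of Cl2 (kg):
      anode:      2 Cl-  -> Cl2 + 2 e-
      cathode:    Na+ + e- -> Na (dissolved in Hg as amalgam)
      decomposer: 2 Na(Hg) + 2 H2O -> 2 NaOH + H2 + 2 Hg
    Mercury is returned to the cell, so it does not appear in the totals."""
    mol_cl2 = cl2_kg * 1000 / M["Cl2"]
    return {
        "Na_in_amalgam_kg": 2 * mol_cl2 * M["Na"] / 1000,
        "NaOH_kg": 2 * mol_cl2 * M["NaOH"] / 1000,
        "H2_kg": mol_cl2 * M["H2"] / 1000,
        "charge_C": 2 * mol_cl2 * F,   # two electrons per Cl2
    }

print(mercury_cell_balance(1000.0))  # per tonne of chlorine
```

Under these assumptions, each tonne of chlorine comes with roughly 1.13 t of NaOH and 28 kg of H2.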

https://en.wikipedia.org/wiki/Sodium_amalgam

https://en.wikipedia.org/wiki/Emde_degradation

https://en.wikipedia.org/wiki/Bipolaron