https://en.wikipedia.org/wiki/Category:Pharmacological_classification_systems


Friday, July 28, 2023

07-28-2023-1638 - Spectral theory and *-algebras (draft)

- Basic concepts
- Main results
- Special Elements/Operators
- Spectrum
- Decomposition
- Spectral Theorem
- Special algebras
- Finite-Dimensional
- Generalizations
- Miscellaneous
- Examples
- Applications

https://en.wikipedia.org/wiki/Spectral_radius

07-28-2023-1637 - spectral radius, spectral gap, spectrum of a matrix, etc.. draft

See also

- Spectral gap

- The joint spectral radius is a generalization of the spectral radius to sets of matrices.

- Spectrum of a matrix

- Spectral abscissa

https://en.wikipedia.org/wiki/Spectral_radius

07-28-2023-1223 - DECOMPOSITION OF SPECTRUM (FUNCTIONAL ANALYSIS) (DRAFT)

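The spectrum of a linear operator $T$ that operates on a Banach space $X$ consists of all scalars $\lambda$ such that the operator $T - \lambda I$ does not have a bounded inverse on $X$. The spectrum has a standard decomposition into three parts:

- a point spectrum, consisting of the eigenvalues of $T$;

- a continuous spectrum, consisting of the scalars that are not eigenvalues but make the range of $T - \lambda I$ a proper dense subset of the space;

- a residual spectrum, consisting of all other scalars in the spectrum.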
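Written as sets, the three parts look like this. The sketch assumes $T$ is bounded and $X$ is a complex Banach space; the excerpt states neither. The right shift on $\ell^2$ is a standard worked example in which the point spectrum is empty.

$$
\begin{aligned}
\sigma_p(T) &= \{\lambda \in \mathbb{C} : T - \lambda I \text{ is not injective}\},\\
\sigma_c(T) &= \{\lambda \in \mathbb{C} : T - \lambda I \text{ is injective and } \operatorname{ran}(T - \lambda I) \text{ is dense but } \neq X\},\\
\sigma_r(T) &= \{\lambda \in \mathbb{C} : T - \lambda I \text{ is injective and } \operatorname{ran}(T - \lambda I) \text{ is not dense}\},\\
\sigma(T) &= \sigma_p(T) \cup \sigma_c(T) \cup \sigma_r(T) \quad \text{(a disjoint union)}.
\end{aligned}
$$

Example: the right shift $S(x_1, x_2, \dots) = (0, x_1, x_2, \dots)$ on $\ell^2(\mathbb{N})$ gives

$$
\sigma_p(S) = \varnothing, \qquad \sigma_r(S) = \{\lambda : |\lambda| < 1\}, \qquad \sigma_c(S) = \{\lambda : |\lambda| = 1\}.
$$

In finite dimensions, $A - \lambda I$ is injective exactly when it is surjective. For a square matrix, $\sigma(A)$ is therefore just the set of eigenvalues, and the continuous and residual spectra are empty.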

This decomposition is relevant to the study of differential equations, and has applications to many branches of science and engineering. A well-known example from quantum mechanics is the explanation for the discrete spectral lines and the continuous band in the light emitted by excited atoms of hydrogen.

https://en.wikipedia.org/wiki/Decomposition_of_spectrum_(functional_analysis)

07-28-2023-1222 - In mathematics, the spectral radius of a square matrix is the maximum of the absolute values of its eigenvalues.[1] More generally, the spectral radius of a bounded linear operator is the supremum of the absolute values of the elements of its spectrum. The spectral radius is often denoted by ρ(·). (DRAFT)

In mathematics, the spectral radius of a square matrix is the maximum of the absolute values of its eigenvalues.[1] More generally, the spectral radius of a bounded linear operator is the supremum of the absolute values of the elements of its spectrum. The spectral radius is often denoted by ρ(·).
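Below is a minimal sketch of this definition for square matrices. It assumes NumPy, since the post names no software. It also checks Gelfand's formula, $\rho(A) = \lim_{k\to\infty} \|A^k\|^{1/k}$. That formula is a standard result but is not stated in the excerpt. Both example matrices are hypothetical.

```python
import numpy as np


def spectral_radius(a):
    """rho(A): the largest absolute value among the eigenvalues of a square matrix A."""
    a = np.asarray(a, dtype=float)
    if a.ndim != 2 or a.shape[0] != a.shape[1]:
        raise ValueError("expected a square matrix")
    return float(np.max(np.abs(np.linalg.eigvals(a))))


def gelfand_estimate(a, k):
    """Approximate rho(A) by ||A^k||^(1/k) with the spectral (2-)norm (Gelfand's formula)."""
    a = np.asarray(a, dtype=float)
    return float(np.linalg.norm(np.linalg.matrix_power(a, k), 2) ** (1.0 / k))


rotation = np.array([[0.0, -1.0], [1.0, 0.0]])  # eigenvalues +i and -i
upper = np.array([[1.0, 2.0], [0.0, 3.0]])      # eigenvalues 1 and 3

print(spectral_radius(rotation))    # close to 1.0
print(spectral_radius(upper))       # close to 3.0
print(gelfand_estimate(upper, 50))  # slightly above 3; approaches 3 as k grows
```

Gelfand's formula converges slowly. Computing the eigenvalues directly is the practical route for small dense matrices.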

https://en.wikipedia.org/wiki/Spectral_radius

07-28-2023-1210 - FASTER HARDWARE, LONG SHORT-TERM MEMORY, MULTI-LEVEL HIERARCHY, BACKPROPAGATION, FEATURE DETECTORS, LATENT VARIABLES, GENERATIVE MODEL, LOWER BOUND, DEEP BELIEF NETWORK, UNSUPERVISED LEARNING, SUPERVISED LEARNING VARIABLE TREND CURRICULUM INDOCTRINATION SCHEDULE, 1980, 1900, 1800 DUSTY BLUE, 1700 XLIF OR A BOMBER, 1600 WIFE OR N/A, -10000 HUMANS (HUMAN RECORD ONLY)(DRAFT)(VAR ENV)(DRAFT), <-10000 N/A, ETC.. DRAFT

Multi-level hierarchy

One approach to the vanishing gradient problem is Jürgen Schmidhuber's multi-level hierarchy of networks (1992). It is pre-trained one level at a time through unsupervised learning and then fine-tuned through backpropagation.[10] Here each level learns a compressed representation of the observations that is fed to the next level.

Related approach

Similar ideas have been used in feed-forward neural networks for unsupervised pre-training to structure a neural network, making it first learn generally useful feature detectors. The network is then trained further by supervised backpropagation to classify labeled data. The deep belief network model by Hinton et al. (2006) involves learning the distribution of a high-level representation using successive layers of binary or real-valued latent variables. It uses a restricted Boltzmann machine to model each new layer of higher-level features. If trained properly, each new layer guarantees an increase in the lower bound of the log likelihood of the data, thus improving the model. Once sufficiently many layers have been learned, the deep architecture may be used as a generative model by reproducing the data when sampling down the model (an "ancestral pass") from the top-level feature activations.[11] Hinton reports that his models are effective feature extractors over high-dimensional, structured data.[12]
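The sketch below illustrates greedy layer-wise training of stacked restricted Boltzmann machines in NumPy. The excerpt does not name a training rule. This sketch assumes one-step contrastive divergence (CD-1), the rule commonly used for RBMs. Layer sizes, learning rate, iteration counts and toy data are all hypothetical. The sketch does not verify the log-likelihood bound mentioned above.

```python
import numpy as np

rng = np.random.default_rng(0)


def sigmoid(x):
    return 1.0 / (1.0 + np.exp(-x))


class BinaryRBM:
    """RBM with binary (or [0, 1]-valued) visible units and binary hidden units."""

    def __init__(self, n_visible, n_hidden):
        self.W = 0.01 * rng.standard_normal((n_visible, n_hidden))
        self.b_v = np.zeros(n_visible)  # visible biases
        self.b_h = np.zeros(n_hidden)   # hidden biases

    def hidden_probs(self, v):
        return sigmoid(v @ self.W + self.b_h)

    def visible_probs(self, h):
        return sigmoid(h @ self.W.T + self.b_v)

    def cd1_step(self, v0, lr=0.1):
        """One CD-1 update on a batch v0 (rows are samples); returns reconstruction error."""
        p_h0 = self.hidden_probs(v0)
        h0 = (rng.random(p_h0.shape) < p_h0).astype(float)  # sample hidden states
        p_v1 = self.visible_probs(h0)                        # one-step reconstruction
        p_h1 = self.hidden_probs(p_v1)
        n = v0.shape[0]
        # Data-driven statistics minus reconstruction-driven statistics
        self.W += lr * (v0.T @ p_h0 - p_v1.T @ p_h1) / n
        self.b_v += lr * (v0 - p_v1).mean(axis=0)
        self.b_h += lr * (p_h0 - p_h1).mean(axis=0)
        return float(np.mean((v0 - p_v1) ** 2))


# Hypothetical toy data: noise-free copies of two binary patterns
patterns = np.array([[1, 1, 1, 0, 0, 0], [0, 0, 0, 1, 1, 1]], dtype=float)
data = patterns[rng.integers(0, 2, size=64)]

# Greedy layer-wise training: the trained layer's hidden probabilities
# become the input data for the next RBM in the stack.
layer1 = BinaryRBM(6, 4)
for _ in range(500):
    err1 = layer1.cd1_step(data)
features = layer1.hidden_probs(data)

layer2 = BinaryRBM(4, 2)
for _ in range(500):
    err2 = layer2.cd1_step(features)
print(err1, err2)
```

Feeding hidden probabilities rather than sampled binary states to the next layer is a common simplification, not something the excerpt specifies.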

Long short-term memory

Another technique particularly used for recurrent neural networks is the long short-term memory (LSTM) network of 1997 by Hochreiter & Schmidhuber.[13] In 2009, deep multidimensional LSTM networks demonstrated the power of deep learning with many nonlinear layers, by winning three ICDAR 2009 competitions in connected handwriting recognition, without any prior knowledge about the three different languages to be learned.[14][15]
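Here is one LSTM time step as a NumPy sketch. It assumes the widely used variant with a forget gate, which was added to the architecture after the 1997 paper. The gate ordering inside the stacked weight matrices, the sizes and the random weights are hypothetical. The additive cell update `c = f * c_prev + i * g` is what lets error signals flow across many time steps.

```python
import numpy as np


def sigmoid(x):
    return 1.0 / (1.0 + np.exp(-x))


def lstm_step(x, h_prev, c_prev, W, U, b):
    """One LSTM time step (forget-gate variant).

    W: (4*H, D) input weights, U: (4*H, H) recurrent weights, b: (4*H,) biases,
    stacked in the (hypothetical) order: input, forget, output, candidate.
    """
    H = h_prev.shape[0]
    z = W @ x + U @ h_prev + b
    i = sigmoid(z[0:H])          # input gate
    f = sigmoid(z[H:2 * H])      # forget gate
    o = sigmoid(z[2 * H:3 * H])  # output gate
    g = np.tanh(z[3 * H:4 * H])  # candidate cell values
    c = f * c_prev + i * g       # additive cell-state update
    h = o * np.tanh(c)           # new hidden state
    return h, c


rng = np.random.default_rng(0)
D, H, T = 3, 5, 10  # input size, hidden size, sequence length (hypothetical)
W = 0.1 * rng.standard_normal((4 * H, D))
U = 0.1 * rng.standard_normal((4 * H, H))
b = np.zeros(4 * H)

h, c = np.zeros(H), np.zeros(H)
for _ in range(T):
    h, c = lstm_step(rng.standard_normal(D), h, c, W, U, b)
print(h.shape, c.shape)  # (5,) (5,)
```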

Faster hardware

Hardware advances have meant that from 1991 to 2015, computer power (especially as delivered by GPUs) increased around a million-fold. This made standard backpropagation feasible for networks several layers deeper than when the vanishing gradient problem was recognized. Schmidhuber notes that this "is basically what is winning many of the image recognition competitions now", but that it "does not really overcome the problem in a fundamental way".[16] The original models tackling the vanishing gradient problem by Hinton and others were trained on a Xeon processor, not GPUs.[11]

https://en.wikipedia.org/wiki/Vanishing_gradient_problem

07-28-2023-1208 - VANISHING GRADIENT PROBLEM, EXPLODING GRADIENT PROBLEM, EXPLODING GRADIENT, ETC.. DRAFT


In machine learning, the vanishing gradient problem is encountered when training artificial neural networks with gradient-based learning methods and backpropagation. In such methods, during each iteration of training, each of the neural network's weights receives an update proportional to the partial derivative of the error function with respect to the current weight.[1] The problem is that in some cases the gradient will be vanishingly small, effectively preventing the weight from changing its value.[1] In the worst case, this may completely stop the neural network from further training.[1] One cause is that traditional activation functions such as the hyperbolic tangent have gradients in the range (0, 1], and backpropagation computes gradients by the chain rule. This means n of these small numbers are multiplied together to compute gradients of the early layers in an n-layer network. As a result, the gradient (error signal) decreases exponentially with n and the early layers train very slowly.

Backpropagation allowed researchers to train supervised deep artificial neural networks from scratch, initially with little success. Hochreiter's Diplom thesis of 1991 formally identified the reason for this failure as the "vanishing gradient problem".[2][3] This problem affects not only many-layered feedforward networks[4] but also recurrent networks.[5] The latter are trained by unfolding them into very deep feedforward networks, where a new layer is created for each time step of an input sequence processed by the network. (The combination of unfolding and backpropagation is termed backpropagation through time.)

When activation functions are used whose derivatives can take on larger values, one risks encountering the related exploding gradient problem.
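The chain-rule argument can be checked on a hypothetical scalar "network" a_k = act(w · a_{k-1} + b), where backpropagation multiplies one factor w · act'(z) per layer. The sketch assumes NumPy. The bias is set to 1 so that the tanh factors stay well below 1. The "steep" activation is a hypothetical one whose derivative is 1.5 everywhere, standing in for an activation with large derivatives.

```python
import numpy as np


def chain_gradient(n_layers, act, act_grad, weight=1.0, bias=1.0, x0=0.5):
    """Backpropagated d a_n / d a_0 for the scalar chain a_k = act(weight * a_{k-1} + bias)."""
    a, grad = x0, 1.0
    for _ in range(n_layers):
        z = weight * a + bias
        grad *= weight * act_grad(z)  # one chain-rule factor per layer
        a = act(z)
    return grad


def tanh_grad(z):
    return 1.0 - np.tanh(z) ** 2  # always in (0, 1]


# Vanishing: every factor is well below 1 here, so the gradient decays exponentially with depth
for n in (5, 20, 50):
    print(n, chain_gradient(n, np.tanh, tanh_grad))


# Exploding: a hypothetical activation whose derivative is 1.5 everywhere
def steep(z):
    return 1.5 * z


def steep_grad(z):
    return 1.5


print(chain_gradient(50, steep, steep_grad))  # 1.5**50, roughly 6.4e8
```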

https://en.wikipedia.org/wiki/Vanishing_gradient_problem

07-28-2023-1207 - Batch normalization (also known as batch norm) (DRAFT)

Batch normalization (also known as batch norm) is a method used to make training of artificial neural networks faster and more stable through normalization of the layers' inputs by re-centering and re-scaling. It was proposed by Sergey Ioffe and Christian Szegedy in 2015.[1]

While the effect of batch normalization is evident, the reasons behind its effectiveness remain under discussion. It was initially believed to mitigate the problem of internal covariate shift, where parameter initialization and changes in the distribution of the inputs of each layer affect the learning rate of the network.[1] More recently, some scholars have argued that batch normalization does not reduce internal covariate shift. Instead, they argue, it smooths the objective function, which in turn improves performance.[2] However, at initialization, batch normalization in fact induces severe gradient explosion in deep networks, which is only alleviated by skip connections in residual networks.[3] Others maintain that batch normalization achieves length-direction decoupling, and thereby accelerates neural network training.[4]
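The re-centering and re-scaling step can be sketched in training mode for a mini-batch of shape (batch, features). The sketch assumes NumPy, a learnable per-feature scale `gamma` and shift `beta`, and a small `eps` for numerical stability. It does not show the running averages used at inference time. The input data is hypothetical.

```python
import numpy as np


def batch_norm_train(x, gamma, beta, eps=1e-5):
    """Batch-normalize a mini-batch x of shape (batch, features) in training mode."""
    mu = x.mean(axis=0)                    # per-feature mean over the batch
    var = x.var(axis=0)                    # per-feature (biased) variance over the batch
    x_hat = (x - mu) / np.sqrt(var + eps)  # re-center and re-scale
    return gamma * x_hat + beta            # learnable scale and shift


rng = np.random.default_rng(0)
x = 3.0 + 10.0 * rng.standard_normal((256, 4))  # badly scaled layer inputs (hypothetical)
y = batch_norm_train(x, gamma=np.ones(4), beta=np.zeros(4))
print(y.mean(axis=0), y.std(axis=0))  # close to 0 and 1 for every feature
```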

https://en.wikipedia.org/wiki/Batch_normalization

07-28-2023-1206 - An echo state network (ESN)[1][2] (DRAFT)

An echo state network (ESN)[1][2] is a type of reservoir computer that uses a recurrent neural network with a sparsely connected hidden layer (typically with about 1% connectivity). The connectivity and weights of the hidden neurons are fixed and randomly assigned. The weights of the output neurons can be learned so that the network can produce or reproduce specific temporal patterns. The main appeal of this network is that its behaviour is nonlinear, yet training modifies only the weights of the synapses connecting the hidden neurons to the output neurons. The error function is therefore quadratic with respect to the parameter vector, and minimizing it reduces to solving a linear system.
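This NumPy sketch keeps a sparse random reservoir fixed and trains only the readout, by solving one linear system. Several details are assumptions the excerpt does not specify:

- ridge (Tikhonov) regularization;
- rescaling the reservoir to spectral radius 0.9, a common echo-state heuristic;
- the reservoir size;
- the sine-prediction task.

```python
import numpy as np

rng = np.random.default_rng(42)

# Hypothetical sizes and hyper-parameters for illustration
n_res, density, target_radius, ridge = 500, 0.01, 0.9, 1e-4

# Fixed, random, sparse reservoir (about 1% of connections are nonzero); never trained
W = rng.uniform(-1, 1, (n_res, n_res)) * (rng.random((n_res, n_res)) < density)
W *= target_radius / np.max(np.abs(np.linalg.eigvals(W)))  # rescale the spectral radius
W_in = rng.uniform(-0.5, 0.5, n_res)                        # fixed input weights


def run_reservoir(u):
    """Drive the fixed reservoir with a 1-D input sequence u; return one state row per step."""
    x = np.zeros(n_res)
    states = np.empty((len(u), n_res))
    for t, u_t in enumerate(u):
        x = np.tanh(W @ x + W_in * u_t)
        states[t] = x
    return states


# Task: one-step-ahead prediction of a sine wave
time = np.arange(1000) * 0.1
u = np.sin(time)
X = run_reservoir(u[:-1])[100:]  # drop a warm-up transient
y = u[1:][100:]

# Only the readout is trained: a quadratic loss, hence a single linear solve
W_out = np.linalg.solve(X.T @ X + ridge * np.eye(n_res), X.T @ y)
rmse = float(np.sqrt(np.mean((X @ W_out - y) ** 2)))
print(rmse)  # training error of the linear readout
```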

Alternatively, one may consider a nonparametric Bayesian formulation of the output layer. Under it, (i) a prior distribution is imposed over the output weights, and (ii) the output weights are marginalized out in the context of prediction generation, given the training data. This idea was demonstrated in [3] using Gaussian priors, yielding a Gaussian process model with an ESN-driven kernel function. Such a solution was shown to outperform ESNs with trainable (finite) sets of weights in several benchmarks.

Some publicly available implementations of ESNs are:

- aureservoir: an efficient C++ library for various kinds of echo state networks, with Python/NumPy bindings;
- Matlab code: an efficient MATLAB implementation of an echo state network;
- ReservoirComputing.jl: an efficient Julia-based implementation of various types of echo state networks;
- pyESN: simple echo state networks in Python.

https://en.wikipedia.org/wiki/Echo_state_network

07-28-2023-1205 - Deep learning speech synthesis uses Deep Neural Networks (DNN) (DRAFT)

Deep learning speech synthesis uses Deep Neural Networks (DNN) to produce artificial speech from text (text-to-speech) or spectrum (vocoder). The deep neural networks are trained using a large amount of recorded speech and, in the case of a text-to-speech system, the associated labels and/or input text.

Some DNN-based speech synthesizers are approaching the naturalness of the human voice.

https://en.wikipedia.org/wiki/Deep_learning_speech_synthesis

07-28-2023-1204 - In mathematics and computer algebra, automatic differentiation (auto-differentiation, autodiff, or AD), also called algorithmic differentiation, computational differentiation,[1][2] is a set of techniques to evaluate the partial derivative of a function specified by a computer program. (DRAFT)

In mathematics and computer algebra, automatic differentiation (auto-differentiation, autodiff, or AD), also called algorithmic differentiation, computational differentiation,[1][2] is a set of techniques to evaluate the partial derivative of a function specified by a computer program.

Automatic differentiation exploits the fact that every computer calculation, no matter how complicated, executes a sequence of elementary arithmetic operations (addition, subtraction, multiplication, division, etc.) and elementary functions (exp, log, sin, cos, etc.). By applying the chain rule repeatedly to these operations, partial derivatives of arbitrary order can be computed automatically and accurately to working precision. This costs at most a small constant factor more arithmetic operations than the original program.
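One way to see this is forward-mode AD with dual numbers. Each value carries its derivative, and every elementary operation applies its own derivative rule. This plain-Python sketch covers only `+`, `*`, `sin` and `exp`. The `Dual` class and the function `f` are hypothetical illustrations, not any library's API.

```python
import math
from dataclasses import dataclass


@dataclass
class Dual:
    """A value paired with its derivative (forward-mode automatic differentiation)."""
    val: float
    der: float = 0.0

    def __add__(self, other):
        other = other if isinstance(other, Dual) else Dual(other)
        return Dual(self.val + other.val, self.der + other.der)  # sum rule

    __radd__ = __add__

    def __mul__(self, other):
        other = other if isinstance(other, Dual) else Dual(other)
        # Product rule
        return Dual(self.val * other.val, self.der * other.val + self.val * other.der)

    __rmul__ = __mul__


def sin(x):
    return Dual(math.sin(x.val), math.cos(x.val) * x.der)  # chain rule for sin


def exp(x):
    return Dual(math.exp(x.val), math.exp(x.val) * x.der)  # chain rule for exp


def f(x):
    # An ordinary "program" built only from elementary operations
    return 3 * x * x + sin(x) * exp(x)


y = f(Dual(1.0, 1.0))  # seed dx/dx = 1
print(y.val, y.der)    # f(1) and f'(1) = 6 + e * (cos 1 + sin 1), exact to rounding
```

Forward mode needs one pass per input variable. Reverse mode (backpropagation) instead gets all partial derivatives of a scalar output in one backward pass, which is why gradient-based training uses it.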

https://en.wikipedia.org/wiki/Automatic_differentiation

Automatic differentiation is distinct from symbolic differentiation and numerical differentiation. Symbolic differentiation faces the difficulty of converting a computer program into a single mathematical expression and can lead to inefficient code. Numerical differentiation (the method of finite differences) can introduce round-off errors in the discretization process, as well as cancellation errors. Both classical methods have problems with calculating higher derivatives, where complexity and errors increase. Finally, both are slow at computing partial derivatives of a function with respect to many inputs, as is needed for gradient-based optimization algorithms. Automatic differentiation solves all of these problems.

https://en.wikipedia.org/wiki/Automatic_differentiation


07-28-2023-1202 - 1980 ML RECOG (OLD TECH), LAYERD MEMORY MAGNETICARY UNITS, ETC.. PERCEPTRONICS, ELECTRONIC, MAGNETRONICS, DARK MATTER, NUCLEAR, CONDENSED MATTER PHYSICS, NUCLEAR PHYSICS, ETC.. DRAFT

MLPs were a popular machine learning solution in the 1980s, finding applications in diverse fields such as speech recognition, image recognition, and machine translation software,[23] but thereafter faced strong competition from much simpler (and related[24]) support vector machines. Interest in backpropagation networks returned due to the successes of deep learning.

https://en.wikipedia.org/wiki/Multilayer_perceptron

In 2021, a very simple NN architecture combining two deep MLPs with skip connections and layer normalizations was designed and called MLP-Mixer. Its realizations, with 19 to 431 million parameters, were shown to be comparable to vision transformers of similar size on ImageNet and similar image classification tasks.[22]

https://en.wikipedia.org/wiki/Multilayer_perceptron

07-28-2023-1202 - Learning occurs in the perceptron by changing connection weights after each piece of data is processed, based on the amount of error in the output compared to the expected result. This is an example of supervised learning, and is carried out through backpropagation, a generalization of the least mean squares algorithm in the linear perceptron. (DRAFT)

Learning occurs in the perceptron by changing connection weights after each piece of data is processed, based on the amount of error in the output compared to the expected result. This is an example of supervised learning, and is carried out through backpropagation, a generalization of the least mean squares algorithm in the linear perceptron.
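The least mean squares (LMS, or delta) rule that backpropagation generalizes can be sketched for a single linear unit. After each data point, the weights move in proportion to the output error. The sketch assumes NumPy. The target function, learning rate and epoch count are hypothetical.

```python
import numpy as np

rng = np.random.default_rng(0)

# Hypothetical linear target that the single linear unit should learn
true_w, true_b = np.array([2.0, -1.0]), 0.5
X = rng.uniform(-1, 1, (200, 2))
targets = X @ true_w + true_b

w, b, lr = np.zeros(2), 0.0, 0.1
for _ in range(50):
    for x, target in zip(X, targets):
        error = target - (w @ x + b)  # error on this piece of data
        w += lr * error * x           # LMS / delta-rule weight update
        b += lr * error
print(w, b)  # approaches [2, -1] and 0.5
```

Backpropagation applies the same "error times input" update to every layer. It obtains each hidden layer's error signal through the chain rule.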

https://en.wikipedia.org/wiki/Multilayer_perceptron

07-28-2023-1201 - In recent developments of deep learning the rectified linear unit (ReLU) is more frequently used as one of the possible ways to overcome the numerical problems related to the sigmoids. (DRAFT)

In recent developments of deep learning, the rectified linear unit (ReLU) is used more frequently, as one of the possible ways to overcome the numerical problems associated with sigmoids.

https://en.wikipedia.org/wiki/Multilayer_perceptron

07-28-2023-1201 - A multilayer perceptron (MLP) (DRAFT)


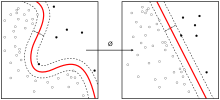

A multilayer perceptron (MLP) is a fully connected class of feedforward artificial neural network (ANN). The term MLP is used ambiguously. Loosely, it means any feedforward ANN; strictly, it refers to networks composed of multiple layers of perceptrons (with threshold activation). Multilayer perceptrons are sometimes colloquially referred to as "vanilla" neural networks, especially when they have a single hidden layer.[1]

An MLP consists of at least three layers of nodes: an input layer, a hidden layer, and an output layer. Except for the input nodes, each node is a neuron that uses a nonlinear activation function. MLPs are trained with a supervised learning technique based on the chain rule,[2] called backpropagation or reverse-mode automatic differentiation.[3][4][5][6][7] Their multiple layers and nonlinear activations distinguish MLPs from a linear perceptron and let them distinguish data that is not linearly separable.[8]
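This sketch trains a small MLP on XOR, a classic dataset that is not linearly separable. It uses hand-written backpropagation in NumPy. The tanh hidden layer, sigmoid output, cross-entropy loss, 8 hidden units, learning rate and step count are all hypothetical choices, not taken from the source.

```python
import numpy as np

rng = np.random.default_rng(1)

# XOR: not linearly separable, so a single linear perceptron cannot fit it
X = np.array([[0, 0], [0, 1], [1, 0], [1, 1]], dtype=float)
y = np.array([[0], [1], [1], [0]], dtype=float)

# Input (2) -> hidden (8, tanh) -> output (1, sigmoid)
W1, b1 = rng.normal(0, 1, (2, 8)), np.zeros(8)
W2, b2 = rng.normal(0, 1, (8, 1)), np.zeros(1)
lr = 1.0

for _ in range(10000):
    # Forward pass
    h = np.tanh(X @ W1 + b1)
    p = 1.0 / (1.0 + np.exp(-(h @ W2 + b2)))
    # Backward pass: chain rule applied layer by layer (mean binary cross-entropy loss)
    d_out = (p - y) / len(X)               # dLoss / d(output pre-activation)
    d_h = (d_out @ W2.T) * (1.0 - h ** 2)  # propagate through tanh
    W2 -= lr * h.T @ d_out
    b2 -= lr * d_out.sum(axis=0)
    W1 -= lr * X.T @ d_h
    b1 -= lr * d_h.sum(axis=0)

print(np.round(p.ravel(), 3))  # should approach [0, 1, 1, 0]
```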

https://en.wikipedia.org/wiki/Multilayer_perceptron

07-28-2023-1200 - LATENT CLASS ANALYSIS DRAFT

In statistics, a latent class model (LCM) relates a set of observed (usually discrete) multivariate variables to a set of latent variables. It is a type of latent variable model. It is called a latent class model because the latent variable is discrete. A class is characterized by a pattern of conditional probabilities that indicate the chance that variables take on certain values.

Latent class analysis (LCA) is a subset of structural equation modeling, used to find groups or subtypes of cases in multivariate categorical data. These subtypes are called "latent classes".[1][2]

A researcher might choose LCA to understand data in a situation such as the following. Imagine that symptoms a-d have been measured in a range of patients with diseases X, Y, and Z. Disease X is associated with the presence of symptoms a, b, and c; disease Y with symptoms b, c, and d; and disease Z with symptoms a, c, and d.

LCA attempts to detect the latent classes (the disease entities) that create the patterns of association among the symptoms. As in factor analysis, LCA can also be used to classify cases according to their maximum likelihood class membership.[1][3]

The criterion for solving an LCA is to find latent classes within which one symptom is no longer associated with another, because the class (the disease) is what causes their association. The set of diseases a patient has (or the class a case belongs to) causes the symptom association. The symptoms are therefore "conditionally independent": conditional on class membership, they are no longer related.[1]
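For the symptom example above, classifying a patient by maximum likelihood class membership can be sketched with NumPy. Conditional independence makes each class's likelihood a product over symptoms. The item probabilities (0.9 and 0.1) and the equal class priors are hypothetical. In a real analysis they would be estimated from data, typically with the EM algorithm, which is not shown here.

```python
import numpy as np

classes = ["X", "Y", "Z"]

# Hypothetical P(symptom present | class); rows = classes, columns = symptoms a, b, c, d
rho = np.array([
    [0.9, 0.9, 0.9, 0.1],  # X: a, b, c
    [0.1, 0.9, 0.9, 0.9],  # Y: b, c, d
    [0.9, 0.1, 0.9, 0.9],  # Z: a, c, d
])
prior = np.array([1 / 3, 1 / 3, 1 / 3])


def posterior(responses):
    """P(class | observed 0/1 symptoms), assuming symptoms are independent within each class."""
    r = np.asarray(responses)
    # Conditional independence: the class-conditional likelihood is a product over symptoms
    lik = np.prod(rho ** r * (1 - rho) ** (1 - r), axis=1)
    joint = prior * lik
    return joint / joint.sum()


patient = [1, 1, 1, 0]  # has symptoms a, b, c but not d
post = posterior(patient)
print(dict(zip(classes, np.round(post, 3))))
print("most likely class:", classes[int(np.argmax(post))])  # X
```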

https://en.wikipedia.org/wiki/Latent_class_model