Wednesday, November 18, 2020

Dissipative System Quantum Decoherence Decoupling Optics

Quantum decoherence is the loss of quantum coherence. In quantum mechanics, particles such as electrons are described by a wave function, a mathematical representation of the quantum state of a system; a probabilistic interpretation of the wave function is used to explain various quantum effects. As long as there exists a definite phase relation between different states, the system is said to be coherent. A definite phase relationship is necessary to perform quantum computing on quantum information encoded in quantum states. For an isolated system, coherence is preserved under the laws of quantum physics.
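To make "definite phase relation" concrete, here is a minimal sketch (not from the source) for an assumed two-level system. Each member of an ensemble is prepared in (|0> + e^{iφ}|1>)/√2; the off-diagonal element of the averaged density matrix measures coherence. With one fixed φ it stays at 1/2 and the purity Tr(ρ²) is 1; with φ spread evenly over the circle it averages to zero and the purity drops to 1/2. The function names are illustrative only.

```python
import cmath
import math

def ensemble_density_matrix(phases):
    """Average |psi><psi| over psi = (|0> + e^{i*phi}|1>)/sqrt(2) for each phi."""
    n = len(phases)
    # Off-diagonal element <0|rho|1> = e^{-i*phi}/2, averaged over the ensemble
    rho01 = sum(cmath.exp(-1j * phi) for phi in phases) / (2 * n)
    return [[0.5, rho01], [rho01.conjugate(), 0.5]]

def purity(rho):
    """Tr(rho^2) for a Hermitian 2x2 matrix: 1 if coherent (pure), 1/2 if fully dephased."""
    return sum(abs(rho[i][j]) ** 2 for i in range(2) for j in range(2))

# Definite phase relation: every member of the ensemble has the same phase
coherent = ensemble_density_matrix([0.3] * 100)
# No definite phase relation: phases spread evenly around the circle
dephased = ensemble_density_matrix([2 * math.pi * k / 100 for k in range(100)])

print(abs(coherent[0][1]), purity(coherent))
print(abs(dephased[0][1]), purity(dephased))
```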

https://en.wikipedia.org/wiki/Quantum_decoherence

A dissipative system is a thermodynamically open system which is operating out of, and often far from, thermodynamic equilibrium in an environment with which it exchanges energy and matter. A tornado may be thought of as a dissipative system. Dissipative systems stand in contrast to conservative systems.

A dissipative structure is a dissipative system that has a dynamical regime that is in some sense in a reproducible steady state. This reproducible steady state may be reached by natural evolution of the system, by artifice, or by a combination of these two.

As quantum mechanics, like any classical dynamical system, relies heavily on Hamiltonian mechanics, for which time is reversible, these approximations are not intrinsically able to describe dissipative systems. It has been proposed that, in principle, one can weakly couple the system (say, an oscillator) to a bath, i.e., an assembly of many oscillators in thermal equilibrium with a broadband spectrum, and trace (average) over the bath. This yields a master equation, which is a special case of a more general setting called the Lindblad equation, the quantum equivalent of the classical Liouville equation. The well-known form of this equation and its quantum counterpart take time as a reversible variable over which to integrate, but the very foundations of dissipative structures impose an irreversible and constructive role for time.
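A master equation in Lindblad form can be integrated numerically in a few lines. The sketch below assumes a simple model that is not in the source: one qubit with Hamiltonian H = (ω/2)σ_z and a single jump operator L = √γ σ_z (pure dephasing from the traced-out bath), with ħ = 1. For this model the populations stay fixed while the coherence |ρ₀₁| should decay as ½e^(−2γt), which the RK4 integration reproduces. Parameter values are illustrative.

```python
import math

def matmul(a, b):
    return [[sum(a[i][k] * b[k][j] for k in range(2)) for j in range(2)] for i in range(2)]

def axpy(a, b, s=1.0):
    """Return a + s*b for 2x2 matrices."""
    return [[a[i][j] + s * b[i][j] for j in range(2)] for i in range(2)]

def dagger(a):
    return [[a[j][i].conjugate() for j in range(2)] for i in range(2)]

def lindblad_rhs(rho, H, jump_ops):
    """d(rho)/dt = -i[H, rho] + sum_k (L rho L^+ - 1/2 {L^+ L, rho}), hbar = 1."""
    comm = axpy(matmul(H, rho), matmul(rho, H), -1.0)
    drho = [[-1j * comm[i][j] for j in range(2)] for i in range(2)]
    for L in jump_ops:
        Ld = dagger(L)
        LdL = matmul(Ld, L)
        jump = matmul(matmul(L, rho), Ld)
        anti = axpy(matmul(LdL, rho), matmul(rho, LdL))
        drho = axpy(drho, axpy(jump, anti, -0.5))
    return drho

def evolve(rho, H, jump_ops, dt, steps):
    """Integrate the master equation with classic fourth-order Runge-Kutta."""
    for _ in range(steps):
        k1 = lindblad_rhs(rho, H, jump_ops)
        k2 = lindblad_rhs(axpy(rho, k1, dt / 2), H, jump_ops)
        k3 = lindblad_rhs(axpy(rho, k2, dt / 2), H, jump_ops)
        k4 = lindblad_rhs(axpy(rho, k3, dt), H, jump_ops)
        slope = axpy(axpy(axpy(k1, k2, 2.0), k3, 2.0), k4)  # k1 + 2k2 + 2k3 + k4
        rho = axpy(rho, slope, dt / 6)
    return rho

omega, gamma = 1.0, 0.1  # assumed qubit frequency and dephasing rate
sz = [[1 + 0j, 0j], [0j, -1 + 0j]]
H = [[0.5 * omega * x for x in row] for row in sz]
L = [[math.sqrt(gamma) * x for x in row] for row in sz]
rho0 = [[0.5 + 0j, 0.5 + 0j], [0.5 + 0j, 0.5 + 0j]]  # |+> state, fully coherent

rho_t = evolve(rho0, H, [L], dt=0.01, steps=500)  # evolve to t = 5
print(abs(rho_t[0][1]), 0.5 * math.exp(-2 * gamma * 5.0))
```

The time step only controls integration accuracy; the irreversibility comes from the dissipator term, not from the integrator.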

The framework of dissipative structures as a mechanism to understand the behavior of systems in constant interexchange of energy has been successfully applied in various scientific fields and applications, such as optics,[11][12] population dynamics and growth,[13][14][15] and chemomechanical structures.[16][17][18]

https://en.wikipedia.org/wiki/Dissipative_system

The path integral formulation is a description in quantum mechanics that generalizes the action principle of classical mechanics. It replaces the classical notion of a single, unique classical trajectory for a system with a sum, or functional integral, over an infinity of quantum-mechanically possible trajectories to compute a quantum amplitude.

The path integral also relates quantum and stochastic processes, and this provided the basis for the grand synthesis of the 1970s, which unified quantum field theory with the statistical field theory of a fluctuating field near a second-order phase transition. The Schrödinger equation is a diffusion equation with an imaginary diffusion constant, and the path integral is an analytic continuation of a method for summing up all possible random walks.[2]
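The random-walk side of this correspondence can be made concrete. The sketch below is a classical, real-weighted illustration (the quantum path integral would replace the weights 1/2 with complex phases, which this does not attempt): it sums over every one of the 2ⁿ walks of n unit steps, checks that the same distribution follows from a step-by-step propagator, and shows the variance growing linearly with n, the signature of diffusion.

```python
from fractions import Fraction
from itertools import product
from math import comb

def sum_over_paths(n_steps):
    """Exact end-point distribution by enumerating all 2**n_steps walks."""
    dist = {}
    for path in product((-1, 1), repeat=n_steps):
        end = sum(path)
        dist[end] = dist.get(end, 0) + Fraction(1, 2 ** n_steps)
    return dist

def propagate(n_steps):
    """Same sum built step by step: P_{n+1}(x) = [P_n(x-1) + P_n(x+1)] / 2."""
    dist = {0: Fraction(1)}
    for _ in range(n_steps):
        nxt = {}
        for x, p in dist.items():
            for step in (-1, 1):
                nxt[x + step] = nxt.get(x + step, 0) + p / 2
        dist = nxt
    return dist

n = 12
dist = propagate(n)
assert dist == sum_over_paths(n)
# Each endpoint x is reached by comb(n, (n + x) // 2) distinct paths
assert all(p == Fraction(comb(n, (n + x) // 2), 2 ** n) for x, p in dist.items())
variance = sum(p * x * x for x, p in dist.items())
print(variance)  # equals n: the spread grows linearly with time, as in diffusion
```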

https://en.wikipedia.org/wiki/Path_integral_formulation#As_a_probability

The maser is based on the principle of stimulated emission proposed by Albert Einstein in 1917. When atoms have been induced into an excited energy state, they can amplify radiation at a frequency particular to the element or molecule used as the masing medium (similar to what occurs in the lasing medium in a laser).

By putting such an amplifying medium in a resonant cavity, feedback is created that can produce coherent radiation.
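Why amplification plus feedback yields a steady coherent output can be sketched with a toy round-trip model. This is an assumption-laden illustration, not a physical maser model: each pass multiplies the stored intensity by a saturated gain exp(g0 / (1 + I/I_sat)) and by a loss factor `reflectivity`. Above threshold the intensity grows from a tiny seed until the gain clamps at reflectivity × gain = 1; below threshold it dies away. All parameter names and values are hypothetical.

```python
import math

def round_trip_intensity(g0, reflectivity, i_sat=1.0, seed=1e-6, passes=2000):
    """Iterate the toy cavity: saturated gain per pass times cavity loss per pass."""
    intensity = seed  # stands in for spontaneous emission starting the process
    for _ in range(passes):
        gain = math.exp(g0 / (1.0 + intensity / i_sat))  # gain falls as intensity rises
        intensity *= reflectivity * gain
    return intensity

def steady_state(g0, reflectivity, i_sat=1.0):
    """Analytic fixed point where reflectivity * gain = 1; zero below threshold."""
    loss = -math.log(reflectivity)
    return max(0.0, i_sat * (g0 / loss - 1.0))

print(round_trip_intensity(0.5, 0.9), steady_state(0.5, 0.9))   # above threshold
print(round_trip_intensity(0.05, 0.9), steady_state(0.05, 0.9))  # below threshold
```

The threshold condition here is g0 > −ln(reflectivity): the small-signal gain must beat the round-trip loss before feedback can sustain oscillation.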

- Atomic beam masers

- Ammonia maser

- Free electron maser

- Hydrogen maser

- Gas masers

- Rubidium maser

- Liquid-dye and chemical laser

- Solid state masers

- Ruby maser

- Whispering-gallery modes iron-sapphire maser

- Dual noble gas maser (the masing medium is a nonpolar mixture of two noble gases[7])

In 2012, a research team from the National Physical Laboratory and Imperial College London developed a solid-state maser that operated at room temperature by using optically pumped, pentacene-doped p-Terphenyl as the amplifier medium.[8][9][10] It produced pulses of maser emission lasting for a few hundred microseconds.

In 2018, a research team from Imperial College London and University College London demonstrated continuous-wave maser oscillation using synthetic diamonds containing nitrogen-vacancy defects.[11][12]

https://en.wikipedia.org/wiki/Maser

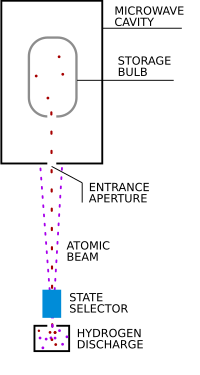

The hydrogen maser is used as an atomic frequency standard. Together with other kinds of atomic clocks, these help make up the International Atomic Time standard ("Temps Atomique International" or "TAI" in French). This is the international time scale coordinated by the International Bureau of Weights and Measures. Norman Ramsey and his colleagues first conceived of the maser as a timing standard. More recent masers are practically identical to their original design. Maser oscillations rely on the stimulated emission between two hyperfine energy levels of atomic hydrogen. Here is a brief description of how they work:

- First, a beam of atomic hydrogen is produced. This is done by subjecting the gas at low pressure to a high-frequency radio wave discharge (see the picture on this page).

- The next step is "state selection"—in order to get some stimulated emission, it is necessary to create a population inversion of the atoms. This is done in a way that is very similar to the Stern–Gerlach experiment. After passing through an aperture and a magnetic field, many of the atoms in the beam are left in the upper energy level of the lasing transition. From this state, the atoms can decay to the lower state and emit some microwave radiation.

- A high Q factor (quality factor) microwave cavity confines the microwaves and reinjects them repeatedly into the atom beam. The stimulated emission amplifies the microwaves on each pass through the beam. This combination of amplification and feedback is what defines all oscillators. The resonant frequency of the microwave cavity is tuned to the frequency of the hyperfine energy transition of hydrogen: 1,420,405,752 hertz.[15]

- A small fraction of the signal in the microwave cavity is coupled into a coaxial cable and then sent to a coherent radio receiver.

- The microwave signal coming out of the maser is very weak (a few picowatts). The frequency of the signal is fixed and extremely stable. The coherent receiver is used to amplify the signal and change the frequency. This is done using a series of phase-locked loops and a high performance quartz oscillator.
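The numbers behind the hydrogen maser are easy to check. The sketch below converts the quoted hyperfine frequency into a wavelength (about 21.1 cm, the same transition as the astronomical 21-cm hydrogen line) and a period, and shows why stability matters for timekeeping: a clock running at a fractional frequency offset y accumulates a time error of y·t. The 1e-15 offset is an illustrative assumption, not a specification of any maser.

```python
C = 299_792_458.0              # speed of light in m/s (exact by SI definition)
F_HYDROGEN = 1_420_405_752.0   # hydrogen hyperfine transition frequency, Hz

wavelength_cm = C / F_HYDROGEN * 100
period_ps = 1e12 / F_HYDROGEN
print(f"wavelength = {wavelength_cm:.2f} cm, period = {period_ps:.1f} ps")

def accumulated_time_error(fractional_offset, seconds):
    """A clock whose frequency is off by y = df/f gains or loses y * t seconds."""
    return fractional_offset * seconds

# Hypothetical fractional offset of 1e-15 held for one day (86,400 s)
print(f"{accumulated_time_error(1e-15, 86_400) * 1e12:.1f} ps per day")
```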

Maser-like stimulated emission has also been observed in nature from interstellar space, and it is frequently called "superradiant emission" to distinguish it from laboratory masers. Such emission is observed from molecules such as water (H2O), hydroxyl radicals (•OH), methanol (CH3OH), formaldehyde (HCHO), and silicon monoxide (SiO). Water molecules in star-forming regions can undergo a population inversion and emit radiation at about 22.0 GHz, creating the brightest spectral line in the radio universe. Some water masers also emit radiation from a rotational transition at a frequency of 96 GHz.[16][17]

Extremely powerful masers, associated with active galactic nuclei, are known as megamasers and are up to a million times more powerful than stellar masers.

In physics, Faddeev–Popov ghosts (also called Faddeev–Popov gauge ghosts or Faddeev–Popov ghost fields) are extraneous fields which are introduced into gauge quantum field theories to maintain the consistency of the path integral formulation. They are named after Ludvig Faddeev and Victor Popov.[1][2]

https://en.wikipedia.org/wiki/Faddeev–Popov_ghost

Whispering-gallery waves, or whispering-gallery modes, are a type of wave that can travel around a concave surface. Originally discovered for sound waves in the whispering gallery of St Paul’s Cathedral, they can exist for light and for other waves, with important applications in nondestructive testing, lasing, cooling and sensing, as well as in astronomy.

https://en.wikipedia.org/wiki/Whispering-gallery_wave

Nuclear fission products are the atomic fragments left after a large atomic nucleus undergoes nuclear fission. Typically, a large nucleus like that of uranium fissions by splitting into two smaller nuclei, along with a few neutrons, the release of heat energy (kinetic energy of the nuclei), and gamma rays. The two smaller nuclei are the fission products.

About 0.2% to 0.4% of fissions are ternary fissions, producing a third light nucleus such as helium-4 (90%) or tritium (7%).

https://en.wikipedia.org/wiki/Nuclear_fission_product

A neutron bomb, officially defined as a type of enhanced radiation weapon (ERW), is a low-yield thermonuclear weapon designed to maximize lethal neutron radiation in the immediate vicinity of the blast while minimizing the physical power of the blast itself. The neutron release generated by a nuclear fusion reaction is intentionally allowed to escape the weapon, rather than being absorbed by its other components.[3] The neutron burst, which is used as the primary destructive action of the warhead, is able to penetrate enemy armor more effectively than a conventional warhead, thus making it more lethal as a tactical weapon.

https://en.wikipedia.org/wiki/Neutron_bomb

A salted bomb is a nuclear weapon designed to function as a radiological weapon, producing enhanced quantities of radioactive fallout, rendering a large area uninhabitable.[1] The term is derived both from the means of their manufacture, which involves the incorporation of additional elements to a standard atomic weapon, and from the expression "to salt the earth", meaning to render an area uninhabitable for generations. The idea originated with Hungarian-American physicist Leo Szilard, in February 1950. His intent was not to propose that such a weapon be built, but to show that nuclear weapon technology would soon reach the point where it could end human life on Earth.[1]

No intentionally salted bomb has ever been atmospherically tested, and as far as is publicly known, none have ever been built.[1] However, the UK tested a 1 kiloton bomb incorporating a small amount of cobalt as an experimental radiochemical tracer at their Tadje testing site in Maralinga range, Australia, on September 14, 1957.[2] The triple "taiga" nuclear salvo test, as part of the preliminary March 1971 Pechora–Kama Canal project, converted significant amounts of stable cobalt-59 to radioactive cobalt-60 by fusion generated neutron activation and this product is responsible for about half of the gamma dose measured at the test site in 2011.[3][4] The experiment was regarded as a failure and not repeated.[1]

A salted bomb should not be confused with a dirty bomb, which is an ordinary explosive bomb containing radioactive material which is spread over the area when the bomb explodes. A salted bomb is able to contaminate a much larger area than a dirty bomb.

Salted versions of both fission and fusion weapons can be made by surrounding the core of the explosive device with a material containing an element that can be converted to a highly radioactive isotope by neutron bombardment.[1] When the bomb explodes, the element absorbs neutrons released by the nuclear reaction, converting it to its radioactive form. The explosion scatters the resulting radioactive material over a wide area, leaving it uninhabitable far longer than an area affected by typical nuclear weapons. In a salted hydrogen bomb, the radiation case around the fusion fuel, which normally is made of some fissionable element, is replaced with a metallic salting element. Salted fission bombs can be made by replacing the neutron reflector between the fissionable core and the explosive layer with a metallic element. The energy yield from a salted weapon is usually lower than from an ordinary weapon of similar size as a consequence of these changes.

The radioactive isotope used for the fallout material would be a high intensity gamma ray emitter, with a half-life long enough that it remains lethal for an extended period. It would also have to have a chemistry that causes it to return to earth as fallout, rather than stay in the atmosphere after being vaporized in the explosion. Another consideration is biological: radioactive isotopes of elements normally taken up by plants and animals as nutrition would pose a special threat to organisms that absorbed them, as their radiation would be delivered from within the body of the organism.

Radioactive isotopes that have been suggested for salted bombs include gold-198, tantalum-182, zinc-65, and cobalt-60.[1] Physicist W. H. Clark looked at the potential of such devices and estimated that a 20 megaton bomb salted with sodium would generate sufficient radiation to contaminate 200,000 square miles (520,000 km2) (an area that is slightly larger than Spain or Thailand, though smaller than France). Given the intensity of the γ radiation, not even those in basement shelters could survive within the fallout zone.[5] However, the short half-life of sodium-24 (15 h)[6]:25 would mean that the radiation would not spread far enough to be a true doomsday weapon.[5][7]

A cobalt bomb was first suggested by Leo Szilard, who publicly sounded the alarm against the possible development of salted thermonuclear bombs that might annihilate mankind[8] in a University of Chicago Round Table radio program on February 26, 1950.[9] His comments, as well as those of Hans Bethe, Harrison Brown, and Frederick Seitz (the three other scientists who participated in the program), were attacked by the Atomic Energy Commission's former Chairman David Lilienthal, and the criticisms plus a response from Szilard were published.[9] Time compared Szilard to Chicken Little[7] while the AEC dismissed his ideas, but scientists debated whether it was feasible or not. The Bulletin of the Atomic Scientists commissioned a study by James R. Arnold, who concluded that it was.[10] Clark suggested that a 50,000 megaton cobalt bomb did have the potential to produce sufficient long-lasting radiation to be a doomsday weapon, in theory,[5] but was of the view that, even then, "enough people might find refuge to wait out the radioactivity and emerge to begin again."[7]

https://en.wikipedia.org/wiki/Salted_bomb

Antimatter catalyzed nuclear pulse propulsion is a variation of nuclear pulse propulsion based upon the injection of antimatter into a mass of nuclear fuel which normally would not be useful in propulsion. The anti-protons used to start the reaction are consumed, so it is a misnomer to refer to them as a catalyst.

Traditional nuclear pulse propulsion has the downside that the minimum size of the engine is defined by the minimum size of the nuclear bombs used to create thrust. A conventional nuclear H-bomb design consists of two parts, the primary which is almost always based on plutonium, and a secondary using fusion fuel, normally lithium-deuteride. There is a minimum size for the primary, about 25 kilograms, which produces a small nuclear explosion about 1/100 kiloton (10 tons, 42 GJ; W54). More powerful devices scale up in size primarily through the addition of fusion fuel. Of the two, the fusion fuel is much less expensive and gives off far fewer radioactive products, so from a cost and efficiency standpoint, larger bombs are much more efficient. However, using such large bombs for spacecraft propulsion demands much larger structures able to handle the stress. There is a tradeoff between the two demands.

By injecting a small amount of antimatter into a subcritical mass of fuel (typically plutonium or uranium), fission of the fuel can be forced. An anti-proton has a negative electric charge just like an electron, and can be captured in a similar way by a positively charged atomic nucleus. The initial configuration, however, is not stable and radiates energy as gamma rays. As a consequence, the anti-proton moves closer and closer to the nucleus until they eventually touch, at which point the anti-proton and a proton are both annihilated. This reaction releases a tremendous amount of energy, some of which is released as gamma rays and some transferred as kinetic energy to the nucleus, causing it to explode. The resulting shower of neutrons can cause the surrounding fuel to undergo rapid fission or even nuclear fusion.

The lower limit of the device size is determined by anti-proton handling issues and fission reaction requirements; as such, unlike either the Project Orion-type propulsion system, which requires large numbers of nuclear explosive charges, or the various anti-matter drives, which require impossibly expensive amounts of antimatter, antimatter catalyzed nuclear pulse propulsion has intrinsic advantages.[1]

A conceptual design of an antimatter-catalyzed thermonuclear explosive physics package is one in which the primary mass of plutonium, usually necessary for the ignition in a conventional Teller-Ulam thermonuclear explosion, is replaced by one microgram of antihydrogen. In this theoretical design, the antimatter is helium-cooled and magnetically levitated in the center of the device, in the form of a pellet a tenth of a mm in diameter, a position analogous to the primary fission core in the layer cake/Sloika design.[2][3] As the antimatter must remain away from ordinary matter until the desired moment of the explosion, the central pellet must be isolated from the surrounding hollow sphere of 100 grams of thermonuclear fuel. During and after the implosive compression by the high explosive lenses, the fusion fuel comes into contact with the antihydrogen. Annihilation reactions, which would start soon after the Penning trap is destroyed, are to provide the energy to begin the nuclear fusion in the thermonuclear fuel. If the chosen degree of compression is high, a device with increased explosive/propulsive effects is obtained, and if it is low, that is, the fuel is not at high density, a considerable number of neutrons will escape the device, and a neutron bomb forms. In both cases the electromagnetic pulse effect and the radioactive fallout are substantially lower than those of a conventional fission or Teller-Ulam device of the same yield, approximately 1 kt.[4]

https://en.wikipedia.org/wiki/Antimatter-catalyzed_nuclear_pulse_propulsion

A nuclear salt-water rocket (NSWR) is a theoretical type of nuclear thermal rocket which was designed by Robert Zubrin.[1] In place of traditional chemical propellant, such as that in a chemical rocket, the rocket would be fueled by salts of plutonium or 20 percent enriched uranium. The solution would be contained in a bundle of pipes coated in boron carbide (for its properties of neutron absorption). Through a combination of the coating and space between the pipes, the contents would not reach critical mass until the solution is pumped into a reaction chamber, thus reaching a critical mass, and being expelled through a nozzle to generate thrust.[1]

https://en.wikipedia.org/wiki/Nuclear_salt-water_rocket

Gas core reactor rockets are a conceptual type of rocket that is propelled by the exhausted coolant of a gaseous fission reactor. The nuclear fission reactor core may be either a gas or plasma. They may be capable of creating specific impulses of 3,000–5,000 s (30 to 50 kN·s/kg, effective exhaust velocities 30 to 50 km/s) and thrust which is enough for relatively fast interplanetary travel. Heat transfer to the working fluid (propellant) is by thermal radiation, mostly in the ultraviolet, given off by the fission gas at a working temperature of around 25,000 °C.
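The conversion between the quoted specific impulse and exhaust velocity is v_e = I_sp · g₀, with g₀ = 9.80665 m/s², so 3,000 s and 5,000 s correspond to about 29.4 and 49.0 km/s. The sketch below also feeds that into the Tsiolkovsky rocket equation; the mass ratio of 3 is an arbitrary assumption for illustration, not a figure from the source.

```python
import math

G0 = 9.80665  # standard gravity, m/s^2; defines specific impulse in seconds

def exhaust_velocity(isp_seconds):
    """Effective exhaust velocity v_e = Isp * g0, in m/s."""
    return isp_seconds * G0

def delta_v(isp_seconds, mass_ratio):
    """Tsiolkovsky rocket equation: dv = v_e * ln(m_initial / m_final)."""
    return exhaust_velocity(isp_seconds) * math.log(mass_ratio)

for isp in (3000, 5000):
    print(f"{isp} s -> v_e = {exhaust_velocity(isp) / 1000:.1f} km/s, "
          f"dv at assumed mass ratio 3 = {delta_v(isp, 3.0) / 1000:.1f} km/s")
```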

https://en.wikipedia.org/wiki/Gas_core_reactor_rocket

In a traditional nuclear photonic rocket, an onboard nuclear reactor would generate such high temperatures that the blackbody radiation from the reactor would provide significant thrust. The disadvantage is that it takes much power to generate a small amount of thrust this way, so acceleration is very low. The photon radiators would most likely be constructed using graphite or tungsten. Photonic rockets are technologically feasible, but rather impractical with current technology based on an onboard nuclear power source. However, the recent development of the Photonic Laser Thruster (PLT), a form of beamed laser propulsion (BLP) with photon recycling, promises to overcome these issues by separating the nuclear power source from the spacecraft and by increasing the thrust-to-power ratio (specific thrust) by orders of magnitude.[1]
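The "much power for little thrust" problem follows from photon momentum: a perfectly collimated beam of power P carries momentum flux P/c, so the thrust is F = P/c. A full gigawatt yields only about 3.3 N, or roughly 300 MW per newton. The sketch assumes ideal collimation and no reflection; it does not model the photon recycling mentioned above.

```python
C = 299_792_458.0  # speed of light, m/s

def photon_thrust(power_watts):
    """Thrust of a perfectly collimated, fully emitted photon beam: F = P / c."""
    return power_watts / C

def power_per_newton():
    """Radiated power needed for 1 N of thrust under the same idealisation."""
    return C  # watts per newton

print(f"1 GW of radiation -> {photon_thrust(1e9):.2f} N")
print(f"{power_per_newton() / 1e6:.0f} MW needed per newton")
```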

https://en.wikipedia.org/wiki/Nuclear_photonic_rocket

A boosted fission weapon usually refers to a type of nuclear bomb that uses a small amount of fusion fuel to increase the rate, and thus yield, of a fission reaction. The neutrons released by the fusion reactions add to the neutrons released due to fission, allowing for more neutron-induced fission reactions to take place. The rate of fission is thereby greatly increased such that much more of the fissile material is able to undergo fission before the core explosively disassembles. The fusion process itself adds only a small amount of energy to the process, perhaps 1%.[1]

The alternative meaning is an obsolete type of single-stage nuclear bomb that uses thermonuclear fusion on a large scale to create fast neutrons that can cause fission in depleted uranium, but which is not a two-stage hydrogen bomb. This type of bomb was referred to by Edward Teller as "Alarm Clock", and by Andrei Sakharov as "Sloika" or "Layer Cake" (Teller and Sakharov developed the idea independently, as far as is known).[2]

https://en.wikipedia.org/wiki/Boosted_fission_weapon

A pure fusion weapon is a hypothetical hydrogen bomb design that does not need a fission "primary" explosive to ignite the fusion of deuterium and tritium, two heavy isotopes of hydrogen used in fission-fusion thermonuclear weapons. Such a weapon would require no fissile material and would therefore be much easier to develop in secret than existing weapons. The necessity of separating weapons-grade uranium (U-235) or breeding plutonium (Pu-239) requires a substantial and difficult-to-conceal industrial investment, and blocking the sale and transfer of the needed machinery has been the primary mechanism to control nuclear proliferation to date. Because it would not require a fission primary explosive to initiate a fusion reaction, the pure fusion weapon would also have greatly increased potential yield over current thermonuclear weapons.[citation needed]

https://en.wikipedia.org/wiki/Pure_fusion_weapon

A thermonuclear weapon, fusion weapon or hydrogen bomb (H bomb), is a second-generation nuclear weapon design. Its greater sophistication affords it vastly greater destructive power than first-generation atomic bombs, a more compact size, a lower mass or a combination of these benefits. Characteristics of nuclear fusion reactions make possible the use of non-fissile depleted uranium as the weapon's main fuel, thus allowing more efficient use of scarce fissile material such as uranium-235 (235U) or plutonium-239 (239Pu).

Modern fusion weapons consist essentially of two main components: a nuclear fission primary stage (fueled by 235U or 239Pu) and a separate nuclear fusion secondary stage containing thermonuclear fuel: the heavy hydrogen isotopes deuterium and tritium, or in modern weapons lithium deuteride. For this reason, thermonuclear weapons are often colloquially called hydrogen bombs or H-bombs.[1]

https://en.wikipedia.org/wiki/Thermonuclear_weapon

An optical amplifier is a device that amplifies an optical signal directly, without the need to first convert it to an electrical signal. An optical amplifier may be thought of as a laser without an optical cavity, or one in which feedback from the cavity is suppressed. Optical amplifiers are important in optical communication and laser physics. They are used as optical repeaters in the long-distance fiber-optic cables which carry much of the world's telecommunication links.

There are several different physical mechanisms that can be used to amplify a light signal, which correspond to the major types of optical amplifiers. In doped fiber amplifiers and bulk lasers, stimulated emission in the amplifier's gain medium causes amplification of incoming light. In semiconductor optical amplifiers (SOAs), the gain comes from stimulated electron-hole recombination. In Raman amplifiers, Raman scattering of incoming light with phonons in the lattice of the gain medium produces photons coherent with the incoming photons. Parametric amplifiers use a nonlinear (parametric) optical interaction to transfer energy from a strong pump beam to the signal.
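As repeaters, in-line amplifiers simply have to restore the power each fiber span loses. The link-budget sketch below works in decibels, where losses and gains add. The 0.2 dB/km attenuation (typical of standard single-mode fiber near 1550 nm) and the 80 km span length are assumptions for illustration; amplifier noise, dispersion, and nonlinearity are ignored.

```python
def db_to_linear(db):
    """Convert a power ratio in dB to a linear factor."""
    return 10 ** (db / 10)

def span_output_dbm(input_dbm, length_km, loss_db_per_km, amp_gain_db):
    """Signal power after one fiber span followed by one in-line optical amplifier."""
    return input_dbm - length_km * loss_db_per_km + amp_gain_db

# Assumed values: 0.2 dB/km fiber loss and 80 km between amplifiers
LOSS_DB_PER_KM, SPAN_KM = 0.2, 80.0
gain_needed_db = LOSS_DB_PER_KM * SPAN_KM  # each amplifier restores the 16 dB span loss

power_dbm = 0.0          # launch power 0 dBm (1 mW)
for _ in range(10):      # ten spans, 800 km in total
    power_dbm = span_output_dbm(power_dbm, SPAN_KM, LOSS_DB_PER_KM, gain_needed_db)

print(f"{power_dbm:.1f} dBm after 800 km; "
      f"each amplifier provides {db_to_linear(gain_needed_db):.1f}x gain")
```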

https://en.wikipedia.org/wiki/Optical_amplifier

GLASS SCIENCE, OPTICS, LASER

https://en.wikipedia.org/wiki/Time–temperature_superposition

https://en.wikipedia.org/wiki/Stimulated_emission

https://en.wikipedia.org/wiki/Optoelectronics

https://en.wikipedia.org/wiki/Absorption_(electromagnetic_radiation)

https://en.wikipedia.org/wiki/International_System_of_Units

https://en.wikipedia.org/wiki/Proton_emission

https://en.wikipedia.org/wiki/Weak_interaction

https://en.wikipedia.org/wiki/Positron_emission

https://en.wikipedia.org/wiki/Annihilation

https://en.wikipedia.org/wiki/Positron

https://en.wikipedia.org/wiki/Extended_periodic_table#Pyykkö_model

https://en.wikipedia.org/wiki/Cluster_decay

https://en.wikipedia.org/wiki/Nuclear_reaction

https://en.wikipedia.org/wiki/Neurocysticercosis

https://en.wikipedia.org/wiki/Albendazole

https://en.wikipedia.org/wiki/Category:Carbamates

https://en.wikipedia.org/wiki/Table_of_nuclides

https://en.wikipedia.org/wiki/Theories_of_general_anaesthetic_action

https://en.wikipedia.org/wiki/List_of_unsolved_problems_in_chemistry

https://en.wikipedia.org/wiki/Onchocerca_volvulus

https://en.wikipedia.org/wiki/Flatworm

https://en.wikipedia.org/wiki/Thioketone

https://en.wikipedia.org/wiki/Category:Piperidines

https://en.wikipedia.org/wiki/Ethylenediamine

https://en.wikipedia.org/wiki/Cyproheptadine

https://en.wikipedia.org/wiki/Amine#Classification_of_amines

https://en.wikipedia.org/wiki/Substituted_piperazine

https://en.wikipedia.org/wiki/Piperoxan

https://en.wikipedia.org/wiki/Theophylline

https://en.wikipedia.org/wiki/H1_antagonist

https://en.wikipedia.org/wiki/Bisphosphonate

https://en.wikipedia.org/wiki/Hydrogen_potassium_ATPase

https://en.wikipedia.org/wiki/Proton-pump_inhibitor

https://en.wikipedia.org/wiki/CYP2C19

https://en.wikipedia.org/wiki/Barbiturate

https://en.wikipedia.org/wiki/Fluorination_by_sulfur_tetrafluoride

https://www.sciencedirect.com/topics/chemistry/metal-matrix-composites

https://www.sciencedirect.com/topics/chemistry/silicon-carbide

https://www.sciencedirect.com/topics/chemistry/sublimation-method

https://en.wikipedia.org/wiki/Electron_affinity_(data_page)

https://pubchem.ncbi.nlm.nih.gov/periodic-table/

https://pubchem.ncbi.nlm.nih.gov/#query=H5F

https://chemical-search.toxplanet.com/#/category-search/409-21-2

https://tips.fbi.gov/complete

Nikiya Anton Bettey Particle. BLK-BLU WHTWHT.

Equilibrium, Dyequilibrium Particle.

The actinoid /ˈæktɪnɔɪd/ (IUPAC nomenclature, also called actinide[1] /ˈæktɪnaɪd/) series encompasses the 15 metallic chemical elements with atomic numbers from 89 to 103, actinium through lawrencium. The actinoid series derives its name from the first element in the series, actinium. The informal chemical symbol An is used in general discussions of actinoid chemistry to refer to any actinoid.[2][3][4]

Strictly speaking, actinium has been labeled as a group 3 element, but it is often included in general discussions of the chemistry of the actinoid elements. Since "actinoid" means "actinium-like" (cf. humanoid or android), it has been argued for semantic reasons that actinium cannot logically be an actinoid, but IUPAC acknowledges its inclusion based on common usage.[5]

All but one of the actinides are f-block elements, with the exception being either actinium or lawrencium. The series mostly corresponds to the filling of the 5f electron shell, although actinium and thorium lack any 5f electrons, and curium and lawrencium have the same number as the preceding element. In comparison with the lanthanides, also mostly f-block elements, the actinides show much more variable valence. They all have very large atomic and ionic radii and exhibit an unusually large range of physical properties. While actinium and the late actinides (from americium onwards) behave similarly to the lanthanides, the elements thorium, protactinium, and uranium are much more similar to transition metals in their chemistry, with neptunium and plutonium occupying an intermediate position.

All actinides are radioactive and release energy upon radioactive decay; naturally occurring uranium and thorium, and synthetically produced plutonium are the most abundant actinides on Earth. These are used in nuclear reactors and nuclear weapons. Uranium and thorium also have diverse current or historical uses, and americium is used in the ionization chambers of most modern smoke detectors.

Of the actinides, primordial thorium and uranium occur naturally in substantial quantities. The radioactive decay of uranium produces transient amounts of actinium and protactinium, and atoms of neptunium and plutonium are occasionally produced from transmutation reactions in uranium ores. The other actinides are purely synthetic elements.[2][6] Nuclear weapons tests have released at least six actinides heavier than plutonium into the environment; analysis of debris from a 1952 hydrogen bomb explosion showed the presence of americium, curium, berkelium, californium, einsteinium and fermium.[7]

https://en.wikipedia.org/wiki/Actinide

A deep geological repository is a way of storing toxic or radioactive waste within a stable geologic environment (typically 200–1000 m deep).[1] It entails a combination of waste form, waste package, engineered seals and geology that is suited to provide a high level of long-term isolation and containment without future maintenance. A number of mercury, cyanide and arsenic waste repositories are operating worldwide including Canada (Giant Mine) and Germany (potash mines in Herfa-Neurode and Zielitz).[2]

https://en.wikipedia.org/wiki/Deep_geological_repository

High-level waste (HLW) is a type of nuclear waste created by the reprocessing of spent nuclear fuel.[1] It exists in two main forms:

- First and second cycle raffinate and other waste streams created by nuclear reprocessing.

- Waste formed by vitrification of liquid high-level waste.

Liquid high-level waste is typically held temporarily in underground tanks pending vitrification. Most of the high-level waste created by the Manhattan Project and the weapons programs of the Cold War exists in this form because funding for further processing was typically not part of the original weapons programs. Both spent nuclear fuel and vitrified waste are considered suitable forms for long-term disposal,[2] after a period of temporary storage in the case of spent nuclear fuel.

High-level waste is very radioactive and, therefore, requires special shielding during handling and transport. Initially it also needs cooling, because it generates a great deal of heat. Most of the heat, at least after short-lived nuclides have decayed, is from the medium-lived fission products caesium-137 and strontium-90, which have half-lives on the order of 30 years.
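Because decay heat from a given nuclide is proportional to its activity, the heat from caesium-137 and strontium-90 falls off as 2^(−t/T½). The sketch below assumes the commonly quoted half-lives of 30.08 years (Cs-137) and 28.79 years (Sr-90); after about 300 years, roughly ten half-lives, only about a thousandth of either remains.

```python
# Commonly quoted half-lives in years (assumed values for this sketch)
HALF_LIFE_YEARS = {"caesium-137": 30.08, "strontium-90": 28.79}

def fraction_remaining(half_life, years):
    """Exponential decay: N(t)/N0 = 2 ** (-t / T_half)."""
    return 2.0 ** (-years / half_life)

for nuclide, t_half in HALF_LIFE_YEARS.items():
    for years in (30, 100, 300):
        print(f"{nuclide}: {fraction_remaining(t_half, years):.4f} left after {years} y")
```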

A typical large 1000 MWe nuclear reactor produces 25–30 tons of spent fuel per year.[4] If the fuel were reprocessed and vitrified, the waste volume would be only about three cubic meters per year, but the decay heat would be almost the same.

It is generally accepted that the final waste will be disposed of in a deep geological repository, and many countries have developed plans for such a site, including Finland, France, Japan, United States and Sweden.

High-level waste is the highly radioactive waste material resulting from the reprocessing of spent nuclear fuel, including liquid waste produced directly in reprocessing and any solid material derived from such liquid waste that contains fission products in sufficient concentrations; and other highly radioactive material that is determined, consistent with existing law, to require permanent isolation.[5]

Spent (used) reactor fuel.

- Spent nuclear fuel is used reactor fuel that is no longer efficient in creating electricity, because its fission process has slowed due to a build-up of reaction poisons. However, it is still thermally hot, highly radioactive, and potentially harmful.

Waste materials from reprocessing.

- Materials for nuclear weapons are acquired by reprocessing spent nuclear fuel from breeder reactors. Reprocessing is a method of chemically treating spent fuel to separate out uranium and plutonium. The byproduct of reprocessing is a highly radioactive sludge residue.

High-level radioactive waste is stored for 10 or 20 years in spent fuel pools, and then can be put in dry cask storage facilities.

In 1997, in the 20 countries which account for most of the world's nuclear power generation, spent fuel storage capacity at the reactors was 148,000 tonnes, with 59% of this utilized. Away-from-reactor storage capacity was 78,000 tonnes, with 44% utilized.[6] With annual additions of about 12,000 tonnes, issues for final disposal are not urgent.
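The 1997 storage figures above can be turned into a rough headroom estimate. This is a back-of-the-envelope sketch, not a projection from the source. It assumes that at-reactor and away-from-reactor space are interchangeable, that no new capacity is added, and that additions stay at about 12,000 tonnes per year. The constant and function names are hypothetical.

```python
# Back-of-the-envelope sketch using the 1997 figures quoted above.
# Assumptions: both kinds of storage are interchangeable, no new capacity
# is built, and additions stay constant at ~12,000 t/yr.
AT_REACTOR_CAPACITY_T = 148_000
AT_REACTOR_UTILISED = 0.59
AWAY_CAPACITY_T = 78_000
AWAY_UTILISED = 0.44
ANNUAL_ADDITIONS_T = 12_000


def free_capacity(capacity_t: float, utilised_fraction: float) -> float:
    """Tonnes of storage still available."""
    return capacity_t * (1.0 - utilised_fraction)


at_reactor_free = free_capacity(AT_REACTOR_CAPACITY_T, AT_REACTOR_UTILISED)
away_free = free_capacity(AWAY_CAPACITY_T, AWAY_UTILISED)
total_free = at_reactor_free + away_free
years_of_headroom = total_free / ANNUAL_ADDITIONS_T

if __name__ == "__main__":
    print(f"At-reactor free:        {at_reactor_free:,.0f} t")
    print(f"Away-from-reactor free: {away_free:,.0f} t")
    print(f"Total free: {total_free:,.0f} t, about {years_of_headroom:.1f} years of additions")
```

With these inputs, the free space comes to about 104,000 tonnes, or a little under nine years of additions at the 1997 rate.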

https://en.wikipedia.org/wiki/High-level_waste

Nuclear reprocessing is the chemical separation of fission products and unused uranium from spent nuclear fuel.[1] Originally, reprocessing was used solely to extract plutonium for producing nuclear weapons. With commercialization of nuclear power, the reprocessed plutonium was recycled back into MOX nuclear fuel for thermal reactors.[2] The reprocessed uranium, also known as the spent fuel material, can in principle also be re-used as fuel, but that is only economical when uranium supply is low and prices are high.[citation needed] A breeder reactor is not restricted to using recycled plutonium and uranium. It can employ all the actinides, closing the nuclear fuel cycle and potentially multiplying the energy extracted from natural uranium by about 60 times.[3][4]

Reprocessing must be highly controlled and carefully executed in advanced facilities by highly specialized personnel. Fuel bundles which arrive at the sites from nuclear power plants (after having cooled down for several years) are completely dissolved in chemical baths, which could pose contamination risks if not properly managed. Thus, a reprocessing factory must be considered an advanced chemical site, rather than a nuclear one.

The potentially useful components dealt with in nuclear reprocessing comprise specific actinides (plutonium, uranium, and some minor actinides). The lighter components include fission products, activation products, and cladding.

| material | disposition |
|---|---|
| plutonium, minor actinides, reprocessed uranium | fission in fast, fusion, or subcritical reactor |
| reprocessed uranium, cladding, filters | less stringent storage as intermediate-level waste |
| long-lived fission and activation products | nuclear transmutation or geological repository |
| medium-lived fission products 137Cs and 90Sr | medium-term storage as high-level waste |
| useful radionuclides and noble metals | industrial and medical uses |

https://en.wikipedia.org/wiki/Nuclear_reprocessing

Spent nuclear fuel, occasionally called used nuclear fuel, is nuclear fuel that has been irradiated in a nuclear reactor (usually at a nuclear power plant). It is no longer useful in sustaining a nuclear reaction in an ordinary thermal reactor and depending on its point along the nuclear fuel cycle, it may have considerably different isotopic constituents.[1]

In the oxide fuel, intense temperature gradients exist that cause fission products to migrate. The zirconium tends to move to the centre of the fuel pellet where the temperature is highest, while the lower-boiling fission products move to the edge of the pellet. The pellet is likely to contain many small bubble-like pores that form during use; the fission product xenon migrates to these voids. Some of this xenon will then decay to form caesium, hence many of these bubbles contain a large concentration of 137Cs.

In the case of mixed oxide (MOX) fuel, the xenon tends to diffuse out of the plutonium-rich areas of the fuel, and it is then trapped in the surrounding uranium dioxide. Neodymium tends not to be mobile.

Also metallic particles of an alloy of Mo-Tc-Ru-Pd tend to form in the fuel. Other solids form at the boundary between the uranium dioxide grains, but the majority of the fission products remain in the uranium dioxide as solid solutions. A paper describing a method of making a non-radioactive "uranium active" simulation of spent oxide fuel exists.[2]

Fission products of 235U and 239Pu (including indirect products further down their decay chains) make up 3% of the mass; these are considered radioactive waste or may be separated further for various industrial and medical uses. The fission products include every element from zinc through to the lanthanides; much of the fission yield is concentrated in two peaks, one in the second transition row (Zr, Mo, Tc, Ru, Rh, Pd, Ag) and the other later in the periodic table (I, Xe, Cs, Ba, La, Ce, Nd). Many of the fission products are either non-radioactive or only short-lived radioisotopes, but a considerable number are medium to long-lived radioisotopes such as 90Sr, 137Cs, 99Tc and 129I. Several countries have researched segregating the rare isotopes in fission waste, including the "fission platinoids" (Ru, Rh, Pd) and silver (Ag), as a way of offsetting the cost of reprocessing; this is not currently being done commercially.

The fission products can modify the thermal properties of the uranium dioxide; the lanthanide oxides tend to lower the thermal conductivity of the fuel, while the metallic nanoparticles slightly increase the thermal conductivity of the fuel.[3]

| Element | Gas | Metal | Oxide | Solid solution |
|---|---|---|---|---|
| Br Kr | Yes | - | - | - |
| Rb | Yes | - | Yes | - |
| Sr | - | - | Yes | Yes |
| Y | - | - | - | Yes |
| Zr | - | - | Yes | Yes |
| Nb | - | - | Yes | - |
| Mo | - | Yes | Yes | - |
| Tc Ru Rh Pd Ag Cd In Sb | - | Yes | - | - |
| Te | Yes | Yes | Yes | Yes |
| I Xe | Yes | - | - | - |
| Cs | Yes | - | Yes | - |
| Ba | - | - | Yes | Yes |
| La Ce Pr Nd Pm Sm Eu | - | - | - | Yes |

https://en.wikipedia.org/wiki/Spent_nuclear_fuel

Biosolids are solid organic matter recovered from a sewage treatment process and used as fertilizer.[1] In the past, it was common for farmers to use animal manure to improve their soil fertility. In the 1920s, the farming community also began to use sewage sludge from local wastewater treatment plants. Scientific research over many years has confirmed that these biosolids contain similar nutrients to those in animal manures. Biosolids that are used as fertilizer in the farming community are usually treated to help prevent disease-causing pathogens from spreading to the public.[2]

https://en.wikipedia.org/wiki/Biosolids

Waste-to-energy (WtE) or energy-from-waste (EfW) is the process of generating energy in the form of electricity and/or heat from the primary treatment of waste, or the processing of waste into a fuel source. WtE is a form of energy recovery. Most WtE processes generate electricity and/or heat directly through combustion, or produce a combustible fuel commodity, such as methane, methanol, ethanol, or synthetic fuels.[1]

https://en.wikipedia.org/wiki/Waste-to-energy

As an alternative to TRUEX, an extraction process using a malondiamide has been devised. The DIAMEX (DIAMide EXtraction) process has the advantage of avoiding the formation of organic waste which contains elements other than carbon, hydrogen, nitrogen, and oxygen. Such an organic waste can be burned without the formation of acidic gases which could contribute to acid rain (although the acidic gases could be recovered by a scrubber). The DIAMEX process is being worked on in Europe by the French CEA. The process is sufficiently mature that an industrial plant could be constructed with the existing knowledge of the process.[17] In common with PUREX, this process operates by a solvation mechanism.

https://en.wikipedia.org/wiki/Nuclear_reprocessing

The bismuth-phosphate process was used to extract plutonium from irradiated uranium taken from nuclear reactors.[1][2] It was developed during World War II by Stanley G. Thompson, a chemist working for the Manhattan Project at the University of California, Berkeley. This process was used to produce plutonium at the Hanford Site, and that plutonium was used in the atomic bomb dropped on Nagasaki in August 1945. The process was superseded in the 1950s by the REDOX and PUREX processes.

https://en.wikipedia.org/wiki/Bismuth_phosphate_process

Nuclear transmutation is the conversion of one chemical element or an isotope into another chemical element.[1] Because any element (or isotope of one) is defined by its number of protons (and neutrons) in its atoms, i.e. in the atomic nucleus, nuclear transmutation occurs in any process where the number of protons or neutrons in the nucleus is changed.

A transmutation can be achieved either by nuclear reactions (in which an outside particle reacts with a nucleus) or by radioactive decay, where no outside cause is needed.

Natural transmutation by stellar nucleosynthesis in the past created most of the heavier chemical elements in the known existing universe, and continues to take place to this day, creating the vast majority of the most common elements in the universe, including helium, oxygen and carbon. Most stars carry out transmutation through fusion reactions involving hydrogen and helium, while much larger stars are also capable of fusing heavier elements up to iron late in their evolution.

Elements heavier than iron, such as gold or lead, are created through neutron-capture transmutations (the s-process and r-process) that occur naturally in evolved stars, supernovae, and neutron star mergers. As stars begin to fuse heavier elements, substantially less energy is released from each fusion reaction. Reactions that produce elements heavier than iron are endothermic and unable to generate the energy required to maintain stable fusion inside the star.

One type of natural transmutation observable in the present occurs when certain radioactive elements present in nature spontaneously decay by a process that causes transmutation, such as alpha or beta decay. An example is the natural decay of potassium-40 to argon-40, which forms most of the argon in the air. Also on Earth, natural transmutations from the different mechanisms of natural nuclear reactions occur, due to cosmic ray bombardment of elements (for example, to form carbon-14), and also occasionally from natural neutron bombardment (for example, see natural nuclear fission reactor).

Artificial transmutation may occur in machinery that has enough energy to cause changes in the nuclear structure of the elements. Such machines include particle accelerators and tokamak reactors. Conventional fission power reactors also cause artificial transmutation, not from the power of the machine, but by exposing elements to neutrons produced by fission from an artificially produced nuclear chain reaction. For instance, when a uranium-235 atom is bombarded with slow neutrons, fission takes place. This releases, on average, two to three neutrons (about 2.4 for uranium-235) and a large amount of energy. The released neutrons then cause fission of other uranium atoms, until all of the available uranium is exhausted. This is called a chain reaction.
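Whether a chain reaction grows, holds steady, or dies out depends on how many neutrons from each fission go on to cause another fission. This is usually expressed as the effective multiplication factor k, and a power reactor is controlled so that k stays at about 1. The sketch below assumes a simple generation-by-generation model that ignores delayed neutrons and timing. It computes the neutron population N_g = k^g after g generations. The function name and the k values are hypothetical.

```python
# Minimal sketch of chain-reaction bookkeeping by neutron generation.
# k (effective multiplication factor) is a hypothetical parameter: the average
# number of neutrons from one fission that go on to cause another fission,
# after losses to leakage and non-fission capture. Delayed neutrons are ignored.


def neutrons_by_generation(k: float, generations: int, start: float = 1.0) -> list[float]:
    """Return the neutron population N_g = start * k**g for g = 0..generations."""
    if generations < 0:
        raise ValueError("generations must be non-negative")
    return [start * k ** g for g in range(generations + 1)]


if __name__ == "__main__":
    for k, label in ((0.95, "subcritical"), (1.0, "critical"), (1.05, "supercritical")):
        population = neutrons_by_generation(k, 100)
        print(f"k = {k} ({label}): {population[-1]:.3g} neutrons after 100 generations")
```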

Artificial nuclear transmutation has been considered as a possible mechanism for reducing the volume and hazard of radioactive waste.[2]

For more information on gold synthesis, see Synthesis of precious metals.

197Au + n → 198Au (half-life 2.7 days) → 198Hg + n → 199Hg + n → 200Hg + n → 201Hg + n → 202Hg + n → 203Hg (half-life 47 days) → 203Tl + n → 204Tl (half-life 3.8 years) → 204Pb
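The chain above alternates two kinds of step. Neutron capture raises the mass number A by one. Beta-minus decay turns a neutron into a proton, which raises the atomic number Z by one without changing A. A small bookkeeping sketch can check that the written steps really end at 204Pb. The step encoding and function names are illustrative assumptions, and the sketch ignores competing decay branches.

```python
# Minimal sketch: track (Z, A) through the gold -> lead chain written above.
# "n" = neutron capture (A + 1); "beta" = beta-minus decay (Z + 1, A unchanged).
SYMBOLS = {79: "Au", 80: "Hg", 81: "Tl", 82: "Pb"}

# Steps in the order they appear in the chain: 197Au -> ... -> 204Pb.
CHAIN = ["n", "beta", "n", "n", "n", "n", "n", "beta", "n", "beta"]


def step(z: int, a: int, kind: str) -> tuple[int, int]:
    """Apply one neutron capture or beta-minus decay to a nuclide (Z, A)."""
    if kind == "n":
        return z, a + 1
    if kind == "beta":
        return z + 1, a
    raise ValueError(f"unknown step: {kind}")


def walk(z: int, a: int, steps: list[str]) -> list[str]:
    """Return the nuclide labels visited (e.g. '198Au'), starting with the input."""
    labels = [f"{a}{SYMBOLS[z]}"]
    for kind in steps:
        z, a = step(z, a, kind)
        labels.append(f"{a}{SYMBOLS[z]}")
    return labels


if __name__ == "__main__":
    print(" -> ".join(walk(79, 197, CHAIN)))
```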

https://en.wikipedia.org/wiki/Nuclear_transmutation

Nucleosynthesis is the process that creates new atomic nuclei from pre-existing nucleons (protons and neutrons) and nuclei. According to current theories, the first nuclei were formed a few minutes after the Big Bang, through nuclear reactions in a process called Big Bang nucleosynthesis. After about 20 minutes, the universe had expanded and cooled to a point at which these high-energy collisions among nucleons ended, so only the fastest and simplest reactions occurred, leaving our universe containing about 75% hydrogen and 24% helium by mass. The rest is traces of other elements such as lithium and the hydrogen isotope deuterium. Nucleosynthesis in stars and their explosions later produced the variety of elements and isotopes that we have today, in a process called cosmic chemical evolution. The total mass in elements heavier than hydrogen and helium (called 'metals' by astrophysicists) remains small (a few percent), so the universe still has approximately the same composition.

Stars fuse light elements to heavier ones in their cores, giving off energy in the process known as stellar nucleosynthesis. Nuclear fusion reactions create many of the lighter elements, up to and including iron and nickel in the most massive stars. Products of stellar nucleosynthesis mostly remain trapped in stellar cores and remnants, except if ejected through stellar winds and explosions. The neutron capture reactions of the r-process and s-process create heavier elements, from iron upwards.

Supernova nucleosynthesis within exploding stars is largely responsible for the elements between oxygen and rubidium: from the ejection of elements produced during stellar nucleosynthesis; through explosive nucleosynthesis during the supernova explosion; and from the r-process (absorption of multiple neutrons) during the explosion.

Neutron star mergers are a recently discovered candidate source of elements produced in the r-process. When two neutron stars collide, a significant amount of neutron-rich matter may be ejected, including newly formed nuclei.

Cosmic ray spallation is a process wherein cosmic rays impact the nuclei of the interstellar medium and fragment larger atomic nuclei. It is a significant source of the lighter nuclei, particularly 3He, 9Be and 10,11B, that are not created by stellar nucleosynthesis.

Cosmic ray bombardment of solar-system material found on Earth (including meteorites) also contributes to the presence on Earth of cosmogenic nuclides. On Earth, no new nuclei are produced, except in nuclear laboratories that reproduce the above nuclear reactions with particle beams. The one natural exception is radiogenesis: the radioactive decay of long-lived, heavy, primordial radionuclides such as uranium and thorium, which increases the abundance of their daughter nuclei.

https://en.wikipedia.org/wiki/Nucleosynthesis

According to the work of corrosion electrochemist David W. Shoesmith,[6][7] the nanoparticles of Mo-Tc-Ru-Pd have a strong effect on the corrosion of uranium dioxide fuel. For instance his work suggests that when hydrogen (H2) concentration is high (due to the anaerobic corrosion of the steel waste can), the oxidation of hydrogen at the nanoparticles will exert a protective effect on the uranium dioxide. This effect can be thought of as an example of protection by a sacrificial anode, where instead of a metal anode reacting and dissolving it is the hydrogen gas that is consumed.

https://en.wikipedia.org/wiki/Spent_nuclear_fuel

The light-water reactor (LWR) is a type of thermal-neutron reactor that uses normal water, as opposed to heavy water, as both its coolant and neutron moderator, and uses a solid form of fissile elements as fuel. Thermal-neutron reactors are the most common type of nuclear reactor, and light-water reactors are the most common type of thermal-neutron reactor.

There are three varieties of light-water reactors: the pressurized water reactor (PWR), the boiling water reactor (BWR), and (most designs of) the supercritical water reactor (SCWR).

https://en.wikipedia.org/wiki/Light-water_reactor

Mixed oxide fuel, commonly referred to as MOX fuel, is nuclear fuel that contains more than one oxide of fissile material, usually consisting of plutonium blended with natural uranium, reprocessed uranium, or depleted uranium. MOX fuel is an alternative to the low-enriched uranium (LEU) fuel used in the light water reactors that predominate nuclear power generation.

For example, a mixture of 7% plutonium and 93% natural uranium reacts similarly, although not identically, to LEU fuel. MOX usually consists of two phases, UO2 and PuO2, and/or a single phase solid solution (U,Pu)O2. The content of PuO2 may vary from 1.5 wt.% to 25–30 wt.% depending on the type of nuclear reactor. Although MOX fuel can be used in thermal reactors to provide energy, efficient fission of plutonium in MOX can only be achieved in fast reactors.[1]

One attraction of MOX fuel is that it is a way of utilizing surplus weapons-grade plutonium, an alternative to storage of surplus plutonium, which would need to be secured against the risk of theft for use in nuclear weapons.[2][3] On the other hand, some studies warned that normalising the global commercial use of MOX fuel and the associated expansion of nuclear reprocessing will increase, rather than reduce, the risk of nuclear proliferation, by encouraging increased separation of plutonium from spent fuel in the civil nuclear fuel cycle.[4][5][6]

Because the fission-to-capture ratio of the neutron cross-section shifts in favour of fission at high (fast) neutron energies for almost all of the actinides, including 238U, fast reactors can use all of them for fuel. All actinides, including TRU or transuranium actinides, can undergo neutron-induced fission with unmoderated or fast neutrons. A fast reactor is more efficient for using plutonium and higher actinides as fuel. Depending on how the reactor is fueled, it can be used either as a plutonium breeder or as a burner.

A mixture of uranyl nitrate and plutonium nitrate in nitric acid is converted by treatment with a base such as ammonia to form a mixture of ammonium diuranate and plutonium hydroxide. Heating this in a mixture of 5% hydrogen and 95% argon forms a mixture of uranium dioxide and plutonium dioxide. Using a base, the resulting powder can be run through a press and converted into "green" (unsintered) pellets. The green pellets can then be sintered into mixed uranium and plutonium oxide pellets. This second type of fuel is more homogeneous on the microscopic scale, but it is still possible to see plutonium-rich and plutonium-poor areas under a scanning electron microscope. It can be helpful to think of the solid as being like a salami (more than one solid material present in the pellet).

It is possible that both americium and curium could be added to a U/Pu MOX fuel before it is loaded into a fast reactor. This is one means of transmutation. Working with curium is much harder than working with americium because curium is a neutron emitter; the MOX production line would need to be shielded with both lead and water to protect the workers.

Also, the neutron irradiation of curium generates the higher actinides, such as californium, which increase the neutron dose associated with the used nuclear fuel; this has the potential to pollute the fuel cycle with strong neutron emitters. As a result, it is likely that curium will be excluded from most MOX fuels.

https://en.wikipedia.org/wiki/MOX_fuel

POTENTIAL ENERGY, AUTOWAVE

Autowaves are self-supporting non-linear waves in active media (i.e. those that provide distributed energy sources). The term is generally used in processes where the waves carry relatively low energy, which is necessary for synchronization or switching the active medium.

https://en.wikipedia.org/wiki/Autowave

Self-organization, also called (in the social sciences) spontaneous order, is a process where some form of overall order arises from local interactions between parts of an initially disordered system. The process can be spontaneous when sufficient energy is available, not needing control by any external agent. It is often triggered by seemingly random fluctuations, amplified by positive feedback. The resulting organization is wholly decentralized, distributed over all the components of the system. As such, the organization is typically robust and able to survive or self-repair substantial perturbation. Chaos theory discusses self-organization in terms of islands of predictability in a sea of chaotic unpredictability.

Self-organization occurs in many physical, chemical, biological, robotic, and cognitive systems. Examples of self-organization include crystallization, thermal convection of fluids, chemical oscillation, animal swarming, neural circuits, and black markets.

https://en.wikipedia.org/wiki/Self-organization

A single chemical reaction is said to be autocatalytic if one of the reaction products is also a catalyst for the same or a coupled reaction.[1] Such a reaction is called an autocatalytic reaction.
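The simplest autocatalytic scheme is A + B → 2B, where the product B speeds up its own formation. Under mass-action kinetics, which is an assumption here, the rate is k[A][B]. This gives the characteristic S-shaped curve: a slow start, rapid acceleration, then saturation as A is used up. This forward-Euler sketch uses hypothetical values for k, the starting concentrations, and the time step.

```python
# Minimal sketch of the autocatalytic reaction A + B -> 2B with mass-action
# rate r = k * [A] * [B]. B catalyses its own formation, so [B] follows an
# S-shaped (logistic) curve. All parameter values are hypothetical.


def simulate(a0: float, b0: float, k: float, dt: float, steps: int) -> list[tuple[float, float]]:
    """Forward-Euler integration of d[B]/dt = k[A][B] = -d[A]/dt."""
    a, b = a0, b0
    history = [(a, b)]
    for _ in range(steps):
        rate = k * a * b          # each event consumes one A and adds one B
        a -= rate * dt
        b += rate * dt
        history.append((a, b))
    return history


if __name__ == "__main__":
    dt = 0.01
    trajectory = simulate(a0=1.0, b0=0.01, k=1.0, dt=dt, steps=1500)
    for i in range(0, len(trajectory), 250):
        a, b = trajectory[i]
        print(f"t = {i * dt:5.2f}  [A] = {a:.3f}  [B] = {b:.3f}")
```

The total [A] + [B] stays constant, so the printout shows B taking over almost all of the initial material after the lag phase.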

A set of chemical reactions can be said to be "collectively autocatalytic" if a number of those reactions produce, as reaction products, catalysts for enough of the other reactions that the entire set of chemical reactions is self-sustaining given an input of energy and food molecules (see autocatalytic set).

https://en.wikipedia.org/wiki/Autocatalysis

In mathematics, a dynamical system is a system in which a function describes the time dependence of a point in a geometrical space. Examples include the mathematical models that describe the swinging of a clock pendulum, the flow of water in a pipe, and the number of fish each springtime in a lake.
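The fish example can be written as a discrete-time dynamical system, in which a fixed rule maps this spring's population to next spring's. The logistic map x_{n+1} = r x_n (1 − x_n) is a standard textbook choice, used here as an assumption rather than a model from the source. Here x_n is the population as a fraction of the lake's capacity, and r is a hypothetical growth rate. For r = 2.5 the orbit settles to the fixed point 1 − 1/r = 0.6. For r = 3.2 it alternates between two values, and for r = 3.9 it is chaotic.

```python
# Minimal sketch of a discrete-time dynamical system: the logistic map
# x_{n+1} = r * x_n * (1 - x_n), with x_n the population in year n as a
# fraction of carrying capacity. The model and r values are assumptions.


def logistic_map(x0: float, r: float, years: int) -> list[float]:
    """Iterate the evolution rule and return the orbit x_0 .. x_years."""
    if not 0.0 <= x0 <= 1.0:
        raise ValueError("x0 must lie in [0, 1]")
    orbit = [x0]
    for _ in range(years):
        orbit.append(r * orbit[-1] * (1.0 - orbit[-1]))
    return orbit


if __name__ == "__main__":
    for r in (2.5, 3.2, 3.9):
        tail = logistic_map(0.2, r, 60)[-4:]
        print(f"r = {r}: last four years {[round(x, 3) for x in tail]}")
```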

https://en.wikipedia.org/wiki/Dynamical_system

The term autopoiesis (from Greek αὐτo- (auto-) 'self', and ποίησις (poiesis) 'creation, production') refers to a system capable of reproducing and maintaining itself.

Autopoiesis was originally presented as a system description that was said to define and explain the nature of living systems. A canonical example of an autopoietic system is the biological cell. The eukaryotic cell, for example, is made of various biochemical components such as nucleic acids and proteins, and is organized into bounded structures such as the cell nucleus, various organelles, a cell membrane and cytoskeleton. These structures, based on an external flow of molecules and energy, produce the components which, in turn, continue to maintain the organized bounded structure that gives rise to these components (not unlike a wave propagating through a medium).

An autopoietic system is to be contrasted with an allopoietic system, such as a car factory, which uses raw materials (components) to generate a car (an organized structure) which is something other than itself (the factory). However, if the system is extended from the factory to include components in the factory's "environment", such as supply chains, plant / equipment, workers, dealerships, customers, contracts, competitors, cars, spare parts, and so on, then as a total viable system it could be considered to be autopoietic.[3]

Though others have often used the term as a synonym for self-organization, Maturana himself stated he would "[n]ever use the notion of self-organization ... Operationally it is impossible. That is, if the organization of a thing changes, the thing changes".[4] Moreover, an autopoietic system is autonomous and operationally closed, in the sense that there are sufficient processes within it to maintain the whole. Autopoietic systems are "structurally coupled" with their medium, embedded in a dynamic of changes that can be recalled as sensory-motor coupling.[5] This continuous dynamic is considered as a rudimentary form of knowledge or cognition and can be observed throughout life-forms.

Autopoiesis can be defined as the ratio between the complexity of a system and the complexity of its environment.[12]

The connection of autopoiesis to cognition, or if necessary, of living systems to cognition, is an objective assessment ascertainable by observation of a living system.

One question that arises is about the connection between cognition seen in this manner and consciousness. The separation of cognition and consciousness recognizes that the organism may be unaware of the substratum where decisions are made. What is the connection between these realms? Thompson refers to this issue as the "explanatory gap", and one aspect of it is the hard problem of consciousness, how and why we have qualia.[18]

A second question is whether autopoiesis can provide a bridge between these concepts. Thompson discusses this issue from the standpoint of enactivism. An autopoietic cell actively relates to its environment. Its sensory responses trigger motor behavior governed by autopoiesis, and this behavior (it is claimed) is a simplified version of a nervous system behavior. The further claim is that real-time interactions like this require attention, and an implication of attention is awareness.[19]

https://en.wikipedia.org/wiki/Autopoiesis

Autonomous agency theory (AAT) is a viable system theory (VST) which models autonomous social complex adaptive systems. It can be used to model the relationship between an agency and its environment(s), and these may include other interactive agencies. The nature of that interaction is determined by both the agency's external and internal attributes and constraints. Internal attributes may include immanent dynamic "self" processes that drive agency change.

https://en.wikipedia.org/wiki/Autonomous_agency_theory

Information metabolism, sometimes referred to as informational metabolism or energetic-informational metabolism, is a psychological theory of interaction between biological organisms and their environment, developed by Polish psychiatrist Antoni Kępiński.[1][2][3]

https://en.wikipedia.org/wiki/Information_metabolism

A number of independent lines of research depict the universe, including the social organization of living creatures which is of particular interest to humans, as systems, or networks, of relationships. Basic physics has assumed and characterized distinctive regimes of relationships. For common examples, gases, liquids and solids are characterized as systems of objects which have among them relationships of distinctive types. Gases contain elements whose spatial relationships among themselves vary continuously. In liquids, component elements vary continuously in the angles between them, but are restricted in their spatial dispersion. In solids, both angles and distances are circumscribed. These systems of relationships, where relational states are relatively uniform, bounded and distinct from other relational states in their surroundings, are often characterized as phases of matter, as set out in Phase (matter). These examples are only a few of the sorts of relational regimes which can be identified, made notable by their relative simplicity and ubiquity in the universe.

Such relational systems, or regimes, can be seen as defined by reductions in degrees of freedom among the elements of the system. This diminution in degrees of freedom in relationships among elements is characterized as correlation. In the commonly observed transitions between phases of matter, or phase transitions, the progression from less ordered, or more random, to more ordered, or less random, systems is recognized as the result of correlational processes (e.g. gas to liquid, liquid to solid). In the reverse of this process, transitions from a more ordered state to a less ordered state, as from ice to liquid water, are accompanied by the disruption of correlations.

Correlational processes have been observed at several levels. For example, atomic nuclei are fused in stars, building up aggregations of nucleons, which we recognize as complex and heavy atoms. Atoms, both simple and complex, aggregate into molecules. In life, a variety of molecules form extremely complex, dynamically ordered living cells. Over evolutionary time, multicellular organizations developed as dynamically ordered aggregates of cells. Multicellular organisms have over evolutionary time developed correlated activities forming what we term social groups. Etc.

Thus, as is reviewed below, correlation, i.e. ordering, processes have been tiered through several levels, reaching from quantum mechanics upward through complex, dynamic, 'non-equilibrium', systems, including living systems.

Lee Smolin[4] proposes a system of "knots and networks" such that "the geometry of space arises out of a … fundamental quantum level which is made up of an interwoven network of … processes".[5] Smolin and a group of like-minded researchers have devoted a number of years to developing a loop quantum gravity basis for physics, which encompasses this relational network viewpoint.

Carlo Rovelli initiated development of a system of views now called relational quantum mechanics. This concept has at its foundation the view that all systems are quantum systems, and that each quantum system is defined by its relationship with other quantum systems with which it interacts.

The physical content of the theory is not to do with objects themselves, but the relations between them. As Rovelli puts it: "Quantum mechanics is a theory about the physical description of physical systems relative to other systems, and this is a complete description of the world".[6]

Rovelli has proposed that each interaction between quantum systems involves a ‘measurement’, and that such interactions involve reductions in degrees of freedom between the respective systems, to which he applies the term correlation.

https://en.wikipedia.org/wiki/Relational_theory#Relational_order_theories

Viable system theory (VST) concerns cybernetic processes in relation to the development/evolution of dynamical systems. They are considered to be living systems in the sense that they are complex and adaptive, can learn, and are capable of maintaining an autonomous existence, at least within the confines of their constraints. These attributes involve the maintenance of internal stability through adaptation to changing environments. One can distinguish between two strands of such theory: formal systems and principally non-formal systems. Formal viable system theory is normally referred to as viability theory, and provides a mathematical approach to explore the dynamics of complex systems set within the context of control theory. In contrast, principally non-formal viable system theory is concerned with descriptive approaches to the study of viability through the processes of control and communication, though these theories may have mathematical descriptions associated with them.

https://en.wikipedia.org/wiki/Viable_system_theory

Pyroprocessing is a generic term for high-temperature methods. Solvents are molten salts (e.g. LiCl + KCl or LiF + CaF2) and molten metals (e.g. cadmium, bismuth, magnesium) rather than water and organic compounds. Electrorefining, distillation, and solvent-solvent extraction are common steps.

https://en.wikipedia.org/wiki/Nuclear_reprocessing

In the fluoride volatility process, fluorine is reacted with the fuel. Fluorine is so much more reactive than even oxygen that small particles of ground oxide fuel will burst into flame when dropped into a chamber full of fluorine. This is known as flame fluorination; the heat produced helps the reaction proceed. Most of the uranium, which makes up the bulk of the fuel, is converted to uranium hexafluoride, the form of uranium used in uranium enrichment, which has a very low boiling point. Technetium, the main long-lived fission product, is also efficiently converted to its volatile hexafluoride. A few other elements also form similarly volatile hexafluorides, pentafluorides, or heptafluorides. The volatile fluorides can be separated from excess fluorine by condensation, then separated from each other by fractional distillation or selective reduction. Uranium hexafluoride and technetium hexafluoride have very similar boiling points and vapor pressures, which makes complete separation more difficult.

Many of the fission products volatilized are the same ones volatilized in non-fluorinated, higher-temperature volatilization, such as iodine, tellurium and molybdenum; notable differences are that technetium is volatilized, but caesium is not.

https://en.wikipedia.org/wiki/Nuclear_reprocessing

Until recently, most studies on time travel were based upon classical general relativity. Coming up with a quantum version of time travel requires physicists to work out the time evolution equations for density states (density matrices) in the presence of closed timelike curves (CTC).

Novikov[1] had conjectured that once quantum mechanics is taken into account, self-consistent solutions always exist for all time machine configurations and initial conditions. However, it has been noted that such solutions are not unique in general, in violation of determinism, unitarity and linearity.

The application of self-consistency to quantum mechanical time machines has taken two main routes. Novikov's rule applied to the density matrix itself gives the Deutsch prescription. Applied instead to the state vector, the same rule gives nonunitary physics with a dual description in terms of post-selection.

In 1991, David Deutsch[2] came up with a proposal for the time evolution equations, with special note as to how it resolves the grandfather paradox and nondeterminism. However, some consider his resolution to the grandfather paradox unsatisfactory, because it states that the time traveller reenters another parallel universe, and that the actual quantum state is a quantum superposition of states where the time traveller does and does not exist.

https://en.wikipedia.org/wiki/Quantum_mechanics_of_time_travel

A quantum dot single-photon source is based on a single quantum dot placed in an optical cavity. It is an on-demand single-photon source. A laser pulse can excite a pair of carriers known as an exciton in the quantum dot. The decay of a single exciton due to spontaneous emission leads to the emission of a single photon. Due to interactions between excitons, the emission when the quantum dot contains a single exciton is energetically distinct from that when the quantum dot contains more than one exciton. Therefore, a single exciton can be deterministically created by a laser pulse and the quantum dot becomes a nonclassical light source that emits photons one by one and thus shows photon antibunching. The emission of single photons can be proven by measuring the second-order intensity correlation function. The spontaneous emission rate of the emitted photons can be enhanced by integrating the quantum dot in an optical cavity. Additionally, the cavity leads to emission in a well-defined optical mode increasing the efficiency of the photon source.

https://en.wikipedia.org/wiki/Quantum_dot_single-photon_source
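
The second-order intensity correlation in the single-photon source excerpt can be computed from a photon-number distribution p(n) for an idealised single-mode field. The formula is g2(0) = <n(n-1)> / <n>^2. Antibunching corresponds to g2(0) < 1. An ideal single-photon source gives 0, coherent (laser) light gives 1, and thermal light gives 2. The sketch below assumes these textbook distributions. The `imperfect` distribution is a hypothetical example. In experiments, g2 is estimated from coincidence counts in a Hanbury Brown and Twiss (HBT) setup, and that procedure is not modelled here.

```python
import math
from typing import List, Sequence


def g2_zero(p: Sequence[float]) -> float:
    """g2(0) = <n(n-1)> / <n>^2 for a photon-number distribution p[n] (requires <n> > 0)."""
    mean_n = sum(n * pn for n, pn in enumerate(p))
    factorial_moment = sum(n * (n - 1) * pn for n, pn in enumerate(p))
    return factorial_moment / mean_n ** 2


def fock(n_photons: int) -> List[float]:
    # exactly n_photons photons per pulse
    return [1.0 if n == n_photons else 0.0 for n in range(n_photons + 1)]


def poissonian(mean: float, n_max: int = 80) -> List[float]:
    # coherent (laser) light; built iteratively to avoid large factorials
    p = [math.exp(-mean)]
    for n in range(1, n_max + 1):
        p.append(p[-1] * mean / n)
    return p


def thermal(mean: float, n_max: int = 200) -> List[float]:
    # Bose-Einstein distribution of a single thermal mode
    return [mean ** n / (1 + mean) ** (n + 1) for n in range(n_max + 1)]


# Hypothetical imperfect source: mostly single photons, small two-photon admixture.
imperfect = [0.0, 0.95, 0.05]
```

The truncation limits `n_max` are chosen so the neglected tail is negligible for the means used here. Much larger means would need larger cutoffs.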

Some physicists suggest that causal loops exist only at the quantum scale, in a fashion similar to that of the chronology protection conjecture proposed by Stephen Hawking, so histories over larger scales are not looped.[20]:51

https://en.wikipedia.org/wiki/Grandfather_paradox
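
Deutsch's prescription treats the grandfather paradox as a consistency condition on density matrices. The state ρ_C on the closed timelike curve must satisfy ρ_C = Tr_A[U (ρ_A ⊗ ρ_C) U†], where ρ_A is the chronology-respecting system and U is their interaction. The following pure-Python sketch assumes one system qubit and one CTC qubit. As a toy assumption, the paradox is modelled by a NOT gate on the CTC qubit: a traveller who exists prevents their own existence, and vice versa. All helper names are hypothetical. A fixed point is found by averaging the iterates of the map, which converges for finite-dimensional quantum channels of this kind.

```python
from typing import List

Matrix = List[List[complex]]


def matmul(a: Matrix, b: Matrix) -> Matrix:
    return [[sum(a[i][k] * b[k][j] for k in range(len(b))) for j in range(len(b[0]))]
            for i in range(len(a))]


def dagger(a: Matrix) -> Matrix:
    # conjugate transpose
    return [[a[j][i].conjugate() for j in range(len(a))] for i in range(len(a[0]))]


def kron(a: Matrix, b: Matrix) -> Matrix:
    rb, cb = len(b), len(b[0])
    return [[a[i // rb][j // cb] * b[i % rb][j % cb] for j in range(len(a[0]) * cb)]
            for i in range(len(a) * rb)]


def trace_out_first(rho: Matrix, d_a: int, d_c: int) -> Matrix:
    # partial trace over subsystem A; joint basis index = a * d_c + c
    return [[sum(rho[a * d_c + i][a * d_c + j] for a in range(d_a)) for j in range(d_c)]
            for i in range(d_c)]


def deutsch_map(u: Matrix, rho_a: Matrix, rho_c: Matrix) -> Matrix:
    """One application of rho_C -> Tr_A[U (rho_A (x) rho_C) U^dagger]."""
    out = matmul(matmul(u, kron(rho_a, rho_c)), dagger(u))
    return trace_out_first(out, len(rho_a), len(rho_c))


def ctc_fixed_point(u: Matrix, rho_a: Matrix, rho_c0: Matrix, steps: int = 1000) -> Matrix:
    """Average of the first `steps` iterates; converges to a fixed point of the map."""
    d = len(rho_c0)
    total = [[0j] * d for _ in range(d)]
    rho = rho_c0
    for _ in range(steps):
        total = [[total[i][j] + rho[i][j] for j in range(d)] for i in range(d)]
        rho = deutsch_map(u, rho_a, rho)
    return [[x / steps for x in row] for row in total]


# Toy grandfather paradox (assumption): |0> and |1> on the CTC qubit stand for
# "traveller exists" and "traveller does not exist"; a NOT gate flips them,
# while the chronology-respecting qubit A is left untouched.
I2 = [[1, 0], [0, 1]]
NOT = [[0, 1], [1, 0]]
U_GRANDFATHER = kron(I2, NOT)
RHO_A = [[1, 0], [0, 0]]
RHO_C0 = [[1, 0], [0, 0]]
fixed = ctc_fixed_point(U_GRANDFATHER, RHO_A, RHO_C0)
```

Starting from "exists", the average settles on the maximally mixed state I/2, with equal weight on "exists" and "does not exist". Any (I + aX)/2 with real a in [-1, 1] is also a fixed point of this map. This matches the non-uniqueness noted above. Deutsch suggested resolving the ambiguity by choosing the maximum-entropy fixed point.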

A quantum well is a potential well with only discrete energy values.

The classic model used to demonstrate a quantum well is to confine particles, which were initially free to move in three dimensions, to two dimensions, by forcing them to occupy a planar region. The effects of quantum confinement take place when the quantum well thickness becomes comparable to the de Broglie wavelength of the carriers (generally electrons and holes), leading to energy levels called "energy subbands", i.e., the carriers can only have discrete energy values.

A wide variety of electronic quantum well devices have been developed based on the theory of quantum well systems. These devices have found applications in lasers, photodetectors, modulators, and switches, for example. Compared to conventional devices, quantum well devices can be much faster and operate more economically, and they are of great importance to the technology and telecommunications industries. Quantum well devices are currently replacing many conventional electrical components in a range of electronic devices.[2]

The concept of quantum well was proposed in 1963 independently by Herbert Kroemer and by Zhores Alferov and R.F. Kazarinov.[3][4]

https://en.wikipedia.org/wiki/Quantum_well
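
The "discrete energy values" of a quantum well can be estimated with an idealised infinite square well of width L. Its levels are E_n = n^2 π^2 ħ^2 / (2 m* L^2). Real wells have finite barriers, which lower these levels somewhat. The carrier effective mass m* = 0.067 m_e below is an assumed, GaAs-like electron value chosen only for illustration.

```python
import math
from typing import List

HBAR = 1.054571817e-34      # reduced Planck constant, J s
M_E = 9.1093837015e-31      # electron rest mass, kg
EV = 1.602176634e-19        # joules per electronvolt


def infinite_well_levels(width_m: float, n_levels: int, m_eff: float = 0.067) -> List[float]:
    """Energies (eV) of the first n_levels states of an infinite square well.

    m_eff is the carrier effective mass in units of M_E; the default 0.067 is an
    assumed, GaAs-like value used only for illustration.
    """
    mass = m_eff * M_E
    prefactor = (math.pi * HBAR) ** 2 / (2 * mass * width_m ** 2)
    return [n ** 2 * prefactor / EV for n in range(1, n_levels + 1)]


levels_10nm = infinite_well_levels(10e-9, 3)
levels_5nm = infinite_well_levels(5e-9, 3)
```

With this assumed mass, a 10 nm well has a ground level of roughly 56 meV. That is above the room-temperature thermal energy of about 26 meV, so the subbands are resolved. Halving the width quadruples every level.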

Quantum tunnelling or tunneling (US) is the quantum mechanical phenomenon where a wavefunction can propagate through a potential barrier.

The transmission through the barrier can be finite and depends exponentially on the barrier height and barrier width. The wavefunction does not disappear on one side and reappear on the other side. The wavefunction and its first derivative are continuous. In steady-state, the probability flux in the forward direction is spatially uniform. No particle or wave is lost. Tunneling occurs with barriers of thickness around 1–3 nm and smaller.[1]

Some authors also identify the mere penetration of the wavefunction into the barrier, without transmission on the other side, as a tunneling effect. Quantum tunneling is not predicted by the laws of classical mechanics, where surmounting a potential barrier requires the particle to have more energy than the barrier height.

Quantum tunneling plays an essential role in physical phenomena, such as nuclear fusion.[2] It has applications in the tunnel diode,[3] quantum computing, and in the scanning tunneling microscope.

The effect was predicted in the early 20th century. Its acceptance as a general physical phenomenon came mid-century.[4]

Quantum tunneling is projected to set physical limits on the size of the transistors used in microelectronics, because electrons can tunnel through the barriers of transistors that are too small.[5][6]

Tunneling may be explained in terms of the Heisenberg uncertainty principle together with wave-particle duality: a quantum object can behave as a wave or as a particle. In other words, the uncertainty in the exact location of light (low-mass) particles allows these particles to break the rules of classical mechanics and move in space without passing over the potential energy barrier.

https://en.wikipedia.org/wiki/Quantum_tunnelling
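
The exponential dependence on barrier height and width follows from the textbook transmission coefficient for a one-dimensional rectangular barrier. For a barrier of height V0 and width a, and a particle of energy E < V0, T = [1 + V0^2 sinh^2(κa) / (4E(V0 - E))]^-1, where κ = sqrt(2m(V0 - E)) / ħ. For thick barriers this behaves like e^(-2κa). T therefore falls exponentially with the width and with the square root of V0 - E. The sketch assumes the free-electron mass and an idealised square barrier. Real devices need effective masses and realistic potential profiles.

```python
import math

HBAR = 1.054571817e-34      # reduced Planck constant, J s
M_E = 9.1093837015e-31      # electron rest mass, kg
EV = 1.602176634e-19        # joules per electronvolt


def rectangular_barrier_transmission(energy_ev: float, height_ev: float,
                                     width_m: float, mass: float = M_E) -> float:
    """Transmission probability through a 1D rectangular barrier, valid for 0 < E < V0."""
    if not 0 < energy_ev < height_ev:
        raise ValueError("this formula assumes 0 < E < V0")
    e, v0 = energy_ev * EV, height_ev * EV
    kappa = math.sqrt(2 * mass * (v0 - e)) / HBAR  # decay constant inside the barrier
    return 1.0 / (1.0 + v0 ** 2 * math.sinh(kappa * width_m) ** 2 / (4 * e * (v0 - e)))


for width_nm in (0.5, 1.0, 2.0, 3.0):
    print(width_nm, "nm:", rectangular_barrier_transmission(0.5, 1.0, width_nm * 1e-9))
```

Consider a 1 eV barrier and a 0.5 eV electron. T is roughly 3 × 10^-3 at 1 nm and about 2 × 10^-6 at 2 nm. This is consistent with the 1 to 3 nm range quoted above.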

A quantum dot laser is a semiconductor laser that uses quantum dots as the active laser medium in its light emitting region. Due to the tight confinement of charge carriers in quantum dots, they exhibit an electronic structure similar to that of atoms. Lasers fabricated from such an active medium exhibit device performance that is closer to that of gas lasers, and avoid some of the negative aspects of device performance associated with traditional semiconductor lasers based on bulk or quantum well active media. Improvements in modulation bandwidth, lasing threshold, relative intensity noise, linewidth enhancement factor and temperature insensitivity have all been observed. The quantum dot active region may also be engineered to operate at different wavelengths by varying dot size and composition. This allows quantum dot lasers to be fabricated to operate at wavelengths previously not possible using semiconductor laser technology.

Recently, devices based on quantum dot active media have found commercial applications in medicine (laser scalpel, optical coherence tomography), display technologies (projection, laser TV), spectroscopy and telecommunications. A 10 Gbit/s quantum dot laser that is insensitive to temperature fluctuation for use in optical data communications and optical networks has been developed using this technology. The laser is capable of high-speed operation at 1.3 μm wavelengths, at temperatures from 20 °C to 70 °C. It works in optical data transmission systems, optical LANs and metro-access systems. In comparison with conventional strained quantum-well lasers, the new quantum dot laser achieves significantly better temperature stability.

https://en.wikipedia.org/wiki/Quantum_dot_laser
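
Tuning the wavelength by dot size can be illustrated with a crude particle-in-a-sphere estimate. The emission energy is approximated as the bulk band gap plus the ground-state confinement energies of an electron and a hole in an infinitely deep spherical dot of radius R: E ≈ Eg + ħ^2 π^2 / (2R^2) (1/m_e* + 1/m_h*). This keeps only the confinement part of the Brus model and drops the Coulomb term. The band gap and effective masses below are hypothetical values chosen for illustration, not data for any specific dot material.

```python
import math
from typing import Dict

HBAR = 1.054571817e-34      # reduced Planck constant, J s
M_E = 9.1093837015e-31      # electron rest mass, kg
EV = 1.602176634e-19        # joules per electronvolt
HC_EV_NM = 1239.841984      # h*c in eV nm


def dot_emission_wavelength_nm(radius_m: float, band_gap_ev: float,
                               m_e_eff: float, m_h_eff: float) -> float:
    """Crude emission wavelength of a spherical dot (confinement term only, no Coulomb term)."""
    confinement_j = (HBAR * math.pi / radius_m) ** 2 / (2 * M_E) * (1 / m_e_eff + 1 / m_h_eff)
    return HC_EV_NM / (band_gap_ev + confinement_j / EV)


# Hypothetical material parameters, for illustration only.
BAND_GAP_EV, M_E_EFF, M_H_EFF = 1.0, 0.05, 0.4
wavelengths: Dict[int, float] = {
    r_nm: dot_emission_wavelength_nm(r_nm * 1e-9, BAND_GAP_EV, M_E_EFF, M_H_EFF)
    for r_nm in (3, 5, 8)
}
```

With these assumed numbers, shrinking the radius from 8 nm to 3 nm moves the estimate from roughly 1.1 μm to roughly 640 nm. Including the Coulomb term would shift the values somewhat toward longer wavelengths.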

In theoretical physics, quantum nonlocality refers to the phenomenon by which the measurement statistics of a multipartite quantum system do not admit an interpretation in terms of a local realistic theory. Quantum nonlocality has been experimentally verified under different physical assumptions.[1][2][3][4][5] Any physical theory that aims at superseding or replacing quantum theory should account for such experiments and therefore must also be nonlocal in this sense; quantum nonlocality is a property of the universe that is independent of our description of nature.

Quantum nonlocality does not allow for faster-than-light communication,[6] and hence is compatible with special relativity and its universal speed limit of objects. However, it prompts many of the foundational discussions concerning quantum theory, see Quantum foundations.

https://en.wikipedia.org/wiki/Quantum_nonlocality
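
The CHSH inequality is a standard way to quantify the claim that the statistics admit no local realistic interpretation. Assign outcomes ±1 to two measurement settings per party in any local deterministic way. Then S = E(a,b) - E(a,b') + E(a',b) + E(a',b') satisfies |S| ≤ 2, and mixtures of such assignments cannot exceed this bound. For the spin singlet, quantum mechanics predicts E(a,b) = -cos(a - b), which reaches 2√2 at suitable angles. The sketch below checks both claims numerically. The measurement angles are the usual textbook choice, assumed here for illustration.

```python
import math
from itertools import product
from typing import Callable

Correlation = Callable[[float, float], float]


def chsh(e: Correlation, a: float, a2: float, b: float, b2: float) -> float:
    """CHSH combination S = E(a,b) - E(a,b') + E(a',b) + E(a',b')."""
    return e(a, b) - e(a, b2) + e(a2, b) + e(a2, b2)


def local_deterministic_max() -> int:
    """Largest |S| over all assignments of outcomes +/-1 to A, A', B, B'."""
    best = 0
    for a1, a2, b1, b2 in product((1, -1), repeat=4):
        best = max(best, abs(a1 * b1 - a1 * b2 + a2 * b1 + a2 * b2))
    return best


def singlet_correlation(a: float, b: float) -> float:
    # quantum prediction for spin measurements along in-plane angles a and b on the singlet
    return -math.cos(a - b)


S_quantum = chsh(singlet_correlation, 0.0, math.pi / 2, math.pi / 4, 3 * math.pi / 4)
```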

In quantum field theory, the quantum vacuum state (also called the quantum vacuum or vacuum state) is the quantum state with the lowest possible energy. Generally, it contains no physical particles. Zero-point field is sometimes used as a synonym for the vacuum state of an individual quantized field.

According to present-day understanding of what is called the vacuum state or the quantum vacuum, it is "by no means a simple empty space".[1][2] According to quantum mechanics, the vacuum state is not truly empty but instead contains fleeting electromagnetic waves and particles that pop into and out of existence.[3][4][5]

The QED vacuum of quantum electrodynamics (or QED) was the first vacuum of quantum field theory to be developed. QED originated in the 1930s, and in the late 1940s and early 1950s it was reformulated by Feynman, Tomonaga and Schwinger, who jointly received the Nobel Prize for this work in 1965.[6] Today the electromagnetic and weak interactions are unified (at very high energies only) in the theory of the electroweak interaction.

The Standard Model is a generalization of the QED work to include all the known elementary particles and their interactions (except gravity). Quantum chromodynamics (or QCD) is the portion of the Standard Model that deals with strong interactions, and QCD vacuum is the vacuum of quantum chromodynamics. It is the object of study in the Large Hadron Collider and the Relativistic Heavy Ion Collider, and is related to the so-called vacuum structure of strong interactions.[7]

https://en.wikipedia.org/wiki/Quantum_vacuum_state
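
The statement that the vacuum is the lowest-energy state but not simply empty space can be made concrete for a free quantized field. Written as a collection of harmonic-oscillator modes k with frequencies ω_k, the field has the standard textbook Hamiltonian below, included here as a worked illustration:

```latex
H = \sum_{\mathbf{k}} \hbar\omega_{\mathbf{k}}\left(a_{\mathbf{k}}^{\dagger}a_{\mathbf{k}} + \tfrac{1}{2}\right),
\qquad a_{\mathbf{k}}\lvert 0\rangle = 0 \;\; \text{for all } \mathbf{k},
\qquad E_{0} = \langle 0\rvert H \lvert 0\rangle = \sum_{\mathbf{k}} \tfrac{1}{2}\hbar\omega_{\mathbf{k}} .
```

Every number operator gives zero on |0⟩, so the vacuum contains no particles. Each mode still carries a zero-point energy ħω_k/2, and the field still fluctuates: its expectation value vanishes in the vacuum while its mean square does not.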

Quantum annealing (QA) is a metaheuristic for finding the global minimum of a given objective function over a given set of candidate solutions (candidate states), by a process using quantum fluctuations (in other words, a meta-procedure for finding a procedure that finds an absolute minimum size/length/cost/distance from within a possibly very large, but nonetheless finite set of possible solutions using quantum fluctuation-based computation instead of classical computation). Quantum annealing is used mainly for problems where the search space is discrete (combinatorial optimization problems) with many local minima, such as finding the ground state of a spin glass[1] or the traveling salesman problem. Quantum annealing was first proposed in 1988 by B. Apolloni, N. Cesa Bianchi and D. De Falco.[2][3] It was formulated in its present form by T. Kadowaki and H. Nishimori in "Quantum annealing in the transverse Ising model",[4] though a proposal in a different form had been made by A. B. Finnila, M. A. Gomez, C. Sebenik and J. D. Doll, in "Quantum annealing: A new method for minimizing multidimensional functions".[5]

Quantum annealing starts from a quantum-mechanical superposition of all possible states (candidate states) with equal weights. Then the system evolves following the time-dependent Schrödinger equation, a natural quantum-mechanical evolution of physical systems. The amplitudes of all candidate states keep changing, realizing a quantum parallelism, according to the time-dependent strength of the transverse field, which causes quantum tunneling between states. If the rate of change of the transverse field is slow enough, the system stays close to the ground state of the instantaneous Hamiltonian (also see adiabatic quantum computation).[6] If the rate of change of the transverse field is accelerated, the system may leave the ground state temporarily but produce a higher likelihood of concluding in the ground state of the final problem Hamiltonian, i.e., diabatic quantum computation.[7][8] The transverse field is finally switched off, and the system is expected to have reached the ground state of the classical Ising model that corresponds to the solution to the original optimization problem. An experimental demonstration of the success of quantum annealing for random magnets was reported immediately after the initial theoretical proposal.[9]

https://en.wikipedia.org/wiki/Quantum_annealing
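
For a handful of spins, the closed-system evolution described above can be simulated directly. The sketch below makes several assumptions chosen for illustration rather than taken from the source:

- a linear schedule H(s) = -(1 - s) Σ_i X_i + s H_P, with s = t/T and ħ = 1;
- a diagonal problem Hamiltonian H_P given by a classical Ising energy function;
- a fixed-step fourth-order Runge-Kutta integrator.

The state starts as the equal-weight superposition, which is the ground state of the transverse-field term. The function `anneal` reports the final probability of the lowest-energy configuration, which is assumed to be unique. `chain_energy` is a hypothetical toy problem.

```python
import math
from typing import Callable, List, Sequence, Tuple


def anneal(energy: Callable[[Sequence[int]], float], n: int,
           total_time: float, steps: int) -> Tuple[float, List[int]]:
    """Simulate H(s) = -(1 - s) * sum_i X_i + s * H_P with s = t / total_time (hbar = 1).

    Returns the final probability of the lowest-energy configuration (assumed unique)
    and that configuration as a list of spins z_i in {+1, -1}.
    """
    dim = 2 ** n
    spins = [[1 - 2 * ((b >> i) & 1) for i in range(n)] for b in range(dim)]
    diag = [energy(z) for z in spins]  # H_P is diagonal in the computational basis

    def deriv(t: float, psi: List[complex]) -> List[complex]:
        s = t / total_time
        h_psi = [s * diag[b] * psi[b] for b in range(dim)]
        for b in range(dim):
            for i in range(n):
                h_psi[b] -= (1 - s) * psi[b ^ (1 << i)]  # X_i flips bit i
        return [-1j * x for x in h_psi]  # Schrodinger equation: d(psi)/dt = -i H psi

    psi = [complex(1 / math.sqrt(dim))] * dim  # ground state of -sum_i X_i
    dt = total_time / steps
    t = 0.0
    for _ in range(steps):  # classic fourth-order Runge-Kutta step
        k1 = deriv(t, psi)
        k2 = deriv(t + dt / 2, [p + dt / 2 * k for p, k in zip(psi, k1)])
        k3 = deriv(t + dt / 2, [p + dt / 2 * k for p, k in zip(psi, k2)])
        k4 = deriv(t + dt, [p + dt * k for p, k in zip(psi, k3)])
        psi = [p + dt / 6 * (q1 + 2 * q2 + 2 * q3 + q4)
               for p, q1, q2, q3, q4 in zip(psi, k1, k2, k3, k4)]
        t += dt
    ground = min(range(dim), key=lambda b: diag[b])
    return abs(psi[ground]) ** 2, spins[ground]


def chain_energy(z: Sequence[int]) -> float:
    # hypothetical 3-spin antiferromagnetic chain with a small field on spin 0
    return z[0] * z[1] + z[1] * z[2] + 0.5 * z[0]


slow_p, best = anneal(chain_energy, 3, total_time=50.0, steps=2000)
```

To compare slow and fast schedules, re-run with a much shorter `total_time`. For a very short run, the success probability stays near the initial overlap of 1/8. In both cases the simulation is exact closed-system dynamics. It does not model the noise or finite temperature of real annealing hardware.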