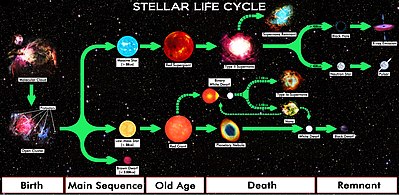

Photoevaporation denotes the process where energetic radiation ionises gas and causes it to disperse away from the ionising source. This typically refers to an astrophysical context where ultraviolet radiation from hot stars acts on clouds of material such as molecular clouds, protoplanetary disks, or planetary atmospheres.[1][2][3]

https://en.wikipedia.org/wiki/Photoevaporation

An evaporating gas globule or EGG is a region of hydrogen gas in outer space approximately 100 astronomical units in size, such that gases shaded by it are shielded from ionizing UV rays.[2] Dense areas of gas shielded by an evaporating gas globule can be conducive to the birth of stars.[2] Evaporating gas globules were first conclusively identified via photographs of the Pillars of Creation in the Eagle Nebula taken by the Hubble Space Telescope in 1995.[2][3]

EGGs are the likely predecessors of new protostars. Inside an EGG the gas and dust are denser than in the surrounding dust cloud. Gravity pulls the cloud even more tightly together as the EGG continues to draw in material from its surroundings. As the density builds up, the globule heats under the weight of its outer layers, and eventually a protostar forms inside the EGG.

A protostar may have too little mass to become a star. If so, it becomes a brown dwarf. If the protostar has sufficient mass, the density reaches a critical level where the temperature at its center exceeds 10 million kelvin. At this point, a nuclear reaction starts converting hydrogen to helium and releasing large amounts of energy. The protostar then becomes a star and joins the main sequence on the HR diagram.[4]

A study of 73 EGGs in the Pillars of Creation (Eagle Nebula) with the Very Large Telescope showed that only 15% of the EGGs show signs of star formation. Star formation is not uniform, however: the largest pillar has a small cluster of these sources at its head.[5]

https://en.wikipedia.org/wiki/Evaporating_gaseous_globule

Hydra (/ˈhaɪdrə/ HY-drə) is a genus of small, freshwater organisms of the phylum Cnidaria and class Hydrozoa. They are native to the temperate and tropical regions.[2][3] Biologists are especially interested in Hydra because of their regenerative ability; they do not appear to die of old age, or to age at all.

https://en.wikipedia.org/wiki/Hydra_(genus)

Turritopsis dohrnii, also known as the immortal jellyfish, is a species of small, biologically immortal jellyfish[2][3] found worldwide in temperate to tropic waters. It is one of the few known cases of animals capable of reverting completely to a sexually immature, colonial stage after having reached sexual maturity as a solitary individual. Others include the jellyfish Laodicea undulata[4] and species of the genus Aurelia.[5]

Like most other hydrozoans, T. dohrnii begin their life as tiny, free-swimming larvae known as planulae. As a planula settles down, it gives rise to a colony of polyps that are attached to the sea floor. All the polyps and jellyfish arising from a single planula are genetically identical clones.[6] The polyp colony grows into an extensively branched form, which is uncommon among jellyfish. Jellyfish, also known as medusae, then bud off these polyps and continue their life in a free-swimming form, eventually becoming sexually mature. When sexually mature, they have been known to prey on other jellyfish species at a rapid pace. If the T. dohrnii jellyfish is exposed to environmental stress, physical assault, or is sick or old, it can revert to the polyp stage, forming a new polyp colony.[7] It does this through the cell development process of transdifferentiation, which alters the differentiated state of the cells and transforms them into new types of cells.

Theoretically, this process can go on indefinitely, effectively rendering the jellyfish biologically immortal,[3][8] although in practice individuals can still die. In nature, most Turritopsis dohrnii are likely to succumb to predation or disease in the medusa stage without reverting to the polyp form.[9]

The capability of biological immortality with no maximum lifespan makes T. dohrnii an important target of basic biological, aging and pharmaceutical research.[10]

The "immortal jellyfish" was formerly classified as T. nutricula.[11]

https://en.wikipedia.org/wiki/Turritopsis_dohrnii

Hydroids are a life stage for most animals of the class Hydrozoa, small predators related to jellyfish.

https://en.wikipedia.org/wiki/Hydroid_(zoology)

Brown dwarfs are substellar objects that are not massive enough to sustain nuclear fusion of ordinary hydrogen (1H) into helium in their cores, unlike a main-sequence star. They have a mass between the most massive gas giant planets and the least massive stars, approximately 13 to 80 times that of Jupiter (MJ).[2][3] However, they are able to fuse deuterium (2H), and the most massive ones (> 65 MJ) are able to fuse lithium (7Li).[3]
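The mass thresholds above can be turned into a rough classifier. This is a minimal sketch that assumes sharp cut-offs at 13, 65 and 80 Jupiter masses; real boundaries are approximate and depend on composition, and the function name `classify_by_mass` is hypothetical.

```python
def classify_by_mass(mass_mj: float) -> str:
    """Rough classification of a self-gravitating object by mass in Jupiter masses.

    Assumes sharp thresholds (about 13, 65 and 80 M_J) taken from the text;
    in reality these boundaries are fuzzy.
    """
    if mass_mj <= 0:
        raise ValueError("mass must be positive")
    if mass_mj < 13:
        return "planet-mass object (no deuterium fusion)"
    if mass_mj < 65:
        return "brown dwarf (deuterium fusion)"
    if mass_mj < 80:
        return "brown dwarf (deuterium and lithium fusion)"
    return "star (sustained hydrogen fusion)"


for m in (5, 30, 70, 100):
    print(m, "M_J ->", classify_by_mass(m))
```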

https://en.wikipedia.org/wiki/Brown_dwarf

Magenta (/məˈdʒɛntə/) is a color that is variously defined as purplish-red,[1] reddish-purple or mauvish-crimson.[2] On color wheels of the RGB (additive) and CMY (subtractive) color models, it is located exactly midway between red and blue. It is one of the four colors of ink used in color printing by an inkjet printer, along with yellow, black, and cyan, to make all other colors. The tone of magenta used in printing is called "printer's magenta". It is also a shade of purple.
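The "midway between red and blue" placement can be checked on the HSV hue wheel with Python's standard `colorsys` module. This sketch assumes full-intensity RGB primaries in the 0 to 1 range and uses the naive complement C = 1 − R, M = 1 − G, Y = 1 − B for the subtractive model, which ignores real ink behaviour.

```python
import colorsys
import math

# RGB triples in the 0..1 range.
RED = (1.0, 0.0, 0.0)
BLUE = (0.0, 0.0, 1.0)
MAGENTA = (1.0, 0.0, 1.0)  # full red + full blue, no green


def hue_degrees(rgb):
    """Hue angle in degrees on the HSV colour wheel."""
    h, _s, _v = colorsys.rgb_to_hsv(*rgb)
    return h * 360.0


def circular_midpoint(a_deg, b_deg):
    """Midpoint of two angles along the shorter arc between them."""
    x = math.cos(math.radians(a_deg)) + math.cos(math.radians(b_deg))
    y = math.sin(math.radians(a_deg)) + math.sin(math.radians(b_deg))
    return math.degrees(math.atan2(y, x)) % 360.0


def rgb_to_cmy(rgb):
    """Naive subtractive complement: C = 1 - R, M = 1 - G, Y = 1 - B."""
    return tuple(1.0 - c for c in rgb)


print(hue_degrees(MAGENTA))                                   # about 300
print(circular_midpoint(hue_degrees(RED), hue_degrees(BLUE)))  # about 300
print(rgb_to_cmy(MAGENTA))                                     # (0.0, 1.0, 0.0)
```

Red sits at 0° and blue at 240°, so the shorter arc between them is centred on 300°, which is exactly magenta's hue; in the CMY model magenta is a single full-strength ink.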

https://en.wikipedia.org/wiki/Magenta

A black dwarf is a theoretical stellar remnant, specifically a white dwarf that has cooled sufficiently that it no longer emits significant heat or light. Because the time required for a white dwarf to reach this state is calculated to be longer than the current age of the universe (13.77 billion years), no black dwarfs are expected to exist in the universe as of now, and the temperature of the coolest white dwarfs is one observational limit on the age of the universe.[1]

The name "black dwarf" has also been applied to hypothetical late-stage cooled brown dwarfs – substellar objects that do not have sufficient mass (less than approximately 0.07 M☉) to maintain hydrogen-burning nuclear fusion.[2][3][4][5]
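The 0.07 M☉ limit can be compared with the brown-dwarf range quoted in Jupiter masses. This sketch assumes a Sun-to-Jupiter mass ratio of about 1047.57 (a standard value not given in the text).

```python
# Ratio of the Sun's mass to Jupiter's mass (about 1047.6).
SUN_TO_JUPITER_MASS = 1047.57


def solar_to_jupiter_masses(m_sun: float) -> float:
    """Convert a mass in solar masses (M_sun) to Jupiter masses (M_J)."""
    return m_sun * SUN_TO_JUPITER_MASS


limit = solar_to_jupiter_masses(0.07)
print(f"0.07 M_sun ~ {limit:.0f} M_J")
```

The result, roughly 73 M_J, falls inside the 13 to 80 M_J brown-dwarf range, so the two excerpts agree.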

https://en.wikipedia.org/wiki/Black_dwarf

Electron degeneracy pressure is a particular manifestation of the more general phenomenon of quantum degeneracy pressure. The Pauli exclusion principle disallows two identical half-integer spin particles (electrons and all other fermions) from simultaneously occupying the same quantum state. The result is an emergent pressure against compression of matter into smaller volumes of space. Electron degeneracy pressure results from the same underlying mechanism that defines the electron orbital structure of elemental matter. For bulk matter with no net electric charge, the attraction between electrons and nuclei exceeds (at any scale) the mutual repulsion of electrons plus the mutual repulsion of nuclei; so in absence of electron degeneracy pressure, the matter would collapse into a single nucleus. In 1967, Freeman Dyson showed that solid matter is stabilized by quantum degeneracy pressure rather than electrostatic repulsion.[1][2][3] Because of this, electron degeneracy creates a barrier to the gravitational collapse of dying stars and is responsible for the formation of white dwarfs.
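A toy model makes the Pauli argument concrete. The sketch below is an illustration, not part of the source: it fills the levels E_n = n²h²/(8mL²) of a one-dimensional box with N spin-½ fermions (at most two per level, spin up and spin down) and compares the total with N bosons that can all occupy the ground level. Energies are in units of h²/(8m), so shrinking the box length L raises every level as 1/L². A real star is three-dimensional, so the scaling there differs.

```python
def fermion_ground_energy(n_particles: int, box_length: float = 1.0) -> float:
    """Total ground-state energy of N spin-1/2 fermions in a 1D box.

    Levels are E_n = n**2 / L**2 in units of h**2 / (8 m); the Pauli
    exclusion principle allows at most two fermions (spin up/down) per level.
    """
    total = 0.0
    remaining = n_particles
    n = 1
    while remaining > 0:
        occupants = min(2, remaining)
        total += occupants * n**2 / box_length**2
        remaining -= occupants
        n += 1
    return total


def boson_ground_energy(n_particles: int, box_length: float = 1.0) -> float:
    """Bosons face no exclusion, so all N can occupy the n = 1 level."""
    return n_particles * 1 / box_length**2


N = 10
print(fermion_ground_energy(N), boson_ground_energy(N))  # 110.0 10.0
# Halving the box quadruples the fermions' energy; this steep rise of
# energy under compression is what appears as degeneracy pressure.
print(fermion_ground_energy(N, 0.5))  # 440.0
```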

https://en.wikipedia.org/wiki/Electron_degeneracy_pressure

https://en.wikipedia.org/wiki/Thermonuclear_fusion

https://en.wikipedia.org/wiki/Plasma_(physics)

https://en.wikipedia.org/wiki/Nuclear_fusion#Important_reactions

Aneutronic fusion is any form of fusion power in which very little of the energy released is carried by neutrons. While the lowest-threshold nuclear fusion reactions release up to 80% of their energy in the form of neutrons, aneutronic reactions release energy in the form of charged particles, typically protons or alpha particles. Successful aneutronic fusion would greatly reduce problems associated with neutron radiation such as damaging ionizing radiation, neutron activation, and requirements for biological shielding, remote handling and safety.
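The "up to 80%" figure follows from two-body kinematics for the deuterium-tritium reaction D + T → ⁴He + n. Assuming the reactants are nearly at rest, the two products carry equal and opposite momenta, so kinetic energy divides in inverse proportion to mass and the neutron receives m_α/(m_n + m_α) of the released energy. The masses and the Q-value of about 17.6 MeV are standard values added here, not taken from the excerpt.

```python
# Non-relativistic two-body split of the D-T fusion energy.
Q_MEV = 17.59            # energy released by D + T -> He-4 + n (MeV)
M_NEUTRON_U = 1.008665   # neutron mass (u)
M_ALPHA_U = 4.001506     # alpha particle (He-4 nucleus) mass (u)


def product_energies(q_mev: float, m_a: float, m_b: float) -> tuple[float, float]:
    """Split q_mev between two products with equal and opposite momenta.

    With p_a = p_b, E = p**2 / (2 m) implies E_a / E_b = m_b / m_a.
    """
    e_a = q_mev * m_b / (m_a + m_b)
    return e_a, q_mev - e_a


e_n, e_alpha = product_energies(Q_MEV, M_NEUTRON_U, M_ALPHA_U)
print(f"neutron {e_n:.2f} MeV, alpha {e_alpha:.2f} MeV, "
      f"neutron share {e_n / Q_MEV:.0%}")
```

The neutron ends up with about 14 MeV of the 17.6 MeV, which is the roughly 80% share that aneutronic reactions aim to avoid.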

Since it is simpler to convert the energy of charged particles into electrical power than it is to convert energy from uncharged particles, an aneutronic reaction would be attractive for power systems. Some proponents see a potential for dramatic cost reductions by converting energy directly to electricity, as well as in eliminating the radiation from neutrons, which are difficult to shield against.[1][2] However, the conditions required to harness aneutronic fusion are much more extreme than those required for deuterium-tritium fusion being investigated in ITER or Wendelstein 7-X.

https://en.wikipedia.org/wiki/Aneutronic_fusion

Neutron radiation is a form of ionizing radiation that presents as free neutrons. Typical phenomena are nuclear fission or nuclear fusion causing the release of free neutrons, which then react with nuclei of other atoms to form new isotopes—which, in turn, may trigger further neutron radiation. Free neutrons are unstable, decaying into a proton, an electron, plus an electron antineutrino with a mean lifetime of 887 seconds (14 minutes, 47 seconds).[1]
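The mean lifetime τ = 887 s describes exponential decay: the fraction of free neutrons surviving after time t is exp(−t/τ), and the half-life is τ·ln 2. A short sketch of these relations (the one-hour example is illustrative):

```python
import math

TAU_S = 887.0  # free-neutron mean lifetime (s), from the text


def surviving_fraction(t_s: float, tau_s: float = TAU_S) -> float:
    """Fraction of free neutrons not yet decayed after t_s seconds: exp(-t/tau)."""
    return math.exp(-t_s / tau_s)


minutes, seconds = divmod(int(TAU_S), 60)
print(f"tau = {minutes} min {seconds} s")              # tau = 14 min 47 s
print(f"half-life = {TAU_S * math.log(2):.0f} s")      # half-life = 615 s
print(f"left after 1 h: {surviving_fraction(3600):.3f}")
```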

https://en.wikipedia.org/wiki/Neutron_radiation

The electron neutrino has a corresponding antiparticle, the electron antineutrino ($\bar{\nu}_e$), which differs only in that some of its properties have equal magnitude but opposite sign. One major open question in particle physics is whether neutrinos and antineutrinos are the same particle, in which case they would be Majorana fermions, or different particles, in which case they would be Dirac fermions. Electron antineutrinos are produced in beta decay and other types of weak interactions.

https://en.wikipedia.org/wiki/Electron_neutrino#Electron_antineutrino

In chemistry and physics, the exchange interaction (with an exchange energy and exchange term) is a quantum mechanical effect that only occurs between identical particles. Despite sometimes being called an exchange force in an analogy to classical force, it is not a true force as it lacks a force carrier.

Charged-current interaction

In one type of charged-current interaction, a charged lepton (such as an electron or a muon, having a charge of −1) can absorb a $W^+$ boson (a particle with a charge of +1) and thereby be converted into a corresponding neutrino (with a charge of 0), where the type ("flavour") of neutrino (electron, muon or tau) is the same as the type of lepton in the interaction, for example:

$$\mu^- + W^+ \to \nu_\mu$$

Similarly, a down-type quark ($d$, with a charge of −1⁄3) can be converted into an up-type quark ($u$, with a charge of +2⁄3) by emitting a $W^-$ boson or by absorbing a $W^+$ boson. More precisely, the down-type quark becomes a quantum superposition of up-type quarks: it can become any one of the three up-type quarks, with probabilities given by the CKM matrix. Conversely, an up-type quark can emit a $W^+$ boson, or absorb a $W^-$ boson, and thereby be converted into a down-type quark, for example:

$$u \to d + W^+$$
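The CKM weighting for a down quark can be illustrated numerically. This sketch assumes approximate magnitudes for the first column of the CKM matrix (|V_ud| ≈ 0.974, |V_cd| ≈ 0.221, |V_td| ≈ 0.0086; close to published fits but quoted only for illustration) and treats the squared magnitudes as relative weights for d → u, c, t. It ignores the energy limits that in practice forbid the heavier outcomes in low-energy processes. Because the matrix is unitary, the weights should sum to about 1.

```python
# Approximate magnitudes of the first CKM column (illustrative values).
CKM_D_COLUMN = {"u": 0.974, "c": 0.221, "t": 0.0086}


def transition_weights(column: dict[str, float]) -> dict[str, float]:
    """Squared magnitudes |V_qd|**2: relative weights for d -> q (q = u, c, t)."""
    return {q: v**2 for q, v in column.items()}


weights = transition_weights(CKM_D_COLUMN)
for quark, w in weights.items():
    print(f"d -> {quark}: {w:.4f}")
print(f"sum = {sum(weights.values()):.3f}")  # close to 1 (unitarity)
```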

The W boson is unstable and decays rapidly. For example:

$$W^- \to e^- + \bar{\nu}_e$$

Decay of a W boson to other products can happen, with varying probabilities.[16]

In the so-called beta decay of a neutron (see picture, above), a down quark within the neutron emits a virtual $W^-$ boson and is thereby converted into an up quark, converting the neutron into a proton. Because of the limited energy involved in the process (i.e., the mass difference between the down quark and the up quark), the virtual $W^-$ boson can only carry sufficient energy to produce an electron and an electron antineutrino, the two lowest-possible masses among its prospective decay products.[17] At the quark level, the process can be represented as:

$$d \to u + e^- + \bar{\nu}_e$$
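Every charged-current step described above (a charged lepton absorbing a W⁺ to become its neutrino, a down quark emitting a W⁻ to become an up quark, the W⁻ producing an electron and an electron antineutrino) must conserve electric charge. The bookkeeping can be checked with exact fractions; the particle labels are ad-hoc strings chosen for this sketch.

```python
from fractions import Fraction

# Electric charges in units of the elementary charge.
CHARGE = {
    "u": Fraction(2, 3), "d": Fraction(-1, 3),
    "e-": Fraction(-1), "mu-": Fraction(-1),
    "anti-nu_e": Fraction(0), "nu_mu": Fraction(0),
    "W+": Fraction(1), "W-": Fraction(-1),
}


def total_charge(particles: list[str]) -> Fraction:
    """Sum of the charges of the listed particles."""
    return sum((CHARGE[p] for p in particles), Fraction(0))


def charge_conserved(initial: list[str], final: list[str]) -> bool:
    """True if the summed electric charge is the same on both sides."""
    return total_charge(initial) == total_charge(final)


print(charge_conserved(["mu-", "W+"], ["nu_mu"]))             # lepton absorbs W+
print(charge_conserved(["d"], ["u", "W-"]))                   # d emits W-
print(charge_conserved(["W-"], ["e-", "anti-nu_e"]))          # W- decay
# Whole neutron (udd) -> proton (uud) + electron + antineutrino:
print(charge_conserved(["u", "d", "d"], ["u", "u", "d", "e-", "anti-nu_e"]))
```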

Neutral-current interaction

In neutral-current interactions, a quark or a lepton (e.g., an electron or a muon) emits or absorbs a neutral Z boson. For example:

$$\mu^- \to \mu^- + Z^0$$

Like the $W^\pm$ bosons, the $Z^0$ boson also decays rapidly,[16] for example:

$$Z^0 \to b + \bar{b}$$

Unlike the charged-current interaction, whose selection rules are strictly limited by chirality, electric charge, and/or weak isospin, the neutral-current $Z^0$ interaction can cause any two fermions in the Standard Model to deflect: either particles or antiparticles, with any electric charge, and both left- and right-chirality, although the strength of the interaction differs.[g]

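The quantum number weak charge ($Q_\mathrm{w}$) serves the same role in the neutral-current interaction with the $Z^0$ that electric charge ($Q$, with no subscript) does in the electromagnetic interaction: it quantifies the vector part of the interaction. Its value is given by:[19]

$$Q_\mathrm{w} = 2\,T_3 - 4\,Q\,\sin^2\theta_\mathrm{w} = 2\,T_3 - Q + \left(1 - 4\sin^2\theta_\mathrm{w}\right) Q,$$

where $T_3$ is the third component of weak isospin and $\theta_\mathrm{w}$ is the weak mixing angle. Since $\sin^2\theta_\mathrm{w} \approx 0.23$, the parenthetic expression $\left(1 - 4\sin^2\theta_\mathrm{w}\right)$ is small (below 0.1), with its value varying slightly with the momentum difference (called "running") between the particles involved. Hence

$$Q_\mathrm{w} \approx 2\,T_3 - Q = \operatorname{sgn}(Q)\,\bigl(1 - |Q|\bigr),$$

where the last equality holds for charged fermions, since by convention $\operatorname{sgn} T_3 \equiv \operatorname{sgn} Q$ and $T_3 = \pm\tfrac{1}{2}$ for all fermions involved in the weak interaction. The weak charge of charged leptons is then close to zero, so these mostly interact with the Z boson through the axial coupling.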
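The weak charge is commonly written $Q_\mathrm{w} = 2T_3 - 4Q\sin^2\theta_\mathrm{w}$, which reduces to approximately $2T_3 - Q$ when the small $(1 - 4\sin^2\theta_\mathrm{w})Q$ term is dropped. The sketch below compares the two forms, assuming $\sin^2\theta_\mathrm{w} = 0.23$ as a representative value (it runs with momentum transfer) and the standard assignments $T_3 = +\tfrac12$ for the neutrino and up quark and $T_3 = -\tfrac12$ for the electron and down quark.

```python
from fractions import Fraction

SIN2_THETA_W = 0.23  # representative weak mixing parameter (assumed value)

# (electric charge Q, weak isospin T3) for some left-handed fermions.
FERMIONS = {
    "electron": (Fraction(-1), Fraction(-1, 2)),
    "neutrino": (Fraction(0), Fraction(1, 2)),
    "up quark": (Fraction(2, 3), Fraction(1, 2)),
    "down quark": (Fraction(-1, 3), Fraction(-1, 2)),
}


def weak_charge(q: Fraction, t3: Fraction, sin2: float = SIN2_THETA_W) -> float:
    """Exact form: Q_w = 2*T3 - 4*Q*sin^2(theta_w)."""
    return float(2 * t3) - 4 * float(q) * sin2


def weak_charge_approx(q: Fraction, t3: Fraction) -> Fraction:
    """Approximation dropping the small (1 - 4 sin^2 theta_w) * Q term."""
    return 2 * t3 - q


for name, (q, t3) in FERMIONS.items():
    print(f"{name:10s} exact {weak_charge(q, t3):+.2f}  approx {weak_charge_approx(q, t3)}")
```

The electron's exact weak charge comes out near −0.08 against an approximate value of 0, which is why charged leptons couple to the Z mainly through the axial part.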

In quantum field theory, the vacuum expectation value (also called condensate or simply VEV) of an operator is its average or expectation value in the vacuum. The vacuum expectation value of an operator $O$ is usually denoted by $\langle O \rangle$. One of the most widely used examples of an observable physical effect that results from the vacuum expectation value of an operator is the Casimir effect.

This concept is important for working with correlation functions in quantum field theory. It is also important in spontaneous symmetry breaking. Examples are:

- The Higgs field has a vacuum expectation value of 246 GeV.[1] This nonzero value underlies the Higgs mechanism of the Standard Model. This value is given by $v = 1/\sqrt{\sqrt{2}\,G_F^0} = 2M_W/g \approx 246.22$ GeV, where $M_W$ is the mass of the W boson, $G_F^0$ the reduced Fermi constant, and $g$ the weak isospin coupling, in natural units. Expressed in daltons, $v \approx 264.3$ Da, which is near the mass limit of the most massive nuclei.

- The chiral condensate in Quantum chromodynamics, about a factor of a thousand smaller than the above, gives a large effective mass to quarks, and distinguishes between phases of quark matter. This underlies the bulk of the mass of most hadrons.

- The gluon condensate in Quantum chromodynamics may also be partly responsible for masses of hadrons.
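The Higgs VEV can be evaluated from either form, $v = 1/\sqrt{\sqrt{2}\,G_F^0}$ or $v = 2M_W/g$. This sketch assumes reference values not given in the text: $G_F^0 \approx 1.1663787\times10^{-5}\ \mathrm{GeV}^{-2}$, $M_W \approx 80.37$ GeV, a rounded coupling $g \approx 0.65$ (so the second estimate is only approximate), and 1 Da ≈ 0.9314941 GeV/c².

```python
import math

G_F = 1.1663787e-5          # reduced Fermi constant, GeV^-2 (natural units)
M_W = 80.37                 # W boson mass, GeV (approximate)
G_WEAK = 0.65               # weak isospin coupling g (rounded, illustrative)
GEV_PER_DALTON = 0.9314941  # 1 Da expressed in GeV/c^2

v_from_fermi = 1.0 / math.sqrt(math.sqrt(2.0) * G_F)
v_from_w_mass = 2.0 * M_W / G_WEAK

print(f"v from G_F:     {v_from_fermi:.2f} GeV")
print(f"v from 2 M_W/g: {v_from_w_mass:.1f} GeV")  # rough, since g is rounded
print(f"v in daltons:   {v_from_fermi / GEV_PER_DALTON:.1f} Da")
```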

The observed Lorentz invariance of space-time allows only the formation of condensates which are Lorentz scalars and have vanishing charge.[citation needed] Thus fermion condensates must be of the form $\langle \bar{\psi}\psi \rangle$, where $\psi$ is the fermion field. Similarly, a tensor field, $G_{\mu\nu}$, can only have a scalar expectation value such as $\langle G_{\mu\nu} G^{\mu\nu} \rangle$.

In some vacua of string theory, however, non-scalar condensates are found.[which?] If these describe our universe, then Lorentz symmetry violation may be observable.

Vacuum energy is an underlying background energy that exists in space throughout the entire Universe.[1] The vacuum energy is a special case of zero-point energy that relates to the quantum vacuum.[2]

Why does the zero-point energy of the vacuum not cause a large cosmological constant? What cancels it out?

The effects of vacuum energy can be experimentally observed in various phenomena such as spontaneous emission, the Casimir effect and the Lamb shift, and are thought to influence the behavior of the Universe on cosmological scales. Using the upper limit of the cosmological constant, the vacuum energy of free space has been estimated to be 10⁻⁹ joules (10⁻² ergs), or ~5 GeV, per cubic meter.[3] However, in quantum electrodynamics, consistency with the principle of Lorentz covariance and with the magnitude of the Planck constant suggests a much larger value of 10¹¹³ joules per cubic meter. This huge discrepancy is known as the cosmological constant problem.
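The unit conversions and the size of the discrepancy can be checked with a few lines of arithmetic. The only constant assumed beyond the text is 1 GeV ≈ 1.602176634×10⁻¹⁰ J; the conversion gives about 6 GeV per cubic meter, the same order as the rounded "~5 GeV".

```python
import math

JOULE_PER_GEV = 1.602176634e-10
ERG_PER_JOULE = 1e7

observed_j_per_m3 = 1e-9  # estimate from the cosmological-constant bound
qed_j_per_m3 = 1e113      # naive quantum-field-theory estimate

print(f"{observed_j_per_m3 * ERG_PER_JOULE:.0e} erg per m^3")
print(f"{observed_j_per_m3 / JOULE_PER_GEV:.1f} GeV per m^3")
orders = math.log10(qed_j_per_m3 / observed_j_per_m3)
print(f"discrepancy: about {orders:.0f} orders of magnitude")
```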

https://en.wikipedia.org/wiki/Vacuum_energy

Zero-point energy (ZPE) is the lowest possible energy that a quantum mechanical system may have. Unlike in classical mechanics, quantum systems constantly fluctuate in their lowest energy state as described by the Heisenberg uncertainty principle.[1] Therefore, even at absolute zero, atoms and molecules retain some vibrational motion. Apart from atoms and molecules, the empty space of the vacuum also has these properties. According to quantum field theory, the universe can be thought of not as isolated particles but continuous fluctuating fields: matter fields, whose quanta are fermions (i.e., leptons and quarks), and force fields, whose quanta are bosons (e.g., photons and gluons). All these fields have zero-point energy.[2] These fluctuating zero-point fields lead to a kind of reintroduction of an aether in physics[1][3] since some systems can detect the existence of this energy. However, this aether cannot be thought of as a physical medium if it is to be Lorentz invariant such that there is no contradiction with Einstein's theory of special relativity.[1]

https://en.wikipedia.org/wiki/Zero-point_energy

Hydrazinium is the cation with the formula N₂H₅⁺. It can be derived from hydrazine by protonation (treatment with a strong acid). Hydrazinium is a weak acid with pKa = 8.1.
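The pKa sets how much of the base is protonated at a given pH. A minimal sketch using the Henderson-Hasselbalch relation for N₂H₅⁺ ⇌ N₂H₄ + H⁺ (an ideal-solution approximation that ignores activity effects), with pKa = 8.1 from the text:

```python
PKA_HYDRAZINIUM = 8.1


def fraction_protonated(ph: float, pka: float = PKA_HYDRAZINIUM) -> float:
    """Fraction present as N2H5+ (acid form) for N2H5+ <=> N2H4 + H+.

    From Henderson-Hasselbalch: [N2H4]/[N2H5+] = 10**(pH - pKa).
    """
    return 1.0 / (1.0 + 10 ** (ph - pka))


for ph in (6.1, 8.1, 10.1):
    print(f"pH {ph}: {fraction_protonated(ph):.1%} as hydrazinium")
```

At pH = pKa the acid and base forms are present in equal amounts; two pH units either side, about 99% or about 1% is protonated.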

Salts of hydrazinium are common reagents in chemistry and are often used in certain industrial processes.[1] Notable examples are hydrazinium hydrogensulfate, N₂H₆SO₄ or [N₂H₅⁺][HSO₄⁻], and hydrazinium azide, N₅H₅ or [N₂H₅⁺][N₃⁻]. In the common names of such salts, the cation is often called "hydrazine", as in "hydrazine sulfate" for hydrazinium hydrogensulfate.

The terms "hydrazinium" and "hydrazine" may also be used for the doubly protonated cation N₂H₆²⁺, more properly called hydrazinediium or hydrazinium(2+). This cation has an ethane-like structure. Salts of this cation include hydrazinediium sulfate, [N₂H₆²⁺][SO₄²⁻],[2] and hydrazinediium bis(6-carboxypyridazine-3-carboxylate), [N₂H₆²⁺][C₆H₃N₂O₄⁻]₂.[3]

Quaternary ammonium cations, also known as quats, are positively charged polyatomic ions of the structure NR₄⁺, R being an alkyl group or an aryl group.[1] Unlike the ammonium ion (NH₄⁺) and the primary, secondary, or tertiary ammonium cations, the quaternary ammonium cations are permanently charged, independent of the pH of their solution. Quaternary ammonium salts or quaternary ammonium compounds (called quaternary amines in oilfield parlance) are salts of quaternary ammonium cations. Polyquats are a variety of engineered polymer forms which provide multiple quat molecules within a larger molecule.

Quats are used in consumer applications including as antimicrobials (such as detergents and disinfectants), fabric softeners, and hair conditioners. As an antimicrobial, they are able to inactivate enveloped viruses (such as SARS-CoV-2). Quats tend to be gentler on surfaces than bleach-based disinfectants, and are generally fabric-safe.[2]

https://en.wikipedia.org/wiki/Quaternary_ammonium_cation

Polyquaternium is the International Nomenclature for Cosmetic Ingredients designation for several polycationic polymers that are used in the personal care industry. Polyquaternium is a neologism used to emphasize the presence of quaternary ammonium centers in the polymer. INCI has approved at least 40 different polymers under the polyquaternium designation. Different polymers are distinguished by the numerical value that follows the word "polyquaternium". Polyquaternium-5, polyquaternium-7, and polyquaternium-47 are three examples, each a chemically different type of polymer. The numbers are assigned in the order in which they are registered rather than because of their chemical structure.

Polyquaterniums find particular application in conditioners, shampoo, hair mousse, hair spray, hair dye, personal lubricant, and contact lens solutions. Because they are positively charged, they neutralize the negative charges of most shampoos and hair proteins and help hair lie flat. Their positive charges also ionically bond them to hair and skin. Some have antimicrobial properties.

https://en.wikipedia.org/wiki/Polyquaternium

An ionomer (/ˌaɪˈɑːnəmər/) (iono- + -mer) is a polymer composed of both electrically neutral repeating units and ionized units covalently bonded to the polymer backbone as pendant groups. Usually no more than 15 mole percent of the units are ionized. The ionized units are often carboxylic acid groups.

The classification of a polymer as an ionomer depends on the level of substitution of ionic groups as well as how the ionic groups are incorporated into the polymer structure. For example, polyelectrolytes also have ionic groups covalently bonded to the polymer backbone, but have a much higher ionic group molar substitution level (usually greater than 80%); ionenes are polymers where ionic groups are part of the actual polymer backbone. These two classes of ionic-group-containing polymers have vastly different morphological and physical properties and are therefore not considered ionomers.
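The classification rules above can be written as a small decision function. This is a rough sketch: the 15 mol % and 80% thresholds are the typical values quoted in the text rather than hard definitions, the label for the in-between range is a placeholder because the text does not name it, and the function and parameter names are hypothetical.

```python
def classify_ionic_polymer(ionic_mol_percent: float, ions_in_backbone: bool) -> str:
    """Rough classification of an ion-containing polymer.

    ionic_mol_percent: share of repeat units carrying an ionic group (mol %).
    ions_in_backbone: True if the ionic groups are part of the main chain
        itself rather than pendant groups attached to it.
    """
    if not 0 <= ionic_mol_percent <= 100:
        raise ValueError("mole percent must be between 0 and 100")
    if ions_in_backbone:
        return "ionene"
    if ionic_mol_percent == 0:
        return "non-ionic polymer"
    if ionic_mol_percent <= 15:
        return "ionomer"
    if ionic_mol_percent > 80:
        return "polyelectrolyte"
    return "intermediate (outside the typical ranges)"


print(classify_ionic_polymer(5, ions_in_backbone=False))   # ionomer
print(classify_ionic_polymer(95, ions_in_backbone=False))  # polyelectrolyte
print(classify_ionic_polymer(50, ions_in_backbone=True))   # ionene
```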

Ionomers have unique physical properties, including electrical conductivity and viscosity: ionomer solution viscosity increases with increasing temperature (see conducting polymer). Ionomers also have unique morphological properties, as the non-polar polymer backbone is energetically incompatible with the polar ionic groups. As a result, the ionic groups in most ionomers undergo microphase separation to form ionic-rich domains.

Commercial applications for ionomers include golf ball covers, semipermeable membranes, sealing tape and thermoplastic elastomers. Common examples of ionomers include polystyrene sulfonate, Nafion and Hycar.

https://en.wikipedia.org/wiki/Ionomer

Thermoplastic elastomers (TPE), sometimes referred to as thermoplastic rubbers, are a class of copolymers or a physical mix of polymers (usually a plastic and a rubber) that consist of materials with both thermoplastic and elastomeric properties. While most elastomers are thermosets, thermoplastics are in contrast relatively easy to use in manufacturing, for example, by injection molding. Thermoplastic elastomers show advantages typical of both rubbery materials and plastic materials. Their benefit is the ability to stretch to moderate elongations and return to their near-original shape, giving a longer life and a better physical range than other materials.[1] The principal difference between thermoset elastomers and thermoplastic elastomers is the type of cross-linking bond in their structures. In fact, crosslinking is a critical structural factor that imparts high elastic properties.

https://en.wikipedia.org/wiki/Thermoplastic_elastomer

Compression molding is a method of molding in which the molding material, generally preheated, is first placed in an open, heated mold cavity. The mold is closed with a top force or plug member, pressure is applied to force the material into contact with all mold areas, and heat and pressure are maintained until the molding material has cured. In the case of rubber, the process is also known as 'vulcanisation'.[1] The process employs thermosetting resins in a partially cured stage, either in the form of granules, putty-like masses, or preforms.

https://en.wikipedia.org/wiki/Compression_molding

Flash, also known as flashing, is excess material attached to a molded, forged, or cast product, which must usually be removed. This is typically caused by leakage of the material between the two surfaces of a mold (beginning along the parting line[1]) or between the base material and the mold in the case of overmolding.

https://en.wikipedia.org/wiki/Flash_(manufacturing)

Cryogenic deflashing is a deflashing process that uses cryogenic temperatures to aid in the removal of flash on cast or molded workpieces. These temperatures cause the flash to become stiff or brittle and to break away cleanly. Cryogenic deflashing is the preferred process when removing excess material from oddly shaped, custom molded products.

A wide range of molded materials can utilize cryogenic deflashing with proven results. These include:

- Silicones

- Plastics – (both thermoset & thermoplastic)

- Rubbers – (including neoprene & urethane)

- Liquid crystal polymer (LCP)

- Glass-filled nylons

- Aluminum zinc die cast

Examples of applications that use cryogenic deflashing include:

- O-rings & gaskets

- Catheters and other in-vitro medical

- Insulators and other electric / electronic

- Valve stems, washers and fittings

- Tubes and flexible boots

- Face masks & goggles

Today, many molding operations are using cryogenic deflashing instead of rebuilding or repairing molds on products that are approaching their end-of-life. It is often more prudent and economical to add a few cents of production cost for a part than invest in a new molding tool that can cost hundreds of thousands of dollars and has a limited service life due to declining production forecasts.

In other cases, cryogenic deflashing has proven to be an enabling technology, permitting the economical manufacture of high quality, high precision parts fabricated with cutting edge materials and compounds.

https://en.wikipedia.org/wiki/Cryogenic_deflashing

Liquid crystal polymers (LCPs) are polymers with the property of liquid crystal, usually containing aromatic rings as mesogens. Besides uncrosslinked LCPs, polymeric materials like liquid crystal elastomers (LCEs) and liquid crystal networks (LCNs) can exhibit liquid crystallinity as well; both are crosslinked LCPs but have different crosslink densities.[1] They are widely used in the digital display market.[2] In addition, LCPs have unique properties like thermal actuation, anisotropic swelling, and soft elasticity, which make them good actuators and sensors.[3] One of the most famous and classical applications for LCPs is Kevlar, a strong but light fiber with wide applications including bulletproof vests.

https://en.wikipedia.org/wiki/Liquid-crystal_polymer

An artificial cell, synthetic cell or minimal cell is an engineered particle that mimics one or many functions of a biological cell. Often, artificial cells are biological or polymeric membranes which enclose biologically active materials.[1] As such, liposomes, polymersomes, nanoparticles, microcapsules and a number of other particles can qualify as artificial cells.

https://en.wikipedia.org/wiki/Artificial_cell

Electric field actuation

Electro-Active Polymers (EAPs) are polymers that can be actuated through the application of electric fields. Currently, the most prominent EAPs include piezoelectric polymers, dielectric actuators (DEAs), electrostrictive graft elastomers, liquid crystal elastomers (LCE) and ferroelectric polymers. While these EAPs can be made to bend, their low capacities for torque motion currently limit their usefulness as artificial muscles. Moreover, without an accepted standard material for creating EAP devices, commercialization has remained impractical. However, significant progress has been made in EAP technology since the 1990s.[7]

Ion-based actuation

Ionic EAPs are polymers that can be actuated through the diffusion of ions in an electrolyte solution (in addition to the application of electric fields). Current examples of ionic electroactive polymers include polyelectrolyte gels, ionomeric polymer metallic composites (IPMC), conductive polymers and electrorheological fluids (ERF). In 2011, it was demonstrated that twisted carbon nanotubes could also be actuated by applying an electric field.[8]

https://en.wikipedia.org/wiki/Artificial_muscle#Electric_field_actuation

An electroactive polymer (EAP) is a polymer that exhibits a change in size or shape when stimulated by an electric field. The most common applications of this type of material are in actuators[1] and sensors.[2][3] A typical characteristic of EAPs is that they can undergo a large amount of deformation while sustaining large forces.

The majority of historic actuators are made of ceramic piezoelectric materials. While these materials are able to withstand large forces, they commonly deform by only a fraction of a percent. In the late 1990s, it was demonstrated that some EAPs can exhibit up to 380% strain, which is much more than any ceramic actuator.[1] One of the most common applications for EAPs is in the field of robotics in the development of artificial muscles; thus, an electroactive polymer is often referred to as an artificial muscle.

https://en.wikipedia.org/wiki/Electroactive_polymer

Piezoelectricity (/ˌpiːzoʊ-, ˌpiːtsoʊ-, paɪˌiːzoʊ-/, US: /piˌeɪzoʊ-, piˌeɪtsoʊ-/)[1] is the electric charge that accumulates in certain solid materials (such as crystals, certain ceramics, and biological matter such as bone, DNA, and various proteins) in response to applied mechanical stress.[2] The word piezoelectricity means electricity resulting from pressure. It is derived from the Greek word πιέζειν (piezein), which means to squeeze or press, and ἤλεκτρον (ēlektron), which means amber, an ancient source of electric charge.[3][4]

https://en.wikipedia.org/wiki/Piezoelectricity

A clock or a timepiece[1] is a device used to measure and indicate time. The clock is one of the oldest human inventions, meeting the need to measure intervals of time shorter than the natural units: the day, the lunar month, year and galactic year. Devices operating on several physical processes have been used over the millennia.

https://en.wikipedia.org/wiki/Clock

A crystal oscillator is an electronic oscillator circuit that uses a vibrating piezoelectric crystal as a frequency-selective element, exploiting the inverse piezoelectric effect to produce an electrical signal with a very precise frequency.[1][2] (The term crystal oscillator refers to the circuit, not the resonator: Graf, Rudolf F. (1999). Modern Dictionary of Electronics, 7th ed. US: Newnes. pp. 162–163. ISBN 978-0750698665.) This frequency is often used to keep track of time, as in quartz wristwatches, to provide a stable clock signal for digital integrated circuits, and to stabilize frequencies for radio transmitters and receivers. The most common type of piezoelectric resonator used is a quartz crystal, so oscillator circuits incorporating them became known as crystal oscillators.[3] However, other piezoelectric materials including polycrystalline ceramics are used in similar circuits.
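As an example of keeping track of time with such a frequency: quartz wristwatches commonly use a 32,768 Hz (2¹⁵ Hz) crystal, a standard value not stated in the text, so a chain of fifteen divide-by-two stages yields exactly one pulse per second. A minimal sketch of that divider chain; the function name is chosen for illustration.

```python
WATCH_CRYSTAL_HZ = 32_768  # common quartz-watch crystal frequency (2**15 Hz)


def divide_by_two_stages(freq_hz: int, target_hz: int = 1) -> int:
    """Count the divide-by-two (flip-flop) stages needed to reach target_hz."""
    stages = 0
    while freq_hz > target_hz:
        if freq_hz % 2:
            raise ValueError("frequency cannot be halved exactly to the target")
        freq_hz //= 2
        stages += 1
    if freq_hz != target_hz:
        raise ValueError("target not reachable by halving")
    return stages


print(divide_by_two_stages(WATCH_CRYSTAL_HZ))  # 15
```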

https://en.wikipedia.org/wiki/Crystal_oscillator

A pressure sensor is a device for pressure measurement of gases or liquids. Pressure is an expression of the force required to stop a fluid from expanding, and is usually stated in terms of force per unit area. A pressure sensor usually acts as a transducer; it generates a signal as a function of the pressure imposed. For the purposes of this article, such a signal is electrical.
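Because the sensor's output is a function of the imposed pressure, reading it usually means inverting a calibration curve. The sketch below assumes a hypothetical sensor with a linear transfer function (0.5 V at 0 kPa, 4.5 V at 1000 kPa); real devices specify their own range, offset and nonlinearity. It also computes pressure as force per unit area.

```python
# Hypothetical linear pressure transducer (illustrative calibration values).
V_AT_MIN = 0.5      # output voltage at P_MIN_KPA (V)
V_AT_MAX = 4.5      # output voltage at P_MAX_KPA (V)
P_MIN_KPA = 0.0
P_MAX_KPA = 1000.0


def pressure_from_force(force_n: float, area_m2: float) -> float:
    """Pressure in kPa from a force (N) spread over an area (m^2)."""
    return force_n / area_m2 / 1000.0


def voltage_to_pressure(volts: float) -> float:
    """Invert the assumed linear calibration line to get pressure in kPa."""
    kpa_per_volt = (P_MAX_KPA - P_MIN_KPA) / (V_AT_MAX - V_AT_MIN)
    return P_MIN_KPA + (volts - V_AT_MIN) * kpa_per_volt


print(pressure_from_force(500.0, 0.001))  # 500 N on 10 cm^2: about 500 kPa
print(voltage_to_pressure(2.5))           # mid-scale reading: about 500 kPa
```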

https://en.wikipedia.org/wiki/Pressure_sensor

A hermetic seal is any type of sealing that makes a given object airtight (preventing the passage of air, oxygen, or other gases). The term originally applied to airtight glass containers, but as technology advanced it came to apply to a larger category of materials, including rubber and plastics. Hermetic seals are essential to the correct and safe functionality of many electronic and healthcare products. Used technically, the term is stated in conjunction with a specific test method and conditions of use.

https://en.wikipedia.org/wiki/Hermetic_seal

A transducer is a device that converts energy from one form to another. Usually a transducer converts a signal in one form of energy to a signal in another.[1]

Transducers are often employed at the boundaries of automation, measurement, and control systems, where electrical signals are converted to and from other physical quantities (energy, force, torque, light, motion, position, etc.). The process of converting one form of energy to another is known as transduction.[2]

https://en.wikipedia.org/wiki/Transducer

A transductor is a type of magnetic amplifier used in power systems for compensating reactive power. It consists of an iron-cored inductor with two windings: a main winding, through which an alternating current flows from the power system, and a secondary control winding, which carries a small direct current. By varying the direct current, the iron core of the transductor can be made to saturate at different levels, thus varying the amount of reactive power absorbed.

https://en.wikipedia.org/wiki/Transductor

A Hall effect sensor (or simply Hall sensor) is a type of sensor which detects the presence and magnitude of a magnetic field using the Hall effect. The output voltage of a Hall sensor is directly proportional to the strength of the field. It is named for the American physicist Edwin Hall.[1]
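In the simple single-carrier model of the Hall effect, the Hall voltage across a slab of thickness $t$ is $V_H = IB/(nqt)$. Here $I$ is the supply current, $B$ the field, $n$ the carrier density and $q$ the carrier charge, so at fixed current the output is proportional to $B$. The sketch below evaluates that textbook formula. The device parameters are hypothetical.

```python
ELEMENTARY_CHARGE_C = 1.602176634e-19  # exact SI value of the elementary charge


def hall_voltage(current_a: float, field_t: float,
                 carrier_density_per_m3: float, thickness_m: float) -> float:
    """Hall voltage V_H = I * B / (n * q * t) for a single carrier type."""
    return current_a * field_t / (carrier_density_per_m3 * ELEMENTARY_CHARGE_C * thickness_m)


# Hypothetical sensor element: 1 mA drive current, n = 1e22 m^-3, 0.1 mm thick.
for b in (0.05, 0.10, 0.20):
    v = hall_voltage(1e-3, b, 1e22, 1e-4)
    print(f"B = {b:.2f} T -> V_H = {v * 1e3:.3f} mV")
```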

https://en.wikipedia.org/wiki/Hall_effect_sensor

In a spark-ignition internal combustion engine, ignition timing refers to the timing, relative to the current piston position and crankshaft angle, of the release of a spark in the combustion chamber near the end of the compression stroke.

https://en.wikipedia.org/wiki/Ignition_timing

Compression stroke

The compression stroke is the second of the four stages in a four-stroke engine.

In this stage, the air-fuel mixture (or air alone, in the case of a direct injection engine) is compressed to the top of the cylinder by the piston. This is the result of the piston moving upwards, reducing the volume of the chamber. Towards the end of this phase, the mixture is ignited, by a spark plug for petrol engines or by self-ignition for diesel engines.

Exhaust stroke

The exhaust stroke is the final phase in a four-stroke engine. In this phase, the piston moves upwards, squeezing out the gases that were created during the combustion stroke. The gases exit the cylinder through an exhaust valve at the top of the cylinder. At the end of this phase, the exhaust valve closes and the intake valve opens to admit a fresh air-fuel mixture into the cylinder, so the process can repeat itself.
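The four strokes repeat every two crankshaft revolutions (720° of crank rotation), and each stroke takes half a revolution. The sketch below maps a crank angle to the current stroke. It assumes the cycle starts at the beginning of the intake stroke and that strokes switch exactly at 180° boundaries. Real valve and ignition timing overlap these boundaries.

```python
STROKES = ("intake", "compression", "combustion (power)", "exhaust")


def stroke_at(crank_angle_deg: float) -> str:
    """Stroke of a four-stroke engine at a given crank angle.

    Simplifying assumptions: the cycle starts at the beginning of the intake
    stroke, each stroke spans exactly 180 degrees, and one cycle is 720 degrees
    (two crankshaft revolutions).
    """
    angle = crank_angle_deg % 720.0           # fold into a single cycle
    return STROKES[int(angle // 180.0) % 4]   # % 4 guards against float rounding to 720.0


for angle in (90, 270, 450, 630):
    print(angle, stroke_at(angle))
```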

https://en.wikipedia.org/wiki/Stroke_(engine)#Compression_stroke

An oscillating cylinder steam engine (also known as a wobbler in the US)[citation needed] is a simple steam-engine design (proposed by William Murdoch at the end of the 18th century) that requires no valve gear. Instead, the cylinder rocks, or oscillates, as the crank moves the piston, pivoting in the mounting trunnion so that ports in the cylinder line up with ports in a fixed port face alternately to direct steam into or out of the cylinder.

Oscillating cylinder steam engines are now mainly used in toys and models but, in the past, have been used in full-size working engines, mainly on ships and small stationary engines. They have the advantage of simplicity and, therefore, low manufacturing costs. They also tend to be more compact than other types of cylinder of the same capacity, which makes them advantageous for use in ships.

https://en.wikipedia.org/wiki/Oscillating_cylinder_steam_engine

Stationary steam engines are fixed steam engines used for pumping or driving mills and factories, and for power generation. They are distinct from locomotive engines used on railways, traction engines for heavy steam haulage on roads, steam cars (and other motor vehicles), agricultural engines used for ploughing or threshing, marine engines, and the steam turbines used as the mechanism of power generation for most nuclear power plants.

They were introduced during the 18th century and widely made for the whole of the 19th century and most of the first half of the 20th century, only declining as electricity supply and the internal combustion engine became more widespread.

https://en.wikipedia.org/wiki/Stationary_steam_engine

A beam engine is a type of steam engine where a pivoted overhead beam is used to apply the force from a vertical piston to a vertical connecting rod. This configuration, with the engine directly driving a pump, was first used by Thomas Newcomen around 1705 to remove water from mines in Cornwall. The efficiency of the engines was improved by engineers including James Watt, who added a separate condenser; Jonathan Hornblower and Arthur Woolf, who compounded the cylinders; and William McNaught, who devised a method of compounding an existing engine. Beam engines were first used to pump water out of mines or into canals but could be used to pump water to supplement the flow for a waterwheel powering a mill.

The rotative beam engine is a later design of beam engine where the connecting rod drives a flywheel by means of a crank (or, historically, by means of a sun and planet gear). These beam engines could be used to directly power the line-shafting in a mill. They also could be used to power steam ships.

https://en.wikipedia.org/wiki/Beam_engine

A line shaft is a power-driven rotating shaft for power transmission that was used extensively from the Industrial Revolution until the early 20th century. Prior to the widespread use of electric motors small enough to be connected directly to each piece of machinery, line shafting was used to distribute power from a large central power source to machinery throughout a workshop or an industrial complex. The central power source could be a water wheel, turbine, windmill, animal power or a steam engine. Power was distributed from the shaft to the machinery by a system of belts, pulleys and gears known as millwork.[1]
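Belt drives let one shaft speed serve machines that need different speeds. Assuming no belt slip, the belt's surface speed is the same on both pulleys, so shaft speed times pulley diameter is conserved. The sketch below applies that rule. The shaft speed and pulley sizes are hypothetical.

```python
def driven_speed_rpm(driver_rpm: float, driver_diameter: float,
                     driven_diameter: float) -> float:
    """Speed of a belt-driven pulley, assuming no slip.

    Belt surface speed = pi * D * rpm is equal on both pulleys, so
    driver_rpm * driver_diameter == driven_rpm * driven_diameter.
    Both diameters must use the same length unit.
    """
    if driven_diameter <= 0:
        raise ValueError("pulley diameter must be positive")
    return driver_rpm * driver_diameter / driven_diameter


# Hypothetical line shaft at 200 rpm with a 600 mm pulley driving a machine's 300 mm pulley.
print(driven_speed_rpm(200, 600, 300))
```

Halving the driven pulley's diameter doubles the machine's speed. This is why millwork used stepped pulleys to select speeds.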

https://en.wikipedia.org/wiki/Line_shaft

A plain bearing, more commonly called a sliding contact bearing or slide bearing (in railroading sometimes called a solid bearing, journal bearing, or friction bearing[1]), is the simplest type of bearing, comprising just a bearing surface and no rolling elements. The journal (i.e., the part of the shaft in contact with the bearing) therefore slides over the bearing surface. The simplest example of a plain bearing is a shaft rotating in a hole. A simple linear bearing can be a pair of flat surfaces designed to allow motion; e.g., a drawer and the slides it rests on[2] or the ways on the bed of a lathe.

Plain bearings, in general, are the least expensive type of bearing. They are also compact and lightweight, and they have a high load-carrying capacity.[3]

https://en.wikipedia.org/wiki/Plain_bearing

A bearing surface in mechanical engineering is the area of contact between two objects. It usually is used in reference to bolted joints and bearings, but can be applied to a wide variety of engineering applications.

https://en.wikipedia.org/wiki/Bearing_surface

Helical Compression Spring Technology

The notes on helical compression spring terminology refer to a diagram at the bottom of the original page.

https://web.archive.org/web/20101101174850/http://www.masterspring.com/technical_resources/helical_compression_spring_terminology/default.html

A diamond anvil cell (DAC) is a high-pressure device used in geology, engineering, and materials science experiments. It enables the compression of a small (sub-millimeter-sized) piece of material to extreme pressures, typically up to around 100–200 gigapascals, although it is possible to achieve pressures up to 770 gigapascals (7,700,000 bars or about 7.6 million atmospheres).[1][2]
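Here is a quick unit check on the pressures quoted above. 1 GPa is 10,000 bar. A standard atmosphere (101,325 Pa) is slightly larger than a bar, so the atmosphere figure comes out a little below the bar figure: about 7.6 million atm for 770 GPa. The helper names are illustrative.

```python
PA_PER_GPA = 1.0e9
PA_PER_BAR = 1.0e5        # 1 bar = 100 kPa by definition
PA_PER_ATM = 101_325.0    # 1 standard atmosphere by definition


def gpa_to_bar(p_gpa: float) -> float:
    return p_gpa * PA_PER_GPA / PA_PER_BAR


def gpa_to_atm(p_gpa: float) -> float:
    return p_gpa * PA_PER_GPA / PA_PER_ATM


for p in (100, 200, 770):
    print(f"{p} GPa = {gpa_to_bar(p):,.0f} bar = {gpa_to_atm(p):,.0f} atm")
```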

The device has been used to recreate the pressure existing deep inside planets to synthesise materials and phases not observed under normal ambient conditions. Notable examples include the non-molecular ice X,[3] polymeric nitrogen[4] and metallic phases of xenon,[5] lonsdaleite, and potentially hydrogen.[6]

A DAC consists of two opposing diamonds with a sample compressed between the polished culets (tips). Pressure may be monitored using a reference material whose behavior under pressure is known. Common pressure standards include ruby[7] fluorescence, and various structurally simple metals, such as copper or platinum.[8] The uniaxial pressure supplied by the DAC may be transformed into uniform hydrostatic pressure using a pressure-transmitting medium, such as argon, xenon, hydrogen, helium, paraffin oil or a mixture of methanol and ethanol.[9] The pressure-transmitting medium is enclosed by a gasket and the two diamond anvils. The sample can be viewed through the diamonds and illuminated by X-rays and visible light. In this way, X-ray diffraction and fluorescence; optical absorption and photoluminescence; Mössbauer, Raman and Brillouin scattering; positron annihilation and other signals can be measured from materials under high pressure. Magnetic and microwave fields can be applied externally to the cell allowing nuclear magnetic resonance, electron paramagnetic resonance and other magnetic measurements.[10] Attaching electrodes to the sample allows electrical and magnetoelectrical measurements as well as heating up the sample to a few thousand degrees. Much higher temperatures (up to 7000 K)[11] can be achieved with laser-induced heating,[12] and cooling down to millikelvins has been demonstrated.[9]
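Ruby-fluorescence manometry converts the measured shift of the ruby R1 line into pressure. A commonly used empirical form is $P = (A/B)\,[(\lambda/\lambda_0)^B - 1]$. The default coefficients below ($A = 1904$ GPa, $B = 7.665$, $\lambda_0 = 694.24$ nm) are values often quoted for a quasi-hydrostatic calibration. They are assumptions here. Substitute the calibration and ambient reference wavelength appropriate to your own setup.

```python
def ruby_pressure_gpa(r1_nm: float, r1_ambient_nm: float = 694.24,
                      a_gpa: float = 1904.0, b: float = 7.665) -> float:
    """Pressure (GPa) from the ruby R1 line via P = (A/B) * ((lambda/lambda0)**B - 1).

    Default coefficients are assumed values for a quasi-hydrostatic calibration.
    """
    return (a_gpa / b) * ((r1_nm / r1_ambient_nm) ** b - 1.0)


for wavelength in (694.24, 697.0, 700.0, 710.0):
    print(f"R1 = {wavelength:.2f} nm -> P = {ruby_pressure_gpa(wavelength):.1f} GPa")
```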

https://en.wikipedia.org/wiki/Diamond_anvil_cell

Magnetoresistance is the tendency of a material (often ferromagnetic) to change the value of its electrical resistance in an externally applied magnetic field. There are a variety of effects that can be called magnetoresistance. Some occur in bulk non-magnetic metals and semiconductors, such as geometrical magnetoresistance, Shubnikov–de Haas oscillations, or the common positive magnetoresistance in metals.[1] Other effects occur in magnetic metals, such as negative magnetoresistance in ferromagnets[2] or anisotropic magnetoresistance (AMR). Finally, in multicomponent or multilayer systems (e.g. magnetic tunnel junctions), giant magnetoresistance (GMR), tunnel magnetoresistance (TMR), colossal magnetoresistance (CMR), and extraordinary magnetoresistance (EMR) can be observed.
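Magnetoresistance is usually reported as a ratio rather than an absolute resistance. A common convention is $\mathrm{MR} = [R(H) - R(0)]/R(0)$. For GMR and TMR stacks, the ratio is often quoted between the antiparallel and parallel magnetization states, $(R_{AP} - R_P)/R_P$. Conventions vary (some normalize by the larger resistance), so treat the sketch below as one choice. The resistances are hypothetical.

```python
def mr_ratio(r_field_ohm: float, r_zero_ohm: float) -> float:
    """MR = (R(H) - R(0)) / R(0); negative when the field lowers the resistance."""
    return (r_field_ohm - r_zero_ohm) / r_zero_ohm


def tunnel_or_giant_mr(r_antiparallel_ohm: float, r_parallel_ohm: float) -> float:
    """GMR/TMR ratio normalized by the parallel-state resistance."""
    return (r_antiparallel_ohm - r_parallel_ohm) / r_parallel_ohm


# Hypothetical measurements.
print(f"{mr_ratio(90.0, 100.0):+.0%}")            # resistance falls in the field
print(f"{tunnel_or_giant_mr(250.0, 100.0):.0%}")  # e.g. a tunnel junction
```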

https://en.wikipedia.org/wiki/Magnetoresistance

A semimetal is a material with a very small overlap between the bottom of the conduction band and the top of the valence band. According to electronic band theory, solids can be classified as insulators, semiconductors, semimetals, or metals. In insulators and semiconductors the filled valence band is separated from an empty conduction band by a band gap. For insulators, the magnitude of the band gap is larger (e.g., > 4 eV) than that of a semiconductor (e.g., < 4 eV). Because of the slight overlap between the conduction and valence bands, semimetals have no band gap and a negligible density of states at the Fermi level. A metal, by contrast, has an appreciable density of states at the Fermi level because the conduction band is partially filled.[1]

https://en.wikipedia.org/wiki/Semimetal

In solid state physics and condensed matter physics, the density of states (DOS) of a system describes the proportion of states that are to be occupied by the system at each energy. The density of states is defined as $D(E) = N(E)/V$, where $N(E)\,\delta E$ is the number of states in the system of volume $V$ whose energies lie in the range from $E$ to $E + \delta E$. It is mathematically represented as a distribution by a probability density function, and it is generally an average over the space and time domains of the various states occupied by the system. The density of states is directly related to the dispersion relations of the properties of the system. High DOS at a specific energy level means that many states are available for occupation.
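Numerically, the density of states is often estimated by sampling the dispersion relation $E(k)$ on a uniform grid of wavevectors and histogramming the energies. The sketch assumes a one-dimensional tight-binding chain, $E(k) = -2t\cos(ka)$ with $t = a = 1$, as a stand-in system. It drops the $1/V$ factor and normalizes so the DOS integrates to one. The large values at the band edges are the van Hove singularities of this 1D band.

```python
import math


def dos_histogram(energies, e_min, e_max, n_bins):
    """Histogram estimate of a normalized density of states.

    Returns the fraction of states per unit energy in each of n_bins equal bins
    spanning [e_min, e_max]; summing the values times the bin width gives 1.
    """
    width = (e_max - e_min) / n_bins
    counts = [0] * n_bins
    for e in energies:
        i = int((e - e_min) / width)
        counts[min(max(i, 0), n_bins - 1)] += 1   # clamp so e == e_max lands in the last bin
    return [c / (len(energies) * width) for c in counts]


# Assumed model system: 1D tight-binding chain, E(k) = -2 t cos(k a), with t = a = 1,
# sampled on a uniform k grid across the Brillouin zone [-pi, pi).
t = 1.0
n_k = 100_000
energies = [-2.0 * t * math.cos(-math.pi + 2.0 * math.pi * j / n_k) for j in range(n_k)]
dos = dos_histogram(energies, -2.0 * t, 2.0 * t, n_bins=20)
for i in (0, 5, 10, 19):
    print(f"bin {i:2d}: D = {dos[i]:.3f}")
```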

https://en.wikipedia.org/wiki/Density_of_states

A Luttinger liquid, or Tomonaga–Luttinger liquid, is a theoretical model describing interacting electrons (or other fermions) in a one-dimensional conductor (e.g. quantum wires such as carbon nanotubes).[1] Such a model is necessary as the commonly used Fermi liquid model breaks down for one dimension.

The Tomonaga–Luttinger liquid was first proposed by Tomonaga in 1950. The model showed that under certain constraints, second-order interactions between electrons could be modelled as bosonic interactions. In 1963, J.M. Luttinger reformulated the theory in terms of Bloch sound waves and showed that the constraints proposed by Tomonaga were unnecessary in order to treat the second-order perturbations as bosons. But his solution of the model was incorrect; the correct solution was given by Daniel C. Mattis and Elliot H. Lieb in 1965.[2]

https://en.wikipedia.org/wiki/Luttinger_liquid

A carbon nanotube (CNT) is a tube made of carbon with diameters typically measured in nanometers.

Single-wall carbon nanotubes (SWCNTs) are one of the allotropes of carbon, intermediate between fullerene cages and flat graphene, with diameters in the range of a nanometer. Although not made this way, single-wall carbon nanotubes can be idealized as cutouts from a two-dimensional hexagonal lattice of carbon atoms rolled up along one of the Bravais lattice vectors of the hexagonal lattice to form a hollow cylinder. In this construction, periodic boundary conditions are imposed over the length of this roll-up vector to yield a helical lattice of seamlessly bonded carbon atoms on the cylinder surface.[1]
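The roll-up construction can be made concrete with the chiral indices $(n, m)$. These define the roll-up vector $C = n\,a_1 + m\,a_2$ in terms of the two graphene lattice vectors. Its length is $a\sqrt{n^2 + nm + m^2}$, and the tube diameter is that circumference divided by $\pi$. The lattice constant $a \approx 0.246$ nm is an assumed input, and the formula ignores curvature-induced changes in bond length.

```python
import math

GRAPHENE_LATTICE_CONSTANT_NM = 0.246  # assumed: a = sqrt(3) * (C-C bond length ~0.142 nm)


def roll_up_vector_length_nm(n: int, m: int) -> float:
    """|C| for C = n*a1 + m*a2 on the hexagonal lattice (|a1| = |a2| = a, 60 degrees apart)."""
    return GRAPHENE_LATTICE_CONSTANT_NM * math.sqrt(n * n + n * m + m * m)


def swcnt_diameter_nm(n: int, m: int) -> float:
    """Tube diameter: the roll-up vector becomes the circumference, so d = |C| / pi."""
    return roll_up_vector_length_nm(n, m) / math.pi


for indices in [(10, 10), (10, 0), (6, 5)]:
    print(indices, f"{swcnt_diameter_nm(*indices):.3f} nm")
```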

https://en.wikipedia.org/wiki/Carbon_nanotube

Carbon fibers or carbon fibres (alternatively CF, graphite fiber or graphite fibre) are fibers about 5 to 10 micrometers (0.00020–0.00039 in) in diameter and composed mostly of carbon atoms.[1] Carbon fibers have several advantages: high stiffness, high tensile strength, high strength to weight ratio, high chemical resistance, high-temperature tolerance, and low thermal expansion.[2] These properties have made carbon fiber very popular in aerospace, civil engineering, military, motorsports, and other competition sports. However, they are relatively expensive compared to similar fibers, such as glass fiber, basalt fibers, or plastic fibers.[3]

https://en.wikipedia.org/wiki/Carbon_fibers

Glass fiber (or glass fibre) is a material consisting of numerous extremely fine fibers of glass.

https://en.wikipedia.org/wiki/Glass_fiber

Glass wool is an insulating material made from fibres of glass arranged using a binder into a texture similar to wool. The process traps many small pockets of air between the glass, and these small air pockets result in high thermal insulation properties. Glass wool is produced in rolls or in slabs, with different thermal and mechanical properties. It may also be produced as a material that can be sprayed or applied in place, on the surface to be insulated. The modern method for producing glass wool was invented by Games Slayter while he was working at the Owens-Illinois Glass Co. (Toledo, Ohio). He first applied for a patent for a new process to make glass wool in 1933.[1]

https://en.wikipedia.org/wiki/Glass_wool

In polymer chemistry and materials science, resin is a solid or highly viscous substance of plant or synthetic origin that is typically convertible into polymers.[1] Resins are usually mixtures of organic compounds. This article focuses on naturally occurring resins.

Plants secrete resins for their protective benefits in response to injury. The resin protects the plant from insects and pathogens.[2] Resins confound a wide range of herbivores, insects, and pathogens, while the volatile phenolic compounds may attract benefactors such as parasitoids or predators of the herbivores that attack the plant.[3]

https://en.wikipedia.org/wiki/Resin

Calendering of textiles is a finishing process used to smooth, coat, or thin a material. With textiles, fabric is passed between calender rollers at high temperatures and pressures. Calendering is used on fabrics such as moiré to produce its watered effect and also on cambric and some types of sateens.

https://en.wikipedia.org/wiki/Calendering_(textiles)

Coal is a combustible black or brownish-black sedimentary rock, formed as rock strata called coal seams. Coal is mostly carbon with variable amounts of other elements, chiefly hydrogen, sulfur, oxygen, and nitrogen.[1] Coal is formed when dead plant matter decays into peat and is converted into coal by the heat and pressure of deep burial over millions of years.[2] Vast deposits of coal originate in former wetlands—called coal forests—that covered much of the Earth's tropical land areas during the late Carboniferous (Pennsylvanian) and Permian times.[3][4] However, many significant coal deposits are younger than this and originate from the Mesozoic and Cenozoic eras.

Coal is primarily used as a fuel. While coal has been known and used for thousands of years, its usage was limited until the Industrial Revolution. With the invention of the steam engine, coal consumption increased. In 2020 coal supplied about a quarter of the world's primary energy and over a third of its electricity.[5] Some iron and steel making and other industrial processes burn coal.

The extraction and use of coal causes premature deaths and illness.[6] The use of coal damages the environment, and it is the largest anthropogenic source of carbon dioxide contributing to climate change. In 2020, burning coal emitted 14 billion tonnes of carbon dioxide,[7] which is 40% of total fossil fuel emissions[8] and over 25% of total global greenhouse gas emissions.[9] As part of the worldwide energy transition, many countries have reduced or eliminated their use of coal power.[10][11] The UN Secretary General asked governments to stop building new coal plants by 2020.[12] Global coal use peaked in 2013.[13] To meet the Paris Agreement target of keeping global warming to below 2 °C (3.6 °F), coal use needs to halve from 2020 to 2030,[14] and phasing down coal was agreed in the Glasgow Climate Pact.
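Halving coal use over the decade 2020–2030 corresponds to a constant yearly decline $r$ with $(1 - r)^{10} = 1/2$, i.e. $r = 1 - 2^{-1/10} \approx 6.7\%$ per year. The helper below only evaluates that compound-decline arithmetic. It assumes a steady percentage cut each year, which is only one of many possible pathways.

```python
def required_annual_decline(fraction_remaining: float, years: int) -> float:
    """Constant yearly fractional cut r such that (1 - r) ** years == fraction_remaining."""
    if not 0 < fraction_remaining <= 1 or years <= 0:
        raise ValueError("need 0 < fraction_remaining <= 1 and years > 0")
    return 1.0 - fraction_remaining ** (1.0 / years)


rate = required_annual_decline(0.5, 10)  # halve coal use between 2020 and 2030
print(f"about {rate:.1%} less coal each year")
```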

The largest consumer and importer of coal in 2020 was China. China accounts for almost half the world's annual coal production, followed by India with about a tenth. Indonesia and Australia export the most, followed by Russia.[15]

| Coal | |
|---|---|
| Rock type | Sedimentary rock |
| Composition (primary) | carbon |
| Composition (secondary) | hydrogen, sulfur, oxygen, nitrogen |

https://en.wikipedia.org/wiki/Coal

Mining

China today mines by far the largest share of global anthracite production, accounting for more than three-quarters of global output.[7] Most Chinese production is of standard-grade anthracite, which is used in power generation.[citation needed] Increased demand in China has made that country into a net importer of the fuel, mostly from Vietnam, another major producer of anthracite for power generation, although increasing domestic consumption in Vietnam means that exports may be scaled back.[20]

Current U.S. anthracite production averages around five million tons per year. Of that, about 1.8 million tons were mined in the state of Pennsylvania.[21] Mining of anthracite coal continues to this day in eastern Pennsylvania, and contributes up to 1% to the gross state product. More than 2,000 people were employed in the mining of anthracite coal in 1995. Most of the mining as of that date involved reclaiming coal from slag heaps (waste piles from past coal mining) at nearby closed mines. Some underground anthracite coal is also being mined.

Countries producing high-grade (HG) and ultra-high-grade (UHG) anthracite include Russia and South Africa. HG and UHG anthracite are used as a coke or coal substitute in various metallurgical coal applications (sintering, pulverized coal injection (PCI), direct blast furnace (BF) charge, pelletizing). They play an important role in cost reduction in the steelmaking process and are also used in the production of ferroalloys, silicomanganese, calcium carbide and silicon carbide. South Africa exports lower-quality, higher-ash anthracite to Brazil to be used in steelmaking.[citation needed]

https://en.wikipedia.org/wiki/Anthracite

The Carboniferous (/ˌkɑːr.bəˈnɪf.ər.əs/ KAHR-bə-NIF-ər-əs)[6] is a geologic period and system of the Paleozoic that spans 60 million years from the end of the Devonian Period 358.9 million years ago (Mya), to the beginning of the Permian Period, 298.9 million years ago. The name Carboniferous means "coal-bearing", from the Latin carbō ("coal") and ferō ("bear, carry"), and refers to the many coal beds formed globally during that time.[7]

https://en.wikipedia.org/wiki/Carboniferous

Mining is the extraction of valuable minerals or other geological materials from the Earth, usually from an ore body, lode, vein, seam, reef, or placer deposit. Exploitation of these deposits for raw material is based on the economic viability of investing in the equipment, labor, and energy required to extract, refine and transport the materials found at the mine to manufacturers who can use the material.

Ores recovered by mining include metals, coal, oil shale, gemstones, limestone, chalk, dimension stone, rock salt, potash, gravel, and clay. Mining is required to obtain most materials that cannot be grown through agricultural processes, or feasibly created artificially in a laboratory or factory. Mining in a wider sense includes extraction of any non-renewable resource such as petroleum, natural gas, or even water. Modern mining processes involve prospecting for ore bodies, analysis of the profit potential of a proposed mine, extraction of the desired materials, and final reclamation of the land after the mine is closed.[1]

https://en.wikipedia.org/wiki/Mining

De re metallica (Latin for On the Nature of Metals [Minerals]) is a book in Latin cataloguing the state of the art of mining, refining, and smelting metals, published a year posthumously in 1556 due to a delay in preparing woodcuts for the text. The author was Georg Bauer, whose pen name was the Latinized Georgius Agricola ("Bauer" and "Agricola" being respectively the German and Latin words for "farmer"). The book remained the authoritative text on mining for 180 years after its publication. It was also an important chemistry text for the period and is significant in the history of chemistry.[1]

(Image: title page of the 1561 edition)

| De re metallica | |
|---|---|
| Author | Georgius Agricola |
| Translator | Herbert Hoover, Lou Henry Hoover |
| Publication date | 1556 |
| Published in English | 1912 |
| ISBN | 0-486-60006-8 |
| OCLC | 34181557 |

https://en.wikipedia.org/wiki/De_re_metallica

A solid-state drive (SSD) is a solid-state storage device that uses integrated circuit assemblies to store data persistently, typically using flash memory, and functioning as secondary storage in the hierarchy of computer storage. It is also sometimes called a semiconductor storage device, a solid-state device or a solid-state disk,[1] even though SSDs lack the physical spinning disks and movable read–write heads used in hard disk drives (HDDs) and floppy disks.[2]

https://en.wikipedia.org/wiki/Solid-state_drive

A charge-coupled device (CCD) is an integrated circuit containing an array of linked, or coupled, capacitors. Under the control of an external circuit, each capacitor can transfer its electric charge to a neighboring capacitor. CCD sensors are a major technology used in digital imaging.
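The charge transfer between neighboring capacitors can be pictured as a bucket brigade. The sketch below simulates reading out one register. It assumes perfect charge-transfer efficiency and one packet read per clock. Real CCDs use multi-phase clocking and lose a small fraction of charge at each transfer. The charge values are hypothetical.

```python
def ccd_readout(register):
    """Read out a CCD register by repeatedly shifting charge packets.

    Assumes perfect charge-transfer efficiency. On each clock, the packet in
    the well nearest the output is sampled, then every remaining packet moves
    one well closer to the output and an empty well enters at the far end.
    Returns the packets in the order they reach the output amplifier.
    """
    wells = list(register)
    samples = []
    for _ in range(len(wells)):
        samples.append(wells[-1])      # well at the end of the register is read
        wells = [0] + wells[:-1]       # shift every packet one well toward the output
    return samples


# Hypothetical charges (electrons) in a 5-pixel register; the last pixel is next to the output.
print(ccd_readout([120, 340, 90, 0, 510]))
```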

In a CCD image sensor, pixels are represented by p-doped metal–oxide–semiconductor (MOS) capacitors. These MOS capacitors, the basic building blocks of a CCD,[1] are biased above the threshold for inversion when image acquisition begins, allowing the conversion of incoming photons into electron charges at the semiconductor-oxide interface; the CCD is then used to read out these charges. Although CCDs are not the only technology to allow for light detection, CCD image sensors are widely used in professional, medical, and scientific applications where high-quality image data are required. In applications with less exacting quality demands, such as consumer and professional digital cameras, active pixel sensors, also known as CMOS sensors (complementary MOS sensors), are generally used. However, the large quality advantage CCDs enjoyed early on has narrowed over time, and since the late 2010s CMOS sensors have been the dominant technology, having largely if not completely replaced CCD image sensors.

https://en.wikipedia.org/wiki/Charge-coupled_device

The metal–oxide–semiconductor field-effect transistor (MOSFET, MOS-FET, or MOS FET), also known as the metal–oxide–silicon transistor (MOS transistor, or MOS),[1] is a type of insulated-gate field-effect transistor that is fabricated by the controlled oxidation of a semiconductor, typically silicon. The voltage of the gate terminal determines the electrical conductivity of the device; this ability to change conductivity with the amount of applied voltage can be used for amplifying or switching electronic signals.

A key advantage of a MOSFET is that it requires almost no input current to control the load current, compared with bipolar junction transistors (BJTs). In an enhancement mode MOSFET, voltage applied to the gate terminal can increase the conductivity from the "normally off" state. In a depletion mode MOSFET, voltage applied at the gate can reduce the conductivity from the "normally on" state.[5] MOSFETs are also highly scalable and can readily be miniaturized to smaller dimensions.
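The gate-voltage control described above is often first approximated with the long-channel square-law model of an n-channel enhancement-mode device. Below the threshold voltage there is no drain current (the "normally off" state). At small drain voltage the device is in the triode region, and beyond that it saturates. The threshold voltage and transconductance parameter below are hypothetical. The model ignores short-channel effects that dominate in modern scaled devices.

```python
def nmos_drain_current(v_gs: float, v_ds: float,
                       v_th: float = 0.7, k_n: float = 2e-3) -> float:
    """Long-channel square-law drain current (A) of an n-channel enhancement MOSFET.

    k_n = mu_n * C_ox * W / L in A/V^2. Defaults are hypothetical; v_ds >= 0 assumed.
    """
    v_ov = v_gs - v_th                      # gate overdrive voltage
    if v_ov <= 0:
        return 0.0                          # cutoff: the "normally off" state
    if v_ds < v_ov:
        return k_n * (v_ov * v_ds - 0.5 * v_ds ** 2)   # triode (linear) region
    return 0.5 * k_n * v_ov ** 2            # saturation


for v_gs in (0.5, 1.0, 1.5, 2.0):
    i_d = nmos_drain_current(v_gs, v_ds=2.0)
    print(f"V_GS = {v_gs:.1f} V -> I_D = {i_d * 1e3:.3f} mA")
```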


https://en.wikipedia.org/wiki/MOSFET

A metal gate, in the context of a lateral metal–oxide–semiconductor (MOS) stack, is the gate electrode separated by an oxide from the transistor's channel; the gate material is made from a metal. In most MOS transistors since about the mid-1970s, the "M" for metal has been replaced by a non-metal gate material.

Aluminum gate

The first MOSFET (metal–oxide–semiconductor field-effect transistor) was made by Mohamed Atalla and Dawon Kahng at Bell Labs in 1959, and demonstrated in 1960.[1] They used silicon as channel material and a non-self-aligned aluminum gate.[2] Aluminum gate metal (typically deposited in an evaporation vacuum chamber onto the wafer surface) was common through the early 1970s.

Polysilicon

By the late 1970s, the industry had moved away from aluminum as the gate material in the metal–oxide–semiconductor stack due to fabrication complications and performance issues.[citation needed] A material called polysilicon (polycrystalline silicon, highly doped with donors or acceptors to reduce its electrical resistance) was used to replace aluminum.

Polysilicon can be deposited easily via chemical vapor deposition (CVD) and tolerates subsequent manufacturing steps involving extremely high temperatures (in excess of 900–1000 °C), which metal does not. In particular, metal (most commonly aluminum, a group III (p-type) dopant) tends to disperse into (alloy with) silicon during these thermal annealing steps.[citation needed] When used on a silicon wafer with a ⟨111⟩ crystal orientation, excessive alloying of aluminum (from extended high-temperature processing steps) with the underlying silicon can create a short circuit between the diffused FET source or drain areas under the aluminum and across the metallurgical junction into the underlying substrate, causing irreparable circuit failures. These shorts are created by pyramidal-shaped spikes of silicon-aluminum alloy pointing vertically "down" into the silicon wafer. The practical high-temperature limit for annealing aluminum on silicon is on the order of 450 °C. Polysilicon is also attractive for the easy manufacturing of self-aligned gates. The implantation or diffusion of source and drain dopant impurities is

https://en.wikipedia.org/wiki/Metal_gate

In semiconductor electronics fabrication technology, a self-aligned gate is a transistor manufacturing feature whereby the gate electrode of a MOSFET (metal–oxide–semiconductor field-effect transistor) is used as a mask for the doping of the source and drain regions. This technique ensures that the gate is naturally and precisely aligned to the edges of the source and drain.

The use of self-aligned gates in MOS transistors is one of the key innovations that led to the large increase in computing power in the 1970s. Self-aligned gates are still used in most modern integrated circuit processes.
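One way to see why self-alignment matters is to compare edge placement with and without it. In the first case below, the source and drain are defined by their own mask and the gate is then patterned with an alignment error. This leaves an ungated gap at one edge and a gate-to-drain overlap at the other. When the gate itself serves as the implant mask, the diffusion edges follow the gate wherever it lands, so both mismatches are zero. The layout dimensions (in micrometers) and function names are hypothetical.

```python
def edge_mismatch(gate, source_edge, drain_edge):
    """Return (source_gap, drain_gap) in the layout's length unit.

    gate is (start, end). A positive value is an ungated gap between the
    diffusion edge and the gate; a negative value is a gate/diffusion overlap.
    """
    gate_start, gate_end = gate
    return gate_start - source_edge, drain_edge - gate_end


def self_aligned_diffusion_edges(gate):
    """Gate used as the implant mask: doping stops exactly at the gate edges."""
    gate_start, gate_end = gate
    return gate_start, gate_end


# Hypothetical layout (micrometers): diffusions drawn to meet a gate at 10-15,
# but the gate lands 0.5 um to the right.
misaligned_gate = (10.5, 15.5)
print(edge_mismatch(misaligned_gate, 10.0, 15.0))   # separately masked diffusions
print(edge_mismatch(misaligned_gate, *self_aligned_diffusion_edges(misaligned_gate)))
```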

https://en.wikipedia.org/wiki/Self-aligned_gate

Carbon dioxide (chemical formula CO2) is a chemical compound occurring as an acidic colorless gas with a density about 53% higher than that of dry air. Carbon dioxide molecules consist of a carbon atom covalently double bonded to two oxygen atoms. It occurs naturally in Earth's atmosphere as a trace gas. The current concentration is about 0.04% (412 ppm) by volume, having risen from pre-industrial levels of 280 ppm.[9][10] Natural sources include volcanoes, forest fires, hot springs, geysers, and it is freed from carbonate rocks by dissolution in water and acids. Because carbon dioxide is soluble in water, it occurs naturally in groundwater, rivers and lakes, ice caps, glaciers and seawater. It is present in deposits of petroleum and natural gas. Carbon dioxide has a sharp and acidic odor and generates the taste of soda water in the mouth.[11] However, at normally encountered concentrations it is odorless.[1]
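The concentration figures convert directly. 1% by volume is 10,000 ppm, so 412 ppm is about 0.04%. The rise from 280 ppm is roughly 47% relative to the pre-industrial level. The snippet only restates that arithmetic using the figures quoted above.

```python
def ppm_to_percent(ppm: float) -> float:
    """Parts per million by volume -> percent by volume (1% = 10,000 ppm)."""
    return ppm / 10_000


pre_industrial_ppm = 280
current_ppm = 412
relative_rise = (current_ppm - pre_industrial_ppm) / pre_industrial_ppm
print(f"{current_ppm} ppm = {ppm_to_percent(current_ppm):.4f}%")
print(f"rise since pre-industrial: {relative_rise:.0%}")
```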

https://en.wikipedia.org/wiki/Carbon_dioxide#In_Earth's_atmosphere

In chemistry, triiodide usually refers to the triiodide ion, $\mathrm{I}_3^-$. This anion, one of the polyhalogen ions, is composed of three iodine atoms. It is formed by combining aqueous solutions of iodide salts and iodine. Some salts of the anion have been isolated, including thallium(I) triiodide ($\mathrm{Tl^+[I_3]^-}$) and ammonium triiodide ($\mathrm{[NH_4]^+[I_3]^-}$). Triiodide is observed to be a red colour in solution.[1]

https://en.wikipedia.org/wiki/Triiodide

The trihydrogen cation or protonated molecular hydrogen is a cation (positive ion) with formula $\mathrm{H}_3^+$, consisting of three hydrogen nuclei (protons) sharing two electrons.

The trihydrogen cation is one of the most abundant ions in the universe. It is stable in the interstellar medium (ISM) due to the low temperature and low density of interstellar space. The role that $\mathrm{H}_3^+$ plays in the gas-phase chemistry of the ISM is unparalleled by any other molecular ion.

The trihydrogen cation is the simplest triatomic molecule, because its two electrons are the only valence electrons in the system. It is also the simplest example of a three-center two-electron bond system.

https://en.wikipedia.org/wiki/Trihydrogen_cation

Phosphorous acid (or phosphonic acid (singular)) is the compound described by the formula H3PO3. This acid is diprotic (readily ionizes two protons), not triprotic as might be suggested by this formula. Phosphorous acid is an intermediate in the preparation of other phosphorus compounds. Organic derivatives of phosphorous acid, compounds with the formula RPO3H2, are called phosphonic acids.

https://en.wikipedia.org/wiki/Phosphorous_acid

Phosphonates or phosphonic acids are organophosphorus compounds containing C−PO(OH)2 or C−PO(OR)2 groups (where R = alkyl, aryl). Phosphonic acids, typically handled as salts, are generally nonvolatile solids that are poorly soluble in organic solvents, but soluble in water and common alcohols. Many commercially important compounds are phosphonates, including glyphosate (the active molecule of the herbicide "Roundup"), and ethephon, a widely used plant growth regulator. Bisphosphonates are popular drugs for treatment of osteoporosis.[1]

In biology and medicinal chemistry, phosphonate groups are used as stable bioisosteres for phosphate, such as in the antiviral nucleotide analog tenofovir, one of the cornerstones of anti-HIV therapy. There is also some indication that phosphonate derivatives are "promising ligands for nuclear medicine."[2]

https://en.wikipedia.org/wiki/Phosphonate

Potassium-40 ($^{40}$K) is a radioactive isotope of potassium which has a long half-life of $1.251\times10^{9}$ years. It makes up 0.012% (120 ppm) of the total amount of potassium found in nature.

Potassium-40 is a rare example of an isotope that undergoes both types of beta decay. In about 89.28% of events, it decays to calcium-40 ($^{40}$Ca) with emission of a beta particle (β−, an electron) with a maximum energy of 1.31 MeV and an antineutrino. In about 10.72% of events, it decays to argon-40 ($^{40}$Ar) by electron capture (EC), with the emission of a neutrino and then a 1.460 MeV gamma ray.[1] The radioactive decay of this particular isotope explains the large abundance of argon (nearly 1%) in the Earth's atmosphere, as well as the prevalence of $^{40}$Ar over other isotopes. Very rarely (0.001% of events), it decays to $^{40}$Ar by emitting a positron (β+) and a neutrino.[2]
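The half-life and branching ratios above combine in the usual exponential-decay law, $N(t) = N_0\,2^{-t/t_{1/2}}$, with about 10.72% of decays producing $^{40}$Ar. The sketch below neglects the tiny positron branch. The 4.5-billion-year timescale in the example is an assumed, approximate age of the Earth, used only for illustration.

```python
HALF_LIFE_YEARS = 1.251e9
BRANCH_TO_AR40 = 0.1072   # electron capture; the ~0.001% beta-plus branch is neglected
BRANCH_TO_CA40 = 0.8928   # beta-minus


def fraction_remaining(t_years: float) -> float:
    """Fraction of an initial 40K population left after t_years."""
    return 0.5 ** (t_years / HALF_LIFE_YEARS)


def decay_products(initial_atoms: float, t_years: float) -> tuple[float, float]:
    """Expected numbers of 40Ar and 40Ca atoms produced after t_years."""
    decayed = initial_atoms * (1.0 - fraction_remaining(t_years))
    return decayed * BRANCH_TO_AR40, decayed * BRANCH_TO_CA40


print(f"{fraction_remaining(4.5e9):.3f} of the original 40K remains after 4.5 Gyr")
ar, ca = decay_products(1_000_000, HALF_LIFE_YEARS)
print(f"after one half-life: {ar:.0f} Ar-40 and {ca:.0f} Ca-40 per million initial atoms")
```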

https://en.wikipedia.org/wiki/Potassium-40

Phosphorus is a chemical element with the symbol P and atomic number 15. Elemental phosphorus exists in two major forms, white phosphorus and red phosphorus, but because it is highly reactive, phosphorus is never found as a free element on Earth. It has a concentration in the Earth's crust of about one gram per kilogram (compare copper at about 0.06 grams). In minerals, phosphorus generally occurs as phosphate.

https://en.wikipedia.org/wiki/Phosphorus

Hydrogen is the chemical element with the symbol H and atomic number 1. Hydrogen is the lightest element. At standard conditions hydrogen is a gas of diatomic molecules having the formula H₂. It is colorless, odorless, tasteless,[8] non-toxic, and highly combustible. Hydrogen is the most abundant chemical substance in the universe, constituting roughly 75% of all normal matter.[9][note 1] Stars such as the Sun are mainly composed of hydrogen in the plasma state. Most of the hydrogen on Earth exists in molecular forms such as water and organic compounds. For the most common isotope of hydrogen (symbol ¹H) each atom has one proton, one electron, and no neutrons.

https://en.wikipedia.org/wiki/Hydrogen

Sodium is a chemical element with the symbol Na (from Latin natrium) and atomic number 11. It is a soft, silvery-white, highly reactive metal. Sodium is an alkali metal, being in group 1 of the periodic table. Its only stable isotope is ²³Na. The free metal does not occur in nature, and must be prepared from compounds. Sodium is the sixth most abundant element in the Earth's crust and exists in numerous minerals such as feldspars, sodalite, and rock salt (NaCl). Many salts of sodium are highly water-soluble: sodium ions have been leached by the action of water from the Earth's minerals over eons, and thus sodium and chlorine are the most common dissolved elements by weight in the oceans.

https://en.wikipedia.org/wiki/Sodium

Saline water (more commonly known as salt water) is water that contains a high concentration of dissolved salts (mainly sodium chloride). The salt concentration is usually expressed in parts per thousand (permille, ‰) or parts per million (ppm). The United States Geological Survey classifies saline water in three salinity categories. Salt concentration in slightly saline water is around 1,000 to 3,000 ppm (0.1–0.3%), in moderately saline water 3,000 to 10,000 ppm (0.3–1%) and in highly saline water 10,000 to 35,000 ppm (1–3.5%). Seawater has a salinity of roughly 35,000 ppm, equivalent to 35 grams of salt per liter (or kilogram) of water. The saturation level is only nominally dependent on the temperature of the water.[1] At 20 °C one liter of water can dissolve about 357 grams of salt, a concentration of 26.3% w/w. At boiling (100 °C) the amount that can be dissolved in one liter of water increases to about 391 grams, a concentration of 28.1% w/w.
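The USGS ranges and the w/w figures above can be reproduced with two small helpers. This is a sketch, assuming that each range includes its lower bound, that the top range includes 35,000 ppm, and that one liter of water weighs about 1,000 g; the function names and the labels for values outside the three categories are hypothetical. The w/w percentage divides the salt mass by the total mass of the solution (salt plus water), which is how 357 g in 1,000 g of water gives 26.3%.

```python
def usgs_salinity_class(ppm: float) -> str:
    """Classify water by dissolved-salt concentration (ppm) using the USGS ranges.

    Assumption: each range includes its lower bound; the top range includes 35,000 ppm.
    """
    if ppm < 1_000:
        return "below saline range"
    if ppm < 3_000:
        return "slightly saline"
    if ppm < 10_000:
        return "moderately saline"
    if ppm <= 35_000:
        return "highly saline"
    return "above highly saline range"


def mass_fraction(salt_g: float, water_g: float) -> float:
    """Concentration w/w: salt mass divided by total solution mass."""
    return salt_g / (salt_g + water_g)


if __name__ == "__main__":
    print(usgs_salinity_class(35_000))  # seawater -> highly saline
    # Assumption: one liter of water is treated as 1,000 g.
    print(f"{mass_fraction(357, 1_000):.1%}")  # saturated at 20 °C -> 26.3%
    print(f"{mass_fraction(391, 1_000):.1%}")  # saturated at 100 °C -> 28.1%
```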

https://en.wikipedia.org/wiki/Saline_water

Seawater, or salt water, is water from a sea or ocean. On average, seawater in the world's oceans has a salinity of about 3.5% (35 g/l, 35 ppt, 600 mM). This means that every kilogram (roughly one liter by volume) of seawater has approximately 35 grams (1.2 oz) of dissolved salts (predominantly sodium (Na⁺) and chloride (Cl⁻) ions). Average density at the surface is 1.025 kg/l. Seawater is denser than both fresh water and pure water (density 1.0 kg/l at 4 °C (39 °F)) because the dissolved salts increase the mass by a larger proportion than the volume. The freezing point of seawater decreases as salt concentration increases. At typical salinity, it freezes at about −2 °C (28 °F).[1] The coldest seawater still in the liquid state ever recorded was found in 2010, in a stream under an Antarctic glacier: the measured temperature was −2.6 °C (27.3 °F).[2] Seawater pH is typically limited to a range between 7.5 and 8.4.[3] However, there is no universally accepted reference pH-scale for seawater and the difference between measurements based on different reference scales may be up to 0.14 units.[4]
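The 600 mM figure can be roughly checked from the 35 g/l salinity. This sketch assumes, as a simplification, that all dissolved salt is sodium chloride (molar mass about 58.44 g/mol) and that one kilogram of seawater occupies about one liter; real seawater contains other ions, so the result is only an order-of-magnitude check. The function name `millimolar` is hypothetical.

```python
# Assumption: all dissolved salt is treated as NaCl.
NACL_MOLAR_MASS_G_PER_MOL = 58.44


def millimolar(grams_per_liter: float, molar_mass_g_per_mol: float) -> float:
    """Convert a mass concentration (g/L) to a molar concentration (mM)."""
    return grams_per_liter / molar_mass_g_per_mol * 1_000


if __name__ == "__main__":
    # 35 g of salt per kilogram of seawater, taking 1 kg as roughly 1 L.
    print(f"{millimolar(35, NACL_MOLAR_MASS_G_PER_MOL):.0f} mM")  # 599 mM
```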

https://en.wikipedia.org/wiki/Seawater

Dry ice is the solid form of carbon dioxide. It is commonly used because, at normal atmospheric pressure, carbon dioxide has no liquid state and sublimates directly from the solid state to the gas state. It is used primarily as a cooling agent, but also in fog machines at theatres for dramatic effects. Its advantages include a lower temperature than that of water ice and leaving no residue (other than incidental frost from moisture in the atmosphere). It is useful for preserving frozen foods (such as ice cream) where mechanical cooling is unavailable.

Dry ice sublimates at 194.7 K (−78.5 °C; −109.2 °F) at Earth's atmospheric pressure. This extreme cold makes the solid dangerous to handle without protection from frostbite injury. Although dry ice is generally not very toxic, the gas released from it can cause hypercapnia (abnormally elevated carbon dioxide levels in the blood) when it builds up in confined spaces.

https://en.wikipedia.org/wiki/Dry_ice

The atmosphere of Earth, commonly known as air, is the layer of gases retained by Earth's gravity that surrounds the planet and forms its planetary atmosphere. The atmosphere of Earth protects life on Earth by creating pressure allowing for liquid water to exist on the Earth's surface, absorbing ultraviolet solar radiation, warming the surface through heat retention (greenhouse effect), and reducing temperature extremes between day and night (the diurnal temperature variation).

https://en.wikipedia.org/wiki/Atmosphere_of_Earth

https://en.wikipedia.org/w/index.php?title=Air&redirect=no

https://en.wikipedia.org/wiki/Cumulonimbus_cloud

Ozone depletion consists of two related events observed since the late 1970s: a steady lowering of about four percent in the total amount of ozone in Earth's atmosphere, and a much larger springtime decrease in stratospheric ozone (the ozone layer) around Earth's polar regions.[1] The latter phenomenon is referred to as the ozone hole. There are also springtime polar tropospheric ozone depletion events in addition to these stratospheric events.

https://en.wikipedia.org/wiki/Ozone_depletion

https://en.wikipedia.org/wiki/Acid_rain

A mirror is an object that reflects an image. Light that bounces off a mirror will show an image of whatever is in front of it, when focused through the lens of the eye or a camera. A mirror sends light back at an angle equal and opposite to the angle at which the light strikes it. This allows the viewer to see themselves or objects behind them, or even objects that are at an angle from them but out of their field of view, such as around a corner. Natural mirrors, such as the surface of water, have existed since prehistoric times, and people have been manufacturing mirrors for thousands of years from a variety of materials, such as stone, metals, and glass. In modern mirrors, metals like silver or aluminum are often used due to their high reflectivity, applied as a thin coating on glass because of its naturally smooth and very hard surface.

https://en.wikipedia.org/wiki/Mirror

Specular reflection, or regular reflection, is the mirror-like reflection of waves, such as light, from a surface.[1]

The law of reflection states that a reflected ray of light emerges from the reflecting surface at the same angle to the surface normal as the incident ray, but on the opposing side of the surface normal in the plane formed by the incident and reflected rays. This behavior was first described by Hero of Alexandria (AD c. 10–70).[2]

Specular reflection may be contrasted with diffuse reflection, in which light is scattered away from the surface in a range of directions.
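The law of reflection can be written in vector form: for an incoming direction d and a unit surface normal n, the reflected direction is r = d − 2(d·n)n. This vector formula is a standard result rather than something stated above, and the helper names are hypothetical. The sketch assumes n has unit length and checks that the incident and reflected rays make the same angle with the normal.

```python
import math

Vec = tuple[float, float, float]


def dot(a: Vec, b: Vec) -> float:
    return sum(x * y for x, y in zip(a, b))


def reflect(d: Vec, n: Vec) -> Vec:
    """Reflect direction d off a surface with unit normal n: r = d - 2 (d·n) n."""
    k = 2 * dot(d, n)
    return tuple(di - k * ni for di, ni in zip(d, n))


def angle_to_normal(v: Vec, n: Vec) -> float:
    """Angle in degrees between the line of v and the normal n (0 to 90)."""
    c = abs(dot(v, n)) / math.sqrt(dot(v, v) * dot(n, n))
    return math.degrees(math.acos(min(1.0, c)))


if __name__ == "__main__":
    n = (0.0, 0.0, 1.0)    # mirror lying in the xy-plane (assumed unit normal)
    d = (1.0, 0.0, -1.0)   # ray travelling downward at 45°
    r = reflect(d, n)
    print(r)               # (1.0, 0.0, 1.0)
    print(angle_to_normal(d, n), angle_to_normal(r, n))  # both about 45°
```

Only the component of d along the normal is flipped; the component parallel to the surface is kept, which is what produces equal angles on opposite sides of the normal.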

https://en.wikipedia.org/wiki/Specular_reflection

In describing reflection and refraction in optics, the plane of incidence (also called the incidence plane or the meridional plane[citation needed]) is the plane which contains the surface normal and the propagation vector of the incoming radiation.[1] (In wave optics, the latter is the k-vector, or wavevector, of the incoming wave.)

When reflection is specular, as it is for a mirror or other shiny surface, the reflected ray also lies in the plane of incidence; when refraction also occurs, the refracted ray lies in the same plane. The condition of co-planarity among incident ray, surface normal, and reflected ray (refracted ray) is known as the first law of reflection (first law of refraction, respectively).[2]
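The first law of reflection (co-planarity) can be tested numerically: three vectors lie in one plane exactly when their scalar triple product a·(b×c) is zero, and the cross product of the incident direction with the normal gives a normal to the plane of incidence. This is a sketch using standard vector identities; the function names and the fixed tolerance (which assumes vectors of roughly unit length) are hypothetical choices.

```python
Vec = tuple[float, float, float]


def dot(a: Vec, b: Vec) -> float:
    return a[0] * b[0] + a[1] * b[1] + a[2] * b[2]


def cross(a: Vec, b: Vec) -> Vec:
    return (
        a[1] * b[2] - a[2] * b[1],
        a[2] * b[0] - a[0] * b[2],
        a[0] * b[1] - a[1] * b[0],
    )


def coplanar(a: Vec, b: Vec, c: Vec, tol: float = 1e-12) -> bool:
    """True if a, b, c lie in one plane: the scalar triple product a·(b×c) is ~0.

    The fixed tolerance assumes vectors of roughly unit length.
    """
    return abs(dot(a, cross(b, c))) <= tol


if __name__ == "__main__":
    incident = (1.0, 0.0, -1.0)
    normal = (0.0, 0.0, 1.0)
    reflected = (1.0, 0.0, 1.0)  # specular reflection of `incident` about `normal`
    stray = (0.0, 1.0, 1.0)      # a direction outside the plane of incidence
    print(coplanar(incident, normal, reflected))  # True
    print(coplanar(incident, normal, stray))      # False
    print(cross(incident, normal))  # normal to the plane of incidence: (0.0, -1.0, 0.0)
```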

In optics, the corpuscular theory of light, arguably put forward by Descartes in 1637, states that light is made up of small discrete particles called "corpuscles" (little particles) which travel in a straight line with a finite velocity and possess impetus. This was based on an alternate description of atomism of the time period.

Isaac Newton was a pioneer of this theory; he notably elaborated upon it in 1672. This early conception of the particle theory of light was a forerunner of the modern understanding of the photon. This theory cannot explain refraction, diffraction and interference, which require an understanding of the wave theory of light of Christiaan Huygens.

https://en.wikipedia.org/wiki/Corpuscular_theory_of_light

In physics, mirror matter, also called shadow matter or Alice matter, is a hypothetical counterpart to ordinary matter.[1]

https://en.wikipedia.org/wiki/Mirror_matter

In quantum mechanics, a parity transformation (also called parity inversion) is the flip in the sign of one spatial coordinate. In three dimensions, it can also refer to the simultaneous flip in the sign of all three spatial coordinates (a point reflection):

$$\mathbf{P}: \begin{pmatrix} x \\ y \\ z \end{pmatrix} \mapsto \begin{pmatrix} -x \\ -y \\ -z \end{pmatrix}$$

It can also be thought of as a test for chirality of a physical phenomenon, in that a parity inversion transforms a phenomenon into its mirror image. All fundamental interactions of elementary particles, with the exception of the weak interaction, are symmetric under parity. The weak interaction is chiral and thus provides a means for probing chirality in physics. In interactions that are symmetric under parity, such as electromagnetism in atomic and molecular physics, parity serves as a powerful controlling principle underlying quantum transitions.

A matrix representation of P (in any number of dimensions) has determinant equal to −1, and hence is distinct from a rotation, which has a determinant equal to 1. In a two-dimensional plane, a simultaneous flip of all coordinates in sign is not a parity transformation; it is the same as a 180°-rotation.
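The determinant argument above can be checked directly on small matrices: flipping one coordinate or all three in 3D gives determinant −1, while flipping both coordinates in 2D gives +1 and coincides with the rotation matrix for 180°. The matrix names and helpers below are hypothetical, written as a plain-Python sketch without external libraries.

```python
import math

Matrix = list[list[float]]


def det2(m: Matrix) -> float:
    return m[0][0] * m[1][1] - m[0][1] * m[1][0]


def det3(m: Matrix) -> float:
    # Cofactor expansion along the first row.
    return (
        m[0][0] * (m[1][1] * m[2][2] - m[1][2] * m[2][1])
        - m[0][1] * (m[1][0] * m[2][2] - m[1][2] * m[2][0])
        + m[0][2] * (m[1][0] * m[2][1] - m[1][1] * m[2][0])
    )


def rotation2(theta: float) -> Matrix:
    """2D rotation by angle theta (radians)."""
    c, s = math.cos(theta), math.sin(theta)
    return [[c, -s], [s, c]]


def same_matrix(a: Matrix, b: Matrix, tol: float = 1e-12) -> bool:
    return all(
        math.isclose(x, y, abs_tol=tol) for ra, rb in zip(a, b) for x, y in zip(ra, rb)
    )


FLIP_X = [[-1, 0, 0], [0, 1, 0], [0, 0, 1]]                # flip one coordinate
POINT_REFLECTION_3D = [[-1, 0, 0], [0, -1, 0], [0, 0, -1]]  # flip all three
FLIP_BOTH_2D = [[-1, 0], [0, -1]]                           # flip both in a plane

if __name__ == "__main__":
    print(det3(FLIP_X))               # -1
    print(det3(POINT_REFLECTION_3D))  # -1
    print(det2(FLIP_BOTH_2D))         # 1
    print(same_matrix(FLIP_BOTH_2D, rotation2(math.pi)))  # True
```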

In quantum mechanics, wave functions that are unchanged by a parity transformation are described as even functions, while those that change sign under a parity transformation are odd functions.
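In one dimension, parity maps x to −x, so a wave function ψ is even if ψ(−x) = ψ(x) and odd if ψ(−x) = −ψ(x). The sketch below classifies a function by comparing these values at sample points; sampling can only suggest parity, not prove it. The example functions (a Gaussian, and a Gaussian multiplied by x) and the helper name `parity` are hypothetical illustrations.

```python
import math
from typing import Callable, Optional, Sequence


def parity(
    f: Callable[[float], float],
    xs: Optional[Sequence[float]] = None,
    tol: float = 1e-12,
) -> str:
    """Classify f as 'even', 'odd', or 'neither' by comparing f(-x) with f(x).

    Only the sampled points are checked, so this suggests rather than proves parity.
    """
    if xs is None:
        xs = [0.1 * k for k in range(1, 51)]  # sample points 0.1 to 5.0
    if all(abs(f(-x) - f(x)) <= tol for x in xs):
        return "even"
    if all(abs(f(-x) + f(x)) <= tol for x in xs):
        return "odd"
    return "neither"


def gaussian(x: float) -> float:
    return math.exp(-x * x)


def x_times_gaussian(x: float) -> float:
    return x * math.exp(-x * x)


def shifted_gaussian(x: float) -> float:
    return math.exp(-(x - 1.0) ** 2)


if __name__ == "__main__":
    print(parity(gaussian))          # even: unchanged under x -> -x
    print(parity(x_times_gaussian))  # odd: changes sign under x -> -x
    print(parity(shifted_gaussian))  # neither
```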

https://en.wikipedia.org/wiki/Parity_(physics)

Certain epoxy resins and their processes can create a hermetic bond to copper, brass, stainless steel, specialty alloys, plastic, or epoxy itself with similar coefficients of thermal expansion, and are used in the manufacture of hermetic electrical and fiber-optic seals. Because they require only minimal spacing between electrical conductors, epoxy-based seals can increase signal density within a feedthrough design compared with other technologies. Epoxy hermetic seal designs can be used for low or high vacuum or pressure applications, effectively sealing gases or fluids, including helium, to very low leak rates similar to those of glass or ceramic seals. Hermetic epoxy seals also offer the design flexibility of sealing copper alloy wires or pins instead of the much less electrically conductive Kovar pins required in glass or ceramic hermetic seals. With a typical operating temperature range of −70 °C to +125 °C or 150 °C, epoxy hermetic seals are more limited than glass or ceramic seals, although some hermetic epoxy designs can withstand 200 °C.[2]

https://en.wikipedia.org/wiki/Hermetic_seal

https://en.wikipedia.org/wiki/Oxygen_transmission_rate

https://en.wikipedia.org/wiki/Lubricant

https://en.wikipedia.org/wiki/Solvent

https://en.wikipedia.org/wiki/Proton

https://en.wikipedia.org/wiki/Phonon

https://en.wikipedia.org/wiki/Igor_Tamm

https://en.wikipedia.org/wiki/Condensed_matter_physics

https://en.wikipedia.org/wiki/Clock_generator

https://en.wikipedia.org/wiki/Vacuum

See also

- Zero-point energy, the minimum energy a quantum mechanical system may have

- Zero-point field, a synonym for the vacuum state in quantum field theory

- Hofstadter zero-point, a special point associated with every plane triangle

- Point of origin (disambiguation)

- Triple zero (disambiguation)

- Point Zero (disambiguation)

https://en.wikipedia.org/wiki/Zero_point

https://en.wikipedia.org/wiki/Nucleic_acid_analogue

radioligand

phosphor rad trans

hydrogen oxygen ozone trihydrocat hydrag prop hydrazine proton phonon pholton mirror matter vacuum dark matter plane formsalt salt water air atmosphere water triangle triplet material rock iodine triiodine cationcyclic triatom hydro PH4 hydro solvent oxygen lube oxy tri cascade

draft

Energy transformation, also known as energy conversion, is the process of changing energy from one form to another. In physics, energy is a quantity that provides the capacity to perform work (e.g. lifting an object) or provides heat. In addition to being converted, according to the law of conservation of energy, energy is transferable to a different location or object, but it cannot be created or destroyed.
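Taking the lifting example, the work done raising an object is stored as gravitational potential energy (mgh), and if the object is dropped that energy turns into kinetic energy (½mv²) without any being created or destroyed. This sketch assumes standard gravity g = 9.81 m/s², no air resistance, and an illustrative 2 kg mass lifted 1.5 m; the helper names are hypothetical.

```python
import math

G = 9.81  # m/s², assumed standard gravity


def potential_energy(mass_kg: float, height_m: float) -> float:
    """Work needed to lift mass_kg by height_m, stored as potential energy (J)."""
    return mass_kg * G * height_m


def impact_speed(height_m: float) -> float:
    """Speed after falling height_m from rest, ignoring air resistance (m/s)."""
    return math.sqrt(2 * G * height_m)


if __name__ == "__main__":
    m, h = 2.0, 1.5
    stored = potential_energy(m, h)   # energy transferred to the object by lifting
    v = impact_speed(h)
    kinetic = 0.5 * m * v ** 2        # energy at the bottom of the fall
    print(f"{stored:.2f} J stored, {kinetic:.2f} J kinetic at the bottom")
    # prints: 29.43 J stored, 29.43 J kinetic at the bottom
```

The two totals match because the energy only changes form; in a real fall some of it would also be converted to heat by air resistance.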