A retroreflector (sometimes called a retroflector or cataphote) is a device or surface that reflects radiation (usually light) back to its source with minimum scattering. This works over a wide range of angles of incidence, unlike a planar mirror, which does so only if the mirror is exactly perpendicular to the wave front, that is, at zero angle of incidence. Because its reflection is directed, a retroreflector appears brighter than a diffuse reflector. Corner reflectors and cat's eye reflectors are the most commonly used kinds.

https://en.wikipedia.org/wiki/Retroreflector

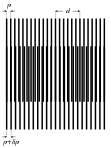

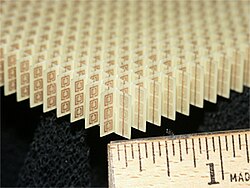

A large relatively thin retroreflector can be formed by combining many small corner reflectors, using the standard hexagonal tiling.

https://en.wikipedia.org/wiki/Retroreflector

Corner reflector

A set of three mutually perpendicular reflective surfaces, placed to form the internal corner of a cube, works as a retroreflector. The three corresponding normal vectors of the corner's sides form a basis (x, y, z) in which to represent the direction of an arbitrary incoming ray, [a, b, c]. When the ray reflects from the first side, say x, the ray's x-component, a, is reversed to −a, while the y- and z-components are unchanged. Therefore, as the ray reflects first from side x, then from side y, and finally from side z, the ray direction goes from [a, b, c] to [−a, b, c] to [−a, −b, c] to [−a, −b, −c], and it leaves the corner with all three components of its direction exactly reversed.
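The component-by-component argument above can be checked numerically. The following is a minimal sketch, assuming ideal mirrors and treating only the ray's direction (not where it strikes each face); the function names `reflect` and `corner_reflect` are illustrative and do not come from the source.

```python
def reflect(direction, normal):
    """Reflect a direction vector off a flat mirror with the given unit normal."""
    dot = sum(d * n for d, n in zip(direction, normal))
    return tuple(d - 2 * dot * n for d, n in zip(direction, normal))


# Unit normals of the three mutually perpendicular sides (the x, y, z basis).
NORMALS = [(1, 0, 0), (0, 1, 0), (0, 0, 1)]


def corner_reflect(direction):
    """Reflect from side x, then side y, then side z."""
    for normal in NORMALS:
        direction = reflect(direction, normal)
    return direction


ray = (0.2, -0.5, 0.8)                # arbitrary incoming direction [a, b, c]
print(reflect(ray, NORMALS[0]))       # single mirror: only the x-component flips
print(corner_reflect(ray))            # corner: all three components flip
```

A single flat mirror reverses only the component along its normal, which is why it returns light to the source only at normal incidence, whereas the three reflections together reverse the whole vector.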

Corner reflectors occur in two varieties. In the more common form, the corner is literally the truncated corner of a cube of transparent material such as conventional optical glass. In this structure, the reflection is achieved either by total internal reflection or silvering of the outer cube surfaces. The second form uses mutually perpendicular flat mirrors bracketing an air space. These two types have similar optical properties.

https://en.wikipedia.org/wiki/Retroreflector

Cat's eye

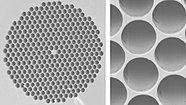

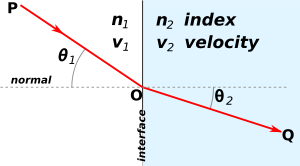

Another common type of retroreflector consists of refracting optical elements with a reflective surface, arranged so that the focal surface of the refractive element coincides with the reflective surface, typically a transparent sphere and (optionally) a spherical mirror. In the paraxial approximation, this effect can be achieved with lowest divergence with a single transparent sphere when the refractive index of the material is exactly one plus the refractive index ni of the medium from which the radiation is incident (ni is around 1 for air). In that case, the sphere surface behaves as a concave spherical mirror with the required curvature for retroreflection. In practice, the optimal index of refraction may be lower than ni + 1 ≅ 2 due to several factors. For one, it is sometimes preferable to have an imperfect, slightly divergent retroreflection, as in the case of road signs, where the illumination and observation angles are different. Due to spherical aberration, there also exists a radius from the centerline at which incident rays are focused at the center of the rear surface of the sphere. Finally, high index materials have higher Fresnel reflection coefficients, so the efficiency of coupling of the light from the ambient into the sphere decreases as the index becomes higher. Commercial retroreflective beads thus vary in index from around 1.5 (common forms of glass) up to around 1.9 (commonly barium titanate glass).
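For a sphere in air (ni ≈ 1), the paraxial condition described above can be illustrated with the standard thick-lens result for a ball lens, which is not stated in the source and is assumed here: the effective focal length measured from the sphere's centre is f = nR / (2(n − 1)), so the focus lies f − R behind the rear surface. The sketch below ignores spherical aberration and Fresnel losses, and the function name is hypothetical.

```python
def ball_lens_back_focal_length(n, radius=1.0):
    """Paraxial distance from the rear surface of a sphere in air to its focus.

    Assumes a sphere of refractive index n and the given radius, surrounded
    by air (index taken as 1). A result of zero means the paraxial focus
    lies exactly on the rear surface, the condition for retroreflection.
    """
    if n <= 1.0:
        raise ValueError("index must exceed that of the surrounding air")
    focal_length = n * radius / (2.0 * (n - 1.0))  # measured from the centre
    return focal_length - radius


for n in (1.5, 1.9, 2.0):
    # Indices quoted in the text: common glass, barium titanate glass, ideal.
    print(n, ball_lens_back_focal_length(n))
```

Under these assumptions the focus falls behind the sphere for indices below 2 and reaches the rear surface at n = 2, consistent with the text's statement that lower-index beads give a slightly divergent return.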

The spherical aberration problem with the spherical cat's eye can be solved in various ways, one being a spherically symmetrical index gradient within the sphere, such as in the Luneburg lens design. Practically, this can be approximated by a concentric sphere system.[2]

Because the back-side reflection for an uncoated sphere is imperfect, it is fairly common to add a metallic coating to the back half of retroreflective spheres to increase the reflectance, but this implies that the retroreflection only works when the sphere is oriented in a particular direction.

An alternative form of the cat's eye retroreflector uses a normal lens focused onto a curved mirror rather than a transparent sphere, though this type is much more limited in the range of incident angles that it retroreflects.

The term cat's eye derives from the resemblance of the cat's eye retroreflector to the optical system that produces the well-known phenomenon of "glowing eyes" or eyeshine in cats and other vertebrates (which are only reflecting light, rather than actually glowing). The combination of the eye's lens and the cornea form the refractive converging system, while the tapetum lucidum behind the retina forms the spherical concave mirror. Because the function of the eye is to form an image on the retina, an eye focused on a distant object has a focal surface that approximately follows the reflective tapetum lucidum structure,[citation needed] which is the condition required to form a good retroreflection.

This type of retroreflector can consist of many small versions of these structures incorporated in a thin sheet or in paint. In the case of paint containing glass beads, the paint adheres the beads to the surface where retroreflection is required and the beads protrude, their diameter being about twice the thickness of the paint.

Phase-conjugate mirror

A third, much less common way of producing a retroreflector is to use the nonlinear optical phenomenon of phase conjugation. This technique is used in advanced optical systems such as high-power lasers and optical transmission lines. Phase-conjugate mirrors[3] reflect an incoming wave so that the reflected wave exactly follows the path it has previously taken. They require a comparatively expensive and complex apparatus, as well as large quantities of power (as nonlinear optical processes can be efficient only at high enough intensities). However, phase-conjugate mirrors have an inherently much greater accuracy in the direction of the retroreflection, which in passive elements is limited by the mechanical accuracy of the construction.
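The idea that the reflected wave retraces its path can be illustrated with a highly idealised scalar model, which is an assumption of this sketch rather than something given in the source. The wave is a list of complex amplitudes, a distorting medium multiplies each sample by a phase factor, an ordinary mirror is modelled as returning the field unchanged, and a phase-conjugate mirror as returning its complex conjugate. The phase values are hypothetical.

```python
import cmath


def through_medium(field, phases):
    """Pass a sampled field through a medium that adds a phase to each sample."""
    return [e * cmath.exp(1j * p) for e, p in zip(field, phases)]


def ordinary_mirror(field):
    """Idealised plain mirror: amplitudes returned unchanged (assumption)."""
    return list(field)


def phase_conjugate_mirror(field):
    """Idealised phase-conjugate mirror: returns the complex conjugate."""
    return [e.conjugate() for e in field]


phases = [0.0, 0.7, -1.3, 2.1]           # hypothetical phase errors (radians)
incoming = [1 + 0j] * len(phases)        # undistorted plane wave

distorted = through_medium(incoming, phases)
back_plain = through_medium(ordinary_mirror(distorted), phases)
back_pcm = through_medium(phase_conjugate_mirror(distorted), phases)

print([round(abs(e - 1), 6) for e in back_plain])  # distortion doubled
print([round(abs(e - 1), 6) for e in back_pcm])    # distortion cancelled
```

In this toy model the second pass through the medium undoes the first only for the conjugated wave, which is one way to picture a wave that follows its earlier path in reverse.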

https://en.wikipedia.org/wiki/Retroreflector

"Aura" around the shadow of a hot-air balloon, caused by retroreflection from dewdrops

https://en.wikipedia.org/wiki/Retroreflector#/media/File:Balloon_shadow.jpg

Free-space optical communication

Modulated retroreflectors, in which the reflectance is changed over time by some means, are the subject of research and development for free-space optical communications networks. The basic concept of such systems is that a low-power remote system, such as a sensor mote, can receive an optical signal from a base station and reflect the modulated signal back to the base station. Since the base station supplies the optical power, this allows the remote system to communicate without excessive power consumption. Modulated retroreflectors also exist in the form of modulated phase-conjugate mirrors (PCMs). In the latter case, a "time-reversed" wave is generated by the PCM with temporal encoding of the phase-conjugate wave (see, e.g., SciAm, Oct. 1990, "The Photorefractive Effect," David M. Pepper, et al.).

Inexpensive corner-aiming retroreflectors are used in user-controlled technology as optical datalink devices. Aiming is done at night, and the necessary retroreflector area depends on aiming distance and ambient lighting from street lamps. The optical receiver itself behaves as a weak retroreflector because it contains a large, precisely focused lens that detects illuminated objects in its focal plane. This allows aiming without a retroreflector for short ranges.

Other uses

Retroreflectors are used in the following example applications:

- In common (non-SLR) digital cameras, the sensor system is often retroreflective. Researchers have used this property to demonstrate a system that prevents unauthorized photographs by detecting digital cameras and directing a highly focused beam of light into the lens.[25]

- In movie screens to allow for high brilliance under dark conditions.[26]

- Digital compositing programs and chroma key environments use retroreflection to replace traditional lit backdrops in composite work as they provide a more solid colour without requiring that the backdrop be lit separately.[27]

- In Longpath-DOAS systems, retroreflectors are used to reflect the light emitted from a light source back into a telescope. It is then spectrally analyzed to obtain information about the trace gas content of the air between the telescope and the retroreflector.

- Barcode labels can be printed on retroreflective material to increase the range of scanning up to 50 feet.[28]

- In a form of 3D display, in which retroreflective sheeting and a set of projectors are used to project stereoscopic images back to the user's eyes. The use of mobile projectors and positional tracking mounted on the user's spectacle frame allows the illusion of a hologram to be created for computer-generated imagery.[29][30][31]

- Flashlight fish of the family Anomalopidae have natural retroreflectors. See tapetum lucidum.

https://en.wikipedia.org/wiki/Retroreflector

Heiligenschein (German: [ˈhaɪlɪɡn̩ˌʃaɪn]; lit. 'halo, aureola') is an optical phenomenon in which a bright spot appears around the shadow of the viewer's head in the presence of dew. In photogrammetry and remote sensing, it is more commonly known as the hotspot. It is also occasionally known as Cellini's halo after the Italian artist and writer Benvenuto Cellini (1500–1571), who described the phenomenon in his memoirs in 1562.[1]

Nearly spherical dew droplets act as lenses to focus the light onto the surface behind them. When this light scatters or reflects off that surface, the same lens re-focuses that light into the direction from which it came. This configuration is sometimes called a cat's eye retroreflector. Any retroreflective surface is brightest around the antisolar point.

Opposition surge from particles other than water, and the glory in water vapour, are similar effects caused by different mechanisms.

https://en.wikipedia.org/wiki/Heiligenschein

A 22° halo around the Sun, observed over Bretton Woods, New Hampshire, USA on February 13, 2021

https://en.wikipedia.org/wiki/Halo_(optical_phenomenon)

Optical phenomena are any observable events that result from the interaction of light and matter.

All optical phenomena coincide with quantum phenomena.[1] Common optical phenomena are often due to the interaction of light from the sun or moon with the atmosphere, clouds, water, dust, and other particulates. One common example is the rainbow, when light from the sun is reflected and refracted by water droplets. Some phenomena, such as the green ray, are so rare they are sometimes thought to be mythical.[2] Others, such as Fata Morganas, are commonplace in favored locations.

Other phenomena are simply interesting aspects of optics, or optical effects. For instance, the colors generated by a prism are often shown in classrooms.

https://en.wikipedia.org/wiki/Optical_phenomena

A Fata Morgana (Italian: [ˈfaːta morˈɡaːna]) is a complex form of superior mirage visible in a narrow band right above the horizon. The term Fata Morgana is the Italian translation of "Morgan the Fairy" (Morgan le Fay of Arthurian legend). These mirages are often seen in the Italian Strait of Messina, and were described as fairy castles in the air or false land conjured by her magic.

Fata Morgana mirages significantly distort the object or objects on which they are based, often such that the object is completely unrecognizable. A Fata Morgana may be seen on land or at sea, in polar regions, or in deserts. It may involve almost any kind of distant object, including boats, islands, and the coastline. Often, a Fata Morgana changes rapidly. The mirage comprises several inverted (upside down) and erect (right-side up) images that are stacked on top of one another. Fata Morgana mirages also show alternating compressed and stretched zones.[1]

The optical phenomenon occurs because rays of light bend when they pass through air layers of different temperatures in a steep thermal inversion where an atmospheric duct has formed.[1] In calm weather, a layer of significantly warmer air may rest over colder dense air, forming an atmospheric duct that acts like a refracting lens, producing a series of both inverted and erect images. A Fata Morgana requires a duct to be present; thermal inversion alone is not enough to produce this kind of mirage. While a thermal inversion often takes place without there being an atmospheric duct, an atmospheric duct cannot exist without there first being a thermal inversion.

Schematic diagram explaining the Fata Morgana mirage

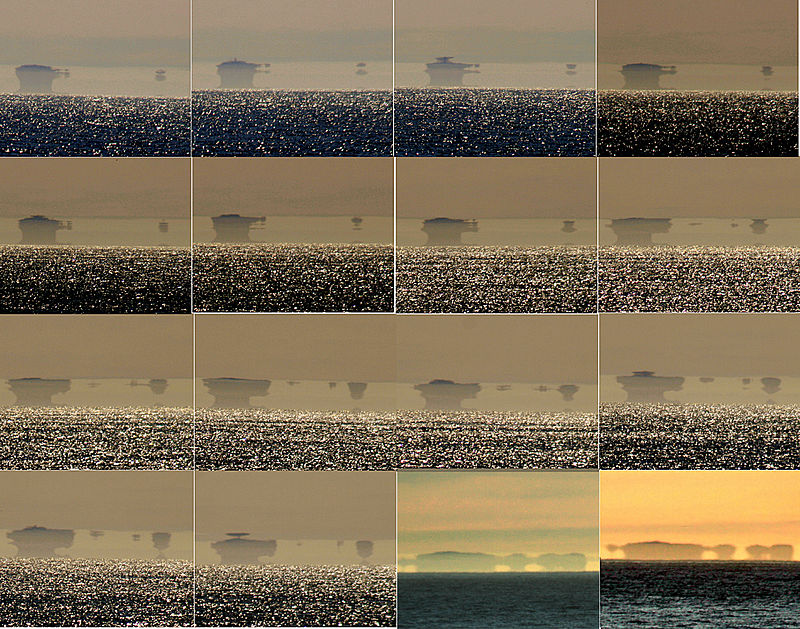

A sequence of a Fata Morgana of the Farallon Islands as seen from San Francisco

https://en.wikipedia.org/wiki/Fata_Morgana_(mirage)

Observing a Fata Morgana

A Fata Morgana is most commonly seen in polar regions, especially over large sheets of ice that have a uniform low temperature. It may, however, be observed in almost any area. In polar regions the Fata Morgana phenomenon is observed on relatively cold days. In deserts, over oceans, and over lakes, however, a Fata Morgana may be observed on hot days.

To generate the Fata Morgana phenomenon, the thermal inversion has to be strong enough that the curvature of the light rays within the inversion layer is stronger than the curvature of the Earth.[1] Under these conditions, the rays bend and create arcs. An observer needs to be within or below an atmospheric duct in order to be able to see a Fata Morgana.[2] Fata Morgana may be observed from any altitude within the Earth's atmosphere, from sea level up to mountaintops, and even including the view from airplanes.[3][4]
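The curvature condition above can be put into rough numbers with a commonly quoted geodetic approximation for the refraction coefficient k, the ratio of ray curvature to the Earth's curvature: k ≈ 503 · (P / T²) · (0.0343 + dT/dh), with P in hPa, T in kelvin, and dT/dh in kelvin per metre. This formula is not from the source and is assumed here as an illustration for near-horizontal rays close to the ground; the function names are hypothetical.

```python
def refraction_coefficient(pressure_hpa, temperature_k, dT_dh):
    """Approximate ratio of light-ray curvature to the Earth's curvature.

    pressure_hpa  : air pressure in hPa (millibars)
    temperature_k : air temperature in kelvin
    dT_dh         : vertical temperature gradient in K/m (positive = inversion)
    A value above 1 means rays curve more strongly than the Earth's surface.
    """
    return 503.0 * pressure_hpa / temperature_k**2 * (0.0343 + dT_dh)


def ducting_gradient(pressure_hpa, temperature_k):
    """Temperature gradient (K/m) at which the coefficient reaches exactly 1."""
    return temperature_k**2 / (503.0 * pressure_hpa) - 0.0343


# Typical lapse rate (temperature falling with height): weak bending.
print(refraction_coefficient(1013.25, 288.15, -0.0065))
# Hypothetical strong inversion of 0.2 K per metre: rays out-curve the Earth.
print(refraction_coefficient(1013.25, 288.15, 0.2))
# Gradient needed for k = 1 at sea-level pressure and 15 °C.
print(ducting_gradient(1013.25, 288.15))
```

Under this approximation, an inversion has to be steep, on the order of a tenth of a kelvin per metre at sea-level conditions, before rays bend more than the Earth's surface curves.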

A Fata Morgana may be described as a very complex superior mirage with more than three distorted erect and inverted images.[1] Because of the constantly changing conditions of the atmosphere, a Fata Morgana may change in various ways within just a few seconds, including changing to become a straightforward superior mirage. The sequential image here shows sixteen photographic frames of a mirage of the Farallon Islands as seen from San Francisco; the images were all taken on the same day. In the first fourteen frames, elements of the Fata Morgana mirage display alternations of compressed and stretched zones.[1] The last two frames were photographed a few hours later, around sunset. At that point, the air was cooler while the ocean was probably a little warmer, which made the thermal inversion less extreme than it had been a few hours before. A mirage was still present, but it was not as complex as it had been a few hours before sunset: the mirage was no longer a Fata Morgana, but had instead become a simple superior mirage.

Fata Morgana mirages are visible to the naked eye, but in order to be able to see the detail within them, it is best to view them through binoculars, a telescope, or as is the case in the images here, through a telephoto lens. Gabriel Gruber (1740–1805) and Tobias Gruber (1744–1806), who observed Fata Morgana above Lake Cerknica, were the first to study it in a laboratory setting.

https://en.wikipedia.org/wiki/Fata_Morgana_(mirage)

Etymology

La Fata Morgana ("The Fairy Morgana") is the Italian name of Morgan le Fay, also known as Morgana and other variants, who was described as a powerful sorceress in the Arthurian legend. As her name indicates, the figure of Morgan appears to have been originally a fairy figure rather than a human woman. The early works featuring Morgan do not elaborate on her nature, other than describing her role as that of a fairy or magician. Later, she was described as King Arthur's half-sister and an enchantress.[5] After King Arthur's final battle at Camlann, Morgan takes her half-brother Arthur to Avalon.[6] In medieval times, suggestions for the location of Avalon included the other side of the Earth at the antipodes, Sicily, and other locations in the Mediterranean.[7] Legends claimed that sirens in the waters around Sicily lured the unwary to their death. Morgan is associated not only with Sicily's Mount Etna (the supposedly hollow mountain locally identified as Avalon since the 12th century[8]), but also with sirens. In a medieval French Arthurian romance of the 13th century, Floriant et Florete, she is called "mistress of the fairies of the salt sea" (La mestresse [des] fées de la mer salée).[9] Ever since that time, Fata Morgana has been associated with Sicily in Italian folklore and literature.[10] For example, a local legend connects Morgan and her magical mirages with Roger I of Sicily and the Norman conquest of the island from the Arabs.[11][12]

Walter Charleton, in his 1654 treatise "Physiologia Epicuro-Gassendo-Charltoniana", devotes several pages to the description of the Morgana of Rhegium, in the Strait of Messina (Book III, Chap. II, Sect. II). He records that a similar phenomenon was reported in Africa by Diodorus Siculus, a Greek historian writing in the first century BC, and that the Rhegium Fata Morgana was described by Damascius, a Greek philosopher of the sixth century AD. In addition, Charleton tells us that Athanasius Kircher described the Rhegium mirage in his book of travels.

An early mention of the term Fata Morgana in English, in 1818, referred to such a mirage noticed in the Strait of Messina, between Calabria and Sicily.[13]

- Fata Morgana, phr. : It. : a peculiar mirage occasionally seen on the coasts of the Straits of Messina, locally attributed to a fay Morgana. Hence, metaph. any illusory appearance. 1818 In mountainous regions, deceptions of sight, Fata Morgana, &c., are more common: In E. Burl's Lett. N. Scotl., Vol. II. p. in (1818).

Famous legends and observations

The Flying Dutchman

The Flying Dutchman, according to folklore, is a ghost ship that can never go home, and is doomed to sail the seven seas forever. The Flying Dutchman is usually spotted from afar, sometimes seen to be glowing with ghostly light. One of the possible explanations of the origin of the Flying Dutchman legend is a Fata Morgana mirage seen at sea.[14]

A Fata Morgana superior mirage of a ship can take many different forms. Even when the boat in the mirage does not seem to be suspended in the air, it still looks ghostly and unusual and, more importantly, is ever-changing in its appearance. Sometimes a Fata Morgana causes a ship to appear to float inside the waves; at other times an inverted ship appears to sail above its real companion.

In fact, with a Fata Morgana it can be hard to say which individual segment of the mirage is real and which is not real: when a real ship is out of sight because it is below the horizon line, a Fata Morgana can cause the image of it to be elevated, and then everything which is seen by the observer is a mirage. On the other hand, if the real ship is still above the horizon, the image of it can be duplicated many times and elaborately distorted by a Fata Morgana.

The appearance of two ships changing owing to the Fata Morgana phenomenon: the four frames in the first column are of ship No. 1, and the four frames in the second column are of ship No. 2

Phantom islands

In the 19th and early 20th centuries, Fata Morgana mirages may have played a role in a number of unrelated "discoveries" of arctic and antarctic land masses which were later shown not to exist.[citation needed] Icebergs frozen into the pack ice, or the uneven surface of the ice itself, may have contributed to the illusion of distant land features.

Sannikov Land

Yakov Sannikov and Matvei Gedenschtrom claimed to have seen a land mass north of Kotelny Island during their 1809–1810 cartographic expedition to the New Siberian Islands. Sannikov reported this sighting of a "new land" in 1811, and the supposed island was named after him.[15] Three-quarters of a century later, in 1886, Baron Eduard Toll, a Baltic German explorer in Russian service, reported observing Sannikov Land during another expedition to the New Siberian Islands. In 1900, he led yet another expedition to the region, which had among its objectives the location and exploration of Sannikov Land.[16] The expedition was unsuccessful in this respect.[17] Toll and three others were lost after they departed their ship, which was stuck in ice for the winter, and embarked on a risky expedition by dog sled.[18] In 1937, the Soviet icebreaker Sadko also tried and failed to find Sannikov Land.[19] Some historians and geographers have theorised that what Sannikov and Toll saw were actually Fata Morganas of Bennett Island.[15]

Croker Mountains

In 1818, Sir John Ross led an expedition to discover the long-sought-after Northwest Passage. When he reached Lancaster Sound in Canada, he sighted, in the distance, a land mass with mountains, directly ahead in the ship's course. He named the mountain range the Croker Mountains,[20] after First Secretary to the Admiralty John Wilson Croker, and ordered the ship to turn around and return to England. Several of his officers protested, including First Mate William Edward Parry and Edward Sabine, but they could not dissuade him.[21] The account of Ross's voyage, published a year later, brought to light this disagreement, and the ensuing controversy over the existence of the Croker Mountains ruined Ross's reputation. The year after Ross's expedition, in 1819, Parry was given command of his own Arctic expedition, and proved Ross wrong by continuing west beyond where Ross had turned back, and sailing through the supposed location of the Croker Mountains. The mountain range that had caused Ross to abandon his mission had been a mirage.

Ross made two errors. First, he refused to listen to the counsel of his officers, who may have been more familiar with mirages than he was. Second, his attempt to honour Croker by naming a mountain range after him backfired when the mountains turned out to be non-existent. Ross could not obtain ships, or funds, from the government for his subsequent expeditions, and was forced to rely on private backers instead.[22]

New South Greenland

Benjamin Morrell reported that, in March 1823, while on a voyage to the Antarctic and southern Pacific Ocean, he had explored what he thought was the east coast of New South Greenland.[23] The west coast of New South Greenland had been explored two years earlier by Robert Johnson, who had given the land its name.[24] This name was not adopted, however, and the area, which is the northern part of the Antarctic Peninsula, is now known as Graham Land.[25] Morrell's reported position was actually far to the east of Graham Land.[26] Searches for the land that Morrell claimed to have explored would continue into the early 20th century before New South Greenland's existence was conclusively disproven. Why Morrell reported exploring a non-existent land is unclear, but one possibility is that he mistook a Fata Morgana for actual land.[27]

Crocker Land

Robert Peary claimed to have seen, while on a 1906 Arctic expedition, a land mass in the distance. He said that it was north-west from the highest point of Cape Thomas Hubbard, which is situated in what is now the northern Canadian territory of Nunavut, and he estimated it to be 210 km (130 miles) away, at about 83 degrees N, longitude 100 degrees W. He named it Crocker Land, after George Crocker of the Peary Arctic Club.[28] As Peary's diary contradicts his public claim that he had sighted land,[29] it is now believed that Crocker Land was a fraudulent invention of Peary,[30] created in an unsuccessful attempt to secure further funding from Crocker.

In 1913, unaware that Crocker Land was merely an invention, Donald Baxter MacMillan organised the Crocker Land Expedition, which set out to reach and explore the supposed land mass. On 21 April, the members of the expedition did, in fact, see what appeared to be a huge island on the north-western horizon. As MacMillan later said, "Hills, valleys, snow-capped peaks extending through at least one hundred and twenty degrees of the horizon". Piugaattoq, a member of the expedition and an Inuit hunter with 20 years of experience of the area, explained that it was just an illusion. He called it poo-jok, which means 'mist'. However, MacMillan insisted that they press on, even though it was late in the season and the sea ice was breaking up. For five days they went on, following the mirage. Finally, on 27 April, after they had covered some 200 km (125 miles) of dangerous sea ice, MacMillan was forced to admit that Piugaattoq was right—the land that they had sighted was in fact a mirage (probably a Fata Morgana). Later, MacMillan wrote:

The day was exceptionally clear, not a cloud or trace of mist; if land could be seen, now was our time. Yes, there it was! It could even be seen without a glass, extending from southwest true to north-northeast. Our powerful glasses, however, brought out more clearly the dark background in contrast with the white, the whole resembling hills, valleys and snow-capped peaks to such a degree that, had we not been out on the frozen sea for 150 miles [240 km], we would have staked our lives upon its reality. Our judgment then, as now, is that this was a mirage or loom of the sea ice.

The expedition collected interesting samples, but is still considered to be a failure and a very expensive mistake. The final cost was $100,000 (equivalent to $2,000,000 in 2021).[32]

Hy Brasil

Hy Brasil is an island that was said to appear once every few years off the coast of Co. Kerry, Ireland. Hy Brasil has been drawn on ancient maps as a perfectly circular island with a river running directly through it.

Lake Ontario

Lake Ontario is said to be famous for mirages, with opposite shorelines becoming clearly visible during the events.[33]

In July 1866, mirages of boats and islands were seen from Kingston, Ontario.[34]

A Mirage – The atmospheric phenomenon known as "mirage" might have been observed on Sunday evening between 6 and 7 o'clock, by looking towards the lake. The line beyond which this phenomenon was observable seemed to strike from about the middle portion of Amherst Island across to the southeast, for while the lower half of the island presented its usual appearance, the upper half was unnaturally distorted and thrown upward in columnar shape with an apparent height of two to three hundred feet. The upper line or cloud from this elevation stretched southward, upon which was thrown the image of objects. A barque sailing in front of this cloud presented a double appearance. While she appeared slightly distorted on the surface of the water, her image was inverted upon the background of the cloud referred to, and both blending together produced a curious sight. At the same time the ship and its shadow were again repeated in a more shadowy form, but distinct, in the foreground, the base being a line of smooth water. Another bark whose hull was entirely below the horizon, the topsails alone being visible, had its hull shadowed on this foreground, but no inversion in this case could be observed. It may be added that these optical phenomena in regard to the vessels could only be seen with the aid of a telescope, for the nearest vessel was at the time fully sixteen miles [26 km] distant. The phenomena lasted over an hour, the illusion changing every moment in its character.

Here the described mirages of vessels "could only be seen with the aid of a telescope". It is often the case when observing a Fata Morgana that one needs to use a telescope or binoculars to really make out the mirage. The "cloud" that the article mentions a few times probably refers to a duct.

On 25 August 1894, Scientific American described a "remarkable mirage" seen by the citizens of Buffalo, New York.[35][36]

The people of Buffalo, N.Y., were treated to a remarkable mirage, between ten and eleven o'clock, on the morning of 16 August, [1894]. It was the city of Toronto with its harbor and small island to the south of the city. Toronto is fifty-six miles [90 km] from Buffalo, but the church spires could be counted with the greatest ease. The mirage took in the whole breadth of Lake Ontario, Charlotte, the suburbs of Rochester, being recognized as a projection east of Toronto. A side–wheel steamer could be seen traveling in a line from Charlotte to Toronto Bay. Two dark objects were at last found to be the steamers of the New York Central plying between Lewiston and Toronto. A sail-boat was also visible and disappeared suddenly. Slowly the mirage began to fade away, to the disappointment of thousands who crowded the roofs of houses and office buildings. A bank of clouds was the cause of the disappearance of the mirage. A close examination of the map showed the mirage did not cause the slightest distortion, the gradual rise of the city from the water being rendered perfectly. It is estimated that at least 20,000 spectators saw the novel spectacle.

This mirage is what is known as that of the third order; that is, the object looms up far above the level and not inverted, as with mirages of the first and second orders, but appearing like a perfect landscape far away in the sky.

Scientific American, 25 August 1894.

This description might refer to looming owing to inversion rather than to an actual mirage.

McMurdo Sound and Antarctica

From McMurdo Station in Antarctica, Fata Morganas are often seen during the Antarctic spring and summer, across McMurdo Sound.[37][38][39] An Antarctic Fata Morgana, seen from a C-47 transport flight, was recounted:

We were going along smoothly and all of a sudden a mountain peak seemed to rise up out of nowhere up ahead. We looked again and it was gone. A couple of minutes later it popped up again rising some 300 feet higher than our altitude. We never seemed to get any closer to it. The peak just kept popping up and down, getting higher and higher and higher every time it reappeared.

Rear Adm. Fred E. Bakutis, commanding the Antarctic Navy Support Activities[37]

UFOs

Fata Morgana mirages may continue to trick some observers and are still sometimes mistaken for otherworldly objects such as UFOs.[40] A Fata Morgana can display an object that is located below the astronomical horizon as an apparent object hovering in the sky. A Fata Morgana can also magnify such an object vertically and make it look absolutely unrecognizable.

Some UFOs which are seen on radar may also be due to Fata Morgana mirages. Official UFO investigations in France indicate:

As is well known, atmospheric ducting is the explanation for certain optical mirages, and in particular the arctic illusion called "fata morgana" where distant ocean or surface ice, which is essentially flat, appears to the viewer in the form of vertical columns and spires, or "castles in the air".

People often assume that mirages occur only rarely. This may be true of optical mirages, but conditions for radar mirages are more common, due to the role played by water vapor which strongly affects the atmospheric refractivity in relation to radio waves. Since clouds are closely associated with high levels of water vapor, optical mirages due to water vapor are often rendered undetectable by the accompanying opaque cloud. On the other hand, radar propagation is essentially unaffected by the water droplets of the cloud so that changes in water vapor content with altitude are very effective in producing atmospheric ducting and radar mirages.[41]

Australia

Fata Morgana mirages could explain the mysterious Australian Min Min light phenomenon.[42] This would also explain the way in which the legend has changed over time: The first reports were of a stationary light, which in a Fata Morgana effect would be an image of a campfire. In more recent reports this has changed to moving lights, which in an inversion reflection such as Fata Morgana would be headlights over the horizon being reflected by the inversion.

Greenland

Fata Morgana Land is a phantom island in the Arctic, reported first in 1907. After an unfruitful search, it was deemed to be Tobias Island.[43]

In literature

A Fata Morgana is usually associated with something mysterious, something that never could be approached.[44]

O sweet illusions of song

That tempt me everywhere,

In the lonely fields, and the throng

Of the crowded thoroughfare!

I approach and ye vanish away,

I grasp you, and ye are gone;

But ever by night and by day,

The melody soundeth on.

As the weary traveler sees

In desert or prairie vast,

Blue lakes, overhung with trees

That a pleasant shadow cast;

Fair towns with turrets high,

And shining roofs of gold,

That vanish as he draws nigh,

Like mists together rolled—

So I wander and wander along,

And forever before me gleams

The shining city of song,

In the beautiful land of dreams.

But when I would enter the gate

Of that golden atmosphere,

It is gone, and I wonder and wait

For the vision to reappear.

Henry Wadsworth Longfellow[45], Fata Morgana (1873)

In the lines, "the weary traveller sees / In desert or prairie vast, / Blue lakes, overhung with trees / That a pleasant shadow cast", because of the mention of blue lakes, it is clear that the author is actually describing not a Fata Morgana, but rather a common inferior or desert mirage. The 1886 drawing shown here of a "Fata Morgana" in a desert might have been an imaginative illustration for the poem, but in reality no mirage ever looks like this. Andy Young writes, "They're always confined to a narrow strip of sky—less than a finger's width at arm's length—at the horizon."[1]

The 18th-century poet Christoph Martin Wieland wrote about "Fata Morgana's castles in the air". The idea of castles in the air was probably so irresistible that many languages still use the phrase Fata Morgana to describe a mirage.[9]

In the book Thunder Below! about the submarine USS Barb, the crew sees a Fata Morgana (called an "arctic mirage" in the book) of four ships trapped in the ice. As they try to approach the ships the mirage vanishes.[46]

The Fata Morgana is briefly mentioned in the 1936 H. P. Lovecraft horror novel At the Mountains of Madness, in which the narrator states: "On many occasions the curious atmospheric effects enchanted me vastly; these including a strikingly vivid mirage—the first I had ever seen—in which distant bergs became the battlements of unimaginable cosmic castles."

See also

- Atmospheric optics

- Brocken spectre

- Fata Morgana (1971 film)

- Green flash

- Looming and similar refraction phenomena

- Mirage of astronomical objects

- Summerland (2020 film)

https://en.wikipedia.org/wiki/Fata_Morgana_(mirage)

A Brocken spectre (British English; American spelling: Brocken specter; German: Brockengespenst), also called Brocken bow, mountain spectre, or spectre of the Brocken, is the magnified (and apparently enormous) shadow of an observer cast in mid-air upon any type of cloud opposite a strong light source. Additionally, if the cloud consists of water droplets, backscattered light can produce a bright area called Heiligenschein and halo-like rings of rainbow-coloured light called a glory around the head or silhouette of the spectre. Typically the spectre appears in sunlight opposite the sun's direction at the antisolar point.

The phenomenon can appear on any misty mountainside, cloud bank, or be seen from an aircraft, but the frequent fogs and low-altitude accessibility of the Brocken, a peak in the Harz Mountains in Germany, have created a local legend from which the phenomenon draws its name. The Brocken spectre was observed and described by Johann Silberschlag in 1780, and has often been recorded in literature about the region.

Occurrence

The "spectre" appears when the sun shines from behind the observer, who is looking down from a ridge or peak into mist or fog.[1] The light projects their shadow through the mist, often in a triangular shape due to perspective.[2] The apparent magnification of size of the shadow is an optical illusion that occurs when the observer judges their shadow on relatively nearby clouds to be at the same distance as faraway land objects seen through gaps in the clouds, or when there are no reference points by which to judge its size. The shadow also falls on water droplets of varying distances from the eye, confusing depth perception. The ghost can appear to move (sometimes suddenly) because of the movement of the cloud layer and variations in density within the cloud.

References in popular culture and the arts

Samuel Taylor Coleridge's poem "Constancy to an Ideal Object" concludes with an image of the Brocken spectre:

And art thou nothing? Such thou art, as when

The woodman winding westward up the glen

At wintry dawn, where o'er the sheep-track's maze

The viewless snow-mist weaves a glist'ning haze,

Sees full before him, gliding without tread,

An image with a glory round its head;

The enamoured rustic worships its fair hues,

Nor knows he makes the shadow he pursues!

Lewis Carroll's "Phantasmagoria" includes a line about a Spectre who "...tried the Brocken business first/but caught a sort of chill/so came to England to be nursed/and here it took the form of thirst/which he complains of still."

Stanisław Lem's Fiasco (1986) has a reference to the "Brocken Specter": "He was alone. He had been chasing himself. Not a common phenomenon, but known even on Earth. The Brocken Specter in the Alps, for example." The situation of pursuing oneself via a natural illusion is a repeated theme in Lem. A significant scene in his book The Investigation (1975) depicts a detective who, within the confines of a snowy, dead-end alley, confronts a man who turns out to be the detective's own reflection: "The stranger... was himself. He was standing in front of a huge mirrored wall marking the end of the arcade."

In The Radiant Warrior (1989), part of Leo Frankowski's Conrad Stargard series, the protagonist uses the Brocken Spectre to instill confidence in his recruits.

The Brocken spectre is a key trope in Paul Beatty's The White Boy Shuffle (1996), in which a character, Nicholas Scoby, declares that his dream (he specifically calls it a "Dream and a half, really") is to see his glory through a Brocken spectre (69).

In James Hogg's novel The Private Memoirs and Confessions of a Justified Sinner (1824) the Brocken spectre is used to suggest psychological horror.

Carl Jung in Memories, Dreams, Reflections wrote:

... I had a dream which both frightened and encouraged me. It was night in some unknown place, and I was making slow and painful headway against a mighty wind. Dense fog was flying along everywhere. I had my hands cupped around a tiny light which threatened to go out at any moment... Suddenly I had the feeling that something was coming up behind me. I looked back, and saw a gigantic black figure following me... When I awoke I realized at once that the figure was a "specter of the Brocken," my own shadow on the swirling mists, brought into being by the little light I was carrying.[3]

In Gravity's Rainbow, Geli Tripping and Slothrop make "god-shadows" from a Harz precipice, as Walpurgisnacht wanes to dawn. Additionally, the French–Canadian quadruple agent Rémy Marathe muses episodically about the possibility of witnessing the fabled spectre on the mountains of Tucson in David Foster Wallace's novel Infinite Jest.

The explorer Eric Shipton saw a Brocken Spectre during his first ascent of Nelion on Mount Kenya with Percy Wyn-Harris and Gustav Sommerfelt in 1929. He wrote:

Then the towering buttresses of Batian and Nelion appeared; the rays of the setting sun broke through and, in the east, sharply defined, a great circle of rainbow colours framed our own silhouettes. It was the only perfect Brocken Spectre I have ever seen.[4]

The progressive metal band Fates Warning refers to the Brocken spectre both in the title of their debut album, Night on Bröcken, and in the lyrics of a later song, "The Sorceress", from the album Awaken the Guardian: "Through the Brocken Spectre rose a luring Angel."

The design of Kriemhild Gretchen, a Witch in the anime series Puella Magi Madoka Magica, may have been inspired by the Brocken spectre.[5]

In Charles Dickens's Little Dorrit, Book II Chapter 23, Flora Finching, in the course of one of her typically free-associative babbles to Mr Clennam, says " ... ere yet Mr F appeared a misty shadow on the horizon paying attentions like the well-known spectre of some place in Germany beginning with a B ... "

"Brocken Spectre" is the title of a track on David Tipper's 2010 album Broken Soul Jamboree.

In the manga and anime Tensou Sentai Goseiger, Brocken Spectres were one of the enemies that Gosei Angels must face.

In the manga One Piece, Brocken spectres make an appearance in the Skypiea story arc.

In the anime Detective Conan, Brocken spectres are mentioned in episode 348 and episode 546 as well.

In "The Problem of Pain" by C.S. Lewis the Brocken spectre is mentioned in the chapter "Heaven".

The Brocken spectre is mentioned in chapter 12 of Whose Body? (a Lord Peter Wimsey novel) by Dorothy L. Sayers.

See also

- Diffraction

- Earth's shadow, the shadow that the Earth itself casts on its atmosphere

- Am Fear Liath Mòr, "Big Grey Man" in Scottish Gaelic, a supposed supernatural being found on Scotland's second-highest peak, Ben Macdhui

- Dark Watchers, supposed supernatural beings seen along the crest of the Santa Lucia Mountains, in California

- Gegenschein

- Glory (optical phenomenon)

- Heiligenschein, an optical phenomenon which creates a bright spot around the shadow of the viewer's head

- Opposition surge, the brightening of a rough surface, or an object with many particles, when illuminated from directly behind the observer

References

- "Kriemhild Gretchen". puella-magi.net.

Further reading

- Shenstone, A.G (1954). "The Brocken Spectre". Science. 119 (3094): 511–512. Bibcode:1954Sci...119..511S. doi:10.1126/science.119.3094.511. PMID 17842741.

- Goodrich, Samuel Griswold (1851). Peter Parley's Wonders of the Sea and Sky. Archived from the original on 2007-12-25. Retrieved 2007-07-26.

- Minnaert, M. (1954). The Nature of Light and Colour in the Open Air (Paperback). Dover Books on Earth Sciences. [New York] Dover Publications. ISBN 9780486201962.

- Greenler, R (1980). Rainbows, Halos, and Glories. Cambridge University Press.

- Dunlop, Storm (2002). The Weather Identification Handbook. Harper Collins UK. p. 141. ISBN 1-58574-857-9.

External links

- "What are Brocken Spectres and How Do They Form?", an article on the Online Fellwalking Club page (dead link, 2012 archived version)

- A Cairngorm example, from the Universities Space Research Association

- A picture and a YouTube video clip taken by Michael Elcock

- Snowdon walker captures rare Brocken spectre, BBC News Online, 3 January 2020

- Brocken Spectre panorama

- "Time-lapse video of Brocken specter cast by Mt. Tamalpais fire lookout in Marin County California."

https://en.wikipedia.org/wiki/Looming_and_similar_refraction_phenomena

The green flash and green ray are meteorological optical phenomena that sometimes occur transiently around the moment of sunset or sunrise. When the conditions are right, a distinct green spot is briefly visible above the Sun's upper limb; the green appearance usually lasts for no more than two seconds. Rarely, the green flash can resemble a green ray shooting up from the sunset or sunrise point.

Green flashes occur because the Earth's atmosphere can cause the light from the Sun to separate, or refract, into different colors. Green flashes are a group of similar phenomena that stem from slightly different causes, and therefore, some types of green flashes are more common than others.[1]

https://en.wikipedia.org/wiki/Green_flash

Atmospheric optical phenomena

Atmospheric optical phenomena include:

- Afterglow

- Airglow

- Alexander's band, the dark region between the two bows of a double rainbow.

- Alpenglow

- Anthelion

- Anticrepuscular rays

- Aurora

- Auroral light (northern and southern lights, aurora borealis and aurora australis)

- Belt of Venus

- Brocken Spectre

- Circumhorizontal arc

- Circumzenithal arc

- Cloud iridescence

- Crepuscular rays

- Earth's shadow

- Earthquake lights

- Glories

- Green flash

- Halos, of Sun or Moon, including sun dogs

- Haze

- Heiligenschein or halo effect, partly caused by the opposition effect

- Ice blink

- Light pillar

- Lightning

- Mirages (including Fata Morgana)

- Monochrome Rainbow

- Moon dog

- Moonbow

- Nacreous cloud/Polar stratospheric cloud

- Rainbow

- Subsun

- Sun dog

- Tangent arc

- Tyndall effect

- Upper-atmospheric lightning, including red sprites, Blue jets, and ELVES

- Water sky

Non-atmospheric optical phenomena

Other optical effects

- Asterism, star gems such as star sapphire or star ruby

- Aura, a phenomenon in which gas or dust surrounding an object luminesces or reflects light from the object

- Aventurescence, also called the Schiller effect, spangled gems such as aventurine quartz and sunstone

- Baily's beads, grains of sunlight visible in total solar eclipses.

- Camera obscura

- Cathodoluminescence

- Caustics

- Chatoyancy, cat's eye gems such as chrysoberyl cat's eye or aquamarine cat's eye

- Chromatic polarization

- Diffraction, the apparent bending and spreading of light waves when they meet an obstruction

- Dispersion

- Double refraction or birefringence of calcite and other minerals

- Double-slit experiment

- Electroluminescence

- Evanescent wave

- Fluorescence, also called luminescence or photoluminescence

- Mie scattering (Why clouds are white)

- Metamerism as of alexandrite

- Moiré pattern

- Newton's rings

- Phosphorescence

- Pleochroism gems or crystals, which seem "many-colored"

- Polarized light-related phenomena such as double refraction, or Haidinger's brush

- Rayleigh scattering (Why the sky is blue, sunsets are red, and associated phenomena)

- Reflection

- Refraction

- Sonoluminescence

- Synchrotron radiation

- The separation of light into colors by a prism

- Triboluminescence

- Thomson scattering

- Total internal reflection

- Twisted light

- Umov effect

- Zeeman effect

- The ability of light to travel through space or through a vacuum.

Entoptic phenomena

- Diffraction of light through the eyelashes

- Haidinger's brush

- Monocular diplopia (or polyplopia) from reflections at boundaries between the various ocular media

- Phosphenes from stimulation other than by light (e.g., mechanical, electrical) of the rod cells and cones of the eye or of other neurons of the visual system

- Purkinje images.

Optical illusions

- The unusually large size of the Moon as it rises and sets, the moon illusion

- The shape of the sky, the sky bowl

Unexplained phenomena

Some phenomena are yet to be conclusively explained and may be some form of optical phenomenon. Some consider many of these "mysteries" to simply be local tourist attractions that are not worthy of thorough investigation.[4]

https://en.wikipedia.org/wiki/Optical_phenomena

In folklore, a will-o'-the-wisp, will-o'-wisp or ignis fatuus (Latin for 'giddy flame'),[1] plural ignes fatui, is an atmospheric ghost light seen by travellers at night, especially over bogs, swamps or marshes. The phenomenon is known in English folk belief, English folklore and much of European folklore by a variety of names, including jack-o'-lantern, friar's lantern and hinkypunk, and is said to mislead travellers by resembling a flickering lamp or lantern.[2] In literature, will-o'-the-wisp metaphorically refers to a hope or goal that leads one on, but is impossible to reach, or something one finds strange or sinister.[3]

Wills-o'-the-wisp appear in folk tales and traditional legends of numerous countries and cultures; notable wills-o'-the-wisp include St. Louis Light in Saskatchewan, the Spooklight in Southwestern Missouri and Northeastern Oklahoma, the Marfa lights of Texas, the Naga fireballs on the Mekong in Thailand, the Paulding Light in the Upper Peninsula of Michigan and the Hessdalen light in Norway.

In urban legends, folklore and superstition, wills-o'-the-wisp are typically attributed to ghosts, fairies or elemental spirits. Modern science explains the light aspect as natural phenomena such as bioluminescence or chemiluminescence, caused by the oxidation of phosphine (PH3), diphosphane (P2H4) and methane (CH4) produced by organic decay.

https://en.wikipedia.org/wiki/Will-o%27-the-wisp

An optical vortex (also known as a photonic quantum vortex, screw dislocation or phase singularity) is a zero of an optical field; a point of zero intensity. The term is also used to describe a beam of light that has such a zero in it. The study of these phenomena is known as singular optics.

https://en.wikipedia.org/wiki/Optical_vortex

Synchrotron radiation (also known as magnetobremsstrahlung radiation) is the electromagnetic radiation emitted when relativistic charged particles are subject to an acceleration perpendicular to their velocity (a ⊥ v). It is produced artificially in some types of particle accelerators, or naturally by fast electrons moving through magnetic fields. The radiation produced in this way has a characteristic polarization and the frequencies generated can range over a large portion of the electromagnetic spectrum.[1]

Synchrotron radiation is similar to bremsstrahlung radiation, which is emitted by a charged particle when the acceleration is parallel to the direction of motion. The general term for radiation emitted by particles in a magnetic field is gyromagnetic radiation, for which synchrotron radiation is the ultra-relativistic special case. Radiation emitted by charged particles moving non-relativistically in a magnetic field is called cyclotron emission.[2] For particles in the mildly relativistic range (≈85% of the speed of light), the emission is termed gyro-synchrotron radiation.[3]
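To give a numerical feel for these regimes, the following minimal sketch computes the Lorentz factor γ = 1/√(1 − β²) for a few speeds β = v/c. The function name and the sample speeds other than 0.85 are illustrative assumptions; the text above does not define sharp boundaries between the regimes, and neither does this sketch.

```python
import math


def lorentz_factor(beta: float) -> float:
    """Return gamma = 1 / sqrt(1 - beta**2) for a speed beta = v/c."""
    if not 0.0 <= beta < 1.0:
        raise ValueError("beta must satisfy 0 <= beta < 1")
    return 1.0 / math.sqrt(1.0 - beta * beta)


# 0.85 is the "mildly relativistic" value quoted in the text;
# the other speeds are arbitrary examples for comparison.
for beta in (0.01, 0.85, 0.999):
    print(f"beta = {beta:<5}  gamma = {lorentz_factor(beta):.4f}")
```

At β = 0.85 the Lorentz factor is a little under 2, which is consistent with describing that range as only mildly relativistic.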

In astrophysics, synchrotron emission occurs, for instance, due to ultra-relativistic motion of a charged particle around a black hole.[4] When the source follows a circular geodesic around the black hole, the synchrotron radiation occurs for orbits close to the photon sphere, where the motion is in the ultra-relativistic regime.

https://en.wikipedia.org/wiki/Synchrotron_radiation

Sonoluminescence is the emission of light from imploding bubbles in a liquid when excited by sound.

Sonoluminescence was discovered in 1934 at the University of Cologne. It occurs when a sound wave of sufficient intensity induces a gaseous cavity within a liquid to collapse quickly, emitting a burst of light. The phenomenon can be observed in stable single-bubble sonoluminescence (SBSL) and multi-bubble sonoluminescence (MBSL). In 1960, Peter Jarman proposed that sonoluminescence is thermal in origin and might arise from microshocks within collapsing cavities. Later experiments revealed that the temperature inside the bubble during SBSL could reach up to 12,000 kelvins. The exact mechanism behind sonoluminescence remains unknown, with various hypotheses including hotspot, bremsstrahlung, and collision-induced radiation. Some researchers have even speculated that temperatures in sonoluminescing systems could reach millions of kelvins, potentially causing thermonuclear fusion. The phenomenon has also been observed in nature, with the pistol shrimp being the first known instance of an animal producing light through sonoluminescence.

History

The sonoluminescence effect was first discovered at the University of Cologne in 1934 as a result of work on sonar.[1] Hermann Frenzel and H. Schultes put an ultrasound transducer in a tank of photographic developer fluid. They hoped to speed up the development process. Instead, they noticed tiny dots on the film after developing and realized that the bubbles in the fluid were emitting light with the ultrasound turned on.[2] It was too difficult to analyze the effect in early experiments because of the complex environment of a large number of short-lived bubbles. This phenomenon is now referred to as multi-bubble sonoluminescence (MBSL).

In 1960, Peter Jarman of Imperial College London proposed the most reliable theory of the sonoluminescence phenomenon. He concluded that sonoluminescence is basically thermal in origin and that it might arise from microshocks within the collapsing cavities.[3]

In 1989, an experimental advance was introduced which produced stable single-bubble sonoluminescence (SBSL). In single-bubble sonoluminescence, a single bubble trapped in an acoustic standing wave emits a pulse of light with each compression of the bubble within the standing wave. This technique allowed a more systematic study of the phenomenon, because it isolated the complex effects into one stable, predictable bubble. It was realized that the temperature inside the bubble was hot enough to melt steel, as seen in an experiment done in 2012; the temperature inside the bubble as it collapsed reached about 12,000 kelvins.[4] Interest in sonoluminescence was renewed when an inner temperature of such a bubble well above one million kelvins was postulated.[5] This temperature is thus far not conclusively proven; rather, recent experiments indicate temperatures around 20,000 K (19,700 °C; 35,500 °F).[6]

Properties

Sonoluminescence can occur when a sound wave of sufficient intensity induces a gaseous cavity within a liquid to collapse quickly. This cavity may take the form of a pre-existing bubble, or may be generated through a process known as cavitation. Sonoluminescence in the laboratory can be made to be stable, so that a single bubble will expand and collapse over and over again in a periodic fashion, emitting a burst of light each time it collapses. For this to occur, a standing acoustic wave is set up within a liquid, and the bubble will sit at a pressure anti-node of the standing wave. The frequencies of resonance depend on the shape and size of the container in which the bubble is contained.

Some facts about sonoluminescence:

- The light flashes from the bubbles last between 35 and a few hundred picoseconds, with peak intensities of the order of 1–10 mW.

- The bubbles are very small when they emit light—about 1 micrometer in diameter—depending on the ambient fluid (e.g., water) and the gas content of the bubble (e.g., atmospheric air).

- Single-bubble sonoluminescence pulses can have very stable periods and positions. In fact, the frequency of light flashes can be more stable than the rated frequency stability of the oscillator making the sound waves driving them. However, the stability analyses of the bubble show that the bubble itself undergoes significant geometric instabilities, due to, for example, the Bjerknes forces and Rayleigh–Taylor instabilities.

- The addition of a small amount of noble gas (such as helium, argon, or xenon) to the gas in the bubble increases the intensity of the emitted light.
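As a rough order-of-magnitude illustration of the flash duration and peak power quoted in the list above, the sketch below bounds the energy of one flash by peak power times duration and converts it to a photon count. Treating the flash as holding its peak power for its whole duration, and the 500 nm wavelength, are assumptions made here for illustration only; the function names are hypothetical.

```python
PLANCK_H = 6.62607015e-34   # Planck constant in J*s (exact SI value)
LIGHT_C = 299_792_458.0     # speed of light in m/s (exact SI value)


def flash_energy_upper_bound(peak_power_w: float, duration_s: float) -> float:
    """Energy in joules if the flash held its peak power for its whole duration."""
    return peak_power_w * duration_s


def photon_count(energy_j: float, wavelength_m: float) -> float:
    """Number of photons of a single wavelength that carry the given energy."""
    return energy_j / (PLANCK_H * LIGHT_C / wavelength_m)


# Shortest flash (35 ps) at the lower peak power (1 mW) from the list above.
energy = flash_energy_upper_bound(1e-3, 35e-12)
# 500 nm is an assumed representative visible wavelength, not a measured value.
print(f"energy <= {energy:.2e} J, about {photon_count(energy, 500e-9):.1e} photons")
```

Under these assumptions a single flash carries at most a few tens of femtojoules, that is, on the order of 10^5 visible photons.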

Spectral measurements have given bubble temperatures in the range from 2300 K to 5100 K, the exact temperatures depending on experimental conditions including the composition of the liquid and gas.[7] Detection of very high bubble temperatures by spectral methods is limited due to the opacity of liquids to short wavelength light characteristic of very high temperatures.

A study describes a method of determining temperatures based on the formation of plasmas. Using argon bubbles in sulfuric acid, the data show the presence of ionized molecular oxygen O2+, sulfur monoxide, and atomic argon populating high-energy excited states, which confirms a hypothesis that the bubbles have a hot plasma core.[8] The ionization and excitation energy of the dioxygenyl cations that the authors observed is 18 electronvolts. From this they conclude that the core temperatures reach at least 20,000 kelvins,[6] hotter than the surface of the Sun.

https://en.wikipedia.org/wiki/Sonoluminescence

Thomson scattering is the elastic scattering of electromagnetic radiation by a free charged particle, as described by classical electromagnetism. It is the low-energy limit of Compton scattering: the particle's kinetic energy and photon frequency do not change as a result of the scattering.[1] This limit is valid as long as the photon energy is much smaller than the mass energy of the particle: $h\nu \ll mc^2$, or equivalently, if the wavelength of the light is much greater than the Compton wavelength of the particle (e.g., for electrons, longer wavelengths than hard x-rays).
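The validity condition can be checked numerically. The sketch below computes the Compton wavelength h/(mc) of the electron and the ratio of photon energy to rest energy, which equals the Compton wavelength divided by the light's wavelength. The function names and the 500 nm example are illustrative assumptions; the constants are standard SI and CODATA values.

```python
PLANCK_H = 6.62607015e-34         # Planck constant in J*s (exact SI value)
LIGHT_C = 299_792_458.0           # speed of light in m/s (exact SI value)
ELECTRON_MASS = 9.1093837015e-31  # electron mass in kg (CODATA 2018)


def compton_wavelength(mass_kg: float) -> float:
    """Compton wavelength h / (m c) in metres."""
    return PLANCK_H / (mass_kg * LIGHT_C)


def energy_ratio(wavelength_m: float, mass_kg: float = ELECTRON_MASS) -> float:
    """Photon energy divided by the particle's rest energy (= lambda_C / lambda)."""
    return compton_wavelength(mass_kg) / wavelength_m


print(f"electron Compton wavelength: {compton_wavelength(ELECTRON_MASS):.4e} m")
# 500 nm visible light is an assumed example; a ratio far below 1 means
# the Thomson (low-energy) limit is a good approximation.
print(f"energy ratio at 500 nm: {energy_ratio(500e-9):.2e}")
```

For visible light scattering off electrons the ratio is of order 10^-6, so the Thomson description applies comfortably; it breaks down as the wavelength approaches the picometre scale.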

| Light–matter interaction |
|---|
| Low-energy phenomena: Photoelectric effect |
| Mid-energy phenomena: Thomson scattering, Compton scattering |
| High-energy phenomena: Pair production, Photodisintegration, Photofission |

https://en.wikipedia.org/wiki/Thomson_scattering

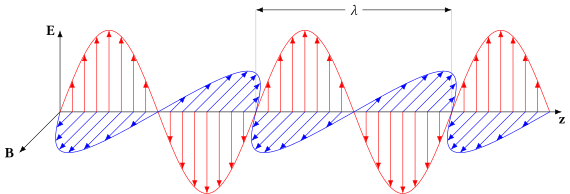

Polarization (also polarisation) is a property of transverse waves which specifies the geometrical orientation of the oscillations.[1][2][3][4][5] In a transverse wave, the direction of the oscillation is perpendicular to the direction of motion of the wave.[4] A simple example of a polarized transverse wave is vibrations traveling along a taut string (see image); for example, in a musical instrument like a guitar string. Depending on how the string is plucked, the vibrations can be in a vertical direction, horizontal direction, or at any angle perpendicular to the string. In contrast, in longitudinal waves, such as sound waves in a liquid or gas, the displacement of the particles in the oscillation is always in the direction of propagation, so these waves do not exhibit polarization. Transverse waves that exhibit polarization include electromagnetic waves such as light and radio waves, gravitational waves,[6] and transverse sound waves (shear waves) in solids.

An electromagnetic wave such as light consists of a coupled oscillating electric field and magnetic field which are always perpendicular to each other; by convention, the "polarization" of electromagnetic waves refers to the direction of the electric field. In linear polarization, the fields oscillate in a single direction. In circular or elliptical polarization, the fields rotate at a constant rate in a plane as the wave travels, either in the right-hand or in the left-hand direction.

Light or other electromagnetic radiation from many sources, such as the sun, flames, and incandescent lamps, consists of short wave trains with an equal mixture of polarizations; this is called unpolarized light. Polarized light can be produced by passing unpolarized light through a polarizer, which allows waves of only one polarization to pass through. The most common optical materials do not affect the polarization of light, but some materials—those that exhibit birefringence, dichroism, or optical activity—affect light differently depending on its polarization. Some of these are used to make polarizing filters. Light also becomes partially polarized when it reflects at an angle from a surface.

According to quantum mechanics, electromagnetic waves can also be viewed as streams of particles called photons. When viewed in this way, the polarization of an electromagnetic wave is determined by a quantum mechanical property of photons called their spin.[7][8] A photon has one of two possible spins: it can either spin in a right hand sense or a left hand sense about its direction of travel. Circularly polarized electromagnetic waves are composed of photons with only one type of spin, either right- or left-hand. Linearly polarized waves consist of photons that are in a superposition of right and left circularly polarized states, with equal amplitude and phases synchronized to give oscillation in a plane.[8]

Polarization is an important parameter in areas of science dealing with transverse waves, such as optics, seismology, radio, and microwaves. Especially impacted are technologies such as lasers, wireless and optical fiber telecommunications, and radar.

Introduction

Wave propagation and polarization

Most sources of light are classified as incoherent and unpolarized (or only "partially polarized") because they consist of a random mixture of waves having different spatial characteristics, frequencies (wavelengths), phases, and polarization states. However, for understanding electromagnetic waves and polarization in particular, it is easier to just consider coherent plane waves; these are sinusoidal waves of one particular direction (or wavevector), frequency, phase, and polarization state. Characterizing an optical system in relation to a plane wave with those given parameters can then be used to predict its response to a more general case, since a wave with any specified spatial structure can be decomposed into a combination of plane waves (its so-called angular spectrum). Incoherent states can be modeled stochastically as a weighted combination of such uncorrelated waves with some distribution of frequencies (its spectrum), phases, and polarizations.

Transverse electromagnetic waves

Electromagnetic waves (such as light), traveling in free space or another homogeneous isotropic non-attenuating medium, are properly described as transverse waves, meaning that a plane wave's electric field vector E and magnetic field H are each in some direction perpendicular to (or "transverse" to) the direction of wave propagation; E and H are also perpendicular to each other. By convention, the "polarization" direction of an electromagnetic wave is given by its electric field vector. Considering a monochromatic plane wave of optical frequency f (light of vacuum wavelength $\lambda_0$ has a frequency of $f = c/\lambda_0$, where c is the speed of light), let us take the direction of propagation as the z axis. Being a transverse wave, the E and H fields must then contain components only in the x and y directions, whereas $E_z = H_z = 0$. Using complex (or phasor) notation, the instantaneous physical electric and magnetic fields are given by the real parts of the complex quantities occurring in the following equations. As a function of time t and spatial position z (since for a plane wave in the +z direction the fields have no dependence on x or y) these complex fields can be written as:

$$\vec{E}(z,t) = \begin{bmatrix} e_x \\ e_y \\ 0 \end{bmatrix} e^{i 2\pi \left( \frac{z}{\lambda} - \frac{t}{T} \right)} = \begin{bmatrix} e_x \\ e_y \\ 0 \end{bmatrix} e^{i(kz - \omega t)}$$

and

$$\vec{H}(z,t) = \begin{bmatrix} h_x \\ h_y \\ 0 \end{bmatrix} e^{i 2\pi \left( \frac{z}{\lambda} - \frac{t}{T} \right)} = \begin{bmatrix} h_x \\ h_y \\ 0 \end{bmatrix} e^{i(kz - \omega t)}$$

where $\lambda = \lambda_0/n$ is the wavelength in the medium (whose refractive index is n) and $T = 1/f$ is the period of the wave. Here $e_x$, $e_y$, $h_x$, and $h_y$ are complex numbers. In the second more compact form, as these equations are customarily expressed, these factors are described using the wavenumber $k = 2\pi n/\lambda_0$ and angular frequency (or "radian frequency") $\omega = 2\pi f$. In a more general formulation with propagation not restricted to the +z direction, the spatial dependence $kz$ is replaced by $\vec{k} \cdot \vec{r}$, where $\vec{k}$ is called the wave vector, the magnitude of which is the wavenumber.

Thus the leading vectors e and h each contain up to two nonzero (complex) components describing the amplitude and phase of the wave's x and y polarization components (again, there can be no z polarization component for a transverse wave in the +z direction). For a given medium with a characteristic impedance $\eta$, h is related to e by:

$$h_y = \frac{e_x}{\eta}$$

and

$$h_x = -\frac{e_y}{\eta}.$$

In a dielectric, $\eta$ is real and has the value $\eta_0/n$, where n is the refractive index and $\eta_0$ is the impedance of free space. The impedance will be complex in a conducting medium. Note that given that relationship, the dot product of E and H must be zero:

$$\vec{E}(z,t) \cdot \vec{H}(z,t) \propto e_x h_x + e_y h_y = e_x \left( -\frac{e_y}{\eta} \right) + e_y \left( \frac{e_x}{\eta} \right) = 0,$$

indicating that these vectors are orthogonal (at right angles to each other), as expected.
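The orthogonality can be checked numerically. The following minimal sketch assumes the standard relation for a wave travelling in the +z direction, h_x = −e_y/η and h_y = e_x/η, with a real impedance η, and evaluates the dot product of the physical (real-part) fields at several phases. The amplitude values and function names are hypothetical choices for illustration.

```python
import cmath

ETA_0 = 376.730313668  # impedance of free space in ohms (approximate value)


def h_from_e(e_x: complex, e_y: complex, eta: float = ETA_0):
    """Return (h_x, h_y) for a +z plane wave, assuming h_x = -e_y/eta, h_y = e_x/eta."""
    return (-e_y / eta, e_x / eta)


def real_fields_dot(e, h, phase: float) -> float:
    """Dot product of the real-part E and H fields at phase = k*z - omega*t."""
    rot = cmath.exp(1j * phase)
    e_real = [(c * rot).real for c in e]
    h_real = [(c * rot).real for c in h]
    return sum(a * b for a, b in zip(e_real, h_real))


# Hypothetical elliptically polarized amplitudes (e_x real, e_y imaginary).
e = (1.0 + 0.0j, 0.5j)
h = h_from_e(*e)
for phase in (0.0, 0.7, 2.0):
    # Each value should be zero up to floating-point rounding.
    print(phase, real_fields_dot(e, h, phase))
```

The check uses the real parts because those are the physical fields; with a complex η, as in a conducting medium, this simple cancellation is not guaranteed by the sketch.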

So knowing the propagation direction (+z in this case) and η, one can just as well specify the wave in terms of just ex and ey describing the electric field. The vector containing ex and ey (but without the z component which is necessarily zero for a transverse wave) is known as a Jones vector. In addition to specifying the polarization state of the wave, a general Jones vector also specifies the overall magnitude and phase of that wave. Specifically, the intensity of the light wave is proportional to the sum of the squared magnitudes of the two electric field components:

$$I = \frac{|e_x|^2 + |e_y|^2}{2\eta}$$

However, the wave's state of polarization depends only on the (complex) ratio of ey to ex. So let us just consider waves for which |ex|² + |ey|² = 1; this happens to correspond to an intensity of about 0.00133 watts per square meter in free space (where η = η0). And since the absolute phase of a wave is unimportant in discussing its polarization state, let us stipulate that the phase of ex is zero; in other words, ex is a real number while ey may be complex. Under these restrictions, ex and ey can be represented as follows:

$$e_x = \sqrt{\frac{1+Q}{2}}, \qquad e_y = \sqrt{\frac{1-Q}{2}}\, e^{i\phi},$$

where the polarization state is now fully parameterized by the value of Q (such that −1 < Q < 1) and the relative phase φ.
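The normalization and the quoted intensity can be reproduced with a short sketch. It assumes the parameterization ex = √((1 + Q)/2), ey = √((1 − Q)/2)·e^{iφ} and the intensity I = (|ex|² + |ey|²)/(2η), with field amplitudes in volts per meter. The function names are hypothetical.

```python
import cmath
import math

ETA0 = 376.730313668  # impedance of free space, ohms


def jones_from_q_phi(q, phi):
    """Normalized (ex, ey) with ex real, for -1 < q < 1 and relative phase phi (radians)."""
    ex = math.sqrt((1 + q) / 2)
    ey = math.sqrt((1 - q) / 2) * cmath.exp(1j * phi)
    return ex, ey


def intensity(e, eta=ETA0):
    """Intensity in W/m^2 for field amplitudes e = (ex, ey) in V/m."""
    return (abs(e[0]) ** 2 + abs(e[1]) ** 2) / (2 * eta)


# Q = 0 with a 90 degree relative phase: equal amplitudes in quadrature (circular).
e = jones_from_q_phi(0.0, math.pi / 2)
norm = abs(e[0]) ** 2 + abs(e[1]) ** 2
I = intensity(e)  # about 0.00133 W/m^2 in free space
```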

Non-transverse waves

In addition to transverse waves, there are many wave motions where the oscillation is not limited to directions perpendicular to the direction of propagation. These cases are far beyond the scope of the current article which concentrates on transverse waves (such as most electromagnetic waves in bulk media), but one should be aware of cases where the polarization of a coherent wave cannot be described simply using a Jones vector, as we have just done.

Just considering electromagnetic waves, we note that the preceding discussion strictly applies to plane waves in a homogeneous isotropic non-attenuating medium, whereas in an anisotropic medium (such as birefringent crystals as discussed below) the electric or magnetic field may have longitudinal as well as transverse components. In those cases the electric displacement D and magnetic flux density B still obey the above geometry but due to anisotropy in the electric susceptibility (or in the magnetic permeability), now given by a tensor, the direction of E (or H) may differ from that of D (or B). Even in isotropic media, so-called inhomogeneous waves can be launched into a medium whose refractive index has a significant imaginary part (or "extinction coefficient") such as metals; these fields are also not strictly transverse.[9]: 179–184 [10]: 51–52 Surface waves or waves propagating in a waveguide (such as an optical fiber) are generally not transverse waves, but might be described as an electric or magnetic transverse mode, or a hybrid mode.

Even in free space, longitudinal field components can be generated in focal regions, where the plane wave approximation breaks down. An extreme example is radially or tangentially polarized light, at the focus of which the electric or magnetic field respectively is entirely longitudinal (along the direction of propagation).[11]

For longitudinal waves such as sound waves in fluids, the direction of oscillation is by definition along the direction of travel, so the issue of polarization is normally not even mentioned. On the other hand, sound waves in a bulk solid can be transverse as well as longitudinal, for a total of three polarization components. In this case, the transverse polarization is associated with the direction of the shear stress and displacement in directions perpendicular to the propagation direction, while the longitudinal polarization describes compression of the solid and vibration along the direction of propagation. The differential propagation of transverse and longitudinal polarizations is important in seismology.

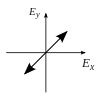

Polarization state

Polarization is best understood by initially considering only pure polarization states, and only a coherent sinusoidal wave at some optical frequency. The vector in the adjacent diagram might describe the oscillation of the electric field emitted by a single-mode laser (whose oscillation frequency would be typically 10¹⁵ times faster). The field oscillates in the x-y plane, along the page, with the wave propagating in the z direction, perpendicular to the page. The first two diagrams below trace the electric field vector over a complete cycle for linear polarization at two different orientations; these are each considered a distinct state of polarization (SOP). Note that the linear polarization at 45° can also be viewed as the addition of a horizontally linearly polarized wave (as in the leftmost figure) and a vertically polarized wave of the same amplitude in the same phase.

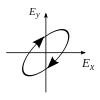

Now if one were to introduce a phase shift between those horizontal and vertical polarization components, one would generally obtain elliptical polarization[12] as is shown in the third figure. When the phase shift is exactly ±90°, circular polarization is produced (fourth and fifth figures). This is how circular polarization is created in practice: one starts with linearly polarized light and employs a quarter-wave plate to introduce such a phase shift. The result of two such phase-shifted components in causing a rotating electric field vector is depicted in the animation on the right. Note that circular or elliptical polarization can involve either a clockwise or counterclockwise rotation of the field. These correspond to distinct polarization states, such as the two circular polarizations shown above.
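The effect of the phase shift can be seen by sampling the real field over one cycle. The sketch below assumes equal-amplitude x and y components, as in the 45° example, with the y component delayed by a phase δ. The function names and the sampling resolution are illustrative.

```python
import math


def field_trace(delta, samples=360):
    """Sample (Ex, Ey) over one cycle for unit-amplitude components, Ey delayed by delta."""
    points = []
    for i in range(samples):
        wt = 2 * math.pi * i / samples
        points.append((math.cos(wt), math.cos(wt - delta)))
    return points


def magnitude_range(points):
    """Smallest and largest field magnitude encountered along the trace."""
    magnitudes = [math.hypot(x, y) for x, y in points]
    return min(magnitudes), max(magnitudes)


linear = magnitude_range(field_trace(0.0))              # field passes through zero
elliptical = magnitude_range(field_trace(math.pi / 4))  # magnitude varies, never zero
circular = magnitude_range(field_trace(math.pi / 2))    # magnitude stays constant
```

A constant magnitude means the tip of the field vector traces a circle. Changing the sign of δ reverses the sense of rotation without changing these magnitudes.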

Of course the orientation of the x and y axes used in this description is arbitrary. Choosing such a coordinate system, and viewing the polarization ellipse in terms of the x and y polarization components, corresponds to the definition of the Jones vector (below) in terms of those basis polarizations. One would typically choose axes to suit a particular problem, such as x being in the plane of incidence. Since there are separate reflection coefficients for the linear polarizations in and orthogonal to the plane of incidence (p and s polarizations, see below), that choice greatly simplifies the calculation of a wave's reflection from a surface.

Moreover, one can use as basis functions any pair of orthogonal polarization states, not just linear polarizations. For instance, choosing right and left circular polarizations as basis functions simplifies the solution of problems involving circular birefringence (optical activity) or circular dichroism.

Polarization ellipse

Consider a purely polarized monochromatic wave. If one were to plot the electric field vector over one cycle of oscillation, an ellipse would generally be obtained, as is shown in the figure, corresponding to a particular state of elliptical polarization. Note that linear polarization and circular polarization can be seen as special cases of elliptical polarization.

A polarization state can then be described in relation to the geometrical parameters of the ellipse, and its "handedness", that is, whether the rotation around the ellipse is clockwise or counterclockwise. One parameterization of the elliptical figure specifies the orientation angle ψ, defined as the angle between the major axis of the ellipse and the x-axis,[13] along with the ellipticity ε = a/b, the ratio of the ellipse's major to minor axis (also known as the axial ratio).[14][15][16] The ellipticity parameter is an alternative parameterization of an ellipse's eccentricity e = √(1 − b²/a²), or the ellipticity angle χ = arctan(b/a) = arctan(1/ε), as is shown in the figure.[13] The angle χ is also significant in that the latitude (angle from the equator) of the polarization state as represented on the Poincaré sphere (see below) is equal to ±2χ. The special cases of linear and circular polarization correspond to an ellipticity ε of infinity and unity (or χ of zero and 45°) respectively.
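The ellipse parameters can be recovered from the complex field components ex and ey. The sketch below uses the standard relations tan 2ψ = 2 Re(ex* ey)/(|ex|² − |ey|²) and sin 2χ = 2 Im(ex* ey)/(|ex|² + |ey|²). The function names are hypothetical, and which handedness the sign of χ indicates depends on the phase convention in use, so the sign is treated here as an assumption.

```python
import math


def ellipse_parameters(ex, ey):
    """Return (psi, chi) in radians for complex field components ex, ey."""
    ex, ey = complex(ex), complex(ey)
    total = abs(ex) ** 2 + abs(ey) ** 2
    difference = abs(ex) ** 2 - abs(ey) ** 2
    cross = ex.conjugate() * ey
    psi = 0.5 * math.atan2(2 * cross.real, difference)  # orientation of the major axis
    ratio = max(-1.0, min(1.0, 2 * cross.imag / total))  # clamp against rounding error
    chi = 0.5 * math.asin(ratio)                          # ellipticity angle, sign = handedness
    return psi, chi


def axial_ratio(chi):
    """Ellipticity epsilon = a/b (major over minor axis); infinite for linear polarization."""
    return math.inf if chi == 0 else 1 / math.tan(abs(chi))


s = 1 / math.sqrt(2)
psi_lin, chi_lin = ellipse_parameters(s, s)          # linear at 45 degrees
psi_circ, chi_circ = ellipse_parameters(s, 1j * s)   # circular
```

For the linear case χ is zero and the axial ratio is infinite. For the circular case |χ| is 45° and the axial ratio is unity, matching the special cases above.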

Jones vector

Full information on a completely polarized state is also provided by the amplitude and phase of oscillations in two components of the electric field vector in the plane of polarization. This representation was used above to show how different states of polarization are possible. The amplitude and phase information can be conveniently represented as a two-dimensional complex vector (the Jones vector):

$$\mathbf{e} = \begin{bmatrix} a_1 e^{i\theta_1} \\ a_2 e^{i\theta_2} \end{bmatrix}.$$

Here a1 and a2 denote the amplitude of the wave in the two components of the electric field vector, while θ1 and θ2 represent the phases. The product of a Jones vector with a complex number of unit modulus gives a different Jones vector representing the same ellipse, and thus the same state of polarization. The physical electric field, as the real part of the Jones vector, would be altered but the polarization state itself is independent of absolute phase. The basis vectors used to represent the Jones vector need not represent linear polarization states (i.e. be real). In general any two orthogonal states can be used, where an orthogonal vector pair is formally defined as one having a zero inner product. A common choice is left and right circular polarizations, for example to model the different propagation of waves in two such components in circularly birefringent media (see below) or signal paths of coherent detectors sensitive to circular polarization.
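Both properties, invariance under a unit-modulus factor and orthogonality as a zero inner product, are easy to check numerically. In this minimal sketch the helper names are hypothetical, and the two circular states are labeled only "plus" and "minus" because which one is called left or right depends on the convention in use.

```python
import cmath
import math


def inner(u, v):
    """Hermitian inner product: sum of conj(u_i) * v_i."""
    return sum(complex(a).conjugate() * complex(b) for a, b in zip(u, v))


def same_state(u, v, tol=1e-12):
    """True if u and v differ only by a complex factor (same polarization ellipse)."""
    return abs(u[0] * v[1] - u[1] * v[0]) < tol


s = 1 / math.sqrt(2)
circ_plus = (s, 1j * s)
circ_minus = (s, -1j * s)

# Multiplying by exp(i * 0.7), a unit-modulus number, leaves the state unchanged.
shifted = tuple(cmath.exp(0.7j) * c for c in circ_plus)

same = same_state(circ_plus, shifted)        # same ellipse, different absolute phase
overlap = inner(circ_plus, circ_minus)       # zero: the two circular states are orthogonal
```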

Coordinate frame

Regardless of whether polarization state is represented using geometric parameters or Jones vectors, implicit in the parameterization is the orientation of the coordinate frame. This permits a degree of freedom, namely rotation about the propagation direction. When considering light that is propagating parallel to the surface of the Earth, the terms "horizontal" and "vertical" polarization are often used, with the former being associated with the first component of the Jones vector, or zero azimuth angle. On the other hand, in astronomy the equatorial coordinate system is generally used instead, with the zero azimuth (or position angle, as it is more commonly called in astronomy to avoid confusion with the horizontal coordinate system) corresponding to due north.
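The rotational degree of freedom can be made concrete by re-expressing one Jones vector in a rotated frame. The sketch below assumes a passive rotation, meaning the axes rotate by the given angle about the propagation direction while the field stays fixed. The function name is illustrative.

```python
import math


def rotate_frame(jones, angle):
    """Components of the same field in axes rotated by `angle` radians about z."""
    ex, ey = jones
    c, s = math.cos(angle), math.sin(angle)
    # Project the field onto the new x' and y' axes.
    return (c * ex + s * ey, -s * ex + c * ey)


s45 = 1 / math.sqrt(2)
diagonal = (s45, s45)                            # linear polarization at 45 degrees
in_rotated = rotate_frame(diagonal, math.pi / 4)  # purely along x' in the rotated frame
```

The total power |ex|² + |ey|² is the same in both frames; only the description of the state changes.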

s and p designations

Another coordinate system frequently used relates to the plane of incidence. This is the plane made by the incoming propagation direction and the vector perpendicular to the plane of an interface, in other words, the plane in which the ray travels before and after reflection or refraction. The component of the electric field parallel to this plane is termed p-like (parallel) and the component perpendicular to this plane is termed s-like (from senkrecht, German for perpendicular). Polarized light with its electric field along the plane of incidence is thus denoted p-polarized, while light whose electric field is normal to the plane of incidence is called s-polarized. P polarization is commonly referred to as transverse-magnetic (TM), and has also been termed pi-polarized or tangential plane polarized. S polarization is also called transverse-electric (TE), as well as sigma-polarized or sagittal plane polarized.
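The decomposition into s and p components can be sketched with plain vector algebra. The code assumes a propagation direction k and an interface normal given as 3-vectors; the sign (direction) chosen for the s and p unit vectors is a convention that varies between texts, and the function names are hypothetical.

```python
import math


def cross(a, b):
    return (a[1] * b[2] - a[2] * b[1], a[2] * b[0] - a[0] * b[2], a[0] * b[1] - a[1] * b[0])


def dot(a, b):
    return sum(x * y for x, y in zip(a, b))


def normalize(a):
    length = math.sqrt(dot(a, a))
    if length == 0:
        raise ValueError("plane of incidence is undefined at normal incidence")
    return tuple(x / length for x in a)


def s_p_basis(k, normal):
    """Unit vectors (s, p): s perpendicular to the plane of incidence, p within it."""
    s = normalize(cross(k, normal))
    p = normalize(cross(s, k))  # perpendicular to both s and the propagation direction
    return s, p


def s_p_components(E, k, normal):
    s, p = s_p_basis(k, normal)
    return dot(E, s), dot(E, p)


# Hypothetical geometry: interface normal along z, ray incident at 60 degrees in the x-z plane.
theta = math.radians(60)
k = (math.sin(theta), 0.0, -math.cos(theta))
normal = (0.0, 0.0, 1.0)
s_vec, p_vec = s_p_basis(k, normal)
Es, Ep = s_p_components((0.0, 2.0, 0.0), k, normal)  # field along y: purely s-polarized
```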

Degree of polarization

Degree of polarization (DOP) is a quantity used to describe the portion of an electromagnetic wave which is polarized. A perfectly polarized wave has a DOP of 100%, whereas an unpolarized wave has a DOP of 0%. A wave which is partially polarized, and therefore can be represented by a superposition of a polarized and unpolarized component, will have a DOP somewhere in between 0 and 100%. DOP is calculated as the fraction of the total power that is carried by the polarized component of the wave.

DOP can be used to map the strain field in materials when considering the DOP of the photoluminescence. The polarization of the photoluminescence is related to the strain in a material by way of the given material's photoelasticity tensor.

DOP is also visualized using the Poincaré sphere representation of a polarized beam. In this representation, DOP is equal to the length of the vector measured from the center of the sphere.
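As a small worked example, the DOP can be computed from the Stokes parameters (S0, S1, S2, S3), where S0 is the total power and the remaining three components form the vector plotted on the Poincaré sphere. The function names and the power values below are illustrative assumptions.

```python
import math


def degree_of_polarization(s0, s1, s2, s3):
    """Length of the Stokes vector (s1, s2, s3) relative to the total power s0."""
    return math.sqrt(s1 ** 2 + s2 ** 2 + s3 ** 2) / s0


def add_unpolarized(polarized_stokes, unpolarized_power):
    """Stokes parameters after adding an unpolarized component of the given power."""
    s0, s1, s2, s3 = polarized_stokes
    # An unpolarized component contributes to the total power only.
    return (s0 + unpolarized_power, s1, s2, s3)


# Hypothetical beam: 3 units of horizontally polarized power plus 1 unit unpolarized.
mixed = add_unpolarized((3.0, 3.0, 0.0, 0.0), 1.0)
dop = degree_of_polarization(*mixed)
```

Here three quarters of the total power is carried by the polarized component, so the computed DOP is 0.75.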

Unpolarized and partially polarized light

Unpolarized light is light with a random, time-varying polarization. Natural light, like most other common sources of visible light, is produced independently by a large number of atoms or molecules whose emissions are uncorrelated. The term "unpolarized" is somewhat inexact, since at any instant of time at one location there is a definite plane of polarization; however, it implies that the polarization changes so quickly in time that it will not be measured or relevant to the outcome of an experiment.

Unpolarized light can be produced from the incoherent combination of vertical and horizontal linearly polarized light, or right- and left-handed circularly polarized light.[17] Conversely, the two constituent linearly polarized states of unpolarized light cannot form an interference pattern, even if rotated into alignment (Fresnel–Arago 3rd law).[18]
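The difference between an incoherent and a coherent combination can be illustrated with the 2×2 coherency matrix J = ⟨e e†⟩, a standard tool that this passage does not itself introduce. For an incoherent mixture the matrices of the constituents add, and the degree of polarization follows from P = √(1 − 4 det J / (tr J)²). The function names are hypothetical.

```python
import math


def coherency(e):
    """Coherency matrix e e^dagger of a single fully polarized wave e = (ex, ey)."""
    ex, ey = complex(e[0]), complex(e[1])
    return [[ex * ex.conjugate(), ex * ey.conjugate()],
            [ey * ex.conjugate(), ey * ey.conjugate()]]


def add(j1, j2):
    """Incoherent superposition: coherency matrices (not fields) add."""
    return [[j1[r][c] + j2[r][c] for c in range(2)] for r in range(2)]


def dop(j):
    trace = (j[0][0] + j[1][1]).real
    det = (j[0][0] * j[1][1] - j[0][1] * j[1][0]).real
    return math.sqrt(max(0.0, 1 - 4 * det / trace ** 2))


s = 1 / math.sqrt(2)
horizontal, vertical = (1, 0), (0, 1)

incoherent_linear = dop(add(coherency(horizontal), coherency(vertical)))       # unpolarized
incoherent_circular = dop(add(coherency((s, 1j * s)), coherency((s, -1j * s))))  # unpolarized
coherent_sum = dop(coherency((1, 1)))  # fields added in phase: fully polarized at 45 degrees
```

Adding the same two linear components coherently, with a fixed phase relation, yields a fully polarized wave instead, which is why the lack of correlation matters.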

A so-called depolarizer acts on a polarized beam to create one in which the polarization varies so rapidly across the beam that it may be ignored in the intended applications. Conversely, a polarizer acts on an unpolarized beam or arbitrarily polarized beam to create one which is polarized.

Unpolarized light can be described as a mixture of two independent oppositely polarized streams, each with half the intensity.[19][20] Light is said to be partially polarized when there is more power in one of these streams than the other. At any particular wavelength, partially polarized light can be statistically described as the superposition of a completely unpolarized component and a completely polarized one.[21]: 346–347 [22]: 330 One may then describe the light in terms of the degree of polarization and the parameters of the polarized component. That polarized component can be described in terms of a Jones vector or polarization ellipse. However, in order to also describe the degree of polarization, one normally employs Stokes parameters to specify a state of partial polarization.[21]: 351, 374–375

Implications for reflection and propagation

Polarization in wave propagation

In a vacuum, the components of the electric field propagate at the speed of light, so that the phase of the wave varies in space and time while the polarization state does not. That is, the electric field vector e of a plane wave in the +z direction follows: