https://en.wikipedia.org/wiki/Rate%E2%80%93distortion_theory

https://en.wikipedia.org/wiki/Entropy_(information_theory)

https://en.wikipedia.org/wiki/Deblocking_filter

https://en.wikipedia.org/wiki/Video_quality

https://en.wikipedia.org/wiki/Video#Characteristics_of_video_streams

https://en.wikipedia.org/wiki/Interlaced_video

https://en.wikipedia.org/wiki/Video_compression_picture_types

https://en.wikipedia.org/wiki/Film_frame

https://en.wikipedia.org/wiki/Display_resolution

https://en.wikipedia.org/wiki/Wavelet_transform

https://en.wikipedia.org/wiki/Image_resolution

https://en.wikipedia.org/wiki/Compression_artifact

https://en.wikipedia.org/wiki/Sampling_(signal_processing)

https://en.wikipedia.org/wiki/Sub-band_coding

https://en.wikipedia.org/wiki/Speech_coding

https://en.wikipedia.org/wiki/Dynamic_range

https://en.wikipedia.org/wiki/Latency_(audio)

https://en.wikipedia.org/wiki/Convolution

https://en.wikipedia.org/wiki/Companding

https://en.wikipedia.org/wiki/Line_spectral_pairs

https://en.wikipedia.org/wiki/Log_area_ratio

https://en.wikipedia.org/wiki/Linear_predictive_coding

https://en.wikipedia.org/wiki/Psychoacoustics

https://en.wikipedia.org/wiki/Motion_estimation

https://en.wikipedia.org/wiki/Byte_pair_encoding

https://en.wikipedia.org/wiki/Unary_coding

https://en.wikipedia.org/wiki/Elias_gamma_coding

https://en.wikipedia.org/wiki/Golomb_coding

https://en.wikipedia.org/wiki/Reference_implementation

https://en.wikipedia.org/wiki/Key_frame

https://en.wikipedia.org/wiki/Global_motion_compensation

https://en.wikipedia.org/wiki/Scan-Line_Interleave

https://en.wikipedia.org/wiki/Intra-frame_coding

https://en.wikipedia.org/wiki/Inter_frame

https://en.wikipedia.org/wiki/Film_frame#Video_frames

https://en.wikipedia.org/wiki/Akinetopsia

https://en.wikipedia.org/wiki/Frame_line

https://en.wikipedia.org/wiki/Fourth_wall

https://en.wikipedia.org/wiki/Magnetic_resonance_imaging

https://en.wikipedia.org/wiki/Freeze-frame_shot

https://en.wikipedia.org/wiki/Screenshot

https://en.wikipedia.org/wiki/Analog_signal

https://en.wikipedia.org/wiki/SMPTE_timecode

Psychoacoustics is the branch of psychophysics involving the scientific study of sound perception and audiology: how the human auditory system perceives various sounds. More specifically, it is the branch of science studying the psychological responses associated with sound (including noise, speech, and music). Psychoacoustics is an interdisciplinary field drawing on many areas, including psychology, acoustics, electronic engineering, physics, biology, physiology, and computer science.[1]

Background

Hearing is not a purely mechanical phenomenon of wave propagation but also a sensory and perceptual event. When a person hears something, it arrives at the ear as a mechanical sound wave traveling through the air, but within the ear it is transformed into neural action potentials. These nerve pulses then travel to the brain, where they are perceived. The outer hair cells (OHC) of the mammalian cochlea enhance the sensitivity and sharpen the frequency resolution of the mechanical response of the cochlear partition. Hence, in many problems in acoustics, such as audio processing, it is advantageous to take into account not just the mechanics of the environment but also the fact that both the ear and the brain are involved in a person's listening experience.

The inner ear, for example, does significant signal processing in converting sound waveforms into neural stimuli, so certain differences between waveforms may be imperceptible.[2] Data compression techniques, such as MP3, make use of this fact.[3] In addition, the ear responds nonlinearly to sounds of different intensity levels; this nonlinear response is perceived as loudness. Telephone networks and audio noise reduction systems make use of this fact by nonlinearly compressing data samples before transmission and then expanding them for playback.[4] Another effect of the ear's nonlinear response is that sounds that are close in frequency produce phantom beat notes, or intermodulation distortion products.[5]
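The compress-then-expand scheme used in telephony is known as companding; North American and Japanese G.711 telephony uses μ-law. The sketch below assumes the continuous μ-law curve with μ = 255 (G.711 itself uses a segmented, piecewise-linear approximation of it) and compares the error of a roughly 8-bit uniform quantizer on a quiet sample with and without companding:

```python
import math

MU = 255.0  # assumption: the mu value used by G.711 mu-law telephony


def mu_law_compress(x: float, mu: float = MU) -> float:
    """Map a sample in [-1, 1] onto [-1, 1] with logarithmic compression."""
    return math.copysign(math.log1p(mu * abs(x)) / math.log1p(mu), x)


def mu_law_expand(y: float, mu: float = MU) -> float:
    """Invert mu_law_compress, restoring the original linear sample."""
    return math.copysign(((1.0 + mu) ** abs(y) - 1.0) / mu, y)


def quantize(v: float, levels: int = 127) -> float:
    """Uniform quantizer with 2*levels + 1 steps over [-1, 1] (about 8 bits)."""
    return round(v * levels) / levels


quiet = 0.001  # a quiet sample, 60 dB below full scale
err_linear = abs(quantize(quiet) - quiet)
err_companded = abs(mu_law_expand(quantize(mu_law_compress(quiet))) - quiet)
print(f"linear: {err_linear:.6f}  companded: {err_companded:.6f}")
```

Without companding the quiet sample rounds to zero; with it, more quantizer levels are spent near zero, so quiet and loud samples get roughly similar relative precision, in line with the ear's roughly logarithmic response to level.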

The term psychoacoustics also arises in discussions about cognitive psychology and the effects that personal expectations, prejudices, and predispositions may have on listeners' relative evaluations and comparisons of sonic aesthetics and acuity, and on their varying judgments of the relative qualities of various musical instruments and performers. The expression that one "hears what one wants (or expects) to hear" may apply in such discussions.

Limits of perception

The human ear can nominally hear sounds in the range 20 Hz (0.02 kHz) to 20,000 Hz (20 kHz). The upper limit tends to decrease with age; most adults are unable to hear above 16 kHz. The lowest frequency that has been identified as a musical tone is 12 Hz under ideal laboratory conditions.[6] Tones between 4 and 16 Hz can be perceived via the body's sense of touch.

Frequency resolution of the ear is about 3.6 Hz within the octave of 1000–2000 Hz. That is, changes in pitch larger than 3.6 Hz can be perceived in a clinical setting.[6] However, even smaller pitch differences can be perceived through other means. For example, the interference of two pitches can often be heard as a repetitive variation in the volume of the tone. This amplitude modulation occurs with a frequency equal to the difference in frequencies of the two tones and is known as beating.
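The beating described above follows from the sum-to-product identity. A short derivation, assuming two pure tones of equal amplitude at frequencies $f_1$ and $f_2$:

```latex
\begin{aligned}
\sin(2\pi f_1 t) + \sin(2\pi f_2 t)
  &= 2\cos\!\big(\pi (f_1 - f_2)\, t\big)\,\sin\!\big(\pi (f_1 + f_2)\, t\big) \\
f_{\text{beat}} &= |f_1 - f_2|
\end{aligned}
```

The sine factor is a tone at the mean frequency $(f_1 + f_2)/2$; the cosine factor is a slow envelope whose magnitude peaks $|f_1 - f_2|$ times per second. For example, tones at 1000 Hz and 1003 Hz are heard as a single tone near 1001.5 Hz whose loudness swells three times per second, even though a 3 Hz difference is below the roughly 3.6 Hz resolution quoted above.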

The semitone scale used in Western musical notation is not a linear frequency scale but logarithmic. Other scales have been derived directly from experiments on human hearing perception, such as the mel scale and Bark scale (these are used in studying perception, but not usually in musical composition), and these are approximately logarithmic in frequency at the high-frequency end, but nearly linear at the low-frequency end.
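Both kinds of scale can be computed directly. A minimal Python sketch, assuming the MIDI convention for note numbers (note 69 = A4 = 440 Hz) and one widely used mel formula, m = 2595·log10(1 + f/700), which is nearly linear well below 700 Hz and nearly logarithmic well above it:

```python
import math


def midi_to_hz(note: int, a4_hz: float = 440.0) -> float:
    """Equal-tempered pitch: each semitone multiplies frequency by 2**(1/12)."""
    return a4_hz * 2.0 ** ((note - 69) / 12.0)  # MIDI note 69 is A4


def hz_to_mel(f_hz: float) -> float:
    """One common mel-scale formula; other published variants exist."""
    return 2595.0 * math.log10(1.0 + f_hz / 700.0)


# Octaves are equal frequency ratios, not equal differences.
print(midi_to_hz(57), midi_to_hz(69), midi_to_hz(81))  # A3, A4, A5

# The same 100 Hz step is a large perceptual step low down, a small one high up.
low_step = hz_to_mel(200.0) - hz_to_mel(100.0)
high_step = hz_to_mel(5100.0) - hz_to_mel(5000.0)
print(f"{low_step:.1f} mel vs {high_step:.1f} mel")
```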

The intensity range of audible sounds is enormous. Human eardrums are sensitive to variations in sound pressure and can detect pressure changes from as small as a few micropascals (μPa) to greater than 100 kPa. For this reason, sound pressure level is also measured logarithmically, with all pressures referenced to 20 μPa (or 1.97385×10⁻¹⁰ atm). The lower limit of audibility is therefore defined as 0 dB, but the upper limit is not as clearly defined; it is better understood as the level at which sound physically harms the ear or risks causing noise-induced hearing loss.
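Because sound pressure is an amplitude and acoustic power scales with its square, sound pressure level uses 20·log10 of the pressure ratio, so a tenfold change in pressure is 20 dB. A minimal Python sketch using the 20 μPa reference from the text; the sample pressures are illustrative:

```python
import math

P_REF = 20e-6  # reference pressure: 20 μPa, the nominal threshold of hearing


def spl_db(p_rms_pa: float) -> float:
    """Sound pressure level in dB SPL for an RMS pressure given in pascals."""
    return 20.0 * math.log10(p_rms_pa / P_REF)


for label, p in [("threshold", 20e-6), ("1 Pa", 1.0), ("100 kPa", 100e3)]:
    print(f"{label:>9}: {spl_db(p):6.1f} dB SPL")
```

The range from a few micropascals to 100 kPa spans close to 194 dB, which is why a logarithmic scale is used.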

A more rigorous exploration of the lower limits of audibility determines that the minimum threshold at which a sound can be heard is frequency dependent. By measuring this minimum intensity for testing tones of various frequencies, a frequency-dependent absolute threshold of hearing (ATH) curve may be derived. Typically, the ear shows a peak of sensitivity (i.e., its lowest ATH) between 1–5 kHz, though the threshold changes with age, with older ears showing decreased sensitivity above 2 kHz.[7]
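In perceptual audio coding the ATH curve is often approximated by a closed-form expression attributed to Terhardt. The sketch below uses that formula as an assumption (it does not come from the text above) and scans the audible range for the most sensitive frequency:

```python
import math


def ath_db_spl(f_hz: float) -> float:
    """Approximate absolute threshold of hearing (dB SPL) at frequency f_hz.

    Terhardt-style approximation, intended for roughly 20 Hz to 20 kHz.
    """
    k = f_hz / 1000.0  # frequency in kHz
    return (3.64 * k ** -0.8
            - 6.5 * math.exp(-0.6 * (k - 3.3) ** 2)
            + 1e-3 * k ** 4)


# Scan the audible range in 10 Hz steps and find the lowest threshold.
freqs = range(20, 20001, 10)
most_sensitive = min(freqs, key=ath_db_spl)
print(f"lowest threshold near {most_sensitive} Hz: "
      f"{ath_db_spl(most_sensitive):.1f} dB SPL")
```

With this approximation the minimum falls near 3.3 kHz, inside the 1 to 5 kHz sensitivity peak described above, and the threshold rises steeply toward both ends of the range.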

The ATH is the lowest of the equal-loudness contours. Equal-loudness contours indicate the sound pressure levels (dB SPL), across the range of audible frequencies, that are perceived as equally loud. Equal-loudness contours were first measured by Fletcher and Munson at Bell Labs in 1933 using pure tones reproduced via headphones, and the data they collected are called Fletcher–Munson curves. Because subjective loudness was difficult to measure, the Fletcher–Munson curves were averaged over many subjects.

Robinson and Dadson refined the process in 1956 to obtain a new set of equal-loudness curves for a frontal sound source measured in an anechoic chamber. The Robinson–Dadson curves were standardized as ISO 226 in 1986. In 2003, ISO 226 was revised using equal-loudness data collected from 12 international studies.
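A-weighting, listed under See also, is a fixed frequency weighting historically derived from an approximately 40-phon equal-loudness contour, so it mimics how the ear discounts low and very high frequencies at moderate levels. The sketch below uses the analytic A-weighting curve as given in IEC 61672-1 (pole frequencies 20.6, 107.7, 737.9 and 12194 Hz); treat it as an illustration rather than a calibrated meter:

```python
import math


def a_weighting_db(f_hz: float) -> float:
    """A-weighting gain in dB at f_hz, using the standard analytic form."""
    f2 = f_hz * f_hz
    ra = (12194.0 ** 2 * f2 * f2) / (
        (f2 + 20.6 ** 2)
        * math.sqrt((f2 + 107.7 ** 2) * (f2 + 737.9 ** 2))
        * (f2 + 12194.0 ** 2)
    )
    return 20.0 * math.log10(ra) + 2.00  # +2.00 dB normalizes to 0 dB at 1 kHz


for f in (50, 100, 1000, 4000, 10000):
    print(f"{f:>6} Hz: {a_weighting_db(f):+6.1f} dB")
```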

Sound localization

Sound localization is the process of determining the location of a sound source. The brain utilizes subtle differences in loudness, tone and timing between the two ears to allow us to localize sound sources.[8] Localization can be described in terms of three-dimensional position: the azimuth or horizontal angle, the zenith or vertical angle, and the distance (for static sounds) or velocity (for moving sounds).[9] Like most four-legged animals, humans are adept at detecting direction in the horizontal plane but less so in the vertical, because the ears are placed symmetrically. Some species of owls have asymmetrically placed ears and can localize sound in all three planes, an adaptation for hunting small mammals in the dark.[10]
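Timing differences between the ears can be estimated with Woodworth's classic spherical-head approximation, ITD ≈ (a/c)(θ + sin θ), where a is the head radius, c the speed of sound and θ the azimuth away from straight ahead. The sketch assumes a = 8.75 cm and c = 343 m/s; real heads deviate from this rigid-sphere idealization:

```python
import math

HEAD_RADIUS_M = 0.0875   # assumption: typical adult head radius
SPEED_OF_SOUND = 343.0   # m/s in air at about 20 °C


def itd_seconds(azimuth_rad: float) -> float:
    """Woodworth spherical-head interaural time difference.

    azimuth_rad is the angle from straight ahead, 0 to pi/2 (one side).
    """
    return HEAD_RADIUS_M / SPEED_OF_SOUND * (azimuth_rad + math.sin(azimuth_rad))


for deg in (0, 15, 45, 90):
    print(f"{deg:>2} deg: {itd_seconds(math.radians(deg)) * 1e6:5.0f} us")
```

The ITD grows from zero straight ahead to roughly 650 μs at the side. In a spherical-head model the ITD depends only on how far the source lies to one side, so sources at different elevations can produce the same value, one reason vertical localization is harder with symmetrically placed ears.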

Masking effects

Suppose a listener can hear a given acoustic signal under silent conditions. When the signal is played together with another sound (a masker), it has to be stronger for the listener to hear it. The masker does not need to contain the frequency components of the original signal for masking to happen, and a signal can still be audible even when it is weaker than the masker. Masking happens when a signal and a masker are played together (for instance, when one person whispers while another shouts) and the listener does not hear the weaker signal because it has been masked by the louder masker. Masking can also affect a signal that occurs just before a masker starts (backward masking) or just after it stops (forward masking). For example, a single sudden loud clap can make inaudible the sounds that immediately precede or follow it. Backward masking is weaker than forward masking. The masking effect has been widely studied in psychoacoustical research. By varying the masker and measuring the level at which it just masks a fixed signal, one can plot a psychophysical tuning curve, which shows features similar to the frequency tuning of auditory nerve fibers. Masking effects are also used in lossy audio encoding, such as MP3.
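Simultaneous masking is often modeled with a spreading function on a critical-band (Bark) scale: a masker raises the hearing threshold most at its own frequency, and the effect decays more slowly toward higher frequencies than toward lower ones (the upward spread of masking). The toy sketch below uses the Zwicker–Terhardt Bark approximation and assumed slopes of 27 dB/Bark below and 10 dB/Bark above the masker, with a 10 dB offset; these numbers are illustrative, not taken from any particular codec:

```python
import math


def hz_to_bark(f_hz: float) -> float:
    """Zwicker-Terhardt approximation of the Bark critical-band scale."""
    return 13.0 * math.atan(0.00076 * f_hz) + 3.5 * math.atan((f_hz / 7500.0) ** 2)


# Assumed toy spreading-function parameters (dB and dB per Bark).
OFFSET_DB = 10.0    # threshold sits this far below the masker at its own frequency
SLOPE_BELOW = 27.0  # masking falls off steeply toward lower frequencies
SLOPE_ABOVE = 10.0  # and more gently toward higher frequencies


def masked_threshold_db(masker_hz: float, masker_db: float, probe_hz: float) -> float:
    """Threshold (dB) that a single masker imposes at probe_hz."""
    dz = hz_to_bark(probe_hz) - hz_to_bark(masker_hz)
    slope = SLOPE_ABOVE if dz >= 0 else SLOPE_BELOW
    return masker_db - OFFSET_DB - slope * abs(dz)


def is_masked(masker_hz: float, masker_db: float, probe_hz: float, probe_db: float) -> bool:
    return probe_db < masked_threshold_db(masker_hz, masker_db, probe_hz)


for probe_hz in (900, 1100, 4000):
    print(probe_hz, "Hz at 50 dB masked by 1 kHz at 70 dB:",
          is_masked(1000, 70, probe_hz, 50))
```

With a 70 dB masker at 1 kHz, a 50 dB probe at 1.1 kHz falls under the threshold while the same probe at 900 Hz does not, reflecting the asymmetry.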

Missing fundamental

When presented with a harmonic series of frequencies in the relationship 2f, 3f, 4f, 5f, etc. (where f is a specific frequency), humans tend to perceive that the pitch is f. An audible example can be found on YouTube.[11]
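The effect can be illustrated numerically. The sketch below synthesizes partials at 2f to 5f with no energy at f itself, then applies a simple autocorrelation pitch estimator (a standard signal-processing technique swapped in for illustration, not a model of the ear). The values f = 200 Hz, an 8 kHz sample rate, and the 50 to 500 Hz search range are assumptions:

```python
import math


def harmonic_complex(f0, harmonics, fs, n):
    """Sum of unit-amplitude sine partials at h*f0 for each h in harmonics."""
    return [sum(math.sin(2 * math.pi * h * f0 * i / fs) for h in harmonics)
            for i in range(n)]


def autocorr_pitch(x, fs, fmin=50.0, fmax=500.0):
    """Estimate pitch as fs / lag of the strongest autocorrelation peak."""
    min_lag, max_lag = int(fs / fmax), int(fs / fmin)
    best_lag, best_r = min_lag, float("-inf")
    for lag in range(min_lag, max_lag + 1):
        r = sum(x[i] * x[i + lag] for i in range(len(x) - lag))
        if r > best_r:
            best_lag, best_r = lag, r
    return fs / best_lag


FS = 8000
signal = harmonic_complex(200.0, [2, 3, 4, 5], FS, 2000)  # partials 400 to 1000 Hz only
print(autocorr_pitch(signal, FS))
```

The estimator reports 200 Hz because the summed waveform repeats every 1/f seconds, even though no 200 Hz component is present.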

Software

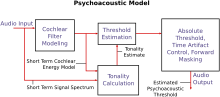

The psychoacoustic model provides for high quality lossy signal compression by describing which parts of a given digital audio signal can be removed (or aggressively compressed) safely—that is, without significant losses in the (consciously) perceived quality of the sound.

It can explain how a sharp clap of the hands might seem painfully loud in a quiet library but is hardly noticeable after a car backfires on a busy, urban street. Exploiting such effects greatly improves the overall compression ratio: psychoacoustic analysis routinely yields compressed music files one-tenth to one-twelfth the size of high-quality masters, with a perceived quality loss far smaller than that size reduction would suggest. Such compression is a feature of nearly all modern lossy audio compression formats, including Dolby Digital (AC-3), MP3, Opus, Ogg Vorbis, AAC, WMA, MPEG-1 Layer II (used for digital audio broadcasting in several countries) and ATRAC, the compression used in MiniDisc and some Walkman models.
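The one-tenth to one-twelfth figure is easy to sanity-check with bit-rate arithmetic. The sketch assumes a CD-quality master (44.1 kHz, 16-bit, stereo PCM) and a typical 128 kbit/s lossy encode; both are illustrative choices rather than figures from the text:

```python
def pcm_bitrate(sample_rate_hz: int, bits_per_sample: int, channels: int) -> int:
    """Bit rate of uncompressed linear PCM in bits per second."""
    return sample_rate_hz * bits_per_sample * channels


cd_bps = pcm_bitrate(44_100, 16, 2)  # assumed master: CD-quality stereo
ratio_128k = cd_bps / 128_000        # assumed lossy encode at 128 kbit/s
print(f"CD master: {cd_bps / 1000:.1f} kbit/s; "
      f"a 128 kbit/s file is 1/{ratio_128k:.1f} of that")
for fraction in (10, 12):
    print(f"1/{fraction} of the master = {cd_bps / fraction / 1000:.1f} kbit/s")
```

A 128 kbit/s file comes out at about 1/11 of the master's bit rate, inside the quoted range.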

Psychoacoustics is based heavily on human anatomy, especially the ear's limitations in perceiving sound as outlined previously. To summarize, these limitations are:

- High-frequency limit

- Absolute threshold of hearing

- Temporal masking (forward masking, backward masking)

- Simultaneous masking (also known as spectral masking)

A compression algorithm can assign a lower priority to sounds outside the range of human hearing or masked by louder sounds. By carefully shifting bits away from the unimportant components and toward the important ones, the algorithm ensures that the sounds a listener is most likely to perceive are represented most accurately.
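Shifting bits toward perceptually important components is often done with a greedy loop over frequency bands. The sketch below is a simplified illustration in the spirit of MPEG-1 Layer I/II bit allocation, not any encoder's actual code; the per-band signal-to-mask ratios (SMRs) and the rule of thumb that each bit buys about 6.02 dB of SNR are assumptions:

```python
DB_PER_BIT = 6.02  # rule of thumb: each quantizer bit adds about 6 dB of SNR


def allocate_bits(smr_db, total_bits, max_bits=16):
    """Greedy allocation: give each bit to the band whose noise is most audible.

    smr_db[k] is the signal-to-mask ratio of band k in dB. With b bits the
    quantization noise sits about SMR - 6.02*b dB above the masking threshold
    (the noise-to-mask ratio, NMR); bands with NMR <= 0 are already inaudible.
    """
    bits = [0] * len(smr_db)
    for _ in range(total_bits):
        nmr = [s - DB_PER_BIT * b if b < max_bits else float("-inf")
               for s, b in zip(smr_db, bits)]
        worst = max(range(len(nmr)), key=nmr.__getitem__)
        if nmr[worst] <= 0.0:
            break  # remaining noise is masked everywhere; stop spending bits
        bits[worst] += 1
    return bits


print(allocate_bits([20.0, 5.0, -10.0], total_bits=10))  # [4, 1, 0]
```

A band whose SMR is already negative (its content lies below the masking threshold) never receives bits, which is the "lower priority" behavior described above.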

Music

Psychoacoustics includes topics and studies that are relevant to music psychology and music therapy. Theorists such as Benjamin Boretz consider some of the results of psychoacoustics to be meaningful only in a musical context.[12]

Irv Teibel's Environments series LPs (1969–79) are an early example of commercially available sounds released expressly for enhancing psychological abilities.[13]

Applied psychoacoustics

Psychoacoustics has long enjoyed a symbiotic relationship with computer science. Internet pioneers J. C. R. Licklider and Bob Taylor both completed graduate-level work in psychoacoustics, while BBN Technologies originally specialized in consulting on acoustics issues before it began building the first packet-switched network.

Licklider wrote a paper entitled "A duplex theory of pitch perception".[14]

Psychoacoustics is applied within many fields of software development, where developers apply proven and experimental mathematical models of perception in digital signal processing. Many audio compression codecs such as MP3 and Opus use a psychoacoustic model to increase compression ratios. The success of conventional audio systems for the reproduction of music in theatres and homes can be attributed to psychoacoustics,[15] and psychoacoustic considerations gave rise to novel audio systems, such as psychoacoustic sound field synthesis.[16] Furthermore, scientists have experimented, with limited success, with creating new acoustic weapons that emit frequencies that may impair, harm, or kill.[17] Psychoacoustics is also leveraged in sonification to make multiple independent data dimensions audible and easily interpretable.[18] This enables auditory guidance without the need for spatial audio, and it is used in sonification computer games[19] and other applications, such as drone flying and image-guided surgery.[20] It is also applied today within music, where musicians and artists continue to create new auditory experiences by masking unwanted frequencies of instruments, causing other frequencies to be enhanced. Yet another application is in the design of small or lower-quality loudspeakers, which can use the phenomenon of missing fundamentals to give the effect of bass notes at lower frequencies than the loudspeakers are physically able to produce (see references).

Automobile manufacturers engineer their engines and even doors to have a certain sound.[21]

See also

Related fields

Psychoacoustic topics

- A-weighting, a commonly used perceptual loudness transfer function

- ABX test

- Auditory illusions

- Auditory scene analysis, including 3D-sound perception and localization

- Binaural beats

- Blind signal separation

- Combination tone (also Tartini tone)

- Deutsch's Scale illusion

- Equivalent rectangular bandwidth (ERB)

- Franssen effect

- Glissando illusion

- Hypersonic effect

- Language processing

- Levitin effect

- Misophonia

- Musical tuning

- Noise health effects

- Octave illusion

- Pitch (music)

- Precedence effect

- Psycholinguistics

- Rate-distortion theory

- Sound localization

- Sound of fingernails scraping chalkboard

- Sound masking

- Speech perception

- Speech recognition

- Timbre

- Tritone paradox

References

Notes

- Tarmy, James (5 August 2014). "Mercedes Doors Have a Signature Sound: Here's How". Bloomberg Business. Retrieved 10 August 2020.

Sources

- Larsen E.; Aarts R.M. (2004). Audio Bandwidth Extension: Application of Psychoacoustics, Signal Processing and Loudspeaker Design. J. Wiley.

- Larsen E.; Aarts R.M. (March 2002). "Reproducing Low-pitched Signals through Small Loudspeakers". Journal of the Audio Engineering Society. 50 (3): 147–64.

- Oohashi T.; Kawai N.; Nishina E.; Honda M.; Yagi R.; Nakamura S.; Morimoto M.; Maekawa T.; Yonekura Y.; Shibasaki H. (February 2006). "The Role of Biological System other Than Auditory Air-conduction in the Emergence of the Hypersonic Effect". Brain Research. 1073–1074: 339–347. doi:10.1016/j.brainres.2005.12.096. PMID 16458271.

External links

- The Musical Ear—Perception of Sound Archived 2005-12-25 at the Wayback Machine

- Müller C, Schnider P, Persterer A, Opitz M, Nefjodova MV, Berger M (1993). "[Applied psychoacoustics in space flight]". Wien Med Wochenschr (in German). 143 (23–24): 633–5. PMID 8178525.—Simulation of Free-field Hearing by Head Phones

- GPSYCHO—An Open-source Psycho-Acoustic and Noise-Shaping Model for ISO-Based MP3 Encoders.

- Definition of: perceptual audio coding

- Java applet demonstrating masking

- Temporal Masking

- HyperPhysics Concepts—Sound and Hearing

- The MP3 as Standard Object

https://en.wikipedia.org/wiki/Category:Psychoacoustics

https://en.wikipedia.org/wiki/Institute_of_Higher_Nervous_Activity_and_Neurophysiology

https://en.wikipedia.org/wiki/Recall_(memory)

https://en.wikipedia.org/wiki/Amodal_perception

https://en.wikipedia.org/wiki/Illusory_contours

https://en.wikipedia.org/wiki/Optical_illusion

https://en.wikipedia.org/wiki/Motion_aftereffect

https://en.wikipedia.org/wiki/Auditory_illusion

https://en.wikipedia.org/wiki/Sound_recording_and_reproduction

https://en.wikipedia.org/wiki/Atmospheric_pressure

https://en.wikipedia.org/wiki/Program_(machine)

https://en.wikipedia.org/wiki/Music_roll

https://en.wikipedia.org/wiki/Pneumatics

https://en.wikipedia.org/wiki/Hydraulic_cylinder

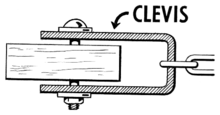

A clevis fastener is a two-piece fastener system consisting of a clevis and a clevis pin head. The clevis is a U-shaped piece that has holes at the end of the prongs to accept the clevis pin. The clevis pin is similar to a bolt, but is either partially threaded or unthreaded with a cross-hole for a split pin. A tang is a piece that is sometimes fitted in the space within the clevis and is held in place by the clevis pin.[1] The combination of a simple clevis fitted with a pin is commonly called a shackle, although a clevis and pin is only one of the many forms a shackle may take.

Clevises are used in a wide variety of fasteners for farming equipment and sailboat rigging, as well as in the automotive, aircraft and construction industries. They are also widely used to attach control surfaces and other accessories to servo controls in airworthy model aircraft. As part of a fastener, a clevis allows rotation about some axes while restricting rotation about others.

https://en.wikipedia.org/wiki/Clevis_fastener

https://en.wikipedia.org/wiki/Split_pin

https://en.wikipedia.org/wiki/Failure_rate

https://en.wikipedia.org/wiki/Shackle#Twist_shackle

https://en.wikipedia.org/wiki/Shear_force

https://en.wikipedia.org/wiki/Compression_(physics)

https://en.wikipedia.org/wiki/Sound#Waves

https://en.wikipedia.org/wiki/Action_potential

https://en.wikipedia.org/wiki/Telephone_network

https://en.wikipedia.org/wiki/Cochlea

https://en.wikipedia.org/wiki/Auditory_masking#Temporal_masking

https://en.wikipedia.org/wiki/Hearing_range

https://en.wikipedia.org/wiki/Lossy_compression

https://en.wikipedia.org/wiki/Missing_fundamental

https://en.wikipedia.org/wiki/Backward_masking

https://en.wikipedia.org/wiki/Auditory_masking

https://en.wikipedia.org/wiki/Anechoic_chamber

https://en.wikipedia.org/wiki/Absolute_threshold_of_hearing

https://en.wikipedia.org/wiki/Tritone_paradox

https://en.wikipedia.org/wiki/Sound_masking

https://en.wikipedia.org/wiki/Sound_localization

https://en.wikipedia.org/wiki/Psycholinguistics

https://en.wikipedia.org/wiki/Precedence_effect

https://en.wikipedia.org/wiki/Musical_tuning

https://en.wikipedia.org/wiki/Misophonia

https://en.wikipedia.org/wiki/Equivalent_rectangular_bandwidth

https://en.wikipedia.org/wiki/Signal_separation

https://en.wikipedia.org/wiki/Auditory_scene_analysis

https://en.wikipedia.org/wiki/Wave_field_synthesis

https://en.wikipedia.org/wiki/Image-guided_surgery

https://en.wikipedia.org/wiki/Microsoft_HoloLens

https://en.wikipedia.org/wiki/Endovenous_laser_treatment

https://en.wikipedia.org/wiki/Category:Laser_medicine

https://en.wikipedia.org/wiki/Data_sonification

In 1913, Dr. Edmund Fournier d'Albe of the University of Birmingham invented the optophone, which used selenium photosensors to detect black print and convert it into an audible output.[4] A blind reader could hold a book up to the device and hold an apparatus to the area she wanted to read. The optophone played a set group of notes: g c' d' e' g' b' c'' e''. Each note corresponded to a position on the optophone's reading area, and that note was silenced if black ink was sensed. Thus, the missing notes indicated the positions of black ink on the page and could be used to read.
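The optophone's reading rule (one note per sensor position, silenced wherever ink is detected) amounts to a simple mapping. A hypothetical Python sketch: the list-of-booleans representation and the position-to-note order are assumptions, and the final two notes are written as c'' and e'', continuing the ascending sequence:

```python
# Hypothetical ordering: index 0 is one end of the reading area, index 7 the other.
OPTOPHONE_NOTES = ["g", "c'", "d'", "e'", "g'", "b'", "c''", "e''"]


def audible_notes(ink_column):
    """Return the notes that still sound for one vertical slice of the page.

    ink_column[i] is True when the photosensor for position i sees black ink;
    a note is silenced wherever ink is present.
    """
    if len(ink_column) != len(OPTOPHONE_NOTES):
        raise ValueError("expected one reading per note position")
    return [note for note, ink in zip(OPTOPHONE_NOTES, ink_column) if not ink]


# Ink at both ends of the slice silences the outermost notes.
print(audible_notes([True, False, False, False, False, False, False, True]))
```

Reading then consists of listening to which notes drop out as the reading area moves along a line of print.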

https://en.wikipedia.org/wiki/Sonification

https://en.wikipedia.org/wiki/Optophone

https://en.wikipedia.org/wiki/Category:Auditory_displays

https://en.wikipedia.org/wiki/Category:User_interface_techniques

https://en.wikipedia.org/wiki/Category:Display_technology

https://en.wikipedia.org/wiki/Category:Flexible_displays

https://en.wikipedia.org/wiki/Category:Vacuum_tube_displays

https://en.wikipedia.org/wiki/RCA_Dimensia

https://en.wikipedia.org/wiki/Magic_eye_tube

https://en.wikipedia.org/wiki/Optoelectronics

https://en.wikipedia.org/wiki/Category:Multimodal_interaction

https://en.wikipedia.org/wiki/Sonar

https://en.wikipedia.org/wiki/Auditory_display

https://en.wikipedia.org/wiki/Architectural_acoustics

https://en.wikipedia.org/wiki/Monochord

https://en.wikipedia.org/wiki/Graduation_(scale)

https://en.wikipedia.org/wiki/Clock_face

https://en.wikipedia.org/wiki/Armillary_sphere

https://en.wikipedia.org/wiki/Resonator

https://en.wikipedia.org/wiki/Endpin

https://en.wikipedia.org/wiki/Tuning_mechanisms_for_stringed_instruments

https://en.wikipedia.org/wiki/Forth_Dimension_Displays

https://en.wikipedia.org/wiki/Grating_light_valve

https://en.wikipedia.org/wiki/Monochrome

https://en.wikipedia.org/wiki/Nine-segment_display

https://en.wikipedia.org/wiki/Open_Pluggable_Specification

https://en.wikipedia.org/wiki/Fog_display

https://en.wikipedia.org/wiki/Focus-plus-context_screen

https://en.wikipedia.org/wiki/Flight_instruments

https://en.wikipedia.org/wiki/Flicker-free

https://en.wikipedia.org/wiki/Flicker_(screen)

https://en.wikipedia.org/wiki/Flexible_organic_light-emitting_diode

https://en.wikipedia.org/wiki/Film-type_patterned_retarder

https://en.wikipedia.org/wiki/Fiber-optic_display

https://en.wikipedia.org/wiki/Ferroelectric_liquid_crystal_display

https://en.wikipedia.org/wiki/Electrowetting

https://en.wikipedia.org/wiki/Electronic_paper

https://en.wikipedia.org/wiki/Electronic_flight_instrument_system

https://en.wikipedia.org/wiki/Electroluminescent_wire

https://en.wikipedia.org/wiki/Electrofluidic_display_technology

https://en.wikipedia.org/wiki/EBeam

https://en.wikipedia.org/wiki/Digital_paper

https://en.wikipedia.org/wiki/Crystal_LED

https://en.wikipedia.org/wiki/Comparison_of_CRT,_LCD,_plasma,_and_OLED_displays

https://en.wikipedia.org/wiki/Burst_dimming

https://en.wikipedia.org/wiki/Broadcast_reference_monitor

https://en.wikipedia.org/wiki/Lower_third

https://en.wikipedia.org/wiki/Contrast_ratio#Dynamic_contrast_(DC)

https://en.wikipedia.org/wiki/Deinterlacing

https://en.wikipedia.org/wiki/Video_post-processing

https://en.wikipedia.org/wiki/Image_scaling

https://en.wikipedia.org/wiki/Video_scaler

https://en.wikipedia.org/wiki/Blue_only_mode

https://en.wikipedia.org/wiki/Black_Widow_(paint_mix)

https://en.wikipedia.org/wiki/Black_level

https://en.wikipedia.org/wiki/Autostereoscopy

https://en.wikipedia.org/wiki/Audification

https://en.wikipedia.org/wiki/Alternate_lighting_of_surfaces

https://en.wikipedia.org/wiki/Active_matrix

https://en.wikipedia.org/wiki/3D_display

https://en.wikipedia.org/wiki/Gain_(projection_screens)

https://en.wikipedia.org/wiki/Holographic_screen

https://en.wikipedia.org/wiki/Laser_TV

https://en.wikipedia.org/wiki/LCD_crosstalk

https://en.wikipedia.org/wiki/Light-emitting_diode

https://en.wikipedia.org/wiki/Light-emitting_electrochemical_cell

https://en.wikipedia.org/wiki/Liquid_vapor_display

https://en.wikipedia.org/wiki/Liquid_crystal_on_silicon

https://en.wikipedia.org/wiki/Magic_lantern

https://en.wikipedia.org/wiki/List_of_glossy_display_branding_manufacturers

https://en.wikipedia.org/wiki/Matte_display

https://en.wikipedia.org/wiki/MicroLED

https://en.wikipedia.org/wiki/MicroTiles

https://en.wikipedia.org/wiki/Monitor_filter

https://en.wikipedia.org/wiki/Monoscope

https://en.wikipedia.org/wiki/Moving_panorama

https://en.wikipedia.org/wiki/Nanotextured_surface

https://en.wikipedia.org/wiki/Time-multiplexed_optical_shutter

https://en.wikipedia.org/wiki/Transflective_liquid-crystal_display

https://en.wikipedia.org/wiki/Electroluminescence#Thick-film_dielectric_electroluminescent_technology

https://en.wikipedia.org/wiki/Surface-conduction_electron-emitter_display

https://en.wikipedia.org/wiki/Spatial_light_modulator

https://en.wikipedia.org/wiki/Silk_screen_effect

https://en.wikipedia.org/wiki/See-through_display

https://en.wikipedia.org/wiki/Photographophone

https://en.wikipedia.org/wiki/Phosphorescent_organic_light-emitting_diode

https://en.wikipedia.org/wiki/Phased-array_optics

https://en.wikipedia.org/wiki/Display_lag

https://en.wikipedia.org/wiki/Response_time_(technology)#Display_technologies

https://en.wikipedia.org/wiki/Display_motion_blur

https://en.wikipedia.org/wiki/Interrupt_latency

https://en.wikipedia.org/wiki/Interrupt#Masking

https://en.wikipedia.org/wiki/Erratum#CPU_logic

https://en.wikipedia.org/wiki/Glitch

https://en.wikipedia.org/wiki/Crosstalk

https://en.wikipedia.org/wiki/Transmission_system

Some transmission systems contain multipliers, which amplify a signal prior to re-transmission, or regenerators, which attempt to reconstruct and re-shape the coded message before re-transmission.

One of the most widely used transmission system technologies in the Internet and the Public switched telephone network (PSTN) is synchronous optical networking (SONET).

A transmission system can also be understood as the medium through which data is transmitted from one point to another. Examples of common transmission systems people use every day are the Internet, mobile networks, cordless cables, etc.

Digital transmission system

The ITU defines a digital transmission system as a system that uses digital signals to transmit information. In a digital transmission system, the data is first converted into a digital format and then transmitted over a communication channel. The digital format provides a number of benefits over analog transmission systems, including improved signal quality, reduced noise and interference, and increased data accuracy.

The ITU defines a digital transmission system (DTS) as follows:

The ITU sets global standards for digital transmission systems, including the encoding and decoding methods used, the data rates and transmission speeds, and the types of communication channels used. These standards ensure that digital transmission systems are compatible and interoperable with each other, regardless of the type of data being transmitted or the geographical location of the sender and receiver.

https://en.wikipedia.org/wiki/Synchronous_optical_networking

https://en.wikipedia.org/wiki/Synchronization

https://en.wikipedia.org/wiki/Time_and_frequency_transfer

https://en.wikipedia.org/wiki/Nanosecond

https://en.wikipedia.org/wiki/Time_ball

https://en.wikipedia.org/wiki/Marine_chronometer

https://en.wikipedia.org/wiki/Local_mean_time

https://en.wikipedia.org/wiki/Railway_time

https://en.wikipedia.org/wiki/Timing_synchronization_function

https://en.wikipedia.org/wiki/Service_set_(802.11_network)#Basic_service_set_types

https://en.wikipedia.org/wiki/Interlocking

https://en.wikipedia.org/wiki/Synchronization_gear

https://en.wikipedia.org/wiki/Tractor_configuration

https://en.wikipedia.org/wiki/Pusher_configuration

https://en.wikipedia.org/wiki/Reciprocal_socialization

https://en.wikipedia.org/wiki/Phase_synchronization

https://en.wikipedia.org/wiki/Phase-locked_loop

https://en.wikipedia.org/wiki/Double-ended_synchronization

https://en.wikipedia.org/wiki/Telephone_exchange

For two connected exchanges in a communications network, a double-ended synchronization (also called double-ended control) is a synchronization control scheme in which the phase error signals used to control the clock at one telephone exchange are derived by comparison with the phase of the incoming digital signal and the phase of the internal clocks at both exchanges.

https://en.wikipedia.org/wiki/Data_synchronization

https://en.wikipedia.org/wiki/Error_correction_code

https://en.wikipedia.org/wiki/Optical_interleaver

https://en.wikipedia.org/wiki/Clock_synchronization

https://en.wikipedia.org/wiki/Clock_network

https://en.wikipedia.org/wiki/Master_clock

https://en.wikipedia.org/wiki/Escapement

The verge (or crown wheel) escapement is the earliest known type of mechanical escapement, the mechanism in a mechanical clock that controls its rate by allowing the gear train to advance at regular intervals or 'ticks'. Verge escapements were used from the late 13th century until the mid 19th century in clocks and pocket watches. The name verge comes from the Latin virga, meaning stick or rod.[1]

Its invention is important in the history of technology, because it made possible the development of all-mechanical clocks. This caused a shift from measuring time by continuous processes, such as the flow of liquid in water clocks, to repetitive, oscillatory processes, such as the swing of pendulums, which had the potential to be more accurate.[2][3] Oscillating timekeepers are used in all modern timepieces.

https://en.wikipedia.org/wiki/Verge_escapement

https://en.wikipedia.org/wiki/Plesiochronous_system

https://en.wikipedia.org/wiki/Plesiochronous_digital_hierarchy

https://en.wikipedia.org/wiki/Flash_synchronization

https://en.wikipedia.org/wiki/Photographic_film

https://en.wikipedia.org/wiki/Synchronization_(alternating_current)

https://en.wikipedia.org/wiki/Telephony#Digital_telephony

https://en.wikipedia.org/wiki/3D_reconstruction

https://en.wikipedia.org/wiki/Binding_problem

https://en.wikipedia.org/wiki/Self-oscillation

https://en.wikipedia.org/wiki/Synchronization_of_chaos

https://en.wikipedia.org/wiki/Frame_synchronization_(video)

https://en.wikipedia.org/wiki/Neural_oscillation

https://en.wikipedia.org/wiki/Synchronous_circuit

https://en.wikipedia.org/wiki/Clock_signal

https://en.wikipedia.org/wiki/Network_Time_Protocol

https://en.wikipedia.org/wiki/Race_condition

https://en.wikipedia.org/wiki/Thread_(computing)

https://en.wikipedia.org/wiki/Parallel_computing
