https://www.news-medical.net/health/Growing-an-eye-for-transplantation-potentials-and-pitfalls.aspx

https://www.sciencedirect.com/science/article/pii/S1818087621000337?utm_source=TrendMD&utm_medium=cpc&utm_campaign=_Asian_Journal_of_Pharmaceutical_Sciences_TrendMD_1

https://www.livescience.com/brain-organoid-optic-eyes.html

https://pubmed.ncbi.nlm.nih.gov/2271446/

https://abcnews.go.com/Technology/story?id=120075&page=1

https://www.nytimes.com/2020/06/29/science/flatworms-eyes-regeneration.html

https://neurosciencenews.com/retina-cells-in-dish-genetics-2081/

An image sensor or imager is a sensor that detects and conveys information used to form an image. It does so by converting the variable attenuation of light waves (as they pass through or reflect off objects) into signals, small bursts of current that convey the information. The waves can be light or other electromagnetic radiation. Image sensors are used in electronic imaging devices of both analog and digital types, which include digital cameras, camera modules, camera phones, optical mouse devices,[1][2][3] medical imaging equipment, night vision equipment such as thermal imaging devices, radar, sonar, and others. As technology changes, electronic and digital imaging tends to replace chemical and analog imaging.

The two main types of electronic image sensors are the charge-coupled device (CCD) and the active-pixel sensor (CMOS sensor). Both CCD and CMOS sensors are based on metal–oxide–semiconductor (MOS) technology, with CCDs based on MOS capacitors and CMOS sensors based on MOSFET (MOS field-effect transistor) amplifiers. Analog sensors for invisible radiation tend to involve vacuum tubes of various kinds, while digital sensors include flat-panel detectors.

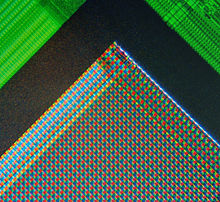

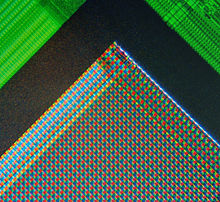

A micrograph of the corner of the photosensor array of a webcam digital camera

https://en.wikipedia.org/wiki/Image_sensor

Photodetectors, also called photosensors, are sensors of light or other electromagnetic radiation.[1] There is a wide variety of photodetectors, which may be classified by mechanism of detection, such as photoelectric or photochemical effects, or by various performance metrics, such as spectral response. Semiconductor-based photodetectors typically have a p–n junction that converts photons into current. The absorbed photons create electron–hole pairs in the depletion region. Photodiodes and phototransistors are a few examples of photodetectors. Solar cells convert some of the absorbed light energy into electrical energy.

A photodetector salvaged from a CD-ROM drive. The photodetector contains three photodiodes, visible in the photo (in center).

https://en.wikipedia.org/wiki/Photodetector

A photon (from Ancient Greek φῶς, φωτός (phôs, phōtós) 'light') is an elementary particle that is a quantum of the electromagnetic field, including electromagnetic radiation such as light and radio waves, and the force carrier for the electromagnetic force. Photons are massless,[a] so they always move at the speed of light in vacuum, 299792458 m/s (or about 186,282 mi/s). The photon belongs to the class of boson particles.

As with other elementary particles, photons are best explained by quantum mechanics and exhibit wave–particle duality, their behavior featuring properties of both waves and particles.[2] The modern photon concept originated during the first two decades of the 20th century with the work of Albert Einstein, who built upon the research of Max Planck. While trying to explain how matter and electromagnetic radiation could be in thermal equilibrium with one another, Planck proposed that the energy stored within a material object should be regarded as composed of an integer number of discrete, equal-sized parts. To explain the photoelectric effect, Einstein introduced the idea that light itself is made of discrete units of energy. In 1926, Gilbert N. Lewis popularized the term photon for these energy units.[3][4][5] Subsequently, many other experiments validated Einstein's approach.[6][7][8]

In the Standard Model of particle physics, photons and other elementary particles are described as a necessary consequence of physical laws having a certain symmetry at every point in spacetime. The intrinsic properties of particles, such as charge, mass, and spin, are determined by gauge symmetry. The photon concept has led to momentous advances in experimental and theoretical physics, including lasers, Bose–Einstein condensation, quantum field theory, and the probabilistic interpretation of quantum mechanics. It has been applied to photochemistry, high-resolution microscopy, and measurements of molecular distances. Moreover, photons have been studied as elements of quantum computers, and for applications in optical imaging and optical communication such as quantum cryptography.

Nomenclature

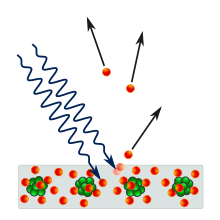

Photoelectric effect: the emission of electrons from a metal plate caused by light quanta – photons.

1926 Gilbert N. Lewis letter which brought the word "photon" into common usage

The word quanta (singular quantum, Latin for how much) was used before 1900 to mean particles or amounts of different quantities, including electricity. In 1900, the German physicist Max Planck was studying black-body radiation, and he suggested that the experimental observations, specifically at shorter wavelengths, would be explained if the energy stored within a molecule was a "discrete quantity composed of an integral number of finite equal parts", which he called "energy elements".[9] In 1905, Albert Einstein published a paper in which he proposed that many light-related phenomena—including black-body radiation and the photoelectric effect—would be better explained by modelling electromagnetic waves as consisting of spatially localized, discrete wave-packets.[10] He called such a wave-packet a light quantum (German: ein Lichtquant).[b]

The name photon derives from the Greek word for light, φῶς (transliterated phôs). Arthur Compton used photon in 1928, referring to G.N. Lewis, who coined the term in a letter to Nature on 18 December 1926.[3][11] The same name was used earlier but was never widely adopted before Lewis: in 1916 by the American physicist and psychologist Leonard T. Troland, in 1921 by the Irish physicist Joly, in 1924 by the French physiologist René Wurmser (1890–1993), and in 1926 by the French physicist Frithiof Wolfers (1891–1971).[5] The name was suggested initially as a unit related to the illumination of the eye and the resulting sensation of light and was used later in a physiological context. Although Wolfers's and Lewis's theories were contradicted by many experiments and never accepted, the new name was adopted by most physicists very soon after Compton used it.[5][c]

In physics, a photon is usually denoted by the symbol γ (the Greek letter gamma). This symbol for the photon probably derives from gamma rays, which were discovered in 1900 by Paul Villard,[13][14] named by Ernest Rutherford in 1903, and shown to be a form of electromagnetic radiation in 1914 by Rutherford and Edward Andrade.[15] In chemistry and optical engineering, photons are usually symbolized by hν, which is the photon energy, where h is the Planck constant and the Greek letter ν (nu) is the photon's frequency.[16]
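The relation behind the hν notation can be checked numerically. The following is a minimal sketch, assuming the standard relation ν = c/λ to work from wavelength; the function names are hypothetical and chosen only for illustration.

```python
# Physical constants (exact SI values since the 2019 redefinition of the SI).
PLANCK_H = 6.62607015e-34        # Planck constant h, in J*s
LIGHT_C = 299_792_458.0          # speed of light in vacuum, in m/s
ELECTRON_VOLT = 1.602176634e-19  # joules per electronvolt


def photon_energy_joules(frequency_hz: float) -> float:
    """Energy E = h * nu of a single photon with the given frequency."""
    return PLANCK_H * frequency_hz


def photon_energy_ev_from_wavelength(wavelength_m: float) -> float:
    """Photon energy in electronvolts, using nu = c / lambda (assumed relation)."""
    frequency_hz = LIGHT_C / wavelength_m
    return photon_energy_joules(frequency_hz) / ELECTRON_VOLT


if __name__ == "__main__":
    # Blue, green and red visible wavelengths, in nanometers.
    for nm in (450, 550, 650):
        energy_ev = photon_energy_ev_from_wavelength(nm * 1e-9)
        print(f"{nm} nm -> {energy_ev:.2f} eV")
```

Shorter wavelengths give higher photon energies, which is why the energy needed to lift an electron across a band gap sets a long-wavelength cutoff for semiconductor detectors.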

https://en.wikipedia.org/wiki/Photon

A p–n junction is a boundary or interface between two types of semiconductor materials, p-type and n-type, inside a single crystal of semiconductor. The "p" (positive) side contains an excess of holes, while the "n" (negative) side contains an excess of electrons in the outer shells of the electrically neutral atoms there. This allows electrical current to pass through the junction only in one direction. The p-n junction is created by doping, for example by ion implantation, diffusion of dopants, or by epitaxy (growing a layer of crystal doped with one type of dopant on top of a layer of crystal doped with another type of dopant). If two separate pieces of material were used, this would introduce a grain boundary between the semiconductors that would severely inhibit its utility by scattering the electrons and holes.[citation needed]

p–n junctions are elementary "building blocks" of semiconductor electronic devices such as diodes, transistors, solar cells, light-emitting diodes (LEDs), and integrated circuits; they are the active sites where the electronic action of the device takes place. For example, a common type of transistor, the bipolar junction transistor (BJT), consists of two p–n junctions in series, in the form n–p–n or p–n–p; while a diode can be made from a single p-n junction. A Schottky junction is a special case of a p–n junction, where metal serves the role of the n-type semiconductor.

A p–n junction. The circuit symbol is shown: the triangle corresponds to the p side.

https://en.wikipedia.org/wiki/P%E2%80%93n_junction

Ion implantation is a low-temperature process by which ions of one element are accelerated into a solid target, thereby changing the physical, chemical, or electrical properties of the target. Ion implantation is used in semiconductor device fabrication and in metal finishing, as well as in materials science research. The ions can alter the elemental composition of the target (if the ions differ in composition from the target) if they stop and remain in the target. Ion implantation also causes chemical and physical changes when the ions impinge on the target at high energy. The crystal structure of the target can be damaged or even destroyed by the energetic collision cascades, and ions of sufficiently high energy (tens of MeV) can cause nuclear transmutation.

General principle

Ion implantation setup with mass separator

Ion implantation equipment typically consists of an ion source, where ions of the desired element are produced; an accelerator, where the ions are accelerated to a high energy, either electrostatically or using radiofrequency fields; and a target chamber, where the ions impinge on a target, which is the material to be implanted. Thus ion implantation is a special case of particle radiation. Each ion is typically a single atom or molecule, and thus the actual amount of material implanted in the target is the integral over time of the ion current. This amount is called the dose. The currents supplied by implanters are typically small (microamperes), and thus the dose which can be implanted in a reasonable amount of time is small. Therefore, ion implantation finds application in cases where the amount of chemical change required is small.
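The statement that the dose is the time integral of the ion current can be sketched as follows. This is an illustrative calculation, not a description of any real implanter's software: the function name, the rectangle-rule integration, the uniform beam over a fixed area, and the example numbers are all assumptions.

```python
ELEMENTARY_CHARGE = 1.602176634e-19  # coulombs per unit charge


def implanted_dose(current_samples_a, dt_s, area_cm2, charge_state=1):
    """Return the dose in ions per cm^2.

    current_samples_a: beam current samples in amperes, taken every dt_s seconds.
    area_cm2: implanted area, assumed to be uniformly irradiated.
    charge_state: charge carried by each ion, in units of the elementary charge.
    """
    # Rectangle-rule approximation of the integral of current over time.
    total_charge_c = sum(current_samples_a) * dt_s
    # Each ion delivers charge_state elementary charges to the target.
    ion_count = total_charge_c / (charge_state * ELEMENTARY_CHARGE)
    return ion_count / area_cm2


if __name__ == "__main__":
    # Hypothetical run: a steady 10 microampere beam for 100 s over 1 cm^2.
    samples = [10e-6] * 100
    print(f"dose = {implanted_dose(samples, dt_s=1.0, area_cm2=1.0):.3e} ions/cm^2")
```

With microampere currents the charge delivered per second is tiny, which is the quantitative reason the text gives for implantation suiting small chemical changes.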

Typical ion energies are in the range of 10 to 500 keV (1,600 to 80,000 aJ). Energies in the range 1 to 10 keV (160 to 1,600 aJ) can be used, but result in a penetration of only a few nanometers or less. Energies lower than this result in very little damage to the target, and fall under the designation ion beam deposition. Higher energies can also be used: accelerators capable of 5 MeV (800,000 aJ) are common. However, there is often great structural damage to the target, and because the depth distribution is broad (Bragg peak), the net composition change at any point in the target will be small.
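The attojoule figures quoted in parentheses follow from the definition of the electronvolt. A small sketch of the conversion, with a hypothetical helper name:

```python
ELECTRON_VOLT = 1.602176634e-19  # joules per electronvolt (exact SI value)
ATTO = 1e-18                     # SI prefix atto


def kev_to_attojoules(energy_kev: float) -> float:
    """Convert an ion energy from kiloelectronvolts to attojoules."""
    energy_joules = energy_kev * 1e3 * ELECTRON_VOLT
    return energy_joules / ATTO


if __name__ == "__main__":
    # The energies mentioned in the text: 1, 10 and 500 keV, and 5 MeV.
    for kev in (1, 10, 500, 5000):
        print(f"{kev} keV = {kev_to_attojoules(kev):,.0f} aJ")
```

One kiloelectronvolt is roughly 160 aJ, so the rounded values in the text (160, 1,600, 80,000 and 800,000 aJ) are consistent with this conversion.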

The energy of the ions, as well as the ion species and the composition of the target determine the depth of penetration of the ions in the solid: A monoenergetic ion beam will generally have a broad depth distribution. The average penetration depth is called the range of the ions. Under typical circumstances ion ranges will be between 10 nanometers and 1 micrometer. Thus, ion implantation is especially useful in cases where the chemical or structural change is desired to be near the surface of the target. Ions gradually lose their energy as they travel through the solid, both from occasional collisions with target atoms (which cause abrupt energy transfers) and from a mild drag from overlap of electron orbitals, which is a continuous process. The loss of ion energy in the target is called stopping and can be simulated with the binary collision approximation method.

Accelerator systems for ion implantation are generally classified into medium current (ion beam currents between 10 μA and ~2 mA), high current (ion beam currents up to ~30 mA), high energy (ion energies above 200 keV and up to 10 MeV), and very high dose (efficient implant of dose greater than 10¹⁶ ions/cm²).[1]

https://en.wikipedia.org/wiki/Ion_implantation

Properties

Silicon atoms (Si) enlarged about 45,000,000x.

The p–n junction possesses a useful property for modern semiconductor electronics. A p-doped semiconductor is relatively conductive. The same is true of an n-doped semiconductor, but the junction between them can become depleted of charge carriers, and hence non-conductive, depending on the relative voltages of the two semiconductor regions. By manipulating this non-conductive layer, p–n junctions are commonly used as diodes: circuit elements that allow a flow of electricity in one direction but not in the other (opposite) direction.

Bias is the application of a voltage relative to a p–n junction region: forward bias is in the direction of easy current flow, and reverse bias is in the direction of little or no current flow.

The forward-bias and the reverse-bias properties of the p–n junction imply that it can be used as a diode. A p–n junction diode allows electric charges to flow in one direction, but not in the opposite direction; negative charges (electrons) can easily flow through the junction from n to p but not from p to n, and the reverse is true for holes. When the p–n junction is forward-biased, electric charge flows freely due to reduced resistance of the p–n junction. When the p–n junction is reverse-biased, however, the junction barrier (and therefore resistance) becomes greater and charge flow is minimal.
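The asymmetry between forward and reverse bias can be illustrated with the ideal-diode (Shockley) equation, I = I_s (exp(V / (n V_T)) − 1). This model is not part of the text above and is brought in here as an assumption; the saturation current and ideality factor are hypothetical example values, and real junctions also show breakdown and series resistance that this sketch ignores.

```python
import math

BOLTZMANN_K = 1.380649e-23           # J/K
ELEMENTARY_CHARGE = 1.602176634e-19  # C


def thermal_voltage(temperature_k: float = 300.0) -> float:
    """Thermal voltage V_T = k*T/q, about 26 mV near room temperature."""
    return BOLTZMANN_K * temperature_k / ELEMENTARY_CHARGE


def diode_current(voltage_v: float,
                  saturation_current_a: float = 1e-12,  # hypothetical value
                  ideality: float = 1.0,                 # hypothetical value
                  temperature_k: float = 300.0) -> float:
    """Ideal-diode current in amperes for a given junction voltage."""
    vt = thermal_voltage(temperature_k)
    # expm1(x) computes exp(x) - 1 accurately for small x.
    return saturation_current_a * math.expm1(voltage_v / (ideality * vt))


if __name__ == "__main__":
    for v in (-0.6, -0.1, 0.0, 0.3, 0.6):
        print(f"V = {v:+.1f} V -> I = {diode_current(v):.3e} A")
```

In this model the reverse current saturates at roughly the tiny value I_s, while the forward current grows exponentially with voltage, matching the one-way behavior described above.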

https://en.wikipedia.org/wiki/P%E2%80%93n_junction

Types

A commercial amplified photodetector for use in optics research

Photodetectors may be classified by their mechanism for detection:[2][3][4]

Photoemission or photoelectric effect: Photons cause electrons to transition from the conduction band of a material to free electrons in a vacuum or gas.

Thermal: Photons cause electrons to transition to mid-gap states then decay back to lower bands, inducing phonon generation and thus heat.

Polarization: Photons induce changes in polarization states of suitable materials, which may lead to change in index of refraction or other polarization effects.

Photochemical: Photons induce a chemical change in a material.

Weak interaction effects: photons induce secondary effects such as in photon drag[5][6] detectors or gas pressure changes in Golay cells.

Photodetectors may be used in different configurations. Single sensors may detect overall light levels. A 1-D array of photodetectors, as in a spectrophotometer or a line scanner, may be used to measure the distribution of light along a line. A 2-D array of photodetectors may be used as an image sensor to form images from the pattern of light before it.

A photodetector or array is typically covered by an illumination window, sometimes having an anti-reflective coating.

Properties

There are a number of performance metrics, also called figures of merit, by which photodetectors are characterized and compared:[2][3]

Spectral response: The response of a photodetector as a function of photon frequency.

Quantum efficiency: The number of carriers (electrons or holes) generated per photon.

Responsivity: The output current divided by total light power falling upon the photodetector.

Noise-equivalent power: The amount of light power needed to generate a signal comparable in size to the noise of the device.

Detectivity: The square root of the detector area divided by the noise equivalent power.

Gain: The output current of a photodetector divided by the current directly produced by the photons incident on the detectors, i.e., the built-in current gain.

Dark current: The current flowing through a photodetector even in the absence of light.

Response time: The time needed for a photodetector to go from 10% to 90% of final output.

Noise spectrum: The intrinsic noise voltage or current as a function of frequency. This can be represented in the form of a noise spectral density.

Nonlinearity: The RF output is limited by the nonlinearity of the photodetector.[7]
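Several of the figures of merit listed above reduce to short formulas. The sketch below follows the definitions as worded in the list; the function names and example numbers are hypothetical, and the detectivity function uses the list's simplified form (square root of area over noise-equivalent power), with the noise-equivalent power assumed to be given per root hertz.

```python
import math

PLANCK_H = 6.62607015e-34            # J*s
LIGHT_C = 299_792_458.0              # m/s
ELEMENTARY_CHARGE = 1.602176634e-19  # C


def responsivity(photocurrent_a: float, optical_power_w: float) -> float:
    """Output current divided by incident light power, in A/W."""
    return photocurrent_a / optical_power_w


def quantum_efficiency(photocurrent_a: float, optical_power_w: float,
                       wavelength_m: float) -> float:
    """Carriers generated per incident photon (assumes no internal gain)."""
    carriers_per_s = photocurrent_a / ELEMENTARY_CHARGE
    photon_energy_j = PLANCK_H * LIGHT_C / wavelength_m
    photons_per_s = optical_power_w / photon_energy_j
    return carriers_per_s / photons_per_s


def detectivity(area_cm2: float, nep_w_per_root_hz: float) -> float:
    """Square root of detector area divided by noise-equivalent power."""
    return math.sqrt(area_cm2) / nep_w_per_root_hz


if __name__ == "__main__":
    # Hypothetical detector: 0.5 uA of photocurrent from 1 uW at 850 nm.
    print("responsivity (A/W):", responsivity(0.5e-6, 1e-6))
    print("quantum efficiency:", quantum_efficiency(0.5e-6, 1e-6, 850e-9))
    print("detectivity:", detectivity(0.01, 1e-13))
```

Responsivity and quantum efficiency carry the same information at a fixed wavelength; the conversion between them is just the photon energy divided by the elementary charge.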

Devices

Grouped by mechanism, photodetectors include the following devices:

Photoemission or photoelectric

Gaseous ionization detectors are used in experimental particle physics to detect photons and particles with sufficient energy to ionize gas atoms or molecules. Electrons and ions generated by ionization cause a current flow which can be measured.

Photomultiplier tubes contain a photocathode which emits electrons when illuminated; the electrons are then amplified by a chain of dynodes.

Phototubes contain a photocathode which emits electrons when illuminated, such that the tube conducts a current proportional to the light intensity.

Microchannel plate detectors use a porous glass substrate as a mechanism for multiplying electrons. They can be used in combination with a photocathode like the photomultiplier described above, with the porous glass substrate acting as a dynode stage.

Semiconductor

Active-pixel sensors (APSs) are image sensors. Usually made in a complementary metal–oxide–semiconductor (CMOS) process, and also known as CMOS image sensors, APSs are commonly used in cell phone cameras, web cameras, and some DSLRs.

Cadmium zinc telluride radiation detectors can operate in direct-conversion (or photoconductive) mode at room temperature, unlike some other materials (particularly germanium) which require liquid nitrogen cooling. Their relative advantages include high sensitivity for x-rays and gamma-rays, due to the high atomic numbers of Cd and Te, and better energy resolution than scintillator detectors.

Charge-coupled devices (CCD) are image sensors which are used to record images in astronomy, digital photography, and digital cinematography. Before the 1990s, photographic plates were most common in astronomy. The next generation of astronomical instruments, such as the Astro-E2, include cryogenic detectors.

HgCdTe infrared detectors. Detection occurs when an infrared photon of sufficient energy kicks an electron from the valence band to the conduction band. Such an electron is collected by a suitable external readout integrated circuit (ROIC) and transformed into an electric signal.

LEDs which are reverse-biased to act as photodiodes. See LEDs as photodiode light sensors.

Photoresistors or Light Dependent Resistors (LDR) which change resistance according to light intensity. Normally the resistance of LDRs decreases with increasing intensity of light falling on it.[8]

Photodiodes which can operate in photovoltaic mode or photoconductive mode.[9][10] Photodiodes are often combined with low-noise analog electronics to convert the photocurrent into a voltage that can be digitized.[11][12]

Phototransistors, which act like amplifying photodiodes.

Pinned photodiodes, a photodetector structure with low lag, low noise, high quantum efficiency, and low dark current, widely used in most CCD and CMOS image sensors.[13]

Quantum dot photoconductors or photodiodes, which can handle wavelengths in the visible and infrared spectral regions.

Semiconductor detectors are employed in gamma and X-ray spectrometry and as particle detectors.[citation needed]

Silicon drift detectors (SDDs) are X-ray radiation detectors used in x-ray spectrometry (EDS) and electron microscopy (EDX).[14]

Photovoltaic

Photovoltaic cells or solar cells which produce a voltage and supply an electric current when sunlight or certain kinds of light shines on them.

Thermal

Bolometers measure the power of incident electromagnetic radiation via the heating of a material with a temperature-dependent electrical resistance. A microbolometer is a specific type of bolometer used as a detector in a thermal camera.

Cryogenic detectors are sufficiently sensitive to measure the energy of single x-ray, visible and infrared photons.[15]

Pyroelectric detectors detect photons through the heat they generate and the subsequent voltage generated in pyroelectric materials.

Thermopiles detect electromagnetic radiation through heat, then generating a voltage in thermocouples.

Golay cells detect photons by the heat they generate in a gas-filled chamber, causing the gas to expand and deform a flexible membrane whose deflection is measured.

Photochemical

Photoreceptor cells in the retina detect light through, for instance, a rhodopsin photon-induced chemical cascade.

Chemical detectors, such as photographic plates, in which a silver halide molecule is split into an atom of metallic silver and a halogen atom. The photographic developer causes adjacent molecules to split similarly.

Polarization

The photorefractive effect is used in holographic data storage.

Polarization-sensitive photodetectors use optically anisotropic materials to detect photons of a desired linear polarization.[16]

Graphene/silicon photodetectors

A graphene/n-type silicon heterojunction has been demonstrated to exhibit strong rectifying behavior and high photoresponsivity. Graphene is coupled with silicon quantum dots (Si QDs) on top of bulk Si to form a hybrid photodetector. Si QDs cause an increase of the built-in potential of the graphene/Si Schottky junction while reducing the optical reflection of the photodetector. Both the electrical and optical contributions of Si QDs enable a superior performance of the photodetector.[17]

Frequency range

In 2014, a technique was reported for extending a semiconductor-based photodetector's frequency range to longer, lower-energy wavelengths. Adding a light source to the device effectively "primed" the detector so that, in the presence of long wavelengths, it fired on wavelengths that otherwise lacked the energy to do so.[18]

See also

Lighting control system

List of sensors

Optoelectronics

Photoelectric sensor

Photosensitivity

Readout integrated circuit

Resonant-cavity-enhanced photo detector

Photodetection

https://en.wikipedia.org/wiki/Photodetector

CCD vs. CMOS sensors

Image sensor (upper left) on the motherboard of a Nikon Coolpix L2 6 MP

The two main types of digital image sensors are the charge-coupled device (CCD) and the active-pixel sensor (CMOS sensor), fabricated in complementary MOS (CMOS) or N-type MOS (NMOS or Live MOS) technologies. Both CCD and CMOS sensors are based on the MOS technology,[4] with MOS capacitors being the building blocks of a CCD,[5] and MOSFET amplifiers being the building blocks of a CMOS sensor.[6][7]

Cameras integrated in small consumer products generally use CMOS sensors, which are usually cheaper and have lower power consumption in battery powered devices than CCDs.[8] CCD sensors are used for high end broadcast quality video cameras, and CMOS sensors dominate in still photography and consumer goods where overall cost is a major concern. Both types of sensor accomplish the same task of capturing light and converting it into electrical signals.[citation needed]

Each cell of a CCD image sensor is an analog device. When light strikes the chip it is held as a small electrical charge in each photo sensor. The charges in the line of pixels nearest to the (one or more) output amplifiers are amplified and output, then each line of pixels shifts its charges one line closer to the amplifiers, filling the empty line closest to the amplifiers. This process is then repeated until all the lines of pixels have had their charge amplified and output.[9]
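The line-by-line readout described above can be mimicked with a small simulation. This is a toy model under stated assumptions: charge transfer is lossless, a single gain stands in for the output amplifiers, and the function name and data layout (row 0 nearest the amplifiers) are hypothetical.

```python
def ccd_readout(charge_rows, gain=1.0):
    """Read out a frame of charge packets one line at a time.

    charge_rows[0] is the line of pixels nearest the output amplifiers.
    Returns the amplified lines in the order they leave the sensor.
    """
    # Work on a copy so the caller's frame is left untouched.
    wells = [list(row) for row in charge_rows]
    n_rows = len(wells)
    width = len(wells[0]) if wells else 0

    output = []
    for _ in range(n_rows):
        # Amplify and output the line nearest the amplifiers.
        output.append([gain * charge for charge in wells[0]])
        # Shift every remaining line one step closer to the amplifiers;
        # the farthest line is left empty.
        wells = wells[1:] + [[0.0] * width]
    return output


if __name__ == "__main__":
    frame = [[1, 2], [3, 4], [5, 6]]  # arbitrary charge values
    for line in ccd_readout(frame, gain=2.0):
        print(line)
```

Because every charge packet has to be shifted through the rows ahead of it, the whole frame passes through the same few amplifiers, which is the contrast with the per-pixel amplifiers of a CMOS sensor.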

A CMOS image sensor has an amplifier for each pixel, compared to the few amplifiers of a CCD. This results in less area for the capture of photons than a CCD, but this problem has been overcome by using microlenses in front of each photodiode, which focus light into the photodiode that would have otherwise hit the amplifier and not been detected.[9] Some CMOS imaging sensors also use back-side illumination to increase the number of photons that hit the photodiode.[10] CMOS sensors can potentially be implemented with fewer components, use less power, and/or provide faster readout than CCD sensors.[11] They are also less vulnerable to static electricity discharges.

Another design, a hybrid CCD/CMOS architecture (sold under the name "sCMOS"), consists of CMOS readout integrated circuits (ROICs) that are bump-bonded to a CCD imaging substrate, a technology that was developed for infrared staring arrays and has been adapted to silicon-based detector technology.[12] Another approach is to utilize the very fine dimensions available in modern CMOS technology to implement a CCD-like structure entirely in CMOS technology: such structures can be achieved by separating individual polysilicon gates by a very small gap. Though still a product of research, hybrid sensors can potentially harness the benefits of both CCD and CMOS imagers.[13]

Performance

See also: EMVA1288

There are many parameters that can be used to evaluate the performance of an image sensor, including dynamic range, signal-to-noise ratio, and low-light sensitivity. For sensors of comparable types, the signal-to-noise ratio and dynamic range improve as the size increases. This is because, in a given integration (exposure) time, more photons hit a pixel with a larger area.
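The link between pixel area and signal-to-noise ratio can be made concrete under one added assumption that the text does not state: that photon arrivals follow Poisson statistics, so a shot-noise-limited pixel collecting N photons has an SNR of about √N. The function names and the flux value are hypothetical, and read noise and dark current are ignored.

```python
import math


def photons_collected(photon_flux_per_um2_s: float,
                      pixel_pitch_um: float,
                      exposure_s: float) -> float:
    """Expected photon count for a square pixel of the given pitch."""
    pixel_area_um2 = pixel_pitch_um ** 2
    return photon_flux_per_um2_s * pixel_area_um2 * exposure_s


def shot_noise_snr(photon_count: float) -> float:
    """SNR when photon shot noise dominates: N / sqrt(N) = sqrt(N)."""
    return math.sqrt(photon_count)


if __name__ == "__main__":
    flux = 1000.0    # photons per square micrometer per second (made-up value)
    exposure = 0.01  # seconds
    for pitch in (1.0, 2.0, 4.0):
        n = photons_collected(flux, pitch, exposure)
        print(f"pitch {pitch} um: {n:.0f} photons, SNR ~ {shot_noise_snr(n):.1f}")
```

Under this model, doubling the pixel pitch quadruples the collected photons and doubles the SNR for the same exposure time.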

Exposure-time control

Exposure time of image sensors is generally controlled by either a conventional mechanical shutter, as in film cameras, or by an electronic shutter. Electronic shuttering can be "global," in which case the entire image sensor area's accumulation of photoelectrons starts and stops simultaneously, or "rolling," in which case the exposure interval of each row immediately precedes that row's readout, in a process that "rolls" across the image frame (typically from top to bottom in landscape format). Global electronic shuttering is less common, as it requires "storage" circuits to hold charge from the end of the exposure interval until the readout process gets there, typically a few milliseconds later.[14]
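The difference between the two electronic shutter modes comes down to when each row's exposure window falls relative to its readout. A minimal timing sketch, assuming rows are read out one after another at a fixed line time; the function names and the numbers are hypothetical.

```python
def rolling_shutter_windows(n_rows, exposure_s, line_time_s):
    """Per-row (exposure_start, exposure_end) times for a rolling shutter.

    Each row's exposure ends exactly when that row is read out, and rows
    are read out one line time apart.
    """
    windows = []
    for row in range(n_rows):
        readout_time = exposure_s + row * line_time_s
        windows.append((readout_time - exposure_s, readout_time))
    return windows


def global_shutter_windows(n_rows, exposure_s, line_time_s):
    """Per-row (exposure_start, exposure_end, readout_time) for a global shutter.

    All rows share one exposure window; later rows must hold their charge
    in storage until the readout reaches them.
    """
    return [(0.0, exposure_s, exposure_s + row * line_time_s)
            for row in range(n_rows)]


if __name__ == "__main__":
    for row, (start, end) in enumerate(rolling_shutter_windows(4, 0.010, 0.001)):
        print(f"rolling row {row}: exposed {start:.3f} s to {end:.3f} s")
    for row, (start, end, read) in enumerate(global_shutter_windows(4, 0.010, 0.001)):
        print(f"global row {row}: exposed {start:.3f} s to {end:.3f} s, "
              f"stored for {read - end:.3f} s")
```

The staggered start times in the rolling case are what skew fast-moving subjects, while the storage interval in the global case is the extra circuitry cost mentioned above.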

Color separation

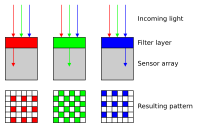

Bayer pattern on sensor

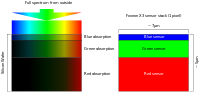

Foveon's scheme of vertical filtering for color sensing

There are several main types of color image sensors, differing by the type of color-separation mechanism:

Integral color sensors[15] use a color filter array fabricated on top of a single monochrome CCD or CMOS image sensor. The most common color filter array pattern, the Bayer pattern, uses a checkerboard arrangement of two green pixels for each red and blue pixel, although many other color filter patterns have been developed, including patterns using cyan, magenta, yellow, and white pixels.[16] Integral color sensors were initially manufactured by transferring colored dyes through photoresist windows onto a polymer receiving layer coated on top of a monochrome CCD sensor.[17] Since each pixel provides only a single color (such as green), the "missing" color values (such as red and blue) for the pixel are interpolated using neighboring pixels.[18] This processing is also referred to as demosaicing or de-bayering.

Foveon X3 sensor, using an array of layered pixel sensors, separating light via the inherent wavelength-dependent absorption property of silicon, such that every location senses all three color channels. This method is similar to how color film for photography works.

3CCD, using three discrete image sensors, with the color separation done by a dichroic prism. The dichroic elements provide a sharper color separation, thus improving color quality. Because each sensor is equally sensitive within its passband, and at full resolution, 3-CCD sensors produce better color quality and better low light performance. 3-CCD sensors produce a full 4:4:4 signal, which is preferred in television broadcasting, video editing and chroma key visual effects.
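For the integral color sensor, the sampling and interpolation steps can be sketched in a few lines. This is a deliberately simple illustration, not the algorithm used by any particular camera: it assumes an RGGB layout of the Bayer pattern, keeps the measured value for each pixel's own color, and fills in the two missing colors by averaging same-color samples in the 3x3 neighborhood. All names are hypothetical, and the image must be at least 2x2 pixels.

```python
def bayer_channel(row, col):
    """Color of the filter over pixel (row, col) in an assumed RGGB layout."""
    if row % 2 == 0:
        return "R" if col % 2 == 0 else "G"
    return "G" if col % 2 == 0 else "B"


def mosaic(rgb_image):
    """Simulate the sensor: keep only the filtered color at each pixel.

    rgb_image[r][c] is an (R, G, B) tuple; the result holds one value per pixel.
    """
    index = {"R": 0, "G": 1, "B": 2}
    return [[pixel[index[bayer_channel(r, c)]] for c, pixel in enumerate(row)]
            for r, row in enumerate(rgb_image)]


def demosaic(raw):
    """Estimate (R, G, B) at every pixel from a single-value Bayer mosaic."""
    height, width = len(raw), len(raw[0])
    result = []
    for r in range(height):
        out_row = []
        for c in range(width):
            sums = {"R": 0.0, "G": 0.0, "B": 0.0}
            counts = {"R": 0, "G": 0, "B": 0}
            # Gather same-color samples from the 3x3 neighborhood,
            # clipped at the image border.
            for rr in range(max(0, r - 1), min(height, r + 2)):
                for cc in range(max(0, c - 1), min(width, c + 2)):
                    channel = bayer_channel(rr, cc)
                    sums[channel] += raw[rr][cc]
                    counts[channel] += 1
            estimate = {ch: sums[ch] / counts[ch] for ch in "RGB"}
            # The pixel's own color was measured directly, so keep it as is.
            estimate[bayer_channel(r, c)] = raw[r][c]
            out_row.append((estimate["R"], estimate["G"], estimate["B"]))
        result.append(out_row)
    return result


if __name__ == "__main__":
    flat = [[(10, 20, 30)] * 4 for _ in range(4)]  # uniform test image
    print(demosaic(mosaic(flat))[1][1])
```

A uniform image survives the round trip unchanged; errors from this kind of interpolation show up at sharp edges, where neighboring pixels no longer share the same true color.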

Specialty sensors

Infrared view of the Orion Nebula taken by ESO's HAWK-I, a cryogenic wide-field imager[19]

Special sensors are used in various applications such as thermography, creation of multi-spectral images, video laryngoscopes, gamma cameras, sensor arrays for x-rays, and other highly sensitive arrays for astronomy.[20]

While in general, digital cameras use a flat sensor, Sony prototyped a curved sensor in 2014 to reduce/eliminate Petzval field curvature that occurs with a flat sensor. Use of a curved sensor allows a shorter and smaller diameter of the lens with reduced elements and components with greater aperture and reduced light fall-off at the edge of the photo.[21]

https://en.wikipedia.org/wiki/Image_sensor

History

See also: Digital imaging

Early analog sensors for visible light were video camera tubes. They date back to the 1930s, and several types were developed up until the 1980s. By the early 1990s, they had been replaced by modern solid-state CCD image sensors.[22]

The basis for modern solid-state image sensors is MOS technology,[23][24] which originates from the invention of the MOSFET by Mohamed M. Atalla and Dawon Kahng at Bell Labs in 1959.[25] Later research on MOS technology led to the development of solid-state semiconductor image sensors, including the charge-coupled device (CCD) and later the active-pixel sensor (CMOS sensor).[23][24]

The passive-pixel sensor (PPS) was the precursor to the active-pixel sensor (APS).[7] A PPS consists of passive pixels which are read out without amplification, with each pixel consisting of a photodiode and a MOSFET switch.[26] It is a type of photodiode array, with pixels containing a p-n junction, integrated capacitor, and MOSFETs as selection transistors. A photodiode array was proposed by G. Weckler in 1968.[6] This was the basis for the PPS.[7] These early photodiode arrays were complex and impractical, requiring selection transistors to be fabricated within each pixel, along with on-chip multiplexer circuits. The noise of photodiode arrays was also a limitation to performance, as the photodiode readout bus capacitance resulted in an increased noise level. Correlated double sampling (CDS) also could not be used with a photodiode array without external memory.[6] However, in 1914 Deputy Consul General Carl R. Loop reported to the State Department in a Consular Report on Archibald M. Low's Televista system that "It is stated that the selenium in the transmitting screen may be replaced by any diamagnetic material".[27]

In June 2022, Samsung Electronics announced that it had created a 200-million-pixel image sensor. The 200MP ISOCELL HP3 has 0.56-micrometer pixels, which Samsung reports are 12% smaller than the 0.64-micrometer pixels of its predecessor. The sensor packs its 200 million pixels into a 1/1.4-inch optical format.[28]
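The quoted pixel sizes can be checked with a few lines of arithmetic. The sketch below assumes square pixels when computing area, which the text does not state; the variable names are illustrative.

```python
old_pitch_um = 0.64  # pixel pitch of the earlier sensor, in micrometers
new_pitch_um = 0.56  # pixel pitch of the ISOCELL HP3, in micrometers

# Relative reduction in pixel pitch (the linear dimension)
pitch_decrease = (old_pitch_um - new_pitch_um) / old_pitch_um

# Relative reduction in pixel area, assuming square pixels
area_decrease = 1 - (new_pitch_um / old_pitch_um) ** 2

print(f"pitch decrease: {pitch_decrease:.1%}")
print(f"area decrease:  {area_decrease:.1%}")
```

The pitch shrinks by 12.5%, in line with the roughly 12% figure quoted, while the light-collecting area per pixel shrinks by about 23% under the square-pixel assumption.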

Charge-coupled device

Main article: Charge-coupled device

The charge-coupled device (CCD) was invented by Willard S. Boyle and George E. Smith at Bell Labs in 1969.[29] While researching MOS technology, they realized that an electric charge was the analogy of the magnetic bubble and that it could be stored on a tiny MOS capacitor. As it was fairly straightforward to fabricate a series of MOS capacitors in a row, they connected a suitable voltage to them so that the charge could be stepped along from one to the next.[23] The CCD is a semiconductor circuit that was later used in the first digital video cameras for television broadcasting.[30]
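The idea of stepping charge from one MOS capacitor to the next can be pictured as a shift register. The sketch below is a toy model under that assumption: each list entry stands for the charge in one capacitor, and the `read_out` name is hypothetical. It ignores transfer losses, clock phases, and noise.

```python
def read_out(wells):
    """Step charge packets along a row of wells toward the output end.

    wells lists the charge held in each capacitor. On every clock step
    the packet in the last well is measured at the output and every
    other packet moves one well along.
    """
    wells = list(wells)
    samples = []
    for _ in range(len(wells)):
        samples.append(wells[-1])   # packet at the end reaches the output
        wells = [0] + wells[:-1]    # remaining packets shift by one well
    return samples


print(read_out([5, 0, 12, 7]))  # prints [7, 12, 0, 5]
```

The packets arrive at the output in order of their distance from it, so a single output amplifier can serve the whole row.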

Early CCD sensors suffered from shutter lag. This was largely resolved with the invention of the pinned photodiode (PPD).[7] It was invented by Nobukazu Teranishi, Hiromitsu Shiraki and Yasuo Ishihara at NEC in 1980.[7][31] It was a photodetector structure with low lag, low noise, high quantum efficiency and low dark current.[7] In 1987, the PPD began to be incorporated into most CCD devices, becoming a fixture in consumer electronic video cameras and then digital still cameras. Since then, the PPD has been used in nearly all CCD sensors and then CMOS sensors.[7]

Active-pixel sensor

Main article: Active-pixel sensor

The NMOS active-pixel sensor (APS) was invented by Olympus in Japan during the mid-1980s. This was enabled by advances in MOS semiconductor device fabrication, with MOSFET scaling reaching smaller micron and then sub-micron levels.[6][32] The first NMOS APS was fabricated by Tsutomu Nakamura's team at Olympus in 1985.[33] The CMOS active-pixel sensor (CMOS sensor) was later improved by a group of scientists at the NASA Jet Propulsion Laboratory in 1993.[7] By 2007, sales of CMOS sensors had surpassed CCD sensors.[34] By the 2010s, CMOS sensors largely displaced CCD sensors in all new applications.

Other image sensors

The first commercial digital camera, the Cromemco Cyclops in 1975, used a 32×32 MOS image sensor. It was a modified MOS dynamic RAM (DRAM) memory chip.[35]

MOS image sensors are widely used in optical mouse technology. The first optical mouse, invented by Richard F. Lyon at Xerox in 1980, used a 5 µm NMOS integrated circuit sensor chip.[36][37] Since the first commercial optical mouse, the IntelliMouse introduced in 1999, most optical mouse devices use CMOS sensors.[38]

In February 2018, researchers at Dartmouth College announced a new image sensing technology that the researchers call QIS, for Quanta Image Sensor. Instead of pixels, QIS chips have what the researchers call "jots." Each jot can detect a single particle of light, called a photon.[39]

See also

List of sensors used in digital cameras

Contact image sensor (CIS)

Electro-optical sensor

Video camera tube

Semiconductor detector

Fill factor

Full-frame digital SLR

Image resolution

Image sensor format, the sizes and shapes of common image sensors

Color filter array, mosaic of tiny color filters over color image sensors

Sensitometry, the scientific study of light-sensitive materials

History of television, the development of electronic imaging technology since the 1880s

List of large sensor interchangeable-lens video cameras

Oversampled binary image sensor

Computer vision

Push broom scanner

Whisk broom scanner

https://en.wikipedia.org/wiki/Image_sensor

Digital imaging or digital image acquisition is the creation of a digital representation of the visual characteristics of an object,[1] such as a physical scene or the interior structure of an object. The term is often assumed to imply or include the processing, compression, storage, printing and display of such images. A key advantage of a digital image, versus an analog image such as a film photograph, is the ability to digitally propagate copies of the original subject indefinitely without any loss of image quality.

Digital imaging can be classified by the type of electromagnetic radiation or other waves whose variable attenuation, as they pass through or reflect off objects, conveys the information that constitutes the image. In all classes of digital imaging, the information is converted by image sensors into digital signals that are processed by a computer and made output as a visible-light image. For example, the medium of visible light allows digital photography (including digital videography) with various kinds of digital cameras (including digital video cameras). X-rays allow digital X-ray imaging (digital radiography, fluoroscopy, and CT), and gamma rays allow digital gamma ray imaging (digital scintigraphy, SPECT, and PET). Sound allows ultrasonography (such as medical ultrasonography) and sonar, and radio waves allow radar. Digital imaging lends itself well to image analysis by software, as well as to image editing (including image manipulation).

History

Before digital imaging, the first photograph ever produced, View from the Window at Le Gras, was made in 1826 by the Frenchman Joseph Nicéphore Niépce. When Joseph was 28, he discussed with his brother Claude the possibility of reproducing images with light. He began to focus on these innovations in 1816, although he was at first more interested in creating an engine for a boat. The brothers worked on the engine for quite some time, and Claude's successful promotion of the invention took him to England. Joseph was then able to concentrate on photography, and in 1826 he finally produced his first photograph, a view through his window. It required 8 hours or more of exposure to light.[2]

The first digital image was produced in 1920 by the Bartlane cable picture transmission system, a method developed by the British inventors Harry G. Bartholomew and Maynard D. McFarlane. The process consisted of “a series of negatives on zinc plates that were exposed for varying lengths of time, thus producing varying densities”.[3] At both its transmitter and its receiver end, the Bartlane system generated a punched data card or tape that was recreated as an image.[4]

In 1957, Russell A. Kirsch produced a device that generated digital data that could be stored in a computer; this used a drum scanner and photomultiplier tube.[3]

Digital imaging was developed in the 1960s and 1970s, largely to avoid the operational weaknesses of film cameras, for scientific and military missions including the KH-11 program. As digital technology became cheaper in later decades, it replaced the old film methods for many purposes.

In the early 1960s, while developing compact, lightweight, portable equipment for the onboard nondestructive testing of naval aircraft, Frederick G. Weighart[5] and James F. McNulty (U.S. radio engineer)[6] of Automation Industries, Inc., then based in El Segundo, California, co-invented the first apparatus to generate a digital image in real time; the image was a fluoroscopic digital radiograph. Square wave signals were detected on the fluorescent screen of a fluoroscope to create the image.

Digital image sensors

Main article: Image sensor

The charge-coupled device was invented by Willard S. Boyle and George E. Smith at Bell Labs in 1969.[7] While researching MOS technology, they realized that an electric charge was the analogy of the magnetic bubble and that it could be stored on a tiny MOS capacitor. As it was fairly straightforward to fabricate a series of MOS capacitors in a row, they connected a suitable voltage to them so that the charge could be stepped along from one to the next.[8] The CCD is a semiconductor circuit that was later used in the first digital video cameras for television broadcasting.[9]

Early CCD sensors suffered from shutter lag. This was largely resolved with the invention of the pinned photodiode (PPD).[10] It was invented by Nobukazu Teranishi, Hiromitsu Shiraki and Yasuo Ishihara at NEC in 1980.[10][11] It was a photodetector structure with low lag, low noise, high quantum efficiency and low dark current.[10] In 1987, the PPD began to be incorporated into most CCD devices, becoming a fixture in consumer electronic video cameras and then digital still cameras. Since then, the PPD has been used in nearly all CCD sensors and then CMOS sensors.[10]

The NMOS active-pixel sensor (APS) was invented by Olympus in Japan during the mid-1980s. This was enabled by advances in MOS semiconductor device fabrication, with MOSFET scaling reaching smaller micron and then sub-micron levels.[12][13] The NMOS APS was fabricated by Tsutomu Nakamura's team at Olympus in 1985.[14] The CMOS active-pixel sensor (CMOS sensor) was later developed by Eric Fossum's team at the NASA Jet Propulsion Laboratory in 1993.[10] By 2007, sales of CMOS sensors had surpassed CCD sensors.[15]

Digital image compression

Main article: Image compression

An important development in digital image compression technology was the discrete cosine transform (DCT).[16] DCT compression is used in JPEG, which was introduced by the Joint Photographic Experts Group in 1992.[17] JPEG compresses images down to much smaller file sizes, and has become the most widely used image file format on the Internet.[18]
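To show why the DCT is useful for compression, here is a minimal one-dimensional sketch. JPEG itself applies a two-dimensional DCT to 8×8 blocks followed by quantization and entropy coding; the version below is a simplification that transforms a single row of 8 samples and discards high-frequency coefficients. The function names and the truncation rule are assumptions for illustration.

```python
import math


def dct(signal):
    """Orthonormal DCT-II of a list of samples."""
    n = len(signal)
    coeffs = []
    for k in range(n):
        scale = math.sqrt(1 / n) if k == 0 else math.sqrt(2 / n)
        total = sum(
            x * math.cos(math.pi * (i + 0.5) * k / n)
            for i, x in enumerate(signal)
        )
        coeffs.append(scale * total)
    return coeffs


def idct(coeffs):
    """Inverse of dct() above (an orthonormal DCT-III)."""
    n = len(coeffs)
    signal = []
    for i in range(n):
        total = 0.0
        for k, c in enumerate(coeffs):
            scale = math.sqrt(1 / n) if k == 0 else math.sqrt(2 / n)
            total += scale * c * math.cos(math.pi * (i + 0.5) * k / n)
        signal.append(total)
    return signal


def compress(signal, keep):
    """Keep only the first `keep` DCT coefficients and zero the rest."""
    coeffs = dct(signal)
    return coeffs[:keep] + [0.0] * (len(coeffs) - keep)


row = [52, 55, 61, 66, 70, 61, 64, 73]      # one row of pixel intensities
approx = idct(compress(row, keep=4))         # rebuilt from half the data
print([round(v) for v in approx])
```

Smooth image regions concentrate most of their energy in the first few coefficients, so dropping the rest changes the reconstructed row only slightly while halving the data to store.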

Digital cameras

Main article: Digital camera

These different scanning ideas were the basis of the first digital camera designs. Early cameras took a long time to capture an image and were poorly suited for consumer purposes.[3] It was not until the adoption of the CCD (charge-coupled device) that the digital camera really took off. The CCD became part of the imaging systems used in telescopes and in the first black-and-white digital cameras in the 1980s.[3] Color was eventually added to the CCD and is a usual feature of cameras today.

Changing environment

Great strides have been made in the field of digital imaging. Negatives and exposure are foreign concepts to many, and the first digital image in 1920 led eventually to cheaper equipment, increasingly powerful yet simple software, and the growth of the Internet.[19]

The constant advancement and production of physical equipment and hardware related to digital imaging has affected the environment surrounding the field. From cameras and webcams to printers and scanners, the hardware is becoming sleeker, thinner, faster, and cheaper. As the cost of equipment decreases, the market for new enthusiasts widens, allowing more consumers to experience the thrill of creating their own images.

Everyday personal laptops, family desktops, and company computers are able to handle photographic software. Our computers are more powerful machines with increasing capacities for running programs of any kind—especially digital imaging software. And that software is quickly becoming both smarter and simpler. Although functions on today's programs reach the level of precise editing and even rendering 3-D images, user interfaces are designed to be friendly to advanced users as well as first-time fans.

The Internet allows editing, viewing, and sharing digital photos and graphics. A quick browse around the web can easily turn up graphic artwork from budding artists, news photos from around the world, corporate images of new products and services, and much more. The Internet has clearly proven itself a catalyst in fostering the growth of digital imaging.

Online photo sharing of images changes the way we understand photography and photographers. Online sites such as Flickr, Shutterfly, and Instagram give billions the capability to share their photography, whether they are amateurs or professionals. Photography has gone from being a luxury medium of communication and sharing to more of a fleeting moment in time. Subjects have also changed. Pictures used to be primarily taken of people and family. Now, we take them of anything. We can document our day and share it with everyone with the touch of our fingers.[20]

In 1826 Niépce was the first to develop a photograph, using light to reproduce an image, and photography has advanced drastically in the years since. Everyone is now a photographer in their own way, whereas during the 1800s and early 1900s lasting photographs were expensive and highly valued by consumers and producers. A magazine article on five ways the digital camera changed us states: “The impact on professional photographers has been dramatic. Once upon a time a photographer wouldn't dare waste a shot unless they were virtually certain it would work.” The use of digital imaging (photography) has changed the way we interact with our environment. Part of the world is experienced differently through lasting visual memories, and photography has become a new form of communication with friends, family and loved ones around the world without face-to-face interaction. Through photography it is easy to see people you have never met and to feel their presence without them being around. Instagram, for example, is a form of social media where anyone can shoot, edit, and share photos of whatever they want with friends and family. Facebook, Snapchat, Vine and Twitter are also ways people express themselves with few or no words and capture every moment that is important to them. Lasting memories that were once hard to capture are now easy to record, because everyone can take pictures and edit them on their phones or laptops. Photography has become a new way to communicate, and its use is rapidly increasing as time goes by, which has affected the world around us.[21]

A study by Basey, Maines, Francis, and Melbourne found that drawings used in class have a significant negative effect on lower-order content in students' lab reports, perspectives of labs, excitement, and time efficiency of learning. Documentation-style learning has no significant effects on students in these areas. The authors also found that students were more motivated and excited to learn when using digital imaging.[22]

Field advancements

In the field of education, as digital projectors, screens, and graphics find their way to the classroom, teachers and students alike are benefitting from the increased convenience and communication they provide, although their theft can be a common problem in schools.[23] In addition, acquiring a basic digital imaging education is becoming increasingly important for young professionals. Reed, a design production expert from Western Washington University, stressed the importance of using “digital concepts to familiarize students with the exciting and rewarding technologies found in one of the major industries of the 21st century”.[24]

The field of medical imaging, a branch of digital imaging that seeks to assist in the diagnosis and treatment of diseases, is growing at a rapid rate. A recent study by the American Academy of Pediatrics suggests that proper imaging of children who may have appendicitis may reduce the number of appendectomies needed. Further advancements include amazingly detailed and accurate imaging of the brain, lungs, tendons, and other parts of the body, images that can be used by health professionals to better serve patients.[25]

According to Vidar, as more countries adopt this new way of capturing images, image digitization in medicine has proven increasingly beneficial for both patients and medical staff. Positive ramifications of going paperless and moving towards digitization include an overall reduction in the cost of medical care, as well as increased global, real-time accessibility of these images. (http://www.vidar.com/film/images/stories/PDFs/newsroom/Digital%20Transition%20White%20Paper%20hi-res%20GFIN.pdf)

There is a standard called Digital Imaging and Communications in Medicine (DICOM) that is changing the medical world as we know it. DICOM is not only a system for taking high quality images of the aforementioned internal organs, but is also helpful in processing those images. It is a universal system that incorporates image processing, sharing, and analysis for the convenience of patient comfort and understanding. This service is all-encompassing and is becoming a necessity.[26]

In the field of technology, digital image processing has become more useful than analog image processing, given modern technological advancement. Its applications include the following.

Image sharpening and restoration – Image sharpening and restoration is the processing of images captured by a modern camera to improve them or to manipulate them to achieve a desired result. It comprises zooming, blurring, sharpening, gray-scale to color conversion, image recovery, and image recognition.

Facial recognition – Face recognition is a computer technology that determines the positions and sizes of human faces in arbitrary digital images. It detects facial features and ignores anything else, such as buildings, trees and bodies.

Remote sensing – Remote sensing is the small- or large-scale acquisition of information about an object or phenomenon, using recording or real-time sensing devices that are not in physical or close contact with the object. In practice, remote sensing is the stand-off collection of data on a particular object or area using a variety of devices.

Pattern recognition – Pattern recognition is a field of study that draws on image processing. Image processing is used to identify elements in images, and machine learning is then used to train a system to handle variation in patterns. Pattern recognition is used in computer-aided diagnosis, handwriting recognition, image identification, and many more applications.

Color processing – Color processing comprises the processing of color images and the different color spaces that are used. It also involves the study of the transmission, storage, and encoding of color images.
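As an illustration of the sharpening step named in the list above, the following sketch applies a common 3×3 sharpening kernel to a grayscale image stored as a list of rows. The kernel, the 0 to 255 value range, and the choice to leave border pixels untouched are assumptions made for this example; they are one of many possible designs.

```python
# A widely used 3x3 sharpening kernel: boost the center, subtract neighbors.
SHARPEN = [
    [0, -1, 0],
    [-1, 5, -1],
    [0, -1, 0],
]


def sharpen(image):
    """Return a sharpened copy of a grayscale image (values 0 to 255).

    Border pixels are copied unchanged because the 3x3 window does not
    fit around them.
    """
    height, width = len(image), len(image[0])
    result = [row[:] for row in image]
    for r in range(1, height - 1):
        for c in range(1, width - 1):
            total = sum(
                SHARPEN[i][j] * image[r - 1 + i][c - 1 + j]
                for i in range(3)
                for j in range(3)
            )
            result[r][c] = max(0, min(255, total))  # clamp to valid range
    return result
```

Because the kernel weights sum to 1, flat regions pass through unchanged, while a pixel that differs from its neighbors has that difference amplified.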

Theoretical application

Although theories are quickly becoming realities in today's technological society, the range of possibilities for digital imaging is wide open. One major application that is still in the works is that of child safety and protection. How can we use digital imaging to better protect our kids? Kodak’s program, Kids Identification Digital Software (KIDS) may answer that question. The beginnings include a digital imaging kit to be used to compile student identification photos, which would be useful during medical emergencies and crimes. More powerful and advanced versions of applications such as these are still developing, with increased features constantly being tested and added.[27]

But parents and schools aren’t the only ones who see benefits in databases such as these. Criminal investigation offices, such as police precincts, state crime labs, and even federal bureaus have realized the importance of digital imaging in analyzing fingerprints and evidence, making arrests, and maintaining safe communities. As the field of digital imaging evolves, so does our ability to protect the public.[28]

Digital imaging can be closely related to the social presence theory especially when referring to the social media aspect of images captured by our phones. There are many different definitions of the social presence theory but two that clearly define what it is would be "the degree to which people are perceived as real" (Gunawardena, 1995), and "the ability to project themselves socially and emotionally as real people" (Garrison, 2000). Digital imaging allows one to manifest their social life through images in order to give the sense of their presence to the virtual world. The presence of those images acts as an extension of oneself to others, giving a digital representation of what it is they are doing and who they are with. Digital imaging in the sense of cameras on phones helps facilitate this effect of presence with friends on social media. Alexander (2012) states, "presence and representation is deeply engraved into our reflections on images...this is, of course, an altered presence...nobody confuses an image with the representation reality. But we allow ourselves to be taken in by that representation, and only that 'representation' is able to show the liveliness of the absentee in a believable way." Therefore, digital imaging allows ourselves to be represented in a way so as to reflect our social presence.[29]

Photography is a medium used to capture specific moments visually. Through photography our culture has been given the chance to send information (such as appearance) with little or no distortion. The Media Richness Theory provides a framework for describing a medium's ability to communicate information without loss or distortion. This theory has provided the chance to understand human behavior in communication technologies. The article written by Daft and Lengel (1984,1986) states the following:

Communication media fall along a continuum of richness. The richness of a medium comprises four aspects: the availability of instant feedback, which allows questions to be asked and answered; the use of multiple cues, such as physical presence, vocal inflection, body gestures, words, numbers and graphic symbols; the use of natural language, which can be used to convey an understanding of a broad set of concepts and ideas; and the personal focus of the medium (pp. 83).

The more accurately a medium is able to communicate appearance, social cues and other such characteristics, the richer it becomes. Photography has become a natural part of how we communicate. For example, most phones have the ability to send pictures in text messages. Apps such as Snapchat and Vine have become increasingly popular for communicating. Sites like Instagram and Facebook have also allowed users to reach a deeper level of richness because of their ability to reproduce information. Sheer, V. C. (January–March 2011). Teenagers’ use of MSN features, discussion topics, and online friendship development: the impact of media richness and communication control. Communication Quarterly, 59(1).

Methods

A digital photograph may be created directly from a physical scene by a camera or similar device. Alternatively, a digital image may be obtained from another image in an analog medium, such as photographs, photographic film, or printed paper, by an image scanner or similar device. Many technical images—such as those acquired with tomographic equipment, side-scan sonar, or radio telescopes—are actually obtained by complex processing of non-image data. Weather radar maps as seen on television news are a commonplace example. The digitalization of analog real-world data is known as digitizing, and involves sampling (discretization) and quantization. Projectional imaging of digital radiography can be done by X-ray detectors that directly convert the image to digital format. Alternatively, phosphor plate radiography is where the image is first taken on a photostimulable phosphor (PSP) plate which is subsequently scanned by a mechanism called photostimulated luminescence.

Finally, a digital image can also be computed from a geometric model or mathematical formula. In this case, the name image synthesis is more appropriate, and it is more often known as rendering.
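The two steps of digitizing mentioned above, sampling and quantization, can be sketched in a few lines. The example assumes an analog signal normalized to the range 0 to 1 and represented as a Python function of time; the `digitize` name and its parameters are hypothetical.

```python
def digitize(signal, duration, sample_count, levels):
    """Sample a continuous signal and quantize each sample.

    signal:       function of time returning a value between 0 and 1
    duration:     length of the interval to sample
    sample_count: number of evenly spaced samples (sampling)
    levels:       number of integer codes available (quantization)
    """
    codes = []
    for n in range(sample_count):
        t = duration * n / sample_count           # sampling instant
        value = min(1.0, max(0.0, signal(t)))     # clip to the valid range
        code = min(levels - 1, int(value * levels))
        codes.append(code)                        # nearest lower level
    return codes


# A linear ramp sampled 4 times with 4 levels (2 bits per sample)
print(digitize(lambda t: t, 1.0, 4, 4))  # prints [0, 1, 2, 3]
```

Raising `sample_count` improves resolution along the sampled axis, while raising `levels` reduces the rounding error in each stored value.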

Digital image authentication is an issue[30] for the providers and producers of digital images such as health care organizations, law enforcement agencies, and insurance companies. There are methods emerging in forensic photography to analyze a digital image and determine if it has been altered.

Previously, imaging depended on chemical and mechanical processes; now all these processes have become electronic. A few things need to take place for digital imaging to occur. First, light energy is converted to electrical energy; think of a grid with millions of little solar cells, in which each lighting condition generates a specific electrical charge. The charges from each of these "solar cells" are transported and communicated to the firmware to be interpreted. The firmware is what understands and translates the color and other light qualities. Pixels come next: with their varying intensities they produce different colors, creating a picture or image. Finally, the firmware records the information for a future date and for reproduction.

Advantages

There are several benefits of digital imaging. First, the process enables easy access of photographs and word documents. Google is at the forefront of this ‘revolution,’ with its mission to digitize the world's books. Such digitization will make the books searchable, thus making participating libraries, such as Stanford University and the University of California Berkeley, accessible worldwide.[31] Digital imaging also benefits the medical world because it “allows the electronic transmission of images to third-party providers, referring dentists, consultants, and insurance carriers via a modem”.[31] The process “is also environmentally friendly since it does not require chemical processing”.[31] Digital imaging is also frequently used to help document and record historical, scientific and personal life events.[32]

Benefits also exist regarding photographs. Digital imaging will reduce the need for physical contact with original images.[33] Furthermore, digital imaging creates the possibility of reconstructing the visual contents of partially damaged photographs, thus eliminating the potential that the original would be modified or destroyed.[33] In addition, photographers will be “freed from being ‘chained’ to the darkroom,” will have more time to shoot and will be able to cover assignments more effectively.[34] Digital imaging ‘means’ that “photographers no longer have to rush their film to the office, so they can stay on location longer while still meeting deadlines”.[35]

Another advantage of digital photography is that it has been extended to camera phones. We are able to take cameras with us wherever we go, as well as send photos instantly to others. It is easy for people to use, and it helps in the process of self-identification for the younger generation.[36]

Criticisms

Critics of digital imaging cite several negative consequences. An increased “flexibility in getting better quality images to the readers” will tempt editors, photographers and journalists to manipulate photographs.[34] In addition, “staff photographers will no longer be photojournalists, but camera operators... as editors have the power to decide what they want ‘shot’”.[34] Legal constraints, including copyright, pose another concern: will copyright infringement occur as documents are digitized and copying becomes easier?

See also

Digital image mosaic

Digital image processing

Digital photography

Dynamic imaging

Image editing

Image retrieval

Graphics file format

Graphic image development

Society for Imaging Science and Technology, (IS&T)

Film recorder

Photoplotter

https://en.wikipedia.org/wiki/Digital_imaging

A film recorder is a graphical output device for transferring images to photographic film from a digital source. In a typical film recorder, an image is passed from a host computer to a mechanism to expose film through a variety of methods, historically by direct photography of a high-resolution cathode ray tube (CRT) display. The exposed film can then be developed using conventional developing techniques, and displayed with a slide or motion picture projector. The use of film recorders predates the current use of digital projectors, which eliminate the time and cost involved in the intermediate step of transferring computer images to film stock, instead directly displaying the image signal from a computer. Motion picture film scanners are the opposite of film recorders, copying content from film stock to a computer system. Film recorders can be thought of as modern versions of Kinescopes.

Pair of Arrilaser film recorders

Design

Operation

Film recorders typically work in the same manner. The image is fed from a host computer as a raster stream over a digital interface. A film recorder exposes film through one of various mechanisms: flying spot (early recorders); photographing a high resolution video monitor; electron beam recorder (Sony HDVS); a CRT scanning dot (Celco); focused beam of light from a light valve technology (LVT) recorder; a scanning laser beam (Arrilaser); or recently, full-frame LCD array chips.

For color image recording on a CRT film recorder, the red, green, and blue channels are sequentially displayed on a single gray scale CRT, and exposed to the same piece of film as a multiple exposure through a filter of the appropriate color. This approach yields better resolution and color quality than possible with a tri-phosphor color CRT. The three filters are usually mounted on a motor-driven wheel. The filter wheel, as well as the camera's shutter, aperture, and film motion mechanism are usually controlled by the recorder's electronics and/or the driving software. CRT film recorders are further divided into analog and digital types. The analog film recorder uses the native video signal from the computer, while the digital type uses a separate display board in the computer to produce a digital signal for a display in the recorder. Digital CRT recorders provide a higher resolution at a higher cost compared to analog recorders due to the additional specialized hardware.[1] Typical resolutions for digital recorders were quoted as 2K and 4K, referring to 2048×1366 and 4096×2732 pixels, respectively, while analog recorders provided a resolution of 640×428 pixels in comparison.[2]
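The sequential exposure described above can be modeled as splitting one RGB frame into three grayscale frames, each shown on the monochrome CRT while the matching filter is in place. This is a conceptual sketch only; the `exposure_passes` name and the tuple-based image representation are assumptions, and no real recorder interface is implied.

```python
def exposure_passes(rgb_image):
    """Split an RGB frame into three filtered grayscale exposures.

    rgb_image is a list of rows of (red, green, blue) tuples. The result
    lists, in order, the filter to rotate into place and the grayscale
    frame to display on the CRT during that exposure.
    """
    passes = []
    for index, filter_color in enumerate(("red", "green", "blue")):
        gray_frame = [[pixel[index] for pixel in row] for row in rgb_image]
        passes.append((filter_color, gray_frame))
    return passes


frame = [[(255, 0, 0), (10, 20, 30)]]
for filter_color, gray_frame in exposure_passes(frame):
    print(filter_color, gray_frame)
```

Because each pass uses the full resolution of the gray scale tube, the film receives all three channels at that resolution, which is the advantage the text attributes to this approach over a tri-phosphor color CRT.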

Higher-quality LVT film recorders use a focused beam of light to write the image directly onto a film-loaded spinning drum, one pixel at a time. In one example, the light valve was a liquid-crystal shutter, the light beam was steered with a lens, and text was printed using a pre-cut optical mask.[2] The LVT can record pixels finer than the film grain. Some machines can burn 120-res, or 120 lines per millimeter. The LVT is basically a reverse drum scanner. The exposed film is developed and printed by regular photographic chemical processing.

Formats

Film recorders are available for a variety of film types and formats. The 35mm negative film and transparencies are popular because they can be processed by any photo shop. Single-image 4×5 film and 8×10 are often used for high-quality, large format printing.[2]

Some models have detachable film holders to handle multiple formats with the same camera or with Polaroid backs to provide on-site review of output before exposing film.[2]

Uses

Film recorders are used in digital printing to generate master negatives for offset and other bulk printing processes. For preview, archiving, and small-volume reproduction, film recorders have been rendered obsolete by modern printers that produce photographic-quality hardcopies directly on plain paper.

They are also used to produce the master copies of movies that use computer animation or other special effects based on digital image processing. However, most cinemas nowadays use Digital Cinema Packages on hard drives instead of film stock.

Computer graphics

Film recorders were among the earliest computer graphics output devices; for example, the IBM 740 CRT Recorder was announced in 1954.

Film recorders were also commonly used to produce slides for slide projectors;[1] but this need is now largely met by video projectors that project images directly from a computer to a screen. The terms "slide" and "slide deck" are still commonly used in presentation programs.

Current uses

Currently, film recorders are primarily used in the motion picture film-out process for the ever-increasing amount of digital intermediate work being done. Although significant advances in large-venue video projection alleviate the need to output to film, there remains a deadlock between the motion picture studios and theater owners over who should pay for these very costly projection systems. This, combined with the increase in international and independent film production, will keep the demand for film recording steady for at least a decade.[citation needed]

Key manufacturers

Traditional film recorder manufacturers have all but vanished from the scene or have evolved their product lines to cater to the motion picture industry. Dicomed was one such early provider of digital color film recorders. Polaroid, Management Graphics, Inc, MacDonald-Detwiler, Information International, Inc., and Agfa were other producers of film recorders. Arri is the only current major manufacturer of film recorders.

The Kodak Lightning I film recorder was one of the first laser recorders. It needed an engineering staff to set up.

The Kodak Lightning II film recorder used both gas and diode lasers to record onto film.

The last LVT machines, produced by Kodak / Durst-Dice, went out of production in 2002. There are no LVT film recorders currently being produced. The LVT Saturn 1010 uses an RGB LED exposure onto 8×10-inch film at 1000-3000 ppi.

The LUX Laser Cinema Recorder came from Autologic/Information International in Thousand Oaks, California. Sales ended in March 2000. It was used on the 1997 film "Titanic".

Arri produces the Arrilaser line of laser-based motion picture film recorders.

MGI produced the Solitaire line of CRT-based motion picture film recorders.[3]

Matrix, originally ImaPRO, a branch of Agfa Division, produced the QCR line of CRT-based motion picture film recorders.[4]

CCG, formerly Agfa film recorders, has been a steady manufacturer of film recorders based in Germany.

In 2004 CCG introduced Definity, a motion picture film recorder utilizing LCD technology. In 2010 CCG introduced the first full LED LCD film recorder as a new step in film recording.

The Cinevator was made by Cinevation AS, in Drammen, Norway. It was a real-time digital film recorder that could record internegatives (IN), interpositives (IP), and prints, with and without sound.

Oxberry produced the Model 3100 film recorder camera system, with interchangeable pin-registered movements (shuttles) for 35mm (full frame/Silent, 1.33:1) and 16mm (regular 16, "2R"), and others have adapted the Oxberry movements for CinemaScope, 1.85:1, 1.75:1, 1.66:1, as well as Academy/Sound (1.37:1) in 35mm and Super-16 in 16mm ("1R"). For instance, the "Solitaire" and numerous others employed the Oxberry 3100 camera system.

History

Before video tape recorders (VTRs) were invented, TV shows were either broadcast live or recorded to film for later showing, using the Kinescope process. In 1967, CBS Laboratories introduced the Electronic Video Recording (EVR) format. Video and telecined-to-video film sources were recorded with an electron-beam recorder at CBS's EVR mastering plant onto 35mm film stock as four strips side by side; the film was then slit into four 8.75 mm (0.344 in) copies for playback in an EVR player.

All types of CRT recorders were (and still are) used for film recording. Some early examples used for computer-output recording were the 1954 IBM 740 CRT Recorder, and the 1962 Stromberg-Carlson SC-4020, the latter using a Charactron CRT for text and vector graphic output to either 16mm motion picture film, 16mm microfilm, or hard-copy paper output.

Later, in the 1970s and 1980s, recording to B&W 16mm film (and to color, with three separate exposures for red, green, and blue) was done with an electron beam recorder (EBR), the most prominent examples being made by 3M, for both video and computer output microfilm (COM) applications. Image Transform in Universal City, California used specially modified 3M EBR film recorders that could perform color film-out recording on 16mm by exposing three 16mm frames in a row (one red, one green and one blue). The film was then printed to color 16mm or 35mm film. The video fed to the recorder could be NTSC, PAL or SECAM. Later, Image Transform used specially modified VTRs to record 24-frame video for their "Image Vision" system. The modified 1-inch Type B VTRs would record and play back 24-frame video at 10 MHz bandwidth, at about twice the normal NTSC resolution. Modified 24 fps, 10 MHz Bosch Fernseh KCK-40 cameras were used on the set. This was a custom pre-HDTV video system, and Image Transform modified other gear for the process as well. At its peak, this system was used in the production of the film "Monty Python Live at the Hollywood Bowl" in 1982. This was the first major pre-digital intermediate post production using a film recorder for film-out production.

In 1988, companies in the United States collectively produced 715 million slides at a cost of $8.3 billion.[2]

Awards

The Academy of Motion Picture Arts and Sciences awarded an Oscar to the makers of the Arrilaser film recorder. The Award of Merit Oscar from the Academy Scientific and Technical Award ceremony was given on 11 February 2012 to Franz Kraus, Johannes Steurer and Wolfgang Riedel.[5][6] Steurer was awarded the Oskar Messter Memorial Medal two years later in 2014 for his role in the development of the Arrilaser.[7]

See also

Film-out

Tape-out

Digital Intermediate

Grating Light Valve

https://en.wikipedia.org/wiki/Film_recorder

Computer vision tasks include methods for acquiring, processing, analyzing and understanding digital images, and extraction of high-dimensional data from the real world in order to produce numerical or symbolic information, e.g. in the forms of decisions.[1][2][3][4] Understanding in this context means the transformation of visual images (the input of the retina) into descriptions of the world that make sense to thought processes and can elicit appropriate action. This image understanding can be seen as the disentangling of symbolic information from image data using models constructed with the aid of geometry, physics, statistics, and learning theory.

The scientific discipline of computer vision is concerned with the theory behind artificial systems that extract information from images. The image data can take many forms, such as video sequences, views from multiple cameras, multi-dimensional data from a 3D scanner, or medical scanning devices. The technological discipline of computer vision seeks to apply its theories and models to the construction of computer vision systems.

Sub-domains of computer vision include scene reconstruction, object detection, event detection, video tracking, object recognition, 3D pose estimation, learning, indexing, motion estimation, visual servoing, 3D scene modeling, and image restoration.

Adopting computer vision technology might be painstaking for organizations as there is no single point solution for it. There are very few companies that provide a unified and distributed platform or an Operating System where computer vision applications can be easily deployed and managed.

Definition

Computer vision is an interdisciplinary field that deals with how computers can be made to gain high-level understanding from digital images or videos. From the perspective of engineering, it seeks to automate tasks that the human visual system can do.[5][6][7] "Computer vision is concerned with the automatic extraction, analysis and understanding of useful information from a single image or a sequence of images. It involves the development of a theoretical and algorithmic basis to achieve automatic visual understanding."[8] As a scientific discipline, computer vision is concerned with the theory behind artificial systems that extract information from images. The image data can take many forms, such as video sequences, views from multiple cameras, or multi-dimensional data from a medical scanner.[9] As a technological discipline, computer vision seeks to apply its theories and models for the construction of computer vision systems.

History

In the late 1960s, computer vision began at universities that were pioneering artificial intelligence. It was meant to mimic the human visual system, as a stepping stone to endowing robots with intelligent behavior.[10] In 1966, it was believed that this could be achieved through a summer project, by attaching a camera to a computer and having it "describe what it saw".[11][12]

What distinguished computer vision from the prevalent field of digital image processing at that time was a desire to extract three-dimensional structure from images with the goal of achieving full scene understanding. Studies in the 1970s formed the early foundations for many of the computer vision algorithms that exist today, including extraction of edges from images, labeling of lines, non-polyhedral and polyhedral modeling, representation of objects as interconnections of smaller structures, optical flow, and motion estimation.[10]
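As an illustration of the edge extraction mentioned above, here is a minimal sketch using the Sobel operator. Sobel is one common choice and is not named in the text; the function name `sobel_magnitude` and the test image are hypothetical, and border pixels are simply left at zero.

```python
import math
from typing import List

Image = List[List[int]]

# Sobel kernels for horizontal and vertical intensity gradients.
SOBEL_X = [[-1, 0, 1], [-2, 0, 2], [-1, 0, 1]]
SOBEL_Y = [[-1, -2, -1], [0, 0, 0], [1, 2, 1]]


def sobel_magnitude(image: Image) -> List[List[float]]:
    """Return the gradient magnitude at each interior pixel (borders stay 0)."""
    height, width = len(image), len(image[0])
    out = [[0.0] * width for _ in range(height)]
    for y in range(1, height - 1):
        for x in range(1, width - 1):
            gx = 0
            gy = 0
            for dy in range(3):
                for dx in range(3):
                    value = image[y + dy - 1][x + dx - 1]
                    gx += SOBEL_X[dy][dx] * value
                    gy += SOBEL_Y[dy][dx] * value
            out[y][x] = math.hypot(gx, gy)
    return out


# A 4x4 image with a vertical step from dark (0) to bright (255).
step: Image = [[0, 0, 255, 255] for _ in range(4)]
edges = sobel_magnitude(step)
for row in edges:
    print(row)
```

The interior pixels on either side of the step receive a large magnitude, while a uniform image would produce zeros everywhere.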

The next decade saw studies based on more rigorous mathematical analysis and quantitative aspects of computer vision. These include the concept of scale-space, the inference of shape from various cues such as shading, texture and focus, and contour models known as snakes. Researchers also realized that many of these mathematical concepts could be treated within the same optimization framework as regularization and Markov random fields.[13] By the 1990s, some of the previous research topics became more active than others. Research in projective 3-D reconstructions led to better understanding of camera calibration. With the advent of optimization methods for camera calibration, it was realized that a lot of the ideas were already explored in bundle adjustment theory from the field of photogrammetry. This led to methods for sparse 3-D reconstructions of scenes from multiple images. Progress was made on the dense stereo correspondence problem and further multi-view stereo techniques. At the same time, variations of graph cut were used to solve image segmentation. This decade also marked the first time statistical learning techniques were used in practice to recognize faces in images (see Eigenface). Toward the end of the 1990s, a significant change came about with the increased interaction between the fields of computer graphics and computer vision. This included image-based rendering, image morphing, view interpolation, panoramic image stitching and early light-field rendering.[10]

Recent work has seen the resurgence of feature-based methods, used in conjunction with machine learning techniques and complex optimization frameworks.[14][15] The advancement of deep learning techniques has brought further life to the field of computer vision. The accuracy of deep learning algorithms on several benchmark computer vision data sets, for tasks ranging from classification[16] and segmentation to optical flow, has surpassed prior methods.[citation needed][17]

Related fields

Object detection in a photograph

Solid-state physics