Duoplasmatrons

Advances in Imaging and Electron Physics

Emmanuel de Chambost, in Advances in Imaging and Electron Physics, 2011

https://www.sciencedirect.com/topics/physics-and-astronomy/duoplasmatrons

A soft box is a type of photographic lighting device, one of a number of photographic soft light devices. All the various soft light types create even and diffused light[1] by transmitting light through some scattering material, or by reflecting light off a second surface to diffuse the light. The best known form of reflective source is the umbrella light, where the light from the bulb is "bounced" off the inside of a metalized umbrella to create an indirect "soft" light.

A soft box is an enclosure around a bulb comprising reflective side and back walls and a diffusing material at the front of the light.

The sides and back of the box are lined with a bright surface - an aluminized fabric surface or an aluminum foil, to act as an efficient reflector. In some commercially available models the diffuser is removable to allow the light to be used alone as a floodlight or with an umbrella reflector.

A soft box can be used with either flash or continuous light sources such as fluorescent lamps or "hot lights" such as quartz halogen bulbs or tungsten bulbs. If soft box lights are used with "hot" light sources, the photographer must be sure the soft box is heat rated for the wattage of the light to which it is attached in order to avoid fire hazard.

https://en.wikipedia.org/wiki/Softbox

In photography and cinematography, a reflector is an improvised or specialised reflective surface used to redirect light towards a given subject or scene.

https://en.wikipedia.org/wiki/Reflector_(photography)

Photographic plates preceded photographic film as a capture medium in photography, and were still used in some communities up until the late 20th century. The light-sensitive emulsion of silver salts was coated on a glass plate, typically thinner than common window glass.

https://en.wikipedia.org/wiki/Photographic_plate

Heliography (in French, héliographie) from helios (Greek: ἥλιος), meaning "sun", and graphein (γράφειν),"writing") is the photographic process invented by Joseph Nicéphore Niépce around 1822,[1] which he used to make the earliest known surviving photograph from nature, View from the Window at Le Gras (1826 or 1827), and the first realisation of photoresist[2] as means to reproduce artworks through inventions of photolithography and photogravure.

In the summer of 1826, a French inventor, Nicéphore Niépce, shocked the entire world by capturing the first image through a process called heliography. Intrigued by the potency of this undeveloped market, business executives invested more of their resources into the untapped world of photography. As expected, the industrial revolution set the chains in motion for the production and development of photography.[3] Niépce prepared a synopsis of his experiments in November 1829: On Heliography, or a method of automatically fixing by the action of light the image formed in the camera obscura[4][5] which outlines his intention to use his “Heliographic” method of photogravure or photolithography as a means of making lithographic, intaglio or relief master plates for multiple printed reproductions.[6]

He knew that the acid-resistant Bitumen of Judea used in etching hardened with exposure to light.[7] In experiments he coated it on plates of glass, zinc, copper and silver-surfaced copper, pewter and lithographic stone,[8] and found it resisted dissolution[9] in oil of lavender and petroleum, so that the uncoated shadow areas could be traditionally treated through acid etching and aquatint to print black ink.[10][11]

The exposed and solvent-treated plate itself, as in the case of View from the Window at Le Gras, presents a negative or positive image dependent upon ambient reflection, not unlike the daguerreotype which was based on Niépce's discoveries.

Bitumen has a complex and varied structure of polycyclic aromatic hydrocarbons (linked benzene rings), containing a small proportion of nitrogen and sulphur; its hardening in proportion to its exposure to light is understood to be due to further cross-linking of the rings, as is the hardening of tree resins (colophony, or abietic acid) by light, first noted by Jean Senebier in 1782. The photochemistry of these processes, which has been studied by Jean-Louis Marignier of Université Paris-Sud since the 1990s,[12][13][14] is still to be fully understood.[15]

https://en.wikipedia.org/wiki/Heliography

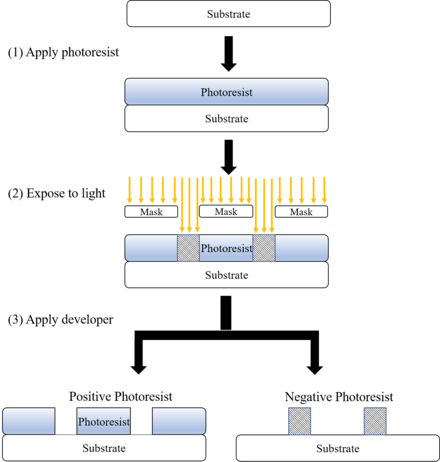

Photolithography, also called optical lithography or UV lithography, is a process used in microfabrication to pattern parts on a thin film or the bulk of a substrate (also called a wafer). It uses light to transfer a geometric pattern from a photomask (also called an optical mask) to a photosensitive (that is, light-sensitive) chemical photoresist on the substrate. A series of chemical treatments then either etches the exposure pattern into the material or enables deposition of a new material in the desired pattern upon the material underneath the photoresist. In complex integrated circuits, a CMOS wafer may go through the photolithographic cycle as many as 50 times.

Photolithography shares some fundamental principles with photography in that the pattern in the photoresist etching is created by exposing it to light, either directly (without using a mask) or with a projected image using a photomask. This procedure is comparable to a high precision version of the method used to make printed circuit boards. Subsequent stages in the process have more in common with etching than with lithographic printing. This method can create extremely small patterns, down to a few tens of nanometers in size. It provides precise control of the shape and size of the objects it creates and can create patterns over an entire surface cost-effectively. Its main disadvantages are that it requires a flat substrate to start with, it is not very effective at creating shapes that are not flat, and it can require extremely clean operating conditions. Photolithography is the standard method of printed circuit board (PCB) and microprocessor fabrication. Directed self-assembly is being evaluated as an alternative to photolithography.[1]

https://en.wikipedia.org/wiki/Photolithography

Photogravure is an intaglio printmaking or photo-mechanical process whereby a copper plate is grained (adding a pattern to the plate) and then coated with a light-sensitive gelatin tissue which had been exposed to a film positive, and then etched, resulting in a high quality intaglio plate that can reproduce detailed continuous tones of a photograph.

https://en.wikipedia.org/wiki/Photogravure

A photoresist (also known simply as a resist) is a light-sensitive material used in several processes, such as photolithography and photoengraving, to form a patterned coating on a surface. This process is crucial in the electronic industry.[1]

The process begins by coating a substrate with a light-sensitive organic material. A patterned mask is then applied to the surface to block light, so that only unmasked regions of the material will be exposed to light. A solvent, called a developer, is then applied to the surface. In the case of a positive photoresist, the photo-sensitive material is degraded by light and the developer will dissolve away the regions that were exposed to light, leaving behind a coating where the mask was placed. In the case of a negative photoresist, the photosensitive material is strengthened (either polymerized or cross-linked) by light, and the developer will dissolve away only the regions that were not exposed to light, leaving behind a coating in areas where the mask was not placed.

A BARC coating (Bottom Anti-Reflectant Coating) may be applied before the photoresist is applied, to avoid reflections from occurring under the photoresist and to improve the photoresist's performance at smaller semiconductor nodes.[2][3][4]

Differences between positive and negative resist

The following table[6] is based on generalizations which are generally accepted in the microelectromechanical systems (MEMS) fabrication industry.

| Characteristic | Positive | Negative |
|---|---|---|
| Adhesion to silicon | Fair | Excellent |
| Relative cost | More expensive | Less expensive |
| Developer base | Aqueous | Organic |
| Solubility in the developer | Exposed region is soluble | Exposed region is insoluble |
| Minimum feature | 0.5 µm | 2 µm |
| Step coverage | Better | Lower |
| Wet chemical resistance | Fair | Excellent |

A positive photoresist is a type of photoresist in which the portion of the photoresist that is exposed to light becomes soluble to the photoresist developer. The unexposed portion of the photoresist remains insoluble to the photoresist developer.

A negative photoresist is a type of photoresist in which the portion of the photoresist that is exposed to light becomes insoluble to the photoresist developer. The unexposed portion of the photoresist is dissolved by the photoresist developer.
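The two definitions above can be sketched as a toy one-dimensional model. This is only an illustration: the function name `develop` and the boolean mask representation are assumptions made for the example, and real resists respond to dose gradually rather than as an on/off switch.

```python
def develop(mask, tone):
    """Return where resist remains after exposure and development.

    mask: sequence of booleans, True where the mask blocks light.
    tone: "positive" or "negative".
    Result: list of booleans, True where resist is left on the substrate.
    """
    if tone not in ("positive", "negative"):
        raise ValueError("tone must be 'positive' or 'negative'")
    remaining = []
    for blocked in mask:
        exposed = not blocked
        if tone == "positive":
            # Exposed positive resist becomes soluble and is dissolved.
            remaining.append(not exposed)
        else:
            # Exposed negative resist becomes insoluble and stays.
            remaining.append(exposed)
    return remaining


mask = [True, True, False, False, True]
print(develop(mask, "positive"))  # resist stays under the mask
print(develop(mask, "negative"))  # resist stays where the mask was open
```

For the same mask the two tones leave complementary patterns, which matches the "Solubility in the developer" row of the table above.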

Based on their chemical structure, photoresists can be classified into three types: photopolymeric, photodecomposing, and photocrosslinking photoresists.

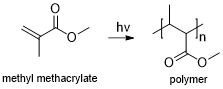

Photopolymeric photoresist is a type of photoresist, usually an allyl monomer, which generates free radicals when exposed to light; these initiate the photopolymerization of the monomer to produce a polymer. Photopolymeric photoresists are usually used as negative photoresists, e.g. methyl methacrylate.

Photodecomposing photoresist is a type of photoresist that generates hydrophilic products under light. Photodecomposing photoresists are usually used as positive photoresists. A typical example is azide quinone, e.g. diazonaphthaquinone (DQ).

Photocrosslinking photoresist is a type of photoresist that crosslinks chain by chain when exposed to light, generating an insoluble network. Photocrosslinking photoresists are usually used as negative photoresists.

Off-Stoichiometry Thiol-Enes (OSTE) polymers[7]

For a self-assembled monolayer (SAM) photoresist, a SAM is first formed on the substrate by self-assembly. The SAM-covered surface is then irradiated through a mask, as with other photoresists, which generates a photo-patterned sample in the irradiated areas. Finally, a developer is used to remove the designed part (the SAM can serve as either a positive or a negative photoresist).[8]

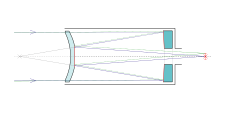

Chromatic correction of visible and near infrared wavelengths. Horizontal axis shows degree of aberration, 0 is no aberration. Lenses: 1: simple, 2: achromatic doublet, 3: apochromatic and 4: superachromat.

https://en.wikipedia.org/wiki/Photoresist

In optics, the f-number of an optical system such as a camera lens is the ratio of the system's focal length to the diameter of the entrance pupil ("clear aperture").[1][2][3] It is also known as the focal ratio, f-ratio, or f-stop, and is very important in photography.[4] It is a dimensionless number that is a quantitative measure of lens speed; increasing the f-number is referred to as stopping down. The f-number is commonly indicated using a lower-case hooked f with the format f/N, where N is the f-number.

The f-number is the reciprocal of the relative aperture (the aperture diameter divided by focal length).[5]
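As a small worked example of the definition above, the sketch below computes an f-number from a focal length and an entrance-pupil diameter. The helper names are hypothetical. The `stops_between` function relies on the standard photographic convention (not stated in the text above) that the light admitted scales with 1/N², so one stop corresponds to a factor of √2 in N.

```python
import math


def f_number(focal_length_mm, pupil_diameter_mm):
    """f-number N = focal length / entrance-pupil diameter (dimensionless)."""
    return focal_length_mm / pupil_diameter_mm


def relative_aperture(focal_length_mm, pupil_diameter_mm):
    """Relative aperture = pupil diameter / focal length = 1 / N."""
    return pupil_diameter_mm / focal_length_mm


def stops_between(n_from, n_to):
    """Number of stops closed down when going from f/n_from to f/n_to.

    Assumes light admitted is proportional to 1 / N**2, so each stop
    multiplies N by sqrt(2).
    """
    return 2 * math.log2(n_to / n_from)


n = f_number(50.0, 25.0)   # a 50 mm lens with a 25 mm entrance pupil
print(f"f/{n:g}")
print(stops_between(2.0, 4.0))
```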

https://en.wikipedia.org/wiki/F-number

Fresnel zone antennas are antennas that focus the signal by using the phase shifting property of the antenna surface or its shape.[1][2][3][4][5] There are several types of Fresnel zone antennas, namely, Fresnel zone plate, offset Fresnel zone plate antennas, phase correcting reflective array or "Reflectarray" antennas and 3 Dimensional Fresnel antennas. They are a class of diffractive antennas and have been used from radio frequencies to X rays.

Fresnel zone antennas belong to the category of reflector and Lens antennas. Unlike traditional reflector and lens antennas, however, the focusing effect in a Fresnel zone antenna is achieved by controlling the phase shifting property of the surface and allows for flat[1][6] or arbitrary antenna shapes.[4] For historical reasons, a flat Fresnel zone antenna is termed a Fresnel zone plate antenna. An offset Fresnel zone plate can be flush mounted to the wall or roof of a building, printed on a window, or made conformal to the body of a vehicle.[7]

The advantages of the Fresnel zone plate antenna are numerous. It is normally cheap to manufacture and install, easy to transport and package and can achieve high gain. Owing to its flat nature, the wind loading force of a Fresnel zone plate can be as little as 1/8 of that of conventional solid or wire-meshed reflectors of similar size. When used at millimetre wave frequencies, a Fresnel zone antenna can be integrated with the millimetre-wave monolithic integrated circuit (MMIC) and thus becomes even more competitive than a printed antenna array.

The simplest Fresnel zone plate antenna is the circular half-wave zone plate invented in the nineteenth century. The basic idea is to divide a plane aperture into circular zones with respect to a chosen focal point on the basis that all radiation from each zone arrives at the focal point in phase within ±π/2 range. If the radiation from alternate zones is suppressed or shifted in phase by π, an approximate focus is obtained and a feed can be placed there to collect the received energy effectively. Despite its simplicity, the half-wave zone plate remained mainly as an optical device for a long time, primarily because its efficiency is too low (less than 20%) and the sidelobe level of its radiation pattern is too high to compete with conventional reflector antennas.
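The zone construction described above can be sketched numerically. The example assumes a plane wave arriving normally on a flat plate, so that the n-th zone boundary is the radius at which the path to the focal point exceeds the focal length by n half-wavelengths. The function name and the 12 GHz, 0.5 m values are illustrative assumptions, not figures from the text.

```python
import math


def zone_radii(focal_length, wavelength, n_zones):
    """Outer radii of the first n_zones half-wave Fresnel zones.

    Assumes normal plane-wave incidence on a flat plate, so the n-th
    boundary satisfies sqrt(r**2 + F**2) = F + n * wavelength / 2.
    All lengths share the same unit.
    """
    radii = []
    for n in range(1, n_zones + 1):
        extra = n * wavelength / 2          # extra path length to the focus
        radii.append(math.sqrt(2 * focal_length * extra + extra ** 2))
    return radii


wavelength = 299_792_458 / 12e9             # about 25 mm at 12 GHz
for n, r in enumerate(zone_radii(0.5, wavelength, 5), start=1):
    print(f"zone {n}: outer radius {r * 1000:.1f} mm")
```

In a half-wave zone plate, alternate zones between these radii are either blocked or shifted in phase by π, as the paragraph above describes.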

Compared with conventional reflector and lens antennas, reported research on microwave and millimetre-wave Fresnel zone antennas appears to be limited. In 1948, Maddaus published the design and experimental work on stepped half-wave lens antennas operating at 23 GHz and sidelobe levels of around -17 dB were achieved. In 1961, Buskirk and Hendrix reported an experiment on simple circular phase reversal zone plate reflector antennas for radio frequency operation. Unfortunately, the sidelobe they achieved was as high as −7 dB. In 1987, Black and Wiltse published their theoretical and experimental work on the stepped quarter-wave zone plate at 35 GHz. A sidelobe level of about −17 dB was achieved. A year later a phase reversal zone plate reflector operating at 94 GHz was reported by Huder and Menzel, and 25% efficiency and −19 dB sidelobe level were obtained. An experiment on a similar antenna at 11.8 GHz was reported by NASA researchers in 1989. 5% 3 dB bandwidth and −16 dB sidelobe level were measured.[1]

Until the 1980s, the Fresnel zone plate antenna was regarded as a poor candidate for microwave applications. Following the development of DBS services in the eighties, however, antenna engineers began to consider the use of Fresnel zone plates as candidate antennas for DBS reception, where antenna cost is an important factor. This, to some extent, provided a commercial push to the research on Fresnel zone antennas.[1][3][5]

Offset Fresnel antenna

The offset Fresnel zone plate was first reported in [8]. In contrast to the symmetrical Fresnel zone plate, which consists of a set of circular zones, the offset Fresnel zone plate consists of a set of elliptical zones defined by

$$\frac{x^{2}}{b^{2}} + \frac{(y - c)^{2}}{a^{2}} = 1$$

where a, b and c are determined by the offset angle, the focal length and the zone index. This feature introduces some new problems to the analysis of offset Fresnel zone plate antennas. The formulae and algorithms for predicting the radiation pattern of an offset Fresnel lens antenna are presented in [8], where some experimental results are also reported. Although a simple Fresnel lens antenna has low efficiency, it serves as a very attractive indoor candidate when a large window or an electrically transparent wall is available. In the application of direct broadcasting services (DBS), for example, an offset Fresnel lens can be produced by simply painting a zonal pattern on a window glass or a blind with conducting material. The satellite signal passing through the transparent zones is then collected by using an indoor feed.

Phase correcting antenna

To increase the efficiency of Fresnel zone plate antennas, one can divide each Fresnel zone into several sub-zones, such as quarter-wave sub-zones, and provide an appropriate phase shift in each of them, thus resulting in a sub-zone phase correcting zone plate.[9] The problem with a dielectric-based zone plate lens antenna is that whilst the dielectric provides a phase shift to the transmitted wave, it inevitably reflects some of the energy back, so the efficiency of such a lens is limited. However, the low efficiency problem for a zone plate reflector is less severe, as total reflection can be achieved by using a conducting reflector behind the zone plate.[10] Based on the focal field analysis, it is demonstrated that high efficiency zone plate reflectors can be obtained by employing the multilayer phase correcting technique, which is to use a number of dielectric slabs of low permittivity and print different metallic zonal patterns on the different interfaces. The design and experiments of circular and offset multilayer phase correcting zone plate reflectors were presented in [1].

A problem with the multilayer zone plate reflector is the complexity introduced, which might offset the advantage of using Fresnel zone plate antennas. One solution is to print an inhomogeneous array of conducting elements on a grounded dielectric plate, thus leading to the so-called single-layer printed flat reflector.[1][11] This configuration bears much in common with the printed array antenna but it requires the use of a feed antenna instead of a corporate feed network. In contrast to the normal array antenna, the array elements are different and are arranged in a pseudo-periodic manner. The theory and design method of single layer printed flat reflectors incorporating conducting rings and experimental results on such an antenna operating in the X-band were given in [5]. Naturally, this leads to a more general antenna concept, the phase correcting reflective array.

Reflectarray antenna

A phase correcting reflective array consists of an array of phase shifting elements illuminated by a feed placed at the focal point. The word "reflective" refers to the fact that each phase shifting element reflects back the energy in the incident wave with an appropriate phase shift. The phase shifting elements can be passive or active. Each phase shifting element can be designed to either produce a phase shift which is equal to that required at the element centre, or provide some quantised phase shifting values. Although the former does not seem to be commercially attractive, the latter proved to be a practical antenna configuration. One potential advantage is that such an array can be reconfigured by changing the positions of the elements to produce different radiation patterns. A systematic theory of the phase efficiency of passive phase correcting array antennas and experimental results on an X-band prototype were reported in [1]. In recent years, it has become common to call this type of antenna a "reflectarray".[12]
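The choice between an exact and a quantised phase shift per element can be illustrated with a short sketch. It assumes a flat array with the feed on the axis at the focal distance and a beam collimated along the axis, so each element must compensate the extra path from the feed. The function names, the sign convention and the four-level (quarter-wave) quantisation are assumptions made for this example, not a design taken from the cited work.

```python
import math


def required_phase(x, y, focal_length, wavelength):
    """Phase shift (radians, in [0, 2*pi)) needed at element (x, y).

    Assumes an on-axis feed at distance focal_length from a flat array
    and a beam collimated along the axis.
    """
    k = 2 * math.pi / wavelength
    d = math.sqrt(x * x + y * y + focal_length ** 2)   # feed-to-element path
    return (k * (d - focal_length)) % (2 * math.pi)


def quantise(phase, levels):
    """Round a phase to the nearest of `levels` equally spaced values."""
    step = 2 * math.pi / levels
    return (round(phase / step) % levels) * step


wavelength, focal_length = 0.03, 0.3        # hypothetical 10 GHz example, metres
for x in (0.0, 0.05, 0.10, 0.15):
    exact = required_phase(x, 0.0, focal_length, wavelength)
    print(f"x={x:.2f} m  exact={math.degrees(exact):6.1f} deg  "
          f"4-level={math.degrees(quantise(exact, 4)):5.1f} deg")
```

With four levels the quantisation error is at most 45°, which corresponds to the quarter-wave sub-zone correction mentioned earlier.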

Reference phase modulation

It has been shown that the phase of the main lobe of a zone plate follows its reference phase,[13] a constant path length or phase added to the formula for the zones, but that the phase of the side lobes is much less sensitive.

So, when it is possible to modulate the signal by changing the material properties dynamically, the modulation of the side lobes is much less than that of the main lobe and so they disappear on demodulation, leaving a cleaner and more private signal.[14]

Beamsteering Fresnel antennas

Beamsteering can be applied by amplitude/phase control or amplitude-only control of the elements of an antenna array positioned in the focal point of the lens as antenna feed. With amplitude-only control, no bandwidth-limiting phase shifters are needed, saving complexity and alleviating bandwidth constraints at the cost of limited beamsteering capability.[15]

Three-dimensional Fresnel antennas

In order to increase the focusing, resolving and scanning properties and to create different shaped radiation patterns the Fresnel zone plate and antenna can be assembled conformable to a curvilinear natural or man-made formation and used as a diffractive antenna-Radome.[4]

https://en.wikipedia.org/wiki/Fresnel_zone_antenna

In optics, chromatic aberration (CA), also called chromatic distortion and spherochromatism, is a failure of a lens to focus all colors to the same point.[1] It is caused by dispersion: the refractive index of the lens elements varies with the wavelength of light. The refractive index of most transparent materials decreases with increasing wavelength.[2] Since the focal length of a lens depends on the refractive index, this variation in refractive index affects focusing.[3] Chromatic aberration manifests itself as "fringes" of color along boundaries that separate dark and bright parts of the image.
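To make the link between dispersion and focus concrete, the sketch below combines a two-term Cauchy dispersion model with the thin-lens lensmaker's equation. Both models, the coefficient values (roughly those often quoted for a common crown glass) and the 100 mm surface radii are illustrative assumptions rather than values from the text.

```python
def cauchy_index(wavelength_um, a=1.5046, b=0.00420):
    """Refractive index from a two-term Cauchy model, n = a + b / lambda**2.

    The default coefficients are illustrative only (b in square micrometres).
    """
    return a + b / wavelength_um ** 2


def thin_lens_focal_length(n, r1_mm, r2_mm):
    """Thin-lens lensmaker's equation: 1/f = (n - 1) * (1/R1 - 1/R2)."""
    return 1.0 / ((n - 1.0) * (1.0 / r1_mm - 1.0 / r2_mm))


# A symmetric biconvex lens with hypothetical 100 mm surface radii.
for name, wavelength_um in (("blue", 0.486), ("green", 0.588), ("red", 0.656)):
    n = cauchy_index(wavelength_um)
    f = thin_lens_focal_length(n, 100.0, -100.0)
    print(f"{name:5s} n={n:.4f}  f={f:.2f} mm")
```

Because the index falls as the wavelength increases, the blue focal length comes out shorter than the red one. That difference in focus is the axial chromatic aberration of a single uncorrected lens.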


There are two types of chromatic aberration: axial (longitudinal), and transverse (lateral). Axial aberration occurs when different wavelengths of light are focused at different distances from the lens (focus shift). Longitudinal aberration is typical at long focal lengths. Transverse aberration occurs when different wavelengths are focused at different positions in the focal plane, because the magnification and/or distortion of the lens also varies with wavelength. Transverse aberration is typical at short focal lengths. The ambiguous acronym LCA is sometimes used for either longitudinal or lateral chromatic aberration.[2]

The two types of chromatic aberration have different characteristics, and may occur together. Axial CA occurs throughout the image and is specified by optical engineers, optometrists, and vision scientists in diopters.[4] It can be reduced by stopping down, which increases depth of field so that though the different wavelengths focus at different distances, they are still in acceptable focus. Transverse CA does not occur in the center of the image and increases towards the edge. It is not affected by stopping down.

In digital sensors, axial CA results in the red and blue planes being defocused (assuming that the green plane is in focus), which is relatively difficult to remedy in post-processing, while transverse CA results in the red, green, and blue planes being at different magnifications (magnification changing along radii, as in geometric distortion), and can be corrected by radially scaling the planes appropriately so they line up.
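The radial scaling correction for transverse CA can be sketched on a single colour plane held as a list of rows. The nearest-neighbour resampling and the name `scale_channel` are assumptions made for brevity. A real correction would interpolate, and would take the scale factor for each plane from a lens profile or estimate it from the image.

```python
def scale_channel(channel, factor):
    """Resample a 2D list about its centre; factor > 1 magnifies.

    Nearest-neighbour sampling; pixels with no source are set to 0.
    """
    h, w = len(channel), len(channel[0])
    cy, cx = (h - 1) / 2, (w - 1) / 2
    out = [[0] * w for _ in range(h)]
    for y in range(h):
        for x in range(w):
            # Inverse mapping: find the source pixel that lands on (x, y).
            sy = round(cy + (y - cy) / factor)
            sx = round(cx + (x - cx) / factor)
            if 0 <= sy < h and 0 <= sx < w:
                out[y][x] = channel[sy][sx]
    return out


# A red plane whose edge detail sits too far from the centre.
red = [[0] * 5 for _ in range(5)]
red[2][4] = 1
corrected = scale_channel(red, 0.5)   # exaggerated factor for a 5x5 toy image
for row in corrected:
    print(row)
```

Shrinking the over-magnified plane pulls the off-centre detail back towards the centre while the centre pixel stays fixed. This is why transverse CA is absent in the middle of the image and grows towards the edge.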

Comparison of an ideal image of a ring (1) and ones with only axial (2) and only transverse (3) chromatic aberration

https://en.wikipedia.org/wiki/Chromatic_aberration

Parameters

Physical, chemical and optical properties of photoresists influence their selection for different processes.[14]

- Resolution is the ability to distinguish neighboring features on the substrate. Critical dimension (CD) is a main measure of resolution: the smaller the critical dimension, the higher the resolution.

- Contrast is the difference from exposed portion to unexposed portion. The higher the contrast is, the more obvious the difference between exposed and unexposed portions would be.

- Sensitivity is the minimum energy that is required to generate a well-defined feature in the photoresist on the substrate, measured in mJ/cm². The sensitivity of a photoresist is important when using deep ultraviolet (DUV) or extreme-ultraviolet (EUV).

- Viscosity is a measure of the internal friction of a fluid, affecting how easily it will flow. When it is needed to produce a thicker layer, a photoresist with higher viscosity will be preferred.

- Adherence is the adhesive strength between photoresist and substrate. If the resist comes off the substrate, some features will be missing or damaged.

- Anti-etching is the ability of a photoresist to resist the high temperature, different pH environment or the ion bombardment in the process of post-modification.

- Surface tension is the tension induced in a liquid that tends to minimize its surface area, caused by the attraction between the particles in the surface layer. To wet the surface of the substrate well, photoresists need a relatively low surface tension.

Epoxy-based polymer

One very common negative photoresist is based on epoxy-based polymer. The common product name is SU-8 photoresist, and it was originally invented by IBM, but is now sold by Microchem and Gersteltec. One unique property of SU-8 is that it is very difficult to strip. As such, it is often used in applications where a permanent resist pattern (one that is not strippable, and can even be used in harsh temperature and pressure environments) is needed for a device.[15] Mechanism of epoxy-based polymer is shown in 1.2.3 SU-8.

Off-stoichiometry thiol-enes (OSTE) polymer

In 2016, OSTE polymers were shown to possess a unique photolithography mechanism, based on diffusion-induced monomer depletion, which enables high photostructuring accuracy. The OSTE polymer material was originally invented at the KTH Royal Institute of Technology, but is now sold by Mercene Labs. Whereas the material has properties similar to those of SU-8, OSTE has the specific advantage that it contains reactive surface molecules, which make this material attractive for microfluidic or biomedical applications.[14]

Microcontact printing

Microcontact printing was described by the Whitesides Group in 1993. Generally, in this technique, an elastomeric stamp is used to generate two-dimensional patterns by printing the “ink” molecules onto the surface of a solid substrate.[16]

Step 1 for microcontact printing: a scheme for the creation of a polydimethylsiloxane (PDMS) master stamp.

Step 2 for microcontact printing: a scheme of the inking and contact process of microprinting lithography.

Printed circuit boards

The manufacture of printed circuit boards is one of the most important uses of photoresist. Photolithography allows the complex wiring of an electronic system to be rapidly, economically, and accurately reproduced as if run off a printing press. The general process is applying photoresist, exposing image to ultraviolet rays, and then etching to remove the copper-clad substrate.[17]

Patterning and etching of substrates

This includes specialty photonics materials, MicroElectro-Mechanical Systems (MEMS), glass printed circuit boards, and other micropatterning tasks. Photoresist tends not to be etched by solutions with a pH greater than 3.[18]

Microelectronics

This application, mainly applied to silicon wafers/silicon integrated circuits is the most developed of the technologies and the most specialized in the field.[19]

A photopolymer or light-activated resin is a polymer that changes its properties when exposed to light, often in the ultraviolet or visible region of the electromagnetic spectrum.[1] These changes are often manifested structurally, for example hardening of the material occurs as a result of cross-linking when exposed to light. An example is shown below depicting a mixture of monomers, oligomers, and photoinitiators that conform into a hardened polymeric material through a process called curing.[2][3]

A wide variety of technologically useful applications rely on photopolymers, for example some enamels and varnishes depend on photopolymer formulation for proper hardening upon exposure to light. In some instances, an enamel can cure in a fraction of a second when exposed to light, as opposed to thermally cured enamels which can require half an hour or longer.[4] Curable materials are widely used for medical, printing, and photoresist technologies.

Changes in structural and chemical properties can be induced internally by chromophores that the polymer subunit already possesses, or externally by addition of photosensitive molecules. Typically a photopolymer consists of a mixture of multifunctional monomers and oligomers in order to achieve the desired physical properties, and therefore a wide variety of monomers and oligomers have been developed that can polymerize in the presence of light either through internal or external initiation. Photopolymers undergo a process called curing, where oligomers are cross-linked upon exposure to light, forming what is known as a network polymer. The result of photo-curing is the formation of a thermoset network of polymers. One of the advantages of photo-curing is that it can be done selectively using high energy light sources, for example lasers; however, most systems are not readily activated by light, and in this case a photoinitiator is required. Photoinitiators are compounds that upon radiation of light decompose into reactive species that activate polymerization of specific functional groups on the oligomers.[5] An example of a mixture that undergoes cross-linking when exposed to light is shown below. The mixture consists of monomeric styrene and oligomeric acrylates.[6]

Most commonly, photopolymerized systems are cured through UV radiation, since ultraviolet light is more energetic. However, the development of dye-based photoinitiator systems has allowed for the use of visible light, which has the potential advantages of being simpler and safer to handle.[7] UV curing in industrial processes has greatly expanded over the past several decades. Many traditional thermally cured and solvent-based technologies can be replaced by photopolymerization technologies. The advantages of photopolymerization over thermally cured polymerization include higher rates of polymerization and environmental benefits from elimination of volatile organic solvents.[1]

There are two general routes for photoinitiation: free radical and ionic.[1][4] The general process involves doping a batch of neat polymer with small amounts of photoinitiator, followed by selective radiation of light, resulting in a highly cross-linked product. Many of these reactions do not require solvent which eliminates termination path via reaction of initiators with solvent and impurities, in addition to decreasing the overall cost.[8]

https://en.wikipedia.org/wiki/Photopolymer

detailed knowledge of the optical system used to produce the image can allow for some useful correction.[13]

Chromatic aberration is used during a duochrome eye test to ensure that a correct lens power has been selected. The patient is confronted with red and green images and asked which is sharper. If the prescription is right, then the cornea, lens and prescribed lens will focus the red and green wavelengths just in front, and behind the retina, appearing of equal sharpness. If the lens is too powerful or weak, then one will focus on the retina, and the other will be much more blurred in comparison.[12]

In an ideal situation, post-processing to remove or correct lateral chromatic aberration would involve scaling the fringed color channels, or subtracting some of a scaled version of the fringed channels, so that all channels spatially overlap each other correctly in the final image.[14]

https://en.wikipedia.org/wiki/Chromatic_aberration

The Cooke triplet is a photographic lens designed and patented (patent number GB 22,607) in 1893 by Dennis Taylor who was employed as chief engineer by T. Cooke & Sons of York. It was the first lens system that allowed elimination of most of the optical distortion or aberration at the outer edge of lenses.[citation needed]

The Cooke triplet is noted for being able to correct the Seidel aberrations.[1] It is recognized as one of the most important objective designs in the field of photography.[2][3]

The lens design, invented by Dennis Taylor but named for the firm he worked for, consists of three separated lens elements.[2] It has two biconvex lenses on the outside and a biconcave lens in the middle.[2]

The design took a new approach to solving the optical design issues, and the design was presented to the Optical Society of London.[4]

https://en.wikipedia.org/wiki/Cooke_triplet

Category:Light-sensitive chemicals

Chemicals that will react under influence of light.


https://en.wikipedia.org/wiki/Category:Light-sensitive_chemicals

In optics, aberration is a property of optical systems, such as lenses, that causes light to be spread out over some region of space rather than focused to a point.[1] Aberrations cause the image formed by a lens to be blurred or distorted, with the nature of the distortion depending on the type of aberration. Aberration can be defined as a departure of the performance of an optical system from the predictions of paraxial optics.[2] In an imaging system, it occurs when light from one point of an object does not converge into (or does not diverge from) a single point after transmission through the system. Aberrations occur because the simple paraxial theory is not a completely accurate model of the effect of an optical system on light, rather than due to flaws in the optical elements.[3]

An image-forming optical system with aberration will produce an image which is not sharp. Makers of optical instruments need to correct optical systems to compensate for aberration.

Aberration can be analyzed with the techniques of geometrical optics. The articles on reflection, refraction and caustics discuss the general features of reflected and refracted rays.

https://en.wikipedia.org/wiki/Optical_aberration

A waveplate or retarder is an optical device that alters the polarization state of a light wave travelling through it. Two common types of waveplates are the half-wave plate, which shifts the polarization direction of linearly polarized light, and the quarter-wave plate, which converts linearly polarized light into circularly polarized light and vice versa.[1] A quarter-wave plate can be used to produce elliptical polarization as well.

Waveplates are constructed out of a birefringent material (such as quartz or mica, or even plastic), for which the index of refraction is different for light linearly polarized along one or the other of two certain perpendicular crystal axes. The behavior of a waveplate (that is, whether it is a half-wave plate, a quarter-wave plate, etc.) depends on the thickness of the crystal, the wavelength of light, and the variation of the index of refraction. By appropriate choice of the relationship between these parameters, it is possible to introduce a controlled phase shift between the two polarization components of a light wave, thereby altering its polarization.[1]
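The dependence on thickness, wavelength, and index difference described above can be illustrated with the standard retardance relation Γ = 2π·Δn·L/λ. The following is a minimal sketch; the function names and the birefringence value (Δn ≈ 0.009, roughly that of quartz in the visible) are assumptions chosen for illustration, not values from the text.

```python
import math

def retardance(delta_n, thickness_m, wavelength_m):
    """Phase shift (radians) between the two polarization components."""
    return 2 * math.pi * delta_n * thickness_m / wavelength_m

def zero_order_thickness(delta_n, wavelength_m, fraction):
    """Thickness giving a retardation of `fraction` waves (0.25 = quarter-wave)."""
    return fraction * wavelength_m / delta_n

# Assumed example values: delta_n ~ 0.009, 633 nm light
delta_n = 0.009
wavelength = 633e-9

quarter = zero_order_thickness(delta_n, wavelength, 0.25)
half = zero_order_thickness(delta_n, wavelength, 0.5)
print(f"quarter-wave thickness: {quarter * 1e6:.2f} um")
print(f"half-wave thickness:    {half * 1e6:.2f} um")
print(f"quarter-wave retardance: {retardance(delta_n, quarter, wavelength):.4f} rad")
```

The same plate gives a different retardance at another wavelength, which is why a waveplate is specified for a design wavelength.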

A common use of waveplates—particularly the sensitive-tint (full-wave) and quarter-wave plates—is in optical mineralogy. Addition of plates between the polarizers of a petrographic microscope makes the optical identification of minerals in thin sections of rocks easier,[2] in particular by allowing deduction of the shape and orientation of the optical indicatrices within the visible crystal sections. This alignment can allow discrimination between minerals which otherwise appear very similar in plane polarized and cross polarized light.

https://en.wikipedia.org/wiki/Waveplate#Half-wave_plate

Wind loads on buildings

The design of buildings must account for wind loads, and these are affected by wind shear. For engineering purposes, a power law wind-speed profile may be defined as:[5][6]

$v_z = v_g \left( \frac{z}{z_g} \right)^{1/\alpha}, \quad 0 < z < z_g$

where:

- $v_z$ = speed of the wind at height $z$

- $v_g$ = gradient wind at gradient height $z_g$

- $\alpha$ = exponential coefficient
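A power-law profile of this kind can be evaluated numerically. The sketch below assumes the common form v_z = v_g (z / z_g)^(1/α), with v_z the speed at height z, v_g the gradient wind at gradient height z_g, and α the exponential coefficient. The function name and the numerical inputs are hypothetical and are not taken from any design standard.

```python
def power_law_wind_speed(z, v_g, z_g, alpha):
    """Wind speed at height z, assuming v_z = v_g * (z / z_g) ** (1 / alpha)."""
    if not 0 < z <= z_g:
        raise ValueError("height must satisfy 0 < z <= z_g")
    return v_g * (z / z_g) ** (1.0 / alpha)

# Hypothetical inputs: 40 m/s gradient wind at a 300 m gradient height, alpha = 7
for height in (10, 50, 100, 300):
    speed = power_law_wind_speed(height, v_g=40.0, z_g=300.0, alpha=7.0)
    print(f"z = {height:3d} m  ->  v_z = {speed:.1f} m/s")
```

With these inputs the speed rises monotonically with height and reaches the gradient wind at the gradient height.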

Typically, buildings are designed to resist a strong wind with a very long return period, such as 50 years or more. The design wind speed is determined from historical records using extreme value theory to predict future extreme wind speeds. Wind speeds are generally calculated based on some regional design standard or standards. The design standards for building wind loads include:

- AS 1170.2 for Australia

- EN 1991-1-4 for Europe

- NBC for Canada

Wind engineering is a subset of mechanical engineering, structural engineering, meteorology, and applied physics that analyzes the effects of wind in the natural and the built environment and studies the possible damage, inconvenience or benefits which may result from wind. In the field of engineering it includes strong winds, which may cause discomfort, as well as extreme winds, such as in a tornado, hurricane or heavy storm, which may cause widespread destruction. In the fields of wind energy and air pollution it also includes low and moderate winds as these are relevant to electricity production and dispersion of contaminants.

Wind engineering draws upon meteorology, fluid dynamics, mechanics, geographic information systems, and a number of specialist engineering disciplines, including aerodynamics and structural dynamics.[1] The tools used include atmospheric models, atmospheric boundary layer wind tunnels, and computational fluid dynamics models.

Wind engineering involves, among other topics:

- Wind impact on structures (buildings, bridges, towers)

- Wind comfort near buildings

- Effects of wind on the ventilation system in a building

- Wind climate for wind energy

- Air pollution near buildings

Wind engineering may be considered by structural engineers to be closely related to earthquake engineering and explosion protection.

Some sports stadiums such as Candlestick Park and Arthur Ashe Stadium are known for their strong, sometimes swirly winds, which affect the playing conditions.


A zone plate is a device used to focus light or other things exhibiting wave character.[1] Unlike lenses or curved mirrors, zone plates use diffraction instead of refraction or reflection. Based on analysis by French physicist Augustin-Jean Fresnel, they are sometimes called Fresnel zone plates in his honor. The zone plate's focusing ability is an extension of the Arago spot phenomenon caused by diffraction from an opaque disc.[2]

A zone plate consists of a set of concentric rings, known as Fresnel zones, which alternate between being opaque and transparent. Light hitting the zone plate will diffract around the opaque zones. The zones can be spaced so that the diffracted light constructively interferes at the desired focus, creating an image there.
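The zone spacing that produces constructive interference can be sketched numerically. The example below uses the standard zone-boundary relation r_n = sqrt(n·λ·f + n²·λ²/4), which makes the path from the n-th boundary to the focus exceed the on-axis path by n half-wavelengths. The function name and the chosen wavelength and focal length are assumptions for illustration.

```python
import math

def zone_radii(wavelength_m, focal_length_m, n_zones):
    """Radii of the first n_zones Fresnel zone boundaries for a given focus."""
    return [
        math.sqrt(n * wavelength_m * focal_length_m + (n * wavelength_m / 2) ** 2)
        for n in range(1, n_zones + 1)
    ]

# Assumed example: 500 nm light focused at 10 cm
wavelength = 500e-9
focal_length = 0.10

radii = zone_radii(wavelength, focal_length, 5)
for n, r in enumerate(radii, start=1):
    # Extra path length from the zone edge to the focus, in units of wavelength
    extra = (math.hypot(r, focal_length) - focal_length) / wavelength
    print(f"zone {n}: r = {r * 1e3:.4f} mm, extra path = {extra:.3f} wavelengths")
```

The zones get narrower toward the edge, since successive radii grow roughly as the square root of n.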

https://en.wikipedia.org/wiki/Zone_plate

Opacity is the measure of impenetrability to electromagnetic or other kinds of radiation, especially visible light. In radiative transfer, it describes the absorption and scattering of radiation in a medium, such as a plasma, dielectric, shielding material, glass, etc. An opaque object is neither transparent (allowing all light to pass through) nor translucent (allowing some light to pass through). When light strikes an interface between two substances, in general some may be reflected, some absorbed, some scattered, and the rest transmitted (also see refraction). Reflection can be diffuse, for example light reflecting off a white wall, or specular, for example light reflecting off a mirror. An opaque substance transmits no light, and therefore reflects, scatters, or absorbs all of it. Both mirrors and carbon black are opaque. Opacity depends on the frequency of the light being considered. For instance, some kinds of glass, while transparent in the visual range, are largely opaque to ultraviolet light. More extreme frequency-dependence is visible in the absorption lines of cold gases. Opacity can be quantified in many ways; for example, see the article mathematical descriptions of opacity.

Different processes can lead to opacity including absorption, reflection, and scattering.

https://en.wikipedia.org/wiki/Opacity_(optics)

A photon sieve is a device for focusing light using diffraction and interference. It consists of a flat sheet of material full of pinholes that are arranged in a pattern which is similar to the rings in a Fresnel zone plate, but a sieve brings light to much sharper focus than a zone plate. The sieve concept, first developed in 2001,[1] is versatile because the characteristics of the focusing behaviour can be altered to suit the application by manufacturing a sieve containing holes of several different sizes and different arrangement of the pattern of holes.

Photon sieves have applications to photolithography[2] and are an alternative to lenses or mirrors in telescopes[3] and terahertz lenses and antennas.[4][conflicted source][5]

When the size of the sieves is smaller than one wavelength of the operating light, the traditional method mentioned above for describing the diffraction patterns is not valid. Vectorial theory must be used to approximate the diffraction of light from nanosieves.[6] In this theory, a combination of coupled-mode theory and the multipole expansion method is used to give an analytical model, which can facilitate the demonstration of traditional devices such as lenses and holograms.[7]

https://en.wikipedia.org/wiki/Photon_sieve

Terahertz radiation – also known as submillimeter radiation, terahertz waves, tremendously high frequency[1] (THF), T-rays, T-waves, T-light, T-lux or THz – consists of electromagnetic waves within the ITU-designated band of frequencies from 0.3 to 3 terahertz (THz),[2] although the upper boundary is somewhat arbitrary and is considered by some sources as 30 THz.[3] One terahertz is 10¹² Hz or 1000 GHz. Wavelengths of radiation in the terahertz band correspondingly range from 1 mm to 0.1 mm = 100 µm (or down to 0.01 mm = 10 µm if the 30 THz upper boundary is used). Because terahertz radiation begins at a wavelength of around 1 millimeter and proceeds into shorter wavelengths, it is sometimes known as the submillimeter band, and its radiation as submillimeter waves, especially in astronomy. This band of electromagnetic radiation lies within the transition region between microwave and far infrared, and can be regarded as either.
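The correspondence between the band edges and their wavelengths follows from λ = c / f. A minimal sketch (the function name is an illustrative assumption):

```python
C = 299_792_458.0  # speed of light in vacuum, m/s

def wavelength_m(frequency_hz):
    """Free-space wavelength in metres for a given frequency in hertz."""
    return C / frequency_hz

# Band edges mentioned in the text: 0.3 THz, 3 THz, and the alternative 30 THz limit
for thz in (0.3, 3.0, 30.0):
    lam = wavelength_m(thz * 1e12)
    print(f"{thz:5.1f} THz  ->  {lam * 1e3:.4f} mm  ({lam * 1e6:.1f} um)")
```

This gives roughly 1 mm at 0.3 THz, 0.1 mm at 3 THz, and 0.01 mm at 30 THz.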

Terahertz radiation is strongly absorbed by the gases of the atmosphere, and in air is attenuated to zero within a few meters,[4][5] so it is not practical for terrestrial radio communication. It can penetrate thin layers of materials but is blocked by thicker objects. THz beams transmitted through materials can be used for material characterization, layer inspection, and as a lower-energy alternative to X-rays for producing high resolution images of the interior of solid objects.[6]

Terahertz radiation occupies a middle ground where the ranges of microwaves and infrared light waves overlap, known as the “terahertz gap”; it is called a “gap” because the technology for its generation and manipulation is still in its infancy. The generation and modulation of electromagnetic waves in this frequency range ceases to be possible by the conventional electronic devices used to generate radio waves and microwaves, requiring the development of new devices and techniques.

https://en.wikipedia.org/wiki/Terahertz_radiation

A lens antenna is a microwave antenna that uses a shaped piece of microwave-transparent material to bend and focus the radio waves by refraction, as an optical lens does for light.[1] Typically it consists of a small feed antenna such as a patch antenna or horn antenna which radiates radio waves, with a piece of dielectric or composite material in front which functions as a converging lens to collimate the radio waves into a beam.[2] Conversely, in a receiving antenna the lens focuses the incoming radio waves onto the feed antenna, which converts them to electric currents which are delivered to a radio receiver. They can also be fed by an array of feed antennas, called a focal plane array (FPA), to create more complicated radiation patterns.

To generate narrow beams, the lens must be much larger than the wavelength of the radio waves, so lens antennas are mainly used at the high frequency end of the radio spectrum, with microwaves and millimeter waves, whose small wavelengths allow the antenna to be a manageable size. The lens can be made of a dielectric material like plastic, or a composite structure of metal plates or waveguides.[3] Its principle of operation is the same as an optical lens: the microwaves have a different speed (phase velocity) within the lens material than in air, so that the varying lens thickness delays the microwaves passing through it by different amounts, changing the shape of the wavefront and the direction of the waves.[2] Lens antennas can be classified into two types: delay lens antennas in which the microwaves travel slower in the lens material than in air, and fast lens antennas in which the microwaves travel faster in the lens material. As with optical lenses, geometric optics are used to design lens antennas, and the different shapes of lenses used in ordinary optics have analogues in microwave lenses.

Lens antennas have similarities to parabolic antennas and are used in similar applications. In both, microwaves emitted by a small feed antenna are shaped by a large optical surface into the desired final beam shape.[4] They are used less than parabolic antennas due to chromatic aberration and absorption of microwave power by the lens material, their greater weight and bulk, and difficult fabrication and mounting.[3] They are used as collimating elements in high gain microwave systems, such as satellite antennas, radio telescopes, and millimeter wave radar and are mounted in the apertures of horn antennas to increase gain.

Types

Microwave lenses can be classified into two types by the propagation speed of the radio waves in the lens material:[2]

- Delay lens (slow wave lens): in this type the radio waves travel slower in the lens medium than in free space; the index of refraction is greater than one, so the path length is increased by passing through the lens medium. This is similar to the action of an ordinary optical lens on light. Since thicker parts of the lens increase the path length, a convex lens is a converging lens which focuses radio waves, and a concave lens is a diverging lens which disperses radio waves, as in ordinary lenses. Delay lenses are constructed of

- Dielectric materials

- H-plane plate structures

- Fast lens (fast wave lens): in this type the radio waves travel faster in the lens medium than in free space, so the index of refraction is less than one and the optical path length is decreased by passing through the lens medium. This type has no analog in ordinary optical materials; it occurs because the phase velocity of radio waves in waveguides can be greater than the speed of light. Since thicker parts of the lens decrease path length, a concave lens is a converging lens which focuses radio waves, and a convex lens is a diverging lens, the opposite of ordinary optical lenses. Fast lenses are constructed of

- E-plane plate structures

- Negative-index metamaterials

The main types of lens construction are:[5][6]

- Natural dielectric lens - A lens made of a piece of dielectric material. Due to the longer wavelength, microwave lenses have much larger surface shape tolerances than optical lenses. Soft thermoplastics such as polystyrene, polyethylene, and plexiglass are often used, which can be molded or turned to the required shape. Most dielectric materials have significant attenuation and dispersion at microwave frequencies.

- Artificial dielectric lens - This simulates the properties of a dielectric at microwave wavelengths by a 3 dimensional array of small metal conductors, such as spheres, strips, discs or rings suspended in a nonconducting support medium

- Constrained lens - a lens composed of structures that control the direction of the microwaves. They are used with linearly polarized microwaves.

- E-plane metal plate lens - a lens made of closely spaced metal plates parallel to the plane of the electric or E field. This is a fast lens.

- H-plane metal plate lens - a lens made of closely spaced metal plates parallel to the plane of the magnetic or H field. This is a delay lens.

- Waveguide lens - A lens made of short sections of waveguide of different lengths

- Fresnel zone lens - A flat Fresnel zone plate, consisting of concentric annular sheet metal rings blocking out alternate Fresnel zones. It can be easily fabricated with copper foil shapes on a printed circuit board. This lens works by diffraction, not refraction. The microwaves passing through the spaces between the plates interfere constructively at the focal plane. It has large chromatic aberration and so is frequency-specific.

- Luneburg lens - A spherical dielectric lens with a stepped or graded index of refraction increasing toward the center.[7] Luneburg lens antennas have several unique features: the focal point, and the feed antenna, is located at the surface of the lens, so it focuses all the radiation from the feed over a wide angle. It can be used with multiple feed antennas to create multiple beams.

Zoned lens - Microwave lenses, especially short wavelength designs, tend to be excessively thick. This increases weight, bulk, and power losses in dielectric lenses. To reduce thickness, lenses are often made with a zoned geometry, similar to a Fresnel lens. The lens is cut down to a uniform thickness in concentric annular (circular) steps, keeping the same surface angle.[8][9] To keep the microwaves passing through different steps in phase, the height difference between steps must be an integral multiple of a wavelength. For this reason a zoned lens must be made for a specific frequency.

Experiment demonstrating refraction of 1.5 GHz (20 cm) microwaves by a paraffin lens, by John Ambrose Fleming in 1897, repeating earlier experiments by Bose, Lodge, and Righi. A spark gap transmitter (A), consisting of a dipole antenna made of two brass rods with a spark gap between them inside an open waveguide, powered by an induction coil (I) generates a beam of microwaves which is focused by the cylindrical paraffin lens (L) on a dipole receiving antenna in the lefthand waveguide (B) and detected by a coherer radio receiver (not shown), which rang a bell every time the transmitter was pulsed. Fleming demonstrated that the lens actually focused the waves by showing that when it was removed from the apparatus, the unfocused waves from the transmitter were too weak to activate the receiver.

The first experiments using lenses to refract and focus radio waves occurred during the earliest research on radio waves in the 1890s. In 1873 mathematical physicist James Clerk Maxwell in his electromagnetic theory, now called Maxwell's equations, predicted the existence of electromagnetic waves and proposed that light consisted of electromagnetic waves of very short wavelength. In 1887 Heinrich Hertz discovered radio waves, electromagnetic waves of longer wavelength. Early scientists thought of radio waves as a form of "invisible light". To test Maxwell's theory that light was electromagnetic waves, these researchers concentrated on duplicating classic optics experiments with short wavelength radio waves, diffracting them with wire diffraction gratings and refracting them with dielectric prisms and lenses of paraffin, pitch and sulfur. Hertz first demonstrated refraction of 450 MHz (66 cm) radio waves in 1887 using a 6 foot prism of pitch. These experiments among others confirmed that light and radio waves both consisted of the electromagnetic waves predicted by Maxwell, differing only in frequency.

The possibility of concentrating radio waves by focusing them into a beam like light waves interested many researchers of the time.[10] In 1889 Oliver Lodge and James L. Howard attempted to refract 300 MHz (1 meter) waves with cylindrical lenses made of pitch, but failed to find a focusing effect because the apparatus was smaller than the wavelength. In 1894 Lodge successfully focused 4 GHz (7.5 cm) microwaves with a 23 cm glass lens.[11] Beginning the same year, Indian physicist Jagadish Chandra Bose in his landmark 6 - 60 GHz (25 to 5 mm) microwave experiments may have been the first to construct lens antennas, using a 2.5 cm cylindrical sulfur lens in a waveguide to collimate the microwave beam from his spark oscillator,[12] and patenting a receiving antenna consisting of a glass lens focusing microwaves on a galena crystal detector.[13] Also in 1894 Augusto Righi in his microwave experiments at University of Bologna focused 12 GHz (3 cm) waves with 32 cm lenses of paraffin and sulfur. However, microwaves were limited to line-of-sight propagation and could not travel beyond the horizon, and the low power microwave spark transmitters used had very short range. So the practical development of radio after 1897 used much lower frequencies, for which lens antennas were not suitable.

The development of modern lens antennas occurred during a great expansion of research into microwave technology around World War II to develop military radar. In 1946 R. K. Luneburg invented the Luneburg lens.

https://en.wikipedia.org/wiki/Lens_antenna

An antenna reflector is a device that reflects electromagnetic waves. Antenna reflectors can exist as a standalone device for redirecting radio frequency (RF) energy, or can be integrated as part of an antenna assembly.

The function of a standalone reflector is to redirect electro-magnetic (EM) energy, generally in the radio wavelength range of the electromagnetic spectrum.

Common standalone reflector types are

- corner reflector, which reflects the incoming signal back to the direction from which it came, commonly used in radar.

- flat reflector, which reflects the signal such as a mirror and is often used as a passive repeater.

When integrated into an antenna assembly, the reflector serves to modify the radiation pattern of the antenna, increasing gain in a given direction.

Common integrated reflector types are

- parabolic reflector, which focuses a beam signal into one point or directs a radiating signal into a beam.[1]

- a passive element slightly longer than and located behind a radiating dipole element that absorbs and re-radiates the signal in a directional way as in a Yagi antenna array.

- a flat reflector such as used in a Short backfire antenna or Sector antenna.

- a corner reflector used in UHF television antennas.

- a cylindrical reflector as used in Cantenna.

Parameters that can directly influence the performance of an antenna with integrated reflector:

- Dimensions of the reflector (Big ugly dish versus small dish)

- Spillover (part of the feed antenna radiation misses the reflector)

- Aperture blockage (also known as feed blockage: part of the feed energy is reflected back into the feed antenna and does not contribute to the main beam)

- Illumination taper (feed illumination reduced at the edges of the reflector)

- Reflector surface deviation

- Defocusing

- Cross polarization

- Feed losses

- Antenna feed mismatch

- Non-uniform amplitude/phase distributions

The antenna efficiency is measured in terms of its effectiveness ratio.

Any gain-degrading factors which raise side lobes have a two-fold effect, in that they contribute to system noise temperature in addition to reducing gain. Aperture blockage and deviation of reflector surface (from the designed "ideal") are two important cases. Aperture blockage is normally due to shadowing by feed, subreflector and/or support members. Deviations in reflector surfaces cause non-uniform aperture distributions, resulting in reduced gains.

The standard symmetrical, parabolic, Cassegrain reflector system is very popular in practice because it allows minimum feeder length to the terminal equipment. The major disadvantage of this configuration is blockage by the hyperbolic sub-reflector and its supporting struts (usually 3–4 are used). The blockage becomes very significant when the size of the parabolic reflector is small compared to the diameter of the sub-reflector. To avoid blockage from the sub-reflector asymmetric designs such as the open Cassegrain can be employed. Note however that the asymmetry can have deleterious effects on some aspects of the antenna's performance - for example, inferior side-lobe levels, beam squint, poor cross-polar response, etc.

To avoid spillover from the effects of over-illumination of the main reflector surface and diffraction, a microwave absorber is sometimes employed. This lossy material helps prevent excessive side-lobe levels radiating from edge effects and over-illumination. Note that in the case of a front-fed Cassegrain the feed horn and feeder (usually waveguide) need to be covered with an edge absorber in addition to the circumference of the main paraboloid.

Measurements are made on reflector antennas to establish important performance indicators such as the gain and sidelobe levels. For this purpose the measurements must be made at a distance at which the beam is fully formed. A distance of four Rayleigh distances is commonly adopted as the minimum distance at which measurements can be made, unless specialized techniques are used (see Antenna measurement).
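The minimum measurement distance can be estimated as follows. This sketch assumes the common approximation of the Rayleigh distance as D²/(2λ) for an aperture of diameter D, so that four Rayleigh distances equal 2D²/λ. The function names and the example dish are hypothetical.

```python
C = 299_792_458.0  # speed of light in vacuum, m/s

def rayleigh_distance(diameter_m, frequency_hz):
    """Rayleigh distance, assuming the approximation D^2 / (2 * wavelength)."""
    wavelength = C / frequency_hz
    return diameter_m ** 2 / (2 * wavelength)

def min_measurement_distance(diameter_m, frequency_hz, n_rayleigh=4):
    """Minimum range, taken here as n_rayleigh Rayleigh distances (4 by default)."""
    return n_rayleigh * rayleigh_distance(diameter_m, frequency_hz)

# Hypothetical example: 1.2 m dish at 12 GHz
print(f"{min_measurement_distance(1.2, 12e9):.1f} m")
```

The distance grows with the square of the reflector diameter, which is why large reflectors are often measured with the specialized techniques mentioned above rather than on a direct far-field range.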

https://en.wikipedia.org/wiki/Reflector_(antenna)

Polarization (also polarisation) is a property applying to transverse waves that specifies the geometrical orientation of the oscillations.[1][2][3][4][5] In a transverse wave, the direction of the oscillation is perpendicular to the direction of motion of the wave.[4] A simple example of a polarized transverse wave is vibrations traveling along a taut string (see image); for example, in a musical instrument like a guitar string. Depending on how the string is plucked, the vibrations can be in a vertical direction, horizontal direction, or at any angle perpendicular to the string. In contrast, in longitudinal waves, such as sound waves in a liquid or gas, the displacement of the particles in the oscillation is always in the direction of propagation, so these waves do not exhibit polarization. Transverse waves that exhibit polarization include electromagnetic waves such as light and radio waves, gravitational waves,[6] and transverse sound waves (shear waves) in solids.

An electromagnetic wave such as light consists of a coupled oscillating electric field and magnetic field which are always perpendicular to each other; by convention, the "polarization" of electromagnetic waves refers to the direction of the electric field. In linear polarization, the fields oscillate in a single direction. In circular or elliptical polarization, the fields rotate at a constant rate in a plane as the wave travels. The rotation can have two possible directions; if the fields rotate in a right hand sense with respect to the direction of wave travel, it is called right circular polarization, while if the fields rotate in a left hand sense, it is called left circular polarization.

Light or other electromagnetic radiation from many sources, such as the sun, flames, and incandescent lamps, consists of short wave trains with an equal mixture of polarizations; this is called unpolarized light. Polarized light can be produced by passing unpolarized light through a polarizer, which allows waves of only one polarization to pass through. The most common optical materials do not affect the polarization of light; however, some materials—those that exhibit birefringence, dichroism, or optical activity—affect light differently depending on its polarization. Some of these are used to make polarizing filters. Light is also partially polarized when it reflects from a surface.

According to quantum mechanics, electromagnetic waves can also be viewed as streams of particles called photons. When viewed in this way, the polarization of an electromagnetic wave is determined by a quantum mechanical property of photons called their spin.[7][8] A photon has one of two possible spins: it can either spin in a right hand sense or a left hand sense about its direction of travel. Circularly polarized electromagnetic waves are composed of photons with only one type of spin, either right- or left-hand. Linearly polarized waves consist of photons that are in a superposition of right and left circularly polarized states, with equal amplitude and phases synchronized to give oscillation in a plane.[8]

Polarization is an important parameter in areas of science dealing with transverse waves, such as optics, seismology, radio, and microwaves. Especially impacted are technologies such as lasers, wireless and optical fiber telecommunications, and radar.

https://en.wikipedia.org/wiki/Polarization_(waves)

In antenna engineering, side lobes or sidelobes are the lobes (local maxima) of the far field radiation pattern of an antenna or other radiation source, that are not the main lobe.

The radiation pattern of most antennas shows a pattern of "lobes" at various angles, directions where the radiated signal strength reaches a maximum, separated by "nulls", angles at which the radiated signal strength falls to zero. This can be viewed as the diffraction pattern of the antenna. In a directional antenna in which the objective is to emit the radio waves in one direction, the lobe in that direction is designed to have a larger field strength than the others; this is the "main lobe". The other lobes are called "side lobes", and usually represent unwanted radiation in undesired directions. The side lobe directly behind the main lobe is called the back lobe. The longer the antenna relative to the radio wavelength, the more lobes its radiation pattern has. In transmitting antennas, excessive side lobe radiation wastes energy and may cause interference to other equipment. Another disadvantage is that confidential information may be picked up by unintended receivers. In receiving antennas, side lobes may pick up interfering signals, and increase the noise level in the receiver.

The power density in the side lobes is generally much less than that in the main beam. It is generally desirable to minimize the sidelobe level (SLL), which is measured in decibels relative to the peak of the main beam. The main lobe and side lobes occur for both transmitting and receiving. The concepts of main and side lobes, radiation pattern, aperture shapes, and aperture weighting apply to optics (another branch of electromagnetics) and to acoustics fields such as loudspeaker and sonar design, as well as to antenna design.

Because an antenna's far field radiation pattern is a Fourier Transform of its aperture distribution, most antennas will generally have sidelobes, unless the aperture distribution is a Gaussian, or if the antenna is so small as to have no sidelobes in the visible space. Larger antennas have narrower main beams, as well as narrower sidelobes. Hence, larger antennas have more sidelobes in the visible space (as the antenna size is increased, sidelobes move from the evanescent space to the visible space).
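As a rough illustration of the Fourier relationship, the sketch below samples a line aperture, evaluates its far-field pattern as a discrete Fourier sum, and reports the peak sidelobe level relative to the main beam. The function names, the 10-wavelength aperture, the quarter-wavelength sampling, and the Gaussian truncated at three standard deviations are all assumptions chosen for the example; an ideal Gaussian distribution is not truncated.

```python
import cmath
import math


def far_field(weights, spacing_wl, u):
    """Magnitude of the Fourier sum of aperture samples; u = sin(theta)."""
    n = len(weights)
    total = 0j
    for i, w in enumerate(weights):
        x = (i - (n - 1) / 2) * spacing_wl  # sample position in wavelengths
        total += w * cmath.exp(2j * math.pi * x * u)
    return abs(total)


def peak_sidelobe_db(weights, spacing_wl, points=4001):
    """Highest lobe outside the main lobe, in dB relative to the main-beam peak."""
    mags = [far_field(weights, spacing_wl, i / (points - 1)) for i in range(points)]
    i = 1
    # Walk down the main lobe until the first local minimum (first null).
    while i < points - 1 and mags[i + 1] < mags[i]:
        i += 1
    return 20 * math.log10(max(mags[i:]) / mags[0])


n, spacing = 40, 0.25  # 10-wavelength aperture sampled every quarter wavelength
uniform = [1.0] * n
gaussian = [math.exp(-0.5 * (3.0 * (i - (n - 1) / 2) / (n / 2)) ** 2) for i in range(n)]

print("uniform  SLL (dB):", round(peak_sidelobe_db(uniform, spacing), 1))
print("gaussian SLL (dB):", round(peak_sidelobe_db(gaussian, spacing), 1))
```

The uniform aperture gives a first sidelobe roughly 13 dB below the main beam, while the tapered distribution pushes the sidelobes far lower at the cost of a wider main beam.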

For discrete aperture antennas (such as phased arrays) in which the element spacing is greater than a half wavelength, the spatial aliasing effect causes some sidelobes to become substantially larger in amplitude, and approaching the level of the main lobe; these are called grating lobes, and they are identical, or nearly identical in the example shown, copies of the main beams.

Grating lobes are a special case of a sidelobe. In such a case, the sidelobes should be considered all the lobes lying between the main lobe and the first grating lobe, or between grating lobes. It is conceptually useful to distinguish between sidelobes and grating lobes because grating lobes have larger amplitudes than most, if not all, of the other side lobes. The mathematics of grating lobes is the same as of X-ray diffraction.
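For a uniformly spaced linear array, the directions of grating lobes follow from the standard array relation sin θ = sin θ0 + m·λ/d for nonzero integers m, keeping only solutions with |sin θ| ≤ 1 (the visible space). The sketch below applies that relation; the function name and parameters are illustrative, and the idealised uniform linear array is an assumption.

```python
import math


def grating_lobe_angles(spacing_wl, steer_deg=0.0):
    """Grating-lobe directions (degrees from broadside) inside visible space.

    spacing_wl is the element spacing d in wavelengths; steer_deg is the
    main-beam direction. The main lobe itself (m = 0) is excluded.
    """
    u0 = math.sin(math.radians(steer_deg))
    m_max = int(math.floor(2 * spacing_wl)) + 1  # |m| cannot exceed 2*d/lambda
    angles = []
    for m in range(-m_max, m_max + 1):
        if m == 0:
            continue
        u = u0 + m / spacing_wl
        if abs(u) <= 1.0:
            angles.append(math.degrees(math.asin(u)))
    return sorted(angles)


print(grating_lobe_angles(0.4))        # closely spaced: no grating lobes
print(grating_lobe_angles(2.0))        # widely spaced: several grating lobes
print(grating_lobe_angles(0.7, 45.0))  # steering brings one into visible space
```

With a broadside beam, grating lobes only enter the visible space once the spacing reaches a full wavelength; steering the beam away from broadside lowers that limit toward a half wavelength.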

https://en.wikipedia.org/wiki/Side_lobe

In electronics, noise temperature is one way of expressing the level of available noise power introduced by a component or source. The power spectral density of the noise is expressed in terms of the temperature (in kelvins) that would produce that level of Johnson–Nyquist noise, thus:

$$\frac{P_N}{B} = k_B T$$

where:

- $P_N$ is the noise power (in W, watts)

- $B$ is the total bandwidth (Hz, hertz) over which that noise power is measured

- $k_B$ is the Boltzmann constant (1.381×10⁻²³ J/K, joules per kelvin)

- $T$ is the noise temperature (K, kelvin)

Thus the noise temperature is proportional to the power spectral density of the noise, $P_N / B$. That is the power that would be absorbed from the component or source by a matched load. Noise temperature is generally a function of frequency, unlike that of an ideal resistor which is simply equal to the actual temperature of the resistor at all frequencies.
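A small worked example may help fix the scale of these quantities. It uses the Johnson–Nyquist relation in the form noise power = Boltzmann constant × temperature × bandwidth; the function names and the 290 K reference temperature are choices made for this sketch.

```python
import math

BOLTZMANN = 1.380649e-23  # J/K (exact value in the SI)


def noise_power(temperature_k, bandwidth_hz):
    """Available noise power in watts for a given noise temperature and bandwidth."""
    return BOLTZMANN * temperature_k * bandwidth_hz


def noise_temperature(power_w, bandwidth_hz):
    """Noise temperature in kelvin that would produce power_w over bandwidth_hz."""
    return power_w / (BOLTZMANN * bandwidth_hz)


def to_dbm(power_w):
    """Power in decibels relative to one milliwatt."""
    return 10 * math.log10(power_w / 1e-3)


density = noise_power(290.0, 1.0)      # per hertz at 290 K
in_1mhz = noise_power(290.0, 1e6)      # same source over 1 MHz
print(round(to_dbm(density), 2), "dBm/Hz")
print(round(to_dbm(in_1mhz), 2), "dBm in 1 MHz")
print(round(noise_temperature(in_1mhz, 1e6), 1), "K")
```

At 290 K the noise density comes out near −174 dBm per hertz, a figure widely used as a reference level in receiver calculations.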

https://en.wikipedia.org/wiki/Noise_temperature

The Cassegrain reflector is a combination of a primary concave mirror and a secondary convex mirror, often used in optical telescopes and radio antennas, the main characteristic being that the optical path folds back onto itself, relative to the optical system's primary mirror entrance aperture. This design puts the focal point at a convenient location behind the primary mirror and the convex secondary adds a telephoto effect creating a much longer focal length in a mechanically short system.[1]
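The telephoto effect can be estimated with first-order (paraxial) mirror optics, which is a simplification not taken from the text above. In the sketch below the function name, the parameters, and the example dimensions are hypothetical; `f_secondary` is the magnitude of the convex secondary's focal length, and all lengths share one unit.

```python
def cassegrain(f_primary, f_secondary, separation):
    """Paraxial estimate of a two-mirror Cassegrain.

    f_primary   focal length of the concave primary
    f_secondary magnitude of the focal length of the convex secondary
    separation  distance from primary to secondary
    """
    # Distance from the secondary to the (intercepted) primary focus.
    p = f_primary - separation
    if not 0 < p < f_secondary:
        raise ValueError("secondary must sit inside the primary focus, "
                         "closer to it than its own focal length")
    # Mirror equation for a convex mirror with a virtual object at distance p.
    q = p * f_secondary / (f_secondary - p)
    magnification = q / p
    return {
        "magnification": magnification,
        "effective_focal_length": magnification * f_primary,
        "focus_behind_primary": q - separation,
    }


result = cassegrain(f_primary=1000.0, f_secondary=300.0, separation=750.0)
print(result)
```

With these made-up numbers a tube about 750 units long behaves like a system with a focal length of 6000 units, and the focus falls behind the primary mirror.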

In a symmetrical Cassegrain both mirrors are aligned about the optical axis, and the primary mirror usually contains a hole in the center, thus permitting the light to reach an eyepiece, a camera, or an image sensor. Alternatively, as in many radio telescopes, the final focus may be in front of the primary. In an asymmetrical Cassegrain, the mirror(s) may be tilted to avoid obscuration of the primary or to avoid the need for a hole in the primary mirror (or both).

The classic Cassegrain configuration uses a parabolic reflector as the primary while the secondary mirror is hyperbolic.[2] Modern variants may have a hyperbolic primary for increased performance (for example, the Ritchey–Chrétien design); and either or both mirrors may be spherical or elliptical for ease of manufacturing.

The Cassegrain reflector is named after a published reflecting telescope design that appeared in the April 25, 1672 Journal des sçavans which has been attributed to Laurent Cassegrain.[3] Similar designs using convex secondary mirrors have been found in Bonaventura Cavalieri's 1632 writings describing burning mirrors[4][5] and Marin Mersenne's 1636 writings describing telescope designs.[6] James Gregory's 1662 attempts to create a reflecting telescope included a Cassegrain configuration, judging by a convex secondary mirror found among his experiments.[7]

The Cassegrain design is also used in catadioptric systems.

An unusual variant of the Cassegrain is the Schiefspiegler telescope ("skewed" or "oblique reflector"; also known as the "Kutter telescope" after its inventor, Anton Kutter[9]) which uses tilted mirrors to avoid the secondary mirror casting a shadow on the primary. However, while eliminating diffraction patterns this leads to several other aberrations that must be corrected.

Several different off-axis configurations are used for radio antennas.[10]

Another off-axis, unobstructed design and variant of the Cassegrain is the 'Yolo' reflector invented by Arthur Leonard. This design uses a spherical or parabolic primary and a mechanically warped spherical secondary to correct for off-axis induced astigmatism. When set up correctly the Yolo can give uncompromising unobstructed views of planetary objects and non-wide field targets, with no lack of contrast or image quality caused by spherical aberration. The lack of obstruction also eliminates the diffraction associated with Cassegrain and Newtonian reflector astrophotography.

Catadioptric Cassegrains

Catadioptric Cassegrains use two mirrors, often with a spherical primary mirror to reduce cost, combined with refractive corrector element(s) to correct the resulting aberrations.

Schmidt-Cassegrain

The Schmidt-Cassegrain was developed from the wide-field Schmidt camera, although the Cassegrain configuration gives it a much narrower field of view. The first optical element is a Schmidt corrector plate. The plate is figured by placing a vacuum on one side, and grinding the exact correction required to correct the spherical aberration caused by the spherical primary mirror. Schmidt-Cassegrains are popular with amateur astronomers. An early Schmidt-Cassegrain camera was patented in 1946 by artist/architect/physicist Roger Hayward,[11] with the film holder placed outside the telescope.

Maksutov-Cassegrain

The Maksutov-Cassegrain is a variation of the Maksutov telescope named after the Soviet/Russian optician and astronomer Dmitri Dmitrievich Maksutov. It starts with an optically transparent corrector lens that is a section of a hollow sphere. It has a spherical primary mirror, and a spherical secondary that is usually a mirrored section of the corrector lens.

Argunov-Cassegrain

In the Argunov-Cassegrain telescope all optics are spherical, and the classical Cassegrain secondary mirror is replaced by a sub-aperture corrector consisting of three air spaced lens elements. The element farthest from the primary mirror is a Mangin mirror, which acts as a secondary mirror.

Klevtsov-Cassegrain

The Klevtsov-Cassegrain, like the Argunov-Cassegrain, uses a sub-aperture corrector consisting of a small meniscus lens and a Mangin mirror as its "secondary mirror".[12]

https://en.wikipedia.org/wiki/Cassegrain_reflector

A catadioptric optical system is one where refraction and reflection are combined in an optical system, usually via lenses (dioptrics) and curved mirrors (catoptrics). Catadioptric combinations are used in focusing systems such as searchlights, headlamps, early lighthouse focusing systems, optical telescopes, microscopes, and telephoto lenses. Other optical systems that use lenses and mirrors are also referred to as "catadioptric", such as surveillance catadioptric sensors.

https://en.wikipedia.org/wiki/Catadioptric_system

In a phased array or slotted waveguide antenna, squint refers to the angle that the transmission is offset from the normal of the plane of the antenna. In simple terms, it is the change in the beam direction as a function of operating frequency, polarization, or orientation.[1] It is an important phenomenon that can limit the bandwidth in phased array antenna systems.[2]

This deflection can be caused by:

- Signal frequency

- Signals in a waveguide travel at a speed that varies with frequency and the dimensions of the waveguide.

In a phased array or slotted waveguide antenna, the signal is designed to reach the outputs in a given phase relationship. This can be accomplished for any single frequency by properly adjusting the length of each waveguide so the signals arrive in-phase. However, if a different frequency is sent into the feeds, they will arrive at the ends at different times, the phase relationship will not be maintained,[3] and squint will result.

Frequency-dependent phase shifting of the elements of the array can be used to compensate for the squint,[4] which leads to the concept of a squintless antenna or feed.[5]

- Design

- In some cases the antenna may be designed to create a squint. One example is an antenna that is used to communicate with a satellite but must remain in a vertical configuration. Squint is also required in conical scanning.
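The frequency dependence of squint can be illustrated with a simplified model: an array whose element phases are set for a design frequency and then held fixed. This is not the waveguide feed described above, but it shows the same loss of phase relationship. Under that assumption the beam direction satisfies sin θ(f) = (f0 / f) · sin θ0; the function name and the example frequencies are hypothetical.

```python
import math


def squinted_angle_deg(steer_deg, f_design, f_actual):
    """Beam direction at f_actual for phases fixed to steer to steer_deg at f_design.

    Assumes an idealised array with frequency-independent phase shifts.
    """
    u = math.sin(math.radians(steer_deg)) * f_design / f_actual
    if abs(u) > 1.0:
        raise ValueError("beam leaves visible space at this frequency")
    return math.degrees(math.asin(u))


for f in (9.0e9, 10.0e9, 11.0e9):
    angle = squinted_angle_deg(30.0, 10.0e9, f)
    print(f / 1e9, "GHz ->", round(angle, 2), "deg")
```

In this model the beam swings toward broadside as the frequency rises and away from it as the frequency falls, and a broadside beam (0 degrees) does not squint at all.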

https://en.wikipedia.org/wiki/Squint_(antenna)

A radome (a portmanteau of radar and dome) is a structural, weatherproof enclosure that protects a radar antenna. The radome is constructed of material that minimally attenuates the electromagnetic signal transmitted or received by the antenna, so that it is effectively transparent to radio waves. Radomes protect the antenna from weather and conceal antenna electronic equipment from view. They also protect nearby personnel from being accidentally struck by quickly rotating antennas.

Radomes can be constructed in several shapes – spherical, geodesic, planar, etc. – depending on the particular application, using various construction materials such as fiberglass, polytetrafluoroethylene (PTFE)-coated fabric, and others.

When found on fixed-wing aircraft with forward-looking radar, as are commonly used for object or weather detection, the nose cones often additionally serve as radomes. On aircraft used for airborne early warning and control (AEW&C), a rotating radome, often called a "rotodome", is mounted on the top of the fuselage for 360-degree coverage. Some newer AEW&C configurations instead use three antenna modules inside a radome, usually mounted on top of the fuselage, for 360-degree coverage, such as the Chinese KJ-2000 and Indian DRDO AEW&Cs.

On rotary-wing and fixed-wing aircraft using microwave satellite for beyond-line-of-sight communication, radomes often appear as blisters on the fuselage.[1] In addition to protection, radomes also streamline the antenna system, thus reducing drag.

A radome is often used to prevent ice and freezing rain from accumulating on antennas. In the case of a spinning radar parabolic antenna, the radome also protects the antenna from debris and rotational irregularities due to wind. A radome is easily identified by its hard shell, which is highly resistant to damage.

One of the main driving forces behind the development of fiberglass as a structural material was the need during World War II for radomes.[2] When considering structural load, the use of a radome greatly reduces wind load in both normal and iced conditions. Many tower sites require or prefer the use of radomes for wind loading benefits and for protection from falling ice or debris.

Where radomes might be considered unsightly if near the ground, electric antenna heaters could be used instead. Usually running on direct current, the heaters do not interfere physically or electrically with the alternating current of the radio transmission.

https://en.wikipedia.org/wiki/Radome

In the field of antenna design the term radiation pattern (or antenna pattern or far-field pattern) refers to the directional (angular) dependence of the strength of the radio waves from the antenna or other source.[1][2][3]

Particularly in the fields of fiber optics, lasers, and integrated optics, the term radiation pattern may also be used as a synonym for the near-field pattern or Fresnel pattern.[4] This refers to the positional dependence of the electromagnetic field in the near field, or Fresnel region of the source. The near-field pattern is most commonly defined over a plane placed in front of the source, or over a cylindrical or spherical surface enclosing it.[1][4]

The far-field pattern of an antenna may be determined experimentally at an antenna range, or alternatively, the near-field pattern may be found using a near-field scanner, and the radiation pattern deduced from it by computation.[1] The far-field radiation pattern can also be calculated from the antenna shape by computer programs such as NEC. Other software, such as HFSS, can also compute the near field.

The far field radiation pattern may be represented graphically as a plot of one of a number of related variables, including the field strength at a constant (large) radius (an amplitude pattern or field pattern), the power per unit solid angle (power pattern), and the directive gain. Very often, only the relative amplitude is plotted, normalized either to the amplitude on the antenna boresight, or to the total radiated power. The plotted quantity may be shown on a linear scale, or in dB. The plot is typically represented as a three-dimensional graph, or as separate graphs in the vertical plane and horizontal plane. This is often known as a polar diagram.
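The normalization and dB conversion mentioned above can be sketched as follows. The example uses the sin² θ power pattern of an ideal short dipole as a stand-in for measured data and normalizes to the pattern peak; the function names and the −60 dB plotting floor are assumptions made for the illustration.

```python
import math


def short_dipole_power(theta_deg):
    """Relative power pattern of an ideal short dipole, theta measured from its axis."""
    return math.sin(math.radians(theta_deg)) ** 2


def normalised_db(powers, floor_db=-60.0):
    """Convert power samples to dB relative to their peak, clipped at floor_db."""
    peak = max(powers)
    result = []
    for p in powers:
        if p <= 0.0:
            result.append(floor_db)  # a null has no finite dB value
        else:
            result.append(max(10 * math.log10(p / peak), floor_db))
    return result


angles = list(range(0, 181))  # one-degree steps from 0 to 180
pattern_db = normalised_db([short_dipole_power(a) for a in angles])
for a in (0, 45, 90):
    print(a, "deg:", round(pattern_db[a], 2), "dB")
```

The clipping floor keeps nulls, where the power falls to zero, from producing unbounded negative values on a dB plot.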