Order theory is a branch of mathematics which investigates the intuitive notion of order using binary relations. It provides a formal framework for describing statements such as "this is less than that" or "this precedes that". This article introduces the field and provides basic definitions. A list of order-theoretic terms can be found in the order theory glossary.
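As a minimal illustration of how a binary relation can formalize "this precedes that", the sketch below checks the three axioms usually required of a partial order (reflexivity, antisymmetry, transitivity) on a small finite set. The names `is_partial_order` and `divides`, and the divisibility example itself, are illustrative choices and do not come from the article.

```python
from itertools import product

def is_partial_order(elements, leq):
    """Return True if `leq` is reflexive, antisymmetric and transitive on `elements`."""
    elements = list(elements)
    reflexive = all(leq(a, a) for a in elements)
    antisymmetric = all(
        a == b or not (leq(a, b) and leq(b, a))
        for a, b in product(elements, repeat=2)
    )
    transitive = all(
        leq(a, c) or not (leq(a, b) and leq(b, c))
        for a, b, c in product(elements, repeat=3)
    )
    return reflexive and antisymmetric and transitive

def divides(a, b):
    """Hypothetical example relation: a 'precedes' b when a divides b."""
    return b % a == 0

divisors = [1, 2, 3, 4, 6, 12]
print(is_partial_order(divisors, divides))             # divisibility on the divisors of 12
print(is_partial_order(divisors, lambda a, b: a < b))  # strict "<" fails reflexivity
```

Divisibility passes the check even though 2 and 3 are not comparable in either direction, which is what makes the order partial rather than total.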

https://en.wikipedia.org/wiki/Order_theory

The causal sets program is an approach to quantum gravity. Its founding principles are that spacetime is fundamentally discrete (a collection of discrete spacetime points, called the elements of the causal set) and that spacetime events are related by a partial order. This partial order has the physical meaning of the causality relations between spacetime events.

The program is based on a theorem[1] by David Malament, which states that if there is a bijective map between two past- and future-distinguishing spacetimes that preserves their causal structure, then the map is a conformal isomorphism. The conformal factor that is left undetermined is related to the volume of regions in the spacetime. This volume factor can be recovered by specifying a volume element for each spacetime point. The volume of a spacetime region could then be found by counting the number of points in that region.

The causal sets program was initiated by Rafael Sorkin, who continues to be its main proponent. He coined the slogan "Order + Number = Geometry" to characterize the above argument. The program provides a theory in which spacetime is fundamentally discrete while retaining local Lorentz invariance.
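The counting argument can be sketched in a few lines. The example below is only a toy under stated assumptions: the events are hand-picked points in 1+1 dimensional Minkowski space with c = 1, used solely to generate a causal order, and the names `precedes` and `interval` are hypothetical. In the causal sets program itself the order is taken as fundamental rather than derived from coordinates.

```python
def precedes(p, q):
    """Causal order for events (t, x) with c = 1: q lies in or on the future light cone of p."""
    (t1, x1), (t2, x2) = p, q
    return t2 - t1 >= abs(x2 - x1)

def interval(points, p, q):
    """Elements causally between p and q, excluding the two endpoints."""
    return [r for r in points if r not in (p, q) and precedes(p, r) and precedes(r, q)]

# Hand-picked events (t, x); an actual causal set would be far larger.
events = [(0, 0), (1, 0), (1, 1), (2, 0), (2, 3), (3, 1), (4, 0)]

between = interval(events, (0, 0), (4, 0))
# "Order" selects the region; "Number" (the count) stands in for its volume,
# measured in units of one element.
print(len(between), between)
```

The event `(2, 3)` is excluded because it lies outside the light cone of `(0, 0)`, so it does not contribute to the count.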

https://en.wikipedia.org/wiki/Causal_sets

Hierarchy theory is a means of studying ecological systems in which the relationship between all of the components is of great complexity. Hierarchy theory focuses on levels of organization and issues of scale, with a specific focus on the role of the observer in the definition of the system.[1] Complexity in this context does not refer to an intrinsic property of the system but to the possibility of representing the systems in a plurality of non-equivalent ways depending on the pre-analytical choices of the observer. Instead of analyzing the whole structure, hierarchy theory refers to the analysis of hierarchical levels, and the interactions between them.

https://en.wikipedia.org/wiki/Hierarchy_theory

Pattern theory, formulated by Ulf Grenander, is a mathematical formalism to describe knowledge of the world as patterns. It differs from other approaches to artificial intelligence in that it does not begin by prescribing algorithms and machinery to recognize and classify patterns; rather, it prescribes a vocabulary to articulate and recast the pattern concepts in precise language. Broad in its mathematical coverage, Pattern Theory spans algebra and statistics, as well as local topological and global entropic properties.

In addition to the new algebraic vocabulary, its statistical approach is novel in its aim to:

- Identify the hidden variables of a data set using real world data rather than artificial stimuli, which was previously commonplace.

- Formulate prior distributions for hidden variables and models for the observed variables that form the vertices of a Gibbs-like graph.

- Study the randomness and variability of these graphs.

- Create the basic classes of stochastic models applied by listing the deformations of the patterns.

- Synthesize (sample) from the models, not just analyze signals with them.

The Brown University Pattern Theory Group was formed in 1972 by Ulf Grenander.[1] Many mathematicians are currently working in this group, noteworthy among them being the Fields Medalist David Mumford. Mumford regards Grenander as his "guru" in Pattern Theory.[citation needed]

https://en.wikipedia.org/wiki/Pattern_theory

Complexity characterises the behaviour of a system or model whose components interact in multiple ways and follow local rules, meaning there is no reasonable higher instruction to define the various possible interactions.[1]

The term is generally used to characterize something with many parts where those parts interact with each other in multiple ways, culminating in a higher order of emergence greater than the sum of its parts. The study of these complex linkages at various scales is the main goal of complex systems theory.

Science as of 2010 takes a number of approaches to characterizing complexity; Zayed et al.[2] reflect many of these. Neil Johnson states that "even among scientists, there is no unique definition of complexity – and the scientific notion has traditionally been conveyed using particular examples..." Ultimately Johnson adopts the definition of "complexity science" as "the study of the phenomena which emerge from a collection of interacting objects".[3]

https://en.wikipedia.org/wiki/Complexity

In physics and philosophy, a relational theory (or relationism) is a framework to understand reality or a physical system in such a way that the positions and other properties of objects are only meaningful relative to other objects. In a relational spacetime theory, space does not exist unless there are objects in it; nor does time exist without events. The relational view proposes that space is contained in objects and that an object represents within itself relationships to other objects. Space can be defined through the relations among the objects that it contains considering their variations through time. The alternative spatial theory is an absolute theory in which the space exists independently of any objects that can be immersed in it.[1]

Relational Order Theories

A number of independent lines of research depict the universe, including the social organization of living creatures, which is of particular interest to humans, as systems, or networks, of relationships. Basic physics has assumed and characterized distinctive regimes of relationships. As common examples, gases, liquids and solids are characterized as systems of objects that stand in relationships of distinctive types. In gases, the elements vary continuously in their spatial relationships to one another. In liquids, the component elements vary continuously in the angles between them but are restricted in spatial dispersion. In solids, both angles and distances are circumscribed. These systems of relationships, where relational states are relatively uniform, bounded and distinct from other relational states in their surroundings, are often characterized as phases of matter, as set out in Phase (matter). These examples are only a few of the sorts of relational regimes which can be identified, made notable by their relative simplicity and ubiquity in the universe.

Such relational systems, or regimes, can be seen as defined by reductions in degrees of freedom among the elements of the system. This diminution in degrees of freedom in relationships among elements is characterized as correlation. In the commonly observed transitions between phases of matter, or phase transitions, the progression from less ordered, or more random, to more ordered, or less random, systems is recognized as the result of correlational processes (e.g. gas to liquid, liquid to solid). In the reverse of this process, transitions from a more ordered state to a less ordered state, as from ice to liquid water, are accompanied by the disruption of correlations.

Correlational processes have been observed at several levels. For example, atoms are fused in suns, building up aggregations of nucleons, which we recognize as complex and heavy atoms. Atoms, both simple and complex, aggregate into molecules. In life, a variety of molecules form extremely complex, dynamically ordered living cells. Over evolutionary time, multicellular organizations developed as dynamically ordered aggregates of cells. Multicellular organisms have in turn developed correlated activities, forming what we term social groups, and so on.

Thus, as is reviewed below, correlation, i.e. ordering, processes have been tiered through several levels, reaching from quantum mechanics upward through complex, dynamic, 'non-equilibrium', systems, including living systems.

Quantum mechanics

Lee Smolin[4] proposes a system of "knots and networks" such that "the geometry of space arises out of a … fundamental quantum level which is made up of an interwoven network of … processes".[5] Smolin and a group of like-minded researchers have devoted a number of years to developing a loop quantum gravity basis for physics, which encompasses this relational network viewpoint.

Carlo Rovelli initiated development of a system of views now called relational quantum mechanics. This concept has at its foundation the view that all systems are quantum systems, and that each quantum system is defined by its relationship with other quantum systems with which it interacts.

The physical content of the theory is not to do with objects themselves, but the relations between them. As Rovelli puts it: "Quantum mechanics is a theory about the physical description of physical systems relative to other systems, and this is a complete description of the world".[6]

Rovelli has proposed that each interaction between quantum systems involves a ‘measurement’, and that such interactions involve reductions in degrees of freedom between the respective systems, to which he applies the term correlation.

Cosmology

The conventional explanations of the Big Bang and related cosmologies (see also Timeline of the Big Bang) project an expansion and related ‘cooling’ of the universe. This has entailed a cascade of phase transitions. Initially there were transitions from quark-gluon states to simple atoms. According to current consensus cosmology, given gravitational forces, simple atoms aggregated into stars, and stars into galaxies and larger groupings. Within stars, gravitational compression fused simple atoms into increasingly complex atoms, and stellar explosions seeded interstellar gas with these atoms. Over the cosmological expansion process, with continuing star formation and evolution, the cosmic mixmaster produced smaller-scale aggregations, many of which, surrounding stars, we call planets. On some planets, interactions between simple and complex atoms could produce differentiated sets of relational states, including gaseous, liquid, and solid (as, on Earth, atmosphere, oceans, and rock or land). In one and probably more of those planet-level aggregations, energy flows and chemical interactions could produce dynamic, self-replicating systems which we call life.

Strictly speaking, phase transitions can run in either direction: toward correlation and differentiation, with a diminution of degrees of freedom, or in the opposite direction, with a disruption of correlations. However, the expanding universe picture presents a framework in which there appears to be a direction of phase transitions toward differentiation and correlation, in the universe as a whole, over time.

This picture of progressive development of order in the observable universe as a whole is at variance with the general framework of the Steady State theory of the universe, now generally abandoned. It also appears to be at variance with an understanding of the Second law of thermodynamics which would view the universe as an isolated system which would at some posited equilibrium be in a maximally random set of configurations.

Two prominent cosmologists, David Layzer[7] and Eric Chaisson,[8] have provided slightly varying but compatible explanations of how the expansion of the universe allows ordered, or correlated, relational regimes to arise and persist, notwithstanding the second law of thermodynamics.

Layzer speaks in terms of the rate of expansion outrunning the rate of equilibration involved at local scales. Chaisson summarizes the argument as "In an expanding universe actual entropy … increases less than the maximum possible entropy"[9] thus allowing for, or requiring, ordered (negentropic) relationships to arise and persist.

Chaisson depicts the universe as a non-equilibrium process, in which energy flows into and through ordered systems, such as galaxies, stars, and life processes. This provides a cosmological basis for non-equilibrium thermodynamics, treated elsewhere to some extent in this encyclopedia at this time. In terms which unite non-equilibrium thermodynamics language and relational analyses language, patterns of processes arise and are evident as ordered, dynamic relational regimes.

Biology

Basic levels

There seems to be agreement that life is a manifestation of non-equilibrium thermodynamics, both as to individual living creatures and as to aggregates of such creatures, or ecosystems. See e.g. Brooks and Wiley,[10] Smolin,[11] Chaisson, Stuart Kauffman[12] and Ulanowicz.[13]

This realization has proceeded from, among other sources, a seminal concept of ‘dissipative systems’ offered by Ilya Prigogine. In such systems, energy feeds through a stable, or correlated, set of dynamic processes, both engendering the system and maintaining the stability of the ordered, dynamic relational regime. A familiar example of such a structure is the Red Spot of Jupiter.

In the 1990s, Eric Schneider and J.J. Kaye[14] began to develop the concept of life working off differentials, or gradients (e.g. the energy gradient manifested on Earth as a result of sunlight impinging on Earth on the one hand and the temperature of interstellar space on the other). Schneider and Kaye identified the contributions of Prigogine and of Erwin Schrödinger's What Is Life? as foundations for their conceptual developments.

Schneider and Dorion Sagan have since elaborated on the view of life dynamics and the ecosystem in Into the Cool.[15] In this perspective, energy flows tapped from gradients create dynamically ordered structures, or relational regimes, in pre-life precursor systems and in living systems.

As noted above, Chaisson[16] has provided a conceptual grounding for the existence of the differentials, or gradients, off which, in the view of Kaye, Schneider, Sagan and others, life works. Those differentials and gradients arise in the ordered structures (such as suns, chemical systems, and the like) created by correlation processes entailed in the expansion and cooling processes of the universe.

Two investigators, Robert Ulanowicz[13] and Stuart Kauffman,[17] have suggested the relevance of autocatalysis models for life processes. In this construct, a group of elements catalyse reactions in a cyclical, or topologically circular, fashion.

Several investigators have used these insights to suggest essential elements of a thermodynamic definition of the life process, which might briefly be summarized as stable, patterned (correlated) processes which intake (and dissipate) energy, and reproduce themselves.[18]

Ulanowicz, a theoretical ecologist, has extended the relational analysis of life processes to ecosystems, using information theory tools. In this approach, an ecosystem is a system of networks of relationships (a common viewpoint at present), which can be quantified and depicted at a basic level in terms of the degrees of order or organization manifested in the systems.
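To make "quantifying the degree of organization of a network" concrete, here is a hedged sketch. The text does not spell out which measures Ulanowicz uses, so the example falls back on the standard information-theoretic average mutual information of a flow matrix as a stand-in; the function name and the two toy flow matrices are assumptions made purely for illustration.

```python
import math

def average_mutual_information(flows):
    """Average mutual information, in bits, of a flow matrix.

    flows[i][j] is the (hypothetical) flow from compartment i to compartment j.
    """
    total = sum(sum(row) for row in flows)
    out_totals = [sum(row) for row in flows]
    in_totals = [sum(col) for col in zip(*flows)]
    ami = 0.0
    for i, row in enumerate(flows):
        for j, t_ij in enumerate(row):
            if t_ij > 0:  # zero flows contribute nothing
                ami += (t_ij / total) * math.log2(
                    t_ij * total / (out_totals[i] * in_totals[j])
                )
    return ami

constrained = [[0, 10], [10, 0]]  # each compartment feeds exactly one other
diffuse = [[5, 5], [5, 5]]        # flows spread evenly over every pathway

print(average_mutual_information(constrained))
print(average_mutual_information(diffuse))
```

The tightly constrained network scores higher than the diffuse one, which matches the intuition that fewer degrees of freedom among the flows correspond to more organization.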

Two prominent investigators, Lynn Margulis and, more fully, Leo Buss,[19] have developed a view of the evolved life structure as exhibiting tiered levels of (dynamic) aggregation of life units. In each level of aggregation, the component elements have mutually beneficial, or complementary, relationships.

In brief summary, the comprehensive Buss approach is cast in terms of replicating precursors which became inclusions in single-celled organisms, thence single-celled organisms, thence eukaryotic cells (which are, in Margulis’ now widely adopted analysis, made up of single-celled organisms), thence multicellular organisms, composed of eukaryotic cells, and thence social organizations composed of multicellular organisms. This work adds to the ‘tree of life’ metaphor a sort of ‘layer cake of life’ metaphor, taking into account tiered levels of life organization.

Related areas of current interest

Second law of thermodynamics

The development of non-equilibrium thermodynamics and the observations of cosmological generation of ordered systems, identified above, have engendered proposed modifications in the interpretation of the Second Law of Thermodynamics, as compared with the earlier interpretations of the late 19th and the 20th century. For example, Chaisson and Layzer have advanced reconciliations of the concept of entropy with the cosmological creation of order. In another approach, Schneider and D. Sagan, in Into the Cool and other publications, depict the organization of life, and some other phenomena such as Bénard cells, as entropy-generating phenomena which facilitate the dissipation, or reduction, of gradients (without in this treatment visibly getting to the prior issue of how gradients have arisen).

The ubiquity of power law and log-normal distribution manifestations in the universe

The development of network theories has yielded observations of widespread, or ubiquitous, appearance of power law and log-normal distributions of events in such networks, and in nature generally. (Mathematicians often distinguish between ‘power laws’ and ‘log-normal’ distributions, but not all discussions do so.) Two observers, Albert-László Barabási[20] and Mark Buchanan,[21] have provided documentation of these phenomena.

Buchanan demonstrated that power law distributions occur throughout nature, in events such as earthquake frequencies, the size of cities, the size of sun and planetary masses, etc. Both Buchanan and Barabási reported the demonstrations of a variety of investigators that such power law distributions arise in phase transitions.
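A power law has the form y = C·x^(−α), so it appears as a straight line of slope −α on log-log axes. The sketch below recovers the exponent from exact synthetic data by fitting that line. The data, the constant 1000 and the exponent 2 are made up for illustration, and `loglog_slope` is a hypothetical helper. On real, noisy measurements a plain log-log regression is generally regarded as a crude estimator, and a log-normal distribution can look similar over a limited range, which is one reason the two are sometimes conflated.

```python
import math

def loglog_slope(xs, ys):
    """Least-squares slope of log(y) against log(x)."""
    lx = [math.log(x) for x in xs]
    ly = [math.log(y) for y in ys]
    mx = sum(lx) / len(lx)
    my = sum(ly) / len(ly)
    num = sum((a - mx) * (b - my) for a, b in zip(lx, ly))
    den = sum((a - mx) ** 2 for a in lx)
    return num / den

# Synthetic, noise-free data following y = 1000 * x^(-2).
sizes = [1, 2, 4, 8, 16, 32]
counts = [1000.0 * s ** -2.0 for s in sizes]

alpha = -loglog_slope(sizes, counts)
print(round(alpha, 6))
```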

In Barabási's characterization "…if the system is forced to undergo a phase transition … then power laws emerge – nature's unmistakable sign that chaos is departing in favor of order. The theory of phase transitions told us loud and clear that the road from disorder to order is maintained by the powerful forces of self organization and paved with power laws."[22]

Given Barabási's observation that phase transitions are, in one direction, correlational events yielding ordered relationships, relational theories of order following this logic would consider the ubiquity of power laws to be a reflection of the ubiquity of combinatorial processes of correlation in creating all ordered systems.

Emergence

The relational regime approach includes a straightforward derivation of the concept of emergence.

From the perspective of relational theories of order, emergent phenomena could be said to be relational effects of an aggregated and differentiated system made of many elements, in a field of relationships external to the considered system, when the elements of the considered system, taken separately and independently, would not have such effects.

For example, the stable structure of a rock, which allows very few degrees of freedom for its elements, can be seen to have a variety of external manifestations depending on the relational system in which it may be found. It could impede fluid flow, as a part of a retaining wall. If it were placed in a wind tunnel, it could be said to induce turbulence in the flow of air around it. In contests among rivalrous humans, it has sometimes been a convenient skull cracker. Or it might become, though itself a composite, an element of another solid, having similarly reduced degrees of freedom for its components, as would a pebble in a matrix making up cement.

To shift particulars, embedding carbon filaments in a resin making up a composite material can yield ‘emergent’ effects. (See the composite material article for a useful description of how varying components can, in a composite, yield effects within an external field of use, or relational setting, which the components alone would not yield).

This perspective has been advanced by Peter Corning, among others. In Corning's words, "...the debate about whether or not the whole can be predicted from the properties of the parts misses the point. Wholes produce unique combined effects, but many of these effects may be co-determined by the context and the interactions between the whole and its environment(s)."[23]

That this derivation of the concept of emergence is conceptually straightforward does not imply that the relational system may not itself be complex, or participate as an element in a complex system of relationships – as is illustrated using different terminology in some aspects of the linked emergence and complexity articles.

The term "emergence" has been used in the very different sense of characterizing the tiering of relational systems (groupings made of groupings) which constitutes the apparently progressive development of order in the universe, described by Chaisson, Layzer, and others, and noted in the Cosmology and Life Organization portions of this page. See for an additional example the derived, popularized narrative Epic of Evolution described in this encyclopedia. From his perspective, Corning adverts to this process of building 'wholes' which then in some circumstances participate in complex systems, such as life systems, as follows "...it is the synergistic effects produced by wholes that are the very cause of the evolution of complexity in nature."

The arrow of time

As the article on the Arrow of time makes clear, there have been a variety of approaches to defining time and defining how time may have a direction.

The theories which outline a development of order in the universe, rooted in the asymmetric processes of expansion and cooling, project an ‘arrow of time’. That is, the expanding universe is a sustained process which as it proceeds yields changes of state which do not appear, over the universe as a whole, to be reversible. The changes of state in a given system, and in the universe as a whole, can be earmarked by observable periodicities to yield the concept of time.

Given the challenges confronting humans in determining how the Universe may evolve over billions and trillions of our years, it is difficult to say how long this arrow may be and its eventual end state. At this time some prominent investigators suggest that much if not most of the visible matter of the universe will collapse into black holes which can be depicted as isolated, in a static cosmology.[24]

Economics

At this time there is a visible attempt to re-cast the foundations of the economics discipline in the terms of non-equilibrium dynamics and network effects.

Albert-László Barabási, Igor Matutinovic[25] and others have suggested that economic systems can fruitfully be seen as network phenomena generated by non-equilibrium forces.

As is set out in Thermoeconomics, a group of analysts have adopted the non-equilibrium thermodynamics concepts and mathematical apparatus, discussed above, as a foundational approach to considering and characterizing economic systems. They propose that human economic systems can be modeled as thermodynamic systems. Then, based on this premise, theoretical economic analogs of the first and second laws of thermodynamics are developed.[26] In addition, the thermodynamic quantity exergy, i.e. a measure of the useful work energy of a system, is one measure of value.[citation needed]

Thermoeconomists argue that economic systems always involve matter, energy, entropy, and information.[27] Thermoeconomics thus adapts the theories in non-equilibrium thermodynamics, in which structure formations called dissipative structures form, and information theory, in which information entropy is a central construct, to the modeling of economic activities in which the natural flows of energy and materials function to create and allocate resources. In thermodynamic terminology, human economic activity (as well as the activity of the human life units which make it up) may be described as a dissipative system, which flourishes by consuming free energy in transformations and exchange of resources, goods, and services.

The article on Complexity economics also contains concepts related to this line of thinking.

Another approach is led by researchers belonging to the school of evolutionary and institutional economics (Jason Potts), and ecological economics (Faber et al.).[28]

Separately, some economists have adopted the language of ‘network industries’.[29]

Particular formalisms

Two other entries in this encyclopedia set out particular formalisms involving mathematical modeling of relationships: Theory of relations, which focuses to a substantial extent on mathematical expressions for relationships, and Relational theory, which records suggestions of a universal perspective on modeling and reality.

https://en.wikipedia.org/wiki/Relational_theory

Relationalism is any theoretical position that gives importance to the relational nature of things. For relationalism, things exist and function only as relational entities. Relationalism may be contrasted with relationism, which tends to emphasize relations per se.

https://en.wikipedia.org/wiki/Relationalism

Relativism is a family of philosophical views which deny claims to objectivity within a particular domain and assert that facts in that domain are relative to the perspective of an observer or the context in which they are assessed.[1] There are many different forms of relativism, with a great deal of variation in scope and differing degrees of controversy among them.[2] Moral relativism encompasses the differences in moral judgments among people and cultures.[3] Epistemic relativism holds that there are no absolute facts regarding norms of belief, justification, or rationality, and that there are only relative ones.[4] Alethic relativism (also factual relativism) is the doctrine that there are no absolute truths, i.e., that truth is always relative to some particular frame of reference, such as a language or a culture (cultural relativism).[5] Some forms of relativism also bear a resemblance to philosophical skepticism.[6] Descriptive relativism seeks to describe the differences among cultures and people without evaluation, while normative relativism evaluates the morality or truthfulness of views within a given framework.

https://en.wikipedia.org/wiki/Relativism

Epistemology (/ɪˌpɪstɪˈmɒlədʒi/; from Greek ἐπιστήμη, epistēmē 'knowledge', and -logy) is the branch of philosophy concerned with knowledge. Epistemologists study the nature, origin, and scope of knowledge, epistemic justification, the rationality of belief, and various related issues. Epistemology is considered a major subfield of philosophy, along with other major subfields such as ethics, logic, and metaphysics.[1]

Debates in epistemology are generally clustered around four core areas:[2][3][4]

- The philosophical analysis of the nature of knowledge and the conditions required for a belief to constitute knowledge, such as truth and justification

- Potential sources of knowledge and justified belief, such as perception, reason, memory, and testimony

- The structure of a body of knowledge or justified belief, including whether all justified beliefs must be derived from justified foundational beliefs or whether justification requires only a coherent set of beliefs

- Philosophical skepticism, which questions the possibility of knowledge, and related problems, such as whether skepticism poses a threat to our ordinary knowledge claims and whether it is possible to refute skeptical arguments

In these debates and others, epistemology aims to answer questions such as "What do we know?", "What does it mean to say that we know something?", "What makes justified beliefs justified?", and "How do we know that we know?".[1][2][5][6][7]

https://en.wikipedia.org/wiki/Epistemology

A priori and a posteriori ('from the earlier' and 'from the later', respectively) are Latin phrases used in philosophy to distinguish types of knowledge, justification, or argument by their reliance on empirical evidence or experience. A priori knowledge is that which is independent from experience. Examples include mathematics,[i] tautologies, and deduction from pure reason.[ii] A posteriori knowledge is that which depends on empirical evidence. Examples include most fields of science and aspects of personal knowledge.

The terms originate from the analytic methods of Aristotle's Organon: prior analytics covering deductive logic from definitions and first principles, and posterior analytics covering inductive logic from observational evidence.

Both terms appear in Euclid's Elements but were popularized by Immanuel Kant's Critique of Pure Reason, one of the most influential works in the history of philosophy.[1] Both terms are primarily used as modifiers to the noun "knowledge" (i.e. "a priori knowledge"). A priori can also be used to modify other nouns such as "truth". Philosophers also may use apriority, apriorist, and aprioricity as nouns referring to the quality of being a priori.[2]

https://en.wikipedia.org/wiki/A_priori_and_a_posteriori

Bayesian probability is an interpretation of the concept of probability, in which, instead of frequency or propensity of some phenomenon, probability is interpreted as reasonable expectation[1] representing a state of knowledge[2] or as quantification of a personal belief.[3]
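Reading probability as a state of knowledge means that it is revised as evidence arrives. A minimal sketch of that revision using Bayes' rule follows. The starting belief of 1/2 and the likelihoods 8/10 and 3/10 are invented numbers, and `posterior` is a hypothetical helper, not part of any library.

```python
from fractions import Fraction

def posterior(prior, likelihood_if_true, likelihood_if_false):
    """Bayes' rule for one hypothesis H and one observation E.

    prior               -- P(H) before seeing E
    likelihood_if_true  -- P(E | H)
    likelihood_if_false -- P(E | not H)
    """
    evidence = prior * likelihood_if_true + (1 - prior) * likelihood_if_false
    return prior * likelihood_if_true / evidence

# Start undecided, then observe the same kind of supporting evidence three times.
belief = Fraction(1, 2)
for _ in range(3):
    belief = posterior(belief, Fraction(8, 10), Fraction(3, 10))

print(belief, float(belief))
```

Each observation multiplies the odds in favor of the hypothesis by the likelihood ratio (here 8/3), so the belief moves toward 1 without ever reaching it.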

https://en.wikipedia.org/wiki/Bayesian_probability

Truth is the property of being in accord with fact or reality.[1] In everyday language, truth is typically ascribed to things that aim to represent reality or otherwise correspond to it, such as beliefs, propositions, and declarative sentences.[2]

Truth is usually held to be the opposite of falsehood. The concept of truth is discussed and debated in various contexts, including philosophy, art, theology, and science. Most human activities depend upon the concept, where its nature as a concept is assumed rather than being a subject of discussion; these include most of the sciences, law, journalism, and everyday life. Some philosophers view the concept of truth as basic, and unable to be explained in any terms that are more easily understood than the concept of truth itself.[2] Most commonly, truth is viewed as the correspondence of language or thought to a mind-independent world. This is called the correspondence theory of truth.

Various theories and views of truth continue to be debated among scholars, philosophers, and theologians.[2][3] There are many different questions about the nature of truth which are still the subject of contemporary debates, such as: How do we define truth? Is it even possible to give an informative definition of truth? What things are truth-bearers and are therefore capable of being true or false? Are truth and falsehood bivalent, or are there other truth values? What are the criteria of truth that allow us to identify it and to distinguish it from falsehood? What role does truth play in constituting knowledge? And is truth always absolute, or can it be relative to one's perspective?

https://en.wikipedia.org/wiki/Truth

Knowledge is a familiarity, awareness, or understanding of someone or something, such as facts (descriptive knowledge), skills (procedural knowledge), or objects (acquaintance knowledge). By most accounts, knowledge can be acquired in many different ways and from many sources, including but not limited to perception, reason, memory, testimony, scientific inquiry, education, and practice. The philosophical study of knowledge is called epistemology.

The term "knowledge" can refer to a theoretical or practical understanding of a subject. It can be implicit (as with practical skill or expertise) or explicit (as with the theoretical understanding of a subject); formal or informal; systematic or particular.[1] The philosopher Plato famously pointed out the need for a distinction between knowledge and true belief in the Theaetetus, leading many to attribute to him a definition of knowledge as "justified true belief".[2][3] The difficulties with this definition raised by the Gettier problem have been the subject of extensive debate in epistemology for more than half a century.[2]

https://en.wikipedia.org/wiki/Knowledge

Reality is the sum or aggregate of all that is real or existent within a system, as opposed to that which is only imaginary. The term is also used to refer to the ontological status of things, indicating their existence.[1] In physical terms, reality is the totality of a system, known and unknown.[2] Philosophical questions about the nature of reality or existence or being are considered under the rubric of ontology, which is a major branch of metaphysics in the Western philosophical tradition. Ontological questions also feature in diverse branches of philosophy, including the philosophy of science, philosophy of religion, philosophy of mathematics, and philosophical logic. These include questions about whether only physical objects are real (i.e., Physicalism), whether reality is fundamentally immaterial (e.g., Idealism), whether hypothetical unobservable entities posited by scientific theories exist, whether God exists, whether numbers and other abstract objects exist, and whether possible worlds exist.

https://en.wikipedia.org/wiki/Reality

Existence is the ability of an entity to interact with physical or mental reality. In philosophy, it refers to the ontological property[1] of being.[2]

https://en.wikipedia.org/wiki/Existence

Essence (Latin: essentia) is a polysemic term, used in philosophy and theology as a designation for the property or set of properties that make an entity or substance what it fundamentally is, and which it has by necessity, and without which it loses its identity. Essence is contrasted with accident: a property that the entity or substance has contingently, without which the substance can still retain its identity.

The concept originates rigorously with Aristotle (although it can also be found in Plato),[1] who used the Greek expression to ti ên einai (τὸ τί ἦν εἶναι,[2] literally meaning "the what it was to be" and corresponding to the scholastic term quiddity) or sometimes the shorter phrase to ti esti (τὸ τί ἐστι,[3] literally meaning "the what it is" and corresponding to the scholastic term haecceity) for the same idea. This phrase presented such difficulties for its Latin translators that they coined the word essentia (English "essence") to represent the whole expression. For Aristotle and his scholastic followers, the notion of essence is closely linked to that of definition (ὁρισμός horismos).[4]

In the history of Western philosophy, essence has often served as a vehicle for doctrines that tend to individuate different forms of existence as well as different identity conditions for objects and properties; in this logical meaning, the concept has given a strong theoretical and common-sense basis to the whole family of logical theories based on the "possible worlds" analogy set up by Leibniz and developed in the intensional logic from Carnap to Kripke, which was later challenged by "extensionalist" philosophers such as Quine.

https://en.wikipedia.org/wiki/Essence

Axiology (from Greek ἀξία, axia: "value, worth"; and -λογία, -logia: "study of") is the philosophical study of value. It includes questions about the nature and classification of values and about what kinds of things have value. It is intimately connected with various other philosophical fields that crucially depend on the notion of value, like ethics, aesthetics or philosophy of religion.[1][2] It is also closely related to value theory and meta-ethics. The term was first used by Paul Lapie, in 1902,[3][4] and Eduard von Hartmann, in 1908.[5][6]

https://en.wikipedia.org/wiki/Axiology

Logic[1] is an interdisciplinary field which studies truth and reasoning. Informal logic seeks to characterize valid arguments informally, for instance by listing varieties of fallacies. Formal logic represents statements and argument patterns symbolically, using formal systems such as first order logic. Within formal logic, mathematical logic studies the mathematical characteristics of logical systems, while philosophical logic applies them to philosophical problems such as the nature of meaning, knowledge, and existence. Systems of formal logic are also applied in other fields including linguistics, cognitive science, and computer science.

Logic has been studied since antiquity, with early approaches including Aristotelian logic, Stoic logic, Anviksiki, and the Mohists. Modern formal logic has its roots in the work of late 19th century mathematicians such as Gottlob Frege.

https://en.wikipedia.org/wiki/Logic

Ethics or moral philosophy is a branch[1] of philosophy that "involves systematizing, defending, and recommending concepts of right and wrong behavior".[2] The field of ethics, along with aesthetics, concerns matters of value; these fields comprise the branch of philosophy called axiology.[3]

Ethics seeks to resolve questions of human morality by defining concepts such as good and evil, right and wrong, virtue and vice, justice and crime. As a field of intellectual inquiry, moral philosophy is related to the fields of moral psychology, descriptive ethics, and value theory.

Three major areas of study within ethics recognized today are:[2]

- Meta-ethics, concerning the theoretical meaning and reference of moral propositions, and how their truth values (if any) can be determined;

- Normative ethics, concerning the practical means of determining a moral course of action;

- Applied ethics, concerning what a person is obligated (or permitted) to do in a specific situation or a particular domain of action.[2]

Philosophy of science is a branch of philosophy concerned with the foundations, methods, and implications of science. The central questions of this study concern what qualifies as science, the reliability of scientific theories, and the ultimate purpose of science. This discipline overlaps with metaphysics, ontology, and epistemology, for example, when it explores the relationship between science and truth. Philosophy of science focuses on metaphysical, epistemic and semantic aspects of science. Ethical issues such as bioethics and scientific misconduct are often considered ethics or science studies rather than philosophy of science.

There is no consensus among philosophers about many of the central problems concerned with the philosophy of science, including whether science can reveal the truth about unobservable things and whether scientific reasoning can be justified at all. In addition to these general questions about science as a whole, philosophers of science consider problems that apply to particular sciences (such as biology or physics). Some philosophers of science also use contemporary results in science to reach conclusions about philosophy itself.

While philosophical thought pertaining to science dates back at least to the time of Aristotle, general philosophy of science emerged as a distinct discipline only in the 20th century in the wake of the logical positivist movement, which aimed to formulate criteria for ensuring all philosophical statements' meaningfulness and objectively assessing them. Charles Sanders Peirce and Karl Popper moved on from positivism to establish a modern set of standards for scientific methodology. Thomas Kuhn's 1962 book The Structure of Scientific Revolutions was also formative, challenging the view of scientific progress as steady, cumulative acquisition of knowledge based on a fixed method of systematic experimentation and instead arguing that any progress is relative to a "paradigm", the set of questions, concepts, and practices that define a scientific discipline in a particular historical period.[1]

Subsequently, the coherentist approach to science, in which a theory is validated if it makes sense of observations as part of a coherent whole, became prominent due to W.V. Quine and others. Some thinkers such as Stephen Jay Gould seek to ground science in axiomatic assumptions, such as the uniformity of nature. A vocal minority of philosophers, and Paul Feyerabend in particular, argue that there is no such thing as the "scientific method", so all approaches to science should be allowed, including explicitly supernatural ones. Another approach to thinking about science involves studying how knowledge is created from a sociological perspective, an approach represented by scholars like David Bloor and Barry Barnes. Finally, a tradition in continental philosophy approaches science from the perspective of a rigorous analysis of human experience.

Philosophies of the particular sciences range from questions about the nature of time raised by Einstein's general relativity, to the implications of economics for public policy. A central theme is whether the terms of one scientific theory can be intra- or intertheoretically reduced to the terms of another. That is, can chemistry be reduced to physics, or can sociology be reduced to individual psychology? The general questions of philosophy of science also arise with greater specificity in some particular sciences. For instance, the question of the validity of scientific reasoning is seen in a different guise in the foundations of statistics. The question of what counts as science and what should be excluded arises as a life-or-death matter in the philosophy of medicine. Additionally, the philosophies of biology, of psychology, and of the social sciences explore whether the scientific studies of human nature can achieve objectivity or are inevitably shaped by values and by social relations.

https://en.wikipedia.org/wiki/Philosophy_of_science

Methodology is "'a contextual framework' for research, a coherent and logical scheme based on views, beliefs, and values, that guides the choices researchers [or other users] make".[1][2]

It comprises the theoretical analysis of the body of methods and principles associated with a branch of knowledge such that the methodologies employed from differing disciplines vary depending on their historical development. This creates a continuum of methodologies[3] that stretch across competing understandings of how knowledge and reality are best understood. This situates methodologies within overarching philosophies and approaches.[4]

Methodology may be visualized as a spectrum from a predominantly quantitative approach towards a predominantly qualitative approach.[5] Although a methodology may conventionally sit specifically within one of these approaches, researchers may blend approaches in answering their research objectives and so have methodologies that are multimethod and/or interdisciplinary.[6][7][8]

Overall, a methodology does not set out to provide solutions; it is therefore not the same as a method.[8][9] Instead, a methodology offers a theoretical perspective for understanding which method, set of methods, or best practices can be applied to the research question(s) at hand.

https://en.wikipedia.org/wiki/Methodology

Metaphysics is the branch of philosophy that studies the first principles of being, identity and change, space and time, causality, necessity and possibility.[1][2][3] It includes questions about the nature of consciousness and the relationship between mind and matter. The word "metaphysics" comes from two Greek words that, together, literally mean "after or behind or among [the study of] the natural". It has been suggested that the term might have been coined by a first century CE editor who assembled various small selections of Aristotle’s works into the treatise we now know by the name Metaphysics (μετὰ τὰ φυσικά, meta ta physika, lit. 'after the Physics', another of Aristotle's works).[4]

Metaphysics studies questions related to what it is for something to exist and what types of existence there are. Metaphysics seeks to answer, in an abstract and fully general manner, the questions:[5]

- What is there?

- What is it like?

Topics of metaphysical investigation include existence, objects and their properties, space and time, cause and effect, and possibility. Metaphysics is considered one of the four main branches of philosophy, along with epistemology, logic, and ethics.[6]

https://en.wikipedia.org/wiki/Metaphysics

Pragmatism is a philosophical tradition that considers words and thought as tools and instruments for prediction, problem solving, and action, and rejects the idea that the function of thought is to describe, represent, or mirror reality. Pragmatists contend that most philosophical topics—such as the nature of knowledge, language, concepts, meaning, belief, and science—are all best viewed in terms of their practical uses and successes.

Pragmatism began in the United States in the 1870s. Its origins are often attributed to the philosophers Charles Sanders Peirce, William James, and John Dewey. In 1878, Peirce described it in his pragmatic maxim: "Consider the practical effects of the objects of your conception. Then, your conception of those effects is the whole of your conception of the object."[1]

https://en.wikipedia.org/wiki/Pragmatism

Philosophical skepticism (UK spelling: scepticism; from Greek σκέψις skepsis, "inquiry") is a family of philosophical views that question the possibility of knowledge.[1][2] Philosophical skeptics are often classified into two general categories: those who deny all possibility of knowledge, and those who advocate for the suspension of judgment due to the inadequacy of evidence.[3] This distinction is modeled after the differences between the Academic skeptics and the Pyrrhonian skeptics in ancient Greek philosophy.

https://en.wikipedia.org/wiki/Philosophical_skepticism

The analytic–synthetic distinction is a semantic distinction, used primarily in philosophy to distinguish between propositions (in particular, statements that are affirmative subject–predicate judgments) that are of two types: analytic propositions and synthetic propositions. Analytic propositions are true or not true solely by virtue of their meaning, whereas synthetic propositions' truth, if any, derives from how their meaning relates to the world.[1]

While the distinction was first proposed by Immanuel Kant, it was revised considerably over time, and different philosophers have used the terms in very different ways. Furthermore, some philosophers (starting with W.V.O. Quine) have questioned whether there is even a clear distinction to be made between propositions which are analytically true and propositions which are synthetically true.[2] Debates regarding the nature and usefulness of the distinction continue to this day in contemporary philosophy of language.[2]

https://en.wikipedia.org/wiki/Analytic–synthetic_distinction

The internal–external distinction is a distinction used in philosophy to divide an ontology into two parts: an internal part consisting of a linguistic framework and observations related to that framework, and an external part concerning practical questions about the utility of that framework. This division was introduced by Rudolf Carnap in his work "Empiricism, Semantics, and Ontology".[1] It was subsequently criticized at length by Willard Van Orman Quine in a number of works,[2][3] and was considered for some time to have been discredited. However, recently a number of authors have come to the support of some or another version of Carnap's approach.[4][5][6]

https://en.wikipedia.org/wiki/Internal–external_distinction

Ontology is the branch of philosophy that studies concepts such as existence, being, becoming, and reality. It includes the questions of how entities are grouped into basic categories and which of these entities exist on the most fundamental level. Ontology is sometimes referred to as the science of being and belongs to the major branch of philosophy known as metaphysics.

Ontologists often try to determine what the categories or highest kinds are and how they form a system of categories that provides an encompassing classification of all entities. Commonly proposed categories include substances, properties, relations, states of affairs and events. These categories are characterized by fundamental ontological concepts, like particularity and universality, abstractness and concreteness, or possibility and necessity. Of special interest is the concept of ontological dependence, which determines whether the entities of a category exist on the most fundamental level. Disagreements within ontology are often about whether entities belonging to a certain category exist and, if so, how they are related to other entities.[1]

When used as a countable noun, the terms "ontology" and "ontologies" refer not to the science of being but to theories within the science of being. Ontological theories can be divided into various types according to their theoretical commitments. Monocategorical ontologies hold that there is only one basic category, which is rejected by polycategorical ontologies. Hierarchical ontologies assert that some entities exist on a more fundamental level and that other entities depend on them. Flat ontologies, on the other hand, deny such a privileged status to any entity.

https://en.wikipedia.org/wiki/Ontology

Ontogeny (also ontogenesis) is the origination and development of an organism (both physical and psychological, e.g., moral development[1]), usually from the time of fertilization of the egg to adult. The term can also be used to refer to the study of the entirety of an organism's lifespan.

Ontogeny is the developmental history of an organism within its own lifetime, as distinct from phylogeny, which refers to the evolutionary history of a species. In practice, writers on evolution often speak of species as "developing" traits or characteristics. This can be misleading. While developmental (i.e., ontogenetic) processes can influence subsequent evolutionary (e.g., phylogenetic) processes[2] (see evolutionary developmental biology and recapitulation theory), individual organisms develop (ontogeny), while species evolve (phylogeny).

Ontogeny, embryology and developmental biology are closely related studies and those terms are sometimes used interchangeably. Aspects of ontogeny are morphogenesis, the development of form; tissue growth; and cellular differentiation. The term ontogeny has also been used in cell biology to describe the development of various cell types within an organism.[3]

Ontogeny is a useful field of study in many disciplines, including developmental biology, developmental psychology, developmental cognitive neuroscience, and developmental psychobiology.

Ontogeny is used in anthropology as "the process through which each of us embodies the history of our own making."[4]

https://en.wikipedia.org/wiki/Ontogeny

In moral philosophy, deontological ethics or deontology (from Greek: δέον, 'obligation, duty' + λόγος, 'study') is the normative ethical theory that the morality of an action should be based on whether that action itself is right or wrong under a series of rules, rather than based on the consequences of the action.[1] It is sometimes described as duty-, obligation-, or rule-based ethics.[2][3] Deontological ethics is commonly contrasted to consequentialism,[4] virtue ethics, and pragmatic ethics. In this terminology, action is more important than the consequences.

The term deontological was first used to describe the current, specialised definition by C. D. Broad in his 1930 book, Five Types of Ethical Theory.[5] Older usage of the term goes back to Jeremy Bentham, who coined it prior to 1816 as a synonym of dicastic or censorial ethics (i.e., ethics based on judgement).[6][7] The more general sense of the word is retained in French, especially in the term code de déontologie (ethical code), in the context of professional ethics.

Depending on the system of deontological ethics under consideration, a moral obligation may arise from an external or internal source, such as a set of rules inherent to the universe (ethical naturalism), religious law, or a set of personal or cultural values (any of which may be in conflict with personal desires).

https://en.wikipedia.org/wiki/Deontology

In linguistics and philosophy, modality is the phenomenon whereby language is used to discuss possible situations. For instance, a modal expression may convey that something is likely, desirable, or permissible. Quintessential modal expressions include modal auxiliaries such as "could", "should", or "must"; modal adverbs such as "possibly" or "necessarily"; and modal adjectives such as "conceivable" or "probable". However, modal components have been identified in the meanings of countless natural language expressions including counterfactuals, propositional attitudes, evidentials, habituals, and generics.

Modality has been intensely studied from a variety of perspectives. Within linguistics, typological studies have traced crosslinguistic variation in the strategies used to mark modality, with a particular focus on its interaction with Tense–aspect–mood marking. Theoretical linguists have sought to analyze both the propositional content and discourse effects of modal expressions using formal tools derived from modal logic. Within philosophy, linguistic modality is often seen as a window into broader metaphysical notions of necessity and possibility.

See also

- Angelika Kratzer

- Counterfactuals

- Dynamic semantics

- Evidentiality

- Frank R. Palmer

- Free choice inference

- Modal logic

- Modal subordination

- Modality (semiotics)

- Possible world

- Tense–aspect–mood

- English modal adverbs at Wiktionary

https://en.wikipedia.org/wiki/Modality_(natural_language)

Philosophy of language is the branch of philosophy that studies language. Its primary concerns include the nature of linguistic meaning, reference, language use, language learning and creation, language understanding, truth, thought and experience (to the extent that both are linguistic), communication, interpretation, and translation.

https://en.wikipedia.org/wiki/Category:Philosophy_of_language

Philosophy (from Greek: φιλοσοφία, philosophia, 'love of wisdom')[1][2] is the study of general and fundamental questions, such as those about existence, reason, knowledge, values, mind, and language.[3][4] Such questions are often posed as problems[5][6] to be studied or resolved. Some sources claim the term was coined by Pythagoras (c. 570 – c. 495 BCE),[7][8] others dispute this story,[9][10] arguing that Pythagoreans merely claimed use of a preexisting term.[11] Philosophical methods include questioning, critical discussion, rational argument, and systematic presentation.[12][13][i]

Historically, philosophy encompassed all bodies of knowledge and a practitioner was known as a philosopher.[14] From the time of Ancient Greek philosopher Aristotle to the 19th century, "natural philosophy" encompassed astronomy, medicine, and physics.[15] For example, Newton's 1687 Mathematical Principles of Natural Philosophy later became classified as a book of physics.

In the 19th century, the growth of modern research universities led academic philosophy and other disciplines to professionalize and specialize.[16][17] Since then, various areas of investigation that were traditionally part of philosophy have become separate academic disciplines, namely the social sciences such as psychology, sociology, linguistics, and economics.

Today, major subfields of academic philosophy include metaphysics, which is concerned with the fundamental nature of existence and reality; epistemology, which studies the nature of knowledge and belief; ethics, which is concerned with moral value; and logic, which studies the rules of inference that allow one to derive conclusions from true premises.[18][19] Other notable subfields include philosophy of science, political philosophy, aesthetics, philosophy of language, and philosophy of mind.

https://en.wikipedia.org/wiki/Philosophy

In linguistics, evidentiality[1][2] is, broadly, the indication of the nature of evidence for a given statement; that is, whether evidence exists for the statement and if so, what kind. An evidential (also verificational or validational) is the particular grammatical element (affix, clitic, or particle) that indicates evidentiality. Languages with only a single evidential have had terms such as mediative, médiatif, médiaphorique, and indirective used instead of evidential.

https://en.wikipedia.org/wiki/Evidentiality

Free choice is a phenomenon in natural language where a disjunction appears to receive a conjunctive interpretation when it interacts with a modal operator. For example, the following English sentences can be interpreted to mean that the addressee can watch a movie and that they can also play video games, depending on their preference.[1]

- You can watch a movie or play video games.

- You can watch a movie or you can play video games.

Free choice inferences are a major topic of research in formal semantics and philosophical logic because they are not valid in classical systems of modal logic. If they were valid, then the semantics of natural language would validate the Free Choice Principle.

- Free Choice Principle: $\Diamond(P \lor Q) \rightarrow (\Diamond P \land \Diamond Q)$

This principle is not valid in classical modal logic. Moreover, adding this principle to standard modal logics would allow one to conclude $\Diamond Q$ from $\Diamond P$, for any $P$ and $Q$. This observation is known as the Paradox of Free Choice.[1][2] To resolve this paradox, some researchers have proposed analyses of free choice within nonclassical frameworks such as dynamic semantics, linear logic, alternative semantics, and inquisitive semantics.[1][3][4] Others have proposed ways of deriving free choice inferences as scalar implicatures which arise on the basis of classical lexical entries for disjunction and modality.[1][5][6][7]
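The reasoning behind the paradox can be sketched as a short derivation. It assumes the principle takes the commonly cited form $\Diamond(P \lor Q) \rightarrow (\Diamond P \land \Diamond Q)$ and that the background logic is a normal modal logic, in which possibility is monotone (if $A \rightarrow B$ is a theorem, so is $\Diamond A \rightarrow \Diamond B$). The step labels are illustrative only.

```latex
\begin{align*}
1.\quad & \Diamond P
  && \text{premise} \\
2.\quad & P \rightarrow (P \lor Q)
  && \text{propositional tautology} \\
3.\quad & \Diamond P \rightarrow \Diamond (P \lor Q)
  && \text{from 2, monotonicity of } \Diamond \\
4.\quad & \Diamond (P \lor Q)
  && \text{from 1 and 3, modus ponens} \\
5.\quad & \Diamond (P \lor Q) \rightarrow (\Diamond P \land \Diamond Q)
  && \text{Free Choice Principle (assumed as an axiom)} \\
6.\quad & \Diamond P \land \Diamond Q
  && \text{from 4 and 5, modus ponens} \\
7.\quad & \Diamond Q
  && \text{from 6, conjunction elimination}
\end{align*}
```

Since $Q$ was arbitrary, anything at all would be possible (or permitted) as soon as one thing is, which is why the principle cannot simply be added to a classical system.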

Free choice inferences are most widely studied for deontic modals, but also arise with other flavors of modality as well as imperatives, conditionals, and other kinds of operators.[1][8][9][4] Indefinite noun phrases give rise to a similar inference which is also referred to as "free choice" though researchers disagree as to whether it forms a natural class with disjunctive free choice.[9][10]

https://en.wikipedia.org/wiki/Free_choice_inference

Deontic logic is the field of philosophical logic that is concerned with obligation, permission, and related concepts. Alternatively, a deontic logic is a formal system that attempts to capture the essential logical features of these concepts. Typically, a deontic logic uses OA to mean it is obligatory that A (or it ought to be (the case) that A), and PA to mean it is permitted (or permissible) that A.

Dyadic deontic logic

An important problem of deontic logic is that of how to properly represent conditional obligations, e.g. If you smoke (s), then you ought to use an ashtray (a). It is not clear that either of the following representations is adequate:

- $O(s \rightarrow a)$

- $s \rightarrow O(a)$

Under the first representation it is vacuously true that if you commit a forbidden act, then you ought to commit any other act, regardless of whether that second act was obligatory, permitted or forbidden (Von Wright 1956, cited in Aqvist 1994). Under the second representation, we are vulnerable to the gentle murder paradox, where the plausible statements (1) if you murder, you ought to murder gently, (2) you do commit murder, and (3) to murder gently you must murder imply the less plausible statement: you ought to murder. Others argue that must in the phrase to murder gently you must murder is a mistranslation from the ambiguous English word (meaning either implies or ought). Interpreting must as implies does not allow one to conclude you ought to murder but only a repetition of the given you murder. Misinterpreting must as ought results in a perverse axiom, not a perverse logic. With use of negations one can easily check if the ambiguous word was mistranslated by considering which of the following two English statements is equivalent with the statement to murder gently you must murder: is it equivalent to if you murder gently it is forbidden not to murder or if you murder gently it is impossible not to murder ?
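The vacuity objection to the first representation can be illustrated with a toy possible-worlds model. The sketch below assumes the first representation is read as O(s → a), and it assumes the usual semantics in which "it is obligatory that A" holds when A is true in every deontically ideal world. The world list and the helper names (`ideal_worlds`, `obligatory`, `implies`) are hypothetical and chosen only for this illustration.

```python
# Each world assigns truth values to s ("you smoke") and a ("you use an ashtray").
# Assumption: smoking is forbidden, so s is false in every ideal world.
ideal_worlds = [
    {"s": False, "a": False},
    {"s": False, "a": True},
]


def implies(antecedent: bool, consequent: bool) -> bool:
    """Material conditional: false only when the antecedent is true and the consequent false."""
    return (not antecedent) or consequent


def obligatory(prop, worlds) -> bool:
    """O(prop): prop holds in every ideal world."""
    return all(prop(w) for w in worlds)


# O(not s): smoking is forbidden.
forbidden_s = obligatory(lambda w: not w["s"], ideal_worlds)

# O(s -> a): "you ought to (use an ashtray if you smoke)".
ought_ashtray = obligatory(lambda w: implies(w["s"], w["a"]), ideal_worlds)

# O(s -> not a): the opposite conditional obligation holds just as well.
ought_no_ashtray = obligatory(lambda w: implies(w["s"], not w["a"]), ideal_worlds)

print(forbidden_s, ought_ashtray, ought_no_ashtray)
```

In this model both conditional obligations come out true, because the antecedent s never holds in an ideal world. That is the sense in which a forbidden act vacuously "obliges" any other act under this representation.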

Some deontic logicians have responded to this problem by developing dyadic deontic logics, which contain binary deontic operators:

- O(A | B) means it is obligatory that A, given B

- P(A | B) means it is permissible that A, given B.

(The notation is modeled on that used to represent conditional probability.) Dyadic deontic logic escapes some of the problems of standard (unary) deontic logic, but it is subject to some problems of its own.

https://en.wikipedia.org/wiki/Deontic_logic

In mathematics and logic, a vacuous truth is a conditional or universal statement (a universal statement that can be converted to a conditional statement) that is true because the antecedent cannot be satisfied.[1][2] For example, the statement "all cell phones in the room are turned off" will be true when there are no cell phones in the room. In this case, the statement "all cell phones in the room are turned on" would also be vacuously true, as would the conjunction of the two: "all cell phones in the room are turned on and turned off". For that reason, it is sometimes said that a statement is vacuously true because it does not really say anything.[3]
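The cell phone example can be reproduced with a universal check over an empty collection. The following is a minimal sketch, assuming the room is modeled as a Python list of booleans (the name `phones_in_room` and this encoding are illustrative assumptions, not part of the definition above):

```python
# Hypothetical model of the room: one boolean per phone, True meaning "turned on".
# An empty list means there are no cell phones in the room.
phones_in_room = []

# "All cell phones in the room are turned on."
all_on = all(is_on for is_on in phones_in_room)

# "All cell phones in the room are turned off."
all_off = all(not is_on for is_on in phones_in_room)

# "All cell phones in the room are turned on and turned off."
all_on_and_off = all(is_on and not is_on for is_on in phones_in_room)

# By contrast, the existential claim "some cell phone is turned on" fails.
some_on = any(is_on for is_on in phones_in_room)

print(all_on, all_off, all_on_and_off, some_on)
```

Because there is nothing to check, each universal claim holds, including the self-contradictory one, while the existential claim does not.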

More formally, a relatively well-defined usage refers to a conditional statement (or a universal conditional statement) with a false antecedent.[1][2][4][3][5] One example of such a statement is "if London is in France, then the Eiffel Tower is in Bolivia".

Such statements are considered vacuous truths because the falsity of the antecedent prevents using the statement to infer anything about the truth value of the consequent. In essence, a conditional statement based on the material conditional is true whenever the antecedent ("London is in France" in the example) is false, regardless of whether the consequent ("the Eiffel Tower is in Bolivia" in the example) is true or false, because the material conditional is defined that way.
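The behaviour of the material conditional can be tabulated directly. This is a small sketch, assuming the usual classical definition of "p implies q" as "not p, or q"; the function name `implies` and the two variables for the London example are hypothetical names chosen for illustration:

```python
from itertools import product


def implies(p: bool, q: bool) -> bool:
    """Material conditional: false only when p is true and q is false."""
    return (not p) or q


# Build the full truth table for the four combinations of p and q.
table = {(p, q): implies(p, q) for p, q in product([True, False], repeat=2)}
for (p, q), value in table.items():
    print(f"p={p!s:5} q={q!s:5} p->q={value}")

# The example from the text: both antecedent and consequent are false.
london_in_france = False
eiffel_tower_in_bolivia = False
vacuous = implies(london_in_france, eiffel_tower_in_bolivia)
```

Both rows with a false antecedent evaluate to true, so knowing that the conditional holds says nothing about the consequent in those cases.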

Examples common to everyday speech include conditional phrases like "when hell freezes over..." and "when pigs can fly...", indicating that not before the given (impossible) condition is met will the speaker accept some respective (typically false or absurd) proposition.

In pure mathematics, vacuously true statements are not generally of interest by themselves, but they frequently arise as the base case of proofs by mathematical induction.[6][1] This notion has relevance in pure mathematics, as well as in any other field that uses classical logic.

Outside of mathematics, statements that can be characterized informally as vacuously true can be misleading. Such statements make reasonable assertions about qualified objects that do not actually exist. For example, a child might tell their parent "I ate every vegetable on my plate" when there were no vegetables on the child's plate to begin with. The parent may then believe that the child actually ate some vegetables, which is misleading because it is not the case. In addition, a vacuous truth is often used colloquially with absurd statements, either to confidently assert something (e.g. "the dog was red, or I'm a monkey's uncle" to strongly claim that the dog was red), or to express doubt, sarcasm, disbelief, incredulity or indignation (e.g. "yes, and I'm the Queen of England" to disagree with a previously made statement).

https://en.wikipedia.org/wiki/Vacuous_truth

Indefinite article

An indefinite article is an article that marks an indefinite noun phrase. Indefinite articles are those such as English "some" or "a", which do not refer to a specific identifiable entity. Indefinites are commonly used to introduce a new discourse referent which can be referred back to in subsequent discussion:

- A monster ate a cookie. His name is Cookie Monster.

Indefinites can also be used to generalize over entities who have some property in common:

- A cookie is a wonderful thing to eat.

Indefinites can also be used to refer to specific entities whose precise identity is unknown or unimportant:

- A monster must have broken into my house last night and eaten all my cookies.

- A friend of mine told me that happens frequently to people who live on Sesame Street.

Indefinites also have predicative uses:

- Leaving my door unlocked was a bad decision.

Indefinite noun phrases are widely studied within linguistics, in particular because of their ability to take exceptional scope.

Proper article

A proper article indicates that its noun is proper and refers to a unique entity. It may be the name of a person, the name of a place, the name of a planet, etc. The Māori language has the proper article a, which is used for personal nouns; so, "a Pita" means "Peter". In Māori, when a personal noun has the definite or indefinite article as an integral part of it, both articles are present; for example, the phrase "a Te Rauparaha", which contains both the proper article a and the definite article Te, refers to the personal name Te Rauparaha.

The definite article is sometimes also used with proper names, which are already specified by definition (there is just one of them). For example: the Amazon, the Hebrides. In these cases, the definite article may be considered superfluous. Its presence can be accounted for by the assumption that they are shorthand for a longer phrase in which the name is a specifier, i.e. the Amazon River, the Hebridean Islands. Where the nouns in such longer phrases cannot be omitted, the definite article is universally kept: the United States, the People's Republic of China. This distinction can sometimes become a political matter: the former usage the Ukraine stressed the word's Russian meaning of "borderlands"; as Ukraine became a fully independent state following the collapse of the Soviet Union, it requested that formal mentions of its name omit the article. Similar shifts in usage have occurred in the names of Sudan and both Congo (Brazzaville) and Congo (Kinshasa); a move in the other direction occurred with The Gambia. In certain languages, such as French and Italian, definite articles are used with all or most names of countries: la France/le Canada/l'Allemagne, l'Italia/la Spagna/il Brasile.

Some languages also use definite articles with personal names. For example, such use is standard in Portuguese (a Maria, literally: "the Maria"), in Greek (η Μαρία, ο Γιώργος, ο Δούναβης, η Παρασκευή) and in Catalan (la Núria, el/en Oriol). It can also occur colloquially or dialectally in Spanish, German, French, Italian and other languages. In Hungarian, the use of definite articles with personal names is quite widespread, mainly colloquially, although it is considered to be a Germanism.

This usage can appear in American English for particular nicknames. One prominent example occurs in the case of former United States President Donald Trump, who is also sometimes informally called "The Donald" in speech and in print media.[4] Another is President Ronald Reagan's most common nickname, "The Gipper", which is still used today in reference to him.[4]

Partitive article

A partitive article is a type of article, sometimes viewed as a type of indefinite article, used with a mass noun such as water, to indicate a non-specific quantity of it. Partitive articles are a class of determiner; they are used in French and Italian in addition to definite and indefinite articles. (In Finnish and Estonian, the partitive is indicated by inflection.) The nearest equivalent in English is some, although it is classified as a determiner, and English uses it less than French uses de.

- French: Veux-tu du café ?

- Do you want (some) coffee?

- For more information, see the article on the French partitive article.

Haida has a partitive article (suffixed -gyaa) referring to "part of something or... to one or more objects of a given group or category," e.g., tluugyaa uu hal tlaahlaang "he is making a boat (a member of the category of boats)."[5]

Negative article

A negative article specifies none of its noun, and can thus be regarded as neither definite nor indefinite. On the other hand, some consider such a word to be a simple determiner rather than an article. In English, this function is fulfilled by no, which can appear before a singular or plural noun:

- No man has been on this island.

- No dogs are allowed here.

- No one is in the room.

In German, the negative article is, among other variations, kein, in opposition to the indefinite article ein.

- Ein Hund – a dog

- Kein Hund – no dog

The equivalent in Dutch is geen:

- een hond – a dog

- geen hond – no dog

Zero article

The zero article is the absence of an article. In languages having a definite article, the lack of an article specifically indicates that the noun is indefinite. Linguists interested in X-bar theory causally link zero articles to nouns lacking a determiner.[6] In English, the zero article rather than the indefinite is used with plurals and mass nouns, although the word "some" can be used as an indefinite plural article.

- Visitors end up walking in mud.

Articles often develop by specialization of adjectives or determiners. Their development is often a sign of languages becoming more analytic instead of synthetic, perhaps combined with the loss of inflection as in English, Romance languages, Bulgarian, Macedonian and Torlakian.

Joseph Greenberg in Universals of Human Language[9] describes "the cycle of the definite article": Definite articles (Stage I) evolve from demonstratives, and in turn can become generic articles (Stage II) that may be used in both definite and indefinite contexts, and later merely noun markers (Stage III) that are part of nouns other than proper names and more recent borrowings. Eventually articles may evolve anew from demonstratives.

Definite articles

Definite articles typically arise from demonstratives meaning "that". For example, the definite articles in most Romance languages (e.g., el, il, le, la, lo) derive from the Latin demonstratives ille (masculine), illa (feminine) and illud (neuter).

The English definite article the, written þe in Middle English, derives from an Old English demonstrative, which, according to gender, was written se (masculine), seo (feminine) (þe and þeo in the Northumbrian dialect), or þæt (neuter). The neuter form þæt also gave rise to the modern demonstrative that. The ye occasionally seen in pseudo-archaic usage such as "Ye Olde Englishe Tea Shoppe" is actually a form of þe, where the letter thorn (þ) came to be written as a y.

Multiple demonstratives can give rise to multiple definite articles. Macedonian, for example, in which the articles are suffixed, has столот (stolot), "the chair"; столов (stolov), "this chair"; and столон (stolon), "that chair". These derive from the Proto-Slavic demonstratives *tъ "this, that", *ovъ "this here" and *onъ "that over there, yonder" respectively. Colognian preposes its articles, as in dat Auto or et Auto ("the car"); the first marks a referent that is specifically selected, focused, or newly introduced, while the second marks one that is not selected, unfocused, already known, general, or generic.

Standard Basque distinguishes between proximal and distal definite articles in the plural (dialectally, a proximal singular and an additional medial grade may also be present). The Basque distal form (with infix -a-, etymologically a suffixed and phonetically reduced form of the distal demonstrative har-/hai-) functions as the default definite article, whereas the proximal form (with infix -o-, derived from the proximal demonstrative hau-/hon-) is marked and indicates some kind of (spatial or otherwise) close relationship between the speaker and the referent (e.g., it may imply that the speaker is included in the referent): etxeak ("the houses") vs. etxeok ("these houses [of ours]"), euskaldunak ("the Basque speakers") vs. euskaldunok ("we, the Basque speakers").

Speakers of Assyrian Neo-Aramaic, a modern Aramaic language that lacks a definite article, may at times use demonstratives aha and aya (feminine) or awa (masculine) – which translate to "this" and "that", respectively – to give the sense of "the".[10]

Indefinite articles

Indefinite articles typically arise from adjectives meaning "one". For example, the indefinite articles in the Romance languages (e.g., un, una, une) derive from the Latin adjective unus. Partitive articles, however, derive from Vulgar Latin de illo, meaning "(some) of the".

The English indefinite article an is derived from the same root as one. The -n came to be dropped before consonants, giving rise to the shortened form a. The existence of both forms has led to many cases of juncture loss, for example transforming the original a napron into the modern an apron.

The Persian indefinite article is yek, meaning one.

See also

- English articles

- Al- (definite article in Arabic)

- Definiteness

- Definite description

- False title

https://en.wikipedia.org/wiki/Article_(grammar)#Indefinite_article

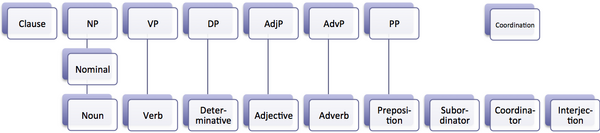

In traditional grammar, a part of speech or part-of-speech (abbreviated as POS or PoS) is a category of words (or, more generally, of lexical items) that have similar grammatical properties. Words that are assigned to the same part of speech generally display similar syntactic behavior—they play similar roles within the grammatical structure of sentences—and sometimes similar morphology in that they undergo inflection for similar properties.