The Einstein–Podolsky–Rosen paradox (EPR paradox) is a thought experiment proposed by physicists Albert Einstein, Boris Podolsky and Nathan Rosen (EPR), with which they argued that the description of physical reality provided by quantum mechanics was incomplete.[1] In a 1935 paper titled "Can Quantum-Mechanical Description of Physical Reality be Considered Complete?", they argued for the existence of "elements of reality" that were not part of quantum theory, and speculated that it should be possible to construct a theory containing them. Resolutions of the paradox have important implications for the interpretation of quantum mechanics.

The thought experiment involves a pair of particles prepared in an entangled state (note that this terminology was invented only later). Einstein, Podolsky, and Rosen pointed out that, in this state, if the position of the first particle were measured, the result of measuring the position of the second particle could be predicted. If instead the momentum of the first particle were measured, then the result of measuring the momentum of the second particle could be predicted. They argued that no action taken on the first particle could instantaneously affect the other, since this would involve information being transmitted faster than light, which is forbidden by the theory of relativity. They invoked a principle, later known as the "EPR criterion of reality", positing that, "If, without in any way disturbing a system, we can predict with certainty (i.e., with probability equal to unity) the value of a physical quantity, then there exists an element of reality corresponding to that quantity". From this, they inferred that the second particle must have a definite value of position and of momentum prior to either being measured. This contradicted the view associated with Niels Bohr and Werner Heisenberg, according to which a quantum particle does not have a definite value of a property like momentum until the measurement takes place.

Locality has several different meanings in physics. EPR describe the principle of locality as asserting that physical processes occurring at one place should have no immediate effect on the elements of reality at another location. At first sight, this appears to be a reasonable assumption to make, as it seems to be a consequence of special relativity, which states that energy can never be transmitted faster than the speed of light without violating causality;[20]: 427–428 [30] however, it turns out that the usual rules for combining quantum mechanical and classical descriptions violate EPR's principle of locality without violating special relativity or causality.[20]: 427–428 [30] Causality is preserved because there is no way for Alice to transmit messages (i.e., information) to Bob by manipulating her measurement axis. Whichever axis she uses, she has a 50% probability of obtaining "+" and 50% probability of obtaining "−", completely at random; according to quantum mechanics, it is fundamentally impossible for her to influence what result she gets. Furthermore, Bob is only able to perform his measurement once: there is a fundamental property of quantum mechanics, the no-cloning theorem, which makes it impossible for him to make an arbitrary number of copies of the electron he receives, perform a spin measurement on each, and look at the statistical distribution of the results. Therefore, in the one measurement he is allowed to make, there is a 50% probability of getting "+" and 50% of getting "−", regardless of whether or not his axis is aligned with Alice's.
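The no-signaling argument above can be checked with a few lines of arithmetic. The sketch below assumes the standard spin-singlet state and measurement axes lying in one plane, described by hypothetical angle parameters `theta_a` and `theta_b`. For that state, the joint probability of outcomes a, b ∈ {+1, −1} is (1 − ab·cos(θa − θb))/4. Summing over Alice's outcome gives Bob's marginal, which stays at 1/2 whichever axis Alice chooses.

```python
import math

def joint_probability(a: int, b: int, theta_a: float, theta_b: float) -> float:
    """P(Alice gets a, Bob gets b) for a spin singlet, outcomes a, b in {+1, -1}.

    Assumes both measurement axes lie in one plane at angles theta_a, theta_b.
    """
    return (1 - a * b * math.cos(theta_a - theta_b)) / 4

def bob_marginal(b: int, theta_a: float, theta_b: float) -> float:
    """Bob's probability of outcome b, summed over Alice's unseen result."""
    return sum(joint_probability(a, b, theta_a, theta_b) for a in (+1, -1))

if __name__ == "__main__":
    theta_b = 0.0
    for theta_a in (0.0, math.pi / 3, math.pi / 2, math.pi):
        # Alice's choice of axis changes the correlations...
        p_same = (joint_probability(+1, +1, theta_a, theta_b)
                  + joint_probability(-1, -1, theta_a, theta_b))
        # ...but not the statistics Bob sees on his own.
        print(f"theta_a={theta_a:.2f}  P(same)={p_same:.3f}  "
              f"P_Bob(+)={bob_marginal(+1, theta_a, theta_b):.3f}")
```

Only after Alice and Bob compare their records through an ordinary channel does the dependence on the relative angle become visible.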

As a summary, the results of the EPR thought experiment do not contradict the predictions of special relativity. Neither the EPR paradox nor any quantum experiment demonstrates that superluminal signaling is possible; however, the principle of locality appeals powerfully to physical intuition, and Einstein, Podolsky and Rosen were unwilling to abandon it. Einstein derided the quantum mechanical predictions as "spooky action at a distance".[b] The conclusion they drew was that quantum mechanics is not a complete theory.[32]

See also

Bohr-Einstein debates: The argument of EPR

CHSH Bell test

Coherence

Correlation does not imply causation

ER=EPR

GHZ experiment

Measurement problem

Philosophy of information

Philosophy of physics

Popper's experiment

Superdeterminism

Quantum entanglement

Quantum information

Quantum pseudo-telepathy

Quantum teleportation

Quantum Zeno effect

Synchronicity

Ward's probability amplitude

https://en.wikipedia.org/wiki/EPR_paradox#Paradox

A paradox is a logically self-contradictory statement or a statement that runs contrary to one's expectation.[1][2][3] It is a statement that, despite apparently valid reasoning from true premises, leads to a seemingly self-contradictory or a logically unacceptable conclusion.[4][5] A paradox usually involves contradictory-yet-interrelated elements that exist simultaneously and persist over time.[6][7][8]

In logic, many paradoxes exist that are known to be invalid arguments, yet are nevertheless valuable in promoting critical thinking,[9] while other paradoxes have revealed errors in definitions that were assumed to be rigorous, and have caused axioms of mathematics and logic to be re-examined.[1] One example is Russell's paradox, which questions whether a "list of all lists that do not contain themselves" would include itself, and showed that attempts to found set theory on the identification of sets with properties or predicates were flawed.[10][11] Others, such as Curry's paradox, cannot be easily resolved by making foundational changes in a logical system.[12]

Examples outside logic include the ship of Theseus from philosophy, a paradox that questions whether a ship repaired over time by replacing each and all of its wooden parts, one at a time, would remain the same ship.[13] Paradoxes can also take the form of images or other media. For example, M.C. Escher featured perspective-based paradoxes in many of his drawings, with walls that are regarded as floors from other points of view, and staircases that appear to climb endlessly.[14]

In common usage, the word "paradox" often refers to statements that are ironic or unexpected, such as "the paradox that standing is more tiring than walking".[15]

Common themes in paradoxes include self-reference, infinite regress, circular definitions, and confusion or equivocation between different levels of abstraction.

Patrick Hughes outlines three laws of the paradox:[16]

- Self-reference

  - An example is the statement "This statement is false", a form of the liar paradox. The statement refers to itself. Another example of self-reference is the question of whether the barber shaves himself in the barber paradox. Yet another example is the question "Is the answer to this question 'No'?"

- Contradiction

  - "This statement is false"; the statement cannot be false and true at the same time. Another example of contradiction is a man who asks a genie to grant the wish that wishes cannot come true. If the genie grants the wish, then he has not granted it; if he refuses, then he has indeed granted it. It is therefore impossible either to grant or to refuse the wish without a contradiction.

- Vicious circularity, or infinite regress

  - "This statement is false"; if the statement is true, then the statement is false, thereby making the statement true. Another example of vicious circularity is the following pair of statements:

    - "The following sentence is true."

    - "The previous sentence is false."
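The liar sentence and the two-sentence loop above can be treated as constraints on truth values: each sentence must be true exactly when what it asserts holds. A brute-force search over every assignment, sketched below, finds none for either case. The helper name `consistent_assignments` is hypothetical, and the self-agreeing "This statement is true" case is added only as a contrast; it is not taken from the text.

```python
from itertools import product
from typing import Callable, Dict, List

Assignment = Dict[str, bool]

def consistent_assignments(
    definitions: Dict[str, Callable[[Assignment], bool]]
) -> List[Assignment]:
    """Return every truth assignment in which each sentence's value equals what it asserts."""
    names = list(definitions)
    solutions = []
    for values in product((True, False), repeat=len(names)):
        env = dict(zip(names, values))
        if all(env[name] == definitions[name](env) for name in names):
            solutions.append(env)
    return solutions

# "This statement is false."
liar = {"L": lambda env: not env["L"]}

# "The following sentence is true." / "The previous sentence is false."
loop = {
    "A": lambda env: env["B"],
    "B": lambda env: not env["A"],
}

# Contrast case (not from the text): "This statement is true."
truth_teller = {"T": lambda env: env["T"]}

print(consistent_assignments(liar))          # []
print(consistent_assignments(loop))          # []
print(consistent_assignments(truth_teller))  # [{'T': True}, {'T': False}]
```

The empty results are the formal counterpart of "cannot be consistently interpreted as either true or false".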

Other paradoxes involve false statements and half-truths ("impossible is not in my vocabulary") or rely on a hasty assumption. (A father and his son are in a car crash; the father is killed and the boy is rushed to the hospital. The doctor says, "I can't operate on this boy. He's my son." There is no paradox if the boy's mother is a surgeon.)

Paradoxes that are not based on a hidden error generally occur at the fringes of context or language, and require extending the context or language in order to lose their paradoxical quality. Paradoxes that arise from apparently intelligible uses of language are often of interest to logicians and philosophers. "This sentence is false" is an example of the well-known liar paradox: it is a sentence that cannot be consistently interpreted as either true or false, because if it is known to be false, then it can be inferred that it must be true, and if it is known to be true, then it can be inferred that it must be false. Russell's paradox, which shows that the notion of the set of all those sets that do not contain themselves leads to a contradiction, was instrumental in the development of modern logic and set theory.[10]

Thought-experiments can also yield interesting paradoxes. The grandfather paradox, for example, would arise if a time-traveler were to kill his own grandfather before his mother or father had been conceived, thereby preventing his own birth.[17] This is a specific example of the more general observation of the butterfly effect, or that a time-traveller's interaction with the past—however slight—would entail making changes that would, in turn, change the future in which the time-travel was yet to occur, and would thus change the circumstances of the time-travel itself.

Often a seemingly paradoxical conclusion arises from an inconsistent or inherently contradictory definition of the initial premise. In the case of that apparent paradox of a time-traveler killing his own grandfather, it is the inconsistency of defining the past to which he returns as being somehow different from the one that leads up to the future from which he begins his trip, but also insisting that he must have come to that past from the same future as the one that it leads up to.

Quine's classification

W. V. O. Quine (1962) distinguished between three classes of paradoxes:[18][19]

- A veridical paradox produces a result that appears absurd but is nonetheless demonstrated to be true. The paradox of Frederic's birthday in The Pirates of Penzance establishes the surprising fact that a twenty-one-year-old would have had only five birthdays had he been born on a leap day. Likewise, Arrow's impossibility theorem demonstrates the difficulty of mapping voting results to the will of the people. The Monty Hall paradox (or the equivalent Three Prisoners problem) shows that a choice which intuitively looks like a fifty–fifty chance is in fact heavily biased: switching doors wins two times out of three, a decision that a player trusting the intuitive conclusion would be unlikely to make. In 20th-century science, Hilbert's paradox of the Grand Hotel, Schrödinger's cat, Wigner's friend, and the ugly duckling theorem are famously vivid examples of a theory being taken to a logical but paradoxical end.
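Two of the veridical paradoxes above reduce to short exact calculations. The sketch below uses `fractions.Fraction` for exact probabilities and enumerates the standard Monty Hall setup, assuming the car is placed uniformly at random and the host always opens an unchosen goat door, choosing uniformly when he has a choice. It also counts leap-day birthdays with `calendar.isleap`. The birth year 1996 is a hypothetical value chosen for illustration.

```python
import calendar
from fractions import Fraction

def monty_hall_win_probability(switch: bool) -> Fraction:
    """Exact win probability with three doors, player's first pick fixed at door 0."""
    doors = range(3)
    pick = 0  # by symmetry, the initial choice does not matter
    total = Fraction(0)
    for car in doors:
        p_car = Fraction(1, 3)  # car placed uniformly at random
        # the host opens a door that is neither the player's pick nor the car
        openable = [d for d in doors if d not in (pick, car)]
        for opened in openable:
            p_open = Fraction(1, len(openable))
            if switch:
                final = next(d for d in doors if d not in (pick, opened))
            else:
                final = pick
            if final == car:
                total += p_car * p_open
    return total

def leap_day_birthdays(birth_year: int, age: int) -> int:
    """Count 29 February birthdays in the first `age` years after a leap-day birth."""
    return sum(
        1 for year in range(birth_year + 1, birth_year + age + 1) if calendar.isleap(year)
    )

print(monty_hall_win_probability(switch=False))  # 1/3
print(monty_hall_win_probability(switch=True))   # 2/3
print(leap_day_birthdays(1996, 21))              # 5
```

Because `calendar.isleap` applies the Gregorian rule, a span that crosses a non-leap century year such as 1900 yields one birthday fewer.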

- A falsidical paradox establishes a result that not only appears false but actually is false, due to a fallacy in the demonstration. The various invalid mathematical proofs (e.g., that 1 = 2) are classic examples of this, often relying on a hidden division by zero. Another example is the inductive form of the horse paradox, which falsely generalises from true specific statements. Zeno's paradoxes are 'falsidical', concluding, for example, that a flying arrow never reaches its target or that a speedy runner cannot catch up to a tortoise with a small head-start. Therefore, falsidical paradoxes can be classified as fallacious arguments.
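The fallacy in Zeno's runner-and-tortoise argument is the assumption that infinitely many stages must take infinitely long. The sketch below uses hypothetical numbers (a 100 m head start, a runner at 10 m/s, a tortoise at 1 m/s) and exact `Fraction` arithmetic. It sums the durations of Zeno's stages and compares them with the closed-form catch-up time d/(v_runner − v_tortoise): the stage times form a geometric series that converges to that finite value.

```python
from fractions import Fraction

def catch_up_time(head_start: Fraction, v_runner: Fraction, v_tortoise: Fraction) -> Fraction:
    """Time for the runner to draw level, from the relative speed."""
    return head_start / (v_runner - v_tortoise)

def zeno_stage_time(head_start: Fraction, v_runner: Fraction,
                    v_tortoise: Fraction, stages: int) -> Fraction:
    """Total time taken by the first `stages` of Zeno's stages.

    In each stage the runner reaches the tortoise's last position, during
    which the tortoise moves ahead again by a smaller amount.
    """
    total = Fraction(0)
    lead = head_start
    for _ in range(stages):
        dt = lead / v_runner    # time to cover the current lead
        total += dt
        lead = v_tortoise * dt  # the tortoise's new, smaller lead
    return total

d, v_r, v_t = Fraction(100), Fraction(10), Fraction(1)
T = catch_up_time(d, v_r, v_t)
print(T)  # 100/9 (seconds)
for n in (1, 2, 5, 10):
    partial = zeno_stage_time(d, v_r, v_t, n)
    # the remaining time shrinks by a factor v_t / v_r with every stage
    print(n, float(partial), float(T - partial))
```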

- A paradox that is in neither class may be an antinomy, which reaches a self-contradictory result by properly applying accepted ways of reasoning. For example, the Grelling–Nelson paradox points out genuine problems in our understanding of the ideas of truth and description.

A fourth kind, which may be alternatively interpreted as a special case of the third kind, has sometimes been described since Quine's work:

- A paradox that is both true and false at the same time and in the same sense is called a dialetheia. In Western logics, it is often assumed, following Aristotle, that no dialetheia exist, but they are sometimes accepted in Eastern traditions (e.g. in the Mohists,[20] the Gongsun Longzi,[21] and in Zen[22]) and in paraconsistent logics. It would be mere equivocation or a matter of degree, for example, to both affirm and deny that "John is here" when John is halfway through the door, but it is self-contradictory simultaneously to affirm and deny the event.

Ramsey's classification

Frank Ramsey drew a distinction between logical paradoxes and semantical paradoxes, with Russell’s paradox belonging to the former category, and the liar paradox and Grelling’s paradox to the latter.[23] This distinction between logical and semantical contradictions is now standard. Logical contradictions involve mathematical or logical terms, such as class and number, and hence show that our logic or mathematics is problematic. Semantical contradictions involve, besides purely logical terms, notions such as “thought”, “language”, and “symbolism”, which, according to Ramsey, are empirical (not formal) terms. Hence these contradictions are due to faulty ideas about thought or language, and they properly belong to “epistemology” (semantics).[24]

In philosophy

A taste for paradox is central to the philosophies of Laozi, Zeno of Elea, Zhuangzi, Heraclitus, Bhartrhari, Meister Eckhart, Hegel, Kierkegaard, Nietzsche, and G.K. Chesterton, among many others. Søren Kierkegaard, for example, writes in the Philosophical Fragments that:

In medicine

A paradoxical reaction to a drug is the opposite of what one would expect, such as becoming agitated by a sedative or sedated by a stimulant. Some are common and are used regularly in medicine, such as the use of stimulants like Adderall and Ritalin to treat attention deficit hyperactivity disorder (ADHD), while others are rare and can be dangerous because they are unexpected, such as severe agitation from a benzodiazepine.[26]

In the smoker's paradox, cigarette smoking, despite its proven harms, has a surprising inverse correlation with the epidemiological incidence of certain diseases.

See also

- Animalia Paradoxa – Mythical, magical or otherwise suspect animals mentioned in Systema Naturae

- Antinomy – Real or apparent mutual incompatibility of two laws

- Aporia – State of puzzlement or expression of doubt, in philosophy and rhetoric

- Contradiction – Logical incompatibility between two or more propositions

- Dilemma – Problem requiring a choice between equally undesirable alternatives

- Ethical dilemma

- Eubulides – Ancient Greek philosopher known for paradoxes

- Fallacy – Argument that uses faulty reasoning

- Formal fallacy – Faulty deductive reasoning due to a logical flaw

- Four-valued logic – Any logic with four truth values

- Impossible object – Type of optical illusion

- Category:Mathematical paradoxes

- List of paradoxes – Wikipedia list article

- Mu (negative) – Buddhist term, "not" or "without" or "un-" (negative prefix)

- Oxymoron – figure of speech that entails an ostensible self-contradiction to illustrate a rhetorical point or to reveal a paradox

- Paradox of tolerance – Logical paradox in decision-making theory

- Paradox of value

- Paradoxes of material implication

- Plato's beard – Example of a paradoxical argument

- Revision theory

- Self-refuting ideas

- Syntactic ambiguity – Sentences with structures permitting multiple possible interpretations

- Temporal paradox – Theoretical paradox resulting from time travel

- Twin paradox – Thought experiment in special relativity

- Zeno's paradoxes – Set of philosophical problems

Paradoxes

Philosophical

Analysis Buridan's bridge Dream argument Epicurean Fiction Fitch's knowability Free will Goodman's Hedonism Liberal Meno's Mere addition Moore's Newcomb's Nihilism Omnipotence Preface Rule-following White horse Zeno's

Logical

Self-reference

Barber Berry Bhartrhari's Burali-Forti Court Crocodile Curry's Epimenides Free choice paradox Grelling–Nelson Kleene–Rosser Liar Card No-no Pinocchio Quine's Yablo's Opposite Day Richard's Russell's Socratic Hilbert's Hotel

Vagueness

Theseus' ship List of examples Sorites

Others

Temperature paradox Barbershop Catch-22 Drinker Entailment Lottery Plato's beard Raven Ross's Unexpected hanging "What the Tortoise Said to Achilles" Heat death paradox Olbers' paradox

Economic

Allais Antitrust Arrow information Bertrand Braess's Competition Income and fertility Downs–Thomson Easterlin Edgeworth Ellsberg European Gibson's Giffen good Icarus Jevons Leontief Lerner Lucas Mandeville's Mayfield's Metzler Plenty Productivity Prosperity Scitovsky Service recovery St. Petersburg Thrift Toil Tullock Value

Decision theory

Abilene Apportionment Alabama New states Population Arrow's Buridan's ass Chainstore Condorcet's Decision-making Downs Ellsberg Fenno's Fredkin's Green Hedgehog's Inventor's Kavka's toxin puzzle Morton's fork Navigation Newcomb's Parrondo's Prevention Prisoner's dilemma Tolerance Willpower

Logic

Outline History

Fields

Computer science Formal semantics (natural language) Inference Philosophy of logic Proof Semantics of logic Syntax

Logics

Classical Informal Critical thinking Reason Mathematical Non-classical Philosophical

Theories

Argumentation Metalogic Metamathematics Set

Foundations

Abduction Analytic and synthetic propositions Contradiction Paradox Antinomy Deduction Deductive closure Definition Description Entailment Linguistic Form Induction Logical truth Name Necessity and sufficiency Premise Probability Reference Statement Substitution Truth Validity

Lists

topics

Mathematical logic Boolean algebra Set theory

other

Logicians Rules of inference Paradoxes Fallacies Logic symbols

https://en.wikipedia.org/wiki/Paradox

A temporal paradox, time paradox, or time travel paradox is a paradox, an apparent contradiction, or logical contradiction associated with the idea of time and time travel. In physics, temporal paradoxes fall into two broad groups: consistency paradoxes exemplified by the grandfather paradox; and causal loops.[1] Other paradoxes associated with time travel are a variation of the Fermi paradox and paradoxes of free will that stem from causal loops such as Newcomb's paradox.[2]

https://en.wikipedia.org/wiki/Temporal_paradox

The grandfather paradox is a paradox of time travel in which inconsistencies emerge through changing the past.[1] The name comes from the paradox's description: a person travels to the past and kills their own grandfather before the conception of their father or mother, which prevents the time traveller's existence.[2] Despite its title, the grandfather paradox does not exclusively regard the contradiction of killing one's own grandfather to prevent one's birth. Rather, the paradox regards any action that alters the past,[3] since there is a contradiction whenever the past becomes different from the way it was.[4]

https://en.wikipedia.org/wiki/Grandfather_paradox

A causal loop is a theoretical proposition in which, by means of either retrocausality or time travel, a sequence of events (actions, information, objects, people)[1][2] is among the causes of another event, which is in turn among the causes of the first-mentioned event.[3][4] Such causally looped events then exist in spacetime, but their origin cannot be determined.[1][2] A hypothetical example of a causal loop is a billiard ball striking its past self: the billiard ball moves along a path toward a time machine, and its future self emerges from the time machine before the past self enters it, giving the past self a glancing blow. That blow alters the past ball's path and causes it to enter the time machine at exactly the angle that makes its future self deliver the very glancing blow that altered its path. In this sequence of events, the change in the ball's path is its own cause, which might appear paradoxical.[5]
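One way to make the difference between consistency paradoxes and causal loops precise is to ask which histories are self-consistent, that is, which histories the time-travel scenario maps back onto themselves. The toy models below are assumptions for illustration only. The grandfather scenario is reduced to a single boolean (does the grandfather survive?), the billiard-ball loop to another (is the past ball deflected?), and the function names are hypothetical.

```python
from typing import Callable, Iterable, List, TypeVar

State = TypeVar("State")

def self_consistent_histories(states: Iterable[State],
                              evolve: Callable[[State], State]) -> List[State]:
    """Return the states that the time-travel map sends back onto themselves (fixed points)."""
    return [s for s in states if evolve(s) == s]

def grandfather_history(grandfather_survives: bool) -> bool:
    # Toy assumption: the traveler is born exactly when the grandfather survives,
    # and a traveler who exists goes back and kills him.
    traveler_born = grandfather_survives
    return not traveler_born

def billiard_history(past_ball_deflected: bool) -> bool:
    # Toy assumption: a deflected ball enters the time machine at the angle that
    # makes its future self deliver that same deflection, so the state sustains itself.
    return past_ball_deflected

print(self_consistent_histories([True, False], grandfather_history))  # []
print(self_consistent_histories([True, False], billiard_history))     # [True, False]
```

The empty list for the grandfather model is the consistency paradox: no history survives the round trip. The loop model does admit a consistent history, but nothing inside the model singles out how it began.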

https://en.wikipedia.org/wiki/Causal_loop#Terminology_in_physics,_philosophy,_and_fiction

A thought experiment is a hypothetical situation in which a hypothesis, theory,[1] or principle is laid out for the purpose of thinking through its consequences.

https://en.wikipedia.org/wiki/Thought_experiment

Syntactic ambiguity, also called structural ambiguity,[1] amphiboly or amphibology, is a situation where a sentence may be interpreted in more than one way due to ambiguous sentence structure.

Syntactic ambiguity arises not from the range of meanings of single words, but from the relationship between the words and clauses of a sentence, and the sentence structure underlying the word order therein. In other words, a sentence is syntactically ambiguous when a reader or listener can reasonably interpret one sentence as having more than one possible structure.

In legal disputes, courts may be asked to interpret the meaning of syntactic ambiguities in statutes or contracts. In some instances, arguments asserting highly unlikely interpretations have been deemed frivolous.[citation needed] A set of possible parse trees for an ambiguous sentence is called a parse forest.[2][3] The process of resolving syntactic ambiguity is called syntactic disambiguation.[4]
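A parse forest can be made concrete with a small chart parser. The sketch below counts parse trees with the CKY algorithm for a toy grammar in Chomsky normal form. The grammar, the `GRAMMAR` and `LEXICON` tables, and the example sentence "I saw the man with the telescope" are illustrative assumptions, not taken from the text. The count of 2 corresponds to the two readings: the prepositional phrase attaches either to the verb phrase (the seeing was done with the telescope) or to the noun phrase (the man has the telescope).

```python
from collections import defaultdict
from typing import Dict, List, Tuple

# Toy grammar in Chomsky normal form (assumption): (parent, left, right)
GRAMMAR: List[Tuple[str, str, str]] = [
    ("S", "NP", "VP"),
    ("VP", "V", "NP"),
    ("VP", "VP", "PP"),  # PP attaches to the verb phrase
    ("NP", "Det", "N"),
    ("NP", "NP", "PP"),  # PP attaches to the noun phrase
    ("PP", "P", "NP"),
]
LEXICON: Dict[str, List[str]] = {
    "I": ["NP"], "saw": ["V"], "the": ["Det"],
    "man": ["N"], "telescope": ["N"], "with": ["P"],
}

def count_parses(words: List[str], start: str = "S") -> int:
    """Number of distinct parse trees for `words` rooted at `start` (CKY counting)."""
    n = len(words)
    # chart[i][j][X] = number of ways nonterminal X derives words[i:j]
    chart = [[defaultdict(int) for _ in range(n + 1)] for _ in range(n + 1)]
    for i, word in enumerate(words):
        for tag in LEXICON.get(word, []):
            chart[i][i + 1][tag] += 1
    for length in range(2, n + 1):
        for i in range(n - length + 1):
            j = i + length
            for k in range(i + 1, j):  # split point between the two children
                for parent, left, right in GRAMMAR:
                    ways = chart[i][k][left] * chart[k][j][right]
                    if ways:
                        chart[i][j][parent] += ways
    return chart[0][n][start]

sentence = "I saw the man with the telescope".split()
print(count_parses(sentence))  # 2
```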

Kantian

Immanuel Kant employs the term "amphiboly" in a sense of his own, as he has done in the case of other philosophical words. He denotes by it a confusion of the notions of the pure understanding with the perceptions of experience, and a consequent ascription to the latter of what belongs only to the former.[18]

Formal semantics (natural language)

Central concepts

Compositionality Denotation Entailment Extension Generalized quantifier Intension Logical form Presupposition Proposition Reference Scope Speech act Syntax–semantics interface Truth conditions

Topics

Areas

Anaphora Ambiguity Binding Conditionals Definiteness Disjunction Evidentiality Focus Indexicality Lexical semantics Modality Negation Propositional attitudes Tense–aspect–mood Quantification Vagueness

Phenomena

Antecedent-contained deletion Cataphora Coercion Conservativity Counterfactuals Cumulativity De dicto and de re De se Deontic modality Discourse relations Donkey anaphora Epistemic modality Faultless disagreement Free choice inferences Givenness Crossover effects Hurford disjunction Inalienable possession Intersective modification Logophoricity Mirativity Modal subordination Negative polarity items Opaque contexts Performatives Privative adjectives Quantificational variability effect Responsive predicate Rising declaratives Scalar implicature Sloppy identity Subsective modification Telicity Temperature paradox Veridicality

Formalism

Formal systems

Alternative semantics Categorial grammar Combinatory categorial grammar Discourse representation theory Dynamic semantics Generative grammar Glue semantics Inquisitive semantics Intensional logic Lambda calculus Mereology Montague grammar Segmented discourse representation theory Situation semantics Supervaluationism Type theory TTR

Concepts

Autonomy of syntax Context set Continuation Conversational scoreboard Existential closure Function application Meaning postulate Monads Possible world Quantifier raising Quantization Question under discussion Squiggle operator Type shifter Universal grinder

See also

Cognitive semantics Computational semantics Distributional semantics Formal grammar Inferentialism Linguistics wars Philosophy of language Pragmatics Semantics of logic

https://en.wikipedia.org/wiki/Syntactic_ambiguity

The major unsolved problems[1] in physics are either theoretical, meaning that existing analysis and theory seem incapable of explaining certain observed phenomena or experimental results, or experimental, meaning that there is a difficulty in creating an experiment to test a proposed theory or investigate a phenomenon in greater detail.

There are still some questions beyond the Standard Model of physics, such as the strong CP problem, neutrino mass, matter–antimatter asymmetry, and the nature of dark matter and dark energy.[2][3] Another problem lies within the mathematical framework of the Standard Model itself: the Standard Model is inconsistent with that of general relativity, to the point that one or both theories break down under certain conditions (for example, within known spacetime singularities like the Big Bang and the centres of black holes beyond the event horizon).

https://en.wikipedia.org/wiki/List_of_unsolved_problems_in_physics

In physics, hidden-variable theories are proposals to provide explanations of quantum mechanical phenomena through the introduction of unobservable hypothetical entities. The existence of fundamental indeterminacy for some measurements is assumed as part of the mathematical formulation of quantum mechanics; moreover, bounds for indeterminacy can be expressed in a quantitative form by the Heisenberg uncertainty principle. Most hidden-variable theories are attempts at a deterministic description of quantum mechanics, to avoid quantum indeterminacy, but at the expense of requiring the existence of nonlocal interactions.

Albert Einstein objected to the fundamentally probabilistic nature of quantum mechanics,[1] and famously declared "I am convinced God does not play dice".[2][3] Einstein, Podolsky, and Rosen argued that quantum mechanics is an incomplete description of reality.[4][5] Bell's theorem would later suggest that local hidden variables (a way of finding a complete description of reality) of certain types are impossible. A famous non-local theory is the De Broglie–Bohm theory.
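Bell's theorem is often stated in the CHSH form, which can be checked directly. The sketch below assumes the spin-singlet correlation E(a, b) = −cos(a − b) and the standard measurement angles 0 and π/2 for Alice and π/4 and 3π/4 for Bob. It compares the largest CHSH value reachable by any local deterministic assignment of ±1 outcomes with the quantum prediction. Any local hidden-variable model is a probabilistic mixture of such deterministic assignments, so their maximum is the local bound. The function names are illustrative.

```python
import math
from itertools import product

def chsh(e_ab: float, e_abp: float, e_apb: float, e_apbp: float) -> float:
    """CHSH combination S = E(a,b) - E(a,b') + E(a',b) + E(a',b')."""
    return e_ab - e_abp + e_apb + e_apbp

def local_deterministic_max() -> int:
    """Largest |S| over all predetermined outcomes A, A', B, B' in {+1, -1}."""
    best = 0
    for A, Ap, B, Bp in product((+1, -1), repeat=4):
        # with predetermined outcomes, each correlation is just a product
        s = chsh(A * B, A * Bp, Ap * B, Ap * Bp)
        best = max(best, abs(s))
    return best

def singlet_correlation(alpha: float, beta: float) -> float:
    """Quantum correlation for spin measurements on a singlet (planar axes assumed)."""
    return -math.cos(alpha - beta)

a, a_prime = 0.0, math.pi / 2
b, b_prime = math.pi / 4, 3 * math.pi / 4
s_quantum = chsh(
    singlet_correlation(a, b),
    singlet_correlation(a, b_prime),
    singlet_correlation(a_prime, b),
    singlet_correlation(a_prime, b_prime),
)
print(local_deterministic_max())  # 2
print(abs(s_quantum))             # about 2.828, i.e. 2 * sqrt(2)
```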

https://en.wikipedia.org/wiki/List_of_unsolved_problems_in_physics

An unobservable (also called impalpable) is an entity whose existence, nature, properties, qualities or relations are not directly observable by humans. In philosophy of science, typical examples of "unobservables" are the force of gravity, causation and beliefs or desires.[1]: 7 [2] The distinction between observable and unobservable plays a central role in Immanuel Kant's distinction between noumena and phenomena as well as in John Locke's distinction between primary and secondary qualities. The theory that unobservables posited by scientific theories exist is referred to as scientific realism. It contrasts with instrumentalism, which asserts that we should withhold ontological commitments to unobservables even though it is useful for scientific theories to refer to them. There is considerable disagreement about which objects should be classified as unobservable, for example, whether bacteria studied using microscopes or positrons studied using cloud chambers count as unobservable. Different notions of unobservability have been formulated corresponding to different types of obstacles to their observation.

Metaphysics

Metaphysicians

Parmenides Plato Aristotle Plotinus Duns Scotus Thomas Aquinas Francisco Suárez Nicolas Malebranche René Descartes John Locke David Hume Thomas Reid Immanuel Kant Isaac Newton Arthur Schopenhauer Baruch Spinoza Georg W. F. Hegel George Berkeley Gottfried Wilhelm Leibniz Christian Wolff Bernard Bolzano Hermann Lotze Henri Bergson Friedrich Nietzsche Charles Sanders Peirce Joseph Maréchal Ludwig Wittgenstein Martin Heidegger Alfred N. Whitehead Bertrand Russell G. E. Moore Jean-Paul Sartre Gilbert Ryle Hilary Putnam P. F. Strawson R. G. Collingwood Rudolf Carnap Saul Kripke W. V. O. Quine G. E. M. Anscombe Donald Davidson Michael Dummett D. M. Armstrong David Lewis Alvin Plantinga Héctor-Neri Castañeda Peter van Inwagen Derek Parfit Alexius Meinong Ernst Mally Edward N. Zalta more ...

Theories

Abstract object theory Action theory Anti-realism Determinism Dualism Enactivism Essentialism Existentialism Free will Idealism Libertarianism Liberty Materialism Meaning of life Monism Naturalism Nihilism Phenomenalism Realism Physicalism Platonic idealism Relativism Scientific realism Solipsism Subjectivism Substance theory Truthmaker theory Type theory

Concepts

Abstract object Anima mundi Being Category of being Causality Causal closure Choice Cogito, ergo sum Concept Embodied cognition Essence Existence Experience Hypostatic abstraction Idea Identity Information Insight Intelligence Intention Linguistic modality Matter Meaning Memetics Mental representation Mind Motion Nature Necessity Notion Object Pattern Perception Physical object Principle Property Qualia Quality Reality Relation Soul Subject Substantial form Thought Time Truth Type–token distinction Universal Unobservable Value more ...

Related topics

Axiology Cosmology Epistemology Feminist metaphysics Interpretations of quantum mechanics Mereology Meta- Ontology Philosophy of mind Philosophy of psychology Philosophy of self Philosophy of space and time Teleology

https://en.wikipedia.org/wiki/Unobservable

Observer, Observable Universe, Empiricism, Causality, Mechanical Physics, etc.

https://en.wikipedia.org/wiki/Observable_universe

https://en.wikipedia.org/wiki/Observer_effect_(physics)

https://en.wikipedia.org/wiki/Observer_(quantum_physics)

Epistemology (/ɪˌpɪstɪˈmɒlədʒi/; from Greek ἐπιστήμη, epistēmē 'knowledge', and -logy) is the branch of philosophy concerned with knowledge. Epistemologists study the nature, origin, and scope of knowledge, epistemic justification, the rationality of belief, and various related issues. Epistemology is considered a major subfield of philosophy, along with other major subfields such as ethics, logic, and metaphysics.[1]

Debates in epistemology are generally clustered around four core areas:[2][3][4]

- The philosophical analysis of the nature of knowledge and the conditions required for a belief to constitute knowledge, such as truth and justification

- Potential sources of knowledge and justified belief, such as perception, reason, memory, and testimony

- The structure of a body of knowledge or justified belief, including whether all justified beliefs must be derived from justified foundational beliefs or whether justification requires only a coherent set of beliefs

- Philosophical skepticism, which questions the possibility of knowledge, and related problems, such as whether skepticism poses a threat to our ordinary knowledge claims and whether it is possible to refute skeptical arguments

In these debates and others, epistemology aims to answer questions such as "What do we know?", "What does it mean to say that we know something?", "What makes justified beliefs justified?", and "How do we know that we know?".[1][2][5][6][7]

Part of a series on

Epistemology

Core concepts

Belief Justification Knowledge Truth

Distinctions

A priori vs. A posteriori Analytic vs. synthetic

Schools of thought

Empiricism Naturalism Pragmatism Rationalism Relativism Skepticism

Topics and views

Certainty Coherentism Contextualism Dogmatism Experience Fallibilism Foundationalism Induction Infallibilism Infinitism Perspectivism Rationality Reason Solipsism

https://en.wikipedia.org/wiki/Epistemology

In quantum mechanics, the uncertainty principle (also known as Heisenberg's uncertainty principle) is any of a variety of mathematical inequalities[1] asserting a fundamental limit to the accuracy with which the values for certain pairs of physical quantities of a particle, such as position, x, and momentum, p, can be predicted from initial conditions.

Such variable pairs are known as complementary variables or canonically conjugate variables; and, depending on interpretation, the uncertainty principle limits to what extent such conjugate properties maintain their approximate meaning, as the mathematical framework of quantum physics does not support the notion of simultaneously well-defined conjugate properties expressed by a single value. The uncertainty principle implies that it is in general not possible to predict the value of a quantity with arbitrary certainty, even if all initial conditions are specified.

Introduced first in 1927 by the German physicist Werner Heisenberg, the uncertainty principle states that the more precisely the position of some particle is determined, the less precisely its momentum can be predicted from initial conditions, and vice versa.[2] The formal inequality relating the standard deviation of position σx and the standard deviation of momentum σp was derived by Earle Hesse Kennard[3] later that year and by Hermann Weyl[4] in 1928:

$$\sigma_x \sigma_p \geq \frac{\hbar}{2},$$

where ħ is the reduced Planck constant, h/(2π).
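As a numerical sketch (not part of the original text), the snippet below checks Kennard's bound σx σp ≥ ħ/2 for a Gaussian wave packet, the case in which the bound is met with equality. It assumes units with ħ = 1, samples the wave function on a finite grid, and obtains the momentum distribution from a discrete Fourier transform; the grid size, grid width, and the helper name `spreads` are illustrative choices.

```python
import numpy as np

HBAR = 1.0  # assumption: natural units with hbar = 1


def spreads(psi: np.ndarray, x: np.ndarray) -> tuple[float, float]:
    """Return (sigma_x, sigma_p) for a wave function sampled on a uniform grid."""
    dx = x[1] - x[0]
    # Position distribution |psi(x)|^2, normalised on the grid.
    prob_x = np.abs(psi) ** 2
    prob_x /= prob_x.sum()
    mean_x = np.sum(x * prob_x)
    sigma_x = np.sqrt(np.sum((x - mean_x) ** 2 * prob_x))

    # Momentum distribution from the discrete Fourier transform, with p = hbar * k.
    phi = np.fft.fft(psi)
    p = HBAR * 2 * np.pi * np.fft.fftfreq(len(x), d=dx)
    prob_p = np.abs(phi) ** 2
    prob_p /= prob_p.sum()
    mean_p = np.sum(p * prob_p)
    sigma_p = np.sqrt(np.sum((p - mean_p) ** 2 * prob_p))
    return float(sigma_x), float(sigma_p)


x = np.linspace(-40.0, 40.0, 4096, endpoint=False)
for width in (0.5, 1.0, 2.0):
    psi = np.exp(-x**2 / (4 * width**2))  # |psi|^2 has standard deviation `width`
    sx, sp = spreads(psi, x)
    print(f"width={width}: sigma_x={sx:.4f}, sigma_p={sp:.4f}, product={sx * sp:.4f}")
```

Narrowing the packet in position widens it in momentum, while the product stays at ħ/2; any non-Gaussian shape gives a strictly larger product.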

Historically, the uncertainty principle has been confused[5][6] with a related effect in physics, called the observer effect, which notes that measurements of certain systems cannot be made without affecting the system, that is, without changing something in a system. Heisenberg utilized such an observer effect at the quantum level (see below) as a physical "explanation" of quantum uncertainty.[7] It has since become clearer, however, that the uncertainty principle is inherent in the properties of all wave-like systems,[8] and that it arises in quantum mechanics simply due to the matter wave nature of all quantum objects. Thus, the uncertainty principle actually states a fundamental property of quantum systems and is not a statement about the observational success of current technology.[9] It must be emphasized that measurement does not mean only a process in which a physicist-observer takes part, but rather any interaction between classical and quantum objects regardless of any observer.[10][note 1][note 2]

Since the uncertainty principle is such a basic result in quantum mechanics, typical experiments in quantum mechanics routinely observe aspects of it. Certain experiments, however, may deliberately test a particular form of the uncertainty principle as part of their main research program. These include, for example, tests of number–phase uncertainty relations in superconducting[12] or quantum optics[13] systems. Applications dependent on the uncertainty principle for their operation include extremely low-noise technology such as that required in gravitational wave interferometers.[14]

https://en.wikipedia.org/wiki/Uncertainty_principle

In physics, the principle of locality states that an object is directly influenced only by its immediate surroundings. A theory that includes the principle of locality is said to be a "local theory". This is an alternative to the older concept of instantaneous "action at a distance". Locality evolved out of the field theories of classical physics. The concept is that for an action at one point to have an influence at another point, something in the space between those points such as a field must mediate the action. To exert an influence, something, such as a wave or particle, must travel through the space between the two points, carrying the influence.

The special theory of relativity limits the speed at which all such influences can travel to the speed of light, $c$. Therefore, the principle of locality implies that an event at one point cannot cause a simultaneous result at another point. An event at point $A$ cannot cause a result at point $B$ in a time less than $T = D/c$, where $D$ is the distance between the points and $c$ is the speed of light in a vacuum.
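The locality bound T = D/c is simple arithmetic; the sketch below encodes it with two hypothetical helpers, `min_signal_time` and `can_influence`, named here purely for illustration. The value of c is the exact SI value; the Earth–Moon distance in the usage line (about 384,400 km) is an illustrative figure not taken from the text.

```python
SPEED_OF_LIGHT = 299_792_458.0  # metres per second (exact by the SI definition)


def min_signal_time(distance_m: float) -> float:
    """Earliest time after an event at A that it can influence B: T = D / c."""
    if distance_m < 0:
        raise ValueError("distance must be non-negative")
    return distance_m / SPEED_OF_LIGHT


def can_influence(distance_m: float, elapsed_s: float) -> bool:
    """True if an influence limited to light speed could have reached B in time."""
    return elapsed_s >= min_signal_time(distance_m)


# Illustrative: mean Earth-Moon distance of roughly 384,400 km.
print(f"{min_signal_time(384_400_000.0):.3f} s")  # 1.282 s
```

Anything at B that happens sooner than T after the event at A cannot, in a local theory, have been caused by it.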

In 1935 Albert Einstein, Boris Podolsky and Nathan Rosen, in their EPR paradox, theorised that quantum mechanics might not be a local theory, because a measurement made on one of a pair of separated but entangled particles causes a simultaneous effect, the collapse of the wave function, in the remote particle (i.e., an effect that would have to propagate faster than light). But because of the probabilistic nature of wave function collapse, this violation of locality cannot be used to transmit information faster than light. In 1964 John Stewart Bell formulated the "Bell inequality", which, if violated in actual experiments, implies that quantum mechanics violates either local causality or a second principle, statistical independence, which is commonly referred to as free will.
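To make the Bell argument concrete, here is a sketch using the CHSH (Clauser–Horne–Shimony–Holt) form of Bell's inequality, a standard variant that the text does not name. Any local deterministic assignment of ±1 outcomes (and therefore any mixture of such assignments) gives |S| ≤ 2, whereas the quantum prediction for a spin singlet, E(a, b) = −cos(a − b), reaches 2√2 at the conventional measurement angles used below. The function names are illustrative.

```python
import itertools
import math


def chsh(E, a, a2, b, b2):
    """CHSH combination S = E(a,b) - E(a,b') + E(a',b) + E(a',b')."""
    return E(a, b) - E(a, b2) + E(a2, b) + E(a2, b2)


def singlet_correlation(theta_a: float, theta_b: float) -> float:
    """Quantum correlation of spin outcomes for a spin-1/2 singlet pair."""
    return -math.cos(theta_a - theta_b)


def local_deterministic_max() -> float:
    """Largest |S| over every local deterministic choice of +/-1 outcomes."""
    best = 0.0
    for A, A2, B, B2 in itertools.product((-1, 1), repeat=4):
        s = A * B - A * B2 + A2 * B + A2 * B2
        best = max(best, float(abs(s)))
    return best


quantum_s = chsh(singlet_correlation, 0.0, math.pi / 2, math.pi / 4, 3 * math.pi / 4)
print(f"local bound: {local_deterministic_max()}")  # 2.0
print(f"quantum |S|: {abs(quantum_s):.4f}")  # 2.8284, i.e. 2*sqrt(2)
```

Experiments that observe |S| clearly above 2 are what rule out the combination of locality and statistical independence.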

Experimental tests of the Bell inequality, beginning with Alain Aspect's 1982 experiments, show that quantum mechanics seems to violate the inequality, so it must violate either locality or statistical independence. However, critics have noted these experiments contained "loopholes", which prevented a definitive answer to this question. This might be partially resolved: in 2015 Dr Ronald Hanson at Delft University performed what has been called the first loophole-free experiment.[1] On the other hand, some loopholes might persist, and may continue to persist to the point of being difficult to test.[2]

https://en.wikipedia.org/wiki/Principle_of_locality

Probability is the branch of mathematics concerning numerical descriptions of how likely an event is to occur, or how likely it is that a proposition is true. The probability of an event is a number between 0 and 1, where, roughly speaking, 0 indicates impossibility of the event and 1 indicates certainty.[note 1][1][2] The higher the probability of an event, the more likely it is that the event will occur. A simple example is the tossing of a fair (unbiased) coin. Since the coin is fair, the two outcomes ("heads" and "tails") are both equally probable; the probability of "heads" equals the probability of "tails"; and since no other outcomes are possible, the probability of each outcome is 1/2 (which could also be written as 0.5 or 50%).
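The fair-coin example can be sketched in a few lines: exact probabilities as fractions, plus a seeded simulation whose relative frequency of heads should land near 1/2. The seed and the number of tosses are arbitrary illustrative choices.

```python
import random
from fractions import Fraction

# Exact probabilities for a fair coin: two equally likely outcomes.
outcomes = ("heads", "tails")
p = {o: Fraction(1, len(outcomes)) for o in outcomes}
assert sum(p.values()) == 1  # one of the outcomes is certain to occur

# Relative frequency of heads over many simulated tosses (seeded pseudorandom generator).
rng = random.Random(2024)
n = 100_000
heads = sum(rng.random() < 0.5 for _ in range(n))
print(p["heads"], heads / n)
```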

These concepts have been given an axiomatic mathematical formalization in probability theory, which is used widely in areas of study such as statistics, mathematics, science, finance, gambling, artificial intelligence, machine learning, computer science, game theory, and philosophy to, for example, draw inferences about the expected frequency of events. Probability theory is also used to describe the underlying mechanics and regularities of complex systems.[3]

https://en.wikipedia.org/wiki/Probability

In common parlance, randomness is the apparent or actual lack of pattern or predictability in events.[1][2] A random sequence of events, symbols or steps often has no order and does not follow an intelligible pattern or combination. Individual random events are, by definition, unpredictable, but if the probability distribution is known, the frequency of different outcomes over repeated events (or "trials") is predictable.[3][note 1] For example, when throwing two dice, the outcome of any particular roll is unpredictable, but a sum of 7 will tend to occur twice as often as 4. In this view, randomness is not haphazardness; it is a measure of uncertainty of an outcome. Randomness applies to concepts of chance, probability, and information entropy.
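The two-dice claim can be checked by enumerating all 36 equally likely ordered rolls: six of them sum to 7 and three sum to 4, so 7 is exactly twice as likely.

```python
from collections import Counter
from fractions import Fraction
from itertools import product

# All 36 equally likely ordered outcomes of two fair six-sided dice.
sums = Counter(a + b for a, b in product(range(1, 7), repeat=2))
p7 = Fraction(sums[7], 36)
p4 = Fraction(sums[4], 36)
print(p7, p4, p7 / p4)  # 1/6 1/12 2
```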

The fields of mathematics, probability, and statistics use formal definitions of randomness. In statistics, a random variable is an assignment of a numerical value to each possible outcome of an event space. This association facilitates the identification and the calculation of probabilities of the events. Random variables can appear in random sequences. A random process is a sequence of random variables whose outcomes do not follow a deterministic pattern, but follow an evolution described by probability distributions. These and other constructs are extremely useful in probability theory and the various applications of randomness.
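A simple symmetric random walk is perhaps the smallest example of a random process: each position is a random variable obtained from the previous one by adding +1 or −1 with equal probability. The sketch below is illustrative; the function name and seed are assumptions.

```python
import random


def random_walk(n_steps: int, seed: int = 0) -> list[int]:
    """Simple symmetric random walk: X_0 = 0 and X_{t+1} = X_t + S_t,
    where each step S_t is +1 or -1 with probability 1/2."""
    rng = random.Random(seed)
    path = [0]
    for _ in range(n_steps):
        path.append(path[-1] + rng.choice((-1, 1)))
    return path


print(random_walk(10, seed=42))
```

Any single path is unpredictable, but its statistics are not: after n steps the mean position is 0 and the variance is n.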

Randomness is most often used in statistics to signify well-defined statistical properties. Monte Carlo methods, which rely on random input (such as from random number generators or pseudorandom number generators), are important techniques in science, particularly in the field of computational science.[4] By analogy, quasi-Monte Carlo methods use quasi-random number generators.
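A classic illustration of the Monte Carlo idea is estimating π: points drawn uniformly in the unit square fall inside the quarter disc x² + y² ≤ 1 with probability π/4. The sketch below uses Python's seeded pseudorandom generator; the function name and sample sizes are illustrative.

```python
import math
import random


def estimate_pi(n_samples: int, seed: int = 0) -> float:
    """Monte Carlo estimate of pi from the fraction of uniform points in the
    unit square that land inside the quarter disc x^2 + y^2 <= 1."""
    rng = random.Random(seed)
    inside = 0
    for _ in range(n_samples):
        x, y = rng.random(), rng.random()
        if x * x + y * y <= 1.0:
            inside += 1
    return 4.0 * inside / n_samples


for n in (100, 10_000, 100_000):
    est = estimate_pi(n, seed=1)
    print(f"n={n:>7}: estimate={est:.4f}, error={abs(est - math.pi):.4f}")
```

The error shrinks roughly in proportion to 1/√n, which is typical of Monte Carlo methods.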

Random selection, when narrowly associated with a simple random sample, is a method of selecting items (often called units) from a population where the probability of choosing a specific item is the proportion of those items in the population. For example, with a bowl containing just 10 red marbles and 90 blue marbles, a random selection mechanism would choose a red marble with probability 1/10. Note that a random selection mechanism that selected 10 marbles from this bowl would not necessarily result in 1 red and 9 blue. In situations where a population consists of items that are distinguishable, a random selection mechanism requires equal probabilities for any item to be chosen. That is, if the selection process is such that each member of a population, say research subjects, has the same probability of being chosen, then we can say the selection process is random.[2]
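The marble example can be quantified exactly. Assuming the 10 marbles are drawn without replacement (the text does not specify this), the number of red marbles follows a hypergeometric distribution: on average exactly 1 red marble is drawn, yet the probability of drawing exactly 1 is only roughly 0.41.

```python
from fractions import Fraction
from math import comb

RED, BLUE = 10, 90
DRAWS = 10
TOTAL = RED + BLUE

# Probability that a single random draw is red: the proportion of red marbles.
p_red_single = Fraction(RED, TOTAL)


def p_exactly(k: int) -> Fraction:
    """Probability of exactly k red marbles in DRAWS draws without replacement."""
    return Fraction(comb(RED, k) * comb(BLUE, DRAWS - k), comb(TOTAL, DRAWS))


dist = {k: p_exactly(k) for k in range(DRAWS + 1)}
expected_red = sum(k * p for k, p in dist.items())
print(p_red_single, float(dist[1]), expected_red)
```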

According to Ramsey theory, pure randomness is impossible, especially for large structures. Mathematician Theodore Motzkin suggested that "while disorder is more probable in general, complete disorder is impossible".[5] Misunderstanding this can lead to numerous conspiracy theories.[6] Cristian S. Calude stated that "given the impossibility of true randomness, the effort is directed towards studying degrees of randomness".[7] It can be proven that there is an infinite hierarchy (in terms of quality or strength) of forms of randomness.[7]

https://en.wikipedia.org/wiki/Randomness

Stochastic (from Greek στόχος (stókhos) 'aim, guess'[1]) refers to the property of being well described by a random probability distribution.[1] Although stochasticity and randomness are distinct in that the former refers to a modeling approach and the latter refers to phenomena themselves, these two terms are often used synonymously. Furthermore, in probability theory, the formal concept of a stochastic process is also referred to as a random process.[2][3][4][5][6]

Stochasticity is used in many different fields, including the natural sciences such as biology,[7] chemistry,[8] ecology,[9] neuroscience,[10] and physics,[11] as well as technology and engineering fields such as image processing, signal processing,[12] information theory,[13] computer science,[14] cryptography,[15] and telecommunications.[16] It is also used in finance, due to seemingly random changes in financial markets[17][18][19] as well as in medicine, linguistics, music, media, colour theory, botany, manufacturing, and geomorphology. Stochastic modeling is also used in social science.

https://en.wikipedia.org/wiki/Stochastic

Perception (from the Latin perceptio, meaning gathering or receiving) is the organization, identification, and interpretation of sensory information in order to represent and understand the presented information or environment.[2]

https://en.wikipedia.org/wiki/Perception

Sensation refers to the processing of senses by the sensory system; see also sensation (psychology). Sensation and perception should not be confused. Strictly speaking, sensation occurs when the information reaches the central nervous system (CNS): for example, you see a red, spherical object. Perception occurs when you compare it with previously seen objects and realise that it is an apple.

https://en.wikipedia.org/wiki/Sensation

Cognition (/kɒɡˈnɪʃ(ə)n/) refers to "the mental action or process of acquiring knowledge and understanding through thought, experience, and the senses".[2]

https://en.wikipedia.org/wiki/Cognition

Analysis is the process of breaking a complex topic or substance into smaller parts in order to gain a better understanding of it.[1] The technique has been applied in the study of mathematics and logic since before Aristotle (384–322 B.C.), though analysis as a formal concept is a relatively recent development.[2]

https://en.wikipedia.org/wiki/Analysis

Reason is the capacity of consciously applying logic to seek truth and draw conclusions from new or existing information.[1][2] It is closely associated with such characteristically human activities as philosophy, science, language, mathematics, and art, and is normally considered to be a distinguishing ability possessed by humans.[3] Reason is sometimes referred to as rationality.[4]

https://en.wikipedia.org/wiki/Reason

Rationality is the quality or state of being rational – that is, being based on or agreeable to reason.[1][2] Rationality implies the conformity of one's beliefs with one's reasons to believe, and of one's actions with one's reasons for action. "Rationality" has different specialized meanings in philosophy,[3] economics, sociology, psychology, evolutionary biology, game theory and political science.

https://en.wikipedia.org/wiki/Rationality

Reality is the sum or aggregate of all that is real or existent within a system, as opposed to that which is only imaginary. The term is also used to refer to the ontological status of things, indicating their existence.[1] In physical terms, reality is the totality of a system, known and unknown.[2] Philosophical questions about the nature of reality or existence or being are considered under the rubric of ontology, which is a major branch of metaphysics in the Western philosophical tradition. Ontological questions also feature in diverse branches of philosophy, including the philosophy of science, philosophy of religion, philosophy of mathematics, and philosophical logic. These include questions about whether only physical objects are real (i.e., Physicalism), whether reality is fundamentally immaterial (e.g., Idealism), whether hypothetical unobservable entities posited by scientific theories exist, whether God exists, whether numbers and other abstract objects exist, and whether possible worlds exist.

https://en.wikipedia.org/wiki/Reality

In mathematics, a real number is a value of a continuous quantity that can represent a distance along a line (or alternatively, a quantity that can be represented as an infinite decimal expansion). The adjective real in this context was introduced in the 17th century by René Descartes, who distinguished between real and imaginary roots of polynomials. The real numbers include all the rational numbers, such as the integer −5 and the fraction 4/3, and all the irrational numbers, such as √2 (1.41421356..., the square root of 2, an irrational algebraic number). Included within the irrationals are the real transcendental numbers, such as π (3.14159265...).[1] In addition to measuring distance, real numbers can be used to measure quantities such as time, mass, energy, velocity, and many more. The set of real numbers is denoted using the symbol R or ℝ[2][3] and is sometimes called "the reals".[4]

https://en.wikipedia.org/wiki/Real_number

Existence is the ability of an entity to interact with physical or mental reality. In philosophy, it refers to the ontological property[1] of being.[2]

Etymology

The term existence comes from Old French existence, from Medieval Latin existentia/exsistentia.[3]

Context in philosophy

Materialism holds that the only things that exist are matter and energy, that all things are composed of material, that all actions require energy, and that all phenomena (including consciousness) are the result of the interaction of matter. Dialectical materialism does not make a distinction between being and existence, and defines it as the objective reality of various forms of matter.[2]

Idealism holds that the only things that exist are thoughts and ideas, while the material world is secondary.[4][5] In idealism, existence is sometimes contrasted with transcendence, the ability to go beyond the limits of existence.[2] As a form of epistemological idealism, rationalism interprets existence as cognizable and rational: all things are composed of strings of reasoning, requiring an associated idea of the thing, and all phenomena (including consciousness) are the result of an understanding of the imprint from the noumenal world, which lies beyond the thing-in-itself.

In scholasticism, the existence of a thing is not derived from its essence but is determined by the creative volition of God; the dichotomy of existence and essence demonstrates that the dualism of the created universe is only resolvable through God.[2] Empiricism recognizes the existence of singular facts, which are not derivable and which are observable through empirical experience.

The exact definition of existence is one of the most important and fundamental topics of ontology, the philosophical study of the nature of being, existence, or reality in general, as well as of the basic categories of being and their relations. Traditionally listed as a part of the major branch of philosophy known as metaphysics, ontology deals with questions concerning what things or entities exist or can be said to exist, and how such things or entities can be grouped, related within a hierarchy, and subdivided according to similarities and differences.

Anti-realism is the view of idealists who are skeptics about the physical world, maintaining either: (1) that nothing exists outside the mind, or (2) that we would have no access to a mind-independent reality even if it may exist. Realists, in contrast, hold that perceptions or sense data are caused by mind-independent objects. An "anti-realist" who denies that other minds exist (i.e., a solipsist) is different from an "anti-realist" who claims that there is no fact of the matter as to whether or not there are unobservable other minds (i.e., a logical behaviorist).

See also

- Cogito ergo sum

- Conservation law (physics)

- Existence precedes essence

- Existence theorem

- Existential quantification

- Existentialism

- Human condition

- Religious views on the self

- Solipsism

- Three marks of existence

- Universal quantification

https://en.wikipedia.org/wiki/Existence

In philosophy, potentiality and actuality[1] are a pair of closely connected principles which Aristotle used to analyze motion, causality, ethics, and physiology in his Physics, Metaphysics, Nicomachean Ethics and De Anima.[2]

The concept of potentiality, in this context, generally refers to any "possibility" that a thing can be said to have. Aristotle did not consider all possibilities the same, and emphasized the importance of those that become real of their own accord when conditions are right and nothing stops them.[3] Actuality, in contrast to potentiality, is the motion, change or activity that represents an exercise or fulfillment of a possibility, when a possibility becomes real in the fullest sense.[4]

These concepts, in modified forms, remained very important into the Middle Ages, influencing the development of medieval theology in several ways. In modern times the dichotomy has gradually lost importance, as understandings of nature and deity have changed. However, the terminology has also been adapted to new uses, as is most obvious in words like energy and dynamic. These words were first used in modern physics by the German scientist and philosopher Gottfried Wilhelm Leibniz. Another more recent example is the highly controversial biological concept of an "entelechy".

Entelecheia in modern philosophy and biology

As discussed above, terms derived from dunamis and energeia have become parts of modern scientific vocabulary with a very different meaning from Aristotle's. The original meanings are not used by modern philosophers unless they are commenting on classical or medieval philosophy. In contrast, entelecheia, in the form of entelechy, is a word used much less in technical senses in recent times.

As mentioned above, the concept had occupied a central position in the metaphysics of Leibniz, and is closely related to his monad, in the sense that each sentient entity contains its own entire universe within it. But Leibniz' use of this concept influenced more than just the development of the vocabulary of modern physics. Leibniz was also one of the main inspirations for the important movement in philosophy known as German Idealism, and within this movement and schools influenced by it entelechy may denote a force propelling one to self-fulfillment.

In the biological vitalism of Hans Driesch, living things develop by entelechy, a common purposive and organising field. Leading vitalists like Driesch argued that many of the basic problems of biology cannot be solved by a philosophy in which the organism is simply considered a machine.[52] Vitalism and its concepts like entelechy have since been discarded as without value for scientific practice by the overwhelming majority of professional biologists.

However, in philosophy aspects and applications of the concept of entelechy have been explored by scientifically interested philosophers and philosophically inclined scientists alike. One example was the American critic and philosopher Kenneth Burke (1897–1993), whose concept of the "terministic screens" illustrates his thought on the subject. Most prominent was perhaps the German quantum physicist Werner Heisenberg. He looked to the notions of potentiality and actuality in order to better understand the relationship of quantum theory to the world.[53]

Prof Denis Noble argues that, just as teleological causation is necessary to the social sciences, a specific teleological causation in biology, expressing functional purpose, should be restored and that it is already implicit in neo-Darwinism (e.g. "selfish gene"). Teleological analysis proves parsimonious when the level of analysis is appropriate to the complexity of the required 'level' of explanation (e.g. whole body or organ rather than cell mechanism).[54]

See also

Actual infinity

Actus purus

Alexander of Aphrodisias

Essence–Energies distinction

First cause

Henosis

Hylomorphism

Hypokeimenon

Hypostasis (philosophy and religion)

Sumbebekos

Theosis

Unmoved movers

https://en.wikipedia.org/wiki/Potentiality_and_actuality

In scholastic philosophy, actus purus (English: "pure actuality," "pure act") is the absolute perfection of God.

https://en.wikipedia.org/wiki/Actus_purus

Scholasticism was a medieval school of philosophy that employed a critical method of philosophical analysis predicated upon a Latin Catholic theistic curriculum which dominated teaching in the medieval universities in Europe from about 1100 to 1700. It originated within the Christian monastic schools that were the basis of the earliest European universities.[1] The rise of scholasticism was closely associated with these schools that flourished in Italy, France, Spain and England.[2]

https://en.wikipedia.org/wiki/Scholasticism

In the philosophy of mathematics, the abstraction of actual infinity involves the acceptance (if the axiom of infinity is included) of infinite entities as given, actual and completed objects. These might include the set of natural numbers, extended real numbers, transfinite numbers, or even an infinite sequence of rational numbers.[1] Actual infinity is to be contrasted with potential infinity, in which a non-terminating process (such as "add 1 to the previous number") produces a sequence with no last element, and where each individual result is finite and is achieved in a finite number of steps. As a result, potential infinity is often formalized using the concept of limit.[2]
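Potential infinity has a natural programming analogue: a generator that keeps applying "add 1 to the previous number". Every value it produces is finite and reached after finitely many steps, but the completed collection is never materialised; calling `list(naturals())` would never return. This is only an analogy, and starting the count at 0 is an assumed convention.

```python
from itertools import islice


def naturals():
    """Non-terminating process: yield 0, then repeatedly add 1 to the previous number."""
    n = 0
    while True:
        yield n
        n += 1


first_ten = list(islice(naturals(), 10))
print(first_ten)  # [0, 1, 2, 3, 4, 5, 6, 7, 8, 9]
```

Actual infinity, by contrast, treats the whole set of natural numbers as a single completed object, which is what the axiom of infinity guarantees.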

https://en.wikipedia.org/wiki/Actual_infinity

In axiomatic set theory and the branches of mathematics and philosophy that use it, the axiom of infinity is one of the axioms of Zermelo–Fraenkel set theory. It guarantees the existence of at least one infinite set, namely a set containing the natural numbers. It was first published by Ernst Zermelo as part of his set theory in 1908.[1]

https://en.wikipedia.org/wiki/Axiom_of_infinity

In axiomatic set theory and the branches of logic, mathematics, and computer science that use it, the axiom of extensionality, or axiom of extension, is one of the axioms of Zermelo–Fraenkel set theory. It says that sets having the same elements are the same set.
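Python's built-in `frozenset` offers a finite, informal analogue of extensionality: two sets compare equal exactly when they have the same elements, regardless of the order in which those elements were listed or how often they were repeated. This is an illustration, not a model of Zermelo–Fraenkel set theory.

```python
a = frozenset({1, 2, 3})
b = frozenset([3, 2, 1, 1])  # different order, one element repeated
c = frozenset(x for x in range(1, 4))
print(a == b == c)  # True: same elements, hence the same set

empty = frozenset()
print(empty == frozenset({empty}))  # False: {} and {{}} have different elements
```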

https://en.wikipedia.org/wiki/Axiom_of_extensionality

In many popular versions of axiomatic set theory, the axiom schema of specification, also known as the axiom schema of separation, subset axiom scheme or axiom schema of restricted comprehension is an axiom schema. Essentially, it says that any definable subclass of a set is a set.

Some mathematicians call it the axiom schema of comprehension, although others use that term for unrestricted comprehension, discussed below.

Because restricting comprehension avoided Russell's paradox, several mathematicians including Zermelo, Fraenkel, and Gödel considered it the most important axiom of set theory.[1]
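Restricted comprehension corresponds to filtering an existing set with a predicate, {x ∈ A | φ(x)}, and Python's set comprehensions have the same shape. The sketch below uses a hypothetical helper, `separate`, and finite frozensets only. Applying the Russell predicate "x ∉ x" inside a set B produces a set R that simply is not a member of B, rather than a contradiction; this mirrors, informally, why separation blocks Russell's paradox.

```python
def separate(A: frozenset, phi) -> frozenset:
    """Separation: from an existing set A and a predicate phi, form {x in A | phi(x)}."""
    return frozenset(x for x in A if phi(x))


A = frozenset(range(10))
evens = separate(A, lambda x: x % 2 == 0)
print(sorted(evens))  # [0, 2, 4, 6, 8]

# Russell-style predicate, restricted to an existing set B of sets.
empty = frozenset()
B = frozenset({empty, frozenset({empty})})
R = separate(B, lambda x: x not in x)
print(R == B, R in B)  # True False: R is a set, but not an element of B
```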

https://en.wikipedia.org/wiki/Axiom_schema_of_specification

The word schema comes from the Greek word σχῆμα (skhēma), which means shape, or more generally, plan. The plural is σχήματα (skhēmata). In English, both schemas and schemata are used as plural forms.

https://en.wikipedia.org/wiki/Schema

In mathematical logic, an axiom schema (plural: axiom schemata or axiom schemas) generalizes the notion of axiom.

https://en.wikipedia.org/wiki/Axiom_schema

In psychology and cognitive science, a schema (plural schemata or schemas) describes a pattern of thought or behavior that organizes categories of information and the relationships among them.[1][2] It can also be described as a mental structure of preconceived ideas, a framework representing some aspect of the world, or a system of organizing and perceiving new information.[3] Schemata influence attention and the absorption of new knowledge: people are more likely to notice things that fit into their schema, while re-interpreting contradictions to the schema as exceptions or distorting them to fit. Schemata have a tendency to remain unchanged, even in the face of contradictory information.[4] Schemata can help in understanding the world and the rapidly changing environment.[5] People can organize new perceptions into schemata quickly because, when a schema applies, most situations do not require complex thought; automatic thought is all that is required.[5]

People use schemata to organize current knowledge and provide a framework for future understanding. Examples of schemata include academic rubrics, social schemas, stereotypes, social roles, scripts, worldviews, and archetypes. In Piaget's theory of development, children construct a series of schemata, based on the interactions they experience, to help them understand the world.[6]

https://en.wikipedia.org/wiki/Schema_(psychology)

In Kantian philosophy, a transcendental schema (plural: schemata; from Greek: σχῆμα, "form, shape, figure") is the procedural rule by which a category or pure, non-empirical concept is associated with a sense impression. A private, subjective intuition is thereby discursively thought to be a representation of an external object. Transcendental schemata are supposedly produced by the imagination in relation to time.

https://en.wikipedia.org/wiki/Schema_(Kant)

In philosophy, philosophy of physics deals with conceptual and interpretational issues in modern physics, many of which overlap with research done by certain kinds of theoretical physicists. Philosophy of physics can be broadly lumped into three areas:

- interpretations of quantum mechanics: mainly concerning issues with how to formulate an adequate response to the measurement problem and understand what the theory says about reality

- the nature of space and time: Are space and time substances, or purely relational? Is simultaneity conventional or only relative? Is temporal asymmetry purely reducible to thermodynamic asymmetry?

- inter-theoretic relations: the relationship between various physical theories, such as thermodynamics and statistical mechanics. This overlaps with the issue of scientific reduction.

The philosophy of information (PI) is a branch of philosophy that studies topics relevant to information processing, representational system and consciousness, computer science, information science and information technology.

It includes:

- the critical investigation of the conceptual nature and basic principles of information, including its dynamics, utilisation and sciences

- the elaboration and application of information-theoretic and computational methodologies to philosophical problems.[1]

In quantum mechanics, the measurement problem considers how, or whether, wave function collapse occurs. The inability to observe such a collapse directly has given rise to different interpretations of quantum mechanics and poses a key set of questions that each interpretation must answer.

The wave function in quantum mechanics evolves deterministically according to the Schrödinger equation as a linear superposition of different states. However, actual measurements always find the physical system in a definite state. Any future evolution of the wave function is based on the state the system was discovered to be in when the measurement was made, meaning that the measurement "did something" to the system that is not obviously a consequence of Schrödinger evolution. The measurement problem is describing what that "something" is, how a superposition of many possible values becomes a single measured value.

To express matters differently (paraphrasing Steven Weinberg),[1][2] the Schrödinger wave equation determines the wave function at any later time. If observers and their measuring apparatus are themselves described by a deterministic wave function, why can we not predict precise results for measurements, but only probabilities? As a general question: How can one establish a correspondence between quantum reality and classical reality?[3]

https://en.wikipedia.org/wiki/Measurement_problem

Subcategories

This category has the following 3 subcategories, out of 3 total.

Pages in category "Physical paradoxes"

The following 44 pages are in this category, out of 44 total.

Categories: Paradoxes Philosophy of physics Thought experiments in physics

https://en.wikipedia.org/wiki/Category:Physical_paradoxes

https://en.wikipedia.org/wiki/EPR_paradox#Paradox

Formulated in 1862 by Lord Kelvin, Hermann von Helmholtz and William John Macquorn Rankine,[1] the heat death paradox, also known as Clausius's paradox and thermodynamic paradox,[2] is a reductio ad absurdum argument that uses thermodynamics to show the impossibility of an infinitely old universe.

This paradox is directed at the then-mainstream belief in a classical view of a sempiternal universe, whereby its matter is postulated as everlasting and as having always been recognisably the universe. Clausius's paradox is a paradox of paradigm: resolving it required amending fundamental cosmological ideas, that is, changing the paradigm, and the paradox was solved when the paradigm changed.

The paradox was based upon the rigid mechanical point of view of the second law of thermodynamics postulated by Rudolf Clausius, according to which heat can only be transferred from a warmer to a colder object. It notes: if the universe were eternal, as claimed classically, it should already be cold and isotropic (its objects the same temperature).[3]

Any hot object transfers heat to its cooler surroundings until everything is at the same temperature. For two objects at the same temperature, as much heat flows from one body as flows from the other, and the net effect is no change. If the universe were infinitely old, there must have been enough time for the stars to cool and warm their surroundings. Everywhere should therefore be at the same temperature, and either there should be no stars or everything should be as hot as stars.

Since there are stars and colder objects, the universe is not in thermal equilibrium, so it cannot be infinitely old.
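The equalization argument can be made concrete with a toy model. The sketch below assumes Newton's law of cooling (heat flow proportional to the temperature difference), constant heat capacities, and a hypothetical coupling constant `k`. None of these details come from the source. In the model, a "star" and its colder surroundings converge on a single common temperature, after which no net heat flows.

```python
def equilibrate(t_hot, t_cold, c_hot, c_cold, k=0.1, dt=1.0, steps=1000):
    """Let two bodies exchange heat until they are (numerically) in equilibrium.

    t_hot, t_cold: initial temperatures in kelvin.
    c_hot, c_cold: heat capacities (arbitrary consistent units).
    k: hypothetical thermal coupling. The explicit update converges without
       overshooting as long as k * dt * (1/c_hot + 1/c_cold) < 1.
    """
    for _ in range(steps):
        q = k * (t_hot - t_cold) * dt  # heat moving from hot to cold this step
        t_hot -= q / c_hot             # the hot body loses exactly q ...
        t_cold += q / c_cold           # ... and the cold body gains exactly q
    return t_hot, t_cold


# A "star" at 5800 K coupled to surroundings with 9 times its heat capacity.
star, surroundings = equilibrate(5800.0, 3.0, c_hot=1.0, c_cold=9.0)
# Energy conservation fixes the final temperature at the weighted mean:
expected = (1.0 * 5800.0 + 9.0 * 3.0) / (1.0 + 9.0)   # 582.7 K
```

Once the two temperatures agree, `q` is zero and nothing changes. That is the state the argument says an infinitely old universe should already be in.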

The paradox arises in neither Big Bang nor modern steady-state cosmology. In the former, the universe is too young to have reached equilibrium. In the latter, including the more nuanced quasi-steady-state theory, enough hydrogen is posited to be continuously replenished or regenerated to keep the average density constant. Depletion of the star population and reduction in temperature are slowed by the formation or coalescing of great stars which, within certain ranges of mass and temperature, end as supernovae that leave remnant nebulae. Such reincarnation postpones the heat death, as does the expansion of the universe.


https://en.wikipedia.org/wiki/Heat_death_paradox

Vertical pressure variation is the variation in pressure as a function of elevation. Depending on the fluid in question and the context, pressure may also vary significantly in directions perpendicular to elevation, and these variations are relevant to the pressure gradient force and its effects. However, the vertical variation is especially significant because it results from the pull of gravity on the fluid. For a given fluid, a lower elevation within it corresponds to a taller column of fluid weighing down on that point.

Hydrostatic paradox

The barometric formula depends only on the height of the fluid chamber, and not on its width or length. Given a large enough height, any pressure may be attained. This feature of hydrostatics has been called the hydrostatic paradox. As expressed by W. H. Besant,[2]

- Any quantity of liquid, however small, may be made to support any weight, however large.
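The dependence on height alone follows from the hydrostatic relation for an incompressible fluid: the pressure above ambient is p = ρgh, and no width or cross-sectional area appears in it. The sketch below assumes fresh water of constant density and standard gravity, with rounded values chosen for illustration. It compares a thin tube with a wide tank of the same height.

```python
RHO_WATER = 1000.0  # kg/m^3, approximate density of fresh water (assumed constant)
G = 9.81            # m/s^2, standard gravity, rounded


def gauge_pressure(height_m, rho=RHO_WATER, g=G):
    """Pressure above ambient (Pa) at the bottom of a fluid column: p = rho*g*h.

    The column's width or cross-sectional area does not appear.
    """
    return rho * g * height_m


def column_weight(height_m, area_m2, rho=RHO_WATER, g=G):
    """Weight (N) of the fluid column: rho * g * h * area."""
    return rho * g * height_m * area_m2


height = 10.0
thin_tube, wide_tank = 1e-4, 1.0   # cross-sections in m^2 (1 cm^2 vs 1 m^2)
p = gauge_pressure(height)         # about 98 100 Pa for both containers
weight_ratio = column_weight(height, wide_tank) / column_weight(height, thin_tube)
```

The tank holds about ten thousand times more water than the tube, but the pressure at the bottom of each is the same.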

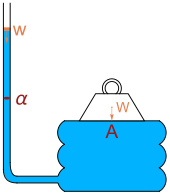

The Dutch scientist Simon Stevin was the first to explain the paradox mathematically.[3] In 1916 Richard Glazebrook mentioned the hydrostatic paradox as he described an arrangement he attributed to Pascal: a heavy weight W rests on a board with area A resting on a fluid bladder connected to a vertical tube with cross-sectional area α. Pouring water of weight w down the tube will eventually raise the heavy weight. Balance of forces leads to the equation

$$W = \frac{A}{\alpha}\,w$$

Glazebrook says, "By making the area of the board considerable and that of the tube small, a large weight W can be supported by a small weight w of water. This fact is sometimes described as the hydrostatic paradox."[4]
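The force balance behind Glazebrook's remark can be checked numerically. The sketch treats an idealized version of the arrangement, and the idealization is an assumption: the water's weight w is treated as resting entirely in the tube of area α, so it produces a pressure w/α at the level of the board, and the board and bladder are weightless. The board of area A feels that same pressure, so it can support W = (A/α)·w. The function name is hypothetical.

```python
def supported_weight(w_water, board_area, tube_area):
    """Weight W (N) the board can support in the idealized Pascal arrangement.

    The water in the tube exerts a pressure w/alpha; acting on the board's
    larger area A, that pressure gives an upward force W = (A / alpha) * w.
    """
    if tube_area <= 0 or board_area <= 0:
        raise ValueError("areas must be positive")
    pressure = w_water / tube_area   # Pa, pressure transmitted through the fluid
    return pressure * board_area     # N, force on the board


# 1 N of water in a 1 cm^2 tube balancing a board of 1 m^2.
W = supported_weight(1.0, board_area=1.0, tube_area=1e-4)   # about 10 000 N
```

Making A large and α small increases the ratio A/α. This is Glazebrook's recipe for supporting a large W with a small w.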

Demonstrations of the hydrostatic paradox are used in teaching the phenomenon.[5][6]

https://en.wikipedia.org/wiki/Vertical_pressure_variation#Hydrostatic_paradox

A gravitational singularity, spacetime singularity or simply singularity is a location in spacetime where the density and gravitational field of a celestial body are predicted by general relativity to become infinite in a way that does not depend on the coordinate system. The quantities used to measure gravitational field strength are the scalar invariant curvatures of spacetime, which include a measure of the density of matter. Since such quantities become infinite at the singularity point, the laws of normal spacetime break down.[1][2]
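The Kretschmann scalar is one concrete scalar curvature invariant. For a Schwarzschild (non-rotating, uncharged) black hole it is K = 48G²M²/(c⁴r⁶) = 12r_s²/r⁶, where r_s = 2GM/c² is the Schwarzschild radius. The sketch below uses the Schwarzschild case as an illustration that is not drawn from the source. It shows that K stays finite at the event horizon r = r_s but grows without bound as r approaches 0, which is the coordinate-independent sign of the singularity. The constants are rounded SI values.

```python
G = 6.674e-11      # m^3 kg^-1 s^-2, gravitational constant (rounded)
C = 2.998e8        # m/s, speed of light (rounded)
M_SUN = 1.989e30   # kg, solar mass (rounded)


def schwarzschild_radius(mass_kg):
    """r_s = 2GM/c^2 in metres."""
    return 2.0 * G * mass_kg / C**2


def kretschmann(r_m, mass_kg):
    """Kretschmann scalar K = 12 r_s^2 / r^6 (units of m^-4) for a Schwarzschild mass."""
    rs = schwarzschild_radius(mass_kg)
    return 12.0 * rs**2 / r_m**6


rs = schwarzschild_radius(M_SUN)   # roughly 2.95 km for one solar mass
for fraction in (1.0, 0.1, 0.01, 0.001):
    print(f"r = {fraction:g} r_s   K = {kretschmann(fraction * rs, M_SUN):.3e} m^-4")
```

Each tenfold decrease in r multiplies K by a million. Because K is a scalar, no change of coordinates can remove this divergence, whereas the curvature at the horizon r = r_s is finite.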

https://en.wikipedia.org/wiki/Gravitational_singularity

Zero-point energy (ZPE) is the lowest possible energy that a quantum mechanical system may have. Unlike in classical mechanics, quantum systems constantly fluctuate in their lowest energy state, as described by the Heisenberg uncertainty principle.[1] As well as atoms and molecules, the empty space of the vacuum has these properties. According to quantum field theory, the universe can be thought of not as isolated particles but as continuous fluctuating fields: matter fields, whose quanta are fermions (i.e., leptons and quarks), and force fields, whose quanta are bosons (e.g., photons and gluons). All these fields have zero-point energy.[2] These fluctuating zero-point fields lead to a kind of reintroduction of an aether in physics,[1][3] since some systems can detect the existence of this energy. However, this aether cannot be thought of as a physical medium if it is to be Lorentz invariant, such that there is no contradiction with Einstein's theory of special relativity.[1]
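The standard textbook illustration of zero-point energy, used here as an example rather than taken from the source, is the quantum harmonic oscillator. Its energy levels are E_n = ħω(n + 1/2). Even the ground state n = 0 keeps energy ħω/2, whereas a classical oscillator can sit at rest with zero energy. The angular frequency below is a hypothetical value chosen only for illustration.

```python
HBAR = 1.054571817e-34  # J s, reduced Planck constant


def oscillator_energy(n, omega):
    """Energy (J) of level n of a quantum harmonic oscillator: hbar*omega*(n + 1/2)."""
    if n < 0:
        raise ValueError("n must be a non-negative integer")
    return HBAR * omega * (n + 0.5)


omega = 1.0e14                                       # rad/s, hypothetical value
zero_point = oscillator_energy(0, omega)             # hbar*omega/2, not zero
spacing = oscillator_energy(1, omega) - zero_point   # levels are hbar*omega apart
```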

https://en.wikipedia.org/wiki/Zero-point_energy

The quantum vacuum state or simply quantum vacuum refers to the quantum state with the lowest possible energy.

Quantum vacuum may also refer to:

- Quantum chromodynamic vacuum (QCD vacuum), a non-perturbative vacuum

- Quantum electrodynamic vacuum (QED vacuum), a field-theoretic vacuum

- Quantum vacuum (ground state), the state of lowest energy of a quantum system

- Quantum vacuum collapse, a hypothetical vacuum metastability event

- Quantum vacuum expectation value, an operator's average or expected value in a quantum vacuum

- Quantum vacuum energy, an underlying background energy that exists in space throughout the entire Universe

- The Quantum Vacuum, a physics textbook by Peter W. Milonni


https://en.wikipedia.org/wiki/Quantum_vacuum_(disambiguation)

In astronomy, the Zero Point in a photometric system is defined as the magnitude of an object that produces 1 count per second on the detector.[1] The zero point is used to calibrate a system to the standard magnitude system, since the flux detected from stars varies from detector to detector.[2] Traditionally, Vega is used as the calibration star for the zero point magnitude in specific pass bands (U, B, and V), although an average of multiple stars is often used for higher accuracy.[3] It is often impractical to find Vega in the sky to calibrate the detector, so for general purposes any star in the sky with a known apparent magnitude may be used.[4]
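With the zero point defined this way, the usual conversion from a measured count rate to a magnitude is m = ZP - 2.5 log10(counts per second). An object giving exactly 1 count per second therefore has m = ZP. Conversely, one star of known apparent magnitude fixes the zero point. The sketch below assumes background-subtracted, exposure-normalized count rates and ignores atmospheric extinction and colour terms. The function names and the numbers are hypothetical.

```python
import math


def magnitude(count_rate, zero_point):
    """Apparent magnitude from a count rate (counts/s): m = ZP - 2.5*log10(rate)."""
    if count_rate <= 0:
        raise ValueError("count rate must be positive")
    return zero_point - 2.5 * math.log10(count_rate)


def zero_point_from_reference(count_rate, known_magnitude):
    """Solve m = ZP - 2.5*log10(rate) for ZP using a star of known magnitude."""
    return known_magnitude + 2.5 * math.log10(count_rate)


# Calibrate on a reference star of known magnitude 10.0 that gives 1000 counts/s ...
zp = zero_point_from_reference(1000.0, 10.0)   # 10 + 2.5*3 = 17.5
# ... then measure a fainter target on the same detector.
target = magnitude(10.0, zp)                   # 17.5 - 2.5 = 15.0
```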

https://en.wikipedia.org/wiki/Zero_Point_(photometry)

In mathematics, the origin of a Euclidean space is a special point, usually denoted by the letter O, used as a fixed point of reference for the geometry of the surrounding space.

In physical problems, the choice of origin is often arbitrary, meaning any choice of origin will ultimately give the same answer. This allows one to pick an origin point that makes the mathematics as simple as possible, often by taking advantage of some kind of geometric symmetry.
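Here is one way to see why the choice of origin does not affect physical answers. Moving the origin subtracts the same vector from every position, so differences between positions, and hence distances, are unchanged. The sketch below uses hypothetical points. Placing the origin at the square's centre makes the coordinates symmetric (all ±1), which is the kind of simplification the paragraph describes.

```python
import math
from itertools import combinations


def shift_origin(points, new_origin):
    """Express 2-D points relative to a new origin."""
    ox, oy = new_origin
    return [(x - ox, y - oy) for x, y in points]


def pairwise_distances(points):
    """Distances between every pair of points, in a fixed order."""
    return [math.dist(p, q) for p, q in combinations(points, 2)]


# Corners of a square, first written relative to an arbitrary origin.
square = [(3.0, 4.0), (5.0, 4.0), (5.0, 6.0), (3.0, 6.0)]
centred = shift_origin(square, (4.0, 5.0))  # origin at the centre: coordinates are +/-1
before = pairwise_distances(square)
after = pairwise_distances(centred)         # identical to `before`
```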

https://en.wikipedia.org/wiki/Origin_(mathematics)

See also

- Zero-point energy, the minimum energy a quantum mechanical system may have

- Zero-point field, a synonym for the vacuum state in quantum field theory

- Hofstadter zero-point, a special point associated with every plane triangle

- Point of origin (disambiguation)

- Triple zero (disambiguation)

- Point Zero (disambiguation)

https://en.wikipedia.org/wiki/Zero_point

MissingNo. (Japanese: けつばん[1], Hepburn: Ketsuban), short for "Missing Number" and sometimes spelled without the period, is an unofficial Pokémon species found in the video games Pokémon Red and Blue. Due to the programming of certain in-game events, players can encounter MissingNo. via a glitch.

https://en.wikipedia.org/wiki/MissingNo.

In triangle geometry, a Hofstadter point is a special point associated with every plane triangle. In fact, there are several Hofstadter points associated with a triangle, all of which are triangle centers. Two of them, the Hofstadter zero-point and the Hofstadter one-point, are particularly interesting.[1] Both are transcendental triangle centers. The Hofstadter zero-point is the center designated X(360), and the Hofstadter one-point is the center designated X(359), in Clark Kimberling's Encyclopedia of Triangle Centers. The Hofstadter zero-point was discovered by Douglas Hofstadter in 1992.[1]
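The zero-point can be located numerically. The sketch below relies on the standard trilinear coordinates for these centers, stated here as background rather than taken from the text. The Hofstadter r-point has trilinears sin(rA)/sin(A - rA) : sin(rB)/sin(B - rB) : sin(rC)/sin(C - rC). The zero-point is its limit as r approaches 0 and has trilinears A/a : B/b : C/c. Multiplying trilinears by the side lengths gives barycentric weights, so the zero-point's barycentric weights are simply the angles A : B : C. Two sanity checks follow from these formulas: r = 1/2 gives trilinears 1 : 1 : 1, which is the incenter, and a very small r lands next to the zero-point. Function names are hypothetical.

```python
import math


def sides_and_angles(pa, pb, pc):
    """Side lengths (a, b, c) opposite vertices A, B, C, and the angles in radians."""
    a, b, c = math.dist(pb, pc), math.dist(pc, pa), math.dist(pa, pb)
    angle_a = math.acos((b * b + c * c - a * a) / (2 * b * c))  # law of cosines
    angle_b = math.acos((c * c + a * a - b * b) / (2 * c * a))
    angle_c = math.pi - angle_a - angle_b
    return (a, b, c), (angle_a, angle_b, angle_c)


def from_barycentric(weights, vertices):
    """Cartesian point for (unnormalized) barycentric weights on the vertices."""
    total = sum(weights)
    x = sum(w * v[0] for w, v in zip(weights, vertices)) / total
    y = sum(w * v[1] for w, v in zip(weights, vertices)) / total
    return x, y


def hofstadter_r_point(r, pa, pb, pc):
    """Hofstadter r-point for 0 < r < 1, via trilinears sin(rX)/sin(X - rX)."""
    sides, angles = sides_and_angles(pa, pb, pc)
    # Barycentric weight = side length * trilinear coordinate.
    weights = [s * math.sin(r * t) / math.sin(t - r * t)
               for s, t in zip(sides, angles)]
    return from_barycentric(weights, (pa, pb, pc))


def hofstadter_zero_point(pa, pb, pc):
    """Hofstadter zero-point: trilinears A/a : B/b : C/c, i.e. barycentrics A : B : C."""
    _, angles = sides_and_angles(pa, pb, pc)
    return from_barycentric(angles, (pa, pb, pc))


tri = ((0.0, 0.0), (4.0, 0.0), (1.0, 3.0))   # an arbitrary test triangle
zero_pt = hofstadter_zero_point(*tri)
near_zero = hofstadter_r_point(1e-6, *tri)   # should lie very close to zero_pt
half = hofstadter_r_point(0.5, *tri)         # should coincide with the incenter
```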

https://en.wikipedia.org/wiki/Hofstadter_points

In quantum field theory, the quantum vacuum state (also called the quantum vacuum or vacuum state) is the quantum state with the lowest possible energy. Generally, it contains no physical particles. The term zero-point field is sometimes used as a synonym for the vacuum state of an individual quantized field.

According to present-day understanding of what is called the vacuum state or the quantum vacuum, it is "by no means a simple empty space".[1][2] According to quantum mechanics, the vacuum state is not truly empty but instead contains fleeting electromagnetic waves and particles that pop into and out of the quantum field.[3][4][5]

The QED vacuum of quantum electrodynamics (or QED) was the first vacuum of quantum field theory to be developed. QED originated in the 1930s, and in the late 1940s and early 1950s it was reformulated by Feynman, Tomonaga and Schwinger, who jointly received the Nobel Prize for this work in 1965.[6] Today the electromagnetic and weak interactions are unified (at very high energies only) in the theory of the electroweak interaction.

The Standard Model is a generalization of the QED work to include all the known elementary particles and their interactions (except gravity). Quantum chromodynamics (or QCD) is the portion of the Standard Model that deals with strong interactions, and the QCD vacuum is the vacuum of quantum chromodynamics. It is an object of study at the Large Hadron Collider and the Relativistic Heavy Ion Collider, and is related to the so-called vacuum structure of strong interactions.[7]

https://en.wikipedia.org/wiki/Quantum_vacuum_state

In philosophical epistemology, there are two types of coherentism: the coherence theory of truth;[1] and the coherence theory of justification[2] (also known as epistemic coherentism).[3]

Coherent truth is divided between an anthropological approach, which applies only to localized networks ('true within a given sample of a population, given our understanding of the population'), and an approach that is judged on the basis of universals, such as categorical sets. The anthropological approach belongs more properly to the correspondence theory of truth, while the universal theories are a small development within analytic philosophy.

The coherentist theory of justification, which may be interpreted as relating to either theory of coherent truth, characterizes epistemic justification as a property of a belief only if that belief is a member of a coherent set. What distinguishes coherentism from other theories of justification is that the set is the primary bearer of justification.[4]

As an epistemological theory, coherentism opposes dogmatic foundationalism and also infinitism through its insistence on definitions. It also attempts to offer a solution to the regress argument that plagues correspondence theory. In an epistemological sense, it is a theory about how belief can be proof-theoretically justified.

Coherentism is a view about the structure and system of knowledge, or else justified belief. The coherentist's thesis is normally formulated in terms of a denial of its contrary, such as dogmatic foundationalism, which lacks a proof-theoretical framework, or correspondence theory, which lacks universalism. Counterfactualism, through a vocabulary developed by David K. Lewis and his many-worlds theory,[5] has been popular with philosophers but has also created widespread disbelief in universals among academics. Many difficulties lie between hypothetical coherence and its effective actualization. Coherentism claims, at a minimum, that not all knowledge and justified belief rest ultimately on a foundation of noninferential knowledge or justified belief. To defend this view, coherentists may argue that conjunctions (and) are more specific, and thus in some way more defensible, than disjunctions (or).

After responding to foundationalism, coherentists normally characterize their view positively. They replace the foundationalist metaphor of a building as a model for the structure of knowledge with different metaphors, such as one that models our knowledge on a ship at sea whose seaworthiness must be ensured by repairs to any part in need of it. This metaphor serves to explain the problem of incoherence, which was first raised in mathematics. Coherentists typically hold that justification is solely a function of some relationship between beliefs, none of which are privileged beliefs in the way maintained by dogmatic foundationalists. In this way universal truths are in closer reach. Different varieties of coherentism are individuated by the specific relationship between a system of knowledge and justified belief, which can be interpreted in terms of predicate logic, or ideally, proof theory.[6]

https://en.wikipedia.org/wiki/Coherentism

In epistemology, the coherence theory of truth regards truth as coherence within some specified set of sentences, propositions or beliefs. The model is contrasted with the correspondence theory of truth.