https://en.wikipedia.org/wiki/Triple_point

https://en.wikipedia.org/wiki/Tritium

https://en.wikipedia.org/wiki/Implosion

The proton–proton chain, also commonly referred to as the p–p chain, is one of two known sets of nuclear fusion reactions by which stars convert hydrogen to helium. It dominates in stars with masses less than or equal to that of the Sun,[2] whereas the CNO cycle, the other known set of reactions, is suggested by theoretical models to dominate in stars with masses greater than about 1.3 times that of the Sun.[3]

In general, proton–proton fusion can occur only if the kinetic energy (i.e. temperature) of the protons is high enough to overcome their mutual electrostatic repulsion.[4]
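For a sense of scale, the sketch below compares the classical electrostatic energy of two protons with the thermal energy k_B·T in the solar core. Both inputs are illustrative assumptions not taken from this article: fusion is taken to require an approach to about 1 fm, and the core temperature is taken as about 15 MK. Under these assumptions the thermal energy is roughly a thousand times smaller than the barrier, which is why the quantum tunneling described under the history of the theory is needed.

```python
# Rough comparison of the proton-proton Coulomb barrier with thermal energy.
# Assumptions (illustrative only): protons must approach to ~1 fm to fuse,
# and the solar core temperature is ~15 million kelvin.

COULOMB_CONST_MEV_FM = 1.439964      # e^2 / (4*pi*epsilon_0), in MeV*fm
BOLTZMANN_EV_PER_K = 8.617333262e-5  # Boltzmann constant, in eV/K


def coulomb_barrier_mev(separation_fm: float) -> float:
    """Electrostatic potential energy (MeV) of two protons separated by separation_fm."""
    return COULOMB_CONST_MEV_FM / separation_fm


def thermal_energy_kev(temperature_k: float) -> float:
    """Characteristic thermal energy k_B * T, in keV."""
    return BOLTZMANN_EV_PER_K * temperature_k / 1e3


barrier_mev = coulomb_barrier_mev(1.0)   # assumed ~1 fm separation
kt_kev = thermal_energy_kev(1.5e7)       # assumed ~15 MK core temperature
ratio = barrier_mev * 1e3 / kt_kev       # convert MeV to keV before dividing

print(f"Coulomb barrier ~ {barrier_mev:.2f} MeV")
print(f"k_B*T           ~ {kt_kev:.2f} keV")
print(f"barrier / kT    ~ {ratio:.0f}")
```

Only a tiny high-energy tail of protons comes anywhere near the barrier, so the classical picture alone would predict almost no fusion.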

In the Sun, deuteron-producing events are rare. Diprotons are the much more common result of proton–proton reactions within the star, and diprotons almost immediately decay back into two protons. Since the conversion of hydrogen to helium is slow, the complete conversion of the hydrogen initially in the core of the Sun is calculated to take more than ten billion years.[5]

Although sometimes called the "proton–proton chain reaction", it is not a chain reaction in the usual sense. In nuclear physics, a chain reaction usually designates a reaction whose product, such as the neutrons given off during fission, quickly induces another such reaction. The proton–proton chain is instead, like a decay chain, a series of reactions in which the product of one reaction is the starting material of the next. There are two main chains leading from hydrogen to helium in the Sun: one has five reactions, the other has six.

History of the theory

The theory that proton–proton reactions are the basic principle by which the Sun and other stars burn was advocated by Arthur Eddington in the 1920s. At the time, the temperature of the Sun was considered to be too low to overcome the Coulomb barrier. After the development of quantum mechanics, it was discovered that tunneling of the wavefunctions of the protons through the repulsive barrier allows for fusion at a lower temperature than the classical prediction.

In 1939, Hans Bethe attempted to calculate the rates of various reactions in stars. Starting with two protons combining to give a deuterium nucleus and a positron, he found what we now call Branch II of the proton–proton chain. But he did not consider the reaction of two ³He nuclei (Branch I), which we now know to be important.[6] This was part of the body of work in stellar nucleosynthesis for which Bethe won the Nobel Prize in Physics in 1967.

The proton–proton chain

The first step in all the branches is the fusion of two protons into a deuteron. As the protons fuse, one of them undergoes beta plus decay, converting into a neutron by emitting a positron and an electron neutrino[7] (though a small fraction of deuterium nuclei is produced by the "pep" reaction, see below):

- ¹H + ¹H → ²H + e⁺ + νe + 0.42 MeV

The positron will annihilate with an electron from the environment into two gamma rays. Including this annihilation and the energy of the neutrino, the net reaction, which is the same as the pep reaction (see below), has a Q value (released energy) of 1.442 MeV:[7]

- ¹H + ¹H + e⁻ → ²H + νe + 1.442 MeV

The relative amounts of energy going to the neutrino and to the other products are variable.

This is the rate-limiting reaction, and it is extremely slow because it proceeds through the weak nuclear force. The average proton in the core of the Sun waits 9 billion years before it successfully fuses with another proton. The cross-section of this reaction is too small to have been measured experimentally,[8] but it can be calculated from theory.[1]
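The 1.442 MeV Q value quoted above, and the roughly 0.42 MeV available before the positron annihilates (the maximum p–p neutrino energy cited in the pep section), can be checked from atomic masses. The sketch below is an illustration under stated assumptions: the mass values are rounded figures from standard atomic-mass tables, and it relies on the convention that atomic masses include the electrons, so that 2M(¹H) − M(²H) equals the nuclear-mass balance of the net reaction, with electron binding energies neglected.

```python
# Q values of the first p-p step from atomic masses (values rounded from
# standard atomic-mass tables; treat them as approximate inputs).
U_TO_MEV = 931.49410242        # energy equivalent of 1 u, in MeV
ELECTRON_MEV = 0.51099895      # electron rest energy, in MeV

ATOMIC_MASS_U = {
    "H1": 1.00782503223,       # hydrogen-1 atom
    "H2": 2.01410177812,       # deuterium atom
}


def q_pp_with_annihilation() -> float:
    """Q for 1H + 1H + e- -> 2H + nu_e (includes positron annihilation), in MeV.

    With atomic masses, 2*M(1H) - M(2H) = 2*m_p + m_e - m_d, which is the
    nuclear-mass balance of the net reaction.
    """
    return (2 * ATOMIC_MASS_U["H1"] - ATOMIC_MASS_U["H2"]) * U_TO_MEV


def q_pp_without_annihilation() -> float:
    """Q for 1H + 1H -> 2H + e+ + nu_e, in MeV.

    Equal to the net value minus the 2*m_e*c^2 released later when the
    positron annihilates with an electron.
    """
    return q_pp_with_annihilation() - 2 * ELECTRON_MEV


print(f"net (p + p + e-): {q_pp_with_annihilation():.3f} MeV")
print(f"first step only:  {q_pp_without_annihilation():.3f} MeV")
```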

After it is formed, the deuteron produced in the first stage can fuse with another proton to produce the light isotope of helium, ³He:

- ²H + ¹H → ³He + γ + 5.49 MeV

This process, mediated by the strong nuclear force rather than the weak force, is extremely fast by comparison to the first step. It is estimated that, under the conditions in the Sun's core, each newly created deuterium nucleus exists for only about one second before it is converted into helium-3.[1]

In the Sun, each helium-3 nucleus produced in these reactions exists for only about 400 years before it is converted into helium-4.[9] Once the helium-3 has been produced, there are four possible paths to generate ⁴He. In p–p I, helium-4 is produced by fusing two helium-3 nuclei; the p–p II and p–p III branches fuse ³He with pre-existing ⁴He to form beryllium-7, which undergoes further reactions to produce two helium-4 nuclei.

About 99% of the energy output of the Sun comes from the various p–p chains, with the other 1% coming from the CNO cycle. According to one model of the Sun, 83.3 percent of the ⁴He produced by the various p–p branches is produced via branch I, while p–p II produces 16.68 percent and p–p III 0.02 percent.[1]

Since half the neutrinos produced in branches II and III are produced in the first step (synthesis of a deuteron), only about 8.35 percent of neutrinos come from the later steps (see below), and about 91.65 percent are from deuteron synthesis. However, another solar model from around the same time gives only 7.14 percent of neutrinos from the later steps and 92.86 percent from the synthesis of deuterium nuclei.[10] The difference is apparently due to slightly different assumptions about the composition and metallicity of the Sun.
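The 8.35 percent figure follows from simple counting. The sketch below makes explicit an assumption implicit in the text: in every branch each helium-4 nucleus is accompanied by exactly two neutrinos. In branch I both come from deuteron synthesis; in branches II and III one comes from deuteron synthesis and one from a later step (⁷Be electron capture or ⁸B decay). pep neutrinos are counted as deuteron synthesis.

```python
def neutrino_split(branch_ii_pct: float, branch_iii_pct: float) -> tuple[float, float]:
    """Return (percent from deuteron synthesis, percent from later steps).

    Inputs are the percentages of helium-4 produced by branches II and III.
    Assumes two neutrinos per helium-4 in every branch, with exactly one of
    them coming from a later step in branches II and III.
    """
    later = (branch_ii_pct + branch_iii_pct) / 2
    return 100.0 - later, later


deuteron_pct, later_pct = neutrino_split(16.68, 0.02)
print(f"deuteron synthesis: {deuteron_pct:.2f}%, later steps: {later_pct:.2f}%")
```

Running the same counting backwards, the second model's 7.14 percent would correspond to branches II and III together producing about 14.28 percent of the helium-4.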

There is also the extremely rare p–p IV branch. Other even rarer reactions may occur. The rate of these reactions is very low due to very small cross-sections, or because the number of reacting particles is so low that any reactions that might happen are statistically insignificant.

The overall reaction is:

- 4 ¹H⁺ + 2 e⁻ → ⁴He²⁺ + 2 νe

releasing 26.73 MeV of energy, some of which is lost to the neutrinos.

The p–p I branch

- ³He + ³He → ⁴He + 2 ¹H + 12.859 MeV

The complete chain releases a net energy of 26.732 MeV,[11] but 2.2 percent of this energy (0.59 MeV) is lost to the neutrinos that are produced.[12]

The p–p I branch is dominant at temperatures of 10 to 18 MK.[13]

Below 10 MK, the p–p chain proceeds at a slow rate, resulting in a low production of ⁴He.[14]

The p–p II branch

- ³He + ⁴He → ⁷Be + γ + 1.59 MeV
- ⁷Be + e⁻ → ⁷Li + νe + 0.861 MeV / 0.383 MeV
- ⁷Li + ¹H → 2 ⁴He + 17.35 MeV

The p–p II branch is dominant at temperatures of 18 to 25 MK.[13]

Note that the energies in the second reaction above are the energies of the neutrinos produced by the reaction. Ninety percent of the neutrinos produced in the reaction of ⁷Be to ⁷Li carry an energy of 0.861 MeV, while the remaining 10 percent carry 0.383 MeV. The difference is whether the lithium-7 produced is in the ground state or an excited (metastable) state, respectively. The total energy released going from ⁷Be to stable ⁷Li is about 0.862 MeV, almost all of which is lost to the neutrino if the decay goes directly to the stable lithium.
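The 90/10 split above also fixes how much of the ⁷Be capture energy escapes on average. This is a minimal sketch using only the figures quoted in this section; it neglects the small recoil energy of the lithium nucleus.

```python
# Energy bookkeeping for 7Be + e- -> 7Li + nu_e, using the figures quoted above.
# The small recoil energy of the lithium nucleus is neglected.
Q_TOTAL_MEV = 0.862            # 7Be -> ground-state 7Li
NEUTRINO_LINES = [             # (fraction of decays, neutrino energy in MeV)
    (0.90, 0.861),             # decay to the ground state of 7Li
    (0.10, 0.383),             # decay to the excited state of 7Li
]

mean_neutrino_mev = sum(frac * energy for frac, energy in NEUTRINO_LINES)

# In the excited-state branch, the energy not carried off by the neutrino stays
# with the excited 7Li and is released as a gamma ray when it de-excites.
excited_remainder_mev = Q_TOTAL_MEV - NEUTRINO_LINES[1][1]

share_lost = mean_neutrino_mev / Q_TOTAL_MEV

print(f"mean neutrino energy:    {mean_neutrino_mev:.3f} MeV")
print(f"left in excited 7Li:     {excited_remainder_mev:.3f} MeV")
print(f"share lost to neutrinos: {100 * share_lost:.0f} %")
```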

The p–p III branch

- ³He + ⁴He → ⁷Be + γ + 1.59 MeV
- ⁷Be + ¹H → ⁸B + γ
- ⁸B → ⁸Be + e⁺ + νe
- ⁸Be → 2 ⁴He

The last three stages of this chain, plus the positron annihilation, contribute a total of 18.209 MeV, though much of this is lost to the neutrino.

The p–p III chain is dominant if the temperature exceeds 25 MK.[13]

The p–p III chain is not a major source of energy in the Sun, but it was very important in the solar neutrino problem because it generates very high energy neutrinos (up to 14.06 MeV).

The p–p IV (Hep) branch

This reaction is predicted theoretically, but it has never been observed due to its rarity (about 0.3 ppm in the Sun). In this reaction, helium-3 captures a proton directly to give helium-4, with an even higher possible neutrino energy (up to 18.8 MeV[citation needed]).

The mass–energy relationship gives 19.795 MeV for the energy released by this reaction plus the ensuing annihilation, some of which is lost to the neutrino.

Energy release

Comparing the mass of the final helium-4 atom with the masses of the four protons reveals that 0.7 percent of the mass of the original protons has been lost. This mass has been converted into energy, in the form of kinetic energy of produced particles, gamma rays, and neutrinos released during each of the individual reactions. The total energy yield of one whole chain is 26.73 MeV.
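The 26.73 MeV yield and the 0.7 percent mass loss can be reproduced from atomic masses. This sketch compares four hydrogen-1 atoms (rather than four bare protons, as the text phrases it) with one helium-4 atom. The choice is deliberate: 4M(¹H) − M(⁴He) equals 4m_p + 2m_e − m(⁴He nucleus), which is exactly the mass balance of the overall reaction and so includes the positron annihilation energy. The mass values are rounded figures from standard tables and should be treated as approximate inputs.

```python
# Energy released by the full p-p chain, from atomic masses (rounded values
# from standard atomic-mass tables; treat them as approximate inputs).
U_TO_MEV = 931.49410242      # energy equivalent of 1 u, in MeV
M_H1_U = 1.00782503223       # atomic mass of hydrogen-1, in u
M_HE4_U = 4.00260325413      # atomic mass of helium-4, in u


def mass_defect_u() -> float:
    """Mass lost when four hydrogen-1 atoms end up as one helium-4 atom, in u."""
    return 4 * M_H1_U - M_HE4_U


def chain_energy_mev() -> float:
    """Total energy released per helium-4, including annihilation and neutrinos."""
    return mass_defect_u() * U_TO_MEV


def fraction_of_mass_lost() -> float:
    """Mass defect as a fraction of the starting hydrogen mass."""
    return mass_defect_u() / (4 * M_H1_U)


print(f"energy per helium-4: {chain_energy_mev():.2f} MeV")
print(f"mass converted:      {100 * fraction_of_mass_lost():.2f} %")
```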

Energy released as gamma rays will interact with electrons and protons and heat the interior of the Sun. The kinetic energy of fusion products (e.g. of the two protons and the ⁴He from the p–p I reaction) also adds energy to the plasma in the Sun. This heating keeps the core of the Sun hot and prevents it from collapsing under its own weight, as it would if the Sun were to cool down.

Neutrinos do not interact significantly with matter, so they neither heat the interior nor help support the Sun against gravitational collapse. Their energy is lost: the neutrinos in the p–p I, p–p II, and p–p III chains carry away 2.0%, 4.0%, and 28.3% of the energy in those reactions, respectively.[15]

The following table calculates the amount of energy lost to neutrinos and the amount of "solar luminosity" coming from the three branches. "Luminosity" here means the amount of energy given off by the Sun as electromagnetic radiation rather than as neutrinos. The starting figures used are the ones given earlier in this article. The table concerns only the 99% of the power and neutrinos that come from the p–p reactions, not the 1% coming from the CNO cycle.

| Branch | Percent of helium-4 produced | Percent loss due to neutrino production | Relative amount of energy lost | Relative amount of luminosity produced | Percentage of total luminosity |
|---|---|---|---|---|---|
| Branch I | 83.3 | 2 | 1.67 | 81.6 | 83.6 |
| Branch II | 16.68 | 4 | 0.67 | 16.0 | 16.4 |
| Branch III | 0.02 | 28.3 | 0.0057 | 0.014 | 0.015 |
| Total | 100 | | 2.34 | 97.7 | 100 |
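The table's columns can be regenerated from its first two columns. This sketch reproduces the arithmetic under the table's own implicit assumption: every branch releases the same total energy per helium-4 nucleus, so a branch's share of helium-4 stands in for its share of energy.

```python
# Reproduce the luminosity table from the helium-4 shares and neutrino losses.
# Implicit assumption (as in the table): every branch releases the same total
# energy per helium-4 nucleus, so helium-4 share can stand in for energy share.
BRANCHES = {
    # name: (% of helium-4 produced, % of energy lost to neutrinos)
    "Branch I": (83.3, 2.0),
    "Branch II": (16.68, 4.0),
    "Branch III": (0.02, 28.3),
}


def luminosity_table(branches):
    """Return ({name: (energy lost, luminosity, % of total luminosity)}, total luminosity)."""
    rows = {}
    for name, (helium_pct, loss_pct) in branches.items():
        lost = helium_pct * loss_pct / 100.0       # relative energy lost to neutrinos
        rows[name] = (lost, helium_pct - lost)      # (lost, luminosity produced)
    total_lum = sum(lum for _, lum in rows.values())
    table = {
        name: (lost, lum, 100.0 * lum / total_lum)  # add share of total luminosity
        for name, (lost, lum) in rows.items()
    }
    return table, total_lum


table, total_lum = luminosity_table(BRANCHES)
for name, (lost, lum, share) in table.items():
    print(f"{name:<11} lost={lost:.4f} luminosity={lum:.3f} share={share:.3f}%")
print(f"total luminosity = {total_lum:.2f}")
```

Rounded to the precision shown in the table, the output matches each column, including the 2.34 total loss and 97.7 total luminosity.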

The PEP reaction

A deuteron can also be produced by the rare pep (proton–electron–proton) reaction, a form of electron capture:

- ¹H + e⁻ + ¹H → ²H + νe + 1.442 MeV

In the Sun, the frequency ratio of the pep reaction versus the p–p reaction is 1:400. However, the neutrinos released by the pep reaction are far more energetic: while neutrinos produced in the first step of the p–p reaction range in energy up to 0.42 MeV, the pep reaction produces sharp-energy-line neutrinos of 1.44 MeV. Detection of solar neutrinos from this reaction was reported by the Borexino collaboration in 2012.[16]

Both the pep and p–p reactions can be seen as two different Feynman representations of the same basic interaction, where the electron passes to the right side of the reaction as a positron. This is represented in the figure of proton–proton and electron-capture reactions in a star, available at the NDM'06 web site.[17]

References

- Int'l Conference on Neutrino and Dark Matter, 7 Sept 2006, Session 14.

External links

Media related to Proton-proton chain reaction at Wikimedia Commons

https://en.wikipedia.org/wiki/Proton%E2%80%93proton_chain

https://en.wikipedia.org/wiki/Nucleon_magnetic_moment

https://en.wikipedia.org/wiki/Coulomb%27s_law

The nucleon magnetic moments are the intrinsic magnetic dipole moments of the proton and neutron, symbols μp and μn. Atomic nuclei are made of protons and neutrons, nucleons that each behave as a small magnet. Their magnetic strengths are measured by their magnetic moments. The nucleons interact with normal matter through either the nuclear force or their magnetic moments, with the charged proton also interacting by the Coulomb force.

The proton's magnetic moment, surprisingly large, was directly measured in 1933, while the neutron was determined to have a magnetic moment by indirect methods in the mid 1930s. Luis Alvarez and Felix Bloch made the first accurate, direct measurement of the neutron's magnetic moment in 1940. The proton's magnetic moment is exploited to make measurements of molecules by proton nuclear magnetic resonance. The neutron's magnetic moment is exploited to probe the atomic structure of materials using scattering methods and to manipulate the properties of neutron beams in particle accelerators.

The existence of the neutron's magnetic moment and the large value for the proton magnetic moment indicate the nucleons are not elementary particles. For an elementary particle to have an intrinsic magnetic moment, it must have both spin and electric charge. The nucleons have spin ħ/2, but the neutron has no net charge. Their magnetic moments were puzzling and defied a valid explanation until the quark model for hadron particles was developed in the 1960s. The nucleons are composed of three quarks, and the magnetic moments of these elementary particles combine to give the nucleons their magnetic moments.

https://en.wikipedia.org/wiki/Magnets

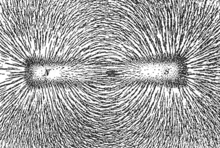

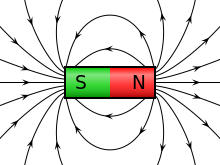

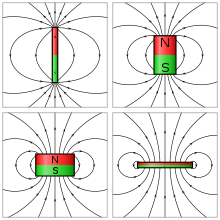

A magnet is a material or object that produces a magnetic field. This magnetic field is invisible but is responsible for the most notable property of a magnet: a force that pulls on other ferromagnetic materials, such as iron, steel, nickel and cobalt, and attracts or repels other magnets.

A permanent magnet is an object made from a material that is magnetized and creates its own persistent magnetic field. An everyday example is a refrigerator magnet used to hold notes on a refrigerator door. Materials that can be magnetized, which are also the ones that are strongly attracted to a magnet, are called ferromagnetic (or ferrimagnetic). These include the elements iron, nickel and cobalt and their alloys, some alloys of rare-earth metals, and some naturally occurring minerals such as lodestone. Although ferromagnetic (and ferrimagnetic) materials are the only ones attracted to a magnet strongly enough to be commonly considered magnetic, all other substances respond weakly to a magnetic field, by one of several other types of magnetism.

Ferromagnetic materials can be divided into magnetically "soft" materials like annealed iron, which can be magnetized but do not tend to stay magnetized, and magnetically "hard" materials, which do. Permanent magnets are made from "hard" ferromagnetic materials such as alnico and ferrite that are subjected to special processing in a strong magnetic field during manufacture to align their internal microcrystalline structure, making them very hard to demagnetize. To demagnetize a saturated magnet, a certain magnetic field must be applied, and this threshold depends on coercivity of the respective material. "Hard" materials have high coercivity, whereas "soft" materials have low coercivity. The overall strength of a magnet is measured by its magnetic moment or, alternatively, the total magnetic flux it produces. The local strength of magnetism in a material is measured by its magnetization.

An electromagnet is made from a coil of wire that acts as a magnet when an electric current passes through it but stops being a magnet when the current stops. Often, the coil is wrapped around a core of "soft" ferromagnetic material such as mild steel, which greatly enhances the magnetic field produced by the coil.

Discovery and development

Ancient people learned about magnetism from lodestones (or magnetite) which are naturally magnetized pieces of iron ore. The word magnet was adopted in Middle English from Latin magnetum "lodestone", ultimately from Greek μαγνῆτις [λίθος] (magnētis [lithos])[1] meaning "[stone] from Magnesia",[2] a place in Anatolia where lodestones were found (today Manisa in modern-day Turkey). Lodestones, suspended so they could turn, were the first magnetic compasses. The earliest known surviving descriptions of magnets and their properties are from Anatolia, India, and China around 2500 years ago.[3][4][5] The properties of lodestones and their affinity for iron were written of by Pliny the Elder in his encyclopedia Naturalis Historia.[6]

In the 11th century in China, it was discovered that quenching red hot iron in the Earth's magnetic field would leave the iron permanently magnetized. This led to the development of the navigational compass, as described in Dream Pool Essays in 1088.[7][8] By the 12th to 13th centuries AD, magnetic compasses were used in navigation in China, Europe, the Arabian Peninsula and elsewhere.[9]

A straight iron magnet tends to demagnetize itself by its own magnetic field. To overcome this, the horseshoe magnet was invented by Daniel Bernoulli in 1743.[7][10] A horseshoe magnet avoids demagnetization by returning the magnetic field lines to the opposite pole.[11]

In 1820, Hans Christian Ørsted discovered that a compass needle is deflected by a nearby electric current. In the same year André-Marie Ampère showed that iron can be magnetized by inserting it in an electrically fed solenoid. This led William Sturgeon to develop an iron-cored electromagnet in 1824.[7] Joseph Henry further developed the electromagnet into a commercial product in 1830–1831, giving people access to strong magnetic fields for the first time. In 1831 he built an ore separator with an electromagnet capable of lifting 750 pounds (340 kg).[12]

Physics

Magnetic field

The magnetic flux density (also called magnetic B field or just magnetic field, usually denoted B) is a vector field. The magnetic B field vector at a given point in space is specified by two properties:

- Its direction, which is along the orientation of a compass needle.

- Its magnitude (also called strength), which is proportional to how strongly the compass needle orients along that direction.

In SI units, the strength of the magnetic B field is given in teslas.[13]

Magnetic moment

A magnet's magnetic moment (also called magnetic dipole moment and usually denoted μ) is a vector that characterizes the magnet's overall magnetic properties. For a bar magnet, the direction of the magnetic moment points from the magnet's south pole to its north pole,[14] and the magnitude relates to how strong and how far apart these poles are. In SI units, the magnetic moment is specified in terms of A·m² (amperes times meters squared).

A magnet both produces its own magnetic field and responds to magnetic fields. The strength of the magnetic field it produces is at any given point proportional to the magnitude of its magnetic moment. In addition, when the magnet is put into an external magnetic field, produced by a different source, it is subject to a torque tending to orient the magnetic moment parallel to the field.[15] The amount of this torque is proportional both to the magnetic moment and the external field. A magnet may also be subject to a force driving it in one direction or another, according to the positions and orientations of the magnet and source. If the field is uniform in space, the magnet is subject to no net force, although it is subject to a torque.[16]

A wire in the shape of a circle with area A and carrying current I has a magnetic moment of magnitude equal to IA.
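The two relations in this subsection can be sketched together. The loop moment is I·A as stated above; for the torque, the sketch uses the standard vector form τ = μ × B, which is consistent with the proportionality described earlier but is not spelled out in this article. The current, radius and field values are hypothetical, chosen only to show that the torque is largest when the moment is perpendicular to the field and zero when it is parallel.

```python
import math

Vector = tuple[float, float, float]


def loop_moment(current_a: float, radius_m: float) -> float:
    """Magnitude (A*m^2) of the moment of a flat circular loop: I * A, with A = pi * r^2."""
    return current_a * math.pi * radius_m ** 2


def cross(a: Vector, b: Vector) -> Vector:
    """Cross product a x b of two 3-vectors."""
    return (
        a[1] * b[2] - a[2] * b[1],
        a[2] * b[0] - a[0] * b[2],
        a[0] * b[1] - a[1] * b[0],
    )


def torque(moment: Vector, field: Vector) -> Vector:
    """Torque (N*m) on a magnetic moment in a uniform field B: tau = mu x B."""
    return cross(moment, field)


mu = loop_moment(current_a=2.0, radius_m=0.05)  # hypothetical loop: 2 A, 5 cm radius
b = (0.0, 0.0, 0.5)                              # hypothetical uniform 0.5 T field along z

tau_perpendicular = torque((mu, 0.0, 0.0), b)    # moment perpendicular to B: maximal torque
tau_parallel = torque((0.0, 0.0, mu), b)         # moment parallel to B: zero torque
print(f"mu = {mu:.5f} A*m^2")
print("perpendicular:", tau_perpendicular)
print("parallel:     ", tau_parallel)
```

Because the field in this example is uniform, only a torque appears; there is no net force, as noted above.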

Magnetization

The magnetization of a magnetized material is the local value of its magnetic moment per unit volume, usually denoted M, with units A/m.[17] It is a vector field, rather than just a vector (like the magnetic moment), because different areas in a magnet can be magnetized with different directions and strengths (for example, because of domains, see below). A good bar magnet may have a magnetic moment of magnitude 0.1 A·m² and a volume of 1 cm³, or 1×10⁻⁶ m³, giving an average magnetization of magnitude 100,000 A/m. Iron can have a magnetization of around a million amperes per meter. Such a large value explains why iron magnets are so effective at producing magnetic fields.
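The bar-magnet example is a single division, but the unit conversion from cm³ to m³ is where it is easy to slip. A minimal sketch with the figures given in the paragraph:

```python
CM3_TO_M3 = 1e-6  # one cubic centimetre in cubic metres


def average_magnetization(moment_a_m2: float, volume_m3: float) -> float:
    """Average magnetization magnitude M = |m| / V, in A/m."""
    return moment_a_m2 / volume_m3


bar = average_magnetization(0.1, 1.0 * CM3_TO_M3)  # the bar-magnet figures above
iron = 1e6                                          # "around a million" A/m for iron
print(f"bar magnet: {bar:,.0f} A/m; iron is about {iron / bar:.0f}x larger")
```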

Modelling magnets

Two different models exist for magnets: magnetic poles and atomic currents.

Although for many purposes it is convenient to think of a magnet as having distinct north and south magnetic poles, the concept of poles should not be taken literally: it is merely a way of referring to the two different ends of a magnet. The magnet does not have distinct north or south particles on opposing sides. If a bar magnet is broken into two pieces, in an attempt to separate the north and south poles, the result will be two bar magnets, each of which has both a north and south pole. However, a version of the magnetic-pole approach is used by professional magneticians to design permanent magnets.[citation needed]

In this approach, the divergence of the magnetization ∇·M inside a magnet is treated as a distribution of magnetic monopoles. This is a mathematical convenience and does not imply that there are actually monopoles in the magnet. If the magnetic-pole distribution is known, then the pole model gives the magnetic field H. Outside the magnet, the field B is proportional to H, while inside the magnetization must be added to H. An extension of this method that allows for internal magnetic charges is used in theories of ferromagnetism.
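In SI units the relation described here is usually written B = μ0(H + M), which reduces to B = μ0H outside the material where M = 0. The sketch below treats field components along a single axis. The inside example is a hypothetical idealization: for a long, thin rod magnetized along its axis the demagnetizing field H inside is negligible, so B is set almost entirely by M; the outside H value is arbitrary.

```python
import math

# Vacuum permeability in T*m/A (classical value; the current SI value agrees
# to better than one part in a billion).
MU_0 = 4e-7 * math.pi


def b_from_h_and_m(h_a_per_m: float, m_a_per_m: float = 0.0) -> float:
    """B = mu_0 * (H + M) in tesla, for field components along one axis.

    Outside the material M = 0, so B is simply mu_0 * H.
    """
    return MU_0 * (h_a_per_m + m_a_per_m)


# Hypothetical idealization: long thin rod, H ~ 0 inside, M = 100,000 A/m
# (the average magnetization of the bar-magnet example in this article).
b_inside = b_from_h_and_m(0.0, 1e5)
b_outside = b_from_h_and_m(1e3)  # an arbitrary H of 1,000 A/m in free space
print(f"inside: {b_inside:.3f} T, outside: {b_outside * 1e3:.3f} mT")
```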

Another model is the Ampère model, where all magnetization is due to the effect of microscopic, or atomic, circular bound currents, also called Ampèrian currents, throughout the material. For a uniformly magnetized cylindrical bar magnet, the net effect of the microscopic bound currents is to make the magnet behave as if there is a macroscopic sheet of electric current flowing around the surface, with local flow direction normal to the cylinder axis.[18] Microscopic currents in atoms inside the material are generally canceled by currents in neighboring atoms, so only the surface makes a net contribution; shaving off the outer layer of a magnet will not destroy its magnetic field, but will leave a new surface of uncancelled currents from the circular currents throughout the material.[19] The right-hand rule gives the direction in which positive (conventional) current flows. However, in practice, currents carried by negatively charged particles are far more common.[citation needed]

Polarity

The north pole of a magnet is defined as the pole that, when the magnet is freely suspended, points towards the Earth's North Magnetic Pole in the Arctic (the magnetic and geographic poles do not coincide, see magnetic declination). Since opposite poles (north and south) attract, the North Magnetic Pole is actually the south pole of the Earth's magnetic field.[20][21][22][23] As a practical matter, to tell which pole of a magnet is north and which is south, it is not necessary to use the Earth's magnetic field at all. For example, one method would be to compare it to an electromagnet, whose poles can be identified by the right-hand rule. The magnetic field lines of a magnet are considered by convention to emerge from the magnet's north pole and reenter at the south pole.[23]

Magnetic materials

The term magnet is typically reserved for objects that produce their own persistent magnetic field even in the absence of an applied magnetic field. Only certain classes of materials can do this. Most materials, however, produce a magnetic field in response to an applied magnetic field – a phenomenon known as magnetism. There are several types of magnetism, and all materials exhibit at least one of them.

The overall magnetic behavior of a material can vary widely, depending on the structure of the material, particularly on its electron configuration. Several forms of magnetic behavior have been observed in different materials, including:

- Ferromagnetic and ferrimagnetic materials are the ones normally thought of as magnetic; they are attracted to a magnet strongly enough that the attraction can be felt. These materials are the only ones that can retain magnetization and become magnets; a common example is a traditional refrigerator magnet. Ferrimagnetic materials, which include ferrites and the longest-used, naturally occurring magnetic materials, magnetite and lodestone, are similar to but weaker than ferromagnetic materials. The difference between ferro- and ferrimagnetic materials is related to their microscopic structure, as explained in Magnetism.

- Paramagnetic substances, such as platinum, aluminum, and oxygen, are weakly attracted to either pole of a magnet. This attraction is hundreds of thousands of times weaker than that of ferromagnetic materials, so it can only be detected by using sensitive instruments or using extremely strong magnets. Magnetic ferrofluids, although they are made of tiny ferromagnetic particles suspended in liquid, are sometimes considered paramagnetic since they cannot be magnetized.

- Diamagnetic means repelled by both poles. Compared to paramagnetic and ferromagnetic substances, diamagnetic substances, such as carbon, copper, water, and plastic, are even more weakly repelled by a magnet. The permeability of diamagnetic materials is less than the permeability of a vacuum. All substances not possessing one of the other types of magnetism are diamagnetic; this includes most substances. Although force on a diamagnetic object from an ordinary magnet is far too weak to be felt, using extremely strong superconducting magnets, diamagnetic objects such as pieces of lead and even mice[24] can be levitated, so they float in mid-air. Superconductors repel magnetic fields from their interior and are strongly diamagnetic.

There are various other types of magnetism, such as spin glass, superparamagnetism, superdiamagnetism, and metamagnetism.

Common uses

- Magnetic recording media: VHS tapes contain a reel of magnetic tape. The information that makes up the video and sound is encoded on the magnetic coating on the tape. Common audio cassettes also rely on magnetic tape. Similarly, in computers, floppy disks and hard disks record data on a thin magnetic coating.[25]

- Credit, debit, and automatic teller machine cards: All of these cards have a magnetic strip on one side. This strip encodes the information to contact an individual's financial institution and connect with their account(s).[26]

- Older types of televisions (non flat screen) and older large computer monitors: TV and computer screens containing a cathode ray tube employ an electromagnet to guide electrons to the screen.[27]

- Speakers and microphones: Most speakers employ a permanent magnet and a current-carrying coil to convert electric energy (the signal) into mechanical energy (movement that creates the sound). The coil is wrapped around a bobbin attached to the speaker cone and carries the signal as changing current that interacts with the field of the permanent magnet. The voice coil feels a magnetic force and in response, moves the cone and pressurizes the neighboring air, thus generating sound. Dynamic microphones employ the same concept, but in reverse. A microphone has a diaphragm or membrane attached to a coil of wire. The coil rests inside a specially shaped magnet. When sound vibrates the membrane, the coil is vibrated as well. As the coil moves through the magnetic field, a voltage is induced across the coil. This voltage drives a current in the wire that is characteristic of the original sound.

- Electric guitars use magnetic pickups to transduce the vibration of guitar strings into electric current that can then be amplified. This is different from the principle behind the speaker and dynamic microphone because the vibrations are sensed directly by the magnet, and a diaphragm is not employed. The Hammond organ used a similar principle, with rotating tonewheels instead of strings.

- Electric motors and generators: Some electric motors rely upon a combination of an electromagnet and a permanent magnet, and, much like loudspeakers, they convert electric energy into mechanical energy. A generator is the reverse: it converts mechanical energy into electric energy by moving a conductor through a magnetic field.

- Medicine: Hospitals use magnetic resonance imaging to spot problems in a patient's organs without invasive surgery.

- Chemistry: Chemists use nuclear magnetic resonance to characterize synthesized compounds.

- Magnetic chucks are used in metalworking to hold workpieces. Magnets are also used in other types of fastening devices, such as the magnetic base, the magnetic clamp and the refrigerator magnet.

- Compasses: A compass (or mariner's compass) is a magnetized pointer free to align itself with a magnetic field, most commonly Earth's magnetic field.

- Art: Vinyl magnet sheets may be attached to paintings, photographs, and other ornamental articles, allowing them to be attached to refrigerators and other metal surfaces. Objects and paint can be applied directly to the magnet surface to create collage pieces of art. Metal magnetic boards, strips, doors, microwave ovens, dishwashers, cars, metal I-beams, and any other metal surface can be used to display magnetic vinyl art.

- Science projects: Many science-project topics are based on magnets, including the repulsion of current-carrying wires, the effect of temperature, and motors involving magnets.[28]

- Toys: Given their ability to counteract the force of gravity at close range, magnets are often employed in children's toys, such as the Magnet Space Wheel and Levitron, to amusing effect.

- Refrigerator magnets are used to adorn kitchens, as a souvenir, or simply to hold a note or photo to the refrigerator door.

- Magnets can be used to make jewelry. Necklaces and bracelets can have a magnetic clasp, or may be constructed entirely from a linked series of magnets and ferrous beads.

- Magnets can pick up magnetic items (iron nails, staples, tacks, paper clips) that are either too small, too hard to reach, or too thin for fingers to hold. Some screwdrivers are magnetized for this purpose.

- Magnets can be used in scrap and salvage operations to separate magnetic metals (iron, cobalt, and nickel) from non-magnetic metals (aluminum, non-ferrous alloys, etc.). The same idea can be used in the so-called "magnet test", in which a car chassis is inspected with a magnet to detect areas repaired using fiberglass or plastic putty.

- Magnets are found in process industries, food manufacturing especially, in order to remove metal foreign bodies from materials entering the process (raw materials) or to detect a possible contamination at the end of the process and prior to packaging. They constitute an important layer of protection for the process equipment and for the final consumer.[29]

- Magnetic levitation transport, or maglev, is a form of transportation that suspends, guides and propels vehicles (especially trains) through electromagnetic force. Eliminating rolling resistance increases efficiency. The maximum recorded speed of a maglev train is 581 kilometers per hour (361 mph).

- Magnets may be used to serve as a fail-safe device for some cable connections. For example, the power cords of some laptops are magnetic to prevent accidental damage to the port when tripped over. The MagSafe power connection to the Apple MacBook is one such example.

Medical issues and safety

Because human tissues have a very low level of susceptibility to static magnetic fields, there is little mainstream scientific evidence showing a health effect associated with exposure to static fields. Dynamic magnetic fields may be a different issue, however; correlations between electromagnetic radiation and cancer rates have been postulated due to demographic correlations (see Electromagnetic radiation and health).

If a ferromagnetic foreign body is present in human tissue, an external magnetic field interacting with it can pose a serious safety risk.[30]

A different type of indirect magnetic health risk involves pacemakers. If a pacemaker has been embedded in a patient's chest (usually to monitor and regulate the heart for steady, electrically induced beats), care should be taken to keep it away from magnetic fields. For this reason, a patient with such a device generally cannot be examined with a magnetic resonance imaging device.

Children sometimes swallow small magnets from toys, and this can be hazardous if two or more magnets are swallowed, as the magnets can pinch or puncture internal tissues.[31]

Magnetic resonance imaging (MRI) machines generate enormous magnetic fields, so rooms intended to hold them exclude ferrous metals. Bringing ferrous objects (such as oxygen canisters) into such a room creates a severe safety risk, because the intense field can pull them violently toward the magnet.

Magnetizing ferromagnets

Ferromagnetic materials can be magnetized in the following ways:

- Heating the object higher than its Curie temperature, allowing it to cool in a magnetic field and hammering it as it cools. This is the most effective method and is similar to the industrial processes used to create permanent magnets.

- Placing the item in an external magnetic field: the item retains some of the magnetism after it is removed. Vibration has been shown to increase the effect. Ferrous materials aligned with the Earth's magnetic field and subject to vibration (e.g., the frame of a conveyor) have been shown to acquire significant residual magnetism. Likewise, striking a steel nail held in a north–south direction with a hammer will temporarily magnetize the nail.

- Stroking: An existing magnet is moved from one end of the item to the other repeatedly in the same direction (single touch method) or two magnets are moved outwards from the center of a third (double touch method).[32]

- Electric current: The magnetic field produced by passing an electric current through a coil can align the domains. Once all of the domains are aligned (saturation), increasing the current will not increase the magnetization further.[33]

Demagnetizing ferromagnets

Magnetized ferromagnetic materials can be demagnetized (or degaussed) in the following ways:

- Heating a magnet past its Curie temperature; the thermal motion of the atoms destroys the alignment of the magnetic domains. This always removes all magnetization.

- Placing the magnet in an alternating magnetic field with intensity above the material's coercivity, and then either slowly drawing the magnet out or slowly decreasing the field to zero. This is the principle used in commercial demagnetizers for tools, in erasers for credit cards and hard disks, and in the degaussing coils used to demagnetize CRTs.

- Some demagnetization or reverse magnetization will occur if any part of the magnet is subjected to a reverse field above the magnetic material's coercivity.

- Demagnetization progressively occurs if the magnet is subjected to cyclic fields strong enough to move its operating point off the linear part of the second quadrant of the material's B–H curve (the demagnetization curve).

- Hammering or jarring: mechanical disturbance tends to randomize the magnetic domains and reduce magnetization of an object, but may cause unacceptable damage.
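The alternating-field method in the list above can be illustrated with a toy model. The sketch below is an assumption, not a description of any real material: it treats the magnet as an ensemble of ideal, symmetric rectangular hysteresis elements (a simplified Preisach-type model), each of which switches to +1 or −1 when the applied field exceeds its own coercive field. The function names, the evenly spread coercivities and the linear amplitude ramp are all hypothetical choices.

```python
def final_magnetization(coercivities, fields):
    """Net normalized magnetization after applying a field sequence to an
    ensemble of symmetric rectangular hysteresis elements (toy Preisach-type
    model). Every element starts saturated in the +1 state."""
    states = [1] * len(coercivities)
    for h in fields:
        for i, hc in enumerate(coercivities):
            if h >= hc:
                states[i] = 1
            elif h <= -hc:
                states[i] = -1
            # otherwise |h| < hc and the element keeps its previous state
    return sum(states) / len(states)


def decaying_ac_field(h_max, steps):
    """Alternating field whose amplitude ramps linearly from h_max to zero,
    starting with a reverse (negative) half-cycle."""
    return [(-1) ** (k + 1) * h_max * (1 - k / steps) for k in range(steps + 1)]


# Hypothetical ensemble: coercive fields spread evenly over (0, 1), arbitrary units.
n = 1000
coercivities = [(i + 0.5) / n for i in range(n)]

# Slowly decaying AC field whose first peak exceeds the largest coercivity.
m_ac = final_magnetization(coercivities, decaying_ac_field(1.2, 100))

# For contrast: one reverse pulse of the same peak strength, then switch-off.
m_pulse = final_magnetization(coercivities, [-1.2, 0.0])

print(f"after slow AC decay:     {m_ac:+.3f}")
print(f"after one reverse pulse: {m_pulse:+.3f}")
```

In this model a slowly decaying alternating field leaves successive bands of coercivity magnetized in opposite directions, so they nearly cancel, whereas a single strong reverse pulse merely reverses the magnet. This is why the field is reduced gradually rather than switched off abruptly.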

Types of permanent magnets

Magnetic metallic elements

Many materials have unpaired electron spins, and the majority of these materials are paramagnetic. When the spins interact with each other in such a way that they align spontaneously, the materials are called ferromagnetic (what is often loosely termed "magnetic"). Because of the way their regular crystalline atomic structure causes their spins to interact, some metals and minerals are ferromagnetic in their natural states. These include iron ore (magnetite or lodestone), cobalt and nickel, as well as the rare-earth metals gadolinium (below about 20 °C) and dysprosium (only at very low temperatures). Such naturally occurring ferromagnets were used in the first experiments with magnetism. Technology has since expanded the availability of magnetic materials to include various man-made products, all based, however, on naturally magnetic elements.

Composites

Ceramic, or ferrite, magnets are made of a sintered composite of powdered iron oxide and barium/strontium carbonate ceramic. Given the low cost of the materials and manufacturing methods, inexpensive magnets (or non-magnetized ferromagnetic cores, for use in electronic components such as portable AM radio antennas) of various shapes can be easily mass-produced. The resulting magnets are non-corroding but brittle and must be treated like other ceramics.

Alnico magnets are made by casting or sintering a combination of aluminium, nickel and cobalt with iron and small amounts of other elements added to enhance the properties of the magnet. Sintering offers superior mechanical characteristics, whereas casting delivers higher magnetic fields and allows for the design of intricate shapes. Alnico magnets resist corrosion and have physical properties more forgiving than ferrite, but not quite as desirable as a metal. Trade names for alloys in this family include: Alni, Alcomax, Hycomax, Columax, and Ticonal.[34]

Injection-molded magnets are a composite of various types of resin and magnetic powders, allowing parts of complex shapes to be manufactured by injection molding. The physical and magnetic properties of the product depend on the raw materials, but are generally lower in magnetic strength and resemble plastics in their physical properties.

Flexible magnets

Flexible magnets are composed of a high-coercivity ferromagnetic compound (usually ferric oxide) mixed with a resinous polymer binder.[35] The mixture is extruded as a sheet and passed over a line of powerful cylindrical permanent magnets. These magnets are stacked on a rotating shaft with alternating magnetic poles facing up (N, S, N, S...), so the plastic sheet is magnetized in alternating lines of north and south poles. Only permanent magnets are used in this step; no electromagnet is involved. The pole-to-pole distance is on the order of 5 mm, but varies with manufacturer. These magnets are lower in magnetic strength but can be very flexible, depending on the binder used.[36]

For magnetic compounds that are vulnerable to grain-boundary corrosion (e.g. Nd₂Fe₁₄B), the polymer binder provides additional protection.[35]

Rare-earth magnets

Rare earth (lanthanoid) elements have a partially occupied f electron shell (which can accommodate up to 14 electrons). The spin of these electrons can be aligned, resulting in very strong magnetic fields, and therefore, these elements are used in compact high-strength magnets where their higher price is not a concern. The most common types of rare-earth magnets are samarium–cobalt and neodymium–iron–boron (NIB) magnets.

Single-molecule magnets (SMMs) and single-chain magnets (SCMs)

In the 1990s, it was discovered that certain molecules containing paramagnetic metal ions are capable of storing a magnetic moment at very low temperatures. These are very different from conventional magnets, which store information at the level of magnetic domains, and could in theory provide a far denser storage medium. Research on monolayers of SMMs is under way in this direction. Briefly, the two main attributes of an SMM are:

- a large ground state spin value (S), which is provided by ferromagnetic or ferrimagnetic coupling between the paramagnetic metal centres

- a negative value of the anisotropy of the zero field splitting (D)
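Why these two attributes matter can be sketched with the simple giant-spin picture, which the text does not spell out and which is stated here as an assumption: the zero-field-split levels of the ground state have energies E(M_S) = D·M_S² for M_S = −S, …, +S. A negative D makes M_S = ±S the lowest levels, separated by an energy barrier of |D|S² for integer S, or |D|(S² − 1/4) for half-integer S, so both a large S and a negative D raise the barrier to magnetization reversal. The helper name and the example values of S and D are illustrative, not data for a specific molecule.

```python
def reversal_barrier(spin: float, d: float) -> float:
    """Energy barrier between the M_S = +S and M_S = -S levels in the simple
    giant-spin model E(M_S) = D * M_S**2 (result in the same units as D).

    A positive result means a barrier exists; a result <= 0 means the +/-S
    levels are not the ground states (D >= 0), so there is no barrier."""
    if spin < 0 or 2 * spin != int(2 * spin):
        raise ValueError("spin must be a non-negative integer or half-integer")
    levels = [spin - k for k in range(int(2 * spin) + 1)]   # S, S-1, ..., -S
    energies = {m: d * m * m for m in levels}
    top = energies[min(levels, key=abs)]   # level with |M_S| closest to zero
    ground = energies[spin]                # M_S = +S (degenerate with -S)
    return top - ground


print(reversal_barrier(10, -0.5))    # integer spin: |D| S^2 -> 50.0
print(reversal_barrier(2.5, -1.0))   # half-integer spin: |D| (S^2 - 1/4) -> 6.0
```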

Most SMMs contain manganese, but SMMs based on vanadium, iron, nickel and cobalt clusters are also known. More recently, it has been found that some chain systems can also display a magnetization that persists for long times at higher temperatures. These systems have been called single-chain magnets.

Nano-structured magnets

Some nano-structured materials exhibit spin waves whose quanta, called magnons, coalesce into a common ground state in the manner of a Bose–Einstein condensate.[37][38]

Rare-earth-free permanent magnets

The United States Department of Energy has identified a need to find substitutes for rare-earth metals in permanent-magnet technology, and has begun funding such research. The Advanced Research Projects Agency-Energy (ARPA-E) has sponsored a Rare Earth Alternatives in Critical Technologies (REACT) program to develop alternative materials. In 2011, ARPA-E awarded 31.6 million dollars to fund Rare-Earth Substitute projects.[39]

Costs

Allowing for field strength, the cheapest permanent magnets currently available are flexible and ceramic magnets, but these are also among the weakest types. Ferrite magnets are low-cost mainly because they are made from cheap raw materials: iron oxide and Ba- or Sr-carbonate. However, a new low-cost magnet, the Mn–Al alloy,[35][non-primary source needed][40] has been developed and is now dominating the low-cost magnet field.[citation needed] It has a higher saturation magnetization than ferrite magnets. It also has more favorable temperature coefficients, although it can be thermally unstable. Neodymium–iron–boron (NIB) magnets are among the strongest. These cost more per kilogram than most other magnetic materials but, owing to their intense field, are smaller and cheaper in many applications.[41]

Temperature

Temperature sensitivity varies, but when a magnet is heated to a temperature known as the Curie point, it loses all of its magnetism, even after cooling below that temperature. The magnets can often be remagnetized, however.

Additionally, some magnets are brittle and can fracture at high temperatures.

The maximum usable temperature is highest for alnico magnets at over 540 °C (1,000 °F), around 300 °C (570 °F) for ferrite and SmCo, about 140 °C (280 °F) for NIB and lower for flexible ceramics, but the exact numbers depend on the grade of material.

Electromagnets

An electromagnet, in its simplest form, is a wire that has been coiled into one or more loops, known as a solenoid. When electric current flows through the wire, a magnetic field is generated. It is concentrated near (and especially inside) the coil, and its field lines are very similar to those of a magnet. The orientation of this effective magnet is determined by the right-hand rule. The magnetic moment of the electromagnet is proportional to the number of loops of wire, to the cross-sectional area of each loop, and to the current passing through the wire, while the field inside a long coil is proportional to the number of loops per unit length and to the current.[42]

If the coil of wire is wrapped around a material with no special magnetic properties (e.g., cardboard), it will generate a very weak field. However, if it is wrapped around a soft ferromagnetic material, such as an iron nail, the field strength can increase several hundred- to a thousandfold.
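The scaling described in the two paragraphs above can be made concrete with textbook idealizations, which are assumptions here: the magnetic moment of an N-turn circular coil is m = NIA, and the field inside a long solenoid of length ℓ is B ≈ μ0 μr N I / ℓ, with μr = 1 for an air or cardboard core and a constant μr for an ideal ferromagnetic core that fills the coil and stays unsaturated. Real cores saturate, and open-ended rod cores gain much less than μr, so the core factor here is only an upper-bound illustration. The coil dimensions and the μr value are hypothetical.

```python
import math

MU0 = 4 * math.pi * 1e-7  # permeability of free space, T·m/A


def coil_moment(turns: int, current: float, radius: float) -> float:
    """Magnetic moment m = N I A (A·m²) of a circular coil of given radius (m)."""
    return turns * current * math.pi * radius ** 2


def solenoid_field(turns: int, current: float, length: float, mu_r: float = 1.0) -> float:
    """Idealized field (T) inside a long solenoid: B = mu0 * mu_r * N * I / length.
    Ignores end effects, core saturation and demagnetization of open cores."""
    return MU0 * mu_r * turns * current / length


# Hypothetical coil: 100 turns, 2 A, 1 cm radius, 10 cm long.
m = coil_moment(100, 2.0, 0.01)
b_air = solenoid_field(100, 2.0, 0.10)              # non-magnetic core
b_iron = solenoid_field(100, 2.0, 0.10, mu_r=500)   # assumed ideal soft-iron core

print(f"m = {m:.4f} A·m², B(air) = {b_air * 1e3:.2f} mT, B(iron) = {b_iron:.2f} T")
```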

Uses for electromagnets include particle accelerators, electric motors, junkyard cranes, and magnetic resonance imaging machines. Some applications use configurations more complex than a simple magnetic dipole; for example, quadrupole and sextupole magnets are used to focus particle beams.

Units and calculations

For most engineering applications, MKS (rationalized) or SI (Système International) units are commonly used. Two other sets of units, Gaussian and CGS-EMU, are the same for magnetic properties and are commonly used in physics.[citation needed]

In all units, it is convenient to employ two types of magnetic field, B and H, as well as the magnetization M, defined as the magnetic moment per unit volume.

- The magnetic induction field B is given in SI units of teslas (T). B is the magnetic field whose time variation produces, by Faraday's law, circulating electric fields (which the power companies sell). B also produces a deflection force on moving charged particles (as in TV tubes). The tesla is equivalent to the magnetic flux (in webers) per unit area (in square meters), thus giving B the unit of a flux density. In CGS, the unit of B is the gauss (G). One tesla equals 10⁴ G.

- The magnetic field H is given in SI units of ampere-turns per meter (A-turn/m). The turns appear because when H is produced by a current-carrying wire, its value is proportional to the number of turns of that wire. In CGS, the unit of H is the oersted (Oe). One A-turn/m equals 4π×10⁻³ Oe.

- The magnetization M is given in SI units of amperes per meter (A/m). In CGS, M is expressed in emu/cm³ (the product 4πM is often quoted in gauss). One A/m equals 10⁻³ emu/cm³. A good permanent magnet can have a magnetization as large as a million amperes per meter.

- In SI units, the relation B = μ0(H + M) holds, where μ0 is the permeability of free space, approximately 4π×10⁻⁷ T·m/A. In CGS, it is written as B = H + 4πM. (The pole approach gives μ0H in SI units. Inside the magnet, a μ0M term must be added to this μ0H to give the correct field B, which then agrees with the field B calculated using Ampèrian currents.)
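As a consistency check on the SI-to-CGS conversion factors for B, H and M, the sketch below converts H and M from SI to CGS and confirms that B = μ0(H + M), expressed in gauss, equals H + 4πM computed directly in CGS. The function names and example values are illustrative; μ0 = 4π×10⁻⁷ T·m/A is used as in the text.

```python
import math

MU0 = 4 * math.pi * 1e-7  # T·m/A, the value used in the text


def tesla_to_gauss(b_tesla: float) -> float:
    return b_tesla * 1e4                      # 1 T = 10^4 G


def h_si_to_oersted(h_a_per_m: float) -> float:
    return h_a_per_m * 4 * math.pi * 1e-3     # 1 A/m = 4π×10^-3 Oe


def m_si_to_emu_per_cm3(m_a_per_m: float) -> float:
    return m_a_per_m * 1e-3                   # 1 A/m = 10^-3 emu/cm^3


def b_si(h: float, m: float) -> float:
    """B in tesla from H and M in A/m: B = mu0 (H + M)."""
    return MU0 * (h + m)


def b_cgs(h_oe: float, m_emu: float) -> float:
    """B in gauss from H in Oe and M in emu/cm^3: B = H + 4 pi M."""
    return h_oe + 4 * math.pi * m_emu


h, m = 1.0e3, 1.0e6   # example values in A/m (M comparable to a good permanent magnet)
print(tesla_to_gauss(b_si(h, m)), b_cgs(h_si_to_oersted(h), m_si_to_emu_per_cm3(m)))
```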

Materials that are not permanent magnets usually satisfy the relation M = χH in SI, where χ is the (dimensionless) magnetic susceptibility. Most non-magnetic materials have a relatively small χ (on the order of a millionth), but soft magnets can have χ on the order of hundreds or thousands. For materials satisfying M = χH, we can also write B = μ0(1 + χ)H = μ0μrH = μH, where μr = 1 + χ is the (dimensionless) relative permeability and μ = μ0μr is the magnetic permeability. Both hard and soft magnets have a more complex, history-dependent behavior described by hysteresis loops, which give either B vs. H or M vs. H. In CGS, M = χH as well, but χ(SI) = 4πχ(CGS), and μ = μr.
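For the linear case M = χH, the relations B = μ0(1 + χ)H, μr = 1 + χ and χ(SI) = 4πχ(CGS) can be written as a few small helpers. This is a sketch for illustration only: it assumes a linear, non-hysteretic material, so it does not apply to the history-dependent behavior of hard or soft magnets on their hysteresis loops. The function names and example values are hypothetical.

```python
import math

MU0 = 4 * math.pi * 1e-7  # T·m/A


def relative_permeability(chi_si: float) -> float:
    """mu_r = 1 + chi for a linear material (SI susceptibility)."""
    return 1.0 + chi_si


def b_linear(h: float, chi_si: float) -> float:
    """B (T) in a linear material: B = mu0 (1 + chi) H, with H in A/m."""
    return MU0 * relative_permeability(chi_si) * h


def chi_cgs_from_si(chi_si: float) -> float:
    """chi_SI = 4 pi chi_CGS, so chi_CGS = chi_SI / (4 pi)."""
    return chi_si / (4 * math.pi)


h = 100.0  # A/m, hypothetical applied field
for label, chi in [("weakly magnetic, chi ~ 1e-6", 1e-6), ("soft magnet, chi ~ 1000", 999.0)]:
    print(f"{label}: mu_r = {relative_permeability(chi)}, B = {b_linear(h, chi):.3e} T")
```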

Caution: in part because there are not enough Roman and Greek symbols, there is no commonly agreed-upon symbol for magnetic pole strength and magnetic moment. The symbol m has been used for both pole strength (unit A·m, where here the upright m stands for meter) and for magnetic moment (unit A·m²). The symbol μ has been used in some texts for magnetic permeability and in other texts for magnetic moment. We will use μ for magnetic permeability and m for magnetic moment. For pole strength, we will employ qm. For a bar magnet of cross-section A with uniform magnetization M along its axis, the pole strength is given by qm = MA, so that M can be thought of as a pole strength per unit area.
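Under the same idealization of a uniformly magnetized bar (an assumption here), the relation qm = MA extends to the bar's moment: the two poles ±qm separated by the bar length ℓ give m = qm ℓ = MAℓ = MV, so M is both a pole strength per unit area and a moment per unit volume. The dimensions and magnetization below are hypothetical.

```python
def pole_strength(magnetization: float, area: float) -> float:
    """q_m = M A, in A·m (M in A/m, A in m²)."""
    return magnetization * area


def bar_moment(magnetization: float, area: float, length: float) -> float:
    """Moment of an idealized uniform bar: m = q_m * length = M * volume, in A·m²."""
    return pole_strength(magnetization, area) * length


M = 1.0e6    # A/m, about the magnetization of a good permanent magnet
A = 1.0e-4   # m² (1 cm² cross-section), hypothetical
L = 0.05     # m (5 cm long), hypothetical
print(pole_strength(M, A), bar_moment(M, A, L))
```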

Fields of a magnet

Far away from a magnet, the magnetic field created by that magnet is almost always described (to a good approximation) by a dipole field characterized by its total magnetic moment. This is true regardless of the shape of the magnet, so long as the magnetic moment is non-zero. One characteristic of a dipole field is that the strength of the field falls off inversely with the cube of the distance from the magnet's center.

Closer to the magnet, the magnetic field becomes more complicated and more dependent on the detailed shape and magnetization of the magnet. Formally, the field can be expressed as a multipole expansion: A dipole field, plus a quadrupole field, plus an octupole field, etc.

At close range, many different fields are possible. For example, for a long, skinny bar magnet with its north pole at one end and south pole at the other, the magnetic field near either end falls off inversely with the square of the distance from that pole.
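Both limiting behaviors described in this section can be checked numerically with a simple Gilbert-model sketch, which is an assumption rather than a method given in the text: a long, thin bar is represented by two point poles +qm and −qm a length ℓ apart, so the on-axis field beyond the north pole is B = μ0 qm/(4π) [1/x² − 1/(x + ℓ)²]. The local log–log slope of B against the distance x should approach −2 close to the pole and −3 far away (the dipole limit). The pole strength and bar length are hypothetical.

```python
import math

MU0 = 4 * math.pi * 1e-7  # T·m/A


def on_axis_field(x: float, q_m: float, length: float) -> float:
    """Field (T) on the axis at distance x (m) beyond the north pole of a bar
    modeled as two point poles +q_m and -q_m (A·m) separated by length (m)."""
    return MU0 * q_m / (4 * math.pi) * (1 / x**2 - 1 / (x + length) ** 2)


def local_power_law(x: float, q_m: float, length: float, step: float = 1.01) -> float:
    """Numerical slope d(ln B)/d(ln x); -2 means 1/x^2, -3 means 1/x^3."""
    b1 = on_axis_field(x, q_m, length)
    b2 = on_axis_field(x * step, q_m, length)
    return math.log(b2 / b1) / math.log(step)


q_m, length = 100.0, 0.1   # hypothetical pole strength (A·m) and bar length (m)
print("near the pole:", round(local_power_law(length / 1000, q_m, length), 3))
print("far away:     ", round(local_power_law(length * 1000, q_m, length), 3))
```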

Calculating the magnetic force

Pull force of a single magnet

The strength of a given magnet is sometimes given in terms of its pull force, its ability to pull ferromagnetic objects.[43] The pull force exerted by either an electromagnet or a permanent magnet with no air gap (i.e., the ferromagnetic object is in direct contact with the pole of the magnet[44]) is given by the Maxwell equation:[45]

$$F = \frac{B^2 A}{2\mu_0}$$

where

- F is force (SI unit: newton)

- A is the cross-sectional area of the pole (SI unit: square meter)

- B is the magnetic flux density at the pole face (SI unit: tesla)

- μ0 is the permeability of free space (approximately 4π×10⁻⁷ T·m/A)

This result can be derived using the Gilbert model, which assumes that the pole of the magnet carries magnetic monopoles (magnetic charge) that induce monopoles of opposite sign in the ferromagnetic object.

If a magnet is acting vertically, it can lift a mass m (in kilograms) given by the simple equation:

$$m = \frac{B^2 A}{2\mu_0 g}$$

where g is the gravitational acceleration.
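A minimal numeric sketch of the contact pull force F = B²A/(2μ0) and the corresponding liftable mass m = F/g, assuming no air gap and a uniform flux density over the pole face. Real magnets usually pull noticeably less because of small gaps, fringing and saturation of the workpiece, so these numbers are idealized upper estimates. The pole size and flux density are hypothetical.

```python
import math

MU0 = 4 * math.pi * 1e-7  # T·m/A
G = 9.81                  # m/s², standard gravity (rounded)


def pull_force(b: float, area: float) -> float:
    """Idealized contact pull force F = B^2 A / (2 mu0), in newtons.
    Assumes no air gap and uniform flux density b (T) over the pole area (m²)."""
    return b**2 * area / (2 * MU0)


def liftable_mass(b: float, area: float, g: float = G) -> float:
    """Mass (kg) whose weight equals the pull force: m = B^2 A / (2 mu0 g)."""
    return pull_force(b, area) / g


# Hypothetical pole: 1 cm² face with 0.5 T at the contact surface.
f = pull_force(0.5, 1e-4)
print(f"F = {f:.2f} N, lifts about {liftable_mass(0.5, 1e-4):.2f} kg")
```

Because F grows with B², doubling the flux density at the pole face quadruples the pull.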

Force between two magnetic poles

Classically, the force between two magnetic poles is given by:[46]

$$F = \frac{\mu q_{m1} q_{m2}}{4\pi r^2}$$

where

- F is force (SI unit: newton)

- qm1 and qm2 are the magnitudes of magnetic poles (SI unit: ampere-meter)

- μ is the permeability of the intervening medium (SI unit: tesla meter per ampere, henry per meter or newton per ampere squared)

- r is the separation (SI unit: meter).
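The pole-force law F = μ qm1 qm2 / (4π r²) can be sketched directly. Signed pole strengths are an assumed convention here (north positive, south negative), so a positive result means repulsion and a negative one attraction. μ defaults to μ0, which is a good approximation for air; the example values are hypothetical.

```python
import math

MU0 = 4 * math.pi * 1e-7  # permeability of free space, T·m/A


def pole_force(q_m1: float, q_m2: float, r: float, mu: float = MU0) -> float:
    """Gilbert-model force (N) between point poles q_m1, q_m2 (A·m) a distance
    r (m) apart. Positive = repulsion (like poles), negative = attraction."""
    return mu * q_m1 * q_m2 / (4 * math.pi * r ** 2)


print(pole_force(10.0, 10.0, 0.1))    # two like poles 10 cm apart
print(pole_force(10.0, -10.0, 0.1))   # opposite poles attract
print(pole_force(10.0, 10.0, 0.2))    # doubling r quarters the force
```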

The pole description is useful to engineers designing real-world magnets, but real magnets have a pole distribution more complex than a single north and south pole. Therefore, applying the pole idea is not simple. In some cases, one of the more complex formulae given below will be more useful.

Force between two nearby magnetized surfaces of area A

The mechanical force between two nearby magnetized surfaces can be calculated with the following equation. The equation is valid only for cases in which the effect of fringing is negligible and the volume of the air gap is much smaller than that of the magnetized material:[47][48]

$$F = \frac{\mu_0 H^2 A}{2} = \frac{B^2 A}{2\mu_0}$$

where:

- A is the area of each surface, in m2

- H is their magnetizing field, in A/m

- μ0 is the permeability of free space, approximately 4π×10⁻⁷ T·m/A

- B is the flux density, in T.

Force between two bar magnets

The force between two identical cylindrical bar magnets placed end to end at large distance is approximately:[dubious ],[47]

$$F \simeq \frac{B_0^2 A^2 \left(L^2 + R^2\right)}{\pi \mu_0 L^2} \left[\frac{1}{z^2} + \frac{1}{(z + 2L)^2} - \frac{2}{(z + L)^2}\right]$$

where:

- B0 is the magnetic flux density very close to each pole, in T,

- A is the area of each pole, in m²,

- L is the length of each magnet, in m,

- R is the radius of each magnet, in m, and

- z is the separation between the two magnets, in m.

The relation B0 = (μ0/2)M relates the flux density at the pole to the magnetization M of the magnet.

Note that all these formulations are based on Gilbert's model, which is usable at relatively large distances. In other models (e.g., Ampère's model), a more complicated formulation is used that sometimes cannot be solved analytically; in these cases, numerical methods must be used.

Force between two cylindrical magnets

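For two cylindrical magnets with radius R and length h, with their magnetic dipoles aligned, the force can be asymptotically approximated at large distance x by:[49]

$$F(x) \simeq \frac{\pi \mu_0}{4} M^2 R^4 \left[\frac{1}{x^2} + \frac{1}{(x + 2h)^2} - \frac{2}{(x + h)^2}\right]$$

where M is the magnetization of the magnets and x is the gap between the magnets. The magnetic flux density B0 measured very close to the magnet is related to M approximately by the formula

$$B_0 = \frac{\mu_0}{2} M$$

The effective magnetic dipole can be written as

$$m = M V$$

where V is the volume of the magnet. For a cylinder, this is V = πR²h.

When h ≪ x, the point dipole approximation is obtained,

$$F(x) = \frac{3\pi\mu_0}{2} M^2 R^4 \frac{h^2}{x^4} = \frac{3\mu_0}{2\pi} M^2 V^2 \frac{1}{x^4} = \frac{3\mu_0}{2\pi} \frac{m_1 m_2}{x^4}$$

which matches the expression of the force between two magnetic dipoles.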
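The approach of the cylinder formula F(x) ≃ (πμ0/4) M² R⁴ [1/x² + 1/(x + 2h)² − 2/(x + h)²] to the point-dipole result F = 3μ0 m1 m2/(2π x⁴), with m = MV and V = πR²h, can be checked numerically. This is a sketch with hypothetical magnet dimensions; it returns force magnitudes for coaxial, aligned magnets and inherits the large-distance validity of the Gilbert-model formula.

```python
import math

MU0 = 4 * math.pi * 1e-7  # T·m/A


def cylinder_force(x: float, magnetization: float, radius: float, length: float) -> float:
    """Large-distance approximation (N) for two coaxial, identically magnetized
    cylinders of radius R and length h separated by a gap x (SI units)."""
    bracket = 1 / x**2 + 1 / (x + 2 * length) ** 2 - 2 / (x + length) ** 2
    return math.pi * MU0 / 4 * magnetization**2 * radius**4 * bracket


def dipole_force(x: float, magnetization: float, radius: float, length: float) -> float:
    """Point-dipole limit: F = 3 mu0 m1 m2 / (2 pi x^4) with m = M V, V = pi R^2 h."""
    moment = magnetization * math.pi * radius**2 * length
    return 3 * MU0 * moment**2 / (2 * math.pi * x**4)


M, R, h = 1.0e6, 0.005, 0.01   # hypothetical: 1e6 A/m, 5 mm radius, 10 mm long
for x in (0.05, 0.5, 5.0):     # gaps in m
    ratio = cylinder_force(x, M, R, h) / dipole_force(x, M, R, h)
    print(f"x = {x} m: F = {cylinder_force(x, M, R, h):.3e} N, F / F_dipole = {ratio:.3f}")
```

The ratio tends to 1 once the gap is much larger than the magnet length, as the h ≪ x condition requires.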

Notes

- David Vokoun; Marco Beleggia; Ludek Heller; Petr Sittner (2009). "Magnetostatic interactions and forces between cylindrical permanent magnets". Journal of Magnetism and Magnetic Materials. 321 (22): 3758–3763. Bibcode:2009JMMM..321.3758V. doi:10.1016/j.jmmm.2009.07.030.

References

- "The Early History of the Permanent Magnet". Edward Neville Da Costa Andrade, Endeavour, Volume 17, Number 65, January 1958. Contains an excellent description of early methods of producing permanent magnets.

- "positive pole n". The Concise Oxford English Dictionary. Catherine Soanes and Angus Stevenson. Oxford University Press, 2004. Oxford Reference Online. Oxford University Press.

- Wayne M. Saslow, Electricity, Magnetism, and Light, Academic (2002). ISBN 0-12-619455-6. Chapter 9 discusses magnets and their magnetic fields using the concept of magnetic poles, but it also gives evidence that magnetic poles do not really exist in ordinary matter. Chapters 10 and 11, following what appears to be a 19th-century approach, use the pole concept to obtain the laws describing the magnetism of electric currents.

- Edward P. Furlani, Permanent Magnet and Electromechanical Devices: Materials, Analysis and Applications, Academic Press Series in Electromagnetism (2001). ISBN 0-12-269951-3.

External links

- How magnets are made Archived 2013-03-16 at the Wayback Machine (video)

- Floating Ring Magnets, Bulletin of the IAPT, Volume 4, No. 6, 145 (June 2012). (Publication of the Indian Association of Physics Teachers).

- A brief history of electricity and magnetism

https://en.wikipedia.org/wiki/Magnet

https://en.wikipedia.org/wiki/Dipole_magnet

A dipole magnet is the simplest type of magnet. It has two poles, one north and one south. Its magnetic field lines form simple closed loops which emerge from the north pole, re-enter at the south pole, then pass through the body of the magnet. The simplest example of a dipole magnet is a bar magnet.[1]

Dipole magnets in accelerators

In particle accelerators, a dipole magnet is the electromagnet used to create a homogeneous magnetic field over some distance. A charged particle moving in that field follows a circular path in the plane perpendicular to the field, while its motion along the field direction is unaffected. Thus, a particle injected into a dipole magnet will travel on a circular or helical trajectory. By adding several dipole sections in the same plane, the total bending of the beam increases.

In accelerator physics, dipole magnets are used to realize bends in the design trajectory (or 'orbit') of the particles, as in circular accelerators. Other uses include:

- Injection of particles into the accelerator

- Ejection of particles from the accelerator

- Correction of orbit errors

- Production of synchrotron radiation

The force on a charged particle in a particle accelerator from a dipole magnet can be described by the Lorentz force law, where a charged particle experiences a force of

$$\mathbf{F} = q\,\mathbf{v} \times \mathbf{B}$$

(in SI units). In the case of a particle accelerator dipole magnet, the charged particle beam is bent via the cross product of the particle's velocity and the magnetic field vector, with direction also being dependent on the charge of the particle.
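The dependence of the bending direction on the cross product and on the sign of the charge can be sketched with the magnetic part of the Lorentz force, F = q v × B, ignoring any electric field. The velocity and field values are hypothetical; the field points along +z as in a horizontal bending magnet.

```python
def cross(a, b):
    """Cross product of two 3-vectors given as tuples."""
    return (a[1] * b[2] - a[2] * b[1],
            a[2] * b[0] - a[0] * b[2],
            a[0] * b[1] - a[1] * b[0])


def magnetic_force(q, v, b):
    """Magnetic part of the Lorentz force, F = q v x B (SI units)."""
    return tuple(q * c for c in cross(v, b))


E_CHARGE = 1.602176634e-19  # elementary charge, C
v = (1.0e7, 0.0, 0.0)       # hypothetical velocity along +x, m/s
B = (0.0, 0.0, 1.0)         # hypothetical 1 T dipole field along +z

f_proton = magnetic_force(+E_CHARGE, v, B)
f_electron = magnetic_force(-E_CHARGE, v, B)
print("proton:  ", f_proton)    # pushed toward -y
print("electron:", f_electron)  # pushed toward +y
```

Since the force is always perpendicular to the velocity, it changes the direction of motion but not the speed, which is what produces the circular orbit.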

The amount of force that can be applied to a charged particle by a dipole magnet is one of the limiting factors for modern synchrotron and cyclotron proton and ion accelerators. As the energy of the accelerated particles increases, they require more force to change direction and require larger B fields to be steered. Limitations on the B field that modern dipole electromagnets can produce require synchrotrons and cyclotrons to increase in size (thus increasing the number of dipole magnets used) to compensate for increases in particle momentum. In the largest modern synchrotron, the Large Hadron Collider, there are 1232 main dipole magnets used for bending the path of the particle beam, each weighing 35 metric tons.[2]
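Why a ceiling on B forces a larger ring can be sketched from the radius of the circular orbit, r = p/(|q|B): at a fixed maximum field, the bending radius grows in proportion to the particle momentum. The example inputs are approximate LHC design values (7 TeV protons, so p ≈ 7000 GeV/c, in 8.33 T main dipoles), used here only for illustration; the function name is hypothetical.

```python
C = 299_792_458.0  # speed of light, m/s


def bending_radius(momentum_gev: float, field_t: float, charge_number: int = 1) -> float:
    """Radius (m) of the circular orbit of a particle with momentum p (GeV/c)
    in a uniform field B (T): r = p / (|q| B).

    With p in GeV/c, p[SI] = p_gev * 1e9 * e / c, so the elementary charge
    cancels and r = p_gev * 1e9 / (c * |z| * B)."""
    return momentum_gev * 1e9 / (C * abs(charge_number) * field_t)


# Approximate LHC design values, used only as illustrative inputs.
r_lhc = bending_radius(7000.0, 8.33)
print(f"bending radius ≈ {r_lhc:.0f} m")

# Doubling the momentum at the same maximum field doubles the required radius.
print(bending_radius(14000.0, 8.33) / r_lhc)
```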

Other uses

Other uses of dipole magnets to deflect moving particles include isotope mass measurement in mass spectrometry, and particle momentum measurement in particle physics.

Such magnets are also used in traditional televisions containing a cathode-ray tube, which is essentially a small particle accelerator. There, the magnets are called deflection coils; they move a single spot over the whole screen of the TV tube in a controlled way.

See also

- Accelerator physics

- Beam line

- Cyclotron

- Electromagnetism

- Linear particle accelerator

- Particle accelerator

- Quadrupole magnet

- Sextupole magnet

- Multipole magnet

- Storage ring

References

- "Pulling together: Superconducting electromagnets". CERN. https://home.cern/science/engineering/pulling-together-superconducting-electromagnets

External links

Media related to Dipole magnet at Wikimedia Commons

https://en.wikipedia.org/wiki/Proton%E2%80%93proton_chain

https://en.wikipedia.org/wiki/Carbon-burning_process

https://en.wikipedia.org/wiki/Hydrostatic_equilibrium

https://en.wikipedia.org/wiki/Proton_nuclear_magnetic_resonance

https://en.wikipedia.org/wiki/Acetone

https://en.wikipedia.org/wiki/Properties_of_water

https://en.wikipedia.org/wiki/Color_of_water

https://en.wikipedia.org/wiki/Scattering

https://en.wikipedia.org/wiki/Diffuse_reflection

https://en.wikipedia.org/wiki/Crystallite

https://en.wikipedia.org/wiki/Single_crystal

https://en.wikipedia.org/wiki/Half-space_(geometry)

https://en.wikipedia.org/wiki/Paracrystallinity

https://en.wikipedia.org/wiki/Grain_boundary

https://en.wikipedia.org/wiki/Misorientation

https://en.wikipedia.org/wiki/Dislocation

https://en.wikipedia.org/wiki/Slip_(materials_science)

In materials science, slip is the large displacement of one part of a crystal relative to another part along crystallographic planes and directions.[1] Slip occurs by the passage of dislocations on close-packed planes (planes containing the greatest number of atoms per unit area) and in close-packed directions (the most atoms per unit length). Close-packed planes are known as slip or glide planes. A slip system describes the set of symmetrically identical slip planes and the associated family of slip directions for which dislocation motion can easily occur and lead to plastic deformation. The magnitude and direction of slip are represented by the Burgers vector, b.

An external force makes parts of the crystal lattice glide along each other, changing the material's geometry. A critical resolved shear stress is required to initiate slip.[2]
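A standard way to quantify when slip starts, which the text only names through the critical resolved shear stress (CRSS), is Schmid's law, brought in here from outside the text: for a uniaxial stress σ, the shear stress resolved onto a slip system is τ = σ cos φ cos λ, where φ is the angle between the load axis and the slip-plane normal and λ the angle between the load axis and the slip direction, and slip is expected once τ reaches the CRSS. The sketch assumes a cubic crystal, where a direction and a plane normal with the same Miller indices are parallel. The FCC example system (111)[1̄01] loaded along [001] is illustrative, and the function names are hypothetical.

```python
import math


def _dot(a, b):
    return sum(x * y for x, y in zip(a, b))


def _norm(a):
    return math.sqrt(_dot(a, a))


def schmid_factor(load_axis, plane_normal, slip_direction):
    """|cos(phi) * cos(lambda)| for a cubic crystal, from Miller-index vectors."""
    if _dot(plane_normal, slip_direction) != 0:
        raise ValueError("slip direction must lie in the slip plane")
    cos_phi = _dot(load_axis, plane_normal) / (_norm(load_axis) * _norm(plane_normal))
    cos_lam = _dot(load_axis, slip_direction) / (_norm(load_axis) * _norm(slip_direction))
    return abs(cos_phi * cos_lam)


def stress_to_start_slip(crss, load_axis, plane_normal, slip_direction):
    """Applied uniaxial stress at which the resolved shear stress reaches the CRSS."""
    m = schmid_factor(load_axis, plane_normal, slip_direction)
    if m == 0:
        raise ValueError("no resolved shear stress on this slip system")
    return crss / m


# FCC slip system (111)[-101], tensile load along [001]
m = schmid_factor((0, 0, 1), (1, 1, 1), (-1, 0, 1))
print(f"Schmid factor = {m:.3f}")
```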

https://en.wikipedia.org/wiki/Critical_resolved_shear_stress

https://en.wikipedia.org/wiki/Miller_index#Crystallographic_planes_and_directions

https://en.wikipedia.org/wiki/Axis%E2%80%93angle_representation

https://en.wikipedia.org/wiki/Amorphous_solid

https://en.wikipedia.org/wiki/Crystal_structure

https://en.wikipedia.org/wiki/Quaternions_and_spatial_rotation

https://en.wikipedia.org/wiki/Electron_microscope

https://en.wikipedia.org/wiki/Scanning_tunneling_microscope

https://en.wikipedia.org/wiki/Absolute_zero

https://en.wikipedia.org/wiki/Proton_spin_crisis

https://en.wikipedia.org/wiki/Neutron%E2%80%93proton_ratio

https://en.wikipedia.org/wiki/Proton_radius_puzzle

https://en.wikipedia.org/wiki/Proton-exchange_membrane_fuel_cell

https://en.wikipedia.org/wiki/Proton-to-electron_mass_ratio

https://en.wikipedia.org/wiki/Proton_ATPase

https://en.wikipedia.org/wiki/Electron_transport_chain#Proton_pumps

https://en.wikipedia.org/wiki/Electrochemical_gradient

https://en.wikipedia.org/wiki/Atomic_number

https://en.wikipedia.org/wiki/Isotopes_of_hydrogen

https://en.wikipedia.org/wiki/Proton_Synchrotron

https://en.wikipedia.org/wiki/Large_Hadron_Collider

https://en.wikipedia.org/wiki/Particle-induced_X-ray_emission

https://en.wikipedia.org/wiki/Proton_(satellite_program)

https://en.wikipedia.org/wiki/Super_Proton%E2%80%93Antiproton_Synchrotron

https://en.wikipedia.org/wiki/Hydronium

https://en.wikipedia.org/wiki/Strong_interaction

https://en.wikipedia.org/wiki/Odderon

https://en.wikipedia.org/wiki/Annihilation#Proton-antiproton_annihilation

https://en.wikipedia.org/wiki/Nucleon_magnetic_moment

https://en.wikipedia.org/wiki/Stellar_structure

https://en.wikipedia.org/wiki/Nuclear_fusion

https://en.wikipedia.org/wiki/Combustion

https://en.wikipedia.org/wiki/Dredge-up

- The third dredge-up

- The third dredge-up occurs after a star enters the asymptotic giant branch, after a flash occurs in a helium-burning shell. The third dredge-up brings helium, carbon, and the s-process products to the surface, increasing the abundance of carbon relative to oxygen; in some larger stars this is the process that turns the star into a carbon star.[2]

https://en.wikipedia.org/wiki/Beryllium

https://en.wikipedia.org/wiki/CNO_cycle

https://en.wikipedia.org/wiki/Smoke

https://en.wikipedia.org/wiki/Positron

https://en.wikipedia.org/wiki/Positron_emission

Positron emission, beta plus decay, or β+ decay is a subtype of radioactive decay called beta decay, in which a proton inside a radionuclide nucleus is converted into a neutron while releasing a positron and an electron neutrino (νe).[1] Positron emission is mediated by the weak force. The positron is a type of beta particle (β+), the other beta particle being the electron (β−) emitted from the β− decay of a nucleus.

https://en.wikipedia.org/wiki/Radioactive_decay

https://en.wikipedia.org/wiki/Beta_decay

https://en.wikipedia.org/wiki/Radionuclide

https://en.wikipedia.org/wiki/Internal_conversion

https://en.wikipedia.org/wiki/Radionucleotide

https://en.wikipedia.org/wiki/Gamma_ray

https://en.wikipedia.org/wiki/Radio_wave

https://en.wikipedia.org/wiki/Black-body_radiation

https://en.wikipedia.org/wiki/Microwave

https://en.wikipedia.org/wiki/Terahertz_radiation

https://en.wikipedia.org/wiki/Ionosphere

https://en.wikipedia.org/wiki/Ground_wave

https://en.wikipedia.org/wiki/Electromagnetic_radiation

https://en.wikipedia.org/wiki/Far_infrared

https://en.wikipedia.org/wiki/Gauge_boson

https://en.wikipedia.org/wiki/Virtual_particle

https://en.wikipedia.org/wiki/Quantum_vacuum_(disambiguation)

https://en.wikipedia.org/wiki/Antiparticle

https://en.wikipedia.org/wiki/Uncertainty_principle

https://en.wikipedia.org/wiki/Initial_condition

https://en.wikipedia.org/wiki/S-matrix

https://en.wikipedia.org/wiki/Perturbation_theory_(quantum_mechanics)

https://en.wikipedia.org/wiki/Electromagnetism#repel

https://en.wikipedia.org/wiki/Graviton

https://en.wikipedia.org/wiki/Gauge_theory

https://en.wikipedia.org/wiki/Higgs_mechanism

https://en.wikipedia.org/wiki/1964_PRL_symmetry_breaking_papers

https://en.wikipedia.org/wiki/Glueball

https://en.wikipedia.org/wiki/Cosmic_microwave_background

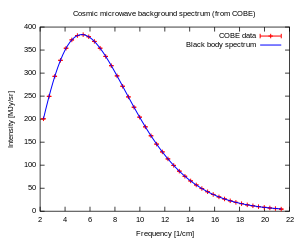

The cosmic microwave background (CMB, CMBR) is microwave radiation that fills all space. It is a remnant that provides an important source of data on the primordial universe.[1] With a standard optical telescope, the background space between stars and galaxies is almost completely dark. However, a sufficiently sensitive radio telescope detects a faint background glow that is almost uniform and is not associated with any star, galaxy, or other object. This glow is strongest in the microwave region of the radio spectrum. The accidental discovery of the CMB in 1965 by American radio astronomers Arno Penzias and Robert Wilson was the culmination of work initiated in the 1940s.[2][3]

The CMB is landmark evidence of the Big Bang theory for the origin of the universe. In Big Bang cosmological models, during the earliest periods, the universe was filled with an opaque fog of dense, hot plasma of sub-atomic particles. As the universe expanded, this plasma cooled to the point where protons and electrons combined to form neutral atoms of mostly hydrogen. Unlike the plasma, these atoms could not scatter thermal radiation by Thomson scattering, and so the universe became transparent.[4] This decoupling event, which took place during the recombination epoch, released photons to travel freely through space; these photons are sometimes referred to as relic radiation.[1] Since then, the photons have grown less energetic, because the expansion of space has stretched their wavelengths. The surface of last scattering refers to the shell of points in space at just the right distance for photons emitted there at the time of decoupling to be received now.
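The cooling of the relic radiation follows from this stretching of wavelengths. In standard cosmology (an assumption not spelled out in the text), photon energies and the black-body temperature both scale as 1/(1 + z), where z is the redshift, so T(z) = T0 (1 + z). The sketch uses the present-day temperature T0 = 2.72548 K quoted for the CMB and a decoupling redshift of about 1100, a commonly quoted approximate value, to estimate the temperature of the radiation when it was released.

```python
T0_KELVIN = 2.72548   # present-day CMB temperature


def cmb_temperature(redshift: float, t0: float = T0_KELVIN) -> float:
    """Black-body temperature of the relic radiation at redshift z: T = T0 (1 + z)."""
    return t0 * (1 + redshift)


z_dec = 1100  # approximate redshift of decoupling (assumed round value)
print(f"T at decoupling ≈ {cmb_temperature(z_dec):.0f} K")
print(f"wavelengths stretched by a factor of about {1 + z_dec}")
```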

The CMB is not completely smooth and uniform, showing a faint anisotropy that can be mapped by sensitive detectors. Ground and space-based experiments such as COBE and WMAP have been used to measure these temperature inhomogeneities. The anisotropy structure is determined by various interactions of matter and photons up to the point of decoupling, which results in a characteristic lumpy pattern that varies with angular scale. The distribution of the anisotropy across the sky has frequency components that can be represented by a power spectrum displaying a sequence of peaks and valleys. The peak values of this spectrum hold important information about the physical properties of the early universe: the first peak determines the overall curvature of the universe, while the second and third peaks detail the density of normal matter and so-called dark matter, respectively. Extracting fine details from the CMB data can be challenging, since the emission has undergone modification by foreground features such as galaxy clusters.

Importance of precise measurement

Precise measurements of the CMB are critical to cosmology, since any proposed model of the universe must explain this radiation. The CMB has a thermal black body spectrum at a temperature of 2.72548±0.00057 K.[5] The spectral radiance dEν/dν peaks at 160.23 GHz, in the microwave range of frequencies, corresponding to a photon energy of about 6.626×10−4 eV. Alternatively, if spectral radiance is defined as dEλ/dλ, then the peak wavelength is 1.063 mm (282 GHz, 1.166×10−3 eV photons). The glow is very nearly uniform in all directions, but the tiny residual variations show a very specific pattern, the same as that expected of a fairly uniformly distributed hot gas that has expanded to the current size of the universe. In particular, the spectral radiance at different angles of observation in the sky contains small anisotropies, or irregularities, which vary with the size of the region examined. They have been measured in detail, and match what would be expected if small thermal variations, generated by quantum fluctuations of matter in a very tiny space, had expanded to the size of the observable universe we see today. This is a very active field of study, with scientists seeking both better data (for example, from the Planck spacecraft) and better interpretations of the initial conditions of expansion. Although many different processes might produce the general form of a black body spectrum, no model other than the Big Bang has yet explained the fluctuations. As a result, most cosmologists consider the Big Bang model of the universe to be the best explanation for the CMB.
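Both peak positions quoted above follow from Planck's law via Wien's displacement law. Per unit frequency the maximum sits where x = hν/kT solves 3(1 − e^−x) = x (x ≈ 2.821); per unit wavelength it sits where 5(1 − e^−x) = x (x ≈ 4.965). That is why the "peak" depends on how spectral radiance is defined. The Python sketch below is an illustration, not the source's own calculation: it solves both conditions by fixed-point iteration using SI constants, and the function names `wien_x` and `cmb_peaks` are hypothetical.

```python
import math

# SI defining constants (exact in the 2019 SI).
H = 6.62607015e-34     # Planck constant, J s
K_B = 1.380649e-23     # Boltzmann constant, J/K
C = 299_792_458.0      # speed of light, m/s
EV = 1.602176634e-19   # joules per electronvolt


def wien_x(n):
    """Solve n * (1 - exp(-x)) = x for x = h*nu / (k*T) by fixed-point iteration.

    n = 3: peak of the per-frequency spectrum dE_nu/dnu.
    n = 5: peak of the per-wavelength spectrum dE_lambda/dlambda.
    """
    x = float(n)
    for _ in range(100):  # the map is a strong contraction near the root
        x = n * (1.0 - math.exp(-x))
    return x


def cmb_peaks(t_kelvin):
    """Peak positions of a black body at t_kelvin under both conventions."""
    nu_peak = wien_x(3) * K_B * t_kelvin / H          # Hz
    lam_peak = H * C / (wien_x(5) * K_B * t_kelvin)   # m
    return {
        "nu_peak_GHz": nu_peak / 1e9,
        "nu_peak_eV": H * nu_peak / EV,
        "lambda_peak_mm": lam_peak * 1e3,
        "lambda_peak_GHz": C / lam_peak / 1e9,
        "lambda_peak_eV": H * C / lam_peak / EV,
    }


if __name__ == "__main__":
    for name, value in cmb_peaks(2.72548).items():
        print(f"{name}: {value:.4g}")
```

Note that the wavelength peak, converted to a frequency, lands near 282 GHz rather than 160 GHz: the two conventions weight the same spectrum differently, so neither peak is more "correct" than the other.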

The high degree of uniformity throughout the observable universe and its faint but measured anisotropy lend strong support for the Big Bang model in general and the ΛCDM ("Lambda Cold Dark Matter") model in particular. Moreover, the fluctuations are coherent on angular scales that are larger than the apparent cosmological horizon at recombination. Either such coherence is acausally fine-tuned, or cosmic inflation occurred.[6][7]

Beyond the temperature and polarization anisotropies, the CMB frequency spectrum is expected to feature tiny departures from the black-body law, known as spectral distortions. These are also the focus of an active research effort, with the hope of a first measurement within the coming decades, as they contain a wealth of information about the primordial universe and the formation of structures at late times.[8]

Features

The cosmic microwave background radiation is an emission of uniform, black body thermal energy coming from all parts of the sky. The radiation is isotropic to roughly one part in 100,000: the root mean square variations are only 18 μK,[10] after subtracting out a dipole anisotropy from the Doppler shift of the background radiation. This dipole is caused by the peculiar velocity of the Sun relative to the comoving cosmic rest frame as it moves at 369.82 ± 0.11 km/s towards the constellation Leo (galactic longitude 264.021° ± 0.011°, galactic latitude 48.253° ± 0.005°).[11] The CMB dipole and the aberration effects at higher multipoles have been measured, and both are consistent with galactic motion.[12]
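To first order in v/c, the Sun's motion shifts the observed temperature by ΔT ≈ T0 (v/c) cos θ, so the dipole amplitude follows directly from the temperature and speed quoted in this article. The sketch below assumes those two values (2.72548 K and 369.82 km/s) and also evaluates the standard exact form for a Lorentz-boosted black body, T(θ) = T0 / (γ(1 − β cos θ)), with θ measured from the direction of motion. The function names are hypothetical.

```python
import math

C_KM_S = 299_792.458  # speed of light, km/s


def dipole_amplitude_mk(t0_kelvin, v_km_s):
    """First-order kinematic dipole amplitude T0 * v / c, in millikelvin."""
    return t0_kelvin * (v_km_s / C_KM_S) * 1e3


def doppler_temperature(t0_kelvin, v_km_s, cos_theta):
    """Observed black-body temperature for an observer moving at v_km_s.

    Uses T(theta) = T0 / (gamma * (1 - beta * cos(theta))), where theta is the
    angle between the viewing direction and the direction of motion.
    """
    beta = v_km_s / C_KM_S
    gamma = 1.0 / math.sqrt(1.0 - beta ** 2)
    return t0_kelvin / (gamma * (1.0 - beta * cos_theta))


if __name__ == "__main__":
    T0, V_SUN = 2.72548, 369.82  # values quoted in this article
    print(f"dipole amplitude ~ {dipole_amplitude_mk(T0, V_SUN):.3f} mK")
    print(f"towards Leo: {doppler_temperature(T0, V_SUN, 1.0):.6f} K")
    print(f"opposite:    {doppler_temperature(T0, V_SUN, -1.0):.6f} K")
```

The amplitude comes out at about 3.36 mK, nearly two hundred times the 18 μK rms of the remaining anisotropy, which is why the dipole must be subtracted before the smaller fluctuations can be studied.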

In the Big Bang model for the formation of the universe, inflationary cosmology predicts that after about 10−37 seconds[13] the nascent universe underwent exponential growth that smoothed out nearly all irregularities. The remaining irregularities were caused by quantum fluctuations in the inflaton field, the field that drove the inflation event.[14] Long before the formation of stars and planets, the early universe was smaller, much hotter and, starting 10−6 seconds after the Big Bang, filled with a uniform glow from its white-hot fog of interacting plasma of photons, electrons, and baryons.

As the universe expanded, adiabatic cooling caused the energy density of the plasma to decrease until it became favorable for electrons to combine with protons, forming hydrogen atoms. This recombination event happened when the temperature was around 3000 K, when the universe was approximately 379,000 years old.[15] Because photons no longer scattered efficiently off these electrically neutral atoms, they began to travel freely through space, resulting in the decoupling of matter and radiation.[16]

The color temperature of the ensemble of decoupled photons has continued to diminish ever since; now down to 2.7260±0.0013 K,[5] it will continue to drop as the universe expands. The intensity of the radiation corresponds to black-body radiation at 2.726 K because red-shifted black-body radiation is just like black-body radiation at a lower temperature. According to the Big Bang model, the radiation from the sky we measure today comes from a spherical surface called the surface of last scattering. This represents the set of locations in space at which the decoupling event is estimated to have occurred[17] and at a point in time such that the photons from that distance have just reached observers. Most of the radiation energy in the universe is in the cosmic microwave background,[18] making up a fraction of roughly 6×10−5 of the total density of the universe.[19]
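The statement that red-shifted black-body radiation is still black-body radiation can be checked numerically. Free expansion stretches every photon's frequency by the same factor 1 + z while leaving the photon occupation number unchanged, and because the Planck occupation number depends only on hν/kT, the result is again a Planck spectrum at temperature T/(1 + z). The sketch below assumes decoupling at about 3000 K, as stated earlier, and a present temperature of 2.72548 K; it is an illustration with hypothetical helper names (`occupation`, `redshift_for`), not a cosmological code.

```python
import math

H = 6.62607015e-34   # Planck constant, J s
K_B = 1.380649e-23   # Boltzmann constant, J/K


def occupation(nu_hz, t_kelvin):
    """Planck photon occupation number 1 / (exp(h*nu / (k*T)) - 1)."""
    return 1.0 / math.expm1(H * nu_hz / (K_B * t_kelvin))


def redshift_for(t_emit, t_obs):
    """Redshift z that cools a black body from t_emit to t_obs, using T proportional to (1 + z)."""
    return t_emit / t_obs - 1.0


T_EMIT = 3000.0   # approximate temperature at decoupling, K (assumption taken from the text)
T_NOW = 2.72548   # present CMB temperature, K
z = redshift_for(T_EMIT, T_NOW)

# A photon emitted at nu_emit is received at nu_emit / (1 + z) with its occupation
# number unchanged; compare that with a Planck spectrum at T_NOW at the received frequency.
nu_emit = 3.0e14  # Hz, arbitrary sample frequency
n_at_emission = occupation(nu_emit, T_EMIT)
n_observed_planck = occupation(nu_emit / (1.0 + z), T_NOW)

if __name__ == "__main__":
    print(f"z ~ {z:.0f}")
    print(n_at_emission, n_observed_planck)
```

With these inputs z comes out near 1100, and the two occupation numbers agree to floating-point precision at any sample frequency.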

Two of the greatest successes of the Big Bang theory are its prediction of the almost perfect black body spectrum and its detailed prediction of the anisotropies in the cosmic microwave background. The CMB spectrum has become the most precisely measured black body spectrum in nature.[9]

The energy density of the CMB is 0.260 eV/cm³ (4.17×10−14 J/m³), corresponding to a photon number density of about 411 photons/cm³.[20]
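The energy density and photon number density quoted above follow from the standard black-body formulas u = (π²/15)(kT)⁴/(ħc)³ and n = (2ζ(3)/π²)(kT/ħc)³. The Python sketch below evaluates them for T = 2.72548 K using SI constants; the helper name `cmb_densities` is hypothetical.

```python
import math

K_B = 1.380649e-23            # Boltzmann constant, J/K
HBAR = 1.054571817e-34        # reduced Planck constant, J s
C = 299_792_458.0             # speed of light, m/s
EV = 1.602176634e-19          # joules per electronvolt
ZETA3 = 1.2020569031595942    # Riemann zeta(3)


def cmb_densities(t_kelvin):
    """Return (energy density in J/m^3, photon number density in 1/m^3) of a black body."""
    k_th = K_B * t_kelvin / (HBAR * C)                       # kT / (hbar c), in 1/m
    u = (math.pi ** 2 / 15.0) * K_B * t_kelvin * k_th ** 3   # (pi^2/15) (kT)^4 / (hbar c)^3
    n = (2.0 * ZETA3 / math.pi ** 2) * k_th ** 3             # (2 zeta(3)/pi^2) (kT / hbar c)^3
    return u, n


u, n = cmb_densities(2.72548)

if __name__ == "__main__":
    print(f"u = {u:.3e} J/m^3 = {u / EV * 1e-6:.3f} eV/cm^3")
    print(f"n = {n * 1e-6:.0f} photons/cm^3")
    print(f"mean photon energy = {u / n / EV:.2e} eV")
```

Dividing u by n gives a mean photon energy of roughly 6.3×10−4 eV, of the same order as the peak photon energies quoted earlier.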

History

The cosmic microwave background was first predicted in 1948 by Ralph Alpher and Robert Herman, in close relation to work performed by Alpher's PhD advisor George Gamow.[21][22][23][24] Alpher and Herman estimated the temperature of the cosmic microwave background to be 5 K, though two years later they re-estimated it at 28 K. This high estimate was due to a misestimate of the Hubble constant by Alfred Behr, which could not be replicated and was later abandoned in favor of the earlier estimate. Although there were several previous estimates of the temperature of space, these estimates had two flaws. First, they were measurements of the effective temperature of space and did not suggest that space was filled with a thermal Planck spectrum. Second, they depended on our being at a special spot at the edge of the Milky Way galaxy, and they did not suggest that the radiation is isotropic. The estimates would yield very different predictions if Earth happened to be located elsewhere in the universe.[25]

The 1948 results of Alpher and Herman were discussed in many physics settings through about 1955, when both left the Applied Physics Laboratory at Johns Hopkins University. The mainstream astronomical community, however, took little interest in cosmology at the time. Alpher and Herman's prediction was rediscovered by Yakov Zel'dovich in the early 1960s, and independently predicted by Robert Dicke at the same time. The first published recognition of the CMB radiation as a detectable phenomenon appeared in a brief paper by Soviet astrophysicists A. G. Doroshkevich and Igor Novikov in the spring of 1964.[26] In 1964, David Todd Wilkinson and Peter Roll, Dicke's colleagues at Princeton University, began constructing a Dicke radiometer to measure the cosmic microwave background.[27] Also in 1964, Arno Penzias and Robert Woodrow Wilson at the Crawford Hill location of Bell Telephone Laboratories in nearby Holmdel Township, New Jersey, had built a Dicke radiometer that they intended to use for radio astronomy and satellite communication experiments. On 20 May 1964 they made their first measurement clearly showing the presence of the microwave background,[28] with their instrument recording an excess antenna temperature of 4.2 K that they could not account for. After receiving a telephone call from Crawford Hill, Dicke said "Boys, we've been scooped."[2][29][30] A meeting between the Princeton and Crawford Hill groups determined that the antenna temperature was indeed due to the microwave background. Penzias and Wilson received the 1978 Nobel Prize in Physics for their discovery.[31]

The interpretation of the cosmic microwave background was a controversial issue in the 1960s, with some proponents of the steady state theory arguing that the microwave background was the result of scattered starlight from distant galaxies.[32] Earlier, in 1941, based on the study of narrow absorption line features in the spectra of stars, the astronomer Andrew McKellar had written: "It can be calculated that the 'rotational temperature' of interstellar space is 2 K."[33] However, during the 1970s the consensus was established that the cosmic microwave background is a remnant of the big bang. This was largely because new measurements at a range of frequencies showed that the spectrum was a thermal, black body spectrum, a result that the steady state model was unable to reproduce.[34]

Harrison, Peebles, Yu and Zel'dovich realized that the early universe would require inhomogeneities at the level of 10−4 or 10−5.[35][36][37] Rashid Sunyaev later calculated the observable imprint that these inhomogeneities would have on the cosmic microwave background.[38] Increasingly stringent limits on the anisotropy of the cosmic microwave background were set by ground-based experiments during the 1980s. RELIKT-1, a Soviet cosmic microwave background anisotropy experiment on board the Prognoz 9 satellite (launched 1 July 1983), gave upper limits on the large-scale anisotropy. The NASA COBE mission clearly confirmed the primary anisotropy with the Differential Microwave Radiometer instrument, publishing its findings in 1992.[39][40] John Mather and George Smoot of the COBE team received the 2006 Nobel Prize in Physics for the discovery of the black body form and anisotropy of the cosmic microwave background.

Inspired by the COBE results, a series of ground and balloon-based experiments measured cosmic microwave background anisotropies on smaller angular scales over the next decade. The primary goal of these experiments was to measure the scale of the first acoustic peak, which COBE did not have sufficient resolution to resolve. This peak corresponds to large-scale density variations in the early universe that are created by gravitational instabilities, resulting in acoustic oscillations in the plasma.[41] The first peak in the anisotropy was tentatively detected by the Toco experiment and the result was confirmed by the BOOMERanG and MAXIMA experiments.[42][43][44] These measurements demonstrated that the geometry of the universe is approximately flat, rather than curved.[45] They ruled out cosmic strings as a major component of cosmic structure formation and suggested cosmic inflation was the right theory of structure formation.[46]

The second peak was tentatively detected by several experiments before being definitively detected by WMAP, which also tentatively detected the third peak.[47] As of 2010, several experiments to improve measurements of the polarization and of the microwave background on small angular scales were ongoing. These included DASI, WMAP, BOOMERanG, QUaD, the Planck spacecraft, the Atacama Cosmology Telescope, the South Pole Telescope and the QUIET telescope.

Relationship to the Big Bang


The cosmic microwave background radiation and the cosmological redshift-distance relation are together regarded as the best available evidence for the Big Bang event. Measurements of the CMB have made the inflationary Big Bang model the Standard Cosmological Model.[48] The discovery of the CMB in the mid-1960s curtailed interest in alternatives such as the steady state theory.[49]

In the late 1940s Alpher and Herman reasoned that if there was a Big Bang, the expansion of the universe would have stretched the high-energy radiation of the very early universe into the microwave region of the electromagnetic spectrum, and down to a temperature of about 5 K. Their estimate was slightly off, but the idea was right: they had predicted the CMB. It took another 15 years for Penzias and Wilson to discover that the microwave background was actually there.[50]

According to standard cosmology, the CMB gives a snapshot of the hot early universe at the point in time when the temperature dropped enough to allow electrons and protons to form hydrogen atoms. This event made the universe nearly transparent to radiation because light was no longer being scattered off free electrons. When this occurred some 380,000 years after the Big Bang, the temperature of the universe was about 3,000 K. This corresponds to an ambient energy of about 0.26 eV, which is much less than the 13.6 eV ionization energy of hydrogen.[51] This epoch is generally known as the "time of last scattering" or the period of recombination or decoupling.[52]
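The 0.26 eV figure above is simply k_B T evaluated at 3000 K. The short sketch below, with hypothetical names (`thermal_energy_ev`, `kt`, `ratio`), computes it and expresses the 13.6 eV ionization energy of hydrogen in units of k_B T, which makes "much less" quantitative.

```python
K_B_EV = 8.617333262e-5        # Boltzmann constant, eV/K
HYDROGEN_IONIZATION_EV = 13.6  # ground-state ionization energy of hydrogen, eV


def thermal_energy_ev(t_kelvin):
    """Characteristic thermal energy k_B * T in electronvolts."""
    return K_B_EV * t_kelvin


kt = thermal_energy_ev(3000.0)        # temperature at decoupling quoted above
ratio = HYDROGEN_IONIZATION_EV / kt   # ionization energy in units of k_B T

if __name__ == "__main__":
    print(f"k_B T = {kt:.3f} eV, 13.6 eV = {ratio:.0f} k_B T")
```

The ionization energy comes out at roughly 53 times k_B T at decoupling.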