Wire drawing is a metalworking process used to reduce the cross-section of a wire by pulling it through a single drawing die or a series of them. There are many applications for wire drawing, including electrical wiring, cables, tension-loaded structural components, springs, paper clips, spokes for wheels, and stringed musical instruments. Although the processes are similar, drawing differs from extrusion in that the wire is pulled, rather than pushed, through the die. Drawing is usually performed at room temperature and is thus classified as a cold working process, but it may be performed at elevated temperatures for large wires to reduce forces.[1]

Of the elemental metals, copper, silver, gold, and platinum are the most ductile and are immune to many of the problems associated with cold working.

https://en.wikipedia.org/wiki/Wire_drawing

In metallurgy, cold forming or cold working is any metalworking process in which metal is shaped below its recrystallization temperature, usually at ambient temperature. Such processes are contrasted with hot working techniques like hot rolling, forging, welding, etc.[1]: p.375 Similar terms are used in glassmaking for the equivalent processes; for example, cut glass is made by "cold work": cutting or grinding an already formed object.

Cold forming techniques are usually classified into four major groups: squeezing, bending, drawing, and shearing. They generally have the advantage of being simpler to carry out than hot working techniques.

Unlike hot working, cold working causes the crystal grains and inclusions to distort following the flow of the metal, which may cause work hardening and anisotropic material properties. Work hardening makes the metal harder, stiffer, and stronger, but less plastic, and may cause the piece to crack.[1]: p.378

The possible uses of cold forming are extremely varied, including large flat sheets, complex folded shapes, metal tubes, screw heads and threads, riveted joints, and much more.

https://en.wikipedia.org/wiki/Cold_working

https://en.wikipedia.org/wiki/Wire_gauge

https://en.wikipedia.org/wiki/Wire

Pearlite is a two-phased, lamellar (or layered) structure composed of alternating layers of ferrite (87.5 wt%) and cementite (12.5 wt%) that occurs in some steels and cast irons. During slow cooling of an iron-carbon alloy, pearlite forms by a eutectoid reaction as austenite cools below 723 °C (1,333 °F) (the eutectoid temperature). Pearlite is a microstructure occurring in many common grades of steels.

https://en.wikipedia.org/wiki/Pearlite

https://en.wikipedia.org/wiki/Silver

https://en.wikipedia.org/wiki/Niobium%E2%80%93tin

https://en.wikipedia.org/wiki/Wire_wrap

Drawing is a metalworking process that uses tensile forces to elongate metal, glass, or plastic. As the material is drawn (pulled), it stretches and becomes thinner, achieving a desired shape and thickness. Drawing is classified into two types: sheet metal drawing and wire, bar, and tube drawing. Sheet metal drawing is defined as a plastic deformation over a curved axis. For wire, bar, and tube drawing, the starting stock is drawn through a die to reduce its diameter and increase its length. Drawing is usually performed at room temperature, thus classified as a cold working process; however, drawing may also be performed at higher temperatures to hot work large wires, rods, or hollow tubes in order to reduce forces.[1][2]

Drawing differs from rolling in that pressure is not applied by the turning action of a mill but instead depends on force applied locally near the area of compression. This means the maximal drawing force is limited by the tensile strength of the material, a fact particularly evident when drawing thin wires.[3]
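As a rough illustration of why the tensile strength caps the drawing force, the sketch below uses the textbook ideal-work model, an assumption not stated in the text above that ignores friction, redundant deformation and strain hardening. In that model the draw stress equals the flow stress times ln(A0/A1); since the wire leaving the die must not yield under that stress, ln(A0/A1) must stay below 1, which limits a single pass to roughly a 63% reduction in area. The function names are hypothetical.

```python
import math

# Upper bound on area reduction per pass in the ideal-work model: 1 - 1/e.
MAX_IDEAL_REDUCTION = 1.0 - math.exp(-1.0)


def area_reduction(d0, d1):
    """Fractional reduction in cross-sectional area of a round wire drawn from d0 to d1."""
    return 1.0 - (d1 / d0) ** 2


def ideal_draw_stress_ratio(d0, d1):
    """Draw stress divided by flow stress, ln(A0/A1), for a frictionless, non-hardening wire."""
    return math.log((d0 / d1) ** 2)


def pass_is_feasible(d0, d1):
    """True if the drawn wire could carry the ideal draw stress without yielding itself."""
    return ideal_draw_stress_ratio(d0, d1) < 1.0


print(area_reduction(2.0, 1.6), pass_is_feasible(2.0, 1.6))  # 36% reduction: feasible
print(area_reduction(2.0, 1.0), pass_is_feasible(2.0, 1.0))  # 75% reduction: not feasible
```

Friction in a real die raises the draw stress, so practical reductions per pass are smaller than this ideal bound, which is consistent with drawing wire through a series of dies rather than a single one.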

The starting point of cold drawing is hot-rolled stock of a suitable size.

https://en.wikipedia.org/wiki/Drawing_(manufacturing)

https://en.wikipedia.org/wiki/Gold

https://en.wikipedia.org/wiki/Overhead_line

https://en.wikipedia.org/wiki/Garrote

https://en.wikipedia.org/wiki/Wire_bonding

https://en.wikipedia.org/wiki/Ammeter

https://en.wikipedia.org/wiki/Electrical_wiring

https://en.wikipedia.org/wiki/Wire-frame_model

https://en.wikipedia.org/wiki/PC_strand

https://en.wikipedia.org/wiki/Draw_plate#Drawing_wire

https://en.wikipedia.org/wiki/Wheel#Wire

https://en.wikipedia.org/wiki/Horsecar

https://en.wikipedia.org/wiki/Twist-on_wire_connector

https://en.wikipedia.org/wiki/Ground_and_neutral

https://en.wikipedia.org/wiki/Three-phase_electric_power

https://en.wikipedia.org/wiki/Open_wire

https://en.wikipedia.org/wiki/Chicken_wire_(chemistry)

https://en.wikipedia.org/wiki/Chennai_Suburban_Railway

https://en.wikipedia.org/wiki/Copper_conductor

https://en.wikipedia.org/wiki/Communication_protocol#Wire_image

https://en.wikipedia.org/wiki/Ground_(electricity)

https://en.wikipedia.org/wiki/Circuit_diagram

https://en.wikipedia.org/wiki/Ferrite_bead

https://en.wikipedia.org/wiki/Keyboard_technology

https://en.wikipedia.org/wiki/Hot-wire_barretter

https://en.wikipedia.org/wiki/Tantalum

https://en.wikipedia.org/wiki/Die_(manufacturing)#Wire_pulling

https://en.wikipedia.org/wiki/Electronic_symbol

https://en.wikipedia.org/wiki/Spoke

https://en.wikipedia.org/wiki/Magnetic_field#Force_on_current-carrying_wire

https://en.wikipedia.org/wiki/Victor_Harbor_Horse_Drawn_Tram

https://en.wikipedia.org/wiki/Crown_Jewels_of_the_United_Kingdom

https://en.wikipedia.org/wiki/Artillery

https://en.wikipedia.org/wiki/Victorian_fashion

https://en.wikipedia.org/wiki/Bank

https://en.wikipedia.org/wiki/United_States_Marine_Corps#Capabilities

https://en.wikipedia.org/wiki/Anti-aircraft_warfare

https://en.wikipedia.org/wiki/Normandy_landings

https://en.wikipedia.org/wiki/Wiretapping

https://en.wikipedia.org/wiki/Beryllium_copper

https://en.wikipedia.org/wiki/Lemon_battery

https://en.wikipedia.org/wiki/Forrest_Gump

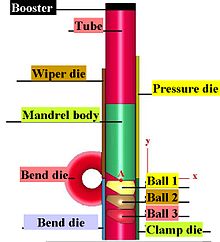

https://en.wikipedia.org/wiki/Tube_drawing

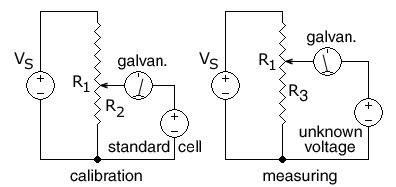

https://en.wikipedia.org/wiki/Potentiometer_(measuring_instrument)

https://en.wikipedia.org/wiki/Reaper-binder

https://en.wikipedia.org/wiki/Popeye

https://en.wikipedia.org/wiki/Russo-Ukrainian_War#A_stable_line_of_conflict_%282015%E2%80%932021%29

https://en.wikipedia.org/wiki/Electrical_resistivity_and_conductivity

https://en.wikipedia.org/wiki/MacOS

https://en.wikipedia.org/wiki/Godzilla

https://en.wikipedia.org/wiki/The_Machine_Stops

https://en.wikipedia.org/wiki/Elastigirl

https://en.wikipedia.org/wiki/Stranger_Things

https://en.wikipedia.org/wiki/Adobe_Photoshop

https://en.wikipedia.org/wiki/Shape-memory_alloy

https://en.wikipedia.org/wiki/Brokeback_Mountain

https://en.wikipedia.org/wiki/The_Lord_of_the_Rings_(film_series)

https://en.wikipedia.org/wiki/Computer-generated_imagery

https://en.wikipedia.org/wiki/1060_aluminium_alloy

https://en.wikipedia.org/wiki/Federal_Deposit_Insurance_Corporation

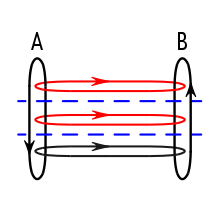

https://en.wikipedia.org/wiki/Inductive_coupling

https://en.wikipedia.org/wiki/A_Call_to_Spy

https://en.wikipedia.org/wiki/Plastic_extrusion

https://en.wikipedia.org/wiki/Golden_Temple

https://en.wikipedia.org/wiki/Dachau_concentration_camp

https://en.wikipedia.org/wiki/The_Good_Wife

https://en.wikipedia.org/wiki/On_the_Internet,_nobody_knows_you%27re_a_dog

https://en.wikipedia.org/wiki/Planar_graph

https://en.wikipedia.org/wiki/DreamWorks_Animation

https://en.wikipedia.org/wiki/Faraday_cage

https://en.wikipedia.org/wiki/New_World_Order_(conspiracy_theory)

https://en.wikipedia.org/wiki/Lapis_lazuli

https://en.wikipedia.org/wiki/Black_Panther_(film)

https://en.wikipedia.org/wiki/Amiga

https://en.wikipedia.org/wiki/Radiation

https://en.wikipedia.org/wiki/Organized_crime

https://en.wikipedia.org/wiki/Watch

https://en.wikipedia.org/wiki/Spike_(missile)

https://en.wikipedia.org/wiki/Hand_Drawn_Pressing

https://en.wikipedia.org/wiki/Voltage

https://en.wikipedia.org/wiki/Mockup

https://en.wikipedia.org/wiki/IEC_60309#Preferred_current_ratings_and_wire_gauges

https://en.wikipedia.org/wiki/Changes_in_Star_Wars_re-releases

https://en.wikipedia.org/wiki/Battle_of_Khe_Sanh

https://en.wikipedia.org/wiki/Sodium_chloride

https://en.wikipedia.org/wiki/Steven_Universe

https://en.wikipedia.org/wiki/Prehistoric_beads_in_the_Philippines#Drawn_Beads

https://en.wikipedia.org/wiki/List_of_Neon_Genesis_Evangelion_characters

https://en.wikipedia.org/wiki/Bob%27s_Burgers

https://en.wikipedia.org/wiki/Electric_field

https://en.wikipedia.org/wiki/Yosemite_National_Park

https://en.wikipedia.org/wiki/Joker_(character)

https://en.wikipedia.org/wiki/Second_American_Civil_War

https://en.wikipedia.org/wiki/The_Bone_Collector_(novel)

https://en.wikipedia.org/wiki/Brain_in_a_vat

https://en.wikipedia.org/wiki/Inter-Services_Intelligence

https://en.wikipedia.org/wiki/Lincoln_Cathedral

https://en.wikipedia.org/wiki/Uncanny_valley

https://en.wikipedia.org/wiki/Extrusion

https://en.wikipedia.org/wiki/Graph_theory

https://en.wikipedia.org/wiki/Carmel-by-the-Sea,_California

https://en.wikipedia.org/wiki/Comanche

https://en.wikipedia.org/wiki/Ranks_and_insignia_of_the_German_Army_(1935%E2%80%931945)

https://en.wikipedia.org/wiki/Film_industry

https://en.wikipedia.org/wiki/Ball_lightning

https://en.wikipedia.org/wiki/Mexican%E2%80%93American_War

https://en.wikipedia.org/wiki/Chester

https://en.wikipedia.org/wiki/Super_heavy-lift_launch_vehicle

https://en.wikipedia.org/wiki/Star_Wars_(film)

https://en.wikipedia.org/wiki/Hydrogen_sulfide

https://en.wikipedia.org/wiki/Violin

https://en.wikipedia.org/wiki/Battle_of_Dien_Bien_Phu

https://en.wikipedia.org/wiki/Reservation_Dogs

https://en.wikipedia.org/wiki/Daguerreotype

https://en.wikipedia.org/wiki/Street_Fighter

https://en.wikipedia.org/wiki/Armature_(electrical)

https://en.wikipedia.org/wiki/6060_aluminium_alloy

https://en.wikipedia.org/wiki/Modern_paganism

https://en.wikipedia.org/wiki/Earring

https://en.wikipedia.org/wiki/Metallic_fiber

https://en.wikipedia.org/wiki/Schematic

https://en.wikipedia.org/wiki/Thorium

https://en.wikipedia.org/wiki/Printed_circuit_board#Solder_resist_application

Solder mask, solder stop mask or solder resist is a thin lacquer-like layer of polymer that is usually applied to the copper traces of a printed circuit board (PCB) for protection against oxidation and to prevent solder bridges from forming between closely spaced solder pads. A solder bridge is an unintended electrical connection between two conductors by means of a small blob of solder. PCBs use solder masks to prevent this from happening. Solder mask is not always used for hand soldered assemblies, but is essential for mass-produced boards that are soldered automatically using reflow or wave soldering techniques. Once applied, openings must be made in the solder mask wherever components are soldered, which is accomplished using photolithography.[1] Solder mask is traditionally green, but is also available in many other colors.[2]

Solder mask comes in different media depending upon the demands of the application. The lowest-cost solder mask is epoxy liquid that is silkscreened through the pattern onto the PCB. Other types are the liquid photoimageable solder mask (LPSM or LPI) inks and dry-film photoimageable solder mask (DFSM). LPSM can be silkscreened or sprayed on the PCB, exposed to the pattern and developed to provide openings in the pattern for parts to be soldered to the copper pads. DFSM is vacuum-laminated on the PCB, then exposed and developed. All three processes typically go through a thermal cure of some type after the pattern is defined, although LPI solder masks are also available in ultraviolet (UV) curing formulations.

The solder stop layer on a flexible board is also called coverlay or coverfilm.[3]

In electronic design automation, the solder mask is treated as part of the layer stack of the printed circuit board, and is described in individual Gerber files for the top and bottom side of the PCB like any other layer (such as the copper and silk-screen layers).[4] Typical names for these layers include tStop/bStop (also known as STC/STS)[5][nb 1] or TSM/BSM (EAGLE), F.Mask/B.Mask (KiCad), StopTop/StopBot (TARGET), maskTop/maskBottom (Fritzing), SMT/SMB (OrCAD), MT.PHO/MB.PHO (PADS), LSMVS/LSMRS (WEdirekt)[6] or GTS/GBS (Gerber and many others[7]).
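Because the layer names vary between tools, a fabrication script often has to recognise solder mask files by extension. The following is a minimal, hypothetical Python lookup built only from the GTS/GBS and EAGLE STC/STS names listed above; real CAM packages may use other names, so it is a starting point rather than a complete table.

```python
from pathlib import Path

# Hypothetical mapping from Gerber file extension to board side, using only names from the text.
SOLDER_MASK_EXTENSIONS = {
    ".gts": "top",     # Gerber top solder mask
    ".gbs": "bottom",  # Gerber bottom solder mask
    ".stc": "top",     # EAGLE legacy: solder stop, component side
    ".sts": "bottom",  # EAGLE legacy: solder stop, solder side
}


def solder_mask_side(filename):
    """Return 'top', 'bottom', or None if the file is not a recognised solder mask layer."""
    return SOLDER_MASK_EXTENSIONS.get(Path(filename).suffix.lower())


for name in ("board.GTS", "board.sts", "board.gtl"):
    print(name, solder_mask_side(name))
```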

Notes

- The letters 'C' and 'S' in EAGLE's old Gerber filename extensions .STC/.STS for the top and bottom solder stop mask layers date from the time when printed circuit boards were typically populated with components on one side only, the so-called "component side" (top), as opposed to the "solder side" (bottom), where these components were soldered (at least in the case of through-hole components).

References

- "Gerber Output Options" (PDF). 1.3. Altium Limited. 2011-07-27 [2008-03-26, 2005-12-05]. Archived (PDF) from the original on 2022-08-29. Retrieved 2022-08-29.

Further reading

- Zühlke, Karin (2017-12-12). "Forschungsallianz Fela und Würth - "s.mask" - der erste Schritte zur digitalen Leiterplatte" [Research alliance Fela and Würth: "s.mask", the first steps towards the digital printed circuit board]. Elektroniknet.de (in German). Markt & Technik. Archived from the original on 2018-06-17.

https://en.wikipedia.org/wiki/Printed_circuit_board#Solder_resist_application

Solder resist application

Areas that should not be soldered may be covered with solder resist (solder mask). The solder mask is what gives PCBs their characteristic green color, although it is also available in several other colors, such as red, blue, purple, yellow, black and white. One of the most common solder resists used today is called "LPI" (liquid photoimageable solder mask).[48] A photo-sensitive coating is applied to the surface of the PWB, exposed to light through the solder mask image film, and finally developed, which washes away the unexposed areas. Dry film solder mask is similar to the dry film used to image the PWB for plating or etching; after being laminated to the PWB surface, it is imaged and developed in the same way as LPI. Another method, once common but no longer widely used because of its low accuracy and resolution, is to screen-print epoxy ink. In addition to repelling solder, solder resist also protects copper that would otherwise be exposed from the environment.

Legend / silkscreen

A legend (also known as silk or silkscreen) is often printed on one or both sides of the PCB. It contains the component designators, switch settings, test points and other indications helpful in assembling, testing, servicing, and sometimes using the circuit board.

There are three methods to print the legend:

- Silkscreen printing epoxy ink was the established method, resulting in the alternative name.

- Liquid photo imaging is a more accurate method than screen printing.

- Ink jet printing is increasingly used. Ink jet can print variable data, unique to each PWB unit, such as text or a bar code with a serial number.

Bare-board test

Boards with no components installed are usually bare-board tested for "shorts" and "opens". This is called electrical test or PCB e-test. A short is a connection between two points that should not be connected. An open is a missing connection between points that should be connected. For high-volume production, a fixture such as a "bed of nails" in a rigid needle adapter makes contact with copper lands on the board. The fixture or adapter is a significant fixed cost and this method is only economical for high-volume or high-value production. For small or medium volume production flying probe testers are used where test probes are moved over the board by an XY drive to make contact with the copper lands. There is no need for a fixture and hence the fixed costs are much lower. The CAM system instructs the electrical tester to apply a voltage to each contact point as required and to check that this voltage appears on the appropriate contact points and only on these.
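The comparison the tester performs can be sketched as a connectivity check. The Python below is a minimal illustration, not how any particular CAM system or tester works: it assumes the measurements have been reduced to a list of point pairs found to be connected, groups points with a union-find structure, and reports nets that ended up joined (shorts) and nets split into pieces (opens). All names are hypothetical.

```python
def _find(parent, x):
    # Path-halving find for the union-find structure.
    while parent[x] != x:
        parent[x] = parent[parent[x]]
        x = parent[x]
    return x


def check_bare_board(netlist, measured_pairs):
    """Compare measured continuity with the design netlist.

    netlist: dict mapping net name -> set of contact-point names (design intent).
    measured_pairs: iterable of (point_a, point_b) that the tester found connected.
    Returns (shorts, opens): shorts is a sorted list of sorted lists of nets that
    are joined together; opens is a sorted list of nets split into several pieces.
    """
    net_of = {p: net for net, pts in netlist.items() for p in pts}
    parent = {p: p for p in net_of}
    for a, b in measured_pairs:
        ra, rb = _find(parent, a), _find(parent, b)
        if ra != rb:
            parent[rb] = ra

    # Nets that share one connected component are shorted together.
    nets_in_component = {}
    for p, net in net_of.items():
        nets_in_component.setdefault(_find(parent, p), set()).add(net)
    shorts = sorted(sorted(nets) for nets in nets_in_component.values() if len(nets) > 1)

    # A net whose points fall into more than one component is open.
    opens = sorted(
        net for net, pts in netlist.items()
        if len({_find(parent, p) for p in pts}) > 1
    )
    return shorts, opens


netlist = {"GND": {"A", "B"}, "VCC": {"C", "D"}, "SIG": {"E", "F"}}
measured = [("A", "B"), ("C", "D"), ("D", "E")]
print(check_bare_board(netlist, measured))  # ([['SIG', 'VCC']], ['SIG'])
```

In this example point E is wrongly connected to the VCC net (a short) and is no longer reachable from F on its own net (an open).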

https://en.wikipedia.org/wiki/Printed_circuit_board#Solder_resist_application


https://en.wikipedia.org/wiki/Rigid_needle_adapter

https://en.wikipedia.org/wiki/Reference_designator

https://en.wikipedia.org/wiki/Through-hole_technology

https://en.wikipedia.org/wiki/Surface-mount_technology

https://en.wikipedia.org/wiki/Pick-and-place_machine

https://en.wikipedia.org/wiki/Reflow_oven

https://en.wikipedia.org/wiki/Automated_optical_inspection

https://en.wikipedia.org/wiki/Non-volatile_memory

https://en.wikipedia.org/wiki/Boundary_scan

https://en.wikipedia.org/wiki/Desoldering

https://en.wikipedia.org/wiki/Conformal_coating

https://en.wikipedia.org/wiki/Antistatic_bag

https://en.wikipedia.org/wiki/Fuze


https://en.wikipedia.org/wiki/Monoboard

https://en.wikipedia.org/wiki/Prototype#Electronics_prototyping

https://en.wikipedia.org/wiki/Lab-on-a-chip

https://en.wikipedia.org/wiki/Electronic_waste

https://en.wikipedia.org/wiki/Restriction_of_Hazardous_Substances_Directive

https://en.wikipedia.org/wiki/Breadboard

https://en.wikipedia.org/wiki/Microphonics

https://en.wikipedia.org/wiki/Multi-chip_module

https://en.wikipedia.org/wiki/Occam_process

https://en.wikipedia.org/wiki/Point-to-point_construction

A stamped circuit board (SCB) is used to mechanically support and electrically connect electronic components using conductive pathways, tracks or traces etched from copper sheets laminated onto a non-conductive substrate. This technology is used for small circuits, for instance in the production of LEDs.[1]

As with printed circuit boards, this layer structure may comprise glass-fibre reinforced epoxy resin and copper. In the case of LED substrates, there are basically three possible variations:

- the PCB (printed circuit board),

- plastic-injection molding and

- the SCB.

Using SCB technology, it is possible to structure and laminate a very wide range of material combinations in a reel-to-reel production process.[2] Because the layers are structured separately, improved design concepts can be implemented; as a result, heat is dissipated from within the chip far better and more quickly.

https://en.wikipedia.org/wiki/Stamped_circuit_board

Stripboard is the generic name for a widely used type of electronics prototyping material for circuit boards, characterized by a pre-formed regular (rectangular) grid of holes at a 0.1 inch (2.54 mm) pitch, with wide parallel strips of copper cladding running in one direction all the way across one side of an insulating bonded paper board. It is also commonly known by the name of the original product, Veroboard, which is a trademark, in the UK, of British company Vero Technologies Ltd and Canadian company Pixel Print Ltd. It was originated and developed in the early 1960s by the Electronics Department of Vero Precision Engineering Ltd (VPE). It was introduced as a general-purpose material for constructing electronic circuits; it differs from purpose-designed printed circuit boards (PCBs) in that a variety of electronic circuits may be constructed using a standard wiring board.[citation needed]

In using the board, breaks are made in the tracks, usually around holes, to divide the strips into multiple electrical nodes. With care, it is possible to break between holes to allow for components that have two pin rows only one position apart such as twin row headers for IDCs.
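To make the idea of dividing a strip into nodes concrete, here is a hypothetical Python helper that models one copper strip as a row of hole positions. It assumes two kinds of break: a cut at a hole, which removes the copper around that hole so the hole belongs to no node, and a cut between two adjacent holes, as described above.

```python
def strip_nodes(n_holes, cut_holes=(), cut_between=()):
    """Split one stripboard track of n_holes holes into electrically separate nodes.

    cut_holes: hole indices (0-based) where the copper around the hole is removed.
    cut_between: indices i where the track is severed between hole i and hole i + 1.
    Returns a list of nodes, each a list of the hole indices it connects.
    """
    nodes, current = [], []
    for hole in range(n_holes):
        if hole in cut_holes:
            # This hole is isolated, so the node being built ends here.
            if current:
                nodes.append(current)
            current = []
            continue
        current.append(hole)
        if hole in cut_between:
            # Track severed after this hole: start a new node.
            nodes.append(current)
            current = []
    if current:
        nodes.append(current)
    return nodes


print(strip_nodes(10, cut_holes={4}))   # [[0, 1, 2, 3], [5, 6, 7, 8, 9]]
print(strip_nodes(6, cut_between={2}))  # [[0, 1, 2], [3, 4, 5]]
```

A cut between holes costs no hole position, which is why it suits two-row headers whose rows are only one position apart.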

Stripboard is not designed for surface-mount components, though it is possible to mount many such components on the track side, particularly if tracks are cut/shaped with a knife or small cutting disc in a rotary tool.

The first single-size Veroboard product was the forerunner of the numerous types of prototype wiring board which, with worldwide use over five decades, have become known as stripboard.[citation needed]

The generic terms 'veroboard' and 'stripboard' are now taken to be synonymous.[citation needed]

https://en.wikipedia.org/wiki/Stripboard

https://en.wikipedia.org/wiki/Wire_wrap

Conductive ink is an ink that results in a printed object which conducts electricity. It is typically created by infusing graphite or other conductive materials into ink.[1] With the emergence of nanotechnology, there has been growing interest in replacing metallic materials with nanomaterials. Among these, graphene- and carbon nanotube-based conductive inks are gaining immense popularity due to their high electrical conductivity and high surface area.[2] Recently, more attention has been paid to eco-friendly conductive inks that use water as the solvent rather than organic solvents, which are harmful to the environment. However, the high surface tension of water limits their applicability. Various natural and synthetic surfactants are now used to reduce the surface tension of water and ensure uniform dispersion of the nanomaterials, for smooth printing and wide application.[3]

Silver inks have multiple uses today, including printing the RFID tags used in modern transit tickets and improvising or repairing circuits on printed circuit boards. Computer keyboards contain membranes with printed circuits that sense when a key is pressed. Windshield defrosters consisting of resistive traces applied to the glass are also printed.


References

- Khan, Junaid; Mariatti, M. (20 November 2022). "Effect of natural surfactant on the performance of reduced graphene oxide conductive ink". Journal of Cleaner Production. 376: 134254. doi:10.1016/j.jclepro.2022.134254. ISSN 0959-6526. S2CID 252524219.

https://en.wikipedia.org/wiki/Conductive_ink

Teledeltos paper is an electrically conductive paper. It is formed by a coating of carbon on one side of a sheet of paper, giving one black and one white side. Western Union developed Teledeltos paper in the late 1940s (several decades after it was already in use for mathematical modelling) for use in spark printer based fax machines and chart recorders.[1]

Teledeltos paper has several uses within engineering that are far removed from its original use in spark printers. Many of these use the paper to model the distribution of electric and other scalar fields.

Use

Teledeltos provides a sheet of uniform resistive material with isotropic resistivity. As it is cheap and easily cut to shape, it may be used to make one-off resistors of any shape needed. The paper backing also forms a convenient insulator from the bench. These resistors are usually made to represent or model some real-world example of a two-dimensional scalar field, where it is necessary to study the field's distribution. This field may be an electric field, or some other field following the same linear distribution rules.

The resistivity of Teledeltos is around 6 kilohms / square.[2][i] This is low enough that it may be used with safe low voltages, yet high enough that the currents remain low, avoiding problems with contact resistance.

Connections are made to the paper by painting on areas of silver-loaded conductive paint and attaching wires to these, usually with spring clips.[2][3] Each painted area has a low resistivity (relative to the carbon) and so may be assumed to be at a constant voltage. With the voltages applied, the current flow through the sheet will emulate the field distribution. Voltages may be measured within the sheet by applying a voltmeter probe (relative to one of the known electrodes) or current flows may be measured. As the sheet's resistivity is constant, the simplest way to measure a current flow is to use a small two-probe voltmeter to measure the voltage difference between the probes. As their spacing is known, and the resistivity, the resistance between them and (by Ohm's law) the current flow can be easily determined.
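The arithmetic behind these measurements can be sketched in a few lines of Python, using the approximate 6 kΩ/square figure quoted above. A strip of length L and width W between end electrodes contains L/W squares in series, so R = Rs · L / W. For two nearby probes, assuming they lie along the current flow and the field between them is roughly uniform, the local sheet current per unit width is (ΔV / d) / Rs. The function names and example dimensions are illustrative.

```python
# Approximate Teledeltos sheet resistance quoted in the text (ohms per square).
RS_OHMS_PER_SQUARE = 6_000.0


def strip_resistance(length_m, width_m, rs=RS_OHMS_PER_SQUARE):
    """Resistance between electrodes painted across the two ends of a rectangular strip.

    The strip holds length/width 'squares' in series, so R = Rs * L / W,
    independent of the absolute size of the strip.
    """
    return rs * length_m / width_m


def current_per_width(delta_v, probe_spacing_m, rs=RS_OHMS_PER_SQUARE):
    """Sheet current density (A per metre of width) between two nearby probes.

    Assumes the probes lie along the direction of current flow and the field is
    roughly uniform between them: E = dV / d, and J_s = E / Rs.
    """
    return (delta_v / probe_spacing_m) / rs


# A 200 mm by 50 mm strip is 4 squares, about 24 kilohms; at 12 V it carries about 0.5 mA.
r = strip_resistance(0.200, 0.050)
print(r, 12.0 / r)
```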

In some cases the surrounding field is assumed to be 'infinite', which would require an infinite sheet of Teledeltos. Provided that the sheet is merely 'large' in comparison to the experimental area, a sheet of finite size is sufficient for most experimental practice.[3]

Field plotting

The basic technique for plotting a field is first to construct a model of the independent variables, then to use voltmeter probes to measure the dependent variables. Typically this means applying known voltages at certain points, then measuring voltages and currents within the model. The two basic approaches are either to apply electrodes and a voltage at known points within a large sheet of Teledeltos (modelling an infinite field) or to cut a shape from Teledeltos and then apply voltages to its edges (modelling a bounded field).[2][3] In practice, electric field models tend to be infinite and thermal models tend to be bounded.
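A resistive sheet with fixed-voltage electrodes physically solves Laplace's equation, which is also what later numerical methods compute. As a hedged numerical analogue of a bounded-field model (not part of the Teledeltos technique itself), the Python sketch below relaxes Laplace's equation on a rectangular grid by Gauss-Seidel iteration: the left and right columns stand for painted electrodes, and the top and bottom edges are cut paper edges through which no current flows. The grid size and function name are illustrative; modelling an arbitrary cut shape would need an extra mask of "no paper" cells.

```python
def relax_sheet(nx, ny, v_left, v_right, iterations=5000):
    """Gauss-Seidel relaxation of Laplace's equation on an nx-by-ny grid (ny >= 2).

    The left and right columns are electrodes held at v_left and v_right.
    The top and bottom edges are insulating, modelled by mirroring the
    neighbouring interior row (zero current across the edge).
    Returns a list of rows of node voltages.
    """
    mid = 0.5 * (v_left + v_right)
    v = [[mid] * nx for _ in range(ny)]
    for row in v:
        row[0], row[-1] = v_left, v_right
    for _ in range(iterations):
        for j in range(ny):
            # Mirror across an insulating edge instead of using a missing neighbour.
            up = j - 1 if j > 0 else j + 1
            down = j + 1 if j < ny - 1 else j - 1
            for i in range(1, nx - 1):
                v[j][i] = 0.25 * (v[j][i - 1] + v[j][i + 1] + v[up][i] + v[down][i])
    return v


# For a plain strip the potential should come out linear between the electrodes.
field = relax_sheet(nx=11, ny=5, v_left=0.0, v_right=10.0)
print([round(x, 3) for x in field[2]])
```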

Modelling fields by analogy

Although the modelling of electric fields is itself directly useful in some fields such as thermionic valve design,[4] the main practical use of the broader technique is for modelling fields of other quantities. This technique may be applied to any field that follows the same linear rules as Ohm's law for bulk resistivity. This includes heat flow, some optics and some aspects of Newtonian mechanics. It is not usually applicable to fluid dynamics, owing to viscosity and compressibility effects, or to high-intensity optics where non-linear effects become apparent. It may be applicable to some mechanical problems involving homogeneous and isotropic materials such as metals, but not to composites.

Before the use of Teledeltos, a similar technique had been used for modelling gas flows, where a shallow tray of copper sulphate solution was used as the medium, with copper electrodes at each side. Barriers within the model could be sculpted from wax. Being a liquid, this was far less convenient. Stanley Hooker describes its use pre-war, although he also notes that compressibility effects could be modelled in this way, by sculpting the base of the tank to give additional depth and thus conductivity locally.[5]

One of the most important applications is for thermal modelling. Voltage is the analog of temperature and current flow that of heat flow. If the boundaries of a heatsink model are both painted with conductive paint to form two separate electrodes, each may be held at a voltage to represent the temperatures of some internal heat source (such as a microprocessor chip) and the external ambient temperature. Potentials within the heatsink represent internal temperatures and current flows represent heat flow. In many cases the internal heat source may be modelled with a constant current source, rather than a voltage, giving a better analogy of power loss as heat, rather than assuming a simple constant temperature. If the external airflow is restricted, the 'ambient' electrode may be subdivided and each section connected to a common voltage supply through a resistor or current limiter, representing the proportionate or maximum heatflow capacity of that airstream.

As heatsinks are commonly manufactured from extruded aluminium sections, the two-dimensional paper is not usually a serious limitation. In some cases, such as pistons for internal combustion engines, three-dimensional modelling may be required. This has been performed, in a manner analogous to Teledeltos paper, by using volume tanks of a conductive electrolyte.[6]

This thermal modelling technique is useful in many branches of mechanical engineering such as heatsink or radiator design and die casting.[7]
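The analogy can be turned into numbers with two conversions, sketched here in Python under stated assumptions. Probe voltages map linearly onto temperatures between the two electrode temperatures. A measured model resistance gives the number of "squares" between the electrodes, which depends only on shape, so for purely two-dimensional conduction in a plate of uniform thickness t and thermal conductivity k the thermal resistance is squares / (k · t). The conductivity of about 200 W/(m·K) used for aluminium in the example is an illustrative assumption, as are the function names.

```python
SHEET_RS = 6_000.0  # ohms per square, the approximate Teledeltos value quoted in the text


def voltage_to_temperature(v, v_ambient, v_source, t_ambient, t_source):
    """Map a probe voltage linearly onto temperature (voltage is the analog of temperature)."""
    fraction = (v - v_ambient) / (v_source - v_ambient)
    return t_ambient + fraction * (t_source - t_ambient)


def thermal_resistance(r_model_ohms, k_w_per_m_k, thickness_m, rs=SHEET_RS):
    """Convert a measured model resistance into a thermal resistance in K/W.

    Assumes purely two-dimensional conduction in a plate of uniform thickness:
    squares = R / Rs depends only on the shape, and R_th = squares / (k * t).
    """
    squares = r_model_ohms / rs
    return squares / (k_w_per_m_k * thickness_m)


# Midway in voltage between a 25 C ambient and an 85 C source reads as 55 C.
print(voltage_to_temperature(5.0, 0.0, 10.0, 25.0, 85.0))
# 24 kilohms is 4 squares; in a 2 mm plate with k = 200 W/(m K) that is about 10 K/W.
print(thermal_resistance(24_000, 200.0, 0.002))
```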

The development of computational modelling and finite element analysis has reduced the use of Teledeltos, such that the technique is now obscure and the materials can be hard to obtain.[2] Its use is still highly valuable in teaching, as the technique gives a very obvious method for measuring fields and offers immediate feedback as the shape of an experimental setup is changed, encouraging a more fundamental understanding.[3][4]

Sensors

Teledeltos can also be used to make sensors, either directly as an embedded resistive element or indirectly, as part of their design process.

Resistive sensors

A piece of Teledeltos with conductive electrodes at each end makes a simple resistor. Its resistance is slightly sensitive to applied mechanical strain by bending or compression, but the paper substrate is not robust enough to make a reliable sensor for long-term use.

A more common resistive sensor is in the form of a potentiometer. A long, thin resistor with an applied voltage may have a conductive probe slid along its surface. The voltage at the probe depends on its position between the two end contacts. Such a sensor may form the keyboard for a simple electronic musical instrument like a Tannerin or Stylophone.

A similar linear sensor uses two strips of Teledeltos, placed face to face. Pressure on the back of one (finger pressure is enough) presses the two conductive faces together to form a lower resistance contact. This may be used in similar potentiometric fashion to the conductive probe, but without requiring the special probe. This may be used as a classroom demonstration for another electronic musical instrument, with a ribbon controller keyboard, such as the Monotron. If crossed electrodes are used on each piece of Teledeltos, a two-dimensional resistive touchpad may be demonstrated.
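Reading such sensors reduces to the potentiometer relation: with one end of a uniform strip at 0 V and the other at the supply voltage, and a voltmeter that draws negligible current, the probe voltage rises linearly with position. The Python below is a hypothetical sketch of that readout, a simple quantisation into keys, and the two-step reading of a crossed-electrode touchpad in which the supply is applied along one sheet while the other sheet acts as the probe, then the roles are swapped.

```python
def probe_position(v_probe, v_supply, length):
    """Position along an ideal uniform resistive strip inferred from the probe voltage."""
    return length * v_probe / v_supply


def key_index(v_probe, v_supply, n_keys):
    """Quantise the strip into n_keys equal zones, as a simple keyboard might (hypothetical)."""
    k = int(n_keys * v_probe / v_supply)
    return min(max(k, 0), n_keys - 1)  # clamp the reading at the very ends of the strip


def touch_xy(v_x, v_y, v_supply, width, height):
    """Two-dimensional touchpad read in two steps (hypothetical scheme).

    v_x is read while the supply is applied across the x-direction sheet,
    v_y while it is applied across the y-direction sheet.
    """
    return probe_position(v_x, v_supply, width), probe_position(v_y, v_supply, height)


print(probe_position(2.5, 5.0, 0.3))        # halfway along a 0.3 m strip
print(key_index(2.5, 5.0, 12))              # zone 6 of 12
print(touch_xy(1.0, 4.0, 5.0, 0.2, 0.1))    # roughly (0.04, 0.08)
```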

Capacitive sensors

Although Teledeltos is not used to manufacture capacitive sensors, its field modelling abilities also allow it to be used to determine the capacitance of arbitrarily shaped electrodes during sensor design.[2]
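One way to see how a resistance measurement yields a capacitance is the standard resistance-capacitance analogy, stated here as an assumption rather than as the method of the cited source. For long electrodes whose cross-section is cut from the sheet, the resistance is R = Rs · S and the capacitance per unit length is C' = ε / S, with the same dimensionless shape factor S, so C' = ε · Rs / R. For parallel plates of width w and gap d, S = d / w and this reproduces C' = ε w / d. A minimal Python sketch, assuming a uniform dielectric:

```python
EPSILON_0 = 8.8541878128e-12  # vacuum permittivity, F/m


def capacitance_per_length(r_model_ohms, rs_ohms_per_sq, eps_r=1.0):
    """Capacitance per unit length (F/m) of long electrodes with the modelled cross-section.

    Resistance R = Rs * S and capacitance per length C' = eps / S share the
    shape factor S, so C' = eps * Rs / R. Assumes a uniform dielectric and
    electrodes long compared with their spacing.
    """
    shape_factor = r_model_ohms / rs_ohms_per_sq
    return EPSILON_0 * eps_r / shape_factor


# A 12 kilohm reading on a 6 kilohm/square sheet means S = 2: about 4.4 pF per metre in air.
print(capacitance_per_length(12_000, 6_000))
```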


Notes

- Note that the resistivity units for a two-dimensional sheet are ohms per square (Ω/□), not the ohm-metres (Ω⋅m) that would be used for the resistivity of a bulk resistor.

References

- Jorstad, John L. (September 2006). "Aluminum Future Technology in Die Casting" (PDF). Die Casting Engineering: 19. Archived (PDF) from the original on 2011-06-14.

https://en.wikipedia.org/wiki/Teledeltos

Category:Analog computers

Subcategories

This category has only the following subcategory.

- Optical bombsights (10 pages)

Pages in category "Analog computers"

The following 55 pages are in this category, out of 55 total.


https://en.wikipedia.org/wiki/Category:Analog_computers

An analog computer or analogue computer is a type of computer that uses the continuous variation aspect of physical phenomena such as electrical, mechanical, or hydraulic quantities (analog signals) to model the problem being solved. In contrast, digital computers represent varying quantities symbolically and by discrete values of both time and amplitude (digital signals).

Analog computers can have a very wide range of complexity. Slide rules and nomograms are the simplest, while naval gunfire control computers and large hybrid digital/analog computers were among the most complicated.[1] Complex mechanisms for process control and protective relays used analog computation to perform control and protective functions.

Analog computers were widely used in scientific and industrial applications even after the advent of digital computers, because at the time they were typically much faster. They started to become obsolete as early as the 1950s and 1960s, although they remained in use in some specific applications, such as aircraft flight simulators, the flight computer in aircraft, and the teaching of control systems in universities. Perhaps the most relatable example of an analog computer is the mechanical watch, in which the continuous and periodic rotation of interlinked gears drives the second, minute and hour hands. More complex applications, such as aircraft flight simulators and synthetic-aperture radar, remained the domain of analog computing (and hybrid computing) well into the 1980s, since digital computers were insufficient for the task.[2]

Timeline of analog computers

Precursors

This is a list of examples of early computation devices considered precursors of the modern computers. Some of them may even have been dubbed 'computers' by the press, though they may fail to fit modern definitions.

The Antikythera mechanism, a type of device used to determine the positions of heavenly bodies known as an orrery, was described as an early mechanical analog computer by British physicist, information scientist, and historian of science Derek J. de Solla Price.[3] It was discovered in 1901, in the Antikythera wreck off the Greek island of Antikythera, between Kythera and Crete, and has been dated to c. 150–100 BC, during the Hellenistic period. Devices of a level of complexity comparable to that of the Antikythera mechanism would not reappear until a thousand years later.

Many mechanical aids to calculation and measurement were constructed for astronomical and navigation use. The planisphere was first described by Ptolemy in the 2nd century AD. The astrolabe was invented in the Hellenistic world in either the 1st or 2nd century BC and is often attributed to Hipparchus. A combination of the planisphere and dioptra, the astrolabe was effectively an analog computer capable of working out several different kinds of problems in spherical astronomy. An astrolabe incorporating a mechanical calendar computer[4][5] and gear-wheels was invented by Abi Bakr of Isfahan, Persia, in 1235.[6] Abū Rayhān al-Bīrūnī invented the first mechanical geared lunisolar calendar astrolabe,[7] an early fixed-wired knowledge processing machine[8] with a gear train and gear-wheels,[9] c. AD 1000.

The sector, a calculating instrument used for solving problems in proportion, trigonometry, multiplication and division, and for various functions, such as squares and cube roots, was developed in the late 16th century and found application in gunnery, surveying and navigation.

The planimeter was a manual instrument to calculate the area of a closed figure by tracing over it with a mechanical linkage.

The slide rule was invented around 1620–1630, shortly after the publication of the concept of the logarithm. It is a hand-operated analog computer for doing multiplication and division. As slide rule development progressed, added scales provided reciprocals, squares and square roots, cubes and cube roots, as well as transcendental functions such as logarithms and exponentials, circular and hyperbolic trigonometry and other functions. Aviation is one of the few fields where slide rules are still in widespread use, particularly for solving time–distance problems in light aircraft.

In 1831–1835, mathematician and engineer Giovanni Plana devised a perpetual-calendar machine, which, through a system of pulleys and cylinders, could predict the perpetual calendar for every year from AD 0 (that is, 1 BC) to AD 4000, keeping track of leap years and varying day length.[10]

The tide-predicting machine invented by Sir William Thomson in 1872 was of great utility to navigation in shallow waters. It used a system of pulleys and wires to automatically calculate predicted tide levels for a set period at a particular location.
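The summation such a machine performs can be sketched in a few lines of Python. This is a minimal illustration, not a model of Thomson's mechanism: the constituent amplitudes, phases, and mean level below are hypothetical, and only the periods roughly match well-known lunar and solar tidal constituents.

```python
import math

# Hypothetical harmonic constituents: (amplitude in metres, period in hours, phase in radians).
# A real machine was set up from constants measured at one location; these values are
# illustrative, and only the periods roughly match familiar lunar and solar constituents.
CONSTITUENTS = [
    (1.20, 12.42, 0.0),   # about the principal lunar semidiurnal period
    (0.40, 12.00, 0.8),   # about the principal solar semidiurnal period
    (0.15, 23.93, 1.9),   # about a diurnal period
]


def predicted_height(t_hours, mean_level=2.0, constituents=CONSTITUENTS):
    """Add up cosine terms, as the machine's wire summed the motions of its pulleys."""
    return mean_level + sum(
        amp * math.cos(2 * math.pi * t_hours / period + phase)
        for amp, period, phase in constituents
    )


# Tabulate one day of predictions at 3-hour intervals.
for t in range(0, 25, 3):
    print(f"{t:2d} h: {predicted_height(t):.2f} m")
```

Each constituent corresponds to one crank-driven pulley on the machine; adding a constituent here is just adding a tuple to the list.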

The differential analyser, a mechanical analog computer designed to solve differential equations by integration, used wheel-and-disc mechanisms to perform the integration. In 1876 James Thomson had already discussed the possible construction of such calculators, but he had been stymied by the limited output torque of the ball-and-disk integrators. A number of similar systems followed, notably those of the Spanish engineer Leonardo Torres y Quevedo, who built several machines for solving real and complex roots of polynomials; and Michelson and Stratton, whose Harmonic Analyser performed Fourier analysis, but using an array of 80 springs rather than Kelvin integrators. This work led to the mathematical understanding of the Gibbs phenomenon of overshoot in Fourier representation near discontinuities.[11] In a differential analyzer, the output of one integrator drove the input of the next integrator, or a graphing output. The torque amplifier was the advance that allowed these machines to work. Starting in the 1920s, Vannevar Bush and others developed mechanical differential analyzers.

Modern era

The Dumaresq was a mechanical calculating device invented around 1902 by Lieutenant John Dumaresq of the Royal Navy. It was an analog computer that related vital variables of the fire control problem to the movement of one's own ship and that of a target ship. It was often used with other devices, such as a Vickers range clock, to generate range and deflection data so the gun sights of the ship could be continuously set. As development proceeded, increasingly complex versions of the Dumaresq were produced.

By 1912, Arthur Pollen had developed an electrically driven mechanical analog computer for fire-control systems, based on the differential analyser. It was used by the Imperial Russian Navy in World War I.[citation needed][12]

Starting in 1929, AC network analyzers were constructed to solve calculation problems related to electrical power systems that were too large to solve with numerical methods at the time.[13] These were essentially scale models of the electrical properties of the full-size system. Since network analyzers could handle problems too large for analytic methods or hand computation, they were also used to solve problems in nuclear physics and in the design of structures. More than 50 large network analyzers were built by the end of the 1950s.

World War II era gun directors, gun data computers, and bomb sights used mechanical analog computers. In 1942 Helmut Hölzer built a fully electronic analog computer at Peenemünde Army Research Center[14][15][16] as an embedded control system (mixing device) to calculate V-2 rocket trajectories from the accelerations and orientations (measured by gyroscopes) and to stabilize and guide the missile.[17][18] Mechanical analog computers were very important in gun fire control in World War II, the Korean War and well past the Vietnam War; they were made in significant numbers.

In the period 1930–1945 in the Netherlands, Johan van Veen developed an analogue computer to calculate and predict tidal currents when the geometry of the channels is changed. Around 1950, this idea was developed into the Deltar, a hydraulic analogy computer supporting the closure of estuaries in the southwest of the Netherlands (the Delta Works).

The FERMIAC was an analog computer invented by physicist Enrico Fermi in 1947 to aid in his studies of neutron transport.[19] Project Cyclone was an analog computer developed by Reeves in 1950 for the analysis and design of dynamic systems.[20] Project Typhoon was an analog computer developed by RCA in 1952. It consisted of over 4,000 electron tubes and used 100 dials and 6,000 plug-in connectors to program.[21] The MONIAC Computer was a hydraulic analogy of a national economy first unveiled in 1949.[22]

Computer Engineering Associates was spun out of Caltech in 1950 to provide commercial services using the "Direct Analogy Electric Analog Computer" ("the largest and most impressive general-purpose analyzer facility for the solution of field problems") developed there by Gilbert D. McCann, Charles H. Wilts, and Bart Locanthi.[23][24]

Educational analog computers illustrated the principles of analog calculation. The Heathkit EC-1, a $199 educational analog computer, was made by the Heath Company, US, c. 1960.[25] It was programmed using patch cords that connected nine operational amplifiers and other components.[26] General Electric also marketed an "educational" analog computer kit of simple design in the early 1960s, consisting of two transistor tone generators and three potentiometers wired so that the frequency of the oscillator was nulled when the potentiometer dials were positioned by hand to satisfy an equation. The relative resistances of the potentiometers then represented the quantities in the equation being solved. Multiplication or division could be performed, depending on which dials were inputs and which was the output. Accuracy and resolution were limited, and a simple slide rule was more accurate; however, the unit did demonstrate the basic principle.

Analog computer designs were published in electronics magazines. One example is the PEAC (Practical Electronics analogue computer), published in the January 1968 edition of Practical Electronics.[27] A more modern hybrid computer design was published in Everyday Practical Electronics in 2002.[28] One example described for the EPE hybrid computer was the simulated flight of a VTOL aircraft such as the Harrier jump jet.[28] The altitude and speed of the aircraft were calculated by the analog part of the computer and sent to a PC via a digital microprocessor for display on the PC screen.

In industrial process control, analog loop controllers were used to automatically regulate temperature, flow, pressure, or other process conditions. The technology of these controllers ranged from purely mechanical integrators, through vacuum-tube and solid-state devices, to emulation of analog controllers by microprocessors.

Electronic analog computers

The mathematical similarity between linear mechanical components, such as springs and dashpots (viscous-fluid dampers), and electrical components, such as capacitors, inductors, and resistors, is striking: they can be modeled using equations of the same form.
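For example, under the commonly used force–voltage analogy (one of several possible mappings), a damped mass-spring system driven by a force and a series RLC circuit driven by a voltage obey equations of identical form, with mass $m$ corresponding to inductance $L$, damping coefficient $d$ to resistance $R$, spring constant $c$ to reciprocal capacitance $1/C$, displacement $y$ to charge $q$, and force $F$ to voltage $V$:

```latex
m\ddot{y} + d\dot{y} + c\,y = F(t)
\qquad\Longleftrightarrow\qquad
L\ddot{q} + R\dot{q} + \frac{1}{C}\,q = V(t)
```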

However, the difference between these systems is what makes analog computing useful. Complex systems often are not amenable to pen-and-paper analysis, and require some form of testing or simulation. Complex mechanical systems, such as suspensions for racing cars, are expensive to fabricate and hard to modify. And taking precise mechanical measurements during high-speed tests adds further difficulty.

By contrast, it is very inexpensive to build an electrical equivalent of a complex mechanical system, to simulate its behavior. Engineers arrange a few operational amplifiers (op amps) and some passive linear components to form a circuit that follows the same equations as the mechanical system being simulated. All measurements can be taken directly with an oscilloscope. In the circuit, the (simulated) stiffness of the spring, for instance, can be changed by adjusting the parameters of an integrator. The electrical system is an analogy to the physical system, hence the name, but it is much less expensive than a mechanical prototype, much easier to modify, and generally safer.

The electronic circuit can also be made to run faster or slower than the physical system being simulated. Experienced users of electronic analog computers said that they offered a comparatively intimate control and understanding of the problem, relative to digital simulations.

Electronic analog computers are especially well-suited to representing situations described by differential equations. Historically, they were often used when a system of differential equations proved very difficult to solve by traditional means. As a simple example, the dynamics of a spring-mass system can be described by the equation $m\ddot{y} + d\dot{y} + cy = mg$, with $y$ as the vertical position of a mass $m$, $d$ the damping coefficient, $c$ the spring constant and $g$ the gravity of Earth. For analog computing, the equation is programmed as $\ddot{y} = -\frac{d}{m}\dot{y} - \frac{c}{m}y + g$. The equivalent analog circuit consists of two integrators for the state variables $-\dot{y}$ (speed) and $y$ (position), one inverter, and three potentiometers.
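The two-integrator structure can be imitated numerically. The sketch below is only an illustration under stated assumptions: the parameter values are hypothetical, sign inversions of real op-amp integrators are ignored (so the code carries $\dot{y}$ rather than $-\dot{y}$), and a small fixed time step (semi-implicit Euler) stands in for the continuous integration an analog machine performs.

```python
def simulate_spring_mass(m=1.0, d=0.5, c=4.0, g=9.81, y0=0.0, v0=0.0,
                         dt=1e-3, t_end=20.0):
    """Step y'' = -(d/m) y' - (c/m) y + g through two chained integrators.

    Returns the final position y and speed y'. Parameter values are hypothetical.
    """
    y, v = y0, v0
    steps = round(t_end / dt)
    for _ in range(steps):
        # Summing junction: the three potentiometer-weighted terms.
        a = -(d / m) * v - (c / m) * y + g
        v += a * dt   # first integrator: acceleration to speed
        y += v * dt   # second integrator: speed to position (uses the updated speed)
    return y, v


y_final, v_final = simulate_spring_mass()
print(f"position after 20 s: {y_final:.3f}, equilibrium m*g/c: {9.81 / 4.0:.3f}")
```

With these values the oscillation decays and the position settles near the static equilibrium $mg/c$; changing a potentiometer setting corresponds to changing one of the keyword arguments.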

Electronic analog computers have drawbacks: the value of the circuit's supply voltage limits the range over which the variables may vary (since the value of a variable is represented by a voltage on a particular wire). Therefore, each problem must be scaled so its parameters and dimensions can be represented using voltages that the circuit can supply (e.g., the expected magnitudes of the velocity and the position of a spring pendulum). Improperly scaled variables can have their values "clamped" by the limits of the supply voltage; if scaled too small, they can suffer from higher relative noise levels. Either problem can cause the circuit to produce an incorrect simulation of the physical system. (Modern digital simulations are much more robust to widely varying values of their variables, but are still not entirely immune to these concerns: floating-point digital calculations support a huge dynamic range, but can suffer from imprecision if tiny differences of huge values lead to numerical instability.)
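Amplitude scaling can be sketched as choosing a volts-per-unit factor from an estimate of each variable's peak. This assumes a ±10 V reference range, a hypothetical figure chosen for illustration (machines differed in their reference voltages); `scale_factor` and `to_voltage` are illustrative names.

```python
FULL_SCALE = 10.0  # assumed reference voltage, in volts (hypothetical)


def scale_factor(expected_peak, full_scale=FULL_SCALE):
    """Volts per problem unit, chosen so the expected peak maps onto full scale."""
    return full_scale / expected_peak


def to_voltage(value, k, full_scale=FULL_SCALE):
    """Represent a problem variable as a voltage; values beyond the rails are clamped."""
    return max(-full_scale, min(full_scale, value * k))


k_speed = scale_factor(expected_peak=2.5)   # 4.0 V per (m/s)
print(to_voltage(2.0, k_speed))             # 8.0: inside the usable range
print(to_voltage(3.0, k_speed))             # 10.0: peak underestimated, output clamped
```

Erring the other way, with a factor far too small, keeps every voltage in range but makes the signal small relative to the circuit's noise, the second failure mode described above.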

The precision of the analog computer readout was limited chiefly by the precision of the readout equipment used, generally three or four significant figures. (Modern digital simulations are much better in this area: digital arbitrary-precision arithmetic can provide any desired degree of precision.) However, in most cases the precision of an analog computer is sufficient given the uncertainty of the model characteristics and its technical parameters.

Many small computers dedicated to specific computations are still part of industrial regulation equipment, but from the 1950s to the 1970s, general-purpose analog computers were the only systems fast enough for real-time simulation of dynamic systems, especially in the aircraft, military, and aerospace fields.

In the 1960s, the major manufacturer was Electronic Associates of Princeton, New Jersey, with its 231R Analog Computer (vacuum tubes, 20 integrators) and subsequently its EAI 8800 Analog Computer (solid state operational amplifiers, 64 integrators).[29] Its challenger was Applied Dynamics of Ann Arbor, Michigan.

Although the basic technology for analog computers is usually the operational amplifier (also called a "continuous current amplifier" because it has no low-frequency limitation), in the 1960s the French ANALAC computer attempted to use an alternative technology: a medium-frequency carrier and non-dissipative reversible circuits.

In the 1970s, every large company and administration concerned with problems in dynamics had an analog computing center, such as:

- In the US: NASA (Huntsville, Houston), Martin Marietta (Orlando), Lockheed, Westinghouse, Hughes Aircraft

- In Europe: CEA (French Atomic Energy Commission), MATRA, Aérospatiale, BAC (British Aircraft Corporation).

Analog–digital hybrids

Analog computing devices are fast, while digital computing devices are more versatile and accurate, so the idea is to combine the two processes for the best efficiency. An example of such a hybrid elementary device is the hybrid multiplier, in which one input is an analog signal, the other input is a digital signal, and the output is analog. It acts as an analog potentiometer whose setting can be updated digitally. This kind of hybrid technique is mainly used for fast, dedicated real-time computation where computing time is critical, such as signal processing for radars, and generally for controllers in embedded systems.
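The hybrid multiplier's behaviour resembles that of a multiplying digital-to-analog converter. A minimal model, assuming an unsigned N-bit code (the 8-bit default is an arbitrary choice) and a hypothetical function name:

```python
def hybrid_multiply(v_analog, code, bits=8):
    """Multiply an analog voltage by a digital coefficient code / 2**bits.

    Modelled on a multiplying DAC: the digital word acts like a potentiometer
    setting in the range [0, 1). The 8-bit default is an arbitrary assumption.
    """
    if not 0 <= code < 2 ** bits:
        raise ValueError("code does not fit in the given number of bits")
    return v_analog * code / 2 ** bits


print(hybrid_multiply(5.0, 128))   # 2.5  (coefficient 0.5)
print(hybrid_multiply(5.0, 64))    # 1.25 (coefficient 0.25)
```

Rewriting `code` from a digital controller is the "potentiometer upgradable digitally" behaviour: the analog signal path stays continuous while its gain is set in discrete steps.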

In the early 1970s, analog computer manufacturers tried to tie together their analog computer with a digital computer to get the advantages of the two techniques. In such systems, the digital computer controlled the analog computer, providing initial set-up, initiating multiple analog runs, and automatically feeding and collecting data. The digital computer may also participate in the calculation itself using analog-to-digital and digital-to-analog converters.

The largest manufacturer of hybrid computers was Electronic Associates. Their hybrid computer model 8900 was made of a digital computer and one or more analog consoles. These systems were mainly dedicated to large projects such as the Apollo program and Space Shuttle at NASA, or Ariane in Europe, especially during the integration phase, in which everything is simulated at first and real components progressively replace their simulated counterparts.[30]

In the 1970s, only one company, CISI of France, was known to offer general commercial computing services on its hybrid computers.

A notable example in this field is the 100,000 simulation runs performed for each certification of the automatic landing systems of Airbus and Concorde aircraft.[31]

After 1980, purely digital computers progressed more and more rapidly and became fast enough to compete with analog computers. One key to the speed of analog computers was their fully parallel computation, but this was also a limitation: the more equations a problem required, the more analog components were needed, even when the problem wasn't time-critical. "Programming" a problem meant interconnecting the analog operators; even with a removable wiring panel, this was not very versatile. Today large hybrid computers are no longer built; only hybrid components remain.[citation needed]

Implementations


Mechanical analog computers

While a wide variety of mechanisms have been developed throughout history, some stand out because of their theoretical importance, or because they were manufactured in significant quantities.

Most practical mechanical analog computers of any significant complexity used rotating shafts to carry variables from one mechanism to another. Cables and pulleys were used in a Fourier synthesizer, a tide-predicting machine, which summed the individual harmonic components. Another category, not nearly as well known, used rotating shafts only for input and output, with precision racks and pinions. The racks were connected to linkages that performed the computation. At least one U.S. Naval sonar fire control computer of the later 1950s, made by Librascope, was of this type, as was the principal computer in the Mk. 56 Gun Fire Control System.

A remarkably clear illustrated reference describing the fire control computer mechanisms (OP 1140) is available online.[32] For adding and subtracting, precision miter-gear differentials were in common use in some computers; the Ford Instrument Mark I Fire Control Computer contained about 160 of them.

Integration with respect to another variable was done by a rotating disc driven by one variable. Output came from a pick-off device (such as a wheel) positioned at a radius on the disc proportional to the second variable. (A carrier with a pair of steel balls supported by small rollers worked especially well. A roller, its axis parallel to the disc's surface, provided the output. It was held against the pair of balls by a spring.)
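The disc-and-wheel principle can be checked numerically: each small turn $dy$ of the disc rolls the pick-off wheel, set at radius $x$, by an amount proportional to $x\,dy$, so the accumulated wheel rotation approximates $\int x\,dy$. The sketch below uses a hypothetical helper with unit wheel radius by default; taking the midpoint radius for each increment is a modelling choice.

```python
def disc_integrator(disc_angles, radii, wheel_radius=1.0):
    """Accumulate output-wheel rotation for a disc driven by one variable.

    disc_angles: successive disc positions (the variable of integration, y)
    radii:       pick-off radius at each sample (the integrand, x)
    Each disc increment dy turns the wheel by x * dy / wheel_radius,
    so the total is proportional to the integral of x dy.
    """
    total = 0.0
    for i in range(1, len(disc_angles)):
        dy = disc_angles[i] - disc_angles[i - 1]
        x = 0.5 * (radii[i] + radii[i - 1])   # radius during this increment (midpoint)
        total += x * dy / wheel_radius
    return total


# Radius set equal to the disc position, so the output approximates y**2 / 2.
ys = [i * 0.01 for i in range(201)]   # disc turns from 0 to 2 (arbitrary units)
print(disc_integrator(ys, ys))        # close to 2.0
```

Unlike a time integrator, the variable of integration here is whatever drives the disc, which is why this mechanism can integrate with respect to a variable other than time.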

Arbitrary functions of one variable were provided by cams, with gearing to convert follower movement to shaft rotation.

Functions of two variables were provided by three-dimensional cams. In one good design, one of the variables rotated the cam. A hemispherical follower moved its carrier on a pivot axis parallel to that of the cam's rotating axis. Pivoting motion was the output. The second variable moved the follower along the axis of the cam. One practical application was ballistics in gunnery.

Coordinate conversion from polar to rectangular was done by a mechanical resolver (called a "component solver" in US Navy fire control computers). Two discs on a common axis positioned a sliding block with a pin (stubby shaft) on it. One disc was a face cam, and a follower on the block in the face cam's groove set the radius. The other disc, closer to the pin, contained a straight slot in which the block moved. The input angle rotated the latter disc. So that a change of angle alone would not alter the radius, the face cam disc also had to rotate with the angle disc; a differential and a few gears made this correction.

Referring to the mechanism's frame, the location of the pin corresponded to the tip of the vector represented by the angle and magnitude inputs. Mounted on that pin was a square block.

Rectilinear-coordinate outputs (both sine and cosine, typically) came from two slotted plates, each slot fitting on the block just mentioned. The plates moved in straight lines, the movement of one plate at right angles to that of the other. The slots were at right angles to the direction of movement. Each plate, by itself, was like a Scotch yoke, known to steam engine enthusiasts.
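Mathematically, the two plates deliver the rectangular components of the input vector. A minimal sketch, assuming the angle is measured from the direction of motion of the cosine plate (the function name is hypothetical):

```python
import math


def component_solver(r, theta_deg):
    """Polar to rectangular: the two slotted plates read r*cos(theta) and r*sin(theta)."""
    theta = math.radians(theta_deg)
    return r * math.cos(theta), r * math.sin(theta)


x, y = component_solver(10.0, 30.0)
print(f"{x:.3f}, {y:.3f}")   # 8.660, 5.000
```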

During World War II, a similar mechanism converted rectilinear to polar coordinates, but it was not particularly successful and was eliminated in a significant redesign (USN, Mk. 1 to Mk. 1A).

Multiplication was done by mechanisms based on the geometry of similar right triangles. Using the trigonometric terms for a right triangle, specifically opposite, adjacent, and hypotenuse, the adjacent side was fixed by construction. One variable changed the magnitude of the opposite side. In many cases, this variable changed sign; the hypotenuse could coincide with the adjacent side (a zero input), or move beyond the adjacent side, representing a sign change.

Typically, a pinion-operated rack moving parallel to the (trig.-defined) opposite side would position a slide with a slot coincident with the hypotenuse. A pivot on the rack let the slide's angle change freely. At the other end of the slide (the angle, in trig. terms), a block on a pin fixed to the frame defined the vertex between the hypotenuse and the adjacent side.

At any distance along the adjacent side, a line perpendicular to it intersects the hypotenuse at a particular point. The distance between that point and the adjacent side is proportional to the product of (1) the distance from the vertex and (2) the magnitude of the opposite side.

The second input variable in this type of multiplier positions a slotted plate perpendicular to the adjacent side. That slot contains a block, and that block's position in its slot is determined by another block right next to it. The latter slides along the hypotenuse, so the two blocks are positioned at a distance from the (trig.) adjacent side by an amount proportional to the product.

To provide the product as an output, a third element, another slotted plate, also moves parallel to the (trig.) opposite side of the theoretical triangle. As usual, the slot is perpendicular to the direction of movement. A block in its slot, pivoted to the hypotenuse block, positions it.
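The geometry reduces to a simple proportion. If $L$ is the fixed length of the adjacent side, $u$ the signed opposite side set by the first input, and $v$ the second input's distance from the vertex, similar triangles give an output height of $uv/L$. A sketch under those assumptions; the function name and the restriction of $v$ to the plate's travel along the adjacent side are illustrative choices:

```python
def triangle_multiplier(u, v, adjacent_length=1.0):
    """Output of a similar-triangles multiplier.

    u: first input, the signed opposite side set by the rack at the far end
    v: second input, distance of the slotted plate from the vertex
    By similar triangles, the hypotenuse's height at distance v is u * v / L,
    so the output is the product scaled by the fixed adjacent length L.
    """
    if not 0 <= v <= adjacent_length:
        raise ValueError("second input must lie along the adjacent side")
    return u * v / adjacent_length


print(triangle_multiplier(0.5, 0.4))    # about 0.2
print(triangle_multiplier(-0.5, 0.4))   # negative: the hypotenuse has swung past the adjacent side
```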

A special type of integrator, used at a point where only moderate accuracy was needed, was based on a steel ball, instead of a disc. It had two inputs, one to rotate the ball, and the other to define the angle of the ball's rotating axis. That axis was always in a plane that contained the axes of two movement pick-off rollers, quite similar to the mechanism of a rolling-ball computer mouse (in that mechanism, the pick-off rollers were roughly the same diameter as the ball). The pick-off roller axes were at right angles.

A pair of rollers "above" and "below" the pick-off plane were mounted in rotating holders that were geared together. That gearing was driven by the angle input, and established the rotating axis of the ball. The other input rotated the "bottom" roller to make the ball rotate.

Essentially, the whole mechanism, called a component integrator, was a variable-speed drive with one motion input and two outputs, as well as an angle input. The angle input varied the ratio (and direction) of coupling between the "motion" input and the outputs according to the sine and cosine of the input angle.
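Ignoring slip and scale factors, the component integrator's two outputs accumulate the motion input resolved along two perpendicular axes. A minimal sketch, with the input expressed as hypothetical (increment, angle) pairs:

```python
import math


def component_integrator(steps):
    """Accumulate the two pick-off roller outputs.

    steps: sequence of (ds, theta_deg) pairs, where ds is an increment of the
    motion input and theta_deg the angle input during that increment.
    Returns (sum of ds*cos(theta), sum of ds*sin(theta)).
    """
    out_x = out_y = 0.0
    for ds, theta_deg in steps:
        theta = math.radians(theta_deg)
        out_x += ds * math.cos(theta)
        out_y += ds * math.sin(theta)
    return out_x, out_y


# Illustrative dead reckoning: 10 units of travel at 0 degrees, then 10 more at 90 degrees.
print(component_integrator([(10.0, 0.0), (10.0, 90.0)]))
```

Dead reckoning is only one illustrative reading of the inputs (distance travelled and heading); the sketch does not claim that was this device's application.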

Although they did not accomplish any computation, electromechanical position servos were essential in mechanical analog computers of the "rotating-shaft" type for providing operating torque to the inputs of subsequent computing mechanisms, as well as driving output data-transmission devices such as large torque-transmitter synchros in naval computers.

Other readout mechanisms, not directly part of the computation, included internal odometer-like counters with interpolating drum dials for indicating internal variables, and mechanical multi-turn limit stops.

Because accurately controlled rotational speed was a basic element of the accuracy of analog fire-control computers, they included a motor whose average speed was controlled by a balance wheel, hairspring, jeweled-bearing differential, twin-lobe cam, and spring-loaded contacts (when these computers were designed, ship's AC power frequency was neither accurate nor dependable enough).

Electronic analog computers

Electronic analog computers typically have front panels with numerous jacks (single-contact sockets) that permit patch cords (flexible wires with plugs at both ends) to create the interconnections that define the problem setup. They also have precision high-resolution potentiometers (variable resistors) for setting up (and, when needed, varying) scale factors, and usually a zero-center analog pointer-type meter for modest-accuracy voltage measurement. Stable, accurate voltage sources provide known magnitudes.

Typical electronic analog computers contain anywhere from a few to a hundred or more operational amplifiers ("op amps"), named because they perform mathematical operations. Op amps are a particular type of feedback amplifier with very high gain and stable input (low and stable offset). They are always used with precision feedback components that, in operation, all but cancel out the currents arriving from input components. The majority of op amps in a representative setup are summing amplifiers, which add and subtract analog voltages, providing the result at their output jacks. As well, op amps with capacitor feedback are usually included in a setup; they integrate the sum of their inputs with respect to time.
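Under the usual ideal-op-amp assumptions (infinite gain, no input current), the summing amplifier and the capacitor-feedback integrator obey simple formulas in which each input's weight is set by a resistor ratio. The sketch below evaluates them; the component values are hypothetical, and the integrator is stepped numerically to stand in for continuous operation.

```python
def summing_amplifier(inputs, r_feedback):
    """Ideal inverting summer: v_out = -R_f * sum(v_i / R_i).

    inputs: list of (voltage, input_resistance) pairs; each gain is R_f / R_i.
    """
    return -r_feedback * sum(v / r for v, r in inputs)


def integrator_step(v_out, inputs, c_feedback, dt):
    """One time step of an ideal inverting integrator: dv_out/dt = -(1/C) * sum(v_i / R_i)."""
    return v_out - dt * sum(v / r for v, r in inputs) / c_feedback


# 1 megohm resistors throughout: the summer computes -(v1 + v2), about -5 V here.
print(summing_amplifier([(2.0, 1e6), (3.0, 1e6)], r_feedback=1e6))

# 1 megohm and 1 microfarad give RC = 1 s; a constant 1 V input for 1 s yields about -1 V.
v = 0.0
for _ in range(1000):   # 1 s in 1 ms steps
    v = integrator_step(v, [(1.0, 1e6)], c_feedback=1e-6, dt=1e-3)
print(round(v, 6))
```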

Integrating with respect to another variable is the nearly exclusive province of mechanical analog integrators; it is almost never done in electronic analog computers. However, when a problem's solution does not itself change with time, time can serve as one of the variables.

Other computing elements include analog multipliers, nonlinear function generators, and analog comparators.

Electrical elements such as inductors and capacitors used in electrical analog computers had to be carefully manufactured to reduce non-ideal effects. For example, in the construction of AC power network analyzers, one motive for using higher frequencies for the calculator (instead of the actual power frequency) was that higher-quality inductors could be more easily made. Many general-purpose analog computers avoided the use of inductors entirely, re-casting the problem in a form that could be solved using only resistive and capacitive elements, since high-quality capacitors are relatively easy to make.

The use of electrical properties in analog computers means that calculations are normally performed in real time (or faster), at a speed determined mostly by the frequency response of the operational amplifiers and other computing elements. In the history of electronic analog computers, there were some special high-speed types.

Nonlinear functions and calculations can be constructed to a limited precision (three or four digits) by designing function generators—special circuits of various combinations of resistors and diodes to provide the nonlinearity. Typically, as the input voltage increases, progressively more diodes conduct.
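An idealized diode function generator can be modelled as a sum of ramps: each branch adds its slope once the input passes that branch's breakpoint. Real diodes turn on gradually, which rounds the corners. The breakpoints below are hypothetical, chosen so the segments interpolate $x^2/10$ at 2 V intervals.

```python
def diode_function_generator(v_in, breakpoints):
    """Piecewise-linear approximation built up as successive diodes conduct.

    breakpoints: list of (threshold_voltage, added_slope) pairs, sorted by threshold.
    Below the first threshold the output is zero; each time v_in passes a
    threshold, another diode branch starts conducting and adds its slope.
    """
    out = 0.0
    for threshold, slope in breakpoints:
        if v_in > threshold:
            out += slope * (v_in - threshold)
    return out


# Hypothetical breakpoints approximating x**2 / 10 on 0..10 V with five segments.
SQUARE_BREAKS = [(0.0, 0.2), (2.0, 0.4), (4.0, 0.4), (6.0, 0.4), (8.0, 0.4)]
for x in (1.0, 3.0, 5.0, 9.0):
    print(x, diode_function_generator(x, SQUARE_BREAKS), x * x / 10)
```

With 2 V segments the worst-case error for this curve is 0.1 V, at the midpoints between breakpoints; more diodes give shorter segments and a closer fit.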

When compensated for temperature, the forward voltage drop of a transistor's base-emitter junction can provide a usably accurate logarithmic or exponential function. Op amps scale the output voltage so that it is usable with the rest of the computer.
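The relation behind this is the ideal transistor law $V_{BE} = V_T \ln(I_C / I_S)$, where $V_T = kT/q$ is the thermal voltage. A minimal model of an op-amp log amplifier built on it is sketched below; the saturation current $I_S$ and input resistor are hypothetical values, and the strong temperature dependence of $I_S$ itself is ignored.

```python
import math

K_B = 1.380649e-23      # Boltzmann constant, J/K
Q_E = 1.602176634e-19   # elementary charge, C


def thermal_voltage(temp_k):
    """V_T = kT/q, in volts."""
    return K_B * temp_k / Q_E


def log_amp(v_in, r_in=10e3, i_s=1e-14, temp_k=300.0):
    """Ideal transdiode log amplifier: v_out = -V_T * ln(v_in / (R * I_S)).

    The input current v_in / R flows into the transistor's collector, and the
    op amp output settles at minus the V_BE that this current requires.
    r_in and i_s are hypothetical component values.
    """
    if v_in <= 0:
        raise ValueError("this polarity of log amplifier needs a positive input")
    return -thermal_voltage(temp_k) * math.log(v_in / (r_in * i_s))


for temp in (300.0, 310.0):
    mv_per_decade = 1000 * thermal_voltage(temp) * math.log(10)
    print(f"{temp:.0f} K: {mv_per_decade:.1f} mV per decade")
```

The slope comes out at roughly 59.5 mV per decade at 300 K and 61.5 mV at 310 K, a shift of a few percent over a modest temperature change, which is why compensation is needed.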

Any physical process that models some computation can be interpreted as an analog computer. Some examples, invented for the purpose of illustrating the concept of analog computation, include using a bundle of spaghetti as a model of sorting numbers; a board, a set of nails, and a rubber band as a model of finding the convex hull of a set of points; and strings tied together as a model of finding the shortest path in a network. These are all described in Dewdney (1984).
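Dewdney's spaghetti sort is easy to mimic in software, though the simulation loses the point of the physical version: lowering a hand onto the upright bundle finds the longest rod in one parallel step, whereas the code must search for it. A minimal sketch with a hypothetical function name:

```python
def spaghetti_sort(values):
    """Simulate the spaghetti sort for a list of rod lengths.

    Cut one rod per value, stand the bundle on the table, then repeatedly
    lower a hand and pull out the rod it touches first (the longest remaining).
    Physically each 'find the tallest' is a single parallel step; this
    simulation has to search, so it is not faster than an ordinary sort.
    """
    rods = list(values)
    result = []
    while rods:
        tallest = max(rods)   # the rod the descending hand meets first
        rods.remove(tallest)
        result.append(tallest)
    return result             # descending order


print(spaghetti_sort([3.2, 1.5, 4.8, 2.0]))   # [4.8, 3.2, 2.0, 1.5]
```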

Components

Analog computers often have a complicated framework, but they have, at their core, a set of key components that perform the calculations. The operator manipulates these through the computer's framework.

Key hydraulic components might include pipes, valves and containers.

Key mechanical components might include rotating shafts for carrying data within the computer, miter gear differentials, disc/ball/roller integrators, cams (2-D and 3-D), mechanical resolvers and multipliers, and torque servos.

Key electrical/electronic components might include:

- precision resistors and capacitors

- operational amplifiers

- multipliers

- potentiometers

- fixed-function generators

The core mathematical operations used in an electric analog computer are:

- addition

- integration with respect to time

- inversion

- multiplication

- exponentiation

- logarithm

- division

In some analog computer designs, multiplication is much preferred to division. Division is carried out with a multiplier in the feedback path of an operational amplifier.
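A short derivation shows why this works. Assume an ideal op amp whose inverting input sits at virtual ground, equal input resistors $R$ carrying the dividend $v_x$ and the multiplier output $v_m$, and a multiplier with scale factor $V_s$ (an assumed parameter) whose inputs are the op amp output $v_z$ and the divisor $v_y$. Setting the sum of currents into the summing junction to zero gives:

```latex
\frac{v_x}{R} + \frac{v_m}{R} = 0,
\qquad v_m = \frac{v_z\,v_y}{V_s}
\quad\Longrightarrow\quad
v_z = -V_s\,\frac{v_x}{v_y}
```

The feedback is negative only for one polarity of $v_y$ (positive in this arrangement), and as $v_y$ approaches zero the loop gain falls with it, so accuracy degrades for small divisors.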

Differentiation with respect to time is not frequently used, and in practice is avoided by redefining the problem when possible. It corresponds in the frequency domain to a high-pass filter, which means that high-frequency noise is amplified; differentiation also risks instability.
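The noise argument follows from the magnitude responses of the ideal circuits: a differentiator's gain is proportional to frequency, an integrator's inversely proportional. The sketch below assumes RC = 1 s and ignores the sign inversion of the op-amp versions:

```python
import math


def differentiator_gain(freq_hz, rc=1.0):
    """|H(j*omega)| of an ideal differentiator, H = j*omega*RC: grows with frequency."""
    return 2 * math.pi * freq_hz * rc


def integrator_gain(freq_hz, rc=1.0):
    """|H(j*omega)| of an ideal integrator, H = 1/(j*omega*RC): falls with frequency."""
    return 1.0 / (2 * math.pi * freq_hz * rc)


for f in (0.1, 1.0, 10.0, 1000.0):
    print(f"{f:7.1f} Hz  differentiator x{differentiator_gain(f):10.3f}  "
          f"integrator x{integrator_gain(f):9.5f}")
```

For instance, noise at 1 kHz receives 10,000 times the gain of a 0.1 Hz signal in the differentiator, but 10,000 times less in the integrator.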

Limitations

In general, analog computers are limited by non-ideal effects. An analog signal is composed of four basic components: DC and AC magnitudes, frequency, and phase. The real limits on the range of these characteristics constrain analog computers. Some of these limits include the operational amplifier's offset, finite gain, and frequency response, as well as the noise floor, non-linearities, temperature coefficients, and parasitic effects within semiconductor devices. For commercially available electronic components, the ranges of these aspects of input and output signals are always figures of merit.
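Offset illustrates how these non-ideal effects accumulate. Treating an amplifier's input offset as a small constant input, an integrator ramps steadily away from the correct value until it reaches the supply rail. A rough sketch, with a hypothetical 1 mV offset, RC = 1 s, and ±10 V rails; the sign of the error depends on the circuit and is not modelled:

```python
def integrator_drift(v_offset, rc, t, v_rail=10.0):
    """Approximate output error of an integrator whose amplifier has input offset v_offset.

    With zero intended input the output should hold still, but the offset is
    integrated like a real input: error is about v_offset * t / RC, until the
    output saturates at the supply rail.
    """
    err = v_offset * t / rc
    return max(-v_rail, min(v_rail, err))


# Hypothetical: 1 mV offset, RC = 1 s, times in seconds.
for t in (1, 100, 1000, 20000):
    print(t, integrator_drift(1e-3, 1.0, t))
```

This is one reason long analog runs needed periodic offset balancing: a drift that is negligible over one second can dominate the result over a thousand.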

Decline

From the 1950s to the 1970s, digital computers, based first on vacuum tubes and then on transistors, integrated circuits, and microprocessors, became more economical and precise. This led digital computers to largely replace analog computers. Even so, some research in analog computation is still being done. A few universities still use analog computers to teach control system theory. The American company Comdyna manufactured small analog computers.[33] At Indiana University Bloomington, Jonathan Mills has developed the Extended Analog Computer based on sampling voltages in a foam sheet.[34] At the Harvard Robotics Laboratory,[35] analog computation is a research topic. Lyric Semiconductor's error correction circuits use analog probabilistic signals. Slide rules are still popular among aircraft personnel.[citation needed]

Resurgence

With the development of very-large-scale integration (VLSI) technology, Yannis Tsividis' group at Columbia University has been revisiting analog/hybrid computer design in a standard CMOS process. Two VLSI chips have been developed, an 80th-order analog computer (250 nm) by Glenn Cowan[36] in 2005[37] and a 4th-order hybrid computer (65 nm) developed by Ning Guo in 2015,[38] both targeting energy-efficient ODE/PDE applications. Glenn's chip contains 16 macros, in which there are 25 analog computing blocks, namely integrators, multipliers, fanouts, and a few nonlinear blocks. Ning's chip contains one macro block, in which there are 26 computing blocks including integrators, multipliers, fanouts, ADCs, SRAMs and DACs. Arbitrary nonlinear function generation is made possible by the ADC+SRAM+DAC chain, where the SRAM block stores the nonlinear function data. The experiments from the related publications revealed that VLSI analog/hybrid computers demonstrated an advantage of about 1–2 orders of magnitude in both solution time and energy while achieving accuracy within 5%, which points to the promise of using analog/hybrid computing techniques in the area of energy-efficient approximate computing.[citation needed] In 2016, a team of researchers developed a compiler to solve differential equations using analog circuits.[39]

Analog computers are also used in neuromorphic computing, and in 2021 a group of researchers showed that a specific type of artificial neural network, called a spiking neural network, was able to work with analog neuromorphic computers.[40]

Practical examples

These are examples of analog computers that have been constructed or practically used:

- Boeing B-29 Superfortress Central Fire Control System

- Deltar

- E6B flight computer

- Kerrison Predictor

- Leonardo Torres y Quevedo's Analogue Calculating Machines based on "fusee sans fin"

- Librascope, aircraft weight and balance computer

- Mechanical computer

- Mechanical integrators, for example, the planimeter

- Nomogram

- Norden bombsight

- Rangekeeper, and related fire control computers

- Scanimate

- Torpedo Data Computer

- Torquetum

- Water integrator

- MONIAC, economic modelling

- Ishiguro Storm Surge Computer

Analog (audio) synthesizers can also be viewed as a form of analog computer, and their technology was originally based in part on electronic analog computer technology. The ARP 2600's Ring Modulator was actually a moderate-accuracy analog multiplier.
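A multiplier works as a ring modulator because of the identity sin a · sin b = ½[cos(a − b) − cos(a + b)]. Multiplying two tones therefore produces components at the sum and difference of their frequencies. The following minimal Python sketch checks this sample by sample for hypothetical 440 Hz and 100 Hz inputs. It models an ideal multiplier, not the ARP 2600's actual circuit, and the sample rate is an assumption.

```python
import math

FS = 48_000          # assumed sample rate in Hz
F_CARRIER = 440.0    # hypothetical input frequencies in Hz
F_MOD = 100.0


def ring_modulate(t: float) -> float:
    """Ideal analog multiplier: the output is the product of the two inputs."""
    return math.sin(2 * math.pi * F_CARRIER * t) * math.sin(2 * math.pi * F_MOD * t)


def sum_and_difference(t: float) -> float:
    """The same signal written as cosines at 340 Hz and 540 Hz."""
    diff = math.cos(2 * math.pi * (F_CARRIER - F_MOD) * t)
    total = math.cos(2 * math.pi * (F_CARRIER + F_MOD) * t)
    return 0.5 * (diff - total)


max_err = max(abs(ring_modulate(n / FS) - sum_and_difference(n / FS)) for n in range(FS))
print(f"largest deviation over one second: {max_err:.2e}")
```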

The Simulation Council (or Simulations Council) was an association of analog computer users in the US. It is now known as The Society for Modeling and Simulation International. The Simulation Council newsletters from 1952 to 1963 are available online. They show the concerns and technologies of the time, and the common use of analog computers for missilry.[41]

See also

- Analog neural network

- Analogical models

- Chaos theory

- Differential equation

- Dynamical system

- Field-programmable analog array

- General purpose analog computer

- Lotfernrohr 7 series of WW II German bombsights

- Signal (electrical engineering)

- Voskhod Spacecraft "Globus" IMP navigation instrument

- XY-writer

Notes

- "Simulation Council newsletter". Archived from the original on 28 May 2013.

References

- A.K. Dewdney. "On the Spaghetti Computer and Other Analog Gadgets for Problem Solving", Scientific American, 250(6):19–26, June 1984. Reprinted in The Armchair Universe, by A.K. Dewdney, published by W.H. Freeman & Company (1988), ISBN 0-7167-1939-8.

- Universiteit van Amsterdam Computer Museum. (2007). Analog Computers.

- Jackson, Albert S., "Analog Computation". London & New York: McGraw-Hill, 1960. OCLC 230146450

External links

- Biruni's eight-geared lunisolar calendar in "Archaeology: High tech from Ancient Greece", François Charette, Nature 444, 551–552 (30 November 2006), doi:10.1038/444551a

- The first computers

- Large collection of electronic analog computers with lots of pictures, documentation and samples of implementations (some in German)

- Large collection of old analog and digital computers at Old Computer Museum

- A great disappearing act: the electronic analogue computer. Chris Bissell, The Open University, Milton Keynes, UK. Accessed February 2007.

- German computer museum with still runnable analog computers

- Analog computer basics Archived 6 August 2009 at the Wayback Machine

- Analog computer trumps Turing model

- Harvard Robotics Laboratory Analog Computation

- The Enns Power Network Computer – an analog computer for the analysis of electric power systems (advertisement from 1955)

- Librascope Development Company – Type LC-1 WWII Navy PV-1 "Balance Computor"

https://en.wikipedia.org/wiki/Analog_computer

https://en.wikipedia.org/wiki/Shadow_square

https://en.wikipedia.org/wiki/Deltar

https://en.wikipedia.org/wiki/Minoan_Moulds_of_Palaikastro

Resistance paper,[1][2] also known as conductive paper and by the trade name Teledeltos paper, is paper impregnated or coated with a conductive substance so that it has a uniform and known surface resistivity. Resistance paper and conductive ink were commonly used as an analog two-dimensional[3] electromagnetic field solver. Teledeltos paper is a particular type of resistance paper.
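The paper works as a field solver because a sheet of uniform resistivity with no current sources inside it carries a steady-state potential that satisfies Laplace's equation, ∇²V = 0. Electrodes painted in conductive ink act as fixed-potential boundaries. The following minimal Python sketch solves the same problem numerically by Jacobi relaxation on a square grid. It assumes a hypothetical layout: the left edge held at 1 V, the right edge at 0 V, and the top and bottom edges insulating, because the paper simply ends there. This layout was chosen because its exact answer is known (a linear drop from 1 V to 0 V), which makes the result easy to check.

```python
def solve_sheet(nx: int = 21, ny: int = 11, iters: int = 5000) -> list[list[float]]:
    """Jacobi relaxation of Laplace's equation on an nx-by-ny grid.

    Assumed (hypothetical) boundary conditions:
      * column 0 held at 1 V and column nx-1 held at 0 V (ink electrodes)
      * rows 0 and ny-1 insulating: no current crosses the paper's edge,
        modelled by mirroring the neighbouring interior row
    """
    v = [[0.0] * nx for _ in range(ny)]
    for row in v:
        row[0] = 1.0
    for _ in range(iters):
        new = [row[:] for row in v]
        for j in range(ny):
            up = j - 1 if j > 0 else 1                # mirror across the top edge
            down = j + 1 if j < ny - 1 else ny - 2    # mirror across the bottom edge
            for i in range(1, nx - 1):                # electrode columns stay fixed
                new[j][i] = 0.25 * (v[j][i - 1] + v[j][i + 1] + v[up][i] + v[down][i])
        v = new
    return v


v = solve_sheet()
mid_row = v[len(v) // 2]
print([round(x, 3) for x in mid_row[::5]])   # should fall linearly from 1 V to 0 V
```

On real paper, the electrodes would be arbitrary painted shapes. On the grid, that corresponds to holding an arbitrary set of cells fixed instead of the two edge columns.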

References

- "Resistance paper is very simple to use for two-dimensional problems..." Ramo, Simon; Whinnery, John R.; van Duzer, Theodore (1965). Fields and Waves in Communication Electronics. John Wiley. pp. 168–170. ISBN 9780471707202.

https://en.wikipedia.org/wiki/Resistance_paper

https://en.wikipedia.org/wiki/AN/MPQ-2

https://en.wikipedia.org/wiki/Battenberg_course_indicator

https://en.wikipedia.org/wiki/BT-Epoxy

https://en.wikipedia.org/wiki/Composite_epoxy_material

https://en.wikipedia.org/wiki/Cyanate_ester

https://en.wikipedia.org/wiki/Polyimide

https://en.wikipedia.org/wiki/Polytetrafluoroethylene

https://en.wikipedia.org/wiki/FR-4

https://en.wikipedia.org/wiki/FR-2

https://en.wikipedia.org/wiki/List_of_EDA_companies

https://en.wikipedia.org/wiki/Category:Electronics_substrates

https://en.wikipedia.org/wiki/Barcode

https://en.wikipedia.org/wiki/Serial_number

A magnetic field is a vector field that describes the magnetic influence on moving electric charges, electric currents,[1]: ch1 [2] and magnetic materials. A moving charge in a magnetic field experiences a force perpendicular to its own velocity and to the magnetic field.[1]: ch13 [3]: 278 A permanent magnet's magnetic field pulls on ferromagnetic materials such as iron, and attracts or repels other magnets. In addition, a nonuniform magnetic field exerts minuscule forces on "nonmagnetic" materials by three other magnetic effects: paramagnetism, diamagnetism, and antiferromagnetism, although these forces are usually so small they can only be detected by laboratory equipment. Magnetic fields surround magnetized materials, and are created by electric currents such as those used in electromagnets, and by electric fields varying in time. Since both strength and direction of a magnetic field may vary with location, it is described mathematically by a function assigning a vector to each point of space, called a vector field.

In electromagnetics, the term "magnetic field" is used for two distinct but closely related vector fields denoted by the symbols B and H. In the International System of Units, the unit of H, the magnetic field strength, is the ampere per meter (A/m).[4]: 22 The unit of B, the magnetic flux density, is the tesla (in SI base units: kilogram per second² per ampere),[4]: 21 which is equivalent to newton per meter per ampere. H and B differ in how they account for magnetization. In vacuum, the two fields are related through the vacuum permeability, $\mathbf{B}/\mu_0 = \mathbf{H}$. In a magnetized material, however, the quantities on each side of this equation differ by the magnetization field of the material.
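Both unit statements can be checked by expanding the newton into SI base units. The same bookkeeping gives the vacuum value of H for a 1 T field, using the approximate value μ0 ≈ 1.2566 × 10⁻⁶ N/A²:

$$
1\,\mathrm{T} = 1\,\frac{\mathrm{N}}{\mathrm{m\,A}}
= 1\,\frac{\mathrm{kg\,m\,s^{-2}}}{\mathrm{m\,A}}
= 1\,\mathrm{kg\,s^{-2}\,A^{-1}}
$$

$$
H = \frac{B}{\mu_0} \approx \frac{1\,\mathrm{T}}{1.2566\times10^{-6}\,\mathrm{N\,A^{-2}}}
= \frac{1\,\mathrm{N\,m^{-1}\,A^{-1}}}{1.2566\times10^{-6}\,\mathrm{N\,A^{-2}}}
\approx 7.96\times10^{5}\,\mathrm{A/m}
$$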

Magnetic fields are produced by moving electric charges and the intrinsic magnetic moments of elementary particles associated with a fundamental quantum property, their spin.[5][1]: ch1 Magnetic fields and electric fields are interrelated and are both components of the electromagnetic force, one of the four fundamental forces of nature.

Magnetic fields are used throughout modern technology, particularly in electrical engineering and electromechanics. Rotating magnetic fields are used in both electric motors and generators. The interaction of magnetic fields in electric devices such as transformers is conceptualized and investigated as magnetic circuits. Magnetic forces give information about the charge carriers in a material through the Hall effect. The Earth produces its own magnetic field, which shields the Earth's ozone layer from the solar wind and is important in navigation using a compass.

Description

The force on an electric charge depends on its location, speed, and direction. Two vector fields are used to describe this force.[1]: ch1 The first is the electric field, which describes the force acting on a stationary charge and gives the component of the force that is independent of motion. The magnetic field, in contrast, describes the component of the force that is proportional to both the speed and direction of charged particles.[1]: ch13 The field is defined by the Lorentz force law. The magnetic force it produces is, at each instant, perpendicular to both the motion of the charge and the field itself.

There are two different but closely related vector fields, written B and H, which are both sometimes called the "magnetic field".[note 1] The best names for these fields and the exact interpretation of what they represent have been the subject of long-running debate. Even so, there is wide agreement about how the underlying physics works.[6] Historically, the term "magnetic field" was reserved for H while other terms were used for B, but many recent textbooks use the term "magnetic field" to describe B as well as or in place of H.[note 2] There are many alternative names for both.

The B-field

| Alternative names for B[7] |
|---|
| Magnetic flux density |
| Magnetic induction |

The magnetic field vector B at any point can be defined as the vector that, when used in the Lorentz force law, correctly predicts the force on a charged particle at that point:[9][10]: 204

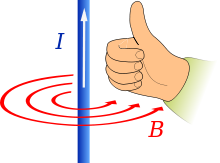

Here F is the force on the particle, q is the particle's electric charge, v is the particle's velocity, and × denotes the cross product. The direction of the force on the charge can be determined by a mnemonic known as the right-hand rule.[note 3] Point the thumb of the right hand in the direction of the current and the fingers in the direction of the magnetic field. The force on the charge then points outward from the palm. The force on a negatively charged particle is in the opposite direction. If both the velocity and the charge are reversed, the direction of the force remains the same. For that reason, a magnetic field measurement by itself cannot distinguish between a positive charge moving to the right and a negative charge moving to the left. (Both of these cases produce the same current.) However, a magnetic field combined with an electric field can distinguish between these; see the Hall effect below.
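The sign argument above can be checked numerically with NumPy's `np.cross`. This minimal sketch assumes a 1 T field along +z, a speed of 10⁵ m/s, and no electric field. It compares a positive charge moving along +x with a negative charge moving along −x.

```python
import numpy as np


def lorentz_force(q: float, e: np.ndarray, v: np.ndarray, b: np.ndarray) -> np.ndarray:
    """F = qE + q(v x B), with all quantities in SI units."""
    return q * e + q * np.cross(v, b)


E = np.zeros(3)                   # no electric field in this example
B = np.array([0.0, 0.0, 1.0])     # assumed 1 T field along +z
v = np.array([1.0e5, 0.0, 0.0])   # assumed 10^5 m/s along +x
q = 1.602176634e-19               # elementary charge, C

f_pos_right = lorentz_force(q, E, v, B)    # positive charge moving along +x
f_neg_left = lorentz_force(-q, E, -v, B)   # negative charge moving along -x
print(f_pos_right, f_neg_left)             # both point along -y (x cross z = -y)
```

Both cases give the same force, and that force is perpendicular to the velocity. A magnetic field alone therefore cannot tell the two situations apart.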

The first term in the Lorentz equation is from the theory of electrostatics. It says that a particle of charge q in an electric field E experiences an electric force:

$$\mathbf{F}_\text{electric} = q\mathbf{E}$$

The second term is the magnetic force:[10]

$$\mathbf{F}_\text{magnetic} = q(\mathbf{v} \times \mathbf{B})$$

Using the definition of the cross product, the magnetic force can also be written as a scalar equation:[9]: 357

$$F_\text{magnetic} = qvB\sin\theta$$

where $F_\text{magnetic}$, $v$, and $B$ are the magnitudes of their respective vectors, and θ is the angle between the velocity of the particle and the magnetic field. The vector B is defined as the vector field necessary to make the Lorentz force law correctly describe the motion of a charged particle. In other words,[9]: 173–4

[T]he command, "Measure the direction and magnitude of the vector B at such and such a place," calls for the following operations: Take a particle of known charge q. Measure the force on q at rest, to determine E. Then measure the force on the particle when its velocity is v; repeat with v in some other direction. Now find a B that makes the Lorentz force law fit all these results—that is the magnetic field at the place in question.
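The quoted procedure can be read as a small linear-algebra problem. Because v × B is linear in B, each force measured at a known velocity gives three linear equations for the unknown field. Two non-parallel velocities then determine B uniquely. The following is a minimal NumPy sketch on simulated data. The helper names `skew` and `infer_b`, the charge, and the "true" fields used to generate the measurements are all hypothetical. Real measurements would carry noise, which the least-squares fit averages over.

```python
import numpy as np


def skew(v: np.ndarray) -> np.ndarray:
    """Matrix S(v) such that S(v) @ b equals np.cross(v, b)."""
    return np.array([[0.0, -v[2], v[1]],
                     [v[2], 0.0, -v[0]],
                     [-v[1], v[0], 0.0]])


def infer_b(q, f_rest, velocities, forces):
    """Least-squares estimate of B from forces measured at several velocities.

    f_rest: force on the charge at rest, which gives E = f_rest / q.
    velocities, forces: paired measurements with the charge moving.
    At least two non-parallel velocities are needed for a unique answer.
    """
    e = f_rest / q
    a = np.vstack([skew(v) for v in velocities])
    rhs = np.concatenate([f / q - e for f in forces])   # each block equals v x B
    b, *_ = np.linalg.lstsq(a, rhs, rcond=None)
    return b


# Simulated "measurements" generated from hypothetical true fields.
q = 1.0e-6
E_true = np.array([10.0, 0.0, 0.0])
B_true = np.array([0.2, -0.5, 0.3])
vels = [np.array([1.0, 0.0, 0.0]), np.array([0.0, 2.0, 0.0])]
f_rest = q * E_true
forces = [q * E_true + q * np.cross(v, B_true) for v in vels]

print(infer_b(q, f_rest, vels, forces))   # recovers B_true up to rounding
```

A single velocity is not enough, because the component of B parallel to that velocity produces no force. This is why the quoted recipe says to "repeat with v in some other direction".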

The B field can also be defined by the torque on a magnetic dipole, m:[11]: 174

$$\boldsymbol{\tau} = \mathbf{m} \times \mathbf{B}$$

The SI unit of B is tesla (symbol: T).[note 4] The Gaussian-cgs unit of B is the gauss (symbol: G). (The conversion is 1 T ≘ 10000 G.[12][13]) One nanotesla corresponds to 1 gamma (symbol: γ).[13]
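The conversions stated above are simple scale factors. They are written as "corresponds to" rather than "equals" because the tesla and the gauss belong to different unit systems. The following minimal Python sketch applies them. The function names are hypothetical, and the 50 µT input is only an example value.

```python
GAUSS_PER_TESLA = 10_000      # 1 T corresponds to 10^4 G
GAMMA_PER_TESLA = 1e9         # 1 gamma = 1 nT = 10^-9 T


def tesla_to_gauss(b_t: float) -> float:
    """Express a flux density given in tesla in gauss."""
    return b_t * GAUSS_PER_TESLA


def tesla_to_gamma(b_t: float) -> float:
    """Express a flux density given in tesla in gamma (nanotesla)."""
    return b_t * GAMMA_PER_TESLA


print(tesla_to_gauss(50e-6))   # example field of 50 microtesla, in gauss
print(tesla_to_gamma(50e-6))   # the same field in gamma
```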

The H-field

| Alternative names for H[7] |
|---|
| Magnetic field strength |
| Magnetic field intensity |
| Magnetizing field |

The magnetic H field is defined as:[10]: 269 [11]: 192 [1]: ch36

$$\mathbf{H} \equiv \frac{1}{\mu_0}\mathbf{B} - \mathbf{M}$$

where $\mu_0$ is the vacuum permeability and M is the magnetization vector. In a vacuum, B and H are proportional to each other. Inside a material they are different (see H and B inside and outside magnetic materials). The SI unit of the H-field is the ampere per metre (A/m),[14] and the CGS unit is the oersted (Oe).[12][9]: 286

Measurement

An instrument used to measure the local magnetic field is known as a magnetometer. Important classes of magnetometers include induction (search-coil) magnetometers, which measure only varying magnetic fields; rotating coil magnetometers; Hall effect magnetometers; NMR magnetometers; SQUID magnetometers; and fluxgate magnetometers. The magnetic fields of distant astronomical objects are measured through their effects on local charged particles. For instance, electrons spiraling around a field line produce synchrotron radiation that is detectable in radio waves. The finest precision for a magnetic field measurement was attained by Gravity Probe B at 5 aT (5×10⁻¹⁸ T).[15]

Visualization

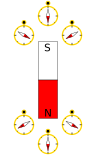

Right: compass needles point in the direction of the local magnetic field, towards a magnet's south pole and away from its north pole.

The field can be visualized by a set of magnetic field lines that follow the direction of the field at each point. To construct the lines, measure the strength and direction of the magnetic field at a large number of points (or at every point in space). Then mark each location with an arrow (called a vector) pointing in the direction of the local magnetic field, with a length proportional to the strength of the field. Connecting these arrows forms a set of magnetic field lines. The direction of the magnetic field at any point is parallel to the direction of nearby field lines, and the local density of field lines can be made proportional to its strength. Magnetic field lines are like streamlines in fluid flow, in that they represent a continuous distribution, and a different resolution would show more or fewer lines.
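Connecting the arrows amounts to integrating dr/ds = B/|B|, that is, taking small steps along the local field direction. The following minimal Python sketch does this with fourth-order Runge-Kutta steps. It uses a long straight wire on the z-axis instead of a magnet, because the field lines around a wire are known to be circles, which makes the result easy to check. The current, step size, and helper names are assumptions.

```python
import math

MU_0 = 4e-7 * math.pi   # approximate vacuum permeability, N/A^2
CURRENT = 10.0          # hypothetical wire current along +z, A


def b_field(x: float, y: float) -> tuple[float, float]:
    """B in the xy-plane around a long straight wire lying on the z-axis."""
    r2 = x * x + y * y
    k = MU_0 * CURRENT / (2 * math.pi * r2)   # |B| = mu_0 I / (2 pi r), along phi-hat
    return -k * y, k * x


def direction(x: float, y: float) -> tuple[float, float]:
    """Unit arrow along the local field: the 'arrow' drawn at each point."""
    bx, by = b_field(x, y)
    norm = math.hypot(bx, by)
    return bx / norm, by / norm


def trace_field_line(x: float, y: float, step: float = 0.01, n_steps: int = 700):
    """Follow the field direction from (x, y) using RK4 steps of fixed arc length."""
    points = [(x, y)]
    for _ in range(n_steps):
        k1 = direction(x, y)
        k2 = direction(x + 0.5 * step * k1[0], y + 0.5 * step * k1[1])
        k3 = direction(x + 0.5 * step * k2[0], y + 0.5 * step * k2[1])
        k4 = direction(x + step * k3[0], y + step * k3[1])
        x += step / 6 * (k1[0] + 2 * k2[0] + 2 * k3[0] + k4[0])
        y += step / 6 * (k1[1] + 2 * k2[1] + 2 * k3[1] + k4[1])
        points.append((x, y))
    return points


line = trace_field_line(1.0, 0.0)
print(line[0], line[len(line) // 2], line[-1])   # points on a circle of radius 1
```

Starting the trace from several seed points, spaced more closely where |B| is larger, gives the density-proportional-to-strength picture described above.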

An advantage of using magnetic field lines as a representation is that many laws of magnetism (and electromagnetism) can be stated completely and concisely using simple concepts such as the "number" of field lines through a surface. These concepts can be quickly "translated" to their mathematical form. For example, the number of field lines through a given surface is the surface integral of the magnetic field.[9]: 237
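As a concrete instance, the flux Φ = ∫ B · dA through a flat rectangle can be approximated by summing the normal component of B over small strips. The following minimal Python sketch assumes a long straight wire on the z-axis, whose field has magnitude μ0I/(2πr) and circles the wire. It places the rectangle in the half-plane y = 0, x > 0, where that field is normal to the surface. The current and dimensions are hypothetical. For this geometry, the integral has the closed form μ0IL ln(x_max/x_min)/(2π), which the sum should reproduce.

```python
import math

MU_0 = 4e-7 * math.pi    # approximate vacuum permeability, N/A^2
CURRENT = 10.0           # hypothetical wire current along +z, A
X_MIN, X_MAX = 0.01, 0.05   # rectangle spans x in [X_MIN, X_MAX], in metres
LENGTH = 0.2                # and z in [0, LENGTH], in metres


def b_normal(x: float) -> float:
    """Component of B along +y (the rectangle's normal) at y = 0, x > 0."""
    return MU_0 * CURRENT / (2 * math.pi * x)


def flux_midpoint(n: int = 2000) -> float:
    """Midpoint-rule estimate of the surface integral of B over the rectangle.

    B does not vary with z, so the z-integral is just a factor of LENGTH.
    """
    dx = (X_MAX - X_MIN) / n
    return sum(b_normal(X_MIN + (i + 0.5) * dx) for i in range(n)) * dx * LENGTH


exact = MU_0 * CURRENT * LENGTH / (2 * math.pi) * math.log(X_MAX / X_MIN)
print(flux_midpoint(), exact)   # both in webers
```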