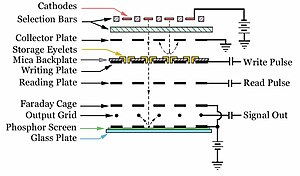

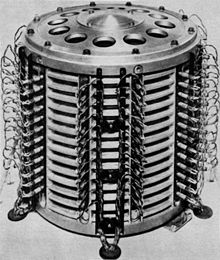

The Williams tube, or the Williams–Kilburn tube, named after its inventors Freddie Williams and Tom Kilburn, is an early form of computer memory.[1][2] It was the first random-access digital storage device, and was used successfully in several early computers.[3]

The Williams tube works by displaying a grid of dots on a cathode-ray tube (CRT). Due to the way CRTs work, this creates a small charge of static electricity over each dot. The charge at the location of each dot is read by a thin metal sheet just in front of the display. Since the display fades over time, it is periodically refreshed. The tube operates faster than earlier acoustic delay-line memory, at the speed of the electrons inside the vacuum tube rather than at the speed of sound. The system was adversely affected by nearby electrical fields, and required frequent adjustment to remain operational. Williams–Kilburn tubes were used primarily on high-speed computer designs.

Williams and Kilburn applied for British patents on 11 December 1946,[4] and 2 October 1947,[5] followed by United States patent applications on 10 December 1947,[6] and 16 May 1949.[7]

https://en.wikipedia.org/wiki/Williams_tube

Delay-line memory is a form of computer memory, now obsolete, that was used on some of the earliest digital computers. Like many modern forms of electronic computer memory, delay-line memory was a refreshable memory, but as opposed to modern random-access memory, delay-line memory was sequential-access.

Analog delay line technology had been used since the 1920s to delay the propagation of analog signals. When a delay line is used as a memory device, an amplifier and a pulse shaper are connected between the output of the delay line and the input. These devices recirculate the signals from the output back into the input, creating a loop that maintains the signal as long as power is applied. The shaper ensures the pulses remain well-formed, removing any degradation due to losses in the medium.
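The role of the amplifier and pulse shaper in the loop can be illustrated with a toy model. This is a minimal sketch, not a model of any historical circuit: the per-trip loss factor, the 0.5 decision threshold, and the names `reshape` and `circulate_once` are all assumptions chosen for illustration.

```python
def reshape(amplitude: float, threshold: float = 0.5) -> float:
    """Pulse shaper: restore a degraded pulse to a clean 1.0 or 0.0.

    The 0.5 threshold is an assumed value for this sketch.
    """
    return 1.0 if amplitude >= threshold else 0.0


def circulate_once(pulses: list[float], loss: float = 0.8, shaped: bool = True) -> list[float]:
    """One trip through the delay medium, then back to the input.

    `loss` is a hypothetical attenuation factor for one trip through the medium.
    With shaped=False the pulses are fed back exactly as they emerged,
    so the degradation accumulates from trip to trip.
    """
    attenuated = [p * loss for p in pulses]
    return [reshape(p) for p in attenuated] if shaped else attenuated


stored = [1.0, 0.0, 1.0, 1.0]

with_shaper = stored
without_shaper = stored
for _ in range(50):
    with_shaper = circulate_once(with_shaper)
    without_shaper = circulate_once(without_shaper, shaped=False)

print(with_shaper)          # the stored pattern is still intact
print(max(without_shaper))  # the pulses have faded far below the threshold
```

With regeneration on every trip the pattern survives indefinitely; without it, the losses compound and the stored pulses fade into the noise.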

The memory capacity is found by dividing the time it takes for data to circulate through the delay line by the time taken to transmit one bit. Early delay-line memory systems had capacities of a few thousand bits, with recirculation times measured in microseconds. To read or write a particular bit stored in such a memory, it is necessary to wait for that bit to circulate through the delay line into the electronics. The delay to read or write any particular bit is no longer than the recirculation time.
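The capacity and access-delay relationships can be written out as a short calculation. The sketch below uses integer nanoseconds to avoid rounding problems; the 500 µs recirculation time and 500 ns bit time are illustrative assumptions, not figures for any particular machine, and the function names are hypothetical.

```python
def capacity_bits(recirculation_ns: int, bit_time_ns: int) -> int:
    """Bits held in the line: recirculation time divided by the time per bit."""
    if recirculation_ns <= 0 or bit_time_ns <= 0:
        raise ValueError("both times must be positive")
    return recirculation_ns // bit_time_ns


def worst_case_wait_ns(recirculation_ns: int) -> int:
    """The wanted bit has just passed the electronics, so it takes one full trip to return."""
    return recirculation_ns


RECIRCULATION_NS = 500_000  # assumed: 500 microseconds per trip
BIT_TIME_NS = 500           # assumed: 500 nanoseconds per bit

print(capacity_bits(RECIRCULATION_NS, BIT_TIME_NS))  # 1000
print(worst_case_wait_ns(RECIRCULATION_NS))          # 500000
```

Because access is sequential, lengthening the line to store more bits raises the worst-case wait by the same proportion.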

Use of a delay line for a computer memory was invented by J. Presper Eckert in the mid-1940s for use in computers such as the EDVAC and the UNIVAC I. Eckert and John Mauchly applied for a patent for a delay-line memory system on October 31, 1947; the patent was issued in 1953.[1] This patent focused on mercury delay lines, but it also discussed delay lines made of strings of inductors and capacitors, magnetostrictive delay lines, and delay lines built using rotating disks to transfer data to a read head at one point on the circumference from a write head elsewhere around the circumference.

https://en.wikipedia.org/wiki/Delay-line_memory

Mellon optical memory was an early form of computer memory invented at the Mellon Institute (today part of Carnegie Mellon University) in 1951.[1][2] The device used a combination of photoemissive and phosphorescent materials to produce a "light loop" between two surfaces. The presence or lack of light, detected by a photocell, represented a one or zero. Although promising, the system was rendered obsolete with the introduction of magnetic-core memory in the early 1950s. It appears that the system was never used in production.

Description

The main memory element of the Mellon device consisted of a very large (television sized) square vacuum tube consisting of two slightly separated flat glass plates. The inner side of one of the plates was coated with a photoemissive material that released electrons when struck by light. The inside of the other plate was coated with a phosphorescent material, that would release light when struck by electrons.

The tube was charged with a high electrical voltage. When an external source of light struck the photoemissive layer, it would release a shower of electrons. The electrons would be pulled toward the positive charge on the phosphorescent layer, traveling through the vacuum. When they struck the phosphorescent layer, they would release a shower of photons (light) travelling in all directions. Some of these photons would travel back to the photoemissive layer, where they would cause a second shower of electrons to be released. To ensure that the light did not activate nearby areas of the photoemissive material, a baffle was used inside the tube, dividing the device up into a grid of cells.

This cycle, in which electrons striking the phosphor produce light and that light in turn releases more electrons, is what provided the memory action. The process would continue only for a short time; the energy emitted as light by the phosphorescent layer was much smaller than the energy it absorbed from the electrons, so the total amount of light in the cell faded away at a rate determined by the characteristics of the phosphorescent material.

Overall the system was similar to the better-known Williams tube. The Williams tube used the phosphorescent front of a single CRT to create small spots of static electricity on a plate arranged in front of the tube. However, the stability of these dots proved difficult to maintain in the presence of external electrical signals, which were common in computer settings. The Mellon system replaced the static charges with light, which was much more resistant to external influence.

Writing

Writing to the cell was accomplished by an external cathode ray tube (CRT) arranged in front of the photoemissive side of the grid. Cells were activated by using the deflection coils in the CRT to pull the beam into position in front of the cell, lighting up the front of the tube in that location. This initial pulse of light, focussed through a lens, would set the cell to the "on" state. Due to the way the photoemissive layer worked, focusing light on it again when it was already "lit up" would overload the material, stopping electrons from flowing out the other side into the interior of the cell. When the external light was then removed, the cell was dark, turning it off.

Reading

Reading the cells was accomplished by a grid of photocells arranged behind the phosphorescent layer, which emitted photons omnidirectionally. This allowed the cells to be read from the back of the device, as long as the phosphorescent layer was thin enough. To form a complete memory the system was arranged to be regenerative, with the output of the photocells being amplified and sent back into the CRT to refresh the cells periodically.

References

- Eckert Jr., J.P., "A Survey of Digital Computer Memory Systems", Proceedings of the IRE, October 1953. Reprinted in IEEE Annals of the History of Computing, Vol.20, No.4, 1998.

https://en.wikipedia.org/wiki/Mellon_optical_memory

The Selectron was an early form of digital computer memory developed by Jan A. Rajchman and his group at the Radio Corporation of America (RCA) under the direction of Vladimir K. Zworykin. It was a vacuum tube that stored digital data as electrostatic charges using technology similar to the Williams tube storage device. The team was never able to produce a commercially viable form of Selectron before magnetic-core memory became almost universal.

Development

Development of Selectron started in 1946 at the behest of John von Neumann of the Institute for Advanced Study,[1] who was in the midst of designing the IAS machine and was looking for a new form of high-speed memory.

RCA's original design concept had a capacity of 4096 bits, with a planned production of 200 by the end of 1946. They found the device to be much more difficult to build than expected, and the tubes were still not available by the middle of 1948. As development dragged on, the IAS machine was forced to switch to Williams tubes for storage, and the primary customer for Selectron disappeared. RCA lost interest in the design and assigned its engineers to improve televisions.[2]

A contract from the US Air Force led to a re-examination of the device in a 256-bit form. Rand Corporation took advantage of this project to switch their own IAS machine, the JOHNNIAC, to this new version of the Selectron, using 80 of them to provide 512 40-bit words of main memory. They signed a development contract with RCA to produce enough tubes for their machine at a projected cost of $500 per tube ($5631 in 2021).[2]
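The JOHNNIAC figures can be checked with a few lines of arithmetic. All inputs below come from the paragraph above; the total projected tube cost is derived here from those inputs and is not a figure stated in the source.

```python
TUBES = 80               # Selectrons in the JOHNNIAC memory
BITS_PER_TUBE = 256      # capacity of the smaller Selectron
WORD_BITS = 40           # JOHNNIAC word length
COST_PER_TUBE_USD = 500  # projected cost per tube

total_bits = TUBES * BITS_PER_TUBE
words, leftover_bits = divmod(total_bits, WORD_BITS)
projected_tube_cost = TUBES * COST_PER_TUBE_USD  # derived, not stated in the text

print(total_bits)           # 20480
print(words)                # 512
print(leftover_bits)        # 0
print(projected_tube_cost)  # 40000
```

The 80 tubes divide evenly into 512 words of 40 bits, which matches the memory size given in the text.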

Around this time IBM expressed an interest in the Selectron as well, but this did not lead to additional production. As a result, RCA assigned their engineers to color television development, and put the Selectron in the hands of "the mothers-in-law of two deserving employees (the Chairman of the Board and the President)."[2]

Both the Selectron and the Williams tube were superseded in the market by the compact and cost-effective magnetic-core memory in the early 1950s. The JOHNNIAC developers had decided to switch to core even before the first Selectron-based version had been completed.[2]

Principle of operation

Electrostatic storage

The Williams tube was an example of a general class of cathode ray tube (CRT) devices known as storage tubes.

The primary function of a conventional CRT is to display an image by lighting phosphor using a beam of electrons fired at it from an electron gun at the back of the tube. The target point of the beam is steered around the front of the tube through the use of deflection magnets or electrostatic plates.

Storage tubes were based on CRTs, sometimes unmodified. They relied on two normally undesirable principles of phosphor used in the tubes. One was that when electrons from the CRT's electron gun struck the phosphor to light it, some of the electrons "stuck" to the tube and caused a localized static electric charge to build up. The second was that the phosphor, like many materials, also released new electrons when struck by an electron beam, a process known as secondary emission.[3]

Secondary emission had the useful feature that the rate of electron release was significantly non-linear. When a voltage was applied that crossed a certain threshold, the rate of emission increased dramatically. This caused the lit spot to rapidly decay, which also caused any stuck electrons to be released as well. Visual systems used this process to erase the display, causing any stored pattern to rapidly fade. For computer uses it was the rapid release of the stuck charge that allowed it to be used for storage.

In the Williams tube, the electron gun at the back of an otherwise typical CRT is used to deposit a series of small patterns representing a 1 or 0 on the phosphor in a grid representing memory locations. To read the display, the beam scanned the tube again, this time set to a voltage very close to that of the secondary emission threshold. The patterns were selected to bias the tube very slightly positive or negative. When the stored static electricity was added to the voltage of the beam, the total voltage either crossed the secondary emission threshold or didn't. If it crossed the threshold, a burst of electrons was released as the dot decayed. This burst was read capacitively on a metal plate placed just in front of the display side of the tube.[4]
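The read decision described above amounts to a threshold comparison. The following is a deliberately simplified sketch: the voltages are arbitrary integer units invented for illustration, the function name is hypothetical, and no attempt is made to say which polarity stood for 1 or 0, since the text does not specify it.

```python
def burst_released(beam_voltage: int, stored_offset: int, threshold: int) -> bool:
    """True if beam voltage plus stored charge crosses the secondary emission threshold."""
    return beam_voltage + stored_offset > threshold


THRESHOLD = 100     # assumed secondary emission threshold (arbitrary units)
BEAM_VOLTAGE = 99   # read beam set very close to, but below, the threshold

print(burst_released(BEAM_VOLTAGE, +2, THRESHOLD))  # True: slightly positive spot crosses
print(burst_released(BEAM_VOLTAGE, -2, THRESHOLD))  # False: slightly negative spot does not
```

Because the beam sits so close to the threshold, even a small stored offset decides the outcome, which is also why the scheme was sensitive to stray electrical fields.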

There were four general classes of storage tubes: the "surface redistribution type" represented by the Williams tube; the "barrier grid" system, which was unsuccessfully commercialized by RCA as the Radechon tube; the "sticking potential" type, which was not used commercially; and the "holding beam" concept, of which the Selectron is a specific example.[5]

Holding beam concept

In the most basic implementation, the holding beam tube uses three electron guns: one for writing, one for reading, and a third "holding gun" that maintains the pattern. The general operation is very similar to the Williams tube in concept. The main difference was the holding gun, which fired continually and unfocussed so that it covered the entire storage area on the phosphor. This caused the phosphor to be continually charged to a selected voltage, somewhat below that of the secondary emission threshold.[6]

Writing was accomplished by firing the writing gun at low voltage in a fashion similar to the Williams tube, adding a further voltage to the phosphor. Thus the storage pattern was the slight difference between two voltages stored on the tube, typically only a few tens of volts different.[6] In comparison, the Williams tube used much higher voltages, producing a pattern that could only be stored for a short period before it decayed below readability.

Reading was accomplished by scanning the reading gun across the storage area. This gun was set to a voltage that would cross the secondary emission threshold for the entire display. If the scanned area held the holding gun potential, a certain number of electrons would be released; if it held the writing gun potential, the number would be higher. The electrons were read on a grid of fine wires placed behind the display, making the system entirely self-contained. In contrast, the Williams tube's read plate was in front of the tube, and required continual mechanical adjustment to work properly.[6] The grid also had the advantage of breaking the display into individual spots without requiring the tight focus of the Williams system.

General operation was the same as the Williams system, but the holding concept had two major advantages. One was that it operated at much lower voltage differences and was thus able to safely store data for a longer period of time. The other was that the same deflection magnet drivers could be sent to several electron guns to produce a single larger device with no increase in complexity of the electronics.

Design

The Selectron further modified the basic holding gun concept through the use of individual metal eyelets that were used to store additional charge in a more predictable and long-lasting fashion.

Unlike a CRT where the electron gun is a single point source consisting of a filament and single charged accelerator, in the Selectron the "gun" is a plate and the accelerator is a grid of wires (thus borrowing some design notes from the barrier-grid tube). Switching circuits allow voltages to be applied to the wires to turn them on or off. When the gun fires through the eyelets, it is slightly defocussed. Some of the electrons strike the eyelet and deposit a charge on it.

The original 4096-bit Selectron[7] was a 10-inch-long (250 mm) by 3-inch-diameter (76 mm) vacuum tube configured as 1024 by 4 bits. It had an indirectly heated cathode running up the middle, surrounded by two separate sets of wires — one radial, one axial — forming a cylindrical grid array, and finally a dielectric storage material coating on the inside of four segments of an enclosing metal cylinder, called the signal plates. The bits were stored as discrete regions of charge on the smooth surfaces of the signal plates.

The two sets of orthogonal grid wires were normally "biased" slightly positive, so that the electrons from the cathode were accelerated through the grid to reach the dielectric. The continuous flow of electrons allowed the stored charge to be continuously regenerated by the secondary emission of electrons. To select a bit to be read from or written to, all but two adjacent wires on each of the two grids were biased negative, allowing current to flow to the dielectric at one location only.
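The selection scheme can be sketched as a coincidence test on two sets of wires. This is an illustrative model only: it treats each grid as a row of parallel wires with a storage "window" between every adjacent pair, and the function names and the small 4-by-4 size are assumptions, not details of the actual tube.

```python
def wire_biases(n_windows: int, selected: int) -> list[bool]:
    """Bias for each of the n_windows + 1 parallel wires of one grid.

    True means biased positive (electrons may pass); False means biased negative.
    Only the two adjacent wires bounding the selected window stay positive.
    """
    if not 0 <= selected < n_windows:
        raise ValueError("selected window out of range")
    return [i in (selected, selected + 1) for i in range(n_windows + 1)]


def open_locations(rows: int, cols: int, sel_row: int, sel_col: int) -> list[tuple[int, int]]:
    """Locations where both bounding wires of both grids are positive."""
    r = wire_biases(rows, sel_row)
    c = wire_biases(cols, sel_col)
    return [
        (i, j)
        for i in range(rows)
        for j in range(cols)
        if r[i] and r[i + 1] and c[j] and c[j + 1]
    ]


print(open_locations(4, 4, sel_row=2, sel_col=1))  # [(2, 1)]
```

A window next to the selected one shares only one positive wire with it, so it stays closed; current reaches the dielectric at exactly one location.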

In this respect, the Selectron works in the opposite sense of the Williams tube. In the Williams tube, the beam is continually scanning in a read/write cycle which is also used to regenerate data. In contrast, the Selectron is almost always regenerating the entire tube, only breaking this periodically to do actual reads and writes. This not only made operation faster due to the lack of required pauses but also meant the data was much more reliable as it was constantly refreshed.

Writing was accomplished by selecting a bit, as above, and then sending a pulse of potential, either positive or negative, to the signal plate. With a bit selected, electrons would be pulled onto (with a positive potential) or pushed from (negative potential) the dielectric. When the bias on the grid was dropped, the electrons were trapped on the dielectric as a spot of static electricity.

To read from the device, a bit location was selected and a pulse sent from the cathode. If the dielectric for that bit contained a charge, the electrons would be pushed off the dielectric and read as a brief pulse of current in the signal plate. No such pulse meant that the dielectric must not have held a charge.

The smaller capacity 256-bit (128 by 2 bits) "production" device[8] was in a similar vacuum-tube envelope. It was built with two storage arrays of discrete "eyelets" on a rectangular plate, separated by a row of eight cathodes. The pin count was reduced from 44 for the 4096-bit device down to 31 pins and two coaxial signal output connectors. This version included visible green phosphors in each eyelet[citation needed] so that the bit status could also be read by eye.

Patents

- U.S. Patent 2,494,670 Cylindrical 4096-bit Selectron

- U.S. Patent 2,604,606 Planar 256-bit Selectron

References

Citations

- Rajchman, JA (1951). "The Selective Electrostatic Storage Tube". RCA Review. 12 (1): 53–97.

Bibliography

- Eckert Jr., J. Presper (October 1953). "A Survey of Digital Computer Memory Systems" (PDF). Proceedings of the IRE. 41 (10): 1393–1406. doi:10.1109/jrproc.1953.274316. S2CID 8564797. Republished in IEEE Annals of the History of Computing, Volume 20 Number 4 (October 1998), pp. 11–28 doi:10.1109/85.728227

- Knoll, Max; Kazan, B. (1952). Storage Tubes and Their Basic Principles (PDF). John Wiley and Sons.

https://en.wikipedia.org/wiki/Selectron_tube

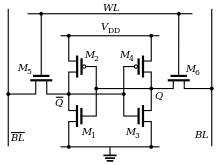

Thyristor RAM (T-RAM) is a type of random-access memory, dating from 2009, invented and developed by T-RAM Semiconductor. It departs from the usual memory cell designs by combining the strengths of DRAM and SRAM: high density and high speed.[citation needed] The technology, called thin capacitively-coupled thyristor,[1] exploits the electrical property known as negative differential resistance to create memory cells capable of very high packing densities. As a result, the memory is highly scalable and already has a storage density several times higher than that of conventional 6T SRAM. The next generation of T-RAM was expected to have the same density as DRAM.[by whom?]

The cell design combines the space efficiency of DRAM with the speed of SRAM. A T-RAM cell resembles a conventional 6T SRAM cell (an SRAM cell built from six transistors), but differs substantially in that the CMOS latch, which accounts for four of the six transistors in each SRAM cell, is replaced by the bipolar PNP-NPN latch of a single thyristor. This significantly reduces the area occupied by each cell, which is what gives T-RAM its storage density advantage over SRAM.

T-RAM is claimed to offer the best density-to-performance ratio among integrated memories, matching the performance of SRAM while allowing 2 to 3 times greater storage density and lower power consumption.

References

- "T-RAM". Archived from the original on 2009-05-23. Retrieved 2009-09-19. Description of the technology

External links

- T-RAM Semiconductor

- T-RAM Description

- Farid Nemati (T-RAM Semiconductor), Thyristor RAM (T-RAM): A High-Speed High-Density Embedded Memory. Technology for Nano-scale CMOS / 2007 Hot Chips Conference, August 21, 2007

- EE Times: GlobalFoundries to apply thyristor-RAM at 32-nm node

- Semiconductor International: GlobalFoundries Outlines 22 nm Roadmap

https://en.wikipedia.org/wiki/T-RAM

To filter out static objects, two pulses were compared, and returns with the same delay times were removed. To do this, the signal sent from the receiver to the display was split in two, with one path leading directly to the display and the second leading to a delay unit. The delay was carefully tuned to be some multiple of the time between pulses, which is the reciprocal of the pulse repetition frequency. As a result, the delayed signal from an earlier pulse exited the delay unit at the same time that the signal from a newer pulse was received from the antenna. One of the signals was electrically inverted, typically the one from the delay, and the two signals were then combined and sent to the display. Any return that appeared at the same location in both pulses was nullified by the inverted signal from the previous pulse, leaving only the moving objects on the display.
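The cancellation step can be sketched numerically. In this minimal example each sweep is a list of echo strengths indexed by range; the sample values and the function name `cancel_static_returns` are invented for illustration, and adding the inverted delayed signal is modelled as a simple subtraction.

```python
def cancel_static_returns(previous_sweep: list[int], current_sweep: list[int]) -> list[int]:
    """Combine the current sweep with the inverted, delayed previous sweep."""
    if len(previous_sweep) != len(current_sweep):
        raise ValueError("sweeps must cover the same range bins")
    return [cur - prev for prev, cur in zip(previous_sweep, current_sweep)]


# Index = range bin. A fixed object sits in bin 1; a target moves from bin 4 to bin 5.
previous_sweep = [0, 5, 0, 0, 3, 0]
current_sweep = [0, 5, 0, 0, 0, 3]

residue = cancel_static_returns(previous_sweep, current_sweep)
print(residue)                    # [0, 0, 0, 0, -3, 3]
print([abs(r) for r in residue])  # [0, 0, 0, 0, 3, 3]
```

The fixed object in bin 1 cancels exactly, while the moving target leaves a residue in both the bin it left and the bin it entered.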

Several different types of delay systems were invented for this purpose, with one common principle being that the information was stored acoustically in a medium. MIT experimented with a number of systems, including glass, quartz, steel and lead. The Japanese deployed a system consisting of a quartz element with a powdered glass coating that reduced surface waves that interfered with proper reception. The United States Naval Research Laboratory used steel rods wrapped into a helix, but this was useful only for low frequencies under 1 MHz. Raytheon used a magnesium alloy originally developed for making bells.[2]

https://en.wikipedia.org/wiki/Delay-line_memory

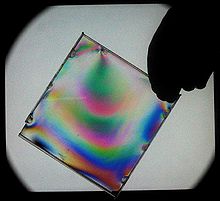

Magnetostriction (cf. electrostriction) is a property of magnetic materials that causes them to change their shape or dimensions during the process of magnetization. The variation of materials' magnetization due to the applied magnetic field changes the magnetostrictive strain until reaching its saturation value, λ. The effect was first identified in 1842 by James Joule when observing a sample of iron.[1]

This effect causes energy loss due to frictional heating in susceptible ferromagnetic cores. The effect is also responsible for the low-pitched humming sound that can be heard coming from transformers, where oscillating AC currents produce a changing magnetic field.[2]

https://en.wikipedia.org/wiki/Magnetostriction

Explanation

Internally, ferromagnetic materials have a structure that is divided into domains, each of which is a region of uniform magnetization. When a magnetic field is applied, the boundaries between the domains shift and the domains rotate; both of these effects cause a change in the material's dimensions. The reason that a change in the magnetic domains of a material results in a change in the material's dimensions is a consequence of magnetocrystalline anisotropy; it takes more energy to magnetize a crystalline material in one direction than in another. If a magnetic field is applied to the material at an angle to an easy axis of magnetization, the material will tend to rearrange its structure so that an easy axis is aligned with the field to minimize the free energy of the system. Since different crystal directions are associated with different lengths, this effect induces a strain in the material.[3]

The reciprocal effect, the change of the magnetic susceptibility (response to an applied field) of a material when subjected to a mechanical stress, is called the Villari effect. Two other effects are related to magnetostriction: the Matteucci effect is the creation of a helical anisotropy of the susceptibility of a magnetostrictive material when subjected to a torque and the Wiedemann effect is the twisting of these materials when a helical magnetic field is applied to them.

The Villari reversal is the change in sign of the magnetostriction of iron from positive to negative when exposed to magnetic fields of approximately 40 kA/m.

On magnetization, a magnetic material undergoes changes in volume which are small: of the order of 10⁻⁶.

Magnetostrictive hysteresis loop

Like flux density, the magnetostriction also exhibits hysteresis versus the strength of the magnetizing field. The shape of this hysteresis loop (called "dragonfly loop") can be reproduced using the Jiles-Atherton model.[4]

Magnetostrictive materials

Magnetostrictive materials can convert magnetic energy into kinetic energy, or the reverse, and are used to build actuators and sensors.

The property can be quantified by the magnetostrictive coefficient, λ, which may be positive or negative and is defined as the fractional change in length as the magnetization of the material increases from zero to the saturation value. The effect is responsible for the familiar "electric hum" which can be heard near transformers and high power electrical devices.

Cobalt exhibits the largest room-temperature magnetostriction of a pure element at 60 microstrains. Among alloys, the highest known magnetostriction is exhibited by Terfenol-D (Ter for terbium, Fe for iron, NOL for Naval Ordnance Laboratory, and D for dysprosium). Terfenol-D, TbₓDy₁₋ₓFe₂, exhibits about 2,000 microstrains in a field of 160 kA/m (2 kOe) at room temperature and is the most commonly used engineering magnetostrictive material.[5] Galfenol, FeₓGa₁₋ₓ, and Alfer, FeₓAl₁₋ₓ, are newer alloys that exhibit 200–400 microstrains at lower applied fields (~200 Oe) and have better mechanical properties than the brittle Terfenol-D. Both of these alloys have <100> easy axes for magnetostriction and demonstrate sufficient ductility for sensor and actuator applications.[6]

Another very common magnetostrictive material is the amorphous alloy Fe81Si3.5B13.5C2, sold under the trade name Metglas 2605SC. Favourable properties of this material are its high saturation-magnetostriction constant, λ, of about 20 microstrains or more, coupled with a low magnetic-anisotropy field strength, HA, of less than 1 kA/m (to reach magnetic saturation). Metglas 2605SC also exhibits a very strong ΔE-effect, with reductions in the effective Young's modulus of up to about 80% in bulk. This helps build energy-efficient magnetic MEMS.[citation needed]

Cobalt ferrite, CoFe₂O₄ (CoO·Fe₂O₃), is also used mainly in magnetostrictive applications such as sensors and actuators, thanks to its high saturation magnetostriction (~200 parts per million).[7] Because it contains no rare-earth elements, it is a good substitute for Terfenol-D.[8] Moreover, its magnetostrictive properties can be tuned by inducing a magnetic uniaxial anisotropy.[9] This can be done by magnetic annealing,[10] magnetic field assisted compaction,[11] or reaction under uniaxial pressure.[12] This last solution has the advantage of being ultrafast (20 min), thanks to the use of spark plasma sintering.

In early sonar transducers during World War II, nickel was used as a magnetostrictive material. To alleviate the shortage of nickel, the Japanese navy used an iron-aluminium alloy from the Alperm family.

Mechanical behaviors of magnetostrictive alloys

Effect of microstructure on elastic strain

Single-crystal alloys exhibit superior microstrain, but are vulnerable to yielding due to the anisotropic mechanical properties of most metals. It has been observed that for polycrystalline alloys with a high area coverage of preferential grains for microstrain, the mechanical properties (ductility) of magnetostrictive alloys can be significantly improved. Targeted metallurgical processing steps promote abnormal grain growth of {011} grains in galfenol and alfenol thin sheets, which contain two easy axes for magnetic domain alignment during magnetostriction. This can be accomplished by adding particles such as boride species [13] and niobium carbide (NbC) [14] during initial chill casting of the ingot.

For a polycrystalline alloy, an established formula for the magnetostriction, λ, from known directional microstrain measurements is:[15]

$$\lambda_s = \frac{1}{5}\left(2\lambda_{100} + 3\lambda_{111}\right)$$
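As a quick numerical illustration of the polycrystalline formula, the sketch below evaluates it for made-up directional values. The inputs are hypothetical numbers in microstrain, not measurements of any real alloy, and the function name is an assumption.

```python
def polycrystal_magnetostriction(lambda_100: float, lambda_111: float) -> float:
    """Saturation magnetostriction of a polycrystal: (2*lambda_100 + 3*lambda_111) / 5."""
    return (2 * lambda_100 + 3 * lambda_111) / 5


# Hypothetical directional values in microstrain (not data for a real material).
print(polycrystal_magnetostriction(100.0, -20.0))  # 28.0

# If both directions have the same value, the formula returns that value.
print(polycrystal_magnetostriction(50.0, 50.0))    # 50.0
```

The weights 2/5 and 3/5 sum to one, so the result always lies between the two directional values, weighted toward the <111> term.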

During subsequent hot rolling and recrystallization steps, particle strengthening occurs in which the particles introduce a “pinning” force at grain boundaries that hinders normal (stochastic) grain growth in an annealing step assisted by a H2S atmosphere. Thus, single-crystal-like texture (~90% {011} grain coverage) is attainable, reducing the interference with magnetic domain alignment and increasing microstrain attainable for polycrystalline alloys as measured by semiconducting strain gauges.[16] These surface textures can be visualized using electron backscatter diffraction (EBSD) or related diffraction techniques.

Compressive stress to induce domain alignment

For actuator applications, maximum rotation of magnetic moments leads to the highest possible magnetostriction output. This can be achieved by processing techniques such as stress annealing and field annealing. However, mechanical pre-stresses can also be applied to thin sheets to induce alignment perpendicular to actuation as long as the stress is below the buckling limit. For example, it has been demonstrated that applied compressive pre-stress of up to ~50 MPa can result in an increase of magnetostriction by ~90%. This is hypothesized to be due to a "jump" in initial alignment of domains perpendicular to applied stress and improved final alignment parallel to applied stress.[17]

Constitutive behavior of magnetostrictive materials

These materials generally show non-linear behavior with a change in applied magnetic field or stress. For small magnetic fields, a linear piezomagnetic constitutive[18] model is sufficient. Non-linear magnetic behavior is captured using a classical macroscopic model such as the Preisach model[19] or the Jiles-Atherton model.[20] For capturing magneto-mechanical behavior, Armstrong[21] proposed an "energy average" approach. More recently, Wahi et al.[22] have proposed a computationally efficient constitutive model wherein constitutive behavior is captured using a "locally linearizing" scheme.

Applications

- Electronic article surveillance – using magnetostriction to prevent shoplifting

- Magnetostrictive delay lines – an earlier form of computer memory

- Magnetostrictive loudspeakers and headphones

See also

- Electromagnetically induced acoustic noise and vibration

- Inverse magnetostrictive effect

- Wiedemann effect – a torsional force caused by magnetostriction

- Magnetomechanical effects for a collection of similar effects

- Magnetocaloric effect

- Electrostriction

- Piezoelectricity

- Piezomagnetism

- SoundBug

- FeONIC – developer of audio products using magnetostriction

- Terfenol-D

- Galfenol

References

- Wahi, Sajan K.; Kumar, Manik; Santapuri, Sushma; Dapino, Marcelo J. (2019-06-07). "Computationally efficient locally linearized constitutive model for magnetostrictive materials". Journal of Applied Physics. 125 (21): 215108. Bibcode:2019JAP...125u5108W. doi:10.1063/1.5086953. ISSN 0021-8979. S2CID 189954942.

External links

- Magnetostriction

- "Magnetostriction and transformer noise" (PDF). Archived from the original (PDF) on 2006-05-10.

- Invisible Speakers from Feonic that use Magnetostriction

- Magnetostrictive alloy maker: REMA-CN

https://en.wikipedia.org/wiki/Magnetostriction

https://en.wikipedia.org/wiki/Alternating_current

Alternating current (AC) is an electric current which periodically reverses direction and changes its magnitude continuously with time in contrast to direct current (DC), which flows only in one direction. Alternating current is the form in which electric power is delivered to businesses and residences, and it is the form of electrical energy that consumers typically use when they plug kitchen appliances, televisions, fans and electric lamps into a wall socket. A common source of DC power is a battery cell in a flashlight. The abbreviations AC and DC are often used to mean simply alternating and direct, respectively, as when they modify current or voltage.[1][2]

The usual waveform of alternating current in most electric power circuits is a sine wave, whose positive half-period corresponds with positive direction of the current and vice versa. In certain applications, like guitar amplifiers, different waveforms are used, such as triangular waves or square waves. Audio and radio signals carried on electrical wires are also examples of alternating current. These types of alternating current carry information such as sound (audio) or images (video) sometimes carried by modulation of an AC carrier signal. These currents typically alternate at higher frequencies than those used in power transmission.

https://en.wikipedia.org/wiki/Alternating_current

The pulse repetition frequency (PRF) is the number of pulses of a repeating signal in a specific time unit. The term is used within a number of technical disciplines, notably radar.

In radar, a radio signal of a particular carrier frequency is turned on and off; the term "frequency" refers to the carrier, while the PRF refers to the number of switches. Both are measured in cycles per second, or hertz. The PRF is normally much lower than the frequency. For instance, a typical World War II radar like the Type 7 GCI radar had a basic carrier frequency of 209 MHz (209 million cycles per second) and a PRF of 300 or 500 pulses per second. A related measure is the pulse width, the amount of time the transmitter is turned on during each pulse.

After producing a brief pulse of radio signal, the transmitter is turned off in order for the receiver units to hear the reflections of that signal off distant targets. Since the radio signal has to travel out to the target and back again, the required inter-pulse quiet period is a function of the radar's desired range. Longer periods are required for longer range signals, requiring lower PRFs. Conversely, higher PRFs produce shorter maximum ranges, but broadcast more pulses, and thus radio energy, in a given time. This creates stronger reflections that make detection easier. Radar systems must balance these two competing requirements.
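The link between the inter-pulse quiet period and range can be sketched numerically. The snippet below is a minimal illustration that assumes the usual idealization: an echo must complete its round trip before the next pulse is sent, so the limit is c / (2 × PRF). Pulse width and receiver recovery time are ignored, and the function name is chosen here for illustration.

```python
C = 299_792_458.0  # speed of light in vacuum, m/s


def max_unambiguous_range_m(prf_hz: float) -> float:
    """Farthest range whose echo returns before the next pulse is sent."""
    if prf_hz <= 0:
        raise ValueError("PRF must be positive")
    # The signal travels out and back, hence the factor of 2.
    return C / (2.0 * prf_hz)


# The two PRFs quoted above for the Type 7 GCI radar.
for prf in (300.0, 500.0):
    km = max_unambiguous_range_m(prf) / 1000.0
    print(f"PRF {prf:.0f} Hz -> about {km:.0f} km")
```

Under this idealization, lowering the PRF from 500 to 300 pulses per second extends the limit from roughly 300 km to roughly 500 km.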

Using older electronics, PRFs were generally fixed to a specific value, or might be switched among a limited set of possible values. This gives each radar system a characteristic PRF, which can be used in electronic warfare to identify the type or class of a particular platform such as a ship or aircraft, or in some cases, a particular unit. Radar warning receivers in aircraft include a library of common PRFs which can identify not only the type of radar, but in some cases the mode of operation. This allowed pilots to be warned when an SA-2 SAM battery had "locked on", for instance. Modern radar systems are generally able to smoothly change their PRF, pulse width and carrier frequency, making identification much more difficult.

Sonar and lidar systems also have PRFs, as does any pulsed system. In the case of sonar, the term pulse repetition rate (PRR) is more common, although it refers to the same concept.

Introduction

Electromagnetic (e.g. radio or light) waves are conceptually pure single frequency phenomena while pulses may be mathematically thought of as composed of a number of pure frequencies that sum and nullify in interactions that create a pulse train of the specific amplitudes, PRRs, base frequencies, phase characteristics, et cetera (See Fourier Analysis). The first term (PRF) is more common in device technical literature (Electrical Engineering and some sciences), and the latter (PRR) more commonly used in military-aerospace terminology (especially United States armed forces terminologies) and equipment specifications such as training and technical manuals for radar and sonar systems.

The reciprocal of PRF (or PRR) is called the pulse repetition time (PRT), pulse repetition interval (PRI), or inter-pulse period (IPP), which is the elapsed time from the beginning of one pulse to the beginning of the next pulse. The IPP term is normally used when referring to the quantity of PRT periods to be processed digitally. Each PRT has a fixed number of range gates, though not all of them are used. For example, the APY-1 radar used 128 IPPs with a fixed 50 range gates, producing 128 Doppler filters using an FFT. The number of range gates actually used differed on each of the five PRFs and was always less than 50.

Within radar technology PRF is important since it determines the maximum target range (Rmax) and maximum Doppler velocity (Vmax) that can be accurately determined by the radar.[1] Conversely, a high PRR/PRF can enhance target discrimination of nearer objects, such as a periscope or fast moving missile. This leads to use of low PRRs for search radar, and very high PRFs for fire control radars. Many dual-purpose and navigation radars—especially naval designs with variable PRRs—allow a skilled operator to adjust PRR to enhance and clarify the radar picture—for example in bad sea states where wave action generates false returns, and in general for less clutter, or perhaps a better return signal off a prominent landscape feature (e.g., a cliff).
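The trade-off between Rmax and Vmax can be made concrete with a short sketch. It assumes the common textbook convention that the unambiguous Doppler velocity is ±λ × PRF / 4 (a Doppler shift of 2v/λ sampled at the PRF), together with Rmax = c / (2 × PRF); other conventions differ by a factor of two. The function names are illustrative.

```python
C = 299_792_458.0  # speed of light in vacuum, m/s


def max_range_m(prf_hz: float) -> float:
    """Unambiguous range: the echo must return before the next pulse."""
    return C / (2.0 * prf_hz)


def max_velocity_m_s(prf_hz: float, carrier_hz: float) -> float:
    """Unambiguous radial velocity (plus or minus), assuming the
    Doppler shift 2*v/wavelength must stay below PRF/2."""
    wavelength_m = C / carrier_hz
    return wavelength_m * prf_hz / 4.0


carrier = 209e6  # carrier frequency quoted above for the Type 7 GCI radar
for prf in (300.0, 500.0, 5000.0):
    r = max_range_m(prf)
    v = max_velocity_m_s(prf, carrier)
    # The product r * v equals c * wavelength / 8, independent of PRF.
    print(f"PRF {prf:6.0f} Hz: Rmax {r / 1000:7.1f} km, Vmax {v:7.1f} m/s")
```

Because the product of the two limits depends only on the wavelength, raising the PRF to gain velocity coverage costs range in the same proportion.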

https://en.wikipedia.org/wiki/Pulse_repetition_frequency

A radar system consists principally of an antenna, a transmitter, a receiver, and a display. The antenna is connected to the transmitter, which sends out a brief pulse of radio energy before being disconnected again. The antenna is then connected to the receiver, which amplifies any reflected signals and sends them to the display. Objects farther from the radar return echoes later than those closer to the radar; the display shows each echo as a "blip" whose position can be measured against a scale.

https://en.wikipedia.org/wiki/Delay-line_memory

The first practical de-cluttering system based on the concept was developed by J. Presper Eckert at the University of Pennsylvania's Moore School of Electrical Engineering. His solution used a column of mercury with piezo crystal transducers (a combination of speaker and microphone) at either end. Signals from the radar amplifier were sent to the transducer at one end of the tube, which would generate a small wave in the mercury. The wave would quickly travel to the far end of the tube, where it would be read back out by the other transducer, inverted, and sent to the display. Careful mechanical arrangement was needed to ensure that the delay time matched the inter-pulse timing of the radar being used.

All of these systems were suitable for conversion into a computer memory. The key was to recycle the signals within the memory system, so they would not disappear after traveling through the delay. This was relatively easy to arrange with simple electronics.
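The recycling idea can be illustrated with a toy software model. This is only a sketch under simplifying assumptions: the medium is a fixed-length queue of bits, one bit emerges per clock tick, and the amplifier and pulse shaper are reduced to feeding the emerging bit straight back in. The class and method names are invented for this example.

```python
from collections import deque


class RecirculatingDelayLine:
    """Toy model of a delay line whose output is fed back to its input."""

    def __init__(self, length_bits: int):
        self.length = length_bits
        self.line = deque([0] * length_bits)  # bits in transit, output end first
        self.position = 0  # address of the bit now arriving at the output

    def tick(self, write_bit=None):
        """Advance one bit time and return the bit leaving the line.

        The bit is re-injected at the input unless a replacement is given.
        """
        out = self.line.popleft()
        self.line.append(out if write_bit is None else write_bit)
        self.position = (self.position + 1) % self.length
        return out

    def _wait_for(self, address: int) -> int:
        """Let bits circulate until the addressed bit reaches the output."""
        if not 0 <= address < self.length:
            raise IndexError("address outside the delay line")
        waited = 0
        while self.position != address:
            self.tick()
            waited += 1
        return waited

    def read(self, address: int):
        """Return (bit, number of bit times spent waiting)."""
        waited = self._wait_for(address)
        return self.tick(), waited

    def write(self, address: int, bit: int):
        """Substitute a new bit when the addressed slot comes around."""
        self._wait_for(address)
        self.tick(write_bit=bit)


memory = RecirculatingDelayLine(8)
memory.write(5, 1)
bit, waited = memory.read(5)
print(bit, waited)
```

The waiting loop is what makes the store sequential-access: a bit can only be read or replaced at the moment it emerges from the medium.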

https://en.wikipedia.org/wiki/Delay-line_memory

Mercury was used because its acoustic impedance is close to that of the piezoelectric quartz crystals; this minimized the energy loss and the echoes when the signal was transmitted from crystal to medium and back again. The high speed of sound in mercury (1450 m/s) meant that the time needed to wait for a pulse to arrive at the receiving end was less than it would have been with a slower medium, such as air (343.2 m/s), but it also meant that the total number of pulses that could be stored in any reasonably sized column of mercury was limited. Other technical drawbacks of mercury included its weight, its cost, and its toxicity. Moreover, to get the acoustic impedances to match as closely as possible, the mercury had to be kept at a constant temperature. The system heated the mercury to a uniform above-room temperature setting of 40 °C (104 °F), which made servicing the tubes hot and uncomfortable work. (Alan Turing proposed the use of gin as an ultrasonic delay medium, claiming that it had the necessary acoustic properties.[3])
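The trade-off between waiting time and capacity follows from simple arithmetic: the delay is the column length divided by the speed of sound, and the number of pulses in flight is that delay multiplied by the pulse rate. The sketch below uses the two speeds of sound quoted above; the 1.5 m column length and 500 kHz pulse rate are illustrative assumptions, not figures for any particular machine.

```python
def delay_line_capacity(length_m: float, speed_m_per_s: float, pulse_rate_hz: float):
    """Return (transit delay in seconds, whole pulses in flight)."""
    delay_s = length_m / speed_m_per_s
    return delay_s, int(delay_s * pulse_rate_hz)


LENGTH_M = 1.5           # assumed column length
PULSE_RATE_HZ = 500_000  # assumed pulse rate

delay_hg, bits_hg = delay_line_capacity(LENGTH_M, 1450.0, PULSE_RATE_HZ)   # mercury
delay_air, bits_air = delay_line_capacity(LENGTH_M, 343.2, PULSE_RATE_HZ)  # air

print(f"mercury: {delay_hg * 1e6:.0f} us delay, {bits_hg} pulses")
print(f"air:     {delay_air * 1e6:.0f} us delay, {bits_air} pulses")
```

For the same length and pulse rate, the faster medium delivers each pulse sooner but holds proportionally fewer of them.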

A considerable amount of engineering was needed to maintain a "clean" signal inside the tube. Large transducers were used to generate a very tight "beam" of sound that would not touch the walls of the tube, and care had to be taken to eliminate reflections from the far end of the tubes. The tightness of the beam then required considerable tuning to make sure that both transducers were pointed directly at each other. Since the speed of sound changes with temperature, the tubes were heated in large ovens to keep them at a precise temperature. Other systems instead adjusted the computer clock rate according to the ambient temperature to achieve the same effect.

EDSAC, the second full-scale stored-program digital computer, began operation with 256 35-bit words of memory, stored in 16 delay lines holding 560 bits each (words in the delay line were composed of 36 pulses; one pulse was used as a space between consecutive numbers).[4] The memory was later expanded to 512 words by adding a second set of 16 delay lines. In the UNIVAC I the capacity of an individual delay line was smaller: each column stored 120 bits (although the term "bit" was not in popular use at the time), requiring 7 large memory units with 18 columns each to make up a 1000-word store. Combined with their support circuitry and amplifiers, the memory subsystem formed its own walk-in room. The average access time was about 222 microseconds, which was considerably faster than the mechanical systems used on earlier computers.

CSIRAC, completed in November 1949, also used delay-line memory.

Some mercury delay-line memory devices produced audible sounds, which were described as akin to a human voice mumbling. This property gave rise to the slang term "mumble-tub" for these devices.

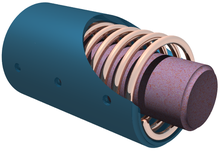

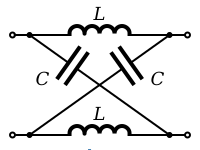

Magnetostrictive delay lines

A later version of the delay line used steel wires as the storage medium. Transducers were built by applying the magnetostrictive effect; small pieces of a magnetostrictive material, typically nickel, were attached to either side of the end of the wire, inside an electromagnet. When bits from the computer entered the magnets, the nickel would contract or expand (based on the polarity) and twist the end of the wire. The resulting torsional wave would then move down the wire just as the sound wave did down the mercury column.

Unlike the compressive wave used in earlier devices, torsional waves are considerably more resistant to problems caused by mechanical imperfections, so much so that the wires could be wound into a loose coil and pinned to a board. Due to their ability to be coiled, the wire-based systems could be built as "long" as needed and tended to hold considerably more data per unit; 1 kbit units were typical on a board only 1 foot square (~30 cm × 30 cm). This also meant that the time needed to find a particular bit was somewhat longer as it travelled through the wire, and access times on the order of 500 microseconds were typical.

Delay-line memory was far less expensive and far more reliable per bit than flip-flops made from tubes, and yet far faster than a latching relay. It was used right into the late 1960s, notably on commercial machines like the LEO I, Highgate Wood Telephone Exchange, various Ferranti machines, and the IBM 2848 Display Control. Delay-line memory was also used for video memory in early terminals, where one delay line would typically store 4 lines of characters (4 lines × 40 characters per line × 6 bits per character = 960 bits in one delay line). They were also used very successfully in several models of early desktop electronic calculator, including the Friden EC-130 (1964) and EC-132, the Olivetti Programma 101 desktop programmable calculator introduced in 1965, and the Litton Monroe Epic 2000 and 3000 programmable calculators of 1967.

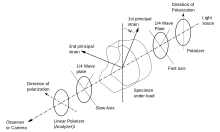

Piezoelectric delay lines

A similar solution to the magnetostrictive system was to use delay lines made entirely of a piezoelectric material, typically quartz. Current fed into one end of the crystal would generate a compressive wave that would flow to the other end, where it could be read. In effect, piezoelectric material simply replaced the mercury and transducers of a conventional mercury delay line with a single unit combining both. However, these solutions were fairly rare; growing crystals of the required quality in large sizes was not easy, which limited them to small sizes and thus small amounts of data storage.[5]

A better and more widespread use of piezoelectric delay lines was in European television sets. The European PAL standard for color broadcasts compares the signal from two successive lines of the image in order to avoid color shifting due to small phase shifts. By comparing two lines, one of which is inverted, the shifting is averaged, and the resulting signal more closely matches the original signal, even in the presence of interference. To compare the two lines, a piezoelectric delay unit that delays the signal by the duration of one line, 64 µs, is inserted in one of the two signal paths.[6] To produce the required delay in a crystal of convenient size, the delay unit is shaped to reflect the signal multiple times through the crystal, thereby greatly reducing the required size of the crystal and producing a small, rectangular device.
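The averaging of two successive lines can be sketched with complex numbers. This is a simplified model and not a description of any receiver circuit: the color signal is treated as a complex value U + jV, the transmitter is assumed to invert V on alternate lines, and both lines are assumed to suffer the same phase error. The function name is illustrative.

```python
import cmath
import math


def pal_average(u: float, v: float, phase_error_rad: float) -> complex:
    """Average a delayed line with the next line after re-inverting V."""
    chroma = complex(u, v)
    rotation = cmath.exp(1j * phase_error_rad)  # same error on both lines

    delayed_line = chroma * rotation             # line held in the 64 us delay
    current_line = chroma.conjugate() * rotation # next line, sent with V inverted
    restored = current_line.conjugate()          # receiver inverts V back

    # The two phase errors now have opposite signs and cancel in the average.
    return (delayed_line + restored) / 2


original = complex(0.3, 0.4)
result = pal_average(0.3, 0.4, math.radians(20))
print(math.degrees(cmath.phase(original)), math.degrees(cmath.phase(result)))
print(abs(original), abs(result))
```

In this model the hue (the angle of the complex value) is recovered exactly, while the saturation (its magnitude) is reduced by the cosine of the phase error.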

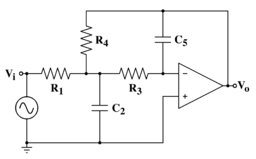

Electric delay lines

Electric delay lines are used for shorter delay times (nanoseconds to several microseconds). They consist of a long electric line or are made of discrete inductors and capacitors, which are arranged in a chain. To shorten the total length of the line, it can be wound around a metal tube, getting some more capacitance against ground and also more inductance due to the wire windings, which are lying close together.

Other examples are:

- short coaxial or microstrip lines for phase matching in high-frequency circuits or antennas,

- hollow resonator lines in magnetrons and klystrons, and helices in travelling-wave tubes, which match the velocity of the electrons to the velocity of the electromagnetic waves,

- undulators in free-electron lasers.
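For the chain of discrete inductors and capacitors described above, the delay can be estimated with the standard lumped-element approximations: each section delays the signal by about √(LC), the characteristic impedance is √(L/C), and the approximation holds only well below the cutoff frequency 1/(π√(LC)). The component values below are illustrative assumptions.

```python
import math


def lc_ladder(l_henry: float, c_farad: float, sections: int) -> dict:
    """Low-frequency estimates for a chain of identical LC sections."""
    per_section_s = math.sqrt(l_henry * c_farad)
    return {
        "delay_s": sections * per_section_s,
        "impedance_ohm": math.sqrt(l_henry / c_farad),
        "cutoff_hz": 1.0 / (math.pi * per_section_s),
    }


# Assumed values: 20 sections of 250 nH and 100 pF.
line = lc_ladder(250e-9, 100e-12, 20)
print(f"delay     {line['delay_s'] * 1e9:.1f} ns")
print(f"impedance {line['impedance_ohm']:.1f} ohm")
print(f"cutoff    {line['cutoff_hz'] / 1e6:.1f} MHz")
```

Adding sections lengthens the delay without changing the impedance, which is why such lines are built as long chains of small, identical elements.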

Another way to create a delay time is to implement a delay line in an integrated circuit storage device. This can be done digitally or with a discrete analogue method. The analogue method uses bucket-brigade devices or charge-coupled devices (CCD), which transport a stored electric charge stepwise from one end to the other. Both digital and analogue methods are bandwidth limited at the upper end to half the clock frequency, which determines the steps of transportation.

In modern computers operating at gigahertz speeds, millimeter differences in the length of conductors in a parallel data bus can cause data-bit skew, which can lead to data corruption or reduced processing performance. This is remedied by making all conductor paths of similar length, delaying the arrival time for what would otherwise be shorter travel distances by using zig-zagging traces.
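A rough sketch of the length-matching calculation follows. It assumes signals travel at c / √ε_eff and uses an effective permittivity of 4.0 purely as an illustrative value; real boards vary, and the trace lengths are invented for the example.

```python
import math

C_MM_PER_PS = 0.299792458  # speed of light in vacuum, mm per picosecond


def length_matching(trace_lengths_mm, eps_eff: float = 4.0):
    """Return (extra length to add to each trace, worst-case skew in ps)."""
    velocity = C_MM_PER_PS / math.sqrt(eps_eff)  # propagation speed, mm/ps
    longest = max(trace_lengths_mm)
    # Zig-zag padding that brings every trace up to the longest one.
    padding_mm = [longest - length for length in trace_lengths_mm]
    skew_ps = (longest - min(trace_lengths_mm)) / velocity
    return padding_mm, skew_ps


padding, skew = length_matching([50.0, 51.5, 49.0])
print(padding)
print(f"uncorrected skew: {skew:.1f} ps")
```

With these assumptions a 2.5 mm mismatch amounts to a skew of under 20 ps, which is small in absolute terms but a meaningful fraction of a bit period at multi-gigahertz rates.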

References

- Backers, F.T. (1968). Ultrasonic delay lines for the PAL colour-television system (PDF) (Ph.D.). Eindhoven, Netherlands: Technische Universiteit. pp. 7–8.

- Backers, F. Th. (1968). "A delay line for PAL colour television receivers" (PDF). Philips Technical Review. 29: 243–251.

External links

- Acoustic Delay Line Memory – has an image of a Ferranti wire-based system about halfway down the page

- Delay line memories – contains a diagram of the magnetostrictive transducer

- Litton Monroe Epic 3000 – shows details of the torsion delay lines inside this electronic calculator of 1967

- Magnetostrictive memory, still used in a German computer museum

- U.S. Patent 2,629,827 "Memory System", Eckert–Mauchly Computer Corporation, filed October 1947, patented February 1953

- Display Terminal built with 32 TV delay lines Complete description

- "What store for EDSAC?". The National Museum of Computing. 13 September 2013. How nickel delay line memory works, some information about the construction

- Nickel delay line for EDSAC replica

https://en.wikipedia.org/wiki/Delay-line_memory

An analog delay line is a network of electrical components connected in cascade, where each individual element creates a time difference between its input and output. It operates on analog signals whose amplitude varies continuously. In the case of a periodic signal, the time difference can be described in terms of a change in the phase of the signal. One example of an analog delay line is a bucket-brigade device.[1]

Other types of delay line include acoustic (usually ultrasonic), magnetostrictive, and surface acoustic wave devices. A series of resistor–capacitor circuits (RC circuits) can be cascaded to form a delay. A long transmission line can also provide a delay element. The delay time of an analog delay line may be only a few nanoseconds or several milliseconds, limited by the practical size of the physical medium used to delay the signal and the propagation speed of impulses in the medium.

Analog delay lines are applied in many types of signal processing circuits; for example the PAL television standard uses an analog delay line to store an entire video scanline. Acoustic and electromechanical delay lines are used to provide a "reverberation" effect in musical instrument amplifiers, or to simulate an echo. High-speed oscilloscopes used an analog delay line to allow observation of waveforms just before some triggering event.

With the growing use of digital signal processing techniques, digital forms of delay are practical and eliminate some of the problems with dissipation and noise in analog systems.

History

Inductor–capacitor ladder networks were used as analog delay lines in the 1920s. An example is Francis Hubbard's sonar direction finder patent, filed in 1921.[2] Hubbard referred to this as an artificial transmission line. In 1941, Gerald Tawney of Sperry Gyroscope Company filed for a patent on a compact packaging of an inductor–capacitor ladder network that he explicitly referred to as a time delay line.[3]

In 1924, Robert Mathes of Bell Telephone Laboratories filed a broad patent covering essentially all electromechanical delay lines, but focusing on acoustic delay lines where an air column confined to a pipe served as the mechanical medium, and a telephone receiver at one end and a telephone transmitter at the other end served as the electromechanical transducers.[4] Mathes was motivated by the problem of echo suppression on long-distance telephone lines, and his patent clearly explained the fundamental relationship between inductor–capacitor ladder networks and mechanical elastic delay lines such as his acoustic line.

In 1938, William Spencer Percival of Electrical & Musical Industries (later EMI) applied for a patent on an acoustical delay line using piezoelectric transducers and a liquid medium. He used water or kerosene, with a 10 MHz carrier frequency, with multiple baffles and reflectors in the delay tank to create a long acoustic path in a relatively small tank.[5]

In 1939, Laurens Hammond applied electromechanical delay lines to the problem of creating artificial reverberation for his Hammond organ.[6] Hammond used coil springs to transmit mechanical waves between voice-coil transducers.

The problem of suppressing multipath interference in television reception motivated Clarence Hansell of RCA to use delay lines in his 1939 patent application. He used "delay cables" for this, relatively short pieces of coaxial cable used as delay lines, but he recognized the possibility of using magnetostrictive or piezoelectric delay lines.[7]

By 1943, compact delay lines with distributed capacitance and inductance were devised. Typical early designs involved winding an enamel insulated wire on an insulating core and then surrounding that with a grounded conductive jacket. Richard Nelson of General Electric filed a patent for such a line that year.[8] Other GE employees, John Rubel and Roy Troell, concluded that the insulated wire could be wound around a conducting core to achieve the same effect.[9] Much of the development of delay lines during World War II was motivated by the problems encountered in radar systems.

In 1944, Madison G. Nicholson applied for a general patent on magnetostrictive delay lines. He recommended their use for applications requiring delays or measurement of intervals in the 10 to 1000 microseconds time range.[10]

In 1945, Gordon D. Forbes and Herbert Shapiro filed a patent for the mercury delay line with piezoelectric transducers.[11] This delay line technology would play an important role, serving as the basis of the delay-line memory used in several first-generation computers.

In 1946, David Arenberg filed patents covering the use of piezoelectric transducers attached to single crystal solid delay lines. He tried using quartz as a delay medium and reported that anisotropy in the quartz crystals caused problems. He reported success with single crystals of lithium bromide, sodium chloride and aluminum.[12][13] Arenberg developed the idea of complex 2- and 3-dimensional folding of the acoustic path in the solid medium in order to package long delays into a compact crystal.[14] The delay lines used to decode PAL television signals follow the outline of this patent, using quartz glass as a medium instead of a single crystal.

References

- David L. Arenberg, Solid Delay Line, U.S. Patent 2,624,804, granted Jan. 6, 1953.

https://en.wikipedia.org/wiki/Analog_delay_line

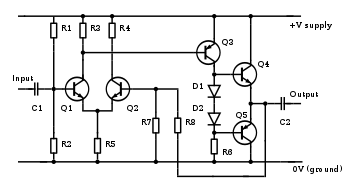

An amplifier, electronic amplifier or (informally) amp is an electronic device that can increase the magnitude of a signal (a time-varying voltage or current). A power amplifier is similarly used to deliver output power (AF or RF), controlled by an input signal. It is a two-port electronic circuit that uses electric power from a power supply to increase the amplitude (magnitude of the voltage or current) of a signal applied to its input terminals, producing a proportionally greater amplitude signal at its output. The amount of amplification provided by an amplifier is measured by its gain: the ratio of output voltage, current, or power to input. An amplifier is a circuit that has a power gain greater than one.[2][3][4]

An amplifier can either be a separate piece of equipment or an electrical circuit contained within another device. Amplification is fundamental to modern electronics, and amplifiers are widely used in almost all electronic equipment. Amplifiers can be categorized in different ways. One is by the frequency of the electronic signal being amplified. For example, audio amplifiers amplify signals in the audio (sound) range of less than 20 kHz, RF amplifiers amplify frequencies in the radio frequency range between 20 kHz and 300 GHz, and servo amplifiers and instrumentation amplifiers may work with very low frequencies down to direct current. Amplifiers can also be categorized by their physical placement in the signal chain; a preamplifier may precede other signal processing stages, for example.[5] The first practical electrical device which could amplify was the triode vacuum tube, invented in 1906 by Lee De Forest, which led to the first amplifiers around 1912. Today most amplifiers use transistors.

History

Vacuum tubes

The first practical device that could amplify was the triode vacuum tube, invented in 1906 by Lee De Forest, which led to the first amplifiers around 1912. Vacuum tubes were used in almost all amplifiers until the 1960s–1970s, when transistors replaced them. Today, most amplifiers use transistors, but vacuum tubes continue to be used in some applications.

The development of audio communication technology in the form of the telephone, first patented in 1876, created the need to increase the amplitude of electrical signals to extend the transmission of signals over increasingly long distances. In telegraphy, this problem had been solved with intermediate devices at stations that replenished the dissipated energy by operating a signal recorder and transmitter back-to-back, forming a relay, so that a local energy source at each intermediate station powered the next leg of transmission. For duplex transmission, i.e. sending and receiving in both directions, bi-directional relay repeaters were developed starting with the work of C. F. Varley for telegraphic transmission. Duplex transmission was essential for telephony and the problem was not satisfactorily solved until 1904, when H. E. Shreeve of the American Telephone and Telegraph Company improved existing attempts at constructing a telephone repeater consisting of back-to-back carbon-granule transmitter and electrodynamic receiver pairs.[6] The Shreeve repeater was first tested on a line between Boston and Amesbury, MA, and more refined devices remained in service for some time. After the turn of the century it was found that negative resistance mercury lamps could amplify, and these were also tried in repeaters, with little success.[7]

The development of thermionic valves, starting around 1902, provided an entirely electronic method of amplifying signals. The first practical version of such devices was the Audion triode, invented in 1906 by Lee De Forest,[8][9][10] which led to the first amplifiers around 1912.[11] Since the only previous device which was widely used to strengthen a signal was the relay used in telegraph systems, the amplifying vacuum tube was first called an electron relay.[12][13][14][15] The terms amplifier and amplification, derived from the Latin amplificare (to enlarge or expand),[16] were first used for this new capability around 1915 when triodes became widespread.[16]

The amplifying vacuum tube revolutionized electrical technology, creating the new field of electronics, the technology of active electrical devices.[11] It made possible long-distance telephone lines, public address systems, radio broadcasting, talking motion pictures, practical audio recording, radar, television, and the first computers. For 50 years virtually all consumer electronic devices used vacuum tubes. Early tube amplifiers often had positive feedback (regeneration), which could increase gain but also make the amplifier unstable and prone to oscillation. Much of the mathematical theory of amplifiers was developed at Bell Telephone Laboratories during the 1920s to 1940s. Distortion levels in early amplifiers were high, usually around 5%, until 1934, when Harold Black developed negative feedback; this allowed the distortion levels to be greatly reduced, at the cost of lower gain. Other advances in the theory of amplification were made by Harry Nyquist and Hendrik Wade Bode.[17]

The vacuum tube was virtually the only amplifying device, other than specialized power devices such as the magnetic amplifier and amplidyne, for 40 years. Power control circuitry used magnetic amplifiers until the latter half of the twentieth century when power semiconductor devices became more economical, with higher operating speeds. The old Shreeve electroacoustic carbon repeaters were used in adjustable amplifiers in telephone subscriber sets for the hearing impaired until the transistor provided smaller and higher quality amplifiers in the 1950s.[18]

Transistors

The first working transistor was a point-contact transistor invented by John Bardeen and Walter Brattain in 1947 at Bell Labs, where William Shockley later invented the bipolar junction transistor (BJT) in 1948. They were followed by the invention of the metal–oxide–semiconductor field-effect transistor (MOSFET) by Mohamed M. Atalla and Dawon Kahng at Bell Labs in 1959. Due to MOSFET scaling, the ability to scale down to increasingly small sizes, the MOSFET has since become the most widely used amplifier.[19]

The replacement of bulky electron tubes with transistors during the 1960s and 1970s created a revolution in electronics, making possible a large class of portable electronic devices, such as the transistor radio developed in 1954. Today, use of vacuum tubes is limited to some high-power applications, such as radio transmitters.

Beginning in the 1970s, more and more transistors were connected on a single chip thereby creating higher scales of integration (such as small-scale, medium-scale and large-scale integration) in integrated circuits. Many amplifiers commercially available today are based on integrated circuits.

For special purposes, other active elements have been used. For example, in the early days of satellite communication, parametric amplifiers were used. The core circuit was a diode whose capacitance was changed by an RF signal created locally. Under certain conditions, this RF signal provided energy that was modulated by the extremely weak satellite signal received at the earth station.

Advances in digital electronics since the late 20th century provided new alternatives to the traditional linear-gain amplifiers by using digital switching to vary the pulse-shape of fixed amplitude signals, resulting in devices such as the Class-D amplifier.

Ideal

In principle, an amplifier is an electrical two-port network that produces a signal at the output port that is a replica of the signal applied to the input port, but increased in magnitude.

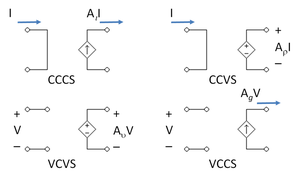

The input port can be idealized as either being a voltage input, which takes no current, with the output proportional to the voltage across the port; or a current input, with no voltage across it, in which the output is proportional to the current through the port. The output port can be idealized as being either a dependent voltage source, with zero source resistance and its output voltage dependent on the input; or a dependent current source, with infinite source resistance and the output current dependent on the input. Combinations of these choices lead to four types of ideal amplifiers.[5] In idealized form they are represented by each of the four types of dependent source used in linear analysis, as shown in the figure, namely:

| Input | Output | Dependent source | Amplifier type | Gain units |
|---|---|---|---|---|
| I | I | Current controlled current source, CCCS | Current amplifier | Unitless |
| I | V | Current controlled voltage source, CCVS | Transresistance amplifier | Ohm |
| V | I | Voltage controlled current source, VCCS | Transconductance amplifier | Siemens |
| V | V | Voltage controlled voltage source, VCVS | Voltage amplifier | Unitless |

Each type of amplifier in its ideal form has an ideal input and output resistance that is the same as that of the corresponding dependent source:[20]

| Amplifier type | Dependent source | Input impedance | Output impedance |
|---|---|---|---|
| Current | CCCS | 0 | ∞ |
| Transresistance | CCVS | 0 | 0 |
| Transconductance | VCCS | ∞ | ∞ |
| Voltage | VCVS | ∞ | 0 |

In real amplifiers the ideal impedances are not possible to achieve, but these ideal elements can be used to construct equivalent circuits of real amplifiers by adding impedances (resistance, capacitance and inductance) to the input and output. For any particular circuit, a small-signal analysis is often used to find the actual impedance. A small-signal AC test current Ix is applied to the input or output node, all external sources are set to AC zero, and the corresponding alternating voltage Vx across the test current source determines the impedance seen at that node as R = Vx / Ix.[21]
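The effect of adding finite impedances to an ideal element can be sketched for the voltage-amplifier case. The model below assumes purely resistive source, input, output and load impedances, so the overall gain is the ideal gain multiplied by two voltage-divider factors; the function name and component values are illustrative.

```python
def loaded_voltage_gain(ideal_gain: float, r_source: float, r_in: float,
                        r_out: float, r_load: float) -> float:
    """Overall gain of a VCVS with resistive input and output impedances."""
    input_divider = r_in / (r_source + r_in)    # loss at the input port
    output_divider = r_load / (r_out + r_load)  # loss at the output port
    return ideal_gain * input_divider * output_divider


# Assumed values: gain 100, 1 kohm source into 9 kohm input,
# 100 ohm output resistance driving a 900 ohm load.
gain = loaded_voltage_gain(100.0, 1e3, 9e3, 100.0, 900.0)
print(gain)

# Approaching the ideal case: very large input and very small output resistance.
print(loaded_voltage_gain(100.0, 1e3, 1e12, 1e-6, 900.0))
```

As the input resistance grows and the output resistance shrinks, both dividers approach one and the gain approaches that of the ideal dependent source.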

Amplifiers designed to attach to a transmission line at input and output, especially RF amplifiers, do not fit into this classification approach. Rather than dealing with voltage or current individually, they ideally couple with an input or output impedance matched to the transmission line impedance, that is, match ratios of voltage to current. Many real RF amplifiers come close to this ideal. Although, for a given appropriate source and load impedance, RF amplifiers can be characterized as amplifying voltage or current, they fundamentally are amplifying power.[22]

Properties

Amplifier properties are given by parameters that include:

- Gain, the ratio between the magnitude of output and input signals

- Bandwidth, the width of the useful frequency range

- Efficiency, the ratio between the power of the output and total power consumption

- Linearity, the extent to which the proportion between input and output amplitude is the same for high amplitude and low amplitude input

- Noise, a measure of undesired noise mixed into the output

- Output dynamic range, the ratio of the largest and the smallest useful output levels

- Slew rate, the maximum rate of change of the output

- Rise time, settling time, ringing and overshoot that characterize the step response

- Stability, the ability to avoid self-oscillation

Amplifiers are described according to the properties of their inputs, their outputs, and how they relate.[23] All amplifiers have gain, a multiplication factor that relates the magnitude of some property of the output signal to a property of the input signal. The gain may be specified as the ratio of output voltage to input voltage (voltage gain), output power to input power (power gain), or some combination of current, voltage, and power. In many cases the property of the output that varies is dependent on the same property of the input, making the gain unitless (though often expressed in decibels (dB)).
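The relationship between a gain ratio and its value in decibels can be sketched as follows. The function names are illustrative; the 20·log10 form applies to voltage (or current) ratios and the 10·log10 form to power ratios.

```python
import math


def voltage_gain_db(v_out, v_in):
    """Voltage gain in dB: 20 * log10 of the amplitude ratio."""
    return 20 * math.log10(v_out / v_in)


def power_gain_db(p_out, p_in):
    """Power gain in dB: 10 * log10 of the power ratio."""
    return 10 * math.log10(p_out / p_in)


# A voltage ratio of 10 and a power ratio of 100 both correspond to 20 dB.
print(voltage_gain_db(10.0, 1.0))
print(power_gain_db(100.0, 1.0))
```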

Most amplifiers are designed to be linear. That is, they provide constant gain for any normal input level and output signal. If an amplifier's gain is not linear, the output signal can become distorted. There are, however, cases where variable gain is useful. Certain signal processing applications use exponential gain amplifiers.[5]

Amplifiers are usually designed to function well in a specific application, for example: radio and television transmitters and receivers, high-fidelity ("hi-fi") stereo equipment, microcomputers and other digital equipment, and guitar and other instrument amplifiers. Every amplifier includes at least one active device, such as a vacuum tube or transistor.

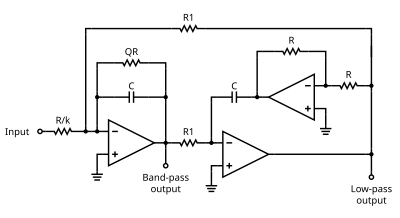

Negative feedback

Negative feedback is a technique used in most modern amplifiers to improve bandwidth, reduce distortion, and control gain. In a negative feedback amplifier, part of the output is fed back and added to the input in opposite phase, subtracting from the input. The main effect is to reduce the overall gain of the system. However, any unwanted signals introduced by the amplifier, such as distortion, are also fed back. Since they are not part of the original input, they are added to the input in opposite phase, subtracting them from the input. In this way, negative feedback also reduces nonlinearity, distortion and other errors introduced by the amplifier. Large amounts of negative feedback can reduce errors to the point that the response of the amplifier itself becomes almost irrelevant as long as it has a large gain, and the output performance of the system (the "closed loop performance") is defined almost entirely by the components in the feedback loop. This technique is particularly used with operational amplifiers (op-amps).
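The way a large open-loop gain makes the closed-loop gain depend almost only on the feedback network can be sketched with the standard relation A_cl = A / (1 + Aβ). The symbols A (open-loop gain) and β (feedback fraction) and the numeric values are assumptions introduced for this illustration.

```python
def closed_loop_gain(a_open, beta):
    """Closed-loop gain of a negative feedback amplifier: A / (1 + A * beta)."""
    return a_open / (1 + a_open * beta)


beta = 0.1  # hypothetical feedback fraction; the ideal closed-loop gain is 1 / beta = 10

# A tenfold change in open-loop gain barely moves the closed-loop gain.
for a_open in (1e4, 1e5, 1e6):
    print(a_open, closed_loop_gain(a_open, beta))
```

Each printed value lies just below 10, which shows that the feedback components, rather than the amplifier's own gain, set the result.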

Non-feedback amplifiers can only achieve about 1% distortion for audio-frequency signals. With negative feedback, distortion can typically be reduced to 0.001%. Noise, even crossover distortion, can be practically eliminated. Negative feedback also compensates for changing temperatures, and degrading or nonlinear components in the gain stage, but any change or nonlinearity in the components in the feedback loop will affect the output. Indeed, the ability of the feedback loop to define the output is used to make active filter circuits.

Another advantage of negative feedback is that it extends the bandwidth of the amplifier. The concept of feedback is used in operational amplifiers to precisely define gain, bandwidth, and other parameters entirely based on the components in the feedback loop.
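The bandwidth extension can be illustrated under a simplifying assumption that the open-loop response has a single pole, A(f) = A0 / (1 + j·f/f0). In that model, feedback divides the low-frequency gain by (1 + A0β) and multiplies the −3 dB bandwidth by the same factor. The names and values below are hypothetical.

```python
def closed_loop_summary(a0, f_pole, beta):
    """Return (low-frequency gain, -3 dB bandwidth) for a single-pole amplifier."""
    loop_factor = 1 + a0 * beta
    return a0 / loop_factor, f_pole * loop_factor


def closed_loop_magnitude(a0, f_pole, beta, f):
    """Magnitude of the closed-loop gain at frequency f for the same model."""
    a_open = a0 / (1 + 1j * f / f_pole)
    return abs(a_open / (1 + a_open * beta))


# Hypothetical amplifier: open-loop gain 100000 with a 10 Hz pole, beta = 0.01.
gain, bandwidth = closed_loop_summary(1e5, 10.0, 0.01)
print(gain, bandwidth)  # gain near 100, bandwidth near 10 kHz

# At the computed bandwidth the gain is down by a factor of sqrt(2), i.e. -3 dB.
print(closed_loop_magnitude(1e5, 10.0, 0.01, bandwidth) / gain)
```

In this model the product of gain and bandwidth stays constant, so gain is traded directly for bandwidth.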

Negative feedback can be applied at each stage of an amplifier to stabilize the operating point of active devices against minor changes in power-supply voltage or device characteristics.

Some feedback, positive or negative, is unavoidable and often undesirable—introduced, for example, by parasitic elements, such as inherent capacitance between input and output of devices such as transistors, and capacitive coupling of external wiring. Excessive frequency-dependent positive feedback can produce parasitic oscillation and turn an amplifier into an oscillator.

Categories

Active devices

All amplifiers include some form of active device: this is the device that does the actual amplification. The active device can be a vacuum tube, a discrete solid-state component such as a single transistor, or part of an integrated circuit, as in an op-amp.

Transistor amplifiers (or solid state amplifiers) are the most common type of amplifier in use today. A transistor is used as the active element. The gain of the amplifier is determined by the properties of the transistor itself as well as the circuit it is contained within.

Common active devices in transistor amplifiers include bipolar junction transistors (BJTs) and metal oxide semiconductor field-effect transistors (MOSFETs).

Applications are numerous. Common examples include audio amplifiers in a home stereo or public address system, RF high-power generation for semiconductor equipment, and RF and microwave applications such as radio transmitters.

Transistor-based amplification can be realized using various configurations: for example a bipolar junction transistor can realize common base, common collector or common emitter amplification; a MOSFET can realize common gate, common source or common drain amplification. Each configuration has different characteristics.

Vacuum-tube amplifiers (also known as tube amplifiers or valve amplifiers) use a vacuum tube as the active device. While semiconductor amplifiers have largely displaced valve amplifiers for low-power applications, valve amplifiers can be much more cost effective in high power applications such as radar, countermeasures equipment, and communications equipment. Many microwave amplifiers are specially designed valve amplifiers, such as the klystron, gyrotron, traveling wave tube, and crossed-field amplifier, and these microwave valves provide much greater single-device power output at microwave frequencies than solid-state devices.[24] Vacuum tubes remain in use in some high end audio equipment, as well as in musical instrument amplifiers, due to a preference for "tube sound".

Magnetic amplifiers are devices somewhat similar to a transformer where one winding is used to control the saturation of a magnetic core and hence alter the impedance of the other winding.[25] They have largely fallen out of use due to development in semiconductor amplifiers but are still useful in HVDC control, and in nuclear power control circuitry due to not being affected by radioactivity.

Negative resistances can be used as amplifiers, such as the tunnel diode amplifier.[26][27]

Power amplifiers

A power amplifier is an amplifier designed primarily to increase the power available to a load. In practice, amplifier power gain depends on the source and load impedances, as well as the inherent voltage and current gain. A radio frequency (RF) amplifier design typically optimizes impedances for power transfer, while audio and instrumentation amplifier designs normally optimize input and output impedance for least loading and highest signal integrity. An amplifier that is said to have a gain of 20 dB might have a voltage gain of 20 dB and an available power gain of much more than 20 dB (power ratio of 100)—yet actually deliver a much lower power gain if, for example, the input is from a 600 Ω microphone and the output connects to a 47 kΩ input socket for a power amplifier. In general, the power amplifier is the last 'amplifier' or actual circuit in a signal chain (the output stage) and is the amplifier stage that requires attention to power efficiency. Efficiency considerations lead to the various classes of power amplifiers based on the biasing of the output transistors or tubes: see power amplifier classes below.
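The 20 dB example can be worked through numerically. The sketch below assumes, purely for illustration, that the amplifier's input resistance equals the 600 Ω source, that the 20 dB voltage gain is measured from the amplifier input to the load, and that all impedances are purely resistive; the function name is hypothetical.

```python
import math


def delivered_power_gain_db(voltage_gain_db, r_in, r_load):
    """Power gain in dB when input power is dissipated in r_in and output in r_load."""
    a_v = 10 ** (voltage_gain_db / 20)  # voltage ratio, 10 for 20 dB
    # P_in = v_in**2 / r_in and P_out = (a_v * v_in)**2 / r_load.
    power_ratio = a_v ** 2 * r_in / r_load
    return 10 * math.log10(power_ratio)


# Equal input and load resistance: power gain equals the voltage gain in dB.
print(delivered_power_gain_db(20, 600, 600))

# Assumed 600 Ω input resistance driving a 47 kΩ load: only about 1 dB of power gain.
print(delivered_power_gain_db(20, 600, 47e3))
```

Under these assumptions the power ratio is 100 × 600 / 47000, or about 1.28, which is far below the factor of 100 that "20 dB" might suggest.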

Audio power amplifiers are typically used to drive loudspeakers. They will often have two output channels and deliver equal power to each. An RF power amplifier is found in radio transmitter final stages. A servo motor controller amplifies a control voltage to adjust the speed of a motor or the position of a motorized system.

Operational amplifiers (op-amps)

An operational amplifier is an amplifier circuit which typically has very high open-loop gain and differential inputs. Op-amps have become very widely used as standardized "gain blocks" in circuits due to their versatility; their gain, bandwidth and other characteristics can be controlled by feedback through an external circuit. Though the term today commonly applies to integrated circuits, the original operational amplifier design used valves, and later designs used discrete transistor circuits.
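How an external circuit sets the gain can be sketched with the two textbook resistor configurations, under the assumption of an ideal op-amp (infinite open-loop gain, no input current). The resistor names r_feedback, r_ground and r_input are labels chosen for this example.

```python
def non_inverting_gain(r_feedback, r_ground):
    """Ideal non-inverting configuration: gain = 1 + Rf / Rg."""
    return 1 + r_feedback / r_ground


def inverting_gain(r_feedback, r_input):
    """Ideal inverting configuration: gain = -Rf / Rin."""
    return -r_feedback / r_input


# Hypothetical resistor values, in ohms.
print(non_inverting_gain(9e3, 1e3))  # gain of +10
print(inverting_gain(10e3, 1e3))     # gain of -10
```

In both cases the gain depends only on a resistor ratio, not on the op-amp itself, as long as the open-loop gain is much larger than the gain being set.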

A fully differential amplifier is similar to the operational amplifier, but also has differential outputs. These are usually constructed using BJTs or FETs.

Distributed amplifiers

These use balanced transmission lines to separate individual single-stage amplifiers, the outputs of which are summed by the same transmission line. The input is applied at one end, on one side of the balanced line, and the output is taken at the opposite end, on the opposite side of the line. The gain of each stage adds linearly to the output rather than multiplying, as it does in a cascade configuration. This allows a higher bandwidth to be achieved than could otherwise be realised, even with the same gain stage elements.
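The difference between additive and multiplicative combination of stage gains can be sketched numerically. This is a deliberately idealized comparison that ignores line losses and phase errors; the stage gain values are hypothetical.

```python
import math


def distributed_gain(stage_gains):
    """Idealized distributed amplifier: stage contributions add."""
    return sum(stage_gains)


def cascade_gain(stage_gains):
    """Cascade: each stage multiplies the signal from the previous one."""
    return math.prod(stage_gains)


# At a frequency where each of four stages still has a gain of 3,
# the cascade gives far more gain than the distributed arrangement.
print(distributed_gain([3, 3, 3, 3]), cascade_gain([3, 3, 3, 3]))

# At a higher frequency where each stage gain has dropped below 1,
# the cascade attenuates while the summed output still exceeds unity.
print(distributed_gain([0.8] * 4), cascade_gain([0.8] * 4))
```

The second case hints at why the additive arrangement can remain useful at frequencies where a cascade of the same stages no longer provides gain.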

Switched mode amplifiers

These nonlinear amplifiers have much higher efficiencies than linear amps, and are used where the power saving justifies the extra complexity. Class-D amplifiers are the main example of this type of amplification.

Negative resistance amplifier

A negative resistance amplifier is a type of regenerative amplifier[28] that can use the feedback between the transistor's source and gate to transform a capacitive impedance on the transistor's source to a negative resistance on its gate. Compared to other types of amplifiers, it requires only a tiny amount of power to achieve very high gain while maintaining a good noise figure.

Applications

Video amplifiers

Video amplifiers are designed to process video signals and have varying bandwidths depending on whether the video signal is for SDTV, EDTV, HDTV 720p, 1080i/p, etc. The specification of the bandwidth itself depends on what kind of filter is used and at which point (−1 dB or −3 dB, for example) the bandwidth is measured. Certain requirements for step response and overshoot are necessary for an acceptable TV image.[29]

Microwave amplifiers

Traveling wave tube amplifiers (TWTAs) are used for high power amplification at low microwave frequencies. They typically can amplify across a broad spectrum of frequencies; however, they are usually not as tunable as klystrons.[30]

Klystrons are specialized linear-beam vacuum devices, designed to provide high-power, widely tunable amplification of millimetre and sub-millimetre waves. Klystrons are designed for large-scale operations and, despite having a narrower bandwidth than TWTAs, they have the advantage of coherently amplifying a reference signal so that the output may be precisely controlled in amplitude, frequency and phase.

Solid-state devices such as silicon short-channel MOSFETs like double-diffused metal–oxide–semiconductor (DMOS) FETs, GaAs FETs, SiGe and GaAs heterojunction bipolar transistors (HBTs), HEMTs, IMPATT diodes, and others are used especially at lower microwave frequencies and at power levels on the order of watts, specifically in applications like portable RF terminals/cell phones and access points, where size and efficiency are the drivers. New materials like gallium nitride (GaN), or GaN on silicon or on silicon carbide (SiC), are emerging in HEMT transistors and in applications that need improved efficiency, wide bandwidth, operation from roughly a few GHz to a few tens of GHz, and output power from a few watts to a few hundred watts.[31][32]

Depending on the amplifier specifications and size requirements, microwave amplifiers can be realised as monolithically integrated circuits, as integrated modules, from discrete parts, or as any combination of these.

The maser is a non-electronic microwave amplifier.

Musical instrument amplifiers

Instrument amplifiers are a range of audio power amplifiers used to increase the sound level of musical instruments, for example guitars, during performances.

Classification of amplifier stages and systems

Common terminal

One set of classifications for amplifiers is based on which device terminal is common to both the input and the output circuit. In the case of bipolar junction transistors, the three classes are common emitter, common base, and common collector. For field-effect transistors, the corresponding configurations are common source, common gate, and common drain; for vacuum tubes, common cathode, common grid, and common plate.