Category:Telephone exchange equipment

Pages in category "Telephone exchange equipment"

The following 43 pages are in this category, out of 43 total.

https://en.wikipedia.org/wiki/Category:Telephone_exchange_equipment

The 3CX Phone System is a software-based private branch exchange (PBX) phone system developed and marketed by the company 3CX. It is based on the Session Initiation Protocol (SIP) standard and enables extensions to make calls via the public switched telephone network (PSTN) or via Voice over Internet Protocol (VoIP) services. It can be deployed on premises, in the cloud, or as a cloud service owned and operated by 3CX. The 3CX Phone System is available for Windows, Linux, and Raspberry Pi,[1] and supports standard SIP softphones and hardphones, VoIP services, faxing, voice and web meetings, and traditional PSTN phone lines.

https://en.wikipedia.org/wiki/3CX_Phone_System

In data communications, an automatic switching system is a switching system in which all the operations required to execute the three phases of information-transfer transactions (access, information transfer, and disengagement) are performed automatically in response to signals from a user end-instrument.[1]

In an automatic switching system, the information-transfer transaction is performed without human intervention, except for initiation of the access phase and the disengagement phase by a user.[2]

In telephony, it refers to a telephone exchange in which all the operations required to set up, supervise, and release connections required for telephone calls are automatically performed in response to signals from a calling device. This distinction lost importance as manual switching declined during the 20th century.
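The division of labor in the definition above, where the user only initiates the access and disengagement phases and the system performs everything else, can be sketched as a small state machine. This is a minimal illustration under stated assumptions: the class `AutomaticSwitch`, its method names, and the log strings are hypothetical and not part of any standard.

```python
from enum import Enum, auto
from typing import List


class Phase(Enum):
    IDLE = auto()
    ACCESS = auto()
    INFORMATION_TRANSFER = auto()
    DISENGAGEMENT = auto()


class AutomaticSwitch:
    """Hypothetical model of one information-transfer transaction.

    Only the two user_* methods represent user signals; every other
    step is performed by the switch without human intervention.
    """

    def __init__(self) -> None:
        self.phase = Phase.IDLE
        self.log: List[str] = []

    def user_initiates_access(self) -> None:
        if self.phase is not Phase.IDLE:
            raise RuntimeError("a transaction is already in progress")
        self.phase = Phase.ACCESS
        self.log.append("user: initiate access")
        # Automatic: set up the path, then enter the information-transfer phase.
        self.log.append("switch: establish connection")
        self.phase = Phase.INFORMATION_TRANSFER

    def user_initiates_disengagement(self) -> None:
        if self.phase is not Phase.INFORMATION_TRANSFER:
            raise RuntimeError("no information transfer to end")
        self.phase = Phase.DISENGAGEMENT
        self.log.append("user: initiate disengagement")
        # Automatic: release the connection and return to idle.
        self.log.append("switch: release connection")
        self.phase = Phase.IDLE


sw = AutomaticSwitch()
sw.user_initiates_access()
sw.user_initiates_disengagement()
print(sw.log)
```

Of the four log entries, only two come from the user; the other two are the automatic steps that distinguish this system from a manual one.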

References

- Glover, S. (November 1966). "Automatic Switching at the Edmonton Television Studios". Journal of the SMPTE. 75 (11): 1089–1092. doi:10.5594/J05892. ISSN 0361-4573.

https://en.wikipedia.org/wiki/Automatic_switching_system

In electronics and telecommunications, a crossbar switch (also called a cross-point switch or matrix switch) is a collection of switches arranged in a matrix configuration. A crossbar switch has multiple input and output lines that form a crossed pattern of interconnecting lines; a connection between an input and an output is established by closing the switch located at their intersection, one of the elements of the matrix. Originally, a crossbar switch consisted literally of crossing metal bars that provided the input and output paths. Later implementations achieved the same switching topology in solid-state electronics. The crossbar switch is one of the principal telephone exchange architectures, together with the rotary switch, the memory switch,[2] and the crossover switch.

General properties

A crossbar switch is an assembly of individual switches between a set of inputs and a set of outputs, arranged in a matrix. If the crossbar switch has M inputs and N outputs, the matrix has M × N crosspoints, or places where connections can be made. At each crosspoint is a switch; when closed, it connects one of the inputs to one of the outputs. A single crossbar is a single-layer, non-blocking switch. A crossbar switching system is also called a coordinate switching system.
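The crosspoint count and the non-blocking property of a single crossbar can be illustrated with a small model. This is a sketch only; the `Crossbar` class and its methods are hypothetical names chosen for illustration. Because every input/output pair has its own switch, a connection can be refused only when the input or output itself is already in use.

```python
from typing import List, Tuple


class Crossbar:
    """M x N crossbar modeled as a boolean matrix of crosspoint switches."""

    def __init__(self, m: int, n: int) -> None:
        self.m, self.n = m, n
        self.closed: List[List[bool]] = [[False] * n for _ in range(m)]

    @property
    def crosspoints(self) -> int:
        return self.m * self.n

    def input_busy(self, i: int) -> bool:
        return any(self.closed[i])

    def output_busy(self, j: int) -> bool:
        return any(row[j] for row in self.closed)

    def connect(self, i: int, j: int) -> None:
        # A single crossbar has a dedicated switch for every (input, output)
        # pair, so the only reason to refuse is that an endpoint is in use.
        if self.input_busy(i) or self.output_busy(j):
            raise ValueError(f"input {i} or output {j} is busy")
        self.closed[i][j] = True

    def release(self, i: int, j: int) -> None:
        self.closed[i][j] = False

    def connections(self) -> List[Tuple[int, int]]:
        return [(i, j) for i in range(self.m) for j in range(self.n) if self.closed[i][j]]


xb = Crossbar(4, 3)
for i, j in [(0, 2), (1, 0), (3, 1)]:
    xb.connect(i, j)
print(xb.crosspoints, xb.connections())
```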

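Collections of crossbars can be used to implement multiple-layer switches, which may be blocking or non-blocking. In a blocking switch, existing connections can prevent an idle input from being connected to an idle output, because no free path remains through the switch. In a non-blocking switch, any idle input can always be connected to any idle output, regardless of the other connections already in place.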
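The difference between blocking and non-blocking can be shown with a deliberately undersized multiple-layer network. In this sketch (hypothetical class and topology), a first-stage crossbar feeds a second-stage crossbar through fewer inter-stage links than there are inputs, so a call between an idle input and an idle output can fail for lack of a free internal path, which a single crossbar never does.

```python
from typing import Dict, Set, Tuple


class TwoStageNetwork:
    """Hypothetical two-stage network: inputs -> stage-1 crossbar -> links ->
    stage-2 crossbar -> outputs. Every call needs one free inter-stage link."""

    def __init__(self, n_inputs: int, n_outputs: int, n_links: int) -> None:
        self.n_inputs, self.n_outputs = n_inputs, n_outputs
        self.free_links: Set[int] = set(range(n_links))
        self.calls: Dict[Tuple[int, int], int] = {}  # (input, output) -> link

    def connect(self, i: int, o: int) -> str:
        if not (0 <= i < self.n_inputs and 0 <= o < self.n_outputs):
            raise IndexError("no such input or output")
        if any(i == a or o == b for a, b in self.calls):
            return "busy"  # an endpoint is in use: not a case of blocking
        if not self.free_links:
            return "blocked"  # both endpoints idle, but no internal path is free
        link = min(self.free_links)
        self.free_links.remove(link)
        self.calls[(i, o)] = link
        return "connected"

    def release(self, i: int, o: int) -> None:
        self.free_links.add(self.calls.pop((i, o)))


# Four inputs and four outputs joined by only two links: a blocking network.
net = TwoStageNetwork(4, 4, n_links=2)
print(net.connect(0, 0), net.connect(1, 1), net.connect(2, 2))
```

The third call is blocked even though input 2 and output 2 are both idle; releasing either existing call frees a link and lets it through.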

Applications

Crossbar switches are commonly used in information processing applications such as telephony and circuit switching, but they are also used in applications such as mechanical sorting machines.

The matrix layout of a crossbar switch is also used in some semiconductor memory devices. Here the bars are extremely thin metal wires, and the switches are fusible links. The fuses are blown (opened) using high voltage and read using low voltage. Such devices are called programmable read-only memory (PROM).[3] At the 2008 NSTI Nanotechnology Conference, a paper was presented that discussed a nanoscale crossbar implementation of an adding circuit, used as an alternative to logic gates for computation.[4]
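The one-time, write-by-destruction nature of a fuse-matrix PROM can be sketched as follows. This is a simplified model under stated assumptions: the class name, the 1-for-intact polarity, and the word/bit organization are illustrative choices, not details of any particular device.

```python
from typing import List


class FusePROM:
    """Toy PROM: word lines x bit lines with a fusible link at each crosspoint.

    Assumption for illustration: an intact fuse reads as 1 and a blown fuse
    reads as 0. Real devices differ in polarity and programming details.
    """

    def __init__(self, words: int, bits: int) -> None:
        self.bits = bits
        self.fuses: List[List[bool]] = [[True] * bits for _ in range(words)]

    def program(self, addr: int, value: int) -> None:
        if not 0 <= value < (1 << self.bits):
            raise ValueError("value does not fit in a word")
        # A blown fuse cannot be restored, so check before changing anything.
        for b in range(self.bits):
            if (value >> b) & 1 and not self.fuses[addr][b]:
                raise ValueError(f"bit {b} of word {addr} is already blown")
        # "High-voltage" step: blow the fuse for every bit that must read 0.
        for b in range(self.bits):
            if not (value >> b) & 1:
                self.fuses[addr][b] = False

    def read(self, addr: int) -> int:
        # "Low-voltage" step: sense which fuses still conduct.
        return sum(1 << b for b, intact in enumerate(self.fuses[addr]) if intact)


rom = FusePROM(words=4, bits=8)
rom.program(0, 0b1010_0101)
print(bin(rom.read(0)), bin(rom.read(1)))
```

A word can later be reprogrammed only by blowing additional fuses; any value that needs a blown fuse restored is rejected.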

Matrix arrays are fundamental to modern flat-panel displays. Thin-film-transistor LCDs have a transistor at each crosspoint, so they could be considered to include a crossbar switch as part of their structure.

For video switching in home and professional theater applications, a crossbar switch (or a matrix switch, as it is more commonly called in this application) is used to distribute the output of multiple video appliances simultaneously to every monitor or every room throughout a building. In a typical installation, all the video sources are located on an equipment rack, and are connected as inputs to the matrix switch.

Where central control of the matrix is practical, a typical rack-mount matrix switch offers front-panel buttons that allow manual connection of inputs to outputs. An example of such a usage might be a sports bar, where numerous programs are displayed simultaneously. Ordinarily, a sports bar would install a separate set-top box for each display for which independent control is desired. The matrix switch enables the operator to route signals at will, so that only enough set-top boxes are needed to cover the total number of unique programs to be viewed, while making it easier to control sound from any program in the overall sound system.
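The saving described for the sports bar follows from the fact that a video matrix switch, unlike a telephony crossbar, lets one input fan out to many outputs. The sketch below is illustrative only; the class, source, and display names are hypothetical. It counts the set-top boxes actually needed as the number of distinct sources routed to at least one display.

```python
from typing import Dict, Iterable, Set


class VideoMatrix:
    """Hypothetical A/V matrix: any source can be routed to any number of outputs."""

    def __init__(self, sources: Iterable[str], outputs: Iterable[str]) -> None:
        self.sources = set(sources)
        self.outputs = set(outputs)
        self.route: Dict[str, str] = {}  # output -> source currently shown

    def select(self, output: str, source: str) -> None:
        if output not in self.outputs or source not in self.sources:
            raise KeyError(f"unknown output {output!r} or source {source!r}")
        self.route[output] = source  # fan-out: one source may feed many outputs

    def sources_in_use(self) -> Set[str]:
        return set(self.route.values())


tvs = [f"tv{k}" for k in range(1, 9)]
boxes = [f"box{k}" for k in range(1, 4)]
m = VideoMatrix(boxes, tvs)
for k, tv in enumerate(tvs):
    m.select(tv, boxes[k % 3])  # three unique programs spread over eight screens
print(len(tvs), "displays need", len(m.sources_in_use()), "set-top boxes")
```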

Such switches are used in high-end home theater applications. Video sources typically shared include set-top receivers or DVD changers; the same concept applies to audio. The outputs are wired to televisions in individual rooms. The matrix switch is controlled via an Ethernet or RS-232 connection by a whole-house automation controller, such as those made by AMX, Crestron, or Control4, which provides the user interface that enables the user in each room to select which appliance to watch. The actual user interface varies by system brand and might include a combination of on-screen menus, touch-screens, and handheld remote controls. Such a control system lets users select the program they wish to watch from the room in which they will watch it; otherwise they would have to walk to the equipment rack.

The special crossbar switches used in distributing satellite TV signals are called multiswitches.

Implementations

Historically, a crossbar switch consisted of metal bars associated with each input and output, together with some means of controlling movable contacts at each crosspoint. The first switches used metal pins or plugs to bridge a vertical and horizontal bar. In the later part of the 20th century, the use of mechanical crossbar switches declined, and the term came to describe any rectangular array of switches. Modern crossbar switches are usually implemented with semiconductor technology. An important emerging class of optical crossbars is implemented with microelectromechanical systems (MEMS) technology.

Mechanical

A type of mid-19th-century telegraph exchange consisted of a grid of vertical and horizontal brass bars with a hole at each intersection. The operator inserted a metal pin to connect one telegraph line to another.

Electromechanical switching in telephony

A telephony crossbar switch is an electromechanical device for switching telephone calls. The first design of what is now called a crossbar switch was the coordinate selector of 1915 from Western Electric, the Bell System's manufacturing company. To save money on control systems, this system was organized on the stepping switch or selector principle rather than the link principle. It was little used in America, but the Swedish government agency Televerket manufactured its own design (the Gotthilf Betulander design from 1919, inspired by the Western Electric system) and used it in Sweden from 1926 until digitization in the 1980s, in small and medium-sized A204 model switches. The system design used in AT&T Corporation's 1XB crossbar exchanges, developed by Bell Telephone Laboratories and in revenue service from 1938, was inspired by the Swedish design but was based on the rediscovered link principle. In 1945, a similar design by Televerket was installed in Sweden, making it possible to increase the capacity of the A204 model switch. Delayed by the Second World War, several million urban 1XB lines were installed in the United States from the 1950s.

In 1950, the Swedish company Ericsson developed its own versions of the 1XB and A204 systems for the international market. In the early 1960s, the company's sales of crossbar switches exceeded those of its rotary 500-switching system, as measured in number of lines. Crossbar switching quickly spread to the rest of the world, replacing most earlier designs, such as the Strowger (step-by-step) and Panel systems, in larger installations in the U.S. Introduced with entirely electromechanical control, crossbar systems were gradually elaborated to have full electronic control and a variety of calling features, including short-code and speed dialing. In the UK, the Plessey Company produced a range of TXK crossbar exchanges, but their widespread rollout by the British Post Office began later than in other countries and was then inhibited by the parallel development of TXE reed-relay and electronic exchange systems. As a result, they never achieved a large number of customer connections, although they did find some success as tandem switch exchanges.

Crossbar switches use switching matrices made from a two-dimensional array of contacts arranged in an x-y format. These switching matrices are operated by a series of horizontal bars arranged over the contacts. Each such select bar can be rocked up or down by electromagnets to provide access to two levels of the matrix. A second set of vertical hold bars is set at right angles to the first (hence the name, "crossbar") and also operated by electromagnets. The select bars carry spring-loaded wire fingers that enable the hold bars to operate the contacts beneath the bars. When the select and then the hold electromagnets operate in sequence to move the bars, they trap one of the spring fingers to close the contacts beneath the point where two bars cross. This then makes the connection through the switch as part of setting up a calling path through the exchange. Once connected, the select magnet is then released so it can use its other fingers for other connections, while the hold magnet remains energized for the duration of the call to maintain the connection. The crossbar switching interface was referred to as the TXK or TXC (telephone exchange crossbar) switch in the UK.
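The select-then-hold sequence described above, and the reason one select bar can serve many calls, can be modeled in a few lines. This sketch is a simplification under stated assumptions: the class, method names, and level numbering are hypothetical, and contact wiring, timing, and off-normal contacts are ignored.

```python
from typing import Dict, List, Optional, Tuple


class CrossbarMechanism:
    """Toy model of select-then-hold operation on an electromechanical crossbar.

    Hypothetical numbering: select bar k rocked up reaches level 2k and rocked
    down reaches level 2k + 1, so five select bars give ten levels.
    """

    def __init__(self, select_bars: int, verticals: int) -> None:
        self.levels = 2 * select_bars
        self.verticals = verticals
        self.selected_level: Optional[int] = None  # set while a select magnet is energized
        self.held: Dict[int, int] = {}  # vertical -> level whose finger is trapped

    def operate_select(self, bar: int, up: bool) -> None:
        self.selected_level = 2 * bar + (0 if up else 1)

    def operate_hold(self, vertical: int) -> None:
        # The hold bar traps the raised finger and closes the contacts at the crosspoint.
        if self.selected_level is None:
            raise RuntimeError("operate a select magnet first")
        if vertical in self.held:
            raise RuntimeError(f"vertical {vertical} already carries a connection")
        self.held[vertical] = self.selected_level

    def release_select(self) -> None:
        # The finger stays trapped by the hold bar, so the connection survives.
        self.selected_level = None

    def release_hold(self, vertical: int) -> None:
        # End of call: the hold magnet drops and the contacts open.
        del self.held[vertical]

    def closed_crosspoints(self) -> List[Tuple[int, int]]:
        return sorted(self.held.items())


sw = CrossbarMechanism(select_bars=5, verticals=10)
sw.operate_select(bar=1, up=False)  # level 3
sw.operate_hold(vertical=4)
sw.release_select()                 # select bar is free for the next call
sw.operate_select(bar=0, up=True)   # level 0
sw.operate_hold(vertical=7)
sw.release_select()
print(sw.closed_crosspoints())
```

The output lists (vertical, level) pairs; both connections stay up after the select magnets release, because only the hold magnets must remain energized.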

The Bell System Type B crossbar switch of the 1960s was made in the largest quantity. The majority were 200-point switches, with twenty verticals and ten levels of three wires. Each select bar carries ten fingers so that any of the ten circuits assigned to the ten verticals can connect to either of two levels. Five select bars, each able to rotate up or down, mean a choice of ten links to the next stage of switching. Some switches of this type connected six wires at each crosspoint. The vertical off-normal contacts next to the hold magnets are lined up along the bottom of the switch. They perform logic and memory functions, and the hold bar keeps them in the active position as long as the connection is up. The horizontal off-normals on the sides of the switch are activated by the horizontal bars when the butterfly magnets rotate them. This only happens while the connection is being set up, since the butterflies are only energized then.

The majority of Bell System switches were made to connect three wires: the tip and ring of a balanced pair circuit and a sleeve lead for control. Many connected six wires, either for two distinct circuits or for a four-wire circuit or other complex connection. The Bell System Type C miniature crossbar of the 1970s was similar, but the fingers projected forward from the back and the select bars held paddles to move them. The majority of Type C switches had twelve levels, although less common ten-level versions were also made. The Northern Electric Minibar used in the SP1 switch was similar but even smaller. The ITT Pentaconta Multiswitch of the same era usually had 22 verticals, 26 levels, and six to twelve wires. Ericsson crossbar switches sometimes had only five verticals.

Instrumentation

For instrumentation use, James Cunningham, Son and Company[5] made high-speed, very-long-life crossbar switches[6] with physically small mechanical parts, which permitted faster operation than telephone-type crossbar switches. Many of their switches had the mechanical Boolean AND function of telephony crossbar switches, but other models had individual relays (one coil per crosspoint) in matrix arrays, connecting the relay contacts to x and y buses. These latter types were equivalent to separate relays; there was no logical AND function built in. Cunningham crossbar switches had precious-metal contacts capable of handling millivolt signals.

Telephone exchange

Early crossbar exchanges were divided into an originating side and a terminating side, while the later and prominent Canadian and US SP1 switch and 5XB switch were not. When a user picked up the telephone handset, the resulting line loop operating the user's line relay caused the exchange to connect the user's telephone to an originating sender, which returned the user a dial tone. The sender then recorded the dialed digits and passed them to the originating marker, which selected an outgoing trunk and operated the various crossbar switch stages to connect the calling user to it. The originating marker then passed the trunk call completion requirements (type of pulsing, resistance of the trunk, etc.) and the called party's details to the sender and released. The sender then relayed this information to a terminating sender (which could be on either the same or a different exchange). This sender then used a terminating marker to connect the calling user, via the selected incoming trunk, to the called user, and caused the controlling relay set to send the ring signal to the called user's phone, and return ringing tone to the caller.

The crossbar switch itself was simple: exchange design moved all the logical decision-making to the common control elements, which were very reliable as relay sets. The design criteria specified only two hours of downtime for service every forty years, which was a large improvement over earlier electromechanical systems. The exchange design concept lent itself to incremental upgrades, as the control elements could be replaced separately from the call switching elements. The minimum size of a crossbar exchange was comparatively large, but in city areas with a large installed line capacity the whole exchange occupied less space than other exchange technologies of equivalent capacity. For this reason they were also typically the first switches to be replaced with digital systems, which were even smaller and more reliable.

Two principles of crossbar switching existed. An early method was based on the selector principle, which used crossbar switches to implement the same switching fabric used with Strowger switches. In this principle, each crossbar switch would receive one dialed digit, corresponding to one of several groups of switches or trunks. The switch would then find an idle switch or trunk among those selected and connect to it. Each crossbar switch could only handle one call at a time; thus, an exchange with a hundred 10×10 switches in five stages could only have twenty conversations in progress. Distributed control meant there was no common point of failure, but also meant that the setup stage lasted for the ten seconds or so the caller took to dial the required number. In control occupancy terms this comparatively long interval degrades the traffic capacity of a switch.[citation needed]

Starting with the 1XB switch, the later and more common method was based on the link principle and used the switches as crosspoints. Each moving contact was multipled to the other contacts on the same level by bare-strip wiring, often nicknamed banjo wiring,[7] which led to a link on one of the inputs of a switch in the next stage. Each switch could handle its portion of as many calls as it had levels or verticals. Thus an exchange with forty 10×10 switches in four stages could have one hundred conversations in progress. The link principle was more efficient, but required a complex control system to find idle links through the switching fabric.
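The conversation counts quoted for the two principles follow from simple arithmetic, sketched below under the simplifying assumptions that switches are divided equally among stages and that a 10×10 switch under the link principle can carry ten simultaneous calls. The function names are hypothetical.

```python
def selector_principle_calls(switches: int, stages: int) -> int:
    # Each switch carries one call, and each call occupies one switch per stage.
    return switches // stages


def link_principle_calls(switches: int, stages: int, calls_per_switch: int) -> int:
    # Each switch carries as many calls as it has levels or verticals; each call
    # still passes through one switch per stage. This is an upper bound that
    # ignores internal blocking between stages.
    return (switches // stages) * calls_per_switch


print(selector_principle_calls(100, 5))  # the hundred 10x10 switches, five stages -> 20
print(link_principle_calls(40, 4, 10))   # the forty 10x10 switches, four stages -> 100
```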

This meant common control, as described above: all the digits were recorded and then passed to the common control equipment, the marker, which established the call at all the separate switch stages simultaneously. In a marker-controlled crossbar system, the marker was a highly vulnerable central point of control, so it was invariably protected by duplicate markers. The great advantage was that the control occupancy on the switches was of the order of one second or less, representing the operate and release lags of the X-then-Y armatures of the switches. The only downside of common control was the need to provide enough digit recorders to handle the greatest forecast originating traffic level on the exchange.

The Plessey TXK1 or 5005 design used an intermediate form, in which a clear path was marked through the switching fabric by distributed logic, and then closed through all at once.

Crossbar exchanges remain in revenue service only in a few telephone networks. Preserved installations are maintained in museums, such as the Museum of Communications in Seattle, Washington, and the Science Museum in London.

Semiconductor

Semiconductor implementations of crossbar switches typically consist of a set of input amplifiers or retimers connected to a series of interconnects within a semiconductor device. A similar set of interconnects is connected to output amplifiers or retimers. At each crosspoint where the bars cross, a pass transistor connects the bars; when the pass transistor is enabled, the input is connected to the output.

As computer technologies have improved, crossbar switches have found uses in systems such as the multistage interconnection networks that connect the various processing units in a uniform memory access parallel processor to the array of memory elements.

Arbitration

A standard problem in using crossbar switches is that of setting the crosspoints.[citation needed] In the classic telephony application of crossbars, crosspoints are closed and opened as telephone calls come and go. In Asynchronous Transfer Mode or packet switching applications, the crosspoints must be made and broken at each decision interval. In high-speed switches, the settings of all the crosspoints must be determined and then set millions or billions of times per second. One approach for making these decisions quickly is the use of a wavefront arbiter.
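A wavefront arbiter decides all crosspoints in a few sweeps across the request matrix. The sketch below implements one common variant, the wrapped wavefront arbiter, in software for clarity; it is an illustrative assumption, not the design of any specific switch, and the function name is hypothetical. Each grant uses a free row and a free column, so the result is always a valid crossbar configuration, although it is maximal rather than necessarily maximum.

```python
from typing import List, Tuple


def wrapped_wavefront_arbiter(requests: List[List[bool]], start: int = 0) -> List[Tuple[int, int]]:
    """Grant crosspoints for an N x N crossbar, one wrapped diagonal at a time.

    requests[i][j] is True when input i has traffic for output j. Cells with
    (i + j) % n == d share no row or column, so hardware can evaluate a whole
    diagonal in parallel; this sketch simply loops over it.
    """
    n = len(requests)
    row_free = [True] * n
    col_free = [True] * n
    grants: List[Tuple[int, int]] = []
    for wave in range(n):
        d = (start + wave) % n  # rotating 'start' gives each diagonal a turn at top priority
        for i in range(n):
            j = (d - i) % n
            if requests[i][j] and row_free[i] and col_free[j]:
                row_free[i] = col_free[j] = False
                grants.append((i, j))
    return grants


R = [[True, True, False],
     [True, False, False],
     [False, False, True]]
print(wrapped_wavefront_arbiter(R, start=0))
print(wrapped_wavefront_arbiter(R, start=1))
```

With `start=0`, input 1 goes unserved in this example; rotating `start` on each decision interval, as in the second call, is one way to share priority among inputs.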

See also

- Matrix mixer

- Nonblocking minimal spanning switch - describes how to combine crossbar switches into larger switches.

- RF switch matrix

References

- The Western Electric Engineer: Volumes 5-7. Western Electric. 1961. p. 23.

Further reading

- Pacific Telephone and Telegraph Company. General Administration Engineering Section (1956). Survey of telephone switching. San Francisco, California. OCLC 11376478.

- Scudder, F.J.; Reynolds, J.N. (January 1939). "Crossbar Dial Telephone Switching System". Bell System Technical Journal. 18 (1): 76–118. doi:10.1109/EE.1939.6431910. S2CID 51659407. Retrieved 23 April 2015.

https://en.wikipedia.org/wiki/Crossbar_switch

The GTD-5 EAX (General Telephone Digital Number 5 Electronic Automatic Exchange) is a Class 5 telephone switch developed by GTE Automatic Electric Laboratories. This digital central office circuit switching system is used in former GTE service areas and by many smaller telecommunications service providers.

History

The GTD-5 EAX first appeared in Banning, California on June 26, 1982,[1] slowly replacing the electromechanical systems still in use in the independent switch market at that time. The GTD-5 EAX was also used as a Class 4 telephone switch or as a mixed Class 4/5 in markets too small for a GTD-3 EAX or 4ESS switch. The GTD-5 EAX was also exported internationally, and manufactured outside of the U.S. under license, primarily in Canada, Belgium and Italy. By 1988, it had 4% of the worldwide switching market, with an installed base of 11,000,000 subscriber lines.[2] GTE Automatic Electric Laboratories became GTE Network Systems and later GTE Communication Systems. In 1989, GTE sold partial ownership of its switching division to AT&T, forming AG Communication Systems. AG Communication Systems eventually fell under the ownership of Lucent Technologies, and was dissolved as a separate corporate entity in 2003.

Architecture

Processor complexes

The processing building block of the GTD-5 EAX was the "processor complex". These were each assigned a specific function within the overall switch design. In the original generation, Intel 8086 processors were used. These were replaced by NEC V30s (an 80186 instruction set compatible processor with 8086 pinout implemented in CMOS and somewhat faster than the 8086 due to internal improvements) in the second generation, and ultimately by 80386 processors.

- Administrative Processor Complex (APC)

The APC was responsible for the craft interface to the system, administration of status control for all hardware devices, Recent Change, billing, and overall administration.

- Telephony Processor Complex (TPC)

The TPC was responsible for call sequence and state control. It received signalling inputs collected from peripheral processors (see MXU, RLU, RSU, and TCU below) and sent control information back to the peripheral processors.

- Base Processor Complex (BPC)

This term referred collectively to the APC and TPCs. Physically, this distinction made little sense, but was important from a software compilation standpoint. Since the APC and TPC processors shared a large memory-mapped space, some stages of compilation were performed in common.

- Timeswitch and Peripheral Control Unit (TCU)

The TCU was responsible for a group of Facility Interface Units (FIUs). Each FIU connected the system to a particular class of physical facility: analog lines in the Analog Line FIU (and its successor, the Extended Line FIU); analog trunks in the Analog Trunk FIU; and digital carrier in the Digital Trunk FIU and its successor, the EDT FIU. Unlike the switching module (SM) in the competing 5ESS switch, the TCUs did not perform all call processing functions, but limited themselves to digit collection and signalling interpretation.

- Remote Switching Unit (RSU)

The RSU was similar to the TCU, but had a network capable of local switching, and could process calls locally when links to the base unit were severed.

- Remote Line Unit (RLU)

The RLU was a condensed version of the RSU, with no local switching capability and limited line capacity.

- MultipleXor Unit (MXU)

The MXU was actually a Lenkurt 914E Subscriber Loop Carrier. When integrated with the GTD-5 EAX, it used a custom software load that permitted message communication with the remainder of the system.

Internal communication

Most communication within the GTD-5 was performed via direct memory-mapped I/O. The APC and each TPC were connected to three common memory units. These common memory units each contained 16 megabytes of memory, allocated to shared data structures: both dynamic structures related to call data and static (protected) data related to the office database. The APC, TPCs, and TCUs all connected to a smaller shared memory, the Message Distribution Circuit (MDC), an 8K-word, 96-port memory used to place small packetized messages into software-defined queues. The MXU, RLU, and RSU were all sufficiently far from the base unit that they could not participate directly in the shared-memory communication. Instead, a special circuit pack, the Remote Data Link Controller (RDLC), was installed in the DT-FIU of the remote unit and of its host TCU. This provided a serial communication link over a dedicated timeslot of a DS1 carrier, and the host TCU was responsible for forwarding messages from the remote unit through the MDC.

Network

Two generations of network were available on the GTD-5. The later network was made available around 2000, but its characteristics are not described in public documentation. The network described here is the original network, available from 1982 until approximately 2000.

The GTD-5 EAX ran on a Time-Space-Time (TST) topology. Each TCU contained two timeswitches (TSWs) with a total capacity of 1544 timeslots: 772 in the originating time switch and 772 in the terminating time switch. Four FIUs of 193 timeslots each were connected to the TSW. Trunking FIUs connected 192 timeslots of facility (eight DS1 carriers or 192 individual analog trunks). The original Analog Line FIU had a 768-line capacity with one codec per line. The digital output of the 768 codecs was concentrated to 192 timeslots before presentation to the timeswitch, a 4:1 concentration. In the later 1980s, higher-capacity line frames of 1152 and 1536 lines became available, allowing higher concentration ratios of 6:1 and 8:1.
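The timeslot figures above are mutually consistent, as a few lines of arithmetic show. This sketch takes its constants from the text, with one assumption: that the larger line frames correspond to twelve and sixteen Analog Line Units of 96 lines each, as described for the AL-FIU. The names are hypothetical.

```python
FIUS_PER_TSW = 4
TIMESLOTS_PER_FIU = 193
FACILITY_TIMESLOTS = 192   # per FIU, as stated for trunking FIUs
DS1_CHANNELS = 24          # channels per DS1 carrier
LINES_PER_ALU = 96


def tsw_capacity() -> int:
    # Timeslots presented to one time switch by its four FIUs.
    return FIUS_PER_TSW * TIMESLOTS_PER_FIU


def concentration(lines: int) -> float:
    # Lines presented by an analog line FIU per facility timeslot.
    return lines / FACILITY_TIMESLOTS


print(tsw_capacity(), 2 * tsw_capacity())  # per time switch, per TCU
print(8 * DS1_CHANNELS)                    # eight DS1 carriers
for alus in (8, 12, 16):
    lines = alus * LINES_PER_ALU
    print(lines, f"{concentration(lines):.0f}:1")
```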

The Space Switch (SSW) was under the control of the TPCs and APC, which accessed it via the Space Interface Controller (SIC). The SSW was divided into eight Space Switch Units (SSUs). Each SSU could switch all 772 channels between 32 TCUs. The first 32 TCUs connected in sequential order to the first two SSUs; connecting the two SSUs in parallel this way provided the doubling of network capacity required in a Clos network. When the system grew beyond 32 TCUs, six additional SSUs were added. Two of these connected to TCU32–TCU63 in a manner directly analogous to the first two SSUs. Two connected the inputs from TCU0–TCU31 to the outputs of TCU32–TCU63, while the final two connected the outputs of TCU32–TCU63 to the inputs of TCU0–TCU31.
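The SSU growth rule can be summarized as a lookup from the originating and terminating TCU groups to a pair of parallel SSUs. This is a sketch under assumptions: apart from the first two SSUs serving TCU0–TCU31, the SSU index numbering is hypothetical, and timeslot selection within an SSU is not modeled.

```python
from typing import Tuple

TCUS_PER_GROUP = 32

# Hypothetical SSU numbering: each (originating group, terminating group) pair
# is served by two parallel SSUs, which gives the Clos-style path doubling.
SSU_PAIR = {(0, 0): (0, 1), (1, 1): (2, 3), (0, 1): (4, 5), (1, 0): (6, 7)}


def ssus_required(tcus: int) -> int:
    if not 1 <= tcus <= 2 * TCUS_PER_GROUP:
        raise ValueError("this sketch covers 1 to 64 TCUs")
    return 2 if tcus <= TCUS_PER_GROUP else 8


def ssus_for_path(orig_tcu: int, term_tcu: int) -> Tuple[int, int]:
    """Return the two SSUs that can carry a call from orig_tcu to term_tcu."""
    for t in (orig_tcu, term_tcu):
        if not 0 <= t < 2 * TCUS_PER_GROUP:
            raise ValueError(f"TCU{t} is out of range")
    return SSU_PAIR[(orig_tcu // TCUS_PER_GROUP, term_tcu // TCUS_PER_GROUP)]


print(ssus_required(32), ssus_required(33))
print(ssus_for_path(5, 20), ssus_for_path(3, 50), ssus_for_path(50, 3))
```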

The GTD-5, unlike its contemporaries, did not make extensive use of serial line technology. Network communication was based on a 12-bit parallel PCM word[3] carried over cables incorporating parallel twisted pairs. Communication between processors and peripherals was memory-mapped, with similar cables extending 18-bit address and data buses between frames.

Analog line FIU (AL-FIU)

The AL-FIU contained 8 simplex groups of 96 lines each, referred to as Analog Line Units (ALUs), controlled by a redundant controller, the Analog Control Unit (ACU). The 96 lines within each ALU were housed on 12 circuit packs of eight line circuits. These 12 circuit packs were electrically grouped into four groups of three cards, where each group of three cards shared a serial 24 timeslot PCM group. The timeslot assignment capabilities of the codec were used to manage timeslots within the PCM group. The ACU contained a timeslot selection circuit that could select the same timeslot from up to eight PCM groups, (i.e. network timeslot 0-7 would select PCM timeslot 0, network timeslot 8-15 would select PCM timeslot 1, etc., giving eight opportunities for PCM timeslot 0 to connect to network). Since the same timeslot could be selected only eight times out of thirty-two possible candidates, the overall concentration was four to one. A later generation expanded the number of ALUs to twelve or sixteen, as appropriate, giving larger effective concentration.

Analog trunk FIU (AT-FIU)

The AT-FIU was a repackaged AL-FIU. Only two simplex groups were supported, and the trunk cards carried four circuits instead of eight. PCM groups were six cards wide instead of three. Since two simplex groups provided a total of 192 trunks, the AT-FIU was unconcentrated, as trunk interfaces demand.
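The ACU's timeslot selection rule and the resulting concentration ratios can be reproduced with a short calculation. In this sketch (function names hypothetical), every PCM group carries 24 circuits, which holds both for the AL-FIU (three cards of eight lines) and the AT-FIU (six cards of four trunks); the assumption that later line frames simply add more 96-line ALUs is an extrapolation from the text.

```python
NETWORK_TIMESLOTS = 192
PCM_SLOTS_PER_GROUP = 24
SELECTIONS_PER_PCM_SLOT = NETWORK_TIMESLOTS // PCM_SLOTS_PER_GROUP  # 8


def pcm_slot_for(network_slot: int) -> int:
    # Network timeslots 0-7 select PCM timeslot 0, 8-15 select PCM timeslot 1, ...
    if not 0 <= network_slot < NETWORK_TIMESLOTS:
        raise ValueError("network timeslot out of range")
    return network_slot // SELECTIONS_PER_PCM_SLOT


def concentration(simplex_groups: int, lines_per_group: int = 96, pcm_group_size: int = 24) -> float:
    # Each PCM group offers one candidate per PCM timeslot; the ACU can pick
    # the same PCM timeslot only SELECTIONS_PER_PCM_SLOT times.
    pcm_groups = simplex_groups * lines_per_group // pcm_group_size
    return pcm_groups / SELECTIONS_PER_PCM_SLOT


print([pcm_slot_for(s) for s in (0, 7, 8, 191)])
print(concentration(8))  # AL-FIU: 32 candidates for 8 selections
print(concentration(2))  # AT-FIU: 8 candidates for 8 selections
```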

Digital trunk FIU (DT-FIU)

T-carrier spans were terminated, four per card, on the Quad Span Interface Circuit (QSIC) in Digital Trunk Facility Interface Units (DTUs). Two QSICs were equipped per copy, providing a capacity of eight DS1s. The span interface circuits were completely redundant, and all control circuitry operated in lockstep between the two copies. This arrangement provided excellent failure detection but was plagued by design flaws in the earliest versions; corrected versions of the design were not widely available until the early 1990s. The later-generation Extended Digital Trunk Unit (EDT) included eight T-carriers per card and incorporated ESF and PRI interfaces. This FIU also operated in lockstep between the two copies, but incorporated a small backplane-mounted "fingerboard" to house the transformer circuit.

Processor architecture

Throughout its lifecycle, the GTD-5 EAX used a quad-redundant processor architecture. The main processor complexes of the APC, TPC, TCU, RLU, and RSU each consisted of a pair of processor cards, and each of those processor cards contained a pair of processors. The two processors on a card executed precisely the same sequence of instructions, and their outputs were compared on every clock cycle. If the results were not identical, the processors were immediately reset, and the pair of processors on the other card was brought online as the active processor complex. The active processor always kept memory up to date, so little data was lost when these forced switchovers occurred. When a switchover was requested as part of routine maintenance, it could be accomplished with no data loss at all.
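The compare-and-switch behavior described above can be sketched at the level of whole steps rather than clock cycles. This is a simplified model under stated assumptions: the class names, the fault-injection hook, and the choice to re-run the failed step on the mate card are illustrative, not documented GTD-5 behavior.

```python
from typing import Callable, List, Optional, Tuple


class ProcessorCard:
    """Two processors running the same instruction stream in lockstep."""

    def __init__(self, name: str, fault_cycle: Optional[int] = None) -> None:
        self.name = name
        self.fault_cycle = fault_cycle  # hypothetical fault injection for the demo

    def execute(self, op: Callable[[int], int], state: int, cycle: int) -> Tuple[int, int]:
        out_a = op(state)
        out_b = op(state)
        if cycle == self.fault_cycle:
            out_b ^= 1  # one processor of the pair produces a wrong bit
        return out_a, out_b


class ProcessorComplex:
    """Active/standby pair of cards sharing an up-to-date memory image."""

    def __init__(self, cards: List[ProcessorCard]) -> None:
        self.cards = cards
        self.active = 0
        self.memory = 0  # committed state, kept current by the active card
        self.switchovers: List[Tuple[int, str]] = []

    def step(self, op: Callable[[int], int], cycle: int) -> None:
        a, b = self.cards[self.active].execute(op, self.memory, cycle)
        if a != b:
            # Mismatch: the card would be reset (not modeled); bring its mate online.
            self.switchovers.append((cycle, self.cards[self.active].name))
            self.active = 1 - self.active
            # Simplification: the new active card re-runs the step from memory.
            a, b = self.cards[self.active].execute(op, self.memory, cycle)
            if a != b:
                raise RuntimeError("both cards disagree internally")
        self.memory = a


cx = ProcessorComplex([ProcessorCard("A", fault_cycle=3), ProcessorCard("B")])
for cycle in range(6):
    cx.step(lambda s: s + 1, cycle)
print(cx.memory, cx.switchovers, cx.cards[cx.active].name)
```

Because the faulty result is never committed to memory, the count still reaches 6 after the switchover from card A to card B.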

Software architecture

The GTD-5 EAX was programmed in a custom version of Pascal.[4][5] This Pascal was extended to include a separate data and type compilation phase, known as the COMPOOL (Communications Pool). This separate phase allowed strict typing to be enforced across separately compiled code, including type checking across procedure boundaries and across processor boundaries.

A small subset of code was programmed in 8086 assembly language. The assembler used had a preprocessor that imported identifiers from the COMPOOL, allowing type compatibility checking between Pascal and assembly.

The earliest peripherals were programmed in the assembly language appropriate to each processor. Eventually, most peripherals were programmed in variations of C and C++.

Administration

The system is administered through an assortment of teletypewriter "Channels" (also called the system console). Various outboard systems have been connected to these channels to provide specialized functions.

Patents

The following is a non-exhaustive list of U.S. patents applicable to the GTD-5 EAX design:

- 4569017 Duplex central processing unit synchronization circuit

- 4757494 Method of Generating Additive Combinations for PCM voice samples

- 4835767 Additive PCM speaker circuit for a time shared conference arrangement

- 4466093 Time Shared Conference Arrangement

- 4406005 Dual Rail Time Control Unit for a T-S-T Digital Switching System

- 4509169 Dual rail network for a remote switching unit

- 4466094 Data capture arrangement for a conference circuit

- 4740960 Synchronization arrangement for time multiplexed data scanning circuitry

- 4580243 Circuit for duplex synchronization of asynchronous signals

- 4466092 Test data insertion arrangement for a conference circuit

- 4740961 Synchronization circuitry for duplex digital span equipment

- 5226121 Method of bit rate de-adaption using the ECMA 102 protocol

- 4532624 Parity checking arrangement for a remote switching unit network

- 4509168 Digital remote switching unit

- 4514842 T-S-T-S-T Digital switching network

- 4520478 Space Stage Arrangement for a T-S-T Digital Switching System

- 4524441 Modular Space Stage Arrangement for a T-S-T Digital Switching System

- 4524422 Modularly Expandable Space Stage for a T-S-T Digital Switching System

- 4525831 Interface Arrangement for Buffering Communication information between stages of T-S-T switch

- 5140616 Network independent clocking circuit which allows a synchronous master to be connected to a circuit switched data adapter

- 4402077 Dual rail time and control unit for a duplex T-S-T-digital switching system

- 4468737 Circuit for extending a multiplexed address and data bus to distant peripheral devices

- 4374361 Clock failure monitor circuit employing counter pair to indicate clock failure within two pulses

- 4399534 Dual rail time and control unit for a duplex T-S-T-digital switching system

- 4498174 Parallel cyclic redundancy checking circuit


References

- Chapuis, Robert J.; Joel, Amos E., Jr. "100 Years of Telephone Switching", p. 51.

External links

- Description of GTD-5 in 100 Years of Telephone Switching

- Description of GTD-5 in Electronic Materials Handbook: Packaging

https://en.wikipedia.org/wiki/GTD-5_EAX

Emergency Stand Alone (ESA) is a term used by vendors of telephone switching equipment, for example for the Nortel DMS-100, Lucent 5ESS and GTD-5 switches.

Typically, small towns or communities have telephone service provided from a "remote switching unit" that is controlled by a more powerful host switching complex. ESA occurs when the host/remote links are severed, leaving the region in “community isolation”. In ESA mode, the town or community can place and receive calls only within that community.

Larger towns or regions may have several remote switching units, which require “backdoor trunking” to connect all of the remote units within the same town or community during ESA. Special translations can be implemented to redirect 911 calls to a local number, such as the local police station or fire hall within the same community.
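The ESA behaviour described above amounts to a routing rule that a remote unit applies while its host link is down. The following is a hypothetical sketch, not vendor code: the function name, the plain-string number format, the idea of a single set of "community" numbers (which would include numbers reachable over backdoor trunks) and a single 911 translation target are all assumptions made for illustration.

```python
from typing import Optional, Set


def route_in_esa(dialed: str, host_link_up: bool, community_numbers: Set[str],
                 esa_911_target: Optional[str] = None) -> Optional[str]:
    """Return the number to ring, or None if the call cannot be completed.

    Hypothetical model of Emergency Stand Alone (ESA) call screening at a remote unit.
    community_numbers is assumed to include lines on other remote units in the
    same town that are reachable over backdoor trunks.
    """
    if host_link_up:
        return dialed  # normal operation: routing is left to the host switch
    if dialed == "911":
        # Special translation: send 911 to a local number (e.g. police or fire hall)
        return esa_911_target
    if dialed in community_numbers:
        return dialed  # calls within the isolated community still complete
    return None  # everything else needs the severed host/remote links


community = {"5550101", "5550102"}
local_call = route_in_esa("5550102", False, community)
toll_call = route_in_esa("2125550000", False, community)
emergency = route_in_esa("911", False, community, esa_911_target="5550199")
```

In this sketch a call to another community number completes, a call outside the community returns `None`, and 911 is rewritten to the assumed local target.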

https://en.wikipedia.org/wiki/Emergency_Stand_Alone

IPC Systems, Inc. is an American company headquartered in Jersey City, New Jersey, that provides and services voice communication systems for financial companies.[1] As of 2014, IPC Systems had approximately 1,000 employees throughout the Americas, EMEA and Asia-Pacific regions.[2]

IPC's products, called trading turrets, are specialized, multi-line, multi-speaker communications devices used by traders. Turrets can access hundreds of lines and allow traders to monitor multiple connections simultaneously to maintain communication with counterparties, liquidity providers, intermediaries and exchanges.[3][4] IPC's desktop system for traders provides multiple market data screens and gives traders the option to use instant messaging to communicate with colleagues while checking on incoming calls.[2]

In 2010, Waters Technology described IPC as the "Best Trading Turret Provider".[5] Companies that provide services similar to those of IPC Systems include BT and Orange Business Services.[5]

History

IPC was founded in 1973 as Interconnect Planning Corporation, a consulting company. Its voice communication system was created after Republic National Bank approached IPC founder Stephan Nichols with a request to improve the bank's trading hardware.[6]

In 2001, IPC Systems was purchased by Goldman Sachs.[7]

IPC was the first company to use Voice over Internet Protocol (VoIP) on the trading floor, which reduced communication costs.[7] The company introduced the first VoIP-based turret in 2001 and its second-generation VoIP-based turret, the IQ/MAX, in 2006.[8]

Goldman Sachs sold the company to Silver Lake Partners in 2006 for $800 million.[9] In 2014, Centerbridge Partners announced that it had agreed to acquire IPC Systems from Silver Lake Partners.[10]

IPC Systems also developed a division called Positron Public Safety, which developed similar systems for use by 911 operators and other dispatchers.[11] This was sold in 2008 to rival Intrado.[12]

References

- Jackson, Donny (November 18, 2008). "Intrado-IPC Positron deal expected to close this quarter". Urgent Communications.

https://en.wikipedia.org/wiki/IPC_Systems

A trading turret or dealer board is a specialized telephony key system that is generally used by financial traders on their trading desks. During the latter half of the twentieth century, trading progressed from floor trading through phone trading to electronic trading, with phone trading dominating during the 1980s and 1990s. Although most trading volume is now handled by electronic trading platforms, some phone trading persists, and trading turrets are common on the trading desks of investment banks.

Voice trading turrets

Trading turrets, unlike typical phone systems, have a number of features, functions and capabilities specifically designed for the needs of financial traders. Trading turrets enable users to visualize and prioritize incoming call activity from customers or counterparties and to call these same people instantaneously by pushing a single button to access dedicated point-to-point telephone lines (commonly called ringdown circuits). In addition, many traders have dozens or hundreds of dedicated speed-dial buttons and large distribution hoot-n-holler or squawk box circuits, which allow immediate mass dissemination or exchange of information to other traders within their organization or to customers and counterparties. Because of these requirements, many turrets have multiple handsets and multi-channel speaker units; these are generally shared by teams (for example, equities, fixed income or foreign exchange) or, in some cases, across whole trading organizations globally.

Unlike standard private branch exchange (PBX) telephone systems designed for general office users, trading turret systems have historically relied on highly distributed switching architectures. These enable parallel processing of calls and ensure a "non-blocking, non-contended" state, in which there are always more trunks (paths into and out of the system) than users, as well as fault tolerance, which ensures that no single component failure can affect all users or lines. As processing power has increased and switching technologies have matured, voice trading systems are evolving from digital time-division multiplexing (TDM) architectures to Internet Protocol (IP) server-based architectures. IP technologies have transformed communications for traders by enabling converged, multimedia communications that include, in addition to traditional voice calls, presence-based communications such as unified communications and messaging, instant messaging (IM), chat and audio/video conferencing. Some modern trading turret models are optimised to integrate with PBX platforms. By registering natively on Cisco Unified Communications Manager (CUCM), for example, they let office users and turret users collaborate more closely and reduce total cost of ownership.[citation needed]

Although some trading turret systems include intercom functions, financial services firms commonly use a separate intercom system alongside their trading turret systems.

See also

- Electronic trading platform

- Dedicated line

- Stock market data systems

- Straight-through processing (STP)

- Trading system

- Trading room


External links

- A Bankers Guide to Trading Turrets, Peter Redshaw, 12 September 2013. Gartner.

https://en.wikipedia.org/wiki/Trading_turret

A time-slot interchange (TSI) switch is a network switch that stores data in RAM in one sequence, and reads it out in a different sequence. It uses RAM, a small routing memory and a counter. Like any switch, it has input and output ports. The RAM stores the packets or other data that arrive via its input terminal.

Mechanism

In a pure time-slot interchange switch, there is only one physical input and one physical output. Each physical connection is an opportunity for a switching fabric to fail, so the small number of connections in this switch is valuable in a large switching fabric: it makes this type of switching very reliable. The disadvantage of this type of switch is that it introduces delay into the signals.

When a packet (or byte, in telephone switches) arrives at the input, the switch stores the data in RAM in one sequence and reads it out in a different sequence. Switch designs vary, but typically a repeating counter is incremented by an internal clock and wraps around to zero. The RAM location chosen for the incoming data is taken from a small memory indexed by the counter; it is usually the location for the desired output time slot. The current value of the counter also selects the RAM data to forward in the current output time slot. Then the counter is incremented to the next value. The switch repeats the algorithm, eventually sending data from any input time slot to any output time slot.
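The counter-driven store-and-read cycle can be simulated in a few lines. This is a minimal sketch, assuming one RAM word per time slot, a routing memory that maps each input slot to the RAM address of its output slot, and a read that happens just before the write in each slot; the class and method names are illustrative only.

```python
from typing import List, Sequence


class TimeSlotInterchange:
    """Minimal model of a time-slot interchange switch with one frame of N slots."""

    def __init__(self, slots: int) -> None:
        self.slots = slots
        self.ram: List[int] = [0] * slots
        # routing[input slot] = RAM address, i.e. the output slot the sample should leave in.
        # Keep it a permutation so that two inputs never share one RAM word.
        self.routing: List[int] = list(range(slots))

    def connect(self, in_slot: int, out_slot: int) -> None:
        self.routing[in_slot] = out_slot

    def run_frame(self, frame: Sequence[int]) -> List[int]:
        """Clock one frame through the switch and return the samples it emits."""
        out: List[int] = []
        for counter in range(self.slots):  # the counter wraps to 0 at the next frame
            out.append(self.ram[counter])                     # read the word for this output slot
            self.ram[self.routing[counter]] = frame[counter]  # store the arriving sample
        return out


tsi = TimeSlotInterchange(4)
tsi.connect(0, 3)  # swap slots 0 and 3; slots 1 and 2 pass straight through
tsi.connect(3, 0)
first = tsi.run_frame([10, 11, 12, 13])
second = tsi.run_frame([10, 11, 12, 13])
print(first)   # [0, 0, 0, 10]
print(second)  # [13, 11, 12, 10]
```

The first frame comes out mostly empty because the RAM has not been filled yet, which is the delay mentioned above: depending on the slot pair, a sample leaves later in the same frame or in the next one.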

To minimize connections, and therefore improve reliability, the data to reprogram the switch is usually programmed via a single wire that threads through the entire group of integrated circuits in a printed circuit board. The software typically compares the data shifted-in with the data shifted-out, to verify that the ICs remain correctly connected. The switching data entered into the ICs is double-buffered. That is, a new switch set-up is shifted-in, and then a single pulse applies the new configuration instantly to all the connected ICs.
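The single-wire loading, the shifted-in versus shifted-out check and the double-buffered "apply" pulse can be modelled as a shift register threaded through every IC. This is a hypothetical sketch: it assumes the check works by confirming that a full-length shift pushes out exactly the set-up loaded last time, and it simulates a loose connection as a bit position stuck at zero. All names are illustrative.

```python
from typing import List, Optional


class ConfigChain:
    """Hypothetical model of switch ICs whose set-up is loaded over one serial wire.

    `staging` is the serial shift path threaded through every IC; `active` is the
    configuration the ICs actually switch with. Together they form the double buffer.
    """

    def __init__(self, length: int) -> None:
        self.staging: List[int] = [0] * length
        self.active: List[int] = [0] * length
        self.stuck_at_zero: Optional[int] = None  # simulated open connection at this position

    def shift(self, bits: List[int]) -> List[int]:
        """Clock `bits` in at the head of the chain; return what falls out of the tail."""
        shifted_out: List[int] = []
        for bit in bits:
            shifted_out.append(self.staging[-1])
            self.staging = [bit] + self.staging[:-1]
            if self.stuck_at_zero is not None:
                self.staging[self.stuck_at_zero] = 0
        return shifted_out

    def latch(self) -> None:
        """The single pulse: every IC adopts its staged bits at the same instant."""
        self.active = list(self.staging)


def reprogram(chain: ConfigChain, new_config: List[int], previous_config: List[int]) -> bool:
    """Shift in a new set-up and apply it only if the chain checks out.

    Assumption: an intact chain returns the previous set-up, in the order it was
    shifted in, while the new one is being shifted in.
    """
    if chain.shift(new_config) != previous_config:
        return False  # broken chain: the old configuration stays active
    chain.latch()
    return True


chain = ConfigChain(8)
cfg1 = [1, 0, 1, 1, 0, 0, 1, 0]
cfg2 = [0, 1, 1, 0, 1, 0, 0, 1]
ok1 = reprogram(chain, cfg1, [0] * 8)  # True
ok2 = reprogram(chain, cfg2, cfg1)     # True
chain.stuck_at_zero = 3                # an IC works loose
ok3 = reprogram(chain, [1] * 8, cfg2)  # False: the check catches it
```

Because the new set-up only takes effect on `latch()`, a failed check leaves the switch running on its last good configuration.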

Limitation

In a time-slot interchange (TSI) switch, two memory accesses are required for each connection (one to read and one to store). Let T be the time to access the memory; each connection therefore takes 2T of memory time. If there are n connections and t is the operation time for n lines, then

$$t = 2nT,$$

which gives

$$n = \frac{t}{2T}.$$

The values of t and n normally come from a higher-level system design of the switching fabric. Hence the memory technology, which yields T, determines n for a given t, and T also limits t for a given n. Real switching fabrics have real requirements for n and t, and because T is set by an actual memory technology, real switches cannot have an arbitrarily large n or an arbitrarily small t.

In higher-speed switches, the limit imposed by T can be halved by using a more expensive, less reliable two-port RAM, in which the read and the write usually occur at the same time. The switch must still arbitrate when there is an attempt to read and write the same RAM slot at the same time. This is normally done by avoiding the case in the control software, by rearranging the connections in the switching fabric (see, e.g., Nonblocking minimal spanning switch).
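Plugging numbers into the relation between t, n and T shows the scale involved. The 125 µs frame used below corresponds to the 8000-frames-per-second rate of digital telephony; the 50 ns memory access time is an assumed, illustrative figure. The two-port case assumes that the read and write overlap, so each connection costs T instead of 2T.

```python
def max_connections(frame_ns: int, access_ns: int, two_port: bool = False) -> int:
    """Largest n with 2nT <= t (single-port RAM) or nT <= t (two-port RAM).

    frame_ns is t and access_ns is T, both in nanoseconds.
    """
    accesses_per_connection = 1 if two_port else 2
    return frame_ns // (accesses_per_connection * access_ns)


# Illustrative numbers only: a 125 us frame and an assumed 50 ns access time.
print(max_connections(125_000, 50))                 # 1250
print(max_connections(125_000, 50, two_port=True))  # 2500
```

Halving the memory time per connection doubles the number of connections one TSI can serve in the same frame.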

Customary applications

In packet-switching networks, a time-slot interchange switch is often combined with two space-division switches to implement small network switches.

In telephone switches, time-slot interchange switches usually form the outer layer of the switching fabric at a central office's switch. They take data from time-multiplexed T-1 or E-1 lines that serve neighborhoods. The T-1 or E-1 lines serve the subscriber line interface cards (SLICs) in local neighborhoods. The SLICs serve as the outer space-division switches of a modern wired telephone system.


https://en.wikipedia.org/wiki/Time-slot_interchange

A nonblocking minimal spanning switch is a device that can connect N inputs to N outputs in any combination. The most familiar use of switches of this type is in a telephone exchange. The term "non-blocking" means that if it is not defective, it can always make the connection. The term "minimal" means that it has the fewest possible components, and therefore the minimal expense.

Historically, in telephone switches, connections between callers were arranged with large, expensive banks of electromechanical relays, such as Strowger switches. The basic mathematical property of Strowger switches is that for each input to the switch, there is exactly one output. Much of the mathematical switching circuit theory attempts to use this property to reduce the total number of switches needed to connect a combination of inputs to a combination of outputs.

In the 1940s and 1950s, engineers at Bell Labs began an extended series of mathematical investigations into methods for reducing the size and expense of the "switched fabric" needed to implement a telephone exchange. One early, successful mathematical analysis was performed by Charles Clos (French pronunciation: [ʃaʁl klo]), and a switched fabric constructed of smaller switches is called a Clos network.[1]

Background: switching topologies

The crossbar switch

The crossbar switch has the property of being able to connect N inputs to N outputs in any one-to-one combination, so it can connect any caller to any non-busy receiver, a property given the technical term "nonblocking". Being nonblocking, it could always complete a call (to a non-busy receiver), which would maximize service availability.

However, the crossbar switch does so at the expense of using N² (N squared) simple SPST switches. For large N (and the practical requirements of a phone switch are considered large) this growth was too expensive. Further, large crossbar switches had physical problems. Not only did the switch require too much space, but the metal bars containing the switch contacts would become so long that they would sag and become unreliable. Engineers also noticed that at any time, each bar of a crossbar switch was only making a single connection. The other contacts on the two bars were unused. This seemed to imply that most of the switching fabric of a crossbar switch was wasted.

The obvious way to emulate a crossbar switch was to find some way to build it from smaller crossbar switches. If a crossbar switch could be emulated by some arrangement of smaller crossbar switches, then these smaller crossbar switches could also, in turn, be emulated by even smaller crossbar switches. The switching fabric could become very efficient, and possibly even be created from standardized parts. This is called a Clos network.

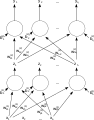

Completely connected 3-layer switches

The next approach was to break apart the crossbar switch into three layers of smaller crossbar switches. There would be an "input layer", a "middle layer" and an "output layer." The smaller switches are less massive, more reliable, and generally easier to build, and therefore less expensive.

A telephone system only has to make a one-to-one connection. Intuitively this seems to mean that the number of inputs and the number of outputs can always be equal in each subswitch, but intuition does not prove this can be done nor does it tell us how to do so. Suppose we want to synthesize a 16 by 16 crossbar switch. The design could have 4 subswitches on the input side, each with 4 inputs, for 16 total inputs. Further, on the output side, we could also have 4 output subswitches, each with 4 outputs, for a total of 16 outputs. It is desirable that the design use as few wires as possible, because wires cost real money. The least possible number of wires that can connect two subswitches is a single wire. So, each input subswitch will have a single wire to each middle subswitch. Also, each middle subswitch will have a single wire to each output subswitch.

The question is how many middle subswitches are needed, and therefore how many total wires should connect the input layer to the middle layer. Since telephone switches are symmetric (callers and callees are interchangeable), the same logic will apply to the output layer, and the middle subswitches will be "square", having the same number of inputs as outputs.

The number of middle subswitches depends on the algorithm used to allocate connections to them. The basic algorithm for managing a three-layer switch is to search the middle subswitches for a middle subswitch that has unused wires to the needed input and output switches. Once a connectible middle subswitch is found, connecting to the correct inputs and outputs in the input and output switches is trivial.

Theoretically, in the example, only four middle switches are needed, each with exactly one connection to each input switch and one connection to each output switch. This is called a "minimal spanning switch," and managing it was the holy grail of Bell Labs' investigations.

However, a bit of work with pencil and paper will show that it is easy to get such a minimal switch into conditions in which no single middle switch has a connection to both the needed input switch and the needed output switch. It only takes four calls to partially block the switch. If an input switch is half-full, it has connections via two middle switches. If an output switch is also half-full, with connections from the other two middle switches, then there is no remaining middle switch that can provide a path between that input and output.

For this reason, a "simply connected nonblocking" 16×16 switch with four input subswitches and four output subswitches was thought to require seven middle subswitches: in the worst case, an almost-full input subswitch would use three middle subswitches, an almost-full output subswitch would use three different ones, and the seventh would be guaranteed to be free to make the last connection. Hence this arrangement is sometimes called a "2n−1 switch", where n is the number of input ports on each input subswitch.
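The basic search for a usable middle subswitch, and the four-call blocking case, can be reproduced directly. This is a sketch under assumed conventions: one wire between each input subswitch and each middle, one wire between each middle and each output subswitch, and a first-fit search; the class and method names are hypothetical.

```python
from typing import List, Optional


class ThreeStageSwitch:
    """Sketch of a three-layer switch: r input and r output subswitches, m middles.

    in_busy[i][x] is True when the single wire from input subswitch i to middle x is
    in use; out_busy[o][x] likewise for the wire from middle x to output subswitch o.
    """

    def __init__(self, r: int, m: int) -> None:
        self.m = m
        self.in_busy: List[List[bool]] = [[False] * m for _ in range(r)]
        self.out_busy: List[List[bool]] = [[False] * m for _ in range(r)]

    def route_via(self, i: int, o: int, x: int) -> None:
        """Occupy the path i -> x -> o (used here to set up an existing state)."""
        if self.in_busy[i][x] or self.out_busy[o][x]:
            raise ValueError("wire already in use")
        self.in_busy[i][x] = self.out_busy[o][x] = True

    def find_middle(self, i: int, o: int) -> Optional[int]:
        """Basic algorithm: first middle with free wires to both i and o, else None."""
        for x in range(self.m):
            if not self.in_busy[i][x] and not self.out_busy[o][x]:
                return x
        return None


# 16x16 built from four 4x4 input and four 4x4 output subswitches.
minimal = ThreeStageSwitch(r=4, m=4)
for i, o, x in [(0, 1, 0), (0, 2, 1),    # input subswitch 0 half-full via middles 0 and 1
                (1, 3, 2), (2, 3, 3)]:   # output subswitch 3 half-full via middles 2 and 3
    minimal.route_via(i, o, x)
blocked = minimal.find_middle(0, 3)
print(blocked)  # None: no middle reaches both

wide = ThreeStageSwitch(r=4, m=7)        # the "2n-1" arrangement
for i, o, x in [(0, 1, 0), (0, 1, 1), (0, 2, 2),   # input subswitch 0 almost full
                (1, 3, 3), (2, 3, 4), (3, 3, 5)]:  # output subswitch 3 almost full
    wide.route_via(i, o, x)
free = wide.find_middle(0, 3)
print(free)  # 6: the seventh middle is guaranteed free
```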

The example is intentionally small, and in such a small example, the reorganization does not save many switches. A 16×16 crossbar has 256 contacts, while a 16×16 minimal spanning switch has 4×4×4×3 = 192 contacts.

As the numbers get larger, the savings increase. For example, a 10,000-line exchange would need 100 million contacts to implement a full crossbar, but three layers of 100 subswitches, each 100×100, would use only 300 subswitches of 10,000 contacts each, or 3 million contacts.

Each of those 100×100 subswitches could in turn be built from three layers of ten 10×10 crossbars (30 crossbars), a total of 3000 contacts, making 900,000 for the whole exchange; that is far fewer than 100 million.
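The contact counts above follow from a simple formula: r input subswitches of n×m, m middle subswitches of r×r and r output subswitches of m×n. The sketch below reproduces the figures; the function names are illustrative, and `recursive_square` assumes the square, three-equal-layers split used in the 10,000-line example.

```python
import math


def crossbar(n_in: int, n_out: int) -> int:
    """Contacts in a plain crossbar: one per crosspoint."""
    return n_in * n_out


def three_stage(n: int, r: int, m: int) -> int:
    """Contacts in r input (n x m), m middle (r x r) and r output (m x n) crossbars."""
    return r * crossbar(n, m) + m * crossbar(r, r) + r * crossbar(m, n)


def recursive_square(size: int, smallest: int) -> int:
    """Split a size x size switch into three layers of sqrt(size) square subswitches,
    recursively, until subswitches are `smallest` wide (or not a perfect square)."""
    root = math.isqrt(size)
    if size <= smallest or root * root != size:
        return crossbar(size, size)
    return 3 * root * recursive_square(root, smallest)  # three layers of `root` subswitches


print(crossbar(16, 16))               # 256
print(three_stage(4, 4, 4))           # 192  (minimal spanning switch)
print(three_stage(4, 4, 7))           # 336  ("2n-1" switch)
print(crossbar(10_000, 10_000))       # 100000000
print(recursive_square(10_000, 100))  # 3000000
print(recursive_square(10_000, 10))   # 900000
```

The third line shows why the minimal arrangement mattered for small switches: with seven middles, the 16×16 "2n−1" version needs more contacts than the plain crossbar.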

Managing a minimal spanning switch

The crucial discovery was a way to reorganize connections in the middle switches to "trade wires" so that a new connection could be completed.

The first step is to find an unused link from the input subswitch to a middle-layer subswitch (which we shall call A), and an unused link from a middle-layer subswitch (which we shall call B) to the desired output subswitch. Since, prior to the arrival of the new connection, the input and output subswitches each had at least one unused connection, both of these unused links must exist.

If A and B happen to be the same middle-layer switch, then the connection can be made immediately just as in the "2n−1" switch case. However, if A and B are different middle-layer subswitches, more work is required. The algorithm finds a new arrangement of the connections through the middle subswitches A and B which includes all of the existing connections, plus the desired new connection.

Make a list of all of the desired connections that pass through A or B. That is, all of the existing connections to be maintained and the new connection. The algorithm proper only cares about the internal connections from input to output switch, although a practical implementation also has to keep track of the correct input and output switch connections.

In this list, each input subswitch can appear in at most two connections: one to subswitch A, and one to subswitch B. The options are zero, one, or two. Likewise, each output subswitch appears in at most two connections.

Each connection is linked to at most two others by a shared input or output subswitch, forming one link in a "chain" of connections.

Next, begin with the new connection. Assign it the path from its input subswitch, through middle subswitch A, to its output subswitch. If this first connection's output subswitch has a second connection, assign that second connection a path from its input subswitch through subswitch B. If that input subswitch has another connection, assign that third connection a path through subswitch A. Continue back and forth in this manner, alternating between middle subswitches A and B. Eventually one of two things must happen:

- the chain terminates in a subswitch with only one connection, or

- the chain loops back to the originally chosen connection.

In the first case, go back to the new connection's input subswitch and follow its chain backward, assigning connections to paths through middle subswitches B and A in the same alternating pattern.

When this is done, each input or output subswitch in the chain has at most two connections passing through it, and they are assigned to different middle switches. Thus, all the required links are available.

There may be additional connections through subswitches A and B which are not part of the chain including the new connection; those connections may be left as-is.

After the new connection pattern is designed in the software, then the electronics of the switch can actually be reprogrammed, physically moving the connections. The electronic switches are designed internally so that the new configuration can be written into the electronics without disturbing the existing connection, and then take effect with a single logic pulse. The result is that the connection moves instantaneously, with an imperceptible interruption to the conversation. In older electromechanical switches, one occasionally heard a clank of "switching noise."

This alternating reassignment of connections between two middle subswitches is the heart of the algorithm that controls a minimal spanning switch.
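The chain procedure can be sketched in Python. The representation (call id mapped to an input subswitch, output subswitch and middle subswitch) and all names are assumptions. The code walks forward from the new connection and moves only the connections that clash; the backward part of the chain from the new connection's input already alternates between B and A, so it is left unchanged, as the text notes for connections outside the chain.

```python
from typing import Dict, Optional, Tuple

# Hypothetical representation: call id -> (input subswitch, output subswitch, middle)
Route = Tuple[int, int, int]
INPUT, OUTPUT, MIDDLE = 0, 1, 2


def user_of(routes: Dict[str, Route], side: int, sub: int, mid: int,
            skip: Optional[str] = None) -> Optional[str]:
    """Return the call using the wire between subswitch `sub` (on `side`) and middle `mid`."""
    for cid, route in routes.items():
        if cid != skip and route[side] == sub and route[MIDDLE] == mid:
            return cid
    return None


def add_call(routes: Dict[str, Route], cid: str, i: int, o: int, m: int) -> None:
    """Route `cid` from input subswitch i to output subswitch o through m middles,
    rearranging calls through two middle subswitches A and B if necessary."""
    free_in = [x for x in range(m) if user_of(routes, INPUT, i, x) is None]
    free_out = [x for x in range(m) if user_of(routes, OUTPUT, o, x) is None]
    if not free_in or not free_out:
        raise ValueError("input or output subswitch has no free wire")
    common = [x for x in free_in if x in free_out]
    if common:                      # A and B coincide: connect immediately
        routes[cid] = (i, o, common[0])
        return
    a, b = free_in[0], free_out[0]
    routes[cid] = (i, o, a)         # the new call goes through A
    moved, side, mid = cid, OUTPUT, a
    while True:
        # Does another call share a wire with the call just (re)assigned to `mid`?
        clash = user_of(routes, side, routes[moved][side], mid, skip=moved)
        if clash is None:           # the chain has ended
            return
        mid = b if mid == a else a  # alternate between A and B
        ci, co, _ = routes[clash]
        routes[clash] = (ci, co, mid)
        moved, side = clash, INPUT if side == OUTPUT else OUTPUT


# Assumed small switch: 3 input and 3 output subswitches, 2 middles (A = 0, B = 1).
routes: Dict[str, Route] = {"c1": (1, 0, 0), "c2": (1, 1, 1), "c3": (2, 1, 0), "d1": (0, 2, 1)}
add_call(routes, "new", 0, 0, m=2)  # c1, c2 and c3 swap middles; d1 is left as-is
print(routes)
```

In the example, input subswitch 0 can only reach middle 0 and output subswitch 0 can only reach middle 1, so no middle is free on both sides; the chain moves c1, c2 and c3 before the new call fits.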

Practical implementations of switches

As soon as the algorithm was discovered, Bell system engineers and managers began discussing it. After several years, Bell engineers began designing electromechanical switches that could be controlled by it. At the time, computers used tubes and were not reliable enough to control a phone system (phone system switches are safety-critical, and they are designed to have an unplanned failure about once per thirty years). Relay-based computers were too slow to implement the algorithm. However, the entire system could be designed so that when computers were reliable enough, they could be retrofitted to existing switching systems.

It is not difficult to make composite switches fault-tolerant. When a subswitch fails, the callers simply redial, so on each new connection the software tries the next free connection in each subswitch rather than reusing the most recently released one. The new connection is more likely to work because it uses different circuitry.

Therefore, in a busy switch, a printed circuit board (PCB) that carries no connections is an excellent candidate for testing.

To test or remove a particular printed circuit card from service, there is a well-known algorithm. As fewer connections pass through the card's subswitch, the software routes more test signals through the subswitch to a measurement device, and then reads the measurement. This does not interrupt old calls, which remain working.

If a test fails, the software isolates the exact circuit board by reading the failure from several external switches. It then marks the free circuits in the failing circuitry as busy. As calls using the faulty circuitry are ended, those circuits are also marked busy. Some time later, when no calls pass through the faulty circuitry, the computer lights a light on the circuit board that needs replacement, and a technician can replace the circuit board. Shortly after replacement, the next test succeeds, the connections to the repaired subswitch are marked "not busy," and the switch returns to full operation.
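The allocation and draining policy described above can be sketched as a small free-list allocator. This is a hypothetical model, not the 1ESS software: circuits are numbered integers, free circuits are handed out first-in, first-out, and a circuit marked faulty is never returned to the free list, so it drains as its calls end.

```python
from collections import deque
from typing import Deque, Set


class CircuitPool:
    """Hypothetical allocator for one subswitch's circuits (links or time slots)."""

    def __init__(self, circuits: int) -> None:
        self.free: Deque[int] = deque(range(circuits))
        self.in_use: Set[int] = set()
        self.faulty: Set[int] = set()

    def allocate(self) -> int:
        """Hand out the circuit that has been free the longest."""
        if not self.free:
            raise RuntimeError("no free circuit")
        c = self.free.popleft()
        self.in_use.add(c)
        return c

    def release(self, c: int) -> None:
        self.in_use.discard(c)
        if c not in self.faulty:   # faulty circuits stay marked busy
            self.free.append(c)    # back of the queue: not reused next

    def mark_faulty(self, c: int) -> None:
        """Take a circuit out of service; calls already on it are not interrupted."""
        self.faulty.add(c)
        if c in self.free:
            self.free.remove(c)

    def ready_for_replacement(self, c: int) -> bool:
        """True once no call uses the faulty circuit (time to light the lamp)."""
        return c in self.faulty and c not in self.in_use

    def repaired(self, c: int) -> None:
        """After a successful retest, return the circuit to service."""
        self.faulty.discard(c)
        if c not in self.in_use and c not in self.free:
            self.free.append(c)


pool = CircuitPool(5)
a, b, c = pool.allocate(), pool.allocate(), pool.allocate()  # circuits 0, 1, 2
pool.release(a)
next_circuit = pool.allocate()          # 3, not the just-released 0
pool.mark_faulty(b)                     # circuit 1 fails a test while carrying a call
before = pool.ready_for_replacement(b)  # False: the call is still up
pool.release(b)
after = pool.ready_for_replacement(b)   # True: safe to swap the board
```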

The diagnostics on Bell's early electronic switches would actually light a green light on each good printed circuit board, and light a red light on each failed printed circuit board. The printed circuits were designed so that they could be removed and replaced without turning off the whole switch.

The eventual result was the Bell 1ESS. This was controlled by a CPU called the Central Control (CC), a lock-step, Harvard architecture dual computer using reliable diode–transistor logic. In the 1ESS CPU, two computers performed each step, checking each other. When they disagreed, they would diagnose themselves, and the correctly running computer would take up switch operation while the other would disqualify itself and request repair. The 1ESS switch was still in limited use as of 2012, and had a verified reliability of less than one unscheduled hour of failure in each thirty years of operation, validating its design.

Initially it was installed on long-distance trunks in major cities, the most heavily used parts of each telephone exchange. On the first Mother's Day that major cities operated with it, the Bell system set a record for total network capacity, both in calls completed, and total calls per second per switch. This resulted in a record for total revenue per trunk.

Digital switches

A practical implementation of a switch can be created from an odd number of layers of smaller subswitches. Conceptually, the crossbar switches of the three-stage switch can each be further decomposed into smaller crossbar switches. Although each subswitch has limited multiplexing capability, working together they synthesize the effect of a larger N×N crossbar switch.

In a modern digital telephone switch, application of two different multiplexer approaches in alternate layers further reduces the cost of the switching fabric:

- space-division multiplexers are something like the crossbar switches already described, or some arrangement of crossover switches or banyan switches. Any single output can select from any input. In digital switches, this is usually an arrangement of AND gates. 8000 times per second, the connection is reprogrammed to connect particular wires for the duration of a time slot. Design advantage: In space-division systems the number of space-division connections is divided by the number of time slots in the time-division multiplexing system. This dramatically reduces the size and expense of the switching fabric. It also increases the reliability, because there are far fewer physical connections to fail.

- time-division multiplexers each have a memory which is read in a fixed order and written in a programmable order (or vice versa). This type of switch permutes time-slots in a time-division multiplexed signal that goes to the space-division multiplexers in its adjacent layers. Design advantage: Time-division switches have only one input and output wire. Since they have far fewer electrical connections to fail, they are far more reliable than space-division switches, and are therefore the preferred switches for the outer (input and output) layers of modern telephone switches.
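The space-division stage can be modelled as a set of gates whose settings are re-read from a connection memory in every time slot. The sketch below assumes samples are integers, treats each "AND gate" as a selection of one input wire per output wire, and uses a tiny frame of three slots instead of a real T-1 or E-1 frame; the function name is illustrative.

```python
from typing import List, Optional, Sequence


def space_stage(inputs: Sequence[Sequence[int]],
                connection_memory: Sequence[Sequence[Optional[int]]]) -> List[List[Optional[int]]]:
    """Time-multiplexed space-division stage.

    inputs[w][s] is the sample on input wire w in time slot s.
    connection_memory[s][k] is the input wire gated to output wire k during slot s,
    or None to leave output k idle in that slot.
    """
    slots = len(connection_memory)
    n_out = len(connection_memory[0])
    out: List[List[Optional[int]]] = [[None] * slots for _ in range(n_out)]
    for s in range(slots):  # the gates are reprogrammed once per time slot
        for k, w in enumerate(connection_memory[s]):
            if w is not None:
                out[k][s] = inputs[w][s]  # enable the gate from wire w to wire k
    return out


inputs = [[10, 11, 12], [20, 21, 22]]  # two input wires, three time slots
memory = [[1, 0], [0, None], [None, 1]]
print(space_stage(inputs, memory))     # [[20, 11, None], [10, None, 22]]
```

The same two-by-two set of gates carries a different connection pattern in each slot, which is how the time slots divide down the number of physical crosspoints.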

Practical digital telephone switches minimize the size and expense of the electronics. First, it is typical to "fold" the switch, so that both the input and output connections to a subscriber line are handled by the same control logic. Then, a time-division switch is used in the outer layer. The outer layer is implemented in subscriber-line interface cards (SLICs) in street-side boxes in the local neighborhood. Under remote control from the central switch, the cards connect to time slots in a time-multiplexed line to the central switch. In the U.S. the multiplexed line is a multiple of a T-1 line. In Europe and many other countries it is a multiple of an E-1 line.

The scarce resources in a telephone switch are the connections between layers of subswitches. These connections can be either time slots or wires, depending on the type of multiplexing. The control logic has to allocate these connections, and the basic method is the algorithm already discussed. The subswitches are logically arranged so that they synthesize larger subswitches. Each subswitch, and each synthesized subswitch, is controlled (recursively) by logic derived from Clos's mathematics. The computer code decomposes larger multiplexers into smaller multiplexers.

If the recursion is taken to the limit, breaking down the crossbar to the minimum possible number of switching elements, the resulting device is sometimes called a crossover switch or a banyan switch depending on its topology.

Switches typically interface to other switches and fiber optic networks via fast multiplexed data lines such as SONET.

Each line of a switch may be periodically tested by the computer, by sending test data through it. If a switch's line fails, all lines of that switch are marked as in use. Multiplexer lines are allocated in a first-in, first-out way, so that new connections find new switch elements. When all connections are gone from a defective switch, the defective switch can be avoided, and later replaced.

As of 2018, such switches are no longer made. They are being replaced by high-speed Internet Protocol routers.


References

- Clos, Charles (Mar 1953). "A study of non-blocking switching networks" (PDF). Bell System Technical Journal. 32 (2): 406–424. doi:10.1002/j.1538-7305.1953.tb01433.x. ISSN 0005-8580. Retrieved 22 March 2011.

https://en.wikipedia.org/wiki/Nonblocking_minimal_spanning_switch

The Panel Machine Switching System is a type of automatic telephone exchange for urban service that was used in the Bell System in the United States for seven decades. The first semi-mechanical types of this design were installed in 1915 in Newark, New Jersey, and the last were retired in the same city in 1983.

The Panel switch was named for its tall panels which consisted of layered strips of terminals. Between each strip was placed an insulating layer, which kept each metal strip electrically isolated from the ones above and below. These terminals were arranged in banks, five of which occupied an average selector frame. Each bank contained 100 sets of terminals, for a total of 500 sets of terminals per frame.[1] At the bottom, the frame had two electric motors to drive sixty selectors up and down by electromagnetically controlled clutches. As calls were completed through the system, selectors moved vertically over the sets of terminals until they reached the desired location, at which point the selector stopped its upward travel, and selections progressed to the next frame, until finally, the called subscriber's line was reached.

History

Around 1906, AT&T organized two research groups to solve the unique challenges of switching telephone traffic in the large urban centers of the Bell System. Large cities had a complex infrastructure of manual switching that prevented a complete, immediate conversion to mechanical switching, although more favorable economics were anticipated from conversion to mechanical operation. No satisfactory methods existed for interconnecting manual systems with machines for switching. The two groups at the Western Electric Laboratories focussed on different technologies, using a competitive development approach to stimulate invention and increase product quality, a concept that had been successful at AT&T previously in transmitter design.[2] One group continued existing work that yielded the Rotary system, while the second group developed a system based on linear movement of switch components, which became known as the panel bank. As work continued, many subassemblies were shared, and the two switches differed only in their switching mechanisms.

By 1910, the design of the Rotary system had progressed further, and it was employed in internal trials at Western Electric as a private branch exchange (PBX). However, by 1912 the company had decided that the panel system showed better promise for solving the large-city problem, and it assigned the Rotary system to Europe to meet the growing demand and competition from other vendors there, with management and manufacture handled by the International Western Electric Company in Belgium.[3]

After a trial installation as a PBX within Western Electric in 1913, Panel system planning began with the design and construction of field-trial central offices using a semi-mechanical method of switching: subscribers still used telephones without dials, and operators answered calls and keyed the destination telephone number into the panel switch, which then completed the call automatically.[4]

These first panel-type exchanges were placed in service in Newark, New Jersey,[5] on January 16, 1915, at the Mulberry central office, serving 3,640 subscribers, and on June 12 at the Waverly central office, which had 6,480 lines. Panel development continued throughout the rest of the 1910s and into the 1920s in the United States. A third system in Newark (Branch Brook) followed in April 1917 for testing automatic call distribution.

The first fully machine-switched Panel systems using common control principles were the Douglas and Tyler exchanges in Omaha, Nebraska, completed in December 1921. Subscribers were issued new telephones with dials, which allowed them to place local calls without operator assistance. This installation was followed by the first installations in the eastern region, at the Sherwood and Syracuse-2 central offices in Paterson, New Jersey, in May and July 1922, respectively.[6] The storied Pennsylvania exchange in New York City was cut over to service in October 1922.[4][7]

Most Panel installations were replaced by modern systems during the 1970s. The last Panel switch, located in the Bigelow central office in Newark, was decommissioned by 1983.[8]

Operational overview

When a subscriber lifts the receiver (earpiece) off the hookswitch of a telephone, the local loop circuit to the central office is closed. Current then flows through the loop and a line relay, operating the relay and starting a selector in the line finder frame, which hunts for the terminal of the subscriber's line. Simultaneously, a sender is selected, which provides dial tone to the caller once the line is found. The line finder then operates a cutoff relay, which prevents that telephone from being called should another subscriber happen to dial its number.

Dial tone confirms to the subscriber that the system is ready for dialing. Depending on the local numbering system, the sender required either six or seven digits to complete the call. As the subscriber dialed, relays in the sender counted and stored the digits for later use. As soon as the two or three digits of the office code had been dialed and stored, the sender performed a lookup against a translator (earlier type) or decoder (later type). The translator or decoder took the two or three digits as input and returned to the sender the parameters for connecting to the called central office. The sender then used this information to guide the district selector and office selector to the terminals that would connect the caller to the central office where the terminating line was located. The sender also stored and used other information pertaining to the electrical requirements for signaling over the newly established connection, and the rate at which the subscriber should be billed if the call was completed.
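
The sender's role of storing digits and consulting the translator once the office code is complete can be sketched in Python. This is a minimal illustration, not a model of real relay circuitry: the class names, the office codes, and every selector value in the table are hypothetical, and an unassigned code is simply represented by a missing entry.

```python
from dataclasses import dataclass
from typing import Dict, List, Optional


@dataclass(frozen=True)
class Route:
    """Selector parameters a translator might return for one office code (hypothetical)."""
    district_brush: int
    district_group: int
    office_brush: int
    office_group: int


# Invented translation table: office code -> route to that office's trunks.
# The codes and values are illustrative only, not taken from any real office.
TRANSLATOR: Dict[str, Route] = {
    "722": Route(district_brush=2, district_group=4, office_brush=1, office_group=7),
    "737": Route(district_brush=3, district_group=1, office_brush=0, office_group=2),
}


class Sender:
    def __init__(self, code_length: int = 3) -> None:
        self.code_length = code_length  # two- or three-digit office codes
        self.digits: List[str] = []
        self.route: Optional[Route] = None

    def receive_digit(self, digit: str) -> None:
        """Store one dialed digit; consult the translator once the office code is complete."""
        self.digits.append(digit)
        if len(self.digits) == self.code_length:
            office_code = "".join(self.digits)
            # None stands in for an unassigned office code in this sketch.
            self.route = TRANSLATOR.get(office_code)

    def station_number(self) -> str:
        """Digits after the office code, used later on the terminating side."""
        return "".join(self.digits[self.code_length:])


sender = Sender(code_length=3)
for d in "7225678":
    sender.receive_digit(d)
print(sender.route)
print(sender.station_number())  # 5678
```

The lookup happens as soon as the office code is stored, while the subscriber is still dialing the station number, which mirrors the ordering described above.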

On the district and office selectors themselves, idle outgoing trunks were chosen by the "sleeve test" method. After the sender directed the selector to the group of terminals corresponding to the outgoing trunks to the called office, the selector continued moving upward through the terminals, checking for one with an ungrounded sleeve lead, then selecting and grounding it. If all the trunks were busy, the selector hunted to the end of the group and sent back an "all circuits busy" tone. There was no provision for alternate routing, as there was in earlier manual systems and later, more sophisticated mechanical ones.
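
The sleeve test amounts to a linear upward hunt through one trunk group. In the following Python sketch, a list of booleans stands in for the sleeve leads (True meaning grounded, that is, busy); the function name and the trunk layout are hypothetical.

```python
from typing import List, Optional


def sleeve_test_hunt(sleeves: List[bool], group_start: int, group_size: int) -> Optional[int]:
    """Hunt upward through a trunk group for the first idle trunk.

    sleeves[i] is True when trunk i's sleeve lead is grounded (busy).
    Returns the index of the seized trunk, or None for "all circuits busy".
    """
    for i in range(group_start, group_start + group_size):
        if not sleeves[i]:     # ungrounded sleeve: the trunk is idle
            sleeves[i] = True  # ground the sleeve to mark the trunk busy
            return i
    return None  # hunted to the end of the group without finding an idle trunk


# Hypothetical frame: trunks 10-14 form the group toward the called office.
sleeves = [False] * 20
sleeves[10] = sleeves[11] = True  # the first two trunks are already in use
print(sleeve_test_hunt(sleeves, 10, 5))  # 12
print(sleeve_test_hunt(sleeves, 10, 5))  # 13
```

Because the hunt always starts at the bottom of the group, the lowest trunks carry the most traffic, and there is no fallback to another group once the hunt fails, matching the absence of alternate routing.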

Once the connection to the terminating office was established, the sender used the last four (or five) digits of the telephone number to reach the called party, converting them into specific positions on the remaining incoming and final frames. After the connection had been extended all the way to the final frame, the called party's line was tested for busy. If the line was not busy, the incoming selector circuit sent ringing voltage forward to the called party's line and waited for the called party to answer. When the called party answered, supervision signals were sent back through the sender to the district frame, which established a talking path between the two subscribers and charged the calling party for the call. At this point the sender was released and could be used for an entirely new call. If the called subscriber's line was busy, the final selector sent a busy signal back to the calling party to indicate that the called line was in use.
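
To illustrate how a station number can become positions on the terminating side, the following Python sketch assumes, purely for illustration, that the 10,000 numbers were laid out consecutively with 500 lines per final frame and 100 lines per bank, matching the frame dimensions given above. The names are hypothetical, the incoming-selector stage is not modeled, and actual office wiring may well have differed.

```python
from typing import NamedTuple

LINES_PER_FINAL_FRAME = 500  # assumed: 5 banks x 100 terminal sets per frame
LINES_PER_BANK = 100


class FinalPosition(NamedTuple):
    final_frame: int  # which final frame (0-19 for 10,000 lines)
    final_brush: int  # which of the five banks on that frame
    final_tens: int   # group of ten terminals within the bank
    final_units: int  # terminal within that group of ten


def locate_station(station: str) -> FinalPosition:
    """Map a four-digit station number to a hypothetical final-frame position."""
    if len(station) != 4 or not station.isdigit():
        raise ValueError("station number must be exactly four digits")
    n = int(station)
    frame, within_frame = divmod(n, LINES_PER_FINAL_FRAME)
    brush, within_bank = divmod(within_frame, LINES_PER_BANK)
    tens, units = divmod(within_bank, 10)
    return FinalPosition(frame, brush, tens, units)


print(locate_station("5678"))
# FinalPosition(final_frame=11, final_brush=1, final_tens=7, final_units=8)
```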

Telephone numbering

As in the Strowger system, each central office could address up to 10,000 numbered lines (0000 to 9999), requiring four digits for each subscriber station.

The panel system was designed to connect calls within a local metropolitan calling area. Each office was assigned a two- or three-digit office code, which indicated to the system the central office in which the desired party was located. Callers dialed the office code followed by the station number. In larger cities, such as New York City, dialing required a three-digit office code,[9] while less-populated cities, such as Seattle, Washington,[10] and Omaha, Nebraska, used a two-digit code. The remaining digits of the telephone number formed the station number, which pointed to the physical location of the subscriber's line on the final frame of the called office. For instance, a telephone number might be listed as PA2-5678, where PA2 (dialed as 722) is the office code and 5678 is the station number.
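
The letters in a listed number map to digits through the letter groups printed on the dial. The Python sketch below assumes the North American letter assignment of the period (2 ABC through 9 WXY, with no Q or Z); the function names are hypothetical.

```python
from typing import Dict, Tuple

# Letter groups on North American dials of the period (no Q or Z).
DIAL_LETTERS: Dict[str, str] = {
    "2": "ABC", "3": "DEF", "4": "GHI", "5": "JKL",
    "6": "MNO", "7": "PRS", "8": "TUV", "9": "WXY",
}
LETTER_TO_DIGIT: Dict[str, str] = {
    letter: digit for digit, letters in DIAL_LETTERS.items() for letter in letters
}


def dialed_digits(listed: str) -> str:
    """Convert a listed number such as 'PA2-5678' into the digits actually dialed."""
    digits = []
    for ch in listed.upper():
        if ch.isdigit():
            digits.append(ch)
        elif ch in LETTER_TO_DIGIT:
            digits.append(LETTER_TO_DIGIT[ch])
        elif ch.isalpha():
            raise ValueError(f"letter {ch!r} does not appear on the dial")
        # Hyphens and spaces are formatting only and are not dialed.
    return "".join(digits)


def split_number(listed: str, code_length: int = 3) -> Tuple[str, str]:
    """Split into (office code, station number) for a two- or three-digit code."""
    digits = dialed_digits(listed)
    return digits[:code_length], digits[code_length:]


print(split_number("PA2-5678"))  # ('722', '5678')
```

Passing `code_length=2` gives the split used in cities with two-digit office codes.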

In areas served by party lines, the system accepted an additional digit for party identification. This allowed the sender to direct the final selector not only to the correct terminal but also to ring the correct party on that line. The panel system supported individual, two-party, and four-party lines.

Circuit features

Similar to the divided-multiple telephone switchboard, the panel system was divided into an originating section and a terminating section. The subscriber's line had two appearances in a local office: one on the originating side and one on the terminating side. The line circuit consisted of a line relay on the originating side, to indicate that a customer had gone off-hook, and a cutoff relay, to keep the line relay from interfering with an established connection. The cutoff relay was controlled by a sleeve lead that, as with the multiple switchboard, could be activated by either the originating section or the terminating section. On the terminating end, the line circuit was connected to a final selector, which was used in call completion. Thus, when a call was completed to a subscriber, the final selector circuit connected to the desired line and then performed a sleeve (busy) test. If the line was not busy, the final selector operated the cutoff relay via the sleeve lead and proceeded to ring the called subscriber.

Supervision (line signaling) was provided by a District circuit, similar to the cord circuit that plugged into a line jack on a switchboard. The District circuit supervised the calling party, and when the calling party went on-hook, it removed the ground from the sleeve lead, releasing all selectors except the final selector; the released selectors returned to their starting positions, ready for further traffic. The final selector circuit was not supervised by the District circuit and returned to normal only once the called party hung up.[11] Some District frames were equipped with the more complex supervisory and timing circuits required to generate coin-collect and coin-return signals for handling calls from payphones.

Many of the urban and commercial areas where Panel was first used had message-rate service rather than flat-rate calling. For this reason, the line finder had a fourth wire, known as the "M" lead, which enabled the District circuit to send metering pulses to the subscriber's message register. The introduction of direct distance dialing (DDD) in the 1950s required the addition of automatic number identification equipment for centralized automatic message accounting.

Because the terminating section of the office was organized around the last four digits of the telephone number, it was limited to 10,000 numbers. In some of the urban areas where Panel was used, a single square mile might have three to five times that many telephone subscribers. Thus the incoming selectors of several separate switching entities shared floor space and staff, but required separate incoming trunk groups from distant offices. Sometimes an Office Selector Tandem was used to distribute incoming traffic among the offices. This was a Panel office with no senders or other common control equipment, consisting of just one stage of selectors that accepted only the Office Brush and Office Group parameters. Panel Sender Tandems were also used when their greater capabilities justified their additional cost.

Sender

While the Strowger (step-by-step) switch moved under the direct control of dial pulses from the telephone dial, the more sophisticated Panel switch had senders, which registered and stored the digits that the customer dialed and then translated them into the numbers needed to drive the selectors to their desired positions: District Brush, District Group, Office Brush, Office Group, Incoming Brush, Incoming Group, Final Brush, Final Tens, and Final Units.

Senders provided advantages over the earlier direct-control systems because they allowed the office code of the telephone number to be decoupled from the actual location on the switching fabric. Thus, an office code (for example, "722") had no direct relationship to the physical layout of the trunks on the district and office frames. Through translation, the trunks could be located arbitrarily on the frames, and the decoder or translator could direct the sender to their location as needed. Additionally, because the sender stored the telephone number dialed by the subscriber and then controlled the selectors itself, the subscriber's dial did not need a direct-control relationship with the selectors. This allowed the selectors to hunt at their own speed over large groups of terminals, with smooth, motor-driven motion rather than the staccato, momentary motion of the step-by-step system.

The sender also provided fault detection. Because it was responsible for driving the selectors to their destinations, it could detect errors (known as trouble) and alert central office staff by lighting a lamp at the appropriate panel. In addition to lighting the lamp, the sender held itself and the selectors under its control out of service, which prevented their use by other callers. Upon noting the alarm condition, staff could inspect the sender and its associated selectors and resolve the trouble before returning them to service.

When the sender's job was complete, it connected the talk path from the originating to the terminating side, and dropped out of the call. At this time, the sender was available to handle another subscriber's call. In this way, a comparatively small number of senders could handle a large amount of traffic, as each was only used for a short duration during call setup. This principle became known as common control, and was used in all subsequent switching systems.

Signaling and control

Revertive pulsing (RP) was the primary signaling method used within and between panel switches. Once seized by the sender or another selector, a selector began moving upward under motor power. Each terminal the selector passed sent a pulse of ground potential back along the circuit to the sender. The sender counted each pulse, and when the correct terminal was reached, it signaled the selector to disengage the upward drive clutch and stop on the terminal determined by the sender and decoder. The selector then either began its next selection operation or extended the circuit to the next selector frame. On the final frame, the last selection connected the call to the individual subscriber's line, which then began ringing.

As the selectors were driven upwards by the motors, brushes attached to the vertical selector rods wiped over commutators at the top of the frame. These commutators contained alternating segments serving as insulators or conductors. When the brush passed over a conductive segment, it was grounded, thereby generating a pulse which was sent back to the sender for counting. When the sender counted the appropriate number of pulses, it cut the power to the solenoid in the terminating office, and caused the brush to stop at its current position.
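
The interplay between the commutator and the sender's counting can be modeled in a few lines of Python. This is a sketch under simplifying assumptions: the commutator is a list of booleans (True for a conductive segment), each move onto a conductive segment produces one revertive pulse, and the sender stops the selector on the pulse whose count matches its target. The function names are hypothetical, and real timing, overshoot, and relay behavior are ignored.

```python
from typing import Iterator, List


def commutator_pulses(segments: List[bool]) -> Iterator[int]:
    """Yield the position of each revertive pulse as the brush wipes the commutator.

    segments[i] is True for a conductive (grounded) segment and False for an
    insulating one; a pulse is produced each time the brush moves onto a
    conductive segment from an insulating one.
    """
    previous = False
    for position, conductive in enumerate(segments):
        if conductive and not previous:
            yield position
        previous = conductive


def drive_selector(segments: List[bool], target_count: int) -> int:
    """Sender side: count pulses and stop the selector on the target count.

    Returns the commutator position where the drive clutch is released.
    """
    count = 0
    for position in commutator_pulses(segments):
        count += 1
        if count == target_count:
            return position  # sender signals the selector to stop here
    # Running off the end is treated as trouble in this sketch.
    raise RuntimeError("target not reached: selector overran the commutator")


# A commutator with alternating insulating and conductive segments.
commutator = [False, True] * 10
print(drive_selector(commutator, 3))  # 5
```

Raising an error when the target is never reached loosely corresponds to the sender detecting trouble and holding the equipment out of service.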

Calls from one panel office to another worked much like calls within an office, using revertive pulse signaling. The originating office used the same protocol, but inserted a compensating resistance during pulsing so that its sender encountered the same resistance on all trunks.[12] This contrasts with the more modern forward pulsing, in which the originating equipment directly outpulses to the terminating side the information it needs to connect the call.
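
The compensating resistance amounts to padding each trunk up to a common value, R_pad = R_design - R_trunk. The Python sketch below uses an invented design value and invented trunk resistances purely to show the arithmetic; they are not Bell System figures, and the function name is hypothetical.

```python
from typing import Dict

# Hypothetical value: the loop resistance every trunk should present to the
# originating sender during pulsing. Invented for this sketch.
DESIGN_OHMS = 1200.0


def compensating_resistance(trunk_ohms: float, design_ohms: float = DESIGN_OHMS) -> float:
    """Padding to insert during pulsing so that trunk + padding = design value."""
    if trunk_ohms > design_ohms:
        raise ValueError("trunk resistance exceeds the design value")
    return design_ohms - trunk_ohms


# Invented trunk resistances, in ohms, for three outgoing trunk groups.
trunks: Dict[str, float] = {"short": 150.0, "medium": 600.0, "long": 1100.0}
for name, ohms in trunks.items():
    pad = compensating_resistance(ohms)
    print(f"{name}: {ohms:.0f} + {pad:.0f} = {ohms + pad:.0f} ohms")
```

Whatever the trunk length, the sender then sees the same total, so its pulse-detecting circuits need only be designed for a single resistance.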

Compatibility

Later systems maintained compatibility with revertive pulsing, even as more advanced signaling methods were developed. The Number One Crossbar system, the first successor to the Panel system, also used this method of signaling exclusively until later upgrades introduced newer methods such as multi-frequency signaling.