The gas laws were developed between the late 17th and early 19th centuries, when scientists began to realize that relationships between the pressure, volume and temperature of a sample of gas could be obtained which would hold approximately for all gases.

Boyle's law

In 1662 Robert Boyle studied the relationship between the volume and pressure of a fixed amount of gas at constant temperature. He observed that the volume of a given mass of gas is inversely proportional to its pressure at constant temperature. Boyle's law, published in 1662, states that, at constant temperature, the product of the pressure and volume of a given mass of an ideal gas in a closed system is always constant. It can be verified experimentally using a pressure gauge and a variable-volume container. It can also be derived from the kinetic theory of gases: if a container with a fixed number of molecules inside is reduced in volume, more molecules strike a given area of the container walls per unit time, causing a greater pressure.

A statement of Boyle's law is as follows:

- The volume of a given mass of a gas is inversely related to pressure when the temperature is constant.

The concept can be represented with these formulae:

- $V \propto \frac{1}{P}$, meaning "Volume is inversely proportional to Pressure", or

- $P \propto \frac{1}{V}$, meaning "Pressure is inversely proportional to Volume", or

- $PV = k_1$, or

- where P is the pressure, and V is the volume of a gas, and k1 is the constant in this equation (and is not the same as the proportionality constants in the other equations in this article).
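Because $PV = k_1$ holds at constant temperature, any two states of the same sample satisfy $P_1V_1 = P_2V_2$. The short Python sketch below solves that relation for the new pressure; the function name `boyle_pressure` and the example numbers are illustrative assumptions, and any consistent set of units works.

```python
def boyle_pressure(p1: float, v1: float, v2: float) -> float:
    """Return the pressure after an isothermal change from volume v1 to v2.

    Boyle's law for a fixed amount of gas at constant temperature:
    p1 * v1 == p2 * v2 == k1. Units only need to be consistent.
    """
    if v1 <= 0 or v2 <= 0:
        raise ValueError("volumes must be positive")
    k1 = p1 * v1  # the Boyle constant for this particular sample
    return k1 / v2


# Halving the volume of 2.0 L of gas at 100 kPa doubles the pressure.
p2 = boyle_pressure(p1=100.0, v1=2.0, v2=1.0)
print(f"p2 = {p2:.1f} kPa")  # p2 = 200.0 kPa
```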

Charles's law

Charles's law, or the law of volumes, was found in 1787 by Jacques Charles. It states that, for a given mass of an ideal gas at constant pressure, the volume is directly proportional to its absolute temperature, assuming a closed system.

The statement of Charles's law is as follows: the volume (V) of a given mass of a gas, at constant pressure (P), is directly proportional to its temperature (T). As a mathematical equation, Charles's law is written as either:

- $V \propto T$, or

- $\frac{V}{T} = k_2$, or

- $V = k_2 T$,

where "V" is the volume of a gas, "T" is the absolute temperature and k2 is a proportionality constant (which is not the same as the proportionality constants in the other equations in this article).
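Charles's law only holds with absolute temperature, which is an easy mistake to make with Celsius readings. The minimal Python sketch below (function names are illustrative assumptions) converts to kelvin first and then applies $V_1/T_1 = V_2/T_2$ for a fixed amount of gas at constant pressure.

```python
def to_kelvin(t_celsius: float) -> float:
    """Convert a Celsius temperature to the absolute (Kelvin) scale."""
    return t_celsius + 273.15


def charles_volume(v1: float, t1_kelvin: float, t2_kelvin: float) -> float:
    """Volume after heating or cooling at constant pressure: V1/T1 == V2/T2."""
    if t1_kelvin <= 0 or t2_kelvin <= 0:
        raise ValueError("absolute temperatures must be positive")
    k2 = v1 / t1_kelvin  # proportionality constant V/T for this sample
    return k2 * t2_kelvin


# 1.0 L of gas heated from 25 °C to 100 °C at constant pressure.
v2 = charles_volume(1.0, to_kelvin(25.0), to_kelvin(100.0))
print(f"V2 = {v2:.3f} L")  # about 1.252 L, not the 4 L a naive Celsius ratio suggests
```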

Gay-Lussac's law

Gay-Lussac's law, Amontons' law or the pressure law was found by Joseph Louis Gay-Lussac in 1808. It states that, for a given mass and constant volume of an ideal gas, the pressure exerted on the sides of its container is directly proportional to its absolute temperature.

As a mathematical equation, Gay-Lussac's law is written as either:

- $P \propto T$, or

- $\frac{P}{T} = k_3$, or

- $P = k_3 T$,

- where P is the pressure, T is the absolute temperature, and k3 is another proportionality constant.

Avogadro's law

Avogadro's law (hypothesized in 1811) states that the volume occupied by an ideal gas is directly proportional to the number of molecules of the gas present in the container. This gives rise to the molar volume of a gas, which at STP (273.15 K, 1 atm) is about 22.4 L. The relation is given by

- $V \propto n$, or $\frac{V}{n} = k_4$, where n is the number of molecules of gas (or the number of moles of gas) and k4 is a proportionality constant.
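The constant $V/n$ in Avogadro's law can be estimated from the ideal gas law given later in this section, $V/n = RT/P$. The Python sketch below assumes the SI value R = 8.314462618 J/(mol·K) and 1 atm = 101 325 Pa, and reproduces the molar volume of about 22.4 L quoted above; the helper name is an illustrative assumption.

```python
R = 8.314462618   # J/(mol·K), molar gas constant (SI value)
ATM = 101_325.0   # Pa, one standard atmosphere


def molar_volume_litres(t_kelvin: float, p_pascal: float) -> float:
    """Ideal-gas molar volume V/n = RT/P, returned in litres per mole."""
    v_m3 = R * t_kelvin / p_pascal  # cubic metres per mole
    return v_m3 * 1000.0            # 1 m^3 = 1000 L


vm_stp = molar_volume_litres(273.15, ATM)
print(f"Molar volume at 273.15 K, 1 atm: {vm_stp:.2f} L/mol")  # about 22.41 L/mol

# Avogadro's law: at fixed T and P, doubling n doubles V.
print(f"2 mol occupy {2 * vm_stp:.2f} L")
```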

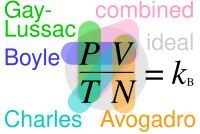

Combined and ideal gas laws

The combined gas law, or general gas equation, is obtained by combining Boyle's law, Charles's law, and Gay-Lussac's law. It shows the relationship between the pressure, volume, and temperature for a fixed mass (quantity) of gas:

$\frac{PV}{T} = k_5$, where k5 is a constant

This can also be written as:

$\frac{P_1 V_1}{T_1} = \frac{P_2 V_2}{T_2}$

With the addition of Avogadro's law, the combined gas law develops into the ideal gas law:

$PV = nRT$

- where

- P is pressure

- V is volume

- n is the number of moles

- R is the universal gas constant

- T is temperature (K)

- where the proportionality constant, now named R, is the universal gas constant with a value of 8.3144598 (kPa·L)/(mol·K). An equivalent formulation of this law is:

$PV = NkT$

- where

- P is the pressure

- V is the volume

- N is the number of gas molecules

- k is the Boltzmann constant (1.381 × 10⁻²³ J·K⁻¹ in SI units)

- T is the temperature (K)
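The two formulations $PV = nRT$ and $PV = NkT$ describe the same sample, because $R = N_A k$. The Python sketch below checks this numerically; it uses the value of R quoted above and, as an added assumption, the exact 2019 SI values of k and of the Avogadro constant $N_A$ (which is not named in the text). The helper names are illustrative.

```python
R_KPA_L = 8.3144598   # (kPa·L)/(mol·K), the value quoted in the text
K_B = 1.380649e-23    # J/K, Boltzmann constant (exact in the 2019 SI)
N_A = 6.02214076e23   # 1/mol, Avogadro constant (exact in the 2019 SI)


def moles_from_pvt(p_kpa: float, v_litres: float, t_kelvin: float) -> float:
    """n = PV / (RT) with P in kPa and V in litres."""
    return p_kpa * v_litres / (R_KPA_L * t_kelvin)


def molecules_from_pvt(p_pa: float, v_m3: float, t_kelvin: float) -> float:
    """N = PV / (kT) with SI units (Pa, m^3, K)."""
    return p_pa * v_m3 / (K_B * t_kelvin)


# 10 L of gas at 200 kPa and 300 K, evaluated with both forms of the law.
n = moles_from_pvt(200.0, 10.0, 300.0)
N = molecules_from_pvt(200e3, 10.0e-3, 300.0)
print(f"n = {n:.4f} mol, N = {N:.4e} molecules")
print(f"N / (n * N_A) = {N / (n * N_A):.6f}")  # close to 1 because R = N_A * k
```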

These equations are exact only for an ideal gas, which neglects various intermolecular effects (see real gas). However, the ideal gas law is a good approximation for most gases under moderate pressure and temperature.

This law has the following important consequences:

- If temperature and pressure are kept constant, then the volume of the gas is directly proportional to the number of molecules of gas.

- If the temperature and volume remain constant, then the pressure of the gas is directly proportional to the number of molecules of gas present.

- If the number of gas molecules and the temperature remain constant, then the pressure is inversely proportional to the volume.

- If the temperature changes and the number of gas molecules is kept constant, then pressure, volume, or both will change so that the product PV remains directly proportional to the temperature.
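The consequences above all follow from $P_1V_1/T_1 = P_2V_2/T_2$ for a fixed amount of gas. The Python sketch below solves that relation for the final volume; the function name and the balloon scenario are illustrative assumptions, with temperatures in kelvin and pressures in any consistent unit.

```python
def combined_gas_v2(p1: float, v1: float, t1: float, p2: float, t2: float) -> float:
    """Solve P1*V1/T1 == P2*V2/T2 for V2 (fixed amount of gas, T in kelvin)."""
    if min(t1, t2, p2) <= 0:
        raise ValueError("T1, T2 and P2 must be positive")
    return p1 * v1 * t2 / (t1 * p2)


# A 5.0 L balloon at 101.325 kPa and 20 °C is taken to 50 kPa and -20 °C.
v2 = combined_gas_v2(101.325, 5.0, 293.15, 50.0, 253.15)
print(f"V2 = {v2:.2f} L")  # about 8.75 L

# Consequence check: at constant pressure, doubling T doubles V.
assert abs(combined_gas_v2(100.0, 1.0, 300.0, 100.0, 600.0) - 2.0) < 1e-12
```

Lower pressure expands the balloon while lower temperature shrinks it; the combined law weighs both effects at once.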

Other gas laws

- Graham's law

- states that the rate at which gas molecules diffuse is inversely proportional to the square root of the gas density at constant temperature. Combined with Avogadro's law (i.e. since equal volumes have equal numbers of molecules), this is the same as being inversely proportional to the square root of the molecular weight.

- Dalton's law of partial pressures

- states that the pressure of a mixture of gases is simply the sum of the partial pressures of the individual components. Dalton's law is as follows:

$P_\text{total} = P_1 + P_2 + P_3 + \cdots + P_n = \sum_{i=1}^{n} P_i$

- and all component gases and the mixture are at the same temperature and volume

- where $P_\text{total}$ is the total pressure of the gas mixture

- $P_i$ is the partial pressure, or pressure of the component gas at the given volume and temperature.

- Amagat's law of partial volumes

- states that the volume of a mixture of gases (or the volume of the container) is simply the sum of the partial volumes of the individual components. Amagat's law is as follows:

$V_\text{total} = V_1 + V_2 + V_3 + \cdots + V_n = \sum_{i=1}^{n} V_i$

- and all component gases and the mixture are at the same temperature and pressure

- where $V_\text{total}$ is the total volume of the gas mixture, or the volume of the container,

- $V_i$ is the partial volume, or volume of the component gas at the given pressure and temperature.

- Henry's law

- states that, at constant temperature, the amount of a given gas dissolved in a given type and volume of liquid is directly proportional to the partial pressure of that gas in equilibrium with that liquid.

- Real gas law

- formulated by Johannes Diderik van der Waals (1873).
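Graham's and Dalton's laws above can both be checked with a few lines of arithmetic. The Python sketch below is illustrative only: it computes the relative diffusion rate of two gases as $r_1/r_2 = \sqrt{M_2/M_1}$, and it obtains each partial pressure of an ideal mixture from $P_i = n_iRT/V$ (an assumption that each component behaves as an ideal gas) before summing them as Dalton's law states. The function names, molar masses and the roughly air-like amounts are assumptions chosen for the example, not measured data.

```python
import math

R = 8.314462618  # J/(mol·K), molar gas constant


def graham_rate_ratio(molar_mass_1: float, molar_mass_2: float) -> float:
    """Relative rate r1/r2 = sqrt(M2/M1) for two gases at the same T and P."""
    return math.sqrt(molar_mass_2 / molar_mass_1)


def partial_pressures(moles: dict, v_m3: float, t_kelvin: float) -> dict:
    """Pressure each component would exert alone in the same V and T (ideal gas)."""
    return {gas: n * R * t_kelvin / v_m3 for gas, n in moles.items()}


# Graham's law: hydrogen (2.016 g/mol) versus oxygen (31.998 g/mol).
ratio = graham_rate_ratio(2.016, 31.998)
print(f"H2 diffuses about {ratio:.2f} times as fast as O2")  # about 3.98

# Dalton's law with roughly air-like amounts (illustrative values).
mix = {"N2": 0.78, "O2": 0.21, "Ar": 0.01}  # mol
V, T = 0.024, 293.15                         # m^3, K
p_i = partial_pressures(mix, V, T)
p_total = sum(p_i.values())                  # P_total = sum of the P_i
print({gas: round(p) for gas, p in p_i.items()}, f"total: {p_total:.0f} Pa")
```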


https://en.wikipedia.org/wiki/Gas_laws

In chemistry and thermodynamics, the Van der Waals equation (or Van der Waals equation of state; named after Dutch physicist Johannes Diderik van der Waals) is an equation of state that generalizes the ideal gas law to account for plausible reasons why real gases do not act ideally. The ideal gas law treats gas molecules as point particles that interact with their containers but not with each other, meaning they neither take up space nor change kinetic energy during collisions (i.e. all collisions are perfectly elastic).[1] The ideal gas law states that n moles of any gas occupying a volume V at temperature T (in kelvins) exert a pressure P given by the following relationship, where R is the gas constant:

$PV = nRT$

To account for the volume that real gas molecules take up, the Van der Waals equation replaces V in the ideal gas law, written per mole as $PV_m = RT$, with $(V_m - b)$, where Vm is the molar volume of the gas and b is the volume that is occupied by one mole of the molecules. This leads to:[1]

$P(V_m - b) = RT$

The second modification made to the ideal gas law accounts for the fact that gas molecules do in fact interact with each other (they usually experience attraction at low pressures and repulsion at high pressures) and that real gases therefore show different compressibility than ideal gases. Van der Waals provided for intermolecular interaction by adding to the observed pressure P in the equation of state a term $\frac{a}{V_m^2}$, where a is a constant whose value depends on the gas. The Van der Waals equation is therefore written as:[1]

$\left(P + \frac{a}{V_m^2}\right)(V_m - b) = RT$

and, for n moles of gas, can also be written as the equation below:

$\left(P + \frac{a n^2}{V^2}\right)(V - nb) = nRT$

where Vm is the molar volume of the gas, R is the universal gas constant, T is temperature, P is pressure, and V is volume. When the molar volume Vm is large, b becomes negligible in comparison with Vm, a/Vm² becomes negligible with respect to P, and the Van der Waals equation reduces to the ideal gas law, PVm = RT.[1]
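The limit described in the last sentence can be seen numerically by evaluating both equations at growing molar volume. The Python sketch below assumes commonly tabulated van der Waals constants for CO₂ (a ≈ 0.3640 Pa·m⁶/mol², b ≈ 4.267 × 10⁻⁵ m³/mol); these inputs and the helper names are assumptions, not values from the text.

```python
R = 8.314462618  # J/(mol·K)


def p_ideal(v_m: float, t: float) -> float:
    """Ideal gas law per mole: P = RT / Vm."""
    return R * t / v_m


def p_vdw(v_m: float, t: float, a: float, b: float) -> float:
    """Van der Waals equation solved for P: P = RT/(Vm - b) - a/Vm^2."""
    if v_m <= b:
        raise ValueError("molar volume must exceed the excluded volume b")
    return R * t / (v_m - b) - a / v_m**2


A_CO2 = 0.3640    # Pa·m^6/mol^2 (assumed tabulated value for CO2)
B_CO2 = 4.267e-5  # m^3/mol      (assumed tabulated value for CO2)

for v_m in (1e-3, 1e-2, 1e-1):  # m^3/mol, i.e. 1 L/mol up to 100 L/mol
    ratio = p_vdw(v_m, 300.0, A_CO2, B_CO2) / p_ideal(v_m, 300.0)
    print(f"Vm = {v_m:g} m^3/mol: P_vdW / P_ideal = {ratio:.4f}")
```

At 1 L/mol the attraction term dominates and the van der Waals pressure is roughly 10 % below the ideal value; at 100 L/mol the two agree to about 0.1 %.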

The equation can be obtained via its traditional derivation (a mechanical equation of state) or via a derivation based on statistical thermodynamics; the latter provides the partition function of the system and allows thermodynamic functions to be specified. It successfully approximates the behavior of real fluids above their critical temperatures and is qualitatively reasonable for their liquid and low-pressure gaseous states at low temperatures. However, near the phase transitions between gas and liquid, in the range of p, V, and T where the liquid phase and the gas phase are in equilibrium, the Van der Waals equation fails to accurately model observed experimental behaviour, in particular that p is a constant function of V at given temperatures. As such, the Van der Waals model is not useful for calculations intended to predict real behavior in regions near the critical point. Corrections to address these predictive deficiencies have since been made, such as the equal area rule or the principle of corresponding states.

https://en.wikipedia.org/wiki/Van_der_Waals_equation

According to van der Waals, the theorem of corresponding states (or principle/law of corresponding states) indicates that all fluids, when compared at the same reduced temperature and reduced pressure, have approximately the same compressibility factor and all deviate from ideal gas behavior to about the same degree.[1][2]

Material constants that vary from one material to another are eliminated in the recast, reduced form of a constitutive equation. The reduced variables are defined in terms of the critical variables, for example $T_r = T/T_c$, $p_r = p/p_c$ and $V_r = V_m/V_c$.

The principle originated with the work of Johannes Diderik van der Waals in about 1873[3] when he used the critical temperature and critical pressure to characterize a fluid.

The most prominent example is the van der Waals equation of state, the reduced form of which applies to all fluids.

Compressibility factor at the critical point

The compressibility factor at the critical point, which is defined as $Z_c = \frac{p_c v_c M}{R T_c}$, where the subscript c indicates the critical point, is predicted to be a constant independent of substance by many equations of state; the Van der Waals equation, for example, predicts a value of $Z_c = 3/8 = 0.375$.

Where:

- $T_c$: critical temperature [K]

- $p_c$: critical pressure [Pa]

- $v_c$: critical specific volume [m³⋅kg⁻¹]

- $R$: gas constant (8.314 J⋅K⁻¹⋅mol⁻¹)

- $M$: molar mass [kg⋅mol⁻¹]

For example:

| Substance | $p_c$ [Pa] | $T_c$ [K] | $v_c$ [m³/kg] | $Z_c$ |
|---|---|---|---|---|
| H₂O | 21.817×10⁶ | 647.3 | 3.154×10⁻³ | 0.23[4] |
| ⁴He | 0.226×10⁶ | 5.2 | 14.43×10⁻³ | 0.31[4] |
| He | 0.226×10⁶ | 5.2 | 14.43×10⁻³ | 0.30[5] |
| H₂ | 1.279×10⁶ | 33.2 | 32.3×10⁻³ | 0.30[5] |
| Ne | 2.73×10⁶ | 44.5 | 2.066×10⁻³ | 0.29[5] |
| N₂ | 3.354×10⁶ | 126.2 | 3.2154×10⁻³ | 0.29[5] |
| Ar | 4.861×10⁶ | 150.7 | 1.883×10⁻³ | 0.29[5] |
| Xe | 5.87×10⁶ | 289.7 | 0.9049×10⁻³ | 0.29 |
| O₂ | 5.014×10⁶ | 154.8 | 2.33×10⁻³ | 0.291 |
| CO₂ | 7.290×10⁶ | 304.2 | 2.17×10⁻³ | 0.275 |
| SO₂ | 7.88×10⁶ | 430.0 | 1.900×10⁻³ | 0.275 |
| CH₄ | 4.58×10⁶ | 190.7 | 6.17×10⁻³ | 0.285 |
| C₃H₈ | 4.21×10⁶ | 370.0 | 4.425×10⁻³ | 0.267 |
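The $Z_c$ column can be recomputed from the other columns with $Z_c = p_c v_c M/(RT_c)$, since $v_c M$ is the critical molar volume. The Python sketch below does this for four rows; the molar masses are standard values added as an assumption, because the table does not list them.

```python
R = 8.314  # J/(K·mol), as in the list above

# (p_c [Pa], T_c [K], v_c [m^3/kg], tabulated Z_c) copied from the table;
# molar masses M [kg/mol] are standard values added for this example.
DATA = {
    "N2":  (3.354e6, 126.2, 3.2154e-3, 0.29,  0.0280134),
    "Ar":  (4.861e6, 150.7, 1.883e-3,  0.29,  0.039948),
    "CO2": (7.290e6, 304.2, 2.17e-3,   0.275, 0.0440095),
    "CH4": (4.58e6,  190.7, 6.17e-3,   0.285, 0.016043),
}


def critical_z(p_c: float, t_c: float, v_c: float, molar_mass: float) -> float:
    """Z_c = p_c * v_c * M / (R * T_c); v_c * M is the critical molar volume."""
    return p_c * v_c * molar_mass / (R * t_c)


for name, (p_c, t_c, v_c, z_tab, m) in DATA.items():
    print(f"{name:4s} Z_c = {critical_z(p_c, t_c, v_c, m):.3f} (table: {z_tab})")

print(f"van der Waals prediction: {3 / 8}")  # 0.375, well above the measured values
```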

See also

- Van der Waals equation

- Equation of state

- Compressibility factors

- Johannes Diderik van der Waals equation

- Noro-Frenkel law of corresponding states

https://en.wikipedia.org/wiki/Theorem_of_corresponding_states

In physics and thermodynamics, an equation of state is a thermodynamic equation relating state variables which describe the state of matter under a given set of physical conditions, such as pressure, volume, temperature (PVT), or internal energy.[1] Equations of state are useful in describing the properties of fluids, mixtures of fluids, solids, and the interior of stars.

https://en.wikipedia.org/wiki/Equation_of_state

In thermodynamics, the compressibility factor (Z), also known as the compression factor or the gas deviation factor, is a correction factor which describes the deviation of a real gas from ideal gas behaviour. It is simply defined as the ratio of the molar volume of a gas to the molar volume of an ideal gas at the same temperature and pressure. It is a useful thermodynamic property for modifying the ideal gas law to account for real gas behaviour.[1] In general, deviation from ideal behaviour becomes more significant the closer a gas is to a phase change, the lower the temperature or the larger the pressure. Compressibility factor values are usually obtained by calculation from equations of state (EOS), such as the virial equation, which take compound-specific empirical constants as input. For a gas that is a mixture of two or more pure gases (air or natural gas, for example), the gas composition must be known before compressibility can be calculated.

Alternatively, the compressibility factor for specific gases can be read from generalized compressibility charts[1] that plot Z as a function of pressure at constant temperature.

The compressibility factor should not be confused with the compressibility (also known as coefficient of compressibility or isothermal compressibility) of a material, which is the measure of the relative volume change of a fluid or solid in response to a pressure change.

https://en.wikipedia.org/wiki/Compressibility_factor


Overview

At present, there is no single equation of state that accurately predicts the properties of all substances under all conditions. An example of an equation of state that correlates densities of gases and liquids to temperatures and pressures is the ideal gas law, which is roughly accurate for weakly polar gases at low pressures and moderate temperatures. This equation becomes increasingly inaccurate at higher pressures and lower temperatures, and fails to predict condensation from a gas to a liquid.

Another common use is in modeling the interior of stars, including neutron stars, dense matter (quark–gluon plasmas) and radiation fields. A related concept is the perfect fluid equation of state used in cosmology.

Equations of state can also describe solids, including the transition of solids from one crystalline state to another.

In a practical context, equations of state are instrumental for PVT calculations in process engineering problems, such as petroleum gas/liquid equilibrium calculations. A successful PVT model based on a fitted equation of state can be helpful to determine the state of the flow regime, the parameters for handling the reservoir fluids, and pipe sizing.

Measurements of equation-of-state parameters, especially at high pressures, can be made using lasers.[2][3][4]

Historical

Boyle's law (1662)

Boyle's Law was perhaps the first expression of an equation of state.[citation needed] In 1662, the Irish physicist and chemist Robert Boyle performed a series of experiments employing a J-shaped glass tube, which was sealed on one end. Mercury was added to the tube, trapping a fixed quantity of air in the short, sealed end of the tube. Then the volume of gas was measured as additional mercury was added to the tube. The pressure of the gas could be determined by the difference between the mercury level in the short end of the tube and that in the long, open end. Through these experiments, Boyle noted that the gas volume varied inversely with the pressure. In mathematical form, this can be stated as:

$pV = \text{constant}$

The above relationship has also been attributed to Edme Mariotte and is sometimes referred to as Mariotte's law. However, Mariotte's work was not published until 1676.

Charles's law or Law of Charles and Gay-Lussac (1787)

In 1787 the French physicist Jacques Charles found that oxygen, nitrogen, hydrogen, carbon dioxide, and air expand to roughly the same extent over the same 80-kelvin interval. Later, in 1802, Joseph Louis Gay-Lussac published results of similar experiments, indicating a linear relationship between volume and temperature (Charles's Law):

$\frac{V_1}{T_1} = \frac{V_2}{T_2}$

Dalton's law of partial pressures (1801)

Dalton's Law of partial pressure states that the pressure of a mixture of gases is equal to the sum of the pressures of all of the constituent gases alone.

Mathematically, this can be represented for n species as:

$p_\text{total} = p_1 + p_2 + \cdots + p_n = \sum_{i=1}^{n} p_i$

The ideal gas law (1834)

In 1834, Émile Clapeyron combined Boyle's Law and Charles' law into the first statement of the ideal gas law. Initially, the law was formulated as $pV_m = R(T_C + 267)$ (with temperature expressed in degrees Celsius), where R is the gas constant. However, later work revealed that the number should actually be closer to 273.2, and then the Celsius scale was defined with 0 °C = 273.15 K, giving:

$pV_m = R(T_C + 273.15)$

Van der Waals equation of state (1873)

In 1873, J. D. van der Waals introduced the first equation of state derived from the assumption of a finite volume occupied by the constituent molecules.[5] His new formula revolutionized the study of equations of state, and was most famously continued via the Redlich–Kwong equation of state[6] and the Soave modification of Redlich-Kwong.[7]

General form of an equation of state

Thermodynamic systems are specified by an equation of state that constrains the values that the state variables may assume. For a given amount of substance contained in a system, the temperature, volume, and pressure are not independent quantities; they are connected by a relationship of the general form

$f(p, V, T) = 0$

An equation used to model this relationship is called an equation of state. In the following sections major equations of state are described, and the variables used here are defined as follows. Any consistent set of units may be used, although SI units are preferred. Absolute temperature refers to the use of the Kelvin (K) or Rankine (°R) temperature scales, with zero being absolute zero.

- $p$, pressure (absolute)

- $V$, volume

- $n$, number of moles of a substance

- $V_m$, $V_m = \frac{V}{n}$, molar volume, the volume of 1 mole of gas or liquid

- $T$, absolute temperature

- $R$, ideal gas constant ≈ 8.3144621 J/mol·K

- $p_c$, pressure at the critical point

- $V_c$, molar volume at the critical point

- $T_c$, absolute temperature at the critical point

Classical ideal gas law

The classical ideal gas law may be written

$pV = nRT$

In the form shown above, the equation of state is thus

$f(p, V, T) = pV - nRT = 0$

If the calorically perfect gas approximation is used, then the ideal gas law may also be expressed as follows

$p = \rho(\gamma - 1)e$

where $\rho$ is the density, $\gamma = C_p/C_v$ is the (constant) adiabatic index (ratio of specific heats), $e = C_v T$ is the internal energy per unit mass (the "specific internal energy"), $C_v$ is the constant specific heat at constant volume, and $C_p$ is the constant specific heat at constant pressure.
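For a calorically perfect gas, $p = \rho(\gamma - 1)e$ with $e = C_vT$ reduces to $p = \rho(C_p - C_v)T$, which is the ideal gas law per unit mass if one assumes Mayer's relation $R_\text{specific} = C_p - C_v$ (not stated in the text). The Python sketch below checks that the two forms agree, using approximate room-temperature specific heats of air as assumed inputs.

```python
# Approximate specific heats of air near room temperature (assumed values).
CP_AIR = 1005.0  # J/(kg·K), specific heat at constant pressure
CV_AIR = 718.0   # J/(kg·K), specific heat at constant volume


def pressure_calorically_perfect(rho: float, e: float, gamma: float) -> float:
    """p = rho * (gamma - 1) * e, with e the specific internal energy."""
    return rho * (gamma - 1.0) * e


gamma = CP_AIR / CV_AIR  # adiabatic index, about 1.40
rho, T = 1.2, 293.15     # kg/m^3, K
e = CV_AIR * T           # e = C_v T for a calorically perfect gas

p_energy_form = pressure_calorically_perfect(rho, e, gamma)
p_gas_law = rho * (CP_AIR - CV_AIR) * T  # p = rho * R_specific * T, with R_specific = C_p - C_v
print(f"{p_energy_form:.1f} Pa vs {p_gas_law:.1f} Pa")
```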

Quantum ideal gas law

Since the classical ideal gas law is well suited to atomic and molecular gases in most cases, we now describe the equation of state for elementary particles with mass $m$ and spin $s$ that takes quantum effects into account. In the following, the upper sign always corresponds to Fermi–Dirac statistics and the lower sign to Bose–Einstein statistics. The equation of state of such gases with $N$ particles occupying a volume $V$ with temperature $T$ and pressure $p$ is given by[8]

$p = \frac{(2s+1)\sqrt{2m^3k_B^5T^5}}{3\pi^2\hbar^3}\int_0^\infty \frac{z^{3/2}\,dz}{e^{z - \mu/(k_BT)} \pm 1}$

where $k_B$ is the Boltzmann constant and the chemical potential $\mu(T, N/V)$ is given by the following implicit function

$\frac{N}{V} = \frac{(2s+1)(mk_BT)^{3/2}}{\sqrt{2}\,\pi^2\hbar^3}\int_0^\infty \frac{z^{1/2}\,dz}{e^{z - \mu/(k_BT)} \pm 1}$

In the limiting case where $e^{\mu/(k_BT)} \ll 1$, this equation of state reduces to that of the classical ideal gas. It can be shown that, in this limit, the above equation of state reduces to

$pV = Nk_BT\left[1 \pm \frac{\pi^{3/2}}{2(2s+1)}\frac{N\hbar^3}{V(mk_BT)^{3/2}} + \cdots\right]$

With a fixed number density $N/V$, decreasing the temperature causes, in a Fermi gas, an increase in pressure from its classical value, implying an effective repulsion between particles (an apparent repulsion due to quantum exchange effects, not to actual interactions between particles, since interaction forces are neglected in an ideal gas), and, in a Bose gas, a decrease in pressure from its classical value, implying an effective attraction.

Cubic equations of state

Cubic equations of state are called such because they can be rewritten as a cubic function of $V_m$.

Van der Waals equation of state

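The Van der Waals equation of state may be written:

$p = \frac{RT}{V_m - b} - \frac{a}{V_m^2}$

where $V_m$ is molar volume. The substance-specific constants $a$ and $b$ can be calculated from the critical properties $p_c$, $T_c$, and $V_c$ (noting that $V_c$ is the molar volume at the critical point) as:

$a = \frac{27 (RT_c)^2}{64 p_c}$

$b = \frac{RT_c}{8 p_c}$

Also written as

$a = 3 p_c V_c^2$

$b = \frac{V_c}{3}$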
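The two ways of writing $a$ and $b$ are consistent only if the van der Waals critical volume is $V_c = 3b = 3RT_c/(8p_c)$, which also fixes $Z_c = p_cV_c/(RT_c) = 3/8$. The Python sketch below checks this for the CO₂ critical point listed in the table above; note that $V_c$ here is the van der Waals value, not the measured critical volume, and the helper name is an illustrative assumption.

```python
R = 8.3144621  # J/(mol·K), value listed above


def vdw_constants(t_c: float, p_c: float) -> tuple:
    """a = 27 (R T_c)^2 / (64 p_c), b = R T_c / (8 p_c), in SI units."""
    a = 27.0 * (R * t_c) ** 2 / (64.0 * p_c)
    b = R * t_c / (8.0 * p_c)
    return a, b


t_c, p_c = 304.2, 7.290e6  # CO2 critical point from the table above
a, b = vdw_constants(t_c, p_c)
v_c = 3.0 * b              # from b = V_c / 3 (van der Waals critical volume)

print(f"a = {a:.4f} Pa·m^6/mol^2, b = {b:.3e} m^3/mol")
print(f"3 p_c V_c^2 = {3 * p_c * v_c**2:.4f}")                   # equals a
print(f"Z_c = p_c V_c / (R T_c) = {p_c * v_c / (R * t_c):.3f}")  # 0.375
```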

Proposed in 1873, the van der Waals equation of state was one of the first to perform markedly better than the ideal gas law. In this landmark equation, $a$ is called the attraction parameter and $b$ the repulsion parameter or the effective molecular volume. While the equation is definitely superior to the ideal gas law and does predict the formation of a liquid phase, the agreement with experimental data is limited for conditions where the liquid forms. While the van der Waals equation is commonly referenced in textbooks and papers for historical reasons, it is now obsolete. Other modern equations of only slightly greater complexity are much more accurate.

The van der Waals equation may be considered as the ideal gas law, "improved" for two independent reasons:

- Molecules are thought of as particles with volume, not material points. Thus $V_m$ cannot be too small, less than some constant $b$. So we get $(V_m - b)$ instead of $V_m$.

- While ideal gas molecules do not interact, we consider molecules attracting others within a distance of several molecules' radii. This has no effect inside the material, but surface molecules are attracted into the material from the surface. We see this as a diminishing of pressure on the outer shell (which is used in the ideal gas law), so we write $(p + \text{something})$ instead of $p$. To evaluate this ‘something’, let's examine an additional force acting on an element of gas surface. While the force acting on each surface molecule is ~$\rho$, the force acting on the whole element is ~$\rho^2$ ~ $\frac{1}{V_m^2}$.

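With the reduced state variables, i.e. $V_r = V_m/V_c$, $p_r = p/p_c$ and $T_r = T/T_c$, the reduced form of the Van der Waals equation can be formulated:

$\left(p_r + \frac{3}{V_r^2}\right)(3V_r - 1) = 8T_r$

The benefit of this form is that for given $T_r$ and $p_r$, the reduced volume of the liquid and gas can be calculated directly using Cardano's method for the reduced cubic form:

$V_r^3 - \frac{1}{3}\left(1 + \frac{8T_r}{p_r}\right)V_r^2 + \frac{3}{p_r}V_r - \frac{1}{p_r} = 0$

For $p_r < 1$ and $T_r < 1$, the system is in a state of vapor–liquid equilibrium. In that case the reduced cubic equation of state yields three solutions; the largest and the smallest are the reduced volumes of the gas and the liquid, respectively.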
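The reduced cubic can be solved in closed form. The Python sketch below depresses the cubic with $x = t - a/3$ and then uses Cardano's formula when there is one real root, switching to the trigonometric (Viète) form when there are three, since Cardano's radicals become complex in that case. The function names are illustrative assumptions; only roots with $V_r > 1/3$ (i.e. $V_m > b$) are kept as physical.

```python
import math


def real_cubic_roots(a: float, b: float, c: float) -> list:
    """Real roots of x^3 + a x^2 + b x + c = 0, sorted ascending.

    Substitutes x = t - a/3 to get t^3 + p t + q = 0, then applies
    Cardano's formula (one real root) or its trigonometric form (three).
    """
    shift = a / 3.0
    p = b - a * a / 3.0
    q = 2.0 * a**3 / 27.0 - a * b / 3.0 + c
    disc = (q / 2.0) ** 2 + (p / 3.0) ** 3
    if abs(p) < 1e-14 and abs(q) < 1e-14:  # triple root
        return [-shift]
    if disc > 0.0:                          # one real root: Cardano
        s = math.sqrt(disc)
        u = math.copysign(abs(-q / 2.0 + s) ** (1.0 / 3.0), -q / 2.0 + s)
        v = math.copysign(abs(-q / 2.0 - s) ** (1.0 / 3.0), -q / 2.0 - s)
        return [u + v - shift]
    r = 2.0 * math.sqrt(-p / 3.0)           # three real roots, p < 0 here
    arg = max(-1.0, min(1.0, 3.0 * q / (2.0 * p) * math.sqrt(-3.0 / p)))
    theta = math.acos(arg) / 3.0
    return sorted(r * math.cos(theta - 2.0 * math.pi * k / 3.0) - shift for k in range(3))


def reduced_vdw_volumes(t_r: float, p_r: float) -> list:
    """Physical roots (V_r > 1/3) of the reduced van der Waals cubic."""
    roots = real_cubic_roots(-(1.0 + 8.0 * t_r / p_r) / 3.0, 3.0 / p_r, -1.0 / p_r)
    return [v for v in roots if v > 1.0 / 3.0]


print(reduced_vdw_volumes(0.9, 0.6))  # three roots: liquid-like, middle, gas-like
print(reduced_vdw_volumes(1.5, 1.0))  # supercritical: a single root
```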

Redlich-Kwong equation of state[6]

Introduced in 1949, the Redlich-Kwong equation of state was a considerable improvement over other equations of the time. It is still of interest primarily due to its relatively simple form. While superior to the van der Waals equation of state, it performs poorly with respect to the liquid phase and thus cannot be used for accurately calculating vapor–liquid equilibria. However, it can be used in conjunction with separate liquid-phase correlations for this purpose.

The Redlich-Kwong equation is adequate for calculation of gas phase properties when the ratio of the pressure to the critical pressure (reduced pressure) is less than about one-half of the ratio of the temperature to the critical temperature (reduced temperature):

$\frac{p}{p_c} < \frac{T}{2T_c}$

Soave modification of Redlich-Kwong[7]

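$p = \frac{RT}{V_m - b} - \frac{a\alpha}{V_m(V_m + b)}$

$a = \frac{0.42747\,R^2T_c^2}{p_c}$

$b = \frac{0.08664\,RT_c}{p_c}$

$\alpha = \left(1 + \left(0.48508 + 1.55171\,\omega - 0.15613\,\omega^2\right)\left(1 - T_r^{0.5}\right)\right)^2$

$T_r = \frac{T}{T_c}$

where ω is the acentric factor for the species.

This formulation for $\alpha$ is due to Graboski and Daubert. The original formulation from Soave is:

$\alpha = \left(1 + \left(0.480 + 1.574\,\omega - 0.176\,\omega^2\right)\left(1 - T_r^{0.5}\right)\right)^2$

and, for hydrogen:

$\alpha = 1.202\exp\left(-0.30288\,T_r\right)$

We can also write it in the polynomial form, with:

$A = \frac{a\alpha p}{(RT)^2}$

$B = \frac{bp}{RT}$

then we have:

$0 = Z^3 - Z^2 + Z\left(A - B - B^2\right) - AB$

where $R$ is the universal gas constant and $Z = pV_m/(RT)$ is the compressibility factor.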

In 1972 G. Soave[9] replaced the 1/√T term of the Redlich-Kwong equation with a function α(T,ω) involving the temperature and the acentric factor (the resulting equation is also known as the Soave-Redlich-Kwong equation of state; SRK EOS). The α function was devised to fit the vapor pressure data of hydrocarbons and the equation does fairly well for these materials.

Note especially that this replacement changes the definition of $a$ slightly, as $T_c$ is now raised to the second power.
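The polynomial form makes the SRK equation easy to use for a gas-phase compressibility factor. The Python sketch below builds $A$ and $B$ with the Graboski–Daubert $\alpha$ and finds the largest real root by Newton's method started above the Cauchy root bound; because the cubic is convex for $Z > 1/3$, the iteration descends monotonically onto the gas root. The example assumes methane's $T_c$ and $p_c$ from the table above and an acentric factor of 0.011, which is not given in the text; the function name is illustrative.

```python
import math

R = 8.314462618  # J/(mol·K)


def srk_gas_z(t: float, p: float, t_c: float, p_c: float, omega: float) -> float:
    """Largest real root of Z^3 - Z^2 + (A - B - B^2) Z - A B = 0 (SRK EOS, SI units)."""
    t_r = t / t_c
    m = 0.48508 + 1.55171 * omega - 0.15613 * omega**2  # Graboski-Daubert fit
    alpha = (1.0 + m * (1.0 - math.sqrt(t_r))) ** 2
    a = 0.42747 * R**2 * t_c**2 / p_c
    b = 0.08664 * R * t_c / p_c
    big_a = a * alpha * p / (R * t) ** 2
    big_b = b * p / (R * t)
    c1 = big_a - big_b - big_b**2  # coefficient of Z
    c0 = -big_a * big_b            # constant term

    def f(z):
        return z**3 - z**2 + c1 * z + c0

    def df(z):
        return 3.0 * z**2 - 2.0 * z + c1

    # Start above every real root (Cauchy bound); Newton then descends to the gas root.
    z = 1.0 + max(1.0, abs(c1), abs(c0))
    for _ in range(100):
        step = f(z) / df(z)
        z -= step
        if abs(step) < 1e-12:
            break
    return z


# Methane at 300 K and 5 MPa (T_c, p_c from the table; omega = 0.011 is assumed).
z = srk_gas_z(300.0, 5.0e6, 190.7, 4.58e6, 0.011)
print(f"SRK compressibility factor Z = {z:.3f}")
```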

Volume translation of Peneloux et al. (1982)

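The SRK EOS may be written as

$p = \frac{RT}{V_{m,\text{SRK}} - b} - \frac{a}{V_{m,\text{SRK}}\left(V_{m,\text{SRK}} + b\right)}$

where

$a = a_c\,\alpha$, $a_c \approx \frac{0.42747\,R^2T_c^2}{p_c}$, $b \approx \frac{0.08664\,RT_c}{p_c}$

where $\alpha$ and other parts of the SRK EOS are defined in the SRK EOS section.

A downside of the SRK EOS, and of other cubic EOS, is that the liquid molar volume is significantly less accurate than the gas molar volume. Peneloux et al. (1982)[10] proposed a simple correction for this by introducing a volume translation

$V_{m,\text{SRK}} = V_m + c$

where $c$ is an additional fluid component parameter that translates the molar volume slightly. On the liquid branch of the EOS, a small change in molar volume corresponds to a large change in pressure. On the gas branch of the EOS, a small change in molar volume corresponds to a much smaller change in pressure than for the liquid branch. Thus, the perturbation of the molar gas volume is small. Unfortunately, there are two versions that occur in science and industry.

In the first version only $V_{m,\text{SRK}}$ is translated,[11] [12] and the EOS becomes

$p = \frac{RT}{V_m + c - b} - \frac{a}{(V_m + c)(V_m + c + b)}$

In the second version both $V_{m,\text{SRK}}$ and $b_\text{SRK}$ are translated, or the translation of $V_{m,\text{SRK}}$ is followed by a renaming of the composite parameter b − c.[13] This gives

$b_\text{SRK} = b_\text{Peneloux} + c \quad \text{or} \quad b_\text{Peneloux} = b_\text{SRK} - c$

$p = \frac{RT}{V_m - b_\text{Peneloux}} - \frac{a}{(V_m + c)(V_m + 2c + b_\text{Peneloux})}$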

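The c-parameter of a fluid mixture is calculated by

$c = \sum_{i=1}^{n} z_i c_i$, where $z_i$ is the mole fraction of component i

The c-parameter of the individual fluid components in a petroleum gas and oil can be estimated by the correlation

$c_i \approx 0.40768\,\frac{RT_{ci}}{p_{ci}}\left(0.29441 - Z_{RA,i}\right)$

where the Rackett compressibility factor $Z_{RA,i}$ can be estimated by

$Z_{RA,i} \approx 0.29056 - 0.08775\,\omega_i$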

A nice feature with the volume translation method of Peneloux et al. (1982) is that it does not affect the vapor-liquid equilibrium calculations.[14] This method of volume translation can also be applied to other cubic EOSs if the c-parameter correlation is adjusted to match the selected EOS.

Peng–Robinson equation of state

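$p = \frac{RT}{V_m - b} - \frac{a\alpha}{V_m^2 + 2bV_m - b^2}$

$a = \frac{0.45724\,R^2T_c^2}{p_c}$

$b = \frac{0.07780\,RT_c}{p_c}$

$\alpha = \left(1 + \kappa\left(1 - T_r^{0.5}\right)\right)^2$

$\kappa = 0.37464 + 1.54226\,\omega - 0.26992\,\omega^2$

$T_r = \frac{T}{T_c}$

In polynomial form:

$A = \frac{\alpha a p}{R^2T^2}$

$B = \frac{bp}{RT}$

$Z^3 - (1 - B)Z^2 + \left(A - 2B - 3B^2\right)Z - \left(AB - B^2 - B^3\right) = 0$

where $\omega$ is the acentric factor of the species, $R$ is the universal gas constant and $Z = pV_m/(RT)$ is the compressibility factor.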

The Peng–Robinson equation of state (PR EOS) was developed in 1976 at The University of Alberta by Ding-Yu Peng and Donald Robinson in order to satisfy the following goals:[15]

- The parameters should be expressible in terms of the critical properties and the acentric factor.

- The model should provide reasonable accuracy near the critical point, particularly for calculations of the compressibility factor and liquid density.

- The mixing rules should not employ more than a single binary interaction parameter, which should be independent of temperature, pressure, and composition.

- The equation should be applicable to all calculations of all fluid properties in natural gas processes.

For the most part the Peng–Robinson equation exhibits performance similar to the Soave equation, although it is generally superior in predicting the liquid densities of many materials, especially nonpolar ones.[16] The departure functions of the Peng–Robinson equation are given in a separate article.

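The analytic values of its characteristic constants are, to the precision usually quoted:

$Z_c \approx 0.3074$, $\Omega_a \approx 0.45724$, $\Omega_b \approx 0.07780$, where $a = \Omega_a R^2T_c^2/p_c$ and $b = \Omega_b RT_c/p_c$.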
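The Peng–Robinson polynomial form can be solved for a gas-phase compressibility factor in the same way as any cubic. The Python sketch below builds $A$ and $B$ from $\Omega_a$, $\Omega_b$ and the $\kappa$ correlation, then runs Newton's method from above the Cauchy root bound so that it settles on the largest (gas) root; the cubic is convex for $Z > (1 - B)/3$, which holds there. The methane inputs reuse the table's $T_c$ and $p_c$ with an assumed acentric factor of 0.011, and the function name is illustrative.

```python
import math

R = 8.314462618  # J/(mol·K)


def pr_gas_z(t: float, p: float, t_c: float, p_c: float, omega: float) -> float:
    """Largest real root of the Peng-Robinson cubic in Z (SI units)."""
    t_r = t / t_c
    kappa = 0.37464 + 1.54226 * omega - 0.26992 * omega**2
    alpha = (1.0 + kappa * (1.0 - math.sqrt(t_r))) ** 2
    a = 0.45724 * R**2 * t_c**2 / p_c
    b = 0.07780 * R * t_c / p_c
    big_a = alpha * a * p / (R * t) ** 2
    big_b = b * p / (R * t)
    # Z^3 + c2 Z^2 + c1 Z + c0 = 0
    c2 = -(1.0 - big_b)
    c1 = big_a - 2.0 * big_b - 3.0 * big_b**2
    c0 = -(big_a * big_b - big_b**2 - big_b**3)

    def f(z):
        return z**3 + c2 * z**2 + c1 * z + c0

    def df(z):
        return 3.0 * z**2 + 2.0 * c2 * z + c1

    z = 1.0 + max(abs(c2), abs(c1), abs(c0))  # Cauchy bound: above every real root
    for _ in range(100):
        step = f(z) / df(z)
        z -= step
        if abs(step) < 1e-12:
            break
    return z


# Methane at 300 K and 5 MPa (T_c, p_c from the table; omega = 0.011 is assumed).
z = pr_gas_z(300.0, 5.0e6, 190.7, 4.58e6, 0.011)
print(f"Peng-Robinson compressibility factor Z = {z:.3f}")
```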

Peng–Robinson-Stryjek-Vera equations of state

PRSV1

A modification to the attraction term in the Peng–Robinson equation of state published by Stryjek and Vera in 1986 (PRSV) significantly improved the model's accuracy by introducing an adjustable pure component parameter and by modifying the polynomial fit of the acentric factor.[17]

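The modification is:

$\kappa = \kappa_0 + \kappa_1\left(1 + T_r^{0.5}\right)\left(0.7 - T_r\right)$

$\kappa_0 = 0.378893 + 1.4897153\,\omega - 0.17131848\,\omega^2 + 0.0196554\,\omega^3$

where $\kappa_1$ is an adjustable pure component parameter. Stryjek and Vera published pure component parameters for many compounds of industrial interest in their original journal article. At reduced temperatures above 0.7, they recommend setting $\kappa_1 = 0$ and simply using $\kappa = \kappa_0$. For alcohols and water the value of $\kappa_1$ may be used up to the critical temperature and set to zero at higher temperatures.[17]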

PRSV2

A subsequent modification published in 1986 (PRSV2) further improved the model's accuracy by introducing two additional pure component parameters to the previous attraction term modification.[18]

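The modification is:

$\kappa = \kappa_0 + \left[\kappa_1 + \kappa_2\left(\kappa_3 - T_r\right)\left(1 - T_r^{0.5}\right)\right]\left(1 + T_r^{0.5}\right)\left(0.7 - T_r\right)$

where $\kappa_1$, $\kappa_2$, and $\kappa_3$ are adjustable pure component parameters.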
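The PRSV1 and PRSV2 corrections only change $\kappa$, which then feeds the Peng–Robinson $\alpha$ function. The Python sketch below implements both forms; two properties are easy to see from the formulas and are checked in the code's tests: both reduce to $\kappa_0$ at $T_r = 0.7$, and PRSV2 reduces to PRSV1 when $\kappa_2 = 0$. The parameter values used are hypothetical and chosen only for illustration.

```python
import math


def kappa0(omega: float) -> float:
    """PRSV polynomial fit of the acentric factor."""
    return 0.378893 + 1.4897153 * omega - 0.17131848 * omega**2 + 0.0196554 * omega**3


def kappa_prsv1(t_r: float, omega: float, k1: float) -> float:
    """PRSV1: kappa = kappa0 + k1 (1 + sqrt(T_r)) (0.7 - T_r)."""
    return kappa0(omega) + k1 * (1.0 + math.sqrt(t_r)) * (0.7 - t_r)


def kappa_prsv2(t_r: float, omega: float, k1: float, k2: float, k3: float) -> float:
    """PRSV2: kappa0 + [k1 + k2 (k3 - T_r)(1 - sqrt(T_r))] (1 + sqrt(T_r)) (0.7 - T_r)."""
    s = math.sqrt(t_r)
    return kappa0(omega) + (k1 + k2 * (k3 - t_r) * (1.0 - s)) * (1.0 + s) * (0.7 - t_r)


def alpha(t_r: float, kappa: float) -> float:
    """Peng-Robinson alpha function (1 + kappa (1 - sqrt(T_r)))^2."""
    return (1.0 + kappa * (1.0 - math.sqrt(t_r))) ** 2


# Hypothetical pure-component parameters, for illustration only.
omega, k1, k2, k3 = 0.2, 0.05, 0.3, 0.6
for t_r in (0.5, 0.7, 0.9):
    k_1 = kappa_prsv1(t_r, omega, k1)
    k_2 = kappa_prsv2(t_r, omega, k1, k2, k3)
    print(f"T_r = {t_r}: PRSV1 kappa = {k_1:.5f}, PRSV2 kappa = {k_2:.5f}, alpha = {alpha(t_r, k_2):.5f}")
```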

PRSV2 is particularly advantageous for VLE calculations. While PRSV1 does offer an advantage over the Peng–Robinson model for describing thermodynamic behavior, it is still not accurate enough, in general, for phase equilibrium calculations.[17] The highly non-linear behavior of phase-equilibrium calculation methods tends to amplify what would otherwise be acceptably small errors. It is therefore recommended that PRSV2 be used for equilibrium calculations when applying these models to a design. However, once the equilibrium state has been determined, the phase specific thermodynamic values at equilibrium may be determined by one of several simpler models with a reasonable degree of accuracy.[18]

One thing to note is that in the PRSV equation, the parameter fit is done in a particular temperature range, which is usually below the critical temperature. Above the critical temperature, the PRSV alpha function tends to diverge and become arbitrarily large instead of tending towards 0. Because of this, alternate equations for alpha should be employed above the critical point. This is especially important for systems containing hydrogen, which is often found at temperatures far above its critical point. Several alternate formulations have been proposed; some well-known ones are by Twu et al. and by Mathias and Copeman.

Peng-Robinson-Babalola equation of state (PRB)

Babalola [19] modified the Peng–Robinson equation of state as:

$p = \frac{RT}{v - b} - \frac{a_1 p + a_2}{v(v + b) + b(v - b)}$

In the Peng–Robinson EOS, the attractive force parameter a is considered to be constant with respect to pressure. In this modification, a is treated as a variable with respect to pressure for multicomponent, multi-phase, high-density reservoir systems, in order to improve accuracy in the prediction of properties of complex reservoir fluids for PVT modeling. The variation is represented with a linear equation, $a = a_1 p + a_2$, where a1 and a2 represent the slope and the intercept, respectively, of the straight line obtained when values of parameter a are plotted against pressure.

This modification increases the accuracy of the Peng–Robinson equation of state for heavier fluids, particularly at pressures above 30 MPa, and eliminates the need for tuning the original Peng–Robinson equation of state. Values for a

Elliott, Suresh, Donohue equation of state

The Elliott, Suresh, and Donohue (ESD) equation of state was proposed in 1990.[20] The equation seeks to correct a shortcoming in the Peng–Robinson EOS in that there was an inaccuracy in the van der Waals repulsive term. The EOS accounts for the effect of the shape of a non-polar molecule and can be extended to polymers with the addition of an extra term (not shown). The EOS itself was developed through modeling computer simulations and should capture the essential physics of the size, shape, and hydrogen bonding.

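$\frac{pV_m}{RT} = Z = 1 + Z^\text{rep} + Z^\text{att}$

where:

$Z^\text{rep} = \frac{4c\eta}{1 - 1.9\eta}$

and

$Z^\text{att} = -\frac{z_m q\eta Y}{1 + k_1\eta Y}$

- $c$ is a "shape factor", with $c = 1$ for spherical molecules

- For non-spherical molecules, the following relation is suggested: $c = 1 + 3.535\,\omega + 0.533\,\omega^2$

- where $\omega$ is the acentric factor.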

- The reduced number density $\eta$ is defined as $\eta = \frac{v^* N}{V}$

where

- $v^*$ is the characteristic size parameter

- $N$ is the number of molecules

- $V$ is the volume of the container

The characteristic size parameter is related to the shape parameter through

where

- and is Boltzmann's constant.

Noting the relationships between Boltzmann's constant and the universal gas constant, and observing that the number of molecules can be expressed in terms of Avogadro's number and the molar mass, the reduced number density can be expressed in terms of the molar volume as

The shape parameter appearing in the Attraction term and the term are given by

- (and is hence also equal to 1 for spherical molecules).

where is the depth of the square-well potential and is given by

- , , and are constants in the equation of state:

- for spherical molecules (c=1)

- for spherical molecules (c=1)

- for spherical molecules (c=1)

The model can be extended to associating components and mixtures of nonassociating components. Details are in the paper by J.R. Elliott, Jr. et al. (1990).[20]

Cubic-Plus-Association

The Cubic-Plus-Association (CPA) equation of state combines the Soave-Redlich-Kwong equation with the association term from SAFT[21][22] based on Chapman's extensions and simplifications of a theory of associating molecules due to Michael Wertheim.[23] The development of the equation began in 1995 as a research project that was funded by Shell, and in 1996 an article was published which presented the CPA equation of state.[23][24]

In the association term, $X_A$ is the mole fraction of molecules not bonded at site A.

Non-cubic equations of state

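Dieterici equation of state

$$p\,(V_m - b) = RT\,\exp\!\left(-\frac{a}{RTV_m}\right)$$

where a is associated with the interaction between molecules and b takes into account the finite size of the molecules, similar to the Van der Waals equation.

Expressed through the critical temperature and pressure, the parameters are:

$$a = \frac{4R^2T_c^2}{p_c e^2}, \qquad b = \frac{RT_c}{p_c e^2}$$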
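Because the Dieterici relation can be solved explicitly for pressure, it is easy to evaluate. The sketch below assumes SI units, the common form p(Vm − b) = RT exp(−a/(RTVm)), and the critical-point expressions a = 4R²Tc²/(pc e²) and b = RTc/(pc e²). The function names are illustrative. The final check relies on the standard result that the Dieterici critical molar volume is 2b.

```python
import math

R = 8.314462618  # molar gas constant, J/(mol*K)


def dieterici_pressure(T: float, Vm: float, a: float, b: float) -> float:
    """Pressure in Pa from p (Vm - b) = R T exp(-a / (R T Vm))."""
    if Vm <= b:
        raise ValueError("molar volume Vm must exceed b")
    return R * T / (Vm - b) * math.exp(-a / (R * T * Vm))


def dieterici_params(Tc: float, pc: float) -> tuple[float, float]:
    """Return (a, b) from the critical temperature Tc (K) and pressure pc (Pa)."""
    e2 = math.e ** 2
    a = 4.0 * R**2 * Tc**2 / (pc * e2)
    b = R * Tc / (pc * e2)
    return a, b


if __name__ == "__main__":
    # Illustrative critical constants, roughly those of CO2 (assumed for the demo).
    Tc, pc = 304.13, 7.3773e6
    a, b = dieterici_params(Tc, pc)
    # The Dieterici critical molar volume is 2b, so this ratio should be close to 1.
    print(dieterici_pressure(Tc, 2.0 * b, a, b) / pc)
```

Setting a = 0 and b = 0 reduces the function to the ideal gas law, which is a convenient sanity check.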

Virial equations of state

Virial equation of state

$$\frac{pV_m}{RT} = A + \frac{B}{V_m} + \frac{C}{V_m^2} + \frac{D}{V_m^3} + \cdots$$

Although usually not the most convenient equation of state, the virial equation is important because it can be derived directly from statistical mechanics. This equation is also called the Kamerlingh Onnes equation. If appropriate assumptions are made about the mathematical form of intermolecular forces, theoretical expressions can be developed for each of the coefficients. A is the first virial coefficient. It has the constant value 1, which expresses the fact that at large volumes all fluids behave like ideal gases. The second virial coefficient B corresponds to interactions between pairs of molecules, C to triplets, and so on. Accuracy can be increased indefinitely by considering higher-order terms. The coefficients B, C, D, etc. are functions of temperature only.
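Once the coefficients are known, a truncated virial series is straightforward to evaluate. This minimal sketch assumes the expansion in inverse molar volume, pVm/(RT) = A + B/Vm + C/Vm² + ..., with A = 1. The coefficient values in the demo are hypothetical placeholders, not data for a real gas.

```python
R = 8.314462618  # molar gas constant, J/(mol*K)


def compressibility_factor(Vm: float, coeffs: list[float]) -> float:
    """Z = A + B/Vm + C/Vm**2 + ... for coeffs = [A, B, C, ...] at one temperature."""
    return sum(c / Vm**k for k, c in enumerate(coeffs))


def virial_pressure(T: float, Vm: float, coeffs: list[float]) -> float:
    """Pressure from p Vm / (R T) = Z, with Vm in m^3/mol."""
    return compressibility_factor(Vm, coeffs) * R * T / Vm


if __name__ == "__main__":
    # Hypothetical coefficients: A = 1, B = -1.5e-4 m^3/mol, C = 7e-9 m^6/mol^2.
    coeffs = [1.0, -1.5e-4, 7e-9]
    for Vm in (1e-2, 1e-3, 1e-4):
        print(Vm, compressibility_factor(Vm, coeffs))
```

As Vm grows, every term after A vanishes and Z tends to 1, which is the ideal-gas limit described above.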

One of the most accurate equations of state is that of Benedict–Webb–Rubin–Starling,[25] shown next. It is very close to a virial equation of state: if the exponential term in it is expanded to two Taylor terms, a virial equation can be derived:

Note that in this virial equation, the fourth and fifth virial terms are zero. The second virial coefficient decreases monotonically as temperature is lowered. The third virial coefficient increases monotonically as temperature is lowered.

The BWR equation of state

where

- p is pressure

- ρ is molar density

Values of the various parameters can be found in reference materials.[26]

Lee-Kesler equation of state

The Lee-Kesler equation of state is based on the corresponding states principle, and is a modification of the BWR equation of state.[27]

SAFT equations of state

Statistical associating fluid theory (SAFT) equations of state predict the effect of molecular size and shape and hydrogen bonding on fluid properties and phase behavior. The SAFT equation of state was developed using statistical mechanical methods (in particular perturbation theory) to describe the interactions between molecules in a system.[21][22][28] The idea of a SAFT equation of state was first proposed by Chapman et al. in 1988 and 1989.[21][22][28] Many different versions of the SAFT equation of state have been proposed, but all use the same chain and association terms derived by Chapman.[21][29][30] SAFT equations of state represent molecules as chains of typically spherical particles that interact with one another through short range repulsion, long range attraction, and hydrogen bonding between specific sites.[28] One popular version of the SAFT equation of state includes the effect of chain length on the shielding of the dispersion interactions between molecules (PC-SAFT).[31] In general, SAFT equations give more accurate results than traditional cubic equations of state, especially for systems containing liquids or solids.[32][33]

Multiparameter equations of state

Helmholtz Function form

Multiparameter equations of state (MEOS) can be used to represent pure fluids with high accuracy, in both the liquid and gaseous states. MEOS represent the Helmholtz function of the fluid as the sum of ideal gas and residual terms. Both terms are explicit in reduced temperature and reduced density, thus:

$$\frac{a(T,\rho)}{RT} = \frac{a^o(T,\rho) + a^r(T,\rho)}{RT} = \alpha^o(\tau,\delta) + \alpha^r(\tau,\delta)$$

where:

$$\tau = \frac{T_r}{T}, \qquad \delta = \frac{\rho}{\rho_r}$$

The reducing density and temperature, $\rho_r$ and $T_r$, are typically, though not always, the critical values for the pure fluid.

Other thermodynamic functions can be derived from the MEOS by taking appropriate derivatives of the Helmholtz function. Because integration of the MEOS is not required, there are few restrictions on the functional form of the ideal or residual terms.[34][35] Typical MEOS use upwards of 50 fluid-specific parameters, but they are able to represent the fluid's properties with high accuracy. MEOS are currently available for about 50 of the most common industrial fluids, including refrigerants. The IAPWS95 reference equation of state for water is also an MEOS.[36] Mixture models for MEOS exist as well.
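As an example of deriving properties by differentiation, pressure follows from a single derivative of the residual part: p = ρRT[1 + δ(∂αʳ/∂δ) at constant τ], with τ = Tr/T and δ = ρ/ρr. This is a standard identity for the reduced Helmholtz form. The sketch below evaluates that derivative by central differences. `toy_alpha_r` is a hypothetical stand-in, not a published MEOS, and production codes use analytic derivatives instead.

```python
from typing import Callable

R = 8.314462618  # molar gas constant, J/(mol*K)


def pressure_from_alpha_r(
    alpha_r: Callable[[float, float], float],
    T: float,
    rho: float,
    T_red: float,
    rho_red: float,
    h: float = 1e-6,
) -> float:
    """p = rho R T [1 + delta * (d alpha_r / d delta) at constant tau].

    rho is the molar density (mol/m^3); T_red and rho_red are the reducing
    temperature and density, so tau = T_red / T and delta = rho / rho_red.
    """
    tau = T_red / T
    delta = rho / rho_red
    # Central finite difference in delta with tau held fixed.
    d_alpha = (alpha_r(tau, delta + h) - alpha_r(tau, delta - h)) / (2.0 * h)
    return rho * R * T * (1.0 + delta * d_alpha)


def toy_alpha_r(tau: float, delta: float) -> float:
    """Hypothetical one-term residual function, for illustration only."""
    return -0.5 * delta * tau


if __name__ == "__main__":
    print(pressure_from_alpha_r(toy_alpha_r, T=300.0, rho=40.0, T_red=300.0, rho_red=1.0e4))
```

With a residual term of zero, the function returns the ideal-gas pressure ρRT.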

One example of such an equation of state is the form proposed by Span and Wagner.[34]

This is a somewhat simpler form that is intended to be used more in technical applications.[34] Reference equations of state require a higher accuracy and use a more complicated form with more terms.[36][35]

Other equations of state of interest

Stiffened equation of state

When considering water under very high pressures, in situations such as underwater nuclear explosions, sonic shock lithotripsy, and sonoluminescence, the stiffened equation of state[37] is often used:

$$p = \rho(\gamma - 1)e - \gamma p^0$$

where $e$ is the internal energy per unit mass, $\gamma$ is an empirically determined constant typically taken to be about 6.1, and $p^0$ is another constant, representing the molecular attraction between water molecules. The magnitude of the correction is about 2 gigapascals (20,000 atmospheres).

The equation is stated in this form because the speed of sound in water is given by $c^2 = \gamma\,(p + p^0)/\rho$.

Thus water behaves as though it were an ideal gas that is already under about 20,000 atmospheres (2 GPa) of pressure. This explains why water is commonly assumed to be incompressible: when the external pressure changes from 1 atmosphere to 2 atmospheres (100 kPa to 200 kPa), the water behaves as an ideal gas would when changing from 20,001 to 20,002 atmospheres (2000.1 MPa to 2000.2 MPa).

This equation mispredicts the specific heat capacity of water, but few simple alternatives are available for severely non-isentropic processes such as strong shocks.

Ultrarelativistic equation of state

An ultrarelativistic fluid has equation of state

$$p = \rho_m c_s^2$$

where $p$ is the pressure, $\rho_m$ is the mass density, and $c_s$ is the speed of sound.

Ideal Bose equation of state

The equation of state for an ideal Bose gas is

$$PV_m = RT\,\frac{\operatorname{Li}_{\alpha+1}(z)}{\zeta(\alpha)}\left(\frac{T}{T_c}\right)^{\alpha}$$

where α is an exponent specific to the system (e.g. in the absence of a potential field, α = 3/2), z is exp(μ/kT) where μ is the chemical potential, Li is the polylogarithm, ζ is the Riemann zeta function, and Tc is the critical temperature at which a Bose–Einstein condensate begins to form.

Jones–Wilkins–Lee equation of state for explosives (JWL equation)

The equation of state from Jones–Wilkins–Lee is used to describe the detonation products of explosives.

$$p = A\left(1 - \frac{\omega}{R_1 V}\right)e^{-R_1 V} + B\left(1 - \frac{\omega}{R_2 V}\right)e^{-R_2 V} + \frac{\omega e_0}{V}$$

The ratio $V = \rho_e/\rho$ is defined using $\rho_e$, the density of the explosive (solid part), and $\rho$, the density of the detonation products. The parameters $A$, $B$, $R_1$, $R_2$ and $\omega$ are given by several references.[38] In addition, such references give the initial density (solid part) $\rho_0$, the speed of detonation $V_D$, the Chapman–Jouguet pressure $P_{CJ}$ and the chemical energy of the explosive $e_0$. These parameters are obtained by fitting the JWL-EOS to experimental results. Typical parameters for some explosives are listed in the table below.

| Material | $\rho_0$ (g/cm3) | $V_D$ (m/s) | $P_{CJ}$ (GPa) | $A$ (GPa) | $B$ (GPa) | $R_1$ | $R_2$ | $\omega$ | $e_0$ (GPa) |
|---|---|---|---|---|---|---|---|---|---|
| TNT | 1.630 | 6930 | 21.0 | 373.8 | 3.747 | 4.15 | 0.90 | 0.35 | 6.00 |
| Composition B | 1.717 | 7980 | 29.5 | 524.2 | 7.678 | 4.20 | 1.10 | 0.35 | 8.50 |
| PBX 9501[39] | 1.844 | | 36.3 | 852.4 | 18.02 | 4.55 | 1.3 | 0.38 | 10.2 |
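The JWL form is commonly written p = A(1 − ω/(R1V))e^(−R1V) + B(1 − ω/(R2V))e^(−R2V) + ωe/V, with V = ρe/ρ. The sketch below evaluates it with the TNT parameters from the table. Using the tabulated e0 as a fixed energy term is a simplification assumed here; hydrocodes usually carry the current internal energy per unit initial volume instead. The function name is illustrative.

```python
import math


def jwl_pressure(V: float, A: float, B: float, R1: float, R2: float,
                 omega: float, e: float) -> float:
    """JWL pressure of detonation products.

    V is the relative volume rho_e / rho (dimensionless); A, B and e share one
    pressure unit (GPa here), and R1, R2 and omega are dimensionless.
    """
    return (A * (1.0 - omega / (R1 * V)) * math.exp(-R1 * V)
            + B * (1.0 - omega / (R2 * V)) * math.exp(-R2 * V)
            + omega * e / V)


# TNT row of the table; taking e = e0 is a simplifying assumption.
TNT = dict(A=373.8, B=3.747, R1=4.15, R2=0.90, omega=0.35, e=6.00)

if __name__ == "__main__":
    for V in (1.0, 2.0, 5.0, 10.0):
        print(V, round(jwl_pressure(V, **TNT), 3), "GPa")
```

At large expansion the two exponential terms die out, and the products behave like an ideal gas with p ≈ ωe/V.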

Equations of state for solids and liquids

Common abbreviations:

- Tait equation for water and other liquids. Several equations are referred to as the Tait equation.

- Murnaghan equation of state

- Stacey-Brennan-Irvine equation of state[40] (often incorrectly referred to as the Rose-Vinet equation of state)

- Adapted Polynomial equation of state[44] (second order form = AP2, adapted for extreme compression)

- with

- where = 0.02337 GPa.nm5. The total number of electrons in the initial volume determines the Fermi gas pressure , which provides for the correct behavior at extreme compression. So far there are no known "simple" solids that require higher order terms.

- Adapted polynomial equation of state[44] (third order form = AP3)

- Mie–Grüneisen equation of state (for a more detailed discussion see refs.[45] [46])

- where is the bulk modulus at equilibrium volume and typically about −2 is often related to the Grüneisen parameter by


The ideal gas law, also called the general gas equation, is the equation of state of a hypothetical ideal gas. It is a good approximation of the behavior of many gases under many conditions, although it has several limitations. It was first stated by Benoît Paul Émile Clapeyron in 1834 as a combination of the empirical Boyle's law, Charles's law, Avogadro's law, and Gay-Lussac's law.[1] The ideal gas law is often written in an empirical form:

$$pV = nRT$$

where $p$, $V$ and $T$ are the pressure, volume and temperature; $n$ is the amount of substance; and $R$ is the ideal gas constant. It is the same for all gases. It can also be derived from the microscopic kinetic theory, as was achieved (apparently independently) by August Krönig in 1856[2] and Rudolf Clausius in 1857.[3]

Equation

The state of an amount of gas is determined by its pressure, volume, and temperature. The modern form of the equation relates these simply in two main forms. The temperature used in the equation of state is an absolute temperature: the appropriate SI unit is the kelvin.[4]

Common forms

The most frequently introduced forms are:

$$pV = nRT = nk_BN_AT$$

where:

- $p$ is the pressure of the gas,

- $V$ is the volume of the gas,

- $n$ is the amount of substance of gas (also known as number of moles),

- $R$ is the ideal, or universal, gas constant, equal to the product of the Boltzmann constant and the Avogadro constant,

- $k_B$ is the Boltzmann constant,

- $N_A$ is the Avogadro constant,

- $T$ is the absolute temperature of the gas.

In SI units, p is measured in pascals, V in cubic metres, n in moles, and T in kelvins (the Kelvin scale is a shifted Celsius scale, where 0.00 K = −273.15 °C, the lowest possible temperature). R has the value 8.314 J/(K⋅mol) ≈ 1.987 cal/(K⋅mol), or 0.0821 L⋅atm/(mol⋅K).
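As a quick numerical illustration of pV = nRT in SI units, the sketch below uses the exact SI value R = 8.314462618 J/(K⋅mol), which carries a few more digits than the value quoted above. The function names are illustrative.

```python
R = 8.314462618  # molar gas constant, J/(K*mol)


def pressure(n: float, T: float, V: float) -> float:
    """Ideal-gas pressure p = n R T / V in pascals (n in mol, T in K, V in m^3)."""
    return n * R * T / V


def volume(n: float, T: float, p: float) -> float:
    """Ideal-gas volume V = n R T / p in cubic metres (p in Pa)."""
    return n * R * T / p


if __name__ == "__main__":
    # One mole at 0 degC confined in 22.414 L is close to one standard atmosphere.
    print(pressure(1.0, 273.15, 22.414e-3))
    print(volume(1.0, 273.15, 101325.0) * 1e3, "L")
```

Both temperatures must be in kelvins; passing a Celsius value gives a wrong result without any error.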

Molar form

How much gas is present could be specified by giving the mass instead of the chemical amount of gas. Therefore, an alternative form of the ideal gas law may be useful. The chemical amount $n$ (in moles) is equal to the total mass of the gas $m$ (in kilograms) divided by the molar mass $M$ (in kilograms per mole):

$$n = \frac{m}{M}$$

By replacing n with m/M and subsequently introducing density ρ = m/V, we get:

$$pV = \frac{m}{M}RT \quad\Longrightarrow\quad p = \frac{m}{V}\,\frac{RT}{M} = \rho\,\frac{R}{M}\,T$$

Defining the specific gas constant $R_\text{specific}$ (also written $r$) as the ratio R/M,

$$p = \rho R_\text{specific} T$$

This form of the ideal gas law is very useful because it links pressure, density, and temperature in a unique formula independent of the quantity of the considered gas. Alternatively, the law may be written in terms of the specific volume v, the reciprocal of density, as

$$pv = R_\text{specific} T$$

It is common, especially in engineering and meteorological applications, to represent the specific gas constant by the symbol R. In such cases, the universal gas constant is usually given a different symbol to distinguish it. In any case, the context and/or units of the gas constant should make it clear whether the universal or the specific gas constant is being referred to.[5]
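The specific form p = ρRspecificT is the one commonly used for air. The sketch below computes Rspecific = R/M and the corresponding density. It assumes a mean molar mass for dry air of 0.028964 kg/mol, a value chosen for illustration. The function names are hypothetical.

```python
R = 8.314462618  # universal gas constant, J/(mol*K)


def specific_gas_constant(M: float) -> float:
    """R_specific = R / M in J/(kg*K), with M the molar mass in kg/mol."""
    return R / M


def density(p: float, T: float, M: float) -> float:
    """rho = p / (R_specific T) in kg/m^3 (p in Pa, T in K)."""
    return p / (specific_gas_constant(M) * T)


if __name__ == "__main__":
    M_air = 0.028964  # kg/mol, assumed mean molar mass of dry air
    print(specific_gas_constant(M_air))    # J/(kg*K)
    print(density(101325.0, 288.15, M_air))  # kg/m^3 at 15 degC, 1 atm
```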

Statistical mechanics

In statistical mechanics, the following molecular equation is derived from first principles:

$$P = nk_BT$$

where P is the absolute pressure of the gas, n is the number density of the molecules (given by the ratio n = N/V, in contrast to the previous formulation in which n is the number of moles), T is the absolute temperature, and kB is the Boltzmann constant relating temperature and energy, given by:

$$k_B = \frac{R}{N_A}$$

where NA is the Avogadro constant.

From this we notice that for a gas of mass m, with an average particle mass of μ times the atomic mass constant mu (i.e., the mass is μ u), the number of molecules will be given by

$$N = \frac{m}{\mu m_u}$$

and since ρ = m/V = nμmu, we find that the ideal gas law can be rewritten as

$$P = \frac{1}{V}\,\frac{m}{\mu m_u}\,k_BT = \frac{k_B}{\mu m_u}\,\rho T$$

In SI units, P is measured in pascals, V in cubic metres, T in kelvins, and kB = 1.38×10⁻²³ J⋅K⁻¹.
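The molecular form P = nkBT gives the number density directly from pressure and temperature. The sketch below uses the exact SI Boltzmann constant and the CODATA 2018 atomic mass constant. The helper names are illustrative.

```python
K_B = 1.380649e-23       # Boltzmann constant, J/K (exact in SI)
M_U = 1.66053906660e-27  # atomic mass constant, kg


def number_density(P: float, T: float) -> float:
    """Molecules per cubic metre from P = n kB T."""
    return P / (K_B * T)


def pressure_from_mass_density(rho: float, T: float, mu: float) -> float:
    """P = kB rho T / (mu m_u), with mu the mean particle mass in atomic mass units."""
    return K_B * rho * T / (mu * M_U)


if __name__ == "__main__":
    # Number density at 0 degC and 1 atm (the Loschmidt constant, about 2.687e25 m^-3).
    print(number_density(101325.0, 273.15))
```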

Combined gas law

Combining the laws of Charles, Boyle and Gay-Lussac gives the combined gas law. It takes the same functional form as the ideal gas law, except that the number of moles is unspecified and the ratio of PV to T is simply taken as a constant:[6]

$$\frac{PV}{T} = k$$

where P is the pressure of the gas, V is the volume of the gas, T is the absolute temperature of the gas, and k is a constant. When comparing the same substance under two different sets of conditions, the law can be written as

$$\frac{P_1V_1}{T_1} = \frac{P_2V_2}{T_2}$$
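Solving the two-state form for one unknown takes a single line of arithmetic. The sketch below is a minimal version with illustrative names. Temperatures must be absolute, and P and V only need consistent units on both sides.

```python
def final_volume(P1: float, V1: float, T1: float, P2: float, T2: float) -> float:
    """Solve P1 V1 / T1 = P2 V2 / T2 for V2 (T in kelvins, consistent P and V units)."""
    return P1 * V1 * T2 / (T1 * P2)


def final_pressure(P1: float, V1: float, T1: float, V2: float, T2: float) -> float:
    """Solve P1 V1 / T1 = P2 V2 / T2 for P2."""
    return P1 * V1 * T2 / (T1 * V2)


if __name__ == "__main__":
    # 2.0 L at 100 kPa and 300 K, taken to 200 kPa and 450 K.
    print(final_volume(100.0, 2.0, 300.0, 200.0, 450.0))  # 1.5 (litres)
```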

Energy associated with a gas

According to the assumptions of the kinetic theory of ideal gases, there are no intermolecular attractions between the molecules of an ideal gas. In other words, its potential energy is zero. Hence, all the energy possessed by the gas is the kinetic energy of its molecules:

$$E = \frac{3}{2}nRT$$

This is the kinetic energy of n moles of a monatomic gas having 3 degrees of freedom: x, y, z.

| Energy of gas | Mathematical expression |
|---|---|
| energy associated with one mole of a monatomic gas | $E = \tfrac{3}{2}RT$ |
| energy associated with one gram of a monatomic gas | $E = \tfrac{3}{2}\,\tfrac{R}{M}\,T$ (M in g/mol) |
| energy associated with one molecule (or atom) of a monatomic gas | $E = \tfrac{3}{2}k_BT$ |
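The rows of the table follow from E = (3/2)nRT by taking n = 1 mol, n = (1 g)/M, or a single molecule, in which case R is replaced by kB. The sketch below assumes a monatomic ideal gas and uses exact SI constants. The function names are illustrative.

```python
R = 8.314462618      # molar gas constant, J/(mol*K)
K_B = 1.380649e-23   # Boltzmann constant, J/K
N_A = 6.02214076e23  # Avogadro constant, 1/mol


def kinetic_energy_moles(n: float, T: float) -> float:
    """E = (3/2) n R T for n moles of a monatomic ideal gas, in joules."""
    return 1.5 * n * R * T


def kinetic_energy_mass(m_grams: float, M: float, T: float) -> float:
    """E = (3/2) (m / M) R T, with m in grams and M in g/mol."""
    return 1.5 * (m_grams / M) * R * T


def kinetic_energy_molecule(T: float) -> float:
    """E = (3/2) kB T for a single molecule (or atom), in joules."""
    return 1.5 * K_B * T


if __name__ == "__main__":
    T = 300.0
    print(kinetic_energy_moles(1.0, T), kinetic_energy_molecule(T) * N_A)
```

Multiplying the per-molecule energy by the Avogadro constant reproduces the per-mole value, because R = NAkB.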

Applications to thermodynamic processes

The table below essentially simplifies the ideal gas equation for particular processes, which makes the equation easier to solve using numerical methods.

A thermodynamic process is defined as a change of a system from state 1 to state 2, where the state number is denoted by a subscript. As shown in the first column of the table, basic thermodynamic processes are defined such that one of the gas properties (P, V, T, S, or H) is constant throughout the process.

For a given thermodynamic process, one of the property ratios listed under the column labeled "known ratio or delta" must be specified, either directly or indirectly, to fix the extent of the process. Also, the property for which the ratio is known must be distinct from the property held constant in the previous column (otherwise the ratio would be unity, and not enough information would be available to simplify the gas law equation).

In the final three columns, the properties (p, V, or T) at state 2 can be calculated from the properties at state 1 using the equations listed.

| Process | Constant | Known ratio or delta | p2 | V2 | T2 |
|---|---|---|---|---|---|
| Isobaric process | Pressure | V2/V1 | p2 = p1 | V2 = V1(V2/V1) | T2 = T1(V2/V1) |
| | | T2/T1 | p2 = p1 | V2 = V1(T2/T1) | T2 = T1(T2/T1) |
| Isochoric process (Isovolumetric process) (Isometric process) | Volume | p2/p1 | p2 = p1(p2/p1) | V2 = V1 | T2 = T1(p2/p1) |
| | | T2/T1 | p2 = p1(T2/T1) | V2 = V1 | T2 = T1(T2/T1) |
| Isothermal process | Temperature | p2/p1 | p2 = p1(p2/p1) | V2 = V1/(p2/p1) | T2 = T1 |
| | | V2/V1 | p2 = p1/(V2/V1) | V2 = V1(V2/V1) | T2 = T1 |
| Isentropic process (Reversible adiabatic process) [a] | Entropy | p2/p1 | p2 = p1(p2/p1) | V2 = V1(p2/p1)^(−1/γ) | T2 = T1(p2/p1)^((γ − 1)/γ) |
| | | V2/V1 | p2 = p1(V2/V1)^(−γ) | V2 = V1(V2/V1) | T2 = T1(V2/V1)^(1 − γ) |
| | | T2/T1 | p2 = p1(T2/T1)^(γ/(γ − 1)) | V2 = V1(T2/T1)^(1/(1 − γ)) | T2 = T1(T2/T1) |
| Polytropic process | PV^n | p2/p1 | p2 = p1(p2/p1) | V2 = V1(p2/p1)^(−1/n) | T2 = T1(p2/p1)^((n − 1)/n) |
| | | V2/V1 | p2 = p1(V2/V1)^(−n) | V2 = V1(V2/V1) | T2 = T1(V2/V1)^(1 − n) |
| | | T2/T1 | p2 = p1(T2/T1)^(n/(n − 1)) | V2 = V1(T2/T1)^(1/(1 − n)) | T2 = T1(T2/T1) |
| Isenthalpic process (Irreversible adiabatic process) [b] | Enthalpy | p2 − p1 | p2 = p1 + (p2 − p1) | | T2 = T1 + μJT(p2 − p1) |
| | | T2 − T1 | p2 = p1 + (T2 − T1)/μJT | | T2 = T1 + (T2 − T1) |

^ a. In an isentropic process, system entropy (S) is constant. Under these conditions, p1V1^γ = p2V2^γ, where γ is defined as the heat capacity ratio, which is constant for a calorically perfect gas. The value used for γ is typically 1.4 for diatomic gases like nitrogen (N2) and oxygen (O2) (and air, which is 99% diatomic). For monatomic gases like the noble gases helium (He) and argon (Ar), γ is about 1.67 (5/3). In internal combustion engines, γ varies between 1.15 and 1.35, depending on the composition of the gases and the temperature.

^ b. In an isenthalpic process, system enthalpy (H) is constant. In the case of free expansion for an ideal gas, there are no molecular interactions, and the temperature remains constant. For real gases, the molecules do interact via attraction or repulsion depending on temperature and pressure, and heating or cooling does occur. This is known as the Joule–Thomson effect. For reference, the Joule–Thomson coefficient μJT for air at room temperature and sea level is 0.22 °C/bar.[7]
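The isentropic and isothermal rows of the table are special cases of the polytropic relation pVⁿ = const. The sketch below computes state 2 from a known pressure ratio r = p2/p1. Setting n = γ reproduces the isentropic row, and n = 1 gives the isothermal row. The function name is illustrative. A quick consistency check is that p2V2/T2 equals p1V1/T1 for any n, as the ideal gas law requires for a fixed amount of gas.

```python
def polytropic_state2(p1: float, V1: float, T1: float, r: float,
                      n: float) -> tuple[float, float, float]:
    """State 2 of a polytropic process p V**n = const, given r = p2 / p1.

    n = gamma gives the isentropic rows of the table; n = 1 gives the
    isothermal row (n = 0 would require r = 1, i.e. an isobaric process).
    """
    p2 = p1 * r
    V2 = V1 * r ** (-1.0 / n)
    T2 = T1 * r ** ((n - 1.0) / n)
    return p2, V2, T2


if __name__ == "__main__":
    # Air (gamma = 1.4) compressed isentropically to twice its pressure.
    print(polytropic_state2(101325.0, 1.0, 300.0, 2.0, 1.4))
```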

Deviations from ideal behavior of real gases

The equation of state given here (PV = nRT) applies only to an ideal gas, or as an approximation to a real gas that behaves sufficiently like an ideal gas. There are in fact many different forms of the equation of state. Since the ideal gas law neglects both molecular size and intermolecular attractions, it is most accurate for monatomic gases at high temperatures and low pressures. The neglect of molecular size becomes less important for lower densities, i.e. for larger volumes at lower pressures, because the average distance between adjacent molecules becomes much larger than the molecular size. The relative importance of intermolecular attractions diminishes with increasing thermal kinetic energy, i.e., with increasing temperatures. More detailed equations of state, such as the van der Waals equation, account for deviations from ideality caused by molecular size and intermolecular forces.

Derivations

Empirical

The empirical laws that led to the derivation of the ideal gas law were discovered with experiments that changed only 2 state variables of the gas and kept every other one constant.

All the possible gas laws that could have been discovered with this kind of setup are:

- (1) $PV = C_1$ or $P_1V_1 = P_2V_2$, known as Boyle's law

- (2) $\dfrac{V}{T} = C_2$ or $\dfrac{V_1}{T_1} = \dfrac{V_2}{T_2}$, known as Charles's law

- (3) $\dfrac{V}{N} = C_3$ or $\dfrac{V_1}{N_1} = \dfrac{V_2}{N_2}$, known as Avogadro's law

- (4) $\dfrac{P}{T} = C_4$ or $\dfrac{P_1}{T_1} = \dfrac{P_2}{T_2}$, known as Gay-Lussac's law

- (5) $NT = C_5$ or $N_1T_1 = N_2T_2$

- (6) $\dfrac{P}{N} = C_6$ or $\dfrac{P_1}{N_1} = \dfrac{P_2}{N_2}$

where P stands for pressure, V for volume, N for number of particles in the gas and T for temperature. The quantities C1 to C6 are not true constants. They are constant in this context because each equation holds only while the parameters explicitly noted in it are the only ones changing.

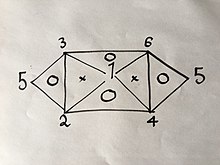

To derive the ideal gas law one does not need all six formulas. Knowing a suitable three is enough to derive the rest, and the direct derivation of the ideal gas law below uses four.

Each formula holds only when the state variables involved in it change while the others remain constant, so we cannot simply combine them all directly with algebra. For example, Boyle did his experiments while keeping N and T constant, and this must be taken into account.

Keeping this in mind, to carry out the derivation correctly, one must imagine the gas being altered by one process at a time. The derivation using four formulas can look like this:

At first the gas has parameters $P_1, V_1, N_1, T_1$.

Say, starting to change only pressure and volume, according to Boyle's law, then:

$$P_1V_1 = P_2V_2 \qquad (7)$$

After this process, the gas has parameters $P_2, V_2, N_1, T_1$.

Using then equation (5) to change the number of particles in the gas and the temperature,

$$N_1T_1 = N_2T_2 \qquad (8)$$

After this process, the gas has parameters $P_2, V_2, N_2, T_2$.

Using then equation (6) to change the pressure and the number of particles,

$$\frac{P_2}{N_2} = \frac{P_3}{N_3} \qquad (9)$$

After this process, the gas has parameters $P_3, V_2, N_3, T_2$.

Using then Charles's law to change the volume and temperature of the gas,

$$\frac{V_2}{T_2} = \frac{V_3}{T_3} \qquad (10)$$

After this process, the gas has parameters $P_3, V_3, N_3, T_3$.

Using simple algebra on equations (7), (8), (9) and (10) yields the result:

$$\frac{P_1V_1}{N_1T_1} = \frac{P_3V_3}{N_3T_3} = \text{constant}$$

or

$$\frac{PV}{NT} = k_B$$

where $k_B$ stands for Boltzmann's constant.

Another equivalent result, using the fact that $nR = Nk_B$, where n is the number of moles in the gas and R is the universal gas constant, is:

$$PV = nRT$$

which is known as the ideal gas law.
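The four-step argument can be checked numerically. Start from any state that obeys PV = NkBT, apply steps (7) to (10) with arbitrary intermediate choices, and confirm that PV/(NT) is unchanged. The function and argument names below are hypothetical.

```python
K_B = 1.380649e-23  # Boltzmann constant, J/K


def apply_steps(P1: float, V1: float, N1: float, T1: float,
                V2: float, T2: float, N3: float, T3: float) -> tuple[float, float, float, float]:
    """Apply steps (7)-(10); V2, T2, N3 and T3 are free choices (hypothetical helper)."""
    P2 = P1 * V1 / V2  # (7) Boyle: P and V change, N and T fixed
    N2 = N1 * T1 / T2  # (8) eq. (5): N and T change, P and V fixed
    P3 = P2 * N3 / N2  # (9) eq. (6): P and N change, V and T fixed
    V3 = V2 * T3 / T2  # (10) Charles: V and T change, P and N fixed
    return P3, V3, N3, T3


if __name__ == "__main__":
    N1, T1, V1 = 1.0e22, 300.0, 1.0e-3
    P1 = N1 * K_B * T1 / V1  # start from a state obeying PV = N kB T
    P3, V3, N3, T3 = apply_steps(P1, V1, N1, T1, V2=2.0e-3, T2=350.0, N3=3.0e22, T3=280.0)
    print(P1 * V1 / (N1 * T1), P3 * V3 / (N3 * T3))  # both approximately kB
```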

If three of the six equations are known, it may be possible to derive the remaining three using the same method. However, because each formula has two variables, this is possible only for certain groups of three. For example, if you were to have equations (1), (2) and (4) you would not be able to get any more because combining any two of them will only give you the third. However, if you had equations (1), (2) and (3) you would be able to get all six equations because combining (1) and (2) will yield (4), then (1) and (3) will yield (6), then (4) and (6) will yield (5), as well as would the combination of (2) and (3) as is explained in the following visual relation:

where the numbers represent the gas laws numbered above.

If the same method used above is applied to two of the three laws at the vertices of a triangle that has an "O" inside it, the third law follows.

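For example:

Change only pressure and volume first:

$$P_1V_1 = P_2V_2 \qquad (1')$$

then only volume and temperature:

$$\frac{V_2}{T_1} = \frac{V_3}{T_2} \qquad (2')$$

then, as we can choose any value for $V_3$, if we set $V_3 = V_1$, equation (2') becomes:

$$\frac{V_2}{T_1} = \frac{V_1}{T_2} \qquad (3')$$

Combining equations (1') and (3') yields $\dfrac{P_1}{T_1} = \dfrac{P_2}{T_2}$, which is equation (4), of which we had no prior knowledge until this derivation.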

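Theoretical

Kinetic theory

The ideal gas law can also be derived from first principles using the kinetic theory of gases, in which several simplifying assumptions are made, chief among which are that the molecules, or atoms, of the gas are point masses, possessing mass but no significant volume, and undergo only elastic collisions with each other and the sides of the container in which both linear momentum and kinetic energy are conserved.

The fundamental assumptions of the kinetic theory of gases imply that

$$PV = \frac{1}{3}Nmv_\text{rms}^2$$

Using the Maxwell–Boltzmann distribution, the fraction of molecules that have a speed in the range $v$ to $v + dv$ is $f(v)\,dv$, where

$$f(v) = 4\pi\left(\frac{m}{2\pi k_BT}\right)^{3/2} v^2\, e^{-\frac{mv^2}{2k_BT}}$$

and $k_B$ denotes the Boltzmann constant. The root-mean-square speed can be calculated by

$$v_\text{rms}^2 = \int_0^\infty v^2 f(v)\,dv = 4\pi\left(\frac{m}{2\pi k_BT}\right)^{3/2}\int_0^\infty v^4 e^{-\frac{mv^2}{2k_BT}}\,dv$$

Using the integration formula

$$\int_0^\infty x^{2n} e^{-x^2/a^2}\,dx = \sqrt{\pi}\,\frac{(2n)!}{n!}\left(\frac{a}{2}\right)^{2n+1}, \qquad n \in \mathbb{N},\ a \in \mathbb{R}^+$$

it follows that

$$v_\text{rms}^2 = \frac{3k_BT}{m}$$

from which we get the ideal gas law:

$$PV = \frac{1}{3}Nm\left(\frac{3k_BT}{m}\right) = Nk_BT$$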
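The integral for the root-mean-square speed can also be checked numerically. This sketch integrates v²f(v) with the trapezoidal rule and compares the result with 3kBT/m. The nitrogen molecular mass (28 u), the temperature and the cutoff speed are assumptions chosen for illustration.

```python
import math

K_B = 1.380649e-23       # Boltzmann constant, J/K
M_U = 1.66053906660e-27  # atomic mass constant, kg


def maxwell_boltzmann(v: float, m: float, T: float) -> float:
    """Speed distribution f(v) for particles of mass m at temperature T."""
    a = m / (2.0 * math.pi * K_B * T)
    return 4.0 * math.pi * a**1.5 * v * v * math.exp(-m * v * v / (2.0 * K_B * T))


def mean_square_speed(m: float, T: float, steps: int = 20_000) -> float:
    """Trapezoidal estimate of the integral of v^2 f(v) from 0 to a large cutoff."""
    v_max = 12.0 * math.sqrt(2.0 * K_B * T / m)  # integrand ~ exp(-144) here: negligible tail
    h = v_max / steps
    total = 0.0
    for i in range(steps + 1):
        v = i * h
        weight = 0.5 if i in (0, steps) else 1.0
        total += weight * v * v * maxwell_boltzmann(v, m, T)
    return total * h


if __name__ == "__main__":
    m, T = 28.0 * M_U, 300.0  # roughly an N2 molecule at room temperature
    print(math.sqrt(mean_square_speed(m, T)), math.sqrt(3.0 * K_B * T / m))
```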

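Statistical mechanics

Let q = (qx, qy, qz) and p = (px, py, pz) denote the position vector and momentum vector of a particle of an ideal gas, respectively. Let F denote the net force on that particle. Then the time average of q · F for the particle, which equals −2 times its time-averaged kinetic energy, is:

$$\begin{aligned}
\langle \mathbf{q}\cdot\mathbf{F}\rangle &= \Big\langle q_x\frac{dp_x}{dt}\Big\rangle + \Big\langle q_y\frac{dp_y}{dt}\Big\rangle + \Big\langle q_z\frac{dp_z}{dt}\Big\rangle \\
&= -\Big\langle q_x\frac{\partial H}{\partial q_x}\Big\rangle - \Big\langle q_y\frac{\partial H}{\partial q_y}\Big\rangle - \Big\langle q_z\frac{\partial H}{\partial q_z}\Big\rangle = -3k_BT
\end{aligned}$$

where the first equality is Newton's second law, and the second line uses Hamilton's equations and the equipartition theorem. Summing over a system of N particles yields

$$3Nk_BT = -\Big\langle \sum_{k=1}^{N} \mathbf{q}_k\cdot\mathbf{F}_k\Big\rangle$$

By Newton's third law and the ideal gas assumption, the net force of the system is the force applied by the walls of the container, and this force is given by the pressure P of the gas. Hence

$$-\Big\langle \sum_{k=1}^{N} \mathbf{q}_k\cdot\mathbf{F}_k\Big\rangle = P\oint_\text{surface}\mathbf{q}\cdot d\mathbf{S}$$

where dS is the infinitesimal area element along the walls of the container. Since the divergence of the position vector q is

$$\nabla\cdot\mathbf{q} = \frac{\partial q_x}{\partial q_x} + \frac{\partial q_y}{\partial q_y} + \frac{\partial q_z}{\partial q_z} = 3$$

the divergence theorem implies that

$$P\oint_\text{surface}\mathbf{q}\cdot d\mathbf{S} = P\int_\text{volume}\left(\nabla\cdot\mathbf{q}\right)dV = 3PV$$

where dV is an infinitesimal volume within the container and V is the total volume of the container.

Putting these equalities together yields

$$3Nk_BT = -\Big\langle \sum_{k=1}^{N} \mathbf{q}_k\cdot\mathbf{F}_k\Big\rangle = 3PV$$

which immediately implies the ideal gas law for N particles:

$$PV = Nk_BT = nRT$$

where n = N/NA is the number of moles of gas and R = NAkB is the gas constant.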

Other dimensions

For a d-dimensional system, the ideal gas pressure is:[8]

$$P^{(d)} = \frac{Nk_BT}{L^d}$$

where $L^d$ is the volume of the d-dimensional domain in which the gas exists. Note that the dimensions of the pressure change with dimensionality.

See also

- Boltzmann constant – Physical constant relating particle kinetic energy with temperature

- Configuration integral – Function in thermodynamics and statistical physics

- Dynamic pressure – Concept in fluid dynamics

- Gas laws

- Internal energy – Energy contained in a system, excluding energy due to its position as a body in external force fields or its overall motion

- Van der Waals equation – Gas equation of state which accounts for non-ideal gas behavior

https://en.wikipedia.org/wiki/Ideal_gas_law

A thermodynamic system is a body of matter and/or radiation, confined in space by walls, with defined permeabilities, which separate it from its surroundings. The surroundings may include other thermodynamic systems, or physical systems that are not thermodynamic systems. A wall of a thermodynamic system may be purely notional, when it is described as being 'permeable' to all matter, all radiation, and all forces. A thermodynamic system can be fully described by a definite set of thermodynamic state variables, which always covers both intensive and extensive properties.

A widely used distinction is between isolated, closed, and open thermodynamic systems.

An isolated thermodynamic system has walls that are non-conductive of heat and perfectly reflective of all radiation, that are rigid and immovable, and that are impermeable to all forms of matter and all forces. (Some writers use the word 'closed' when here the word 'isolated' is being used.)

A closed thermodynamic system is confined by walls that are impermeable to matter. By thermodynamic operations, these walls can alternately be made permeable ('diathermal') or impermeable ('adiabatic') to heat. For thermodynamic processes (initiated and terminated by thermodynamic operations), the walls can alternately be allowed or not allowed to move, with system volume change or agitation with internal friction in system contents, as in Joule's original demonstration of the mechanical equivalent of heat. They can also alternately be made rough or smooth, so as to allow or not allow heating of the system by friction on its surface.

An open thermodynamic system has at least one wall that separates it from another thermodynamic system, which for this purpose is counted as part of the surroundings of the open system, the wall being permeable to at least one chemical substance, as well as to radiation; such a wall, when the open system is in thermodynamic equilibrium, does not sustain a temperature difference across itself.

A thermodynamic system is subject to external interventions called thermodynamic operations; these alter the system's walls or its surroundings; as a result, the system undergoes transient thermodynamic processes according to the principles of thermodynamics. Such operations and processes effect changes in the thermodynamic state of the system.

When the intensive state variables of its content vary in space, a thermodynamic system can be considered as many systems contiguous with each other, each being a different thermodynamical system.

A thermodynamic system may comprise several phases, such as ice, liquid water, and water vapour, in mutual thermodynamic equilibrium, mutually unseparated by any wall. Or it may be homogeneous. Such systems may be regarded as 'simple'.

A 'compound' thermodynamic system may comprise several simple thermodynamic sub-systems, mutually separated by one or several walls of definite respective permeabilities. It is often convenient to consider such a compound system initially isolated in a state of thermodynamic equilibrium, then affected by a thermodynamic operation of increase of some inter-sub-system wall permeability, to initiate a transient thermodynamic process, so as to generate a final new state of thermodynamic equilibrium. This idea was used, and perhaps introduced, by Carathéodory. In a compound system, initially isolated in a state of thermodynamic equilibrium, a reduction of a wall permeability does not effect a thermodynamic process, nor a change of thermodynamic state. This difference expresses the Second Law of thermodynamics. It illustrates that increase in entropy measures increase in dispersal of energy, due to increase of accessibility of microstates.[1]

In equilibrium thermodynamics, the state of a thermodynamic system is a state of thermodynamic equilibrium, as opposed to a non-equilibrium state.

According to the permeabilities of the walls of a system, transfers of energy and matter occur between it and its surroundings, which are assumed to be unchanging over time, until a state of thermodynamic equilibrium is attained. The only states considered in equilibrium thermodynamics are equilibrium states. Classical thermodynamics includes (a) equilibrium thermodynamics; (b) systems considered in terms of cyclic sequences of processes rather than of states of the system; such were historically important in the conceptual development of the subject. Systems considered in terms of continuously persisting processes described by steady flows are important in engineering.

The very existence of thermodynamic equilibrium, defining states of thermodynamic systems, is the essential, characteristic, and most fundamental postulate of thermodynamics, though it is only rarely cited as a numbered law.[2][3][4] According to Bailyn, the commonly rehearsed statement of the zeroth law of thermodynamics is a consequence of this fundamental postulate.[5] In reality, practically nothing in nature is in strict thermodynamic equilibrium, but the postulate of thermodynamic equilibrium often provides very useful idealizations or approximations, both theoretically and experimentally; experiments can provide scenarios of practical thermodynamic equilibrium.

In equilibrium thermodynamics the state variables do not include fluxes, because in a state of thermodynamic equilibrium all fluxes have zero values by definition. Equilibrium thermodynamic processes may involve fluxes, but these must have ceased by the time a thermodynamic process or operation is complete, bringing a system to its eventual thermodynamic state. Non-equilibrium thermodynamics allows its state variables to include non-zero fluxes that describe transfers of mass or energy or entropy between a system and its surroundings.[6]

In 1824 Sadi Carnot described a thermodynamic system as the working substance (such as the volume of steam) of any heat engine under study.

https://en.wikipedia.org/wiki/Thermodynamic_system

In thermodynamics, a thermodynamic state of a system is its condition at a specific time; that is, fully identified by values of a suitable set of parameters known as state variables, state parameters or thermodynamic variables. Once such a set of values of thermodynamic variables has been specified for a system, the values of all thermodynamic properties of the system are uniquely determined. Usually, by default, a thermodynamic state is taken to be one of thermodynamic equilibrium. This means that the state is not merely the condition of the system at a specific time, but that the condition is the same, unchanging, over an indefinitely long duration of time.

Thermodynamics sets up an idealized conceptual structure that can be summarized by a formal scheme of definitions and postulates. Thermodynamic states are amongst the fundamental or primitive objects or notions of the scheme, for which their existence is primary and definitive, rather than being derived or constructed from other concepts.[1][2][3]

A thermodynamic system is not simply a physical system.[4] Rather, in general, infinitely many different alternative physical systems comprise a given thermodynamic system, because in general a physical system has vastly many more microscopic characteristics than are mentioned in a thermodynamic description. A thermodynamic system is a macroscopic object, the microscopic details of which are not explicitly considered in its thermodynamic description. The number of state variables required to specify the thermodynamic state depends on the system, and is not always known in advance of experiment; it is usually found from experimental evidence. The number is always two or more; usually it is not more than some dozen. Though the number of state variables is fixed by experiment, there remains choice of which of them to use for a particular convenient description; a given thermodynamic system may be alternatively identified by several different choices of the set of state variables. The choice is usually made on the basis of the walls and surroundings that are relevant for the thermodynamic processes that are to be considered for the system. For example, if it is intended to consider heat transfer for the system, then a wall of the system should be permeable to heat, and that wall should connect the system to a body, in the surroundings, that has a definite time-invariant temperature.[5][6]

For equilibrium thermodynamics, in a thermodynamic state of a system, its contents are in internal thermodynamic equilibrium, with zero flows of all quantities, both internal and between system and surroundings. For Planck, the primary characteristic of a thermodynamic state of a system that consists of a single phase, in the absence of an externally imposed force field, is spatial homogeneity.[7] For non-equilibrium thermodynamics, a suitable set of identifying state variables includes some macroscopic variables, for example a non-zero spatial gradient of temperature, that indicate departure from thermodynamic equilibrium. Such non-equilibrium identifying state variables indicate that some non-zero flow may be occurring within the system or between system and surroundings.[8]

https://en.wikipedia.org/wiki/Thermodynamic_state

Classical thermodynamics considers three main kinds of thermodynamic process: (1) changes in a system, (2) cycles in a system, and (3) flow processes.

(1) A change in a system is defined by a passage from an initial to a final state of thermodynamic equilibrium. In classical thermodynamics, the actual course of the process is not the primary concern, and often is ignored. A state of thermodynamic equilibrium endures unchangingly unless it is interrupted by a thermodynamic operation that initiates a thermodynamic process. Each equilibrium state is fully specified by a suitable set of thermodynamic state variables, which depend only on the current state of the system, not on the path taken by the processes that produce the state. In general, during the actual course of a thermodynamic process, the system may pass through physical states which are not describable as thermodynamic states, because they are far from internal thermodynamic equilibrium. Non-equilibrium thermodynamics, however, considers processes in which the states of the system are close to thermodynamic equilibrium, and aims to describe the continuous passage along the path, at definite rates of progress.

As a useful theoretical but not actually physically realizable limiting case, a process may be imagined to take place practically infinitely slowly or smoothly enough to allow it to be described by a continuous path of equilibrium thermodynamic states, when it is called a "quasi-static" process. This is a theoretical exercise in differential geometry, as opposed to a description of an actually possible physical process; in this idealized case, the calculation may be exact.
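As a numerical illustration of the quasi-static limit, the sketch below assumes one mole of an ideal gas expanding at a constant 300 K (hypothetical values chosen for illustration). It sums p ΔV over a finite number of small volume steps, using the equilibrium pressure p = nRT/V at each step, and compares the sum with the exact quasi-static result W = nRT ln(V2/V1), which the sum approaches as the steps shrink. The function names are illustrative only.

```python
import math

R = 8.314462618  # molar gas constant, J/(mol K)

def stepwise_work(n_mol, temp_k, v1, v2, steps):
    """Work done BY an ideal gas expanding isothermally from v1 to v2,
    approximated as `steps` small volume changes (midpoint rule for the
    integral of p dV). Each pressure is the equilibrium value p = nRT/V."""
    dv = (v2 - v1) / steps
    work = 0.0
    for i in range(steps):
        v_mid = v1 + (i + 0.5) * dv
        work += n_mol * R * temp_k / v_mid * dv
    return work

def quasi_static_work(n_mol, temp_k, v1, v2):
    # Limit of infinitely many infinitesimal steps: W = n R T ln(V2 / V1)
    return n_mol * R * temp_k * math.log(v2 / v1)

if __name__ == "__main__":
    exact = quasi_static_work(1.0, 300.0, 0.01, 0.02)
    for steps in (1, 10, 100, 1000):
        approx = stepwise_work(1.0, 300.0, 0.01, 0.02, steps)
        print(f"{steps:5d} steps: W = {approx:.3f} J (quasi-static limit {exact:.3f} J)")
```

The finite sums differ from the limit by an error that shrinks as the number of steps grows, which is the sense in which the idealized quasi-static calculation "may be exact".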

An actual, physically possible thermodynamic process, considered closely, involves friction. This contrasts with theoretically idealized, imagined, or limiting, but not actually possible, quasi-static processes, which may occur with a theoretical slowness that avoids friction. It also contrasts with idealized frictionless processes in the surroundings, which may be thought of as including 'purely mechanical systems'; this difference comes close to defining a thermodynamic process.[1]

(2) A cyclic process carries the system through a cycle of stages, starting and being completed in some particular state. The descriptions of the staged states of the system are not the primary concern. The primary concern is the sums of matter and energy inputs and outputs to the cycle. Cyclic processes were important conceptual devices in the early days of thermodynamical investigation, while the concept of the thermodynamic state variable was being developed.

(3) Defined by flows through a system, a flow process is a steady state of flows into and out of a vessel with definite wall properties. The internal state of the vessel contents is not the primary concern. The quantities of primary concern describe the states of the inflow and the outflow materials, and, on the side, the transfers of heat, work, and kinetic and potential energies for the vessel. Flow processes are of interest in engineering.
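For a vessel with one inlet and one outlet in steady flow, the bookkeeping described above is commonly written as the steady-flow energy equation, Q̇ − Ẇ = ṁ[(h_out − h_in) + (v_out² − v_in²)/2 + g(z_out − z_in)], where h is specific enthalpy, v is flow velocity and z is height. The sketch below assumes that standard textbook form (it is not stated in the text above) and solves it for the work rate delivered by the vessel; the function name and the turbine-like numbers in the usage lines are hypothetical.

```python
G = 9.80665  # standard gravity, m/s^2

def shaft_power(m_dot, q_dot, h_in, h_out, vel_in, vel_out, z_in=0.0, z_out=0.0):
    """Steady-flow energy balance for a single inlet and a single outlet.

    Returns the work rate delivered BY the vessel to the surroundings (W),
    given the heat rate q_dot INTO the vessel (W), the mass flow m_dot (kg/s),
    specific enthalpies h (J/kg), flow velocities (m/s) and heights (m).
    """
    # Change in enthalpy, kinetic and potential energy per kilogram of flow
    delta_e = (h_out - h_in) + 0.5 * (vel_out**2 - vel_in**2) + G * (z_out - z_in)
    return q_dot - m_dot * delta_e

if __name__ == "__main__":
    # Hypothetical adiabatic turbine: 2 kg/s, enthalpy drop of 500 kJ/kg
    print(shaft_power(2.0, 0.0, 3.2e6, 2.7e6, 50.0, 50.0), "W")
```

Only the states of the inflow and outflow appear in the balance; nothing about the internal state of the vessel contents is needed, matching the description of a flow process above.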

https://en.wikipedia.org/wiki/Thermodynamic_process

A thermodynamic cycle consists of a linked sequence of thermodynamic processes that involve transfer of heat and work into and out of the system, while varying pressure, temperature, and other state variables within the system, and that eventually returns the system to its initial state.[1] In the process of passing through a cycle, the working fluid (system) may convert heat from a warm source into useful work, and dispose of the remaining heat to a cold sink, thereby acting as a heat engine. Conversely, the cycle may be reversed and use work to move heat from a cold source and transfer it to a warm sink, thereby acting as a heat pump. If the system is in thermodynamic equilibrium at every point in the cycle, the cycle is reversible. Whether the cycle is carried out reversibly or irreversibly, the net entropy change of the system over a complete cycle is zero, because entropy is a state function.

During a closed cycle, the system returns to its original thermodynamic state of temperature and pressure. Process quantities (or path quantities), such as heat and work, are process dependent. For a cycle in which the system returns to its initial state, the first law of thermodynamics applies:

$$
\Delta E = E_{\text{in}} - E_{\text{out}} = 0
$$

The above states that there is no change in the energy of the system over the cycle. Ein might be the work and heat input during the cycle, and Eout the work and heat output during the cycle. The first law of thermodynamics also dictates that the net heat input is equal to the net work output over a cycle (heat input, Qin, is counted as positive and heat output, Qout, as negative). The repeating nature of the process path allows for continuous operation, making the cycle an important concept in thermodynamics. Thermodynamic cycles are often represented mathematically as quasistatic processes in the modeling of the workings of an actual device.
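The energy bookkeeping for a cycle can be checked on a simple example. This sketch assumes a monatomic ideal gas (so U = (3/2)nRT = (3/2)pV) taken quasi-statically around a hypothetical rectangular cycle on a p–V diagram. For each leg it computes the work done by the gas, the change in internal energy, and the heat from the first law, Q = ΔU + W. Over the closed cycle ΔU sums to zero and the net work equals Qin − Qout. The helper names are illustrative.

```python
from dataclasses import dataclass

@dataclass(frozen=True)
class State:
    p: float  # pressure, Pa
    v: float  # volume, m^3

def internal_energy(s):
    # Assumption: monatomic ideal gas, so U = (3/2) n R T = (3/2) p V
    return 1.5 * s.p * s.v

def leg(a, b):
    """Heat Q absorbed, work W done BY the gas, and change dU for a
    quasi-static straight-line path from a to b in the p-V plane."""
    w = 0.5 * (a.p + b.p) * (b.v - a.v)  # exact area under a straight line
    du = internal_energy(b) - internal_energy(a)
    q = du + w  # first law: Q = dU + W
    return q, w, du

def run_cycle(states):
    """Sum over the closed cycle states[0] -> states[1] -> ... -> states[0]."""
    q_in = q_out = w_net = du_net = 0.0
    for a, b in zip(states, states[1:] + states[:1]):
        q, w, du = leg(a, b)
        if q > 0:
            q_in += q
        else:
            q_out -= q  # heat rejected, stored as a positive magnitude
        w_net += w
        du_net += du
    return q_in, q_out, w_net, du_net

if __name__ == "__main__":
    # Hypothetical rectangular cycle: isobaric expansion, isochoric cooling,
    # isobaric compression, isochoric heating.
    cycle = [State(2e5, 1e-3), State(2e5, 3e-3), State(1e5, 3e-3), State(1e5, 1e-3)]
    q_in, q_out, w_net, du_net = run_cycle(cycle)
    print(f"Q_in={q_in:.1f} J  Q_out={q_out:.1f} J  W_net={w_net:.1f} J  dU={du_net:.2e} J")
    print(f"efficiency W_net/Q_in = {w_net / q_in:.3f}")
```

For these states the legs give Qin = 1150 J, Qout = 950 J and a net work output of 200 J, so the cycle acts as a heat engine. Listing the states in the reverse order flips every sign, so that net work is done on the gas instead.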

https://en.wikipedia.org/wiki/Thermodynamic_cycle

A state variable is one of the set of variables that are used to describe the mathematical "state" of a dynamical system. Intuitively, the state of a system describes enough about the system to determine its future behaviour in the absence of any external forces affecting the system. Models that consist of coupled first-order differential equations are said to be in state-variable form.[1]
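To make "state-variable form" concrete, here is a sketch for an undamped mass on a spring, m x'' = −k x, rewritten as two coupled first-order equations in the state variables position x and velocity v. The values k = m = 1 and the use of a classical fourth-order Runge–Kutta integrator are choices made for illustration, not taken from the text. Given only the initial state, the integration determines the whole future trajectory.

```python
import math

def spring_mass_rhs(state, k=1.0, m=1.0):
    """State equations for an undamped mass on a spring.

    The state is (x, v): position and velocity. The second-order law
    m x'' = -k x becomes two coupled first-order equations:
    x' = v and v' = -(k / m) x.
    """
    x, v = state
    return (v, -(k / m) * x)

def rk4_step(f, state, h):
    # One classical fourth-order Runge-Kutta step for a tuple-valued state
    k1 = f(state)
    k2 = f(tuple(s + 0.5 * h * d for s, d in zip(state, k1)))
    k3 = f(tuple(s + 0.5 * h * d for s, d in zip(state, k2)))
    k4 = f(tuple(s + h * d for s, d in zip(state, k3)))
    return tuple(s + h / 6.0 * (a + 2.0 * b + 2.0 * c + d)
                 for s, a, b, c, d in zip(state, k1, k2, k3, k4))

def simulate(state, t_end, dt=1e-3):
    # With no external force, the initial state fixes the whole future trajectory
    steps = max(1, round(t_end / dt))
    h = t_end / steps  # adjust the step so the run ends exactly at t_end
    for _ in range(steps):
        state = rk4_step(spring_mass_rhs, state, h)
    return state

if __name__ == "__main__":
    # For k = m = 1 the period is 2*pi, so the state should return to its start
    print(simulate((1.0, 0.0), 2.0 * math.pi))
    print(simulate((1.0, 0.0), math.pi / 2.0))  # close to (0, -1): x = cos t, v = -sin t
```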

Examples

- In mechanical systems, the position coordinates and velocities of mechanical parts are typical state variables; knowing these, it is possible to determine the future state of the objects in the system.

- In thermodynamics, a state variable is an independent variable of a state function like internal energy, enthalpy, and entropy. Examples include temperature, pressure, and volume. Heat and work are not state functions, but process functions.

- In electronic/electrical circuits, the voltages of the nodes and the currents through components in the circuit are usually the state variables. In any electrical circuit, the number of state variables is equal to the number of storage elements, which are inductors and capacitors. The state variable for an inductor is the current through the inductor, while that for a capacitor is the voltage across the capacitor.

- In ecosystem models, population sizes (or concentrations) of plants, animals and resources (nutrients, organic material) are typical state variables.

Control systems engineering

In control engineering and other areas of science and engineering, state variables are used to represent the states of a general system. The set of possible combinations of state variable values is called the state space of the system. The equations relating the current state of a system to its most recent input and past states are called the state equations, and the equations expressing the values of the output variables in terms of the state variables and inputs are called the output equations. As shown below, the state equations and output equations for a linear time-invariant system can be expressed using coefficient matrices A, B, C, and D:

$$
A \in \mathbb{R}^{N \times N}, \quad B \in \mathbb{R}^{N \times L}, \quad C \in \mathbb{R}^{M \times N}, \quad D \in \mathbb{R}^{M \times L}
$$

where N, L and M are the dimensions of the vectors describing the state, input and output, respectively.

Discrete-time systems

The state vector (vector of state variables) representing the current state of a discrete-time system (i.e. digital system) is x[n], where n is the discrete point in time at which the system is being evaluated. The discrete-time state equations are

$$
\mathbf{x}[n+1] = A\,\mathbf{x}[n] + B\,\mathbf{u}[n]
$$

which describe the next state of the system (x[n+1]) in terms of the current state x[n] and the inputs u[n] of the system. The output equations are

$$
\mathbf{y}[n] = C\,\mathbf{x}[n] + D\,\mathbf{u}[n]
$$

which describe the output y[n] in terms of the current state x[n] and the inputs u[n] to the system.
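The discrete-time equations x[n+1] = A x[n] + B u[n] and y[n] = C x[n] + D u[n] can be iterated directly. This plain-Python sketch (no numerical library assumed) also checks that A, B, C and D have the N×N, N×L, M×N and M×L shapes implied by the state, input and output dimensions. The example system, a discrete-time double integrator whose state is (position, velocity) and whose input adds to the velocity each step, is hypothetical, as are the helper names.

```python
def mat_vec(m, v):
    # Plain-Python matrix-vector product (m is a list of rows)
    return [sum(a * b for a, b in zip(row, v)) for row in m]

def check_dims(A, B, C, D):
    # Expect A: N x N, B: N x L, C: M x N, D: M x L (rows x columns)
    n, l, m = len(A), len(B[0]), len(C)
    ok = (all(len(r) == n for r in A) and len(B) == n
          and all(len(r) == l for r in B)
          and all(len(r) == n for r in C)
          and len(D) == m and all(len(r) == l for r in D))
    if not ok:
        raise ValueError("inconsistent A, B, C, D dimensions")
    return n, l, m

def simulate_discrete(A, B, C, D, x0, inputs):
    """Iterate x[n+1] = A x[n] + B u[n] and y[n] = C x[n] + D u[n].

    `inputs` is a sequence of input vectors u[0], u[1], ...; the function
    returns the matching outputs y[0], y[1], ...
    """
    check_dims(A, B, C, D)
    x = list(x0)
    outputs = []
    for u in inputs:
        outputs.append([p + q for p, q in zip(mat_vec(C, x), mat_vec(D, u))])
        x = [p + q for p, q in zip(mat_vec(A, x), mat_vec(B, u))]
    return outputs

if __name__ == "__main__":
    A = [[1, 1], [0, 1]]  # position += velocity; velocity carried over
    B = [[0], [1]]        # the input adds to the velocity
    C = [[1, 0]]          # the output is the position
    D = [[0]]
    ys = simulate_discrete(A, B, C, D, [0, 0], [[1]] * 5)
    print([y[0] for y in ys])  # [0, 0, 1, 3, 6]
```

With a constant unit input and a zero initial state, the output (position) follows n(n − 1)/2.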

Continuous-time systems

The state vector representing the current state of a continuous-time system (i.e. analog system) is x(t), and the continuous-time state equations giving the evolution of the state vector are

$$
\dot{\mathbf{x}}(t) = A\,\mathbf{x}(t) + B\,\mathbf{u}(t)
$$

which describe the continuous rate of change of the state of the system in terms of the current state x(t) and the inputs u(t) of the system. The output equations are

$$
\mathbf{y}(t) = C\,\mathbf{x}(t) + D\,\mathbf{u}(t)
$$

which describe the output y(t) in terms of the current state x(t) and the inputs u(t) to the system.
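A continuous-time model ẋ(t) = A x(t) + B u(t), y(t) = C x(t) + D u(t) can be simulated approximately by stepping forward in small increments of time. The sketch below uses the forward Euler method (a simple choice, not the only or most accurate one) and, as an assumed example, a series RLC circuit, whose two state variables are the inductor current and the capacitor voltage, one per storage element. Component values and helper names are hypothetical.

```python
def mat_vec(m, v):
    # Plain-Python matrix-vector product (m is a list of rows)
    return [sum(a * b for a, b in zip(row, v)) for row in m]

def simulate_continuous(A, B, C, D, x0, u, t_end, dt):
    """Forward-Euler approximation of dx/dt = A x + B u, y = C x + D u
    for a constant input vector u. Returns the final state and output."""
    x = list(x0)
    steps = max(1, round(t_end / dt))
    h = t_end / steps  # adjust the step so the run ends exactly at t_end
    for _ in range(steps):
        dxdt = [p + q for p, q in zip(mat_vec(A, x), mat_vec(B, u))]
        x = [xi + h * di for xi, di in zip(x, dxdt)]
    y = [p + q for p, q in zip(mat_vec(C, x), mat_vec(D, u))]
    return x, y

def series_rlc(r, l, c):
    """Hypothetical series RLC circuit driven by a source voltage u.

    State variables: inductor current i_L and capacitor voltage v_C.
    L di_L/dt = u - R i_L - v_C  and  C dv_C/dt = i_L; the output is v_C.
    """
    A = [[-r / l, -1.0 / l],
         [1.0 / c, 0.0]]
    B = [[1.0 / l], [0.0]]
    C = [[0.0, 1.0]]
    D = [[0.0]]
    return A, B, C, D

if __name__ == "__main__":
    A, B, C, D = series_rlc(1.0, 1.0, 1.0)  # 1 ohm, 1 H, 1 F (illustrative)
    x, y = simulate_continuous(A, B, C, D, [0.0, 0.0], [1.0], t_end=30.0, dt=1e-3)
    print("i_L =", x[0], "v_C =", y[0])
```

After a long time with a constant 1 V input, the capacitor voltage settles at the input voltage and the current decays toward zero. Rerunning with a smaller dt is a quick check that the step size is small enough.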

https://en.wikipedia.org/wiki/State_variable

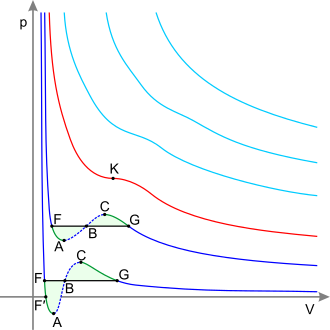

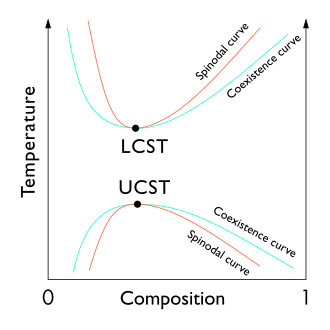

In thermodynamics, a critical point (or critical state) is the end point of a phase equilibrium curve. The most prominent example is the liquid–vapor critical point, the end point of the pressure–temperature curve that designates conditions under which a liquid and its vapor can coexist. At higher temperatures, the gas cannot be liquefied by pressure alone. At the critical point, defined by a critical temperature Tc and a critical pressure pc, phase boundaries vanish. Other examples include the liquid–liquid critical points in mixtures.

Liquid–vapor critical point

Overview

For simplicity and clarity, the generic notion of critical point is best introduced by discussing a specific example, the vapor-liquid critical point. This was the first critical point to be discovered, and it is still the best known and most studied one.

The figure to the right shows the schematic PT diagram of a pure substance (as opposed to mixtures, which have additional state variables and richer phase diagrams, discussed below). The commonly known phases solid, liquid and vapor are separated by phase boundaries, i.e. pressure–temperature combinations where two phases can coexist. At the triple point, all three phases can coexist. However, the liquid–vapor boundary terminates in an endpoint at some critical temperature Tc and critical pressure pc. This is the critical point.

In water, the critical point occurs at 647.096 K (373.946 °C; 705.103 °F) and 22.064 megapascals (3,200.1 psi; 217.75 atm).[2]
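The quoted unit conversions can be reproduced with a few lines of arithmetic. This sketch assumes the standard definitions T(°C) = T(K) − 273.15, T(°F) = T(°C) × 9/5 + 32, 1 atm = 101 325 Pa and 1 psi ≈ 6894.757 Pa; the function names are illustrative.

```python
PA_PER_PSI = 6894.757293168  # 1 lbf/in^2 in pascals (approximate)
PA_PER_ATM = 101325.0        # standard atmosphere, exact by definition

def kelvin_to_celsius(t_k):
    return t_k - 273.15

def celsius_to_fahrenheit(t_c):
    return t_c * 9.0 / 5.0 + 32.0

def mpa_to_psi(p_mpa):
    return p_mpa * 1e6 / PA_PER_PSI

def mpa_to_atm(p_mpa):
    return p_mpa * 1e6 / PA_PER_ATM

if __name__ == "__main__":
    # Critical point of water as quoted in the text
    t_c = kelvin_to_celsius(647.096)
    print(f"{t_c:.3f} °C, {celsius_to_fahrenheit(t_c):.3f} °F")
    print(f"{mpa_to_psi(22.064):.1f} psi, {mpa_to_atm(22.064):.2f} atm")
```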

In the vicinity of the critical point, the physical properties of the liquid and the vapor change dramatically, with both phases becoming ever more similar. For instance, liquid water under normal conditions is nearly incompressible, has a low thermal expansion coefficient, has a high dielectric constant, and is an excellent solvent for electrolytes. Near the critical point, all these properties change into the exact opposite: water becomes compressible, expandable, a poor dielectric, a bad solvent for electrolytes, and prefers to mix with nonpolar gases and organic molecules.[3]

At the critical point, only one phase exists. The heat of vaporization is zero. There is a stationary inflection point in the constant-temperature line (critical isotherm) on a PV diagram. This means that at the critical point:[4][5][6]

$$
\left(\frac{\partial p}{\partial V}\right)_T = 0, \qquad \left(\frac{\partial^2 p}{\partial V^2}\right)_T = 0
$$
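The stationary-inflection condition on the critical isotherm, (∂p/∂V)_T = 0 together with (∂²p/∂V²)_T = 0, can be checked on a model equation of state. The sketch below assumes the van der Waals equation for one mole, p = RT/(V − b) − a/V², in illustrative units with R = a = b = 1 rather than data for any real substance. Solving the two conditions together gives the closed forms V_c = 3b, T_c = 8a/(27Rb) and p_c = a/(27b²); the code confirms that both derivatives vanish there, and that an isotherm below T_c has a region where p rises with V (the van der Waals loop) while one above T_c does not. The function names are illustrative.

```python
R = 1.0      # illustrative units (assumption): R = a = b = 1
A_VDW = 1.0  # van der Waals attraction parameter a
B_VDW = 1.0  # van der Waals excluded-volume parameter b

def pressure(v, t, a=A_VDW, b=B_VDW):
    # van der Waals equation of state for one mole: p = R T / (V - b) - a / V^2
    return R * t / (v - b) - a / v**2

def dp_dv(v, t, a=A_VDW, b=B_VDW):
    # First derivative of p with respect to V at constant T
    return -R * t / (v - b)**2 + 2.0 * a / v**3

def d2p_dv2(v, t, a=A_VDW, b=B_VDW):
    # Second derivative of p with respect to V at constant T
    return 2.0 * R * t / (v - b)**3 - 6.0 * a / v**4

def critical_point(a=A_VDW, b=B_VDW):
    # Closed-form solution of dp/dV = 0 and d2p/dV2 = 0 for the van der Waals model
    v_c = 3.0 * b
    t_c = 8.0 * a / (27.0 * R * b)
    p_c = a / (27.0 * b**2)
    return v_c, t_c, p_c

def has_loop(t, a=A_VDW, b=B_VDW):
    # True if dp/dV > 0 somewhere on the isotherm for V between 1.001b and 21b
    return any(dp_dv(b * (1.0 + k / 1000.0), t, a, b) > 0 for k in range(1, 20000))

if __name__ == "__main__":
    v_c, t_c, p_c = critical_point()
    print("V_c, T_c, p_c:", v_c, t_c, p_c, "p(V_c, T_c):", pressure(v_c, t_c))
    print("derivatives at the critical point:", dp_dv(v_c, t_c), d2p_dv2(v_c, t_c))
    print("loop below T_c:", has_loop(0.9 * t_c), "loop above T_c:", has_loop(1.1 * t_c))
```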

Above the critical point there exists a state of matter that is continuously connected with (can be transformed without phase transition into) both the liquid and the gaseous state. It is called supercritical fluid. The common textbook knowledge that all distinction between liquid and vapor disappears beyond the critical point has been challenged by Fisher and Widom,[7] who identified a p–T line that separates states with different asymptotic statistical properties (Fisher–Widom line).