Energy price


The following articles relate to the price of energy:

https://en.wikipedia.org/wiki/Energy_price

- Miner, a person engaged in mining or digging

- Mining, extraction of mineral resources from the ground through a mine

Grammar

- Mine, a first-person English possessive pronoun

Military

- Anti-tank mine, a land mine made for use against armored vehicles

- Antipersonnel mine, a land mine targeting people walking around, either with explosives or poison gas

- Bangalore mine, colloquial name for the Bangalore torpedo, a man-portable explosive device for clearing a path through wire obstacles and land mines

- Cluster bomb, an aerial bomb which releases many small submunitions, which often act as mines

- Land mine, explosive mines placed under or on the ground

- Mining (military), digging under a fortified military position to penetrate its defenses

- Naval mine, or sea mine, a mine at sea, either floating or on the sea bed, often dropped via parachute from aircraft, or otherwise laid by surface ships or submarines

- Parachute mine, an air-dropped "sea mine" falling gently under a parachute, used as a high-capacity cheaply-cased large bomb against ground targets

Places

- The Mine, Queensland, a locality in the Rockhampton Region, Australia

- Mine, Saga, a Japanese town

- Mine, Yamaguchi, a Japanese city

- Mine District, Yamaguchi, a former district in the area of the city

People

Given name

- Mine Ercan (born 1978), Turkish women's wheelchair basketball player

- Mine Guri, Albanian communist politician

- Miné Okubo (1912–2001), American artist and writer

Nickname

- Mine Boy, nickname of Alex Levinsky (1910–1990), NHL hockey player

Surname

- Kazuki Mine (born 1993), Japanese football player

- George Ralph Mines (born 1886), English cardiac electrophysiologist

Arts, entertainment, and media

Films

- Mine (1985 film), a Turkish film

- Mine (2009 film), an American documentary film

- Mine (2016 film), an Italian-American film

- Abandoned Mine or The Mine, a 2013 horror film

- The Mine (1978 film), Turkish film

Literature

- Mine (novel), a 1990 novel by Robert R. McCammon

- The Mine (novel), 2012 novel by Arnab Ray

Music

Albums

- Mine (Kim Jaejoong EP), 2013

- Mine (Dolly Parton album), 1973

- Mine (Phoebe Ryan EP), 2015

- Mine (Li Yuchun album), 2007

- Mines (album), a 2010 album by indie rock band Menomena

- Mine!, a 1994 album released by musical duo Trout Fishing in America

Songs

- "Mine" (The 1975 song), 2018

- "Mine" (Bazzi song), 2017

- "Mine" (Beyoncé song), 2013

- "Mine" (Kelly Clarkson song), 2023

- "Mine" (Alice Glass song), 2018

- "Mine" (Taylor Swift song), 2010

- "Mine", a song from the 1933 Broadway musical Let 'Em Eat Cake

- "Mine", a song by Bebe Rexha from the album Expectations

- "Mine", a song by Dolly Parton from In the Good Old Days (When Times Were Bad)

- "Mine", a song by Everything but the Girl from Everything but the Girl

- "Mine", a song by Ghinzu from Blow

- "Mine", a song by Jason Webley from Only Just Beginning

- "Mine", a song by Krezip from Days Like This

- "Mine", a song by Mustasch from Mustasch

- "Mine", a song by Savage Garden from Savage Garden

- "Mine", a song by Sepultura from Roots

- "Mine", a song by Taproot from Welcome

- "Mine", a song by Christina Perri from lovestrong

- "Mine", a song by Disturbed from The Lost Children

- "Mine", a song by M.I from the album The Chairman

- "#Mine", a song by Lil' Kim from Lil Kim Season

- "Mine, Mine, Mine", a song from the soundtrack for the 1995 Disney film Pocahontas

Television

- Mine (2021 TV series), a South Korean television series

Organizations and enterprises

- MINE, a design office in San Francisco, US, of which Christopher Simmons is principal creative director

- Colorado School of Mines or "Mines", a university in Golden, Colorado, US

- Mine's, a Japanese auto tuning company

- South Dakota School of Mines and Technology, a university in Rapid City, South Dakota, US

Science and technology

- MINE (chemotherapy), a chemotherapy regimen

- Mine or star mine, a type of fireworks

- MinE, a bacterial protein

- Data mining, the computational process of discovering patterns in large data sets

- Leaf mine, a space in a leaf

- Mina (unit), or mine, an ancient Greek unit of mass

https://en.wikipedia.org/wiki/Mine

Transmutation of species and transformism are unproven 18th and 19th-century evolutionary ideas about the change of one species into another that preceded Charles Darwin's theory of natural selection.[1] The French Transformisme was a term used by Jean Baptiste Lamarck in 1809 for his theory, and other 18th and 19th century proponents of pre-Darwinian evolutionary ideas included Denis Diderot, Étienne Geoffroy Saint-Hilaire, Erasmus Darwin, Robert Grant, and Robert Chambers, the anonymous author of the book Vestiges of the Natural History of Creation. Opposition in the scientific community to these early theories of evolution, led by influential scientists like the anatomists Georges Cuvier and Richard Owen, and the geologist Charles Lyell, was intense. The debate over them was an important stage in the history of evolutionary thought and influenced the subsequent reaction to Darwin's theory.

Terminology

Transmutation was one of the names commonly used for evolutionary ideas in the 19th century before Charles Darwin published On The Origin of Species (1859). Transmutation had previously been used as a term in alchemy to describe the transformation of base metals into gold. Other names for evolutionary ideas used in this period include the development hypothesis (one of the terms used by Darwin) and the theory of regular gradation, used by William Chilton in the periodical press such as The Oracle of Reason.[2] Transformation is another word used quite as often as transmutation in this context. These early 19th century evolutionary ideas played an important role in the history of evolutionary thought.

The proto-evolutionary thinkers of the 18th and early 19th century had to invent terms to label their ideas, but Joseph Gottlieb Kölreuter was the first to use the term "transmutation" to refer to species that had undergone biological changes through hybridization.[3]

The terminology did not settle down until some time after the publication of the Origin of Species. The word "evolved" in a modern sense was first used in 1826 in an anonymous paper published in Robert Jameson's journal. "Evolution" was a relative latecomer: it can be seen in Herbert Spencer's Social Statics of 1851,[a] and in at least one earlier example, but it was not in general use until about 1865–70.

Historical development

In the 10th and 11th centuries, Ibn Miskawayh's Al-Fawz al-Kabir (الفوز الأكبر), and the Brethren of Purity's Encyclopedia of the Brethren of Purity (رسائل إخوان الصفا) developed ideas about changes in biological species.[5] In 1993, Muhammad Hamidullah described the ideas in lectures:

[These books] state that God first created matter and invested it with energy for development. Matter, therefore, adopted the form of vapour which assumed the shape of water in due time. The next stage of development was mineral life. Different kinds of stones developed in course of time. Their highest form being mirjan (coral). It is a stone which has in it branches like those of a tree. After mineral life evolves vegetation. The evolution of vegetation culminates with a tree which bears the qualities of an animal. This is the date-palm. It has male and female genders. It does not wither if all its branches are chopped but it dies when the head is cut off. The date-palm is therefore considered the highest among the trees and resembles the lowest among animals. Then is born the lowest of animals. It evolves into an ape. This is not the statement of Darwin. This is what Ibn Maskawayh states and this is precisely what is written in the Epistles of Ikhwan al-Safa. The Muslim thinkers state that ape then evolved into a lower kind of a barbarian man. He then became a superior human being. Man becomes a saint, a prophet. He evolves into a higher stage and becomes an angel. The one higher to angels is indeed none but God. Everything begins from Him and everything returns to Him.[6]

In the 14th century, Ibn Khaldun further developed these ideas. According to some commentators, statements in his 1377 work, the Muqaddimah, anticipate the biological theory of evolution.[7]

Robert Hooke proposed in a speech to the Royal Society in the late 17th century that species vary, change, and especially become extinct. His “Discourse of Earthquakes” was based on comparisons made between fossils, especially the modern pearly nautilus and the curled shells of ammonites.[8]

In the 18th century, Jacques-Antoine des Bureaux claimed a “genealogical ascent of species”. He argued that through crossbreeding and hybridization in reproduction, “progressive organization” occurred, allowing organisms to change and more complex species to develop.[9]

Simultaneously, Retif de la Bretonne wrote La decouverte australe par un homme-volant (1781) and La philosophie de monsieur Nicolas (1796), which encapsulated his view that more complex species, such as mankind, had developed step-by-step from “less perfect” animals. De la Bretonne believed that living forms undergo constant change. Although he believed in constant change, he took a very different approach than Diderot: chance and blind combinations of atoms, in de la Bretonne’s opinion, were not the cause of transmutation. De la Bretonne argued that all species had developed from more primitive organisms, and that nature aimed to reach perfection.[9]

Denis Diderot, chief editor of the Encyclopedia, spent his time poring over scientific theories that attempted to explain rock strata and the diversity of fossils. Geological and fossil evidence was presented to him as contributions to Encyclopedia articles, chief among them “Mammoth,” “Fossil,” and “Ivory Fossil,” all of which noted the existence of mammoth bones in Siberia. As a result of this geological and fossil evidence, Diderot believed that species were mutable. In particular, he argued that organisms metamorphosed over millennia, resulting in species changes. In Diderot’s theory of transformationism, random chance plays a large role in allowing species to change, develop and become extinct, as well as in allowing new species to form. Specifically, Diderot believed that given randomness and an infinite number of times, all possible scenarios would manifest themselves. He proposed that this randomness was behind the development of new traits in offspring and, as a result, the development and extinction of species.[8][10]

Diderot drew from Leonardo da Vinci’s comparison of the leg structure of a human and a horse as proof of the interconnectivity of species. He saw this experiment as demonstrating that nature could continually try out new variations. Additionally, Diderot argued that organic molecules and organic matter possessed an inherent consciousness, which allowed the smallest particles of organic matter to organize into fibers, then a network, and then organs. The idea that organic molecules have consciousness was derived from both Maupertuis and Lucretian texts. Overall, Diderot’s musings all fit together as a “composite transformist philosophy,” one dependent on the randomness inherent to nature as a transformist mechanism.[8][10]

Erasmus Darwin developed a theory of universal transformation. His major works, The Botanic Garden (1792), Zoonomia (1794–96), and The Temple of Nature all touched on the transformation of organic creatures. In both The Botanic Garden and The Temple of Nature, Darwin used poetry to describe his ideas regarding species. In Zoonomia, however, Erasmus clearly articulates (as a more scientific text) his beliefs about the connections between organic life. He notes particularly that some plants and animals have “useless appendages,” which have gradually changed from their original, useful states. Additionally, Darwin relied on cosmological transformation as a crucial aspect of his theory of transformation, making a connection between William Herschel’s approach to natural historical cosmology and the changing aspects of plants and animals.[10][11][12]

Erasmus believed that life had one origin, a common ancestor, which he referred to as the “filament” of life. He used his understanding of chemical transmutation to justify the spontaneous generation of this filament. His geological study of Derbyshire and the seashells and fossils which he found there helped him to come to the conclusion that complex life had developed from more primitive forms (Laniel-Musitelli). Erasmus was an early proponent of what we now refer to as “adaptations,” albeit through a different transformist mechanism – he argued that sexual reproduction could pass on acquired traits through the father’s contribution to the embryon. These changes, he believed, were mainly driven by the three great needs of life: lust, food, and security. Erasmus proposed that these acquired changes gradually altered the physical makeup of organisms as a result of the desires of plants and animals. Notably, he describes insects developing from plants, a grand example of one species transforming into another.[10][11][12]

Erasmus Darwin relied on Lucretian philosophy to form a theory of universal change. He proposed that both organic and inorganic matter changed throughout the course of the universe, and that plants and animals could pass on acquired traits to their progeny. His view of universal transformation placed time as a driving force in the universe’s journey towards improvement. In addition, Erasmus believed that nature had some amount of agency in this inheritance. Darwin spun his own story of how nature began to develop from the ocean, and then slowly became more diverse and more complex. His transmutation theory relied heavily on the needs which drove animal competition, as well as the results of this contest between both animals and plants.[10][11][12]

Charles Darwin acknowledged his grandfather’s contribution to the field of transmutation in his synopsis of Erasmus’ life, The Life of Erasmus Darwin. Darwin collaborated with Ernst Krause to write a foreword to Krause’s Erasmus Darwin und Seine Stellung in Der Geschichte Der Descendenz-Theorie, which translates as Erasmus Darwin and His Place in the History of the Descent Theory. Krause explains Erasmus’ motivations for arguing for the theory of descent, including his connection and correspondence with Rousseau, which may have influenced how he saw the world.[13]

Jean-Baptiste Lamarck proposed a hypothesis on the transmutation of species in Philosophie Zoologique (1809). Lamarck did not believe that all living things shared a common ancestor. Rather he believed that simple forms of life were created continuously by spontaneous generation. He also believed that an innate life force, which he sometimes described as a nervous fluid, drove species to become more complex over time, advancing up a linear ladder of complexity that was related to the great chain of being. Lamarck also recognized that species were adapted to their environment. He explained this observation by saying that the same nervous fluid driving increasing complexity, also caused the organs of an animal (or a plant) to change based on the use or disuse of that organ, just as muscles are affected by exercise. He argued that these changes would be inherited by the next generation and produce slow adaptation to the environment. It was this secondary mechanism of adaptation through the inheritance of acquired characteristics that became closely associated with his name and would influence discussions of evolution into the 20th century.[14][15]

The German Abraham Gottlob Werner believed in geological transformism. Specifically, Werner argued that the Earth undergoes irreversible and continuous change. The Edinburgh school, a radical British school of comparative anatomy, fostered a lot of debate around natural history.[16][17] Edinburgh, which included the surgeon Robert Knox and the anatomist Robert Grant, was closely in touch with Lamarck's school of French Transformationism, which contained scientists such as Étienne Geoffroy Saint-Hilaire. Grant developed Lamarck's and Erasmus Darwin's ideas of transmutation and evolutionism, investigating homology to prove common descent. As a young student Charles Darwin joined Grant in investigations of the life cycle of marine animals. He also studied geology under professor Robert Jameson whose journal published an anonymous paper in 1826 praising "Mr. Lamarck" for explaining how the higher animals had "evolved" from the "simplest worms" – this was the first use of the word "evolved" in a modern sense. Professor Jameson was a Wernerian, which allowed him to consider transformation theories and foster the interest in transformism among his students.[16][17] Jameson's course closed with lectures on the "Origin of the Species of Animals".[18][19]

The computing pioneer Charles Babbage published his unofficial Ninth Bridgewater Treatise in 1837, putting forward the thesis that God had the omnipotence and foresight to create as a divine legislator, making laws (or programs) which then produced species at the appropriate times, rather than continually interfering with ad hoc miracles each time a new species was required. In 1844 the Scottish publisher Robert Chambers anonymously published an influential and extremely controversial book of popular science entitled Vestiges of the Natural History of Creation. This book proposed an evolutionary scenario for the origins of the solar system and life on earth. It claimed that the fossil record showed an ascent of animals with current animals being branches off a main line that leads progressively to humanity. It implied that the transmutations led to the unfolding of a preordained orthogenetic plan woven into the laws that governed the universe. In this sense it was less completely materialistic than the ideas of radicals like Robert Grant, but its implication that humans were just the last step in the ascent of animal life incensed many conservative thinkers. Both conservatives like Adam Sedgwick, and radical materialists like Thomas Henry Huxley, who disliked Chambers' implications of preordained progress, were able to find scientific inaccuracies in the book that they could disparage. Darwin himself openly deplored the author's "poverty of intellect", and dismissed it as a "literary curiosity." However, the high profile of the public debate over Vestiges, with its depiction of evolution as a progressive process, and its popular success, would greatly influence the perception of Darwin's theory a decade later.[20][21][22] It also influenced some younger naturalists, including Alfred Russel Wallace, to take an interest in the idea of transmutation.[23]

Opposition to transmutation

Ideas about the transmutation of species were strongly associated with the radical materialism of the Enlightenment and were greeted with hostility by more conservative thinkers. Cuvier attacked the ideas of Lamarck and Geoffroy Saint-Hilaire, agreeing with Aristotle that species were immutable. Cuvier believed that the individual parts of an animal were too closely correlated with one another to allow for one part of the anatomy to change in isolation from the others, and argued that the fossil record showed patterns of catastrophic extinctions followed by re-population, rather than gradual change over time. He also noted that drawings of animals and animal mummies from Egypt, which were thousands of years old, showed no signs of change when compared with modern animals. The strength of Cuvier's arguments and his reputation as a leading scientist helped keep transmutational ideas out of the scientific mainstream for decades.[24]

In Britain, where the philosophy of natural theology remained influential, William Paley wrote the book Natural Theology with its famous watchmaker analogy, at least in part as a response to the transmutational ideas of Erasmus Darwin.[25] Geologists influenced by natural theology, such as Buckland and Sedgwick, made a regular practice of attacking the evolutionary ideas of Lamarck and Grant, and Sedgwick wrote a famously harsh review of The Vestiges of the Natural History of Creation.[26][27] Although the geologist Charles Lyell opposed scriptural geology, he also believed in the immutability of species, and in his Principles of Geology (1830–1833) he criticized and dismissed Lamarck's theories of development. Instead, he advocated a form of progressive creation, in which each species had its "centre of creation" and was designed for this particular habitat, but would go extinct when this habitat changed.[19]

Another source of opposition to transmutation was a school of naturalists who were influenced by the German philosophers and naturalists associated with idealism, such as Goethe, Hegel and Lorenz Oken. Idealists such as Louis Agassiz and Richard Owen believed that each species was fixed and unchangeable because it represented an idea in the mind of the creator. They believed that relationships between species could be discerned from developmental patterns in embryology, as well as in the fossil record, but that these relationships represented an underlying pattern of divine thought, with progressive creation leading to increasing complexity and culminating in humanity. Owen developed the idea of "archetypes" in the divine mind that would produce a sequence of species related by anatomical homologies, such as vertebrate limbs. Owen was concerned by the political implications of the ideas of transmutationists like Robert Grant, and he led a public campaign by conservatives that successfully marginalized Grant in the scientific community. In his famous 1841 paper, which coined the term dinosaur for the giant reptiles discovered by Buckland and Gideon Mantell, Owen argued that these reptiles contradicted the transmutational ideas of Lamarck because they were more sophisticated than the reptiles of the modern world. Darwin would make good use of the homologies analyzed by Owen in his own theory, but the harsh treatment of Grant, along with the controversy surrounding Vestiges, would be factors in his decision to ensure that his theory was fully supported by facts and arguments before publishing his ideas.[19][28][29]

Notes

- There are three examples of the word 'evolution' in Social Statics, but none in the sense that is used today in biology.[4]

References

- (van Wyhe 2007, pp. 181–182)

Bibliography

- Bowler, Peter J. (2003). Evolution: The History of an Idea. University of California Press. ISBN 0-520-23693-9.

- Desmond, Adrian; Moore, James (1994). Darwin: The Life of a Tormented Evolutionist. W. W. Norton & Company. ISBN 0-393-31150-3.

- Bowler, Peter J.; Morus, Iwan Rhys (2005). Making Modern Science. The University of Chicago Press. ISBN 0-226-06861-7.

- Larson, Edward J. (2004). Evolution: The Remarkable History of Scientific Theory. Modern Library. ISBN 0-679-64288-9.

- Secord, James A. (2000). Victorian sensation: the extraordinary publication, reception, and secret authorship of the Vestiges of the Natural History of Creation. Chicago.

- van Wyhe, John (27 March 2007). "Mind the gap: Did Darwin avoid publishing his theory for many years?" (PDF). Notes and Records of the Royal Society. 61 (2): 177–205. doi:10.1098/rsnr.2006.0171. S2CID 202574857.

https://en.wikipedia.org/wiki/Transmutation_of_species

A transborder agglomeration is an urban agglomeration or conurbation that extends into multiple sovereign states and/or dependent territories. It includes city-states that agglomerate with their neighbouring countries.

List of transborder agglomerations

Africa

| Main municipalities | Countries | Total population |

|---|---|---|

| Kinshasa–Brazzaville | Democratic Republic of the Congo–Republic of the Congo | 19,547,463 |

| Bukavu–Cyangugu | Democratic Republic of the Congo–Rwanda | ~1.2 million[1][2] |

| Goma–Gisenyi | Democratic Republic of the Congo–Rwanda | 756,323 |

| Lomé–Aflao | Togo–Ghana | 1,544,206 |

| N'djamena–Kousséri | Chad–Cameroon | 1,694,819 |

Asia

| Main municipalities | Countries | Total population |

|---|---|---|

| Astara–Astara | Iran–Azerbaijan | 100,000 |

| Basra–Khorramshahr–Abadan | Iraq–Iran | 2,000,000 |

| Blagoveshchensk–Heihe | Russia–China | 400,000 |

| Dandong–Sinuiju | China–North Korea | 1,000,000 |

| Dhahran–Jubail–Manama | Saudi Arabia–Bahrain | 2,000,000 |

| Fergana–Kyzyl-Kiya | Uzbekistan–Kyrgyzstan | 300,000 |

| Sijori Growth Triangle (Singapore–Johor Bahru–Batam–Bintan) | Singapore–Malaysia–Indonesia | 9,000,000 |

| Greater Bay Area (Guangzhou–Dongguan–Shenzhen–Hong Kong–Macau) | China–Hong Kong–Macau | 71,200,000 |

| Kara-Suu–Qorasuv | Kyrgyzstan–Uzbekistan | 40,000 |

| Lahore–Amritsar | Pakistan–India | 12,000,000 |

| Mukdahan–Savannakhet | Thailand–Laos | 200,000 |

| Padang Besar–Padang Besar | Malaysia–Thailand | 20,000 |

| Tashkent–Saryagash | Uzbekistan–Kazakhstan | 3,000,000[3] |

| Vientiane–Nong Khai | Laos–Thailand | 900,000 |

Europe

North America

| Main municipalities | Countries | Total population |

|---|---|---|

| Paso Canoas | Costa Rica–Panama | |

| Metro Vancouver–Fraser Valley–Bellingham | Canada–United States | 3,050,000 |

| Detroit–Windsor | United States–Canada | 5,976,595 |

| Buffalo-Niagara Region | United States–Canada | 1,614,790 |

| Port Huron–Sarnia–Point Edward | United States–Canada | 105,776 |

| Sault Ste. Marie–Sault Ste. Marie | United States–Canada | 93,944 |

| San Diego–Tijuana | United States–Mexico | 5,105,769[6] |

| Mexicali–Calexico | Mexico–United States | 1,143,000 |

| San Luis Río Colorado–San Luis | Mexico–United States | 227,000 |

| Nogales–Nogales | United States–Mexico | 240,000 |

| El Paso–Juárez | United States–Mexico | 2,500,000[7] |

| Laredo–Nuevo Laredo | United States–Mexico | 775,481[8] |

| Reynosa–McAllen | Mexico–United States | 1,500,000[9] |

| Brownsville–Matamoros | United States–Mexico | 1,136,995[10] |

South America

| Main municipalities | Countries | Total population |

|---|---|---|

| Saint-Laurent-du-Maroni–Albina | French Guiana (France)–Suriname | |

| Saint Georges–Oiapoque | French Guiana (France)–Brazil | 32,000 |

| Santana do Livramento–Rivera | Brazil–Uruguay | 140,000 |

| Chuí–Chuy | Brazil–Uruguay | 15,592[11][12] |

| Corumbá–Puerto Suárez | Brazil–Bolivia | 124,000 |

| Leticia–Tabatinga–Santa Rosa | Colombia–Brazil–Peru | 107,000 |

| Ponta Porã–Pedro Juan Caballero | Brazil–Paraguay | 209,000 |

| Foz do Iguaçu–Ciudad del Este–Puerto Iguazu | Brazil–Paraguay–Argentina | 800,000 |

| Bernardo de Irigoyen–Barracão–Dionísio Cerqueira | Argentina–Brazil | 36,000 |

| Uruguaiana–Paso de los Libres | Brazil–Argentina | 170,000 |

| Cúcuta–San Antonio del Táchira | Colombia–Venezuela | 700,000[13] |

References

- http://www.lapatilla.com/site/2016/08/15/cucuta-y-san-antonio-dos-ciudades-unidas-pero-separadas-por-cierre-frontera/ "...que une ambas ciudades formando una conurbación, los 650.000 habitantes de Cúcuta y los 50.000 de San Antonio supieron que comenzaban tiempos difíciles."

https://en.wikipedia.org/wiki/Transborder_agglomeration

Transport Layer Security (TLS) is a cryptographic protocol designed to provide communications security over a computer network. The protocol is widely used in applications such as email, instant messaging, and voice over IP, but its use in securing HTTPS remains the most publicly visible.

The TLS protocol aims primarily to provide security, including privacy (confidentiality), integrity, and authenticity through the use of cryptography, such as the use of certificates, between two or more communicating computer applications. It runs in the presentation layer and is itself composed of two layers: the TLS record and the TLS handshake protocols.

The closely related Datagram Transport Layer Security (DTLS) is a communications protocol that provides security to datagram-based applications. In technical writing, references to "(D)TLS" are often seen when it applies to both versions.[1]

TLS is a proposed Internet Engineering Task Force (IETF) standard, first defined in 1999, and the current version is TLS 1.3, defined in August 2018. TLS builds on the now-deprecated SSL (Secure Sockets Layer) specifications (1994, 1995, 1996) developed by Netscape Communications for adding the HTTPS protocol to their Navigator web browser.
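As a rough illustration of how an application might opt in to TLS while refusing the deprecated SSL versions, the following sketch uses Python's standard `ssl` module. The helper name `make_client_context` and the choice of TLS 1.2 as the floor are assumptions made for this example, not part of the protocol description above.

```python
import ssl


def make_client_context() -> ssl.SSLContext:
    """Build a client-side TLS context (hypothetical helper for illustration)."""
    # create_default_context() turns on certificate verification and
    # hostname checking for client connections.
    context = ssl.create_default_context()
    # Assumption for this sketch: reject SSL and TLS versions older than 1.2.
    context.minimum_version = ssl.TLSVersion.TLSv1_2
    return context


context = make_client_context()
print(context.minimum_version.name)
print(context.verify_mode == ssl.CERT_REQUIRED, context.check_hostname)
```

A context built this way would typically be applied to a connected socket with `context.wrap_socket(sock, server_hostname=host)`, at which point the handshake negotiates the highest protocol version that both sides support.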

https://en.wikipedia.org/wiki/Transport_Layer_Security

The Dynamic Host Configuration Protocol version 6 (DHCPv6) is a network protocol for configuring Internet Protocol version 6 (IPv6) hosts with IP addresses, IP prefixes, default route, local segment MTU, and other configuration data required to operate in an IPv6 network. It is not just the IPv6 equivalent of the Dynamic Host Configuration Protocol for IPv4.

IPv6 hosts may automatically generate IP addresses internally using stateless address autoconfiguration (SLAAC), or they may be assigned configuration data with DHCPv6.

IPv6 hosts that use stateless autoconfiguration may require information other than an IP address or route. DHCPv6 can be used to acquire this information, even though it is not being used to configure IP addresses. DHCPv6 is not necessary for configuring hosts with the addresses of Domain Name System (DNS) servers, because they can be configured using Neighbor Discovery Protocol, which is also the mechanism for stateless autoconfiguration.[1]

Many IPv6 routers, such as routers for residential networks, must be configured automatically with no operator intervention. Such routers require not only an IPv6 address for use in communicating with upstream routers, but also an IPv6 prefix for use in configuring devices on the downstream side of the router. DHCPv6 prefix delegation provides a mechanism for configuring such routers.
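The address arithmetic behind prefix delegation can be sketched with Python's standard `ipaddress` module. The function name `downstream_subnets`, the documentation prefix `2001:db8:0:100::/56`, and the decision to hand out /64 networks are all assumptions for illustration; a real router would receive its prefix from the DHCPv6 server rather than from a literal string.

```python
import ipaddress
from itertools import islice


def downstream_subnets(delegated_prefix: str, count: int) -> list:
    """Return the first `count` /64 networks carved from a delegated prefix.

    Hypothetical helper: it only shows how a router could split a delegated
    prefix among its downstream links.
    """
    network = ipaddress.IPv6Network(delegated_prefix)
    if network.prefixlen > 64:
        raise ValueError("delegated prefix is longer than /64")
    # subnets() is a lazy generator, so only `count` networks are built.
    return list(islice(network.subnets(new_prefix=64), count))


# Example with a documentation prefix (assumed, not from a real delegation).
lan_networks = downstream_subnets("2001:db8:0:100::/56", 3)
for net in lan_networks:
    print(net)
```

Each resulting /64 could then be advertised on one downstream interface, where hosts may configure themselves with SLAAC or stateful DHCPv6.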

https://en.wikipedia.org/wiki/DHCPv6

Transfer genes or tra genes (also transfer operons or tra operons) are genes necessary for non-sexual transfer of genetic material in both gram-positive and gram-negative bacteria. The tra locus includes the pilin gene and regulatory genes, which together form pili on the cell surface, polymeric proteins that can attach themselves to the surface of F− bacteria and initiate conjugation. The existence of the tra region of a plasmid genome was first discovered in 1979 by David H. Figurski and Donald R. Helinski.[1] In the course of their work, Figurski and Helinski also discovered a second key fact about the tra region – that it can act in trans to the mobilization marker which it affects.[1]

This finding suggested that there were two basic aspects necessary for a plasmid to move from one cell to another:

- An origin of transfer – A plasmid with no origin of transfer is non-mobilizable.[2]

- The transfer genes – Though a functioning set of tra genes is necessary for plasmid transfer, they may be located in a variety of places including the plasmid in question, another plasmid in the same host cell, or even in the bacterial genome.[3]

The tra genes encode proteins which are useful for the propagation of the plasmid from the host cell to a compatible recipient cell or for maintenance of the plasmid. Not all transfer operons are the same. Some genes are only found in a few species or a single genus of bacteria while others (such as traL) are found in very similar forms in many bacterial species. Many of the transfer systems are incompatible. For example, oriT and bom are two origins of transfer which interact with different sets of transfer genes. A plasmid with a mob site (like many found in Rhodococcus species) cannot be transferred via transfer genes which normally interact with the oriT site (which is common in E. coli).[3]

The roles of some tra-gene encoded proteins:[4]

| Role | Genes |
|---|---|
| Pili Assembly and Production | traA, traB, traE, traC, traF, traG, traH, traK, traL, traQ, traU, traV, traW |
| Inner Membrane Proteins | traB, traE, traG, traL, traP |
| Periplasmic Proteins | traC, traF, traH, traK, traU, traW |
| DNA transfer | traC, traD, traI, traM, traY |
| Surface Exclusion Proteins | traS, traT |
| Mating Pair Stabilization | traN, traG |

Each of the individual genes in the tra operon codes for a different protein product. These products may perform a number of tasks, including interaction with one another to perform mating pair functions and regulation of different regions of the tra operon itself,[5] or conjugative DNA metabolism and surface exclusion.[4] Some proteins perform multiple functions or are associated closely with proteins which have dissimilar functions.

References

- Grohmann E, Muth G, Espinosa M (2003). "Conjugative Plasmid Transfer in Gram-Positive Bacteria". Microbiology and Molecular Biology Reviews. 67 (2): 277–301. doi:10.1128/MMBR.67.2.277-301.2003. PMC 156469. PMID 12794193.

https://en.wikipedia.org/wiki/Transfer_gene

Pridnestrovian Moldavian Republic

| | |
|---|---|
| Anthem | Мы славим тебя, Приднестровье (My slavim tebya, Pridnestrovie), "We Sing the Praises of Transnistria"[2] |
| Status | Unrecognised state |
| Capital and largest city | Tiraspol 46°50′25″N 29°38′36″E |
| Official languages | |
| Interethnic language | Russian[3][4][5] |
| Ethnic groups (2015) | |
| Demonym(s) | |
| Government | Unitary presidential republic |
| Vadim Krasnoselsky | |
| Aleksandr Rozenberg | |
| Alexander Korshunov | |
| Legislature | Supreme Council |
| Unrecognised state | |
| • Independence from SSR of Moldova declared | 2 September 1990 |
| • Independence from Soviet Union declared | 25 August 1991 |
| • Succeeds the Pridnestrovian Moldavian Soviet Socialist Republic | 5 November 1991[6] |
| 2 March – 1 July 1992 | |
| Area | |
| • Total | 4,163 km2 (1,607 sq mi) |
| • Water (%) | 2.35 |
| Population | |
| • 31 December 2022 estimate | |
| • 2015 census | |
| • Density | 73.5/km2 (190.4/sq mi) |
| GDP (nominal) | 2021 estimate |
| • Total | $1.201 billion[9] |
| • Per capita | $2,584 |
| Currency | Transnistrian ruble (PRB) |
| Time zone | UTC+2 (EET) |
| • Summer (DST) | UTC+3 (EEST) |
| Calling code | +373[a] |

Transnistria, officially the Pridnestrovian Moldavian Republic (PMR),[c] is an unrecognised breakaway state that is internationally recognised as a part of Moldova. Transnistria controls most of the narrow strip of land between the Dniester river and the Moldovan–Ukrainian border, as well as some land on the other side of the river's bank. Its capital and largest city is Tiraspol. Transnistria has been recognised only by three other unrecognised or partially recognised breakaway states: Abkhazia, Artsakh and South Ossetia.[10] Transnistria is officially designated by the Republic of Moldova as the Administrative-Territorial Units of the Left Bank of the Dniester (Romanian: Unitățile Administrativ-Teritoriale din stînga Nistrului)[11] or as Stînga Nistrului ("Left Bank of the Dniester").[12][13][14] In March 2022, the Parliamentary Assembly of the Council of Europe adopted a resolution that defines the territory as under military occupation by Russia.[15]

The region's origins can be traced to the Moldavian Autonomous Soviet Socialist Republic, which was formed in 1924 within the Ukrainian SSR. During World War II, the Soviet Union took parts of the Moldavian ASSR, which was dissolved, and of the Kingdom of Romania's Bessarabia to form the Moldavian Soviet Socialist Republic in 1940. The present history of the region dates to 1990, during the dissolution of the Soviet Union, when the Pridnestrovian Moldavian Soviet Socialist Republic was established in hopes that it would remain within the Soviet Union should Moldova seek unification with Romania or independence, the latter occurring in August 1991. Shortly afterwards, a military conflict between the two parties started in March 1992 and concluded with a ceasefire in July that year.

As part of the ceasefire agreement, a three-party (Russia, Moldova, Transnistria) Joint Control Commission supervises the security arrangements in the demilitarised zone, comprising 20 localities on both sides of the river.[citation needed] Although the ceasefire has held, the territory's political status remains unresolved: Transnistria is an unrecognised but de facto independent presidential republic[16] with its own government, parliament, military, police, postal system, currency, and vehicle registration.[17][18][19][20] Its authorities have adopted a constitution, flag, national anthem, and coat of arms. After a 2005 agreement between Moldova and Ukraine, all Transnistrian companies that seek to export goods through the Ukrainian border must be registered with the Moldovan authorities.[21] This agreement was implemented after the European Union Border Assistance Mission to Moldova and Ukraine (EUBAM) took force in 2005.[22] Most Transnistrians have Moldovan citizenship,[23] but many also have Russian, Romanian, or Ukrainian citizenship.[24][25] The main ethnic groups are Russians, Moldovans/Romanians, and Ukrainians.

Transnistria, along with Abkhazia, South Ossetia, and Artsakh, is a post-Soviet "frozen conflict" zone.[26] These four partially recognised or unrecognised states maintain friendly relations with each other and form the Community for Democracy and Rights of Nations.[27][28][29]

https://en.wikipedia.org/wiki/Transnistria

The Transmission Control Protocol (TCP) is one of the main protocols of the Internet protocol suite. It originated in the initial network implementation in which it complemented the Internet Protocol (IP). Therefore, the entire suite is commonly referred to as TCP/IP. TCP provides reliable, ordered, and error-checked delivery of a stream of octets (bytes) between applications running on hosts communicating via an IP network. Major internet applications such as the World Wide Web, email, remote administration, and file transfer rely on TCP, which is part of the Transport Layer of the TCP/IP suite. SSL/TLS often runs on top of TCP.

TCP is connection-oriented, and a connection between client and server is established before data can be sent. The server must be listening (passive open) for connection requests from clients before a connection is established. The three-way handshake (active open), retransmission, and error detection add to reliability but lengthen latency. Applications that do not require a reliable data stream service may use the User Datagram Protocol (UDP) instead, which provides a connectionless datagram service that prioritizes time over reliability. TCP employs network congestion avoidance. However, there are vulnerabilities in TCP, including denial of service, connection hijacking, TCP veto, and reset attack.
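The passive open and active open described above can be sketched with Python's standard `socket` module. This is a minimal loopback example rather than a production server: the helper names `echo_once` and `tcp_round_trip` are made up for illustration, and the three-way handshake itself is carried out by the operating system's TCP stack when the client connects.

```python
import socket
import threading


def echo_once(server_sock: socket.socket) -> None:
    """Accept one connection and echo its bytes until the peer stops sending."""
    conn, _peer = server_sock.accept()
    with conn:
        while True:
            data = conn.recv(4096)
            if not data:  # empty read: the client closed its sending side
                break
            conn.sendall(data)


def tcp_round_trip(payload: bytes) -> bytes:
    """Send `payload` to a local echo server over TCP and return the reply."""
    with socket.socket(socket.AF_INET, socket.SOCK_STREAM) as server:
        server.settimeout(5)
        server.bind(("127.0.0.1", 0))  # port 0: let the OS pick a free port
        server.listen(1)               # passive open: wait for clients
        port = server.getsockname()[1]
        worker = threading.Thread(target=echo_once, args=(server,), daemon=True)
        worker.start()

        # Active open: the connection is established before any data is sent.
        with socket.create_connection(("127.0.0.1", port), timeout=5) as client:
            client.sendall(payload)
            client.shutdown(socket.SHUT_WR)  # signal that no more data follows
            chunks = []
            while True:
                chunk = client.recv(4096)
                if not chunk:
                    break
                chunks.append(chunk)
        worker.join(timeout=5)
    return b"".join(chunks)


if __name__ == "__main__":
    print(tcp_round_trip(b"hello over TCP"))
```

The byte stream arrives complete and in order without the application doing any retransmission of its own, which is the service TCP adds on top of IP.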

https://en.wikipedia.org/wiki/Transmission_Control_Protocol

TCP reset attack, also known as a "forged TCP reset" or "spoofed TCP reset", is a way to terminate a TCP connection by sending a forged TCP reset packet. This tampering technique can be used by a firewall or abused by a malicious attacker to interrupt Internet connections.

The Great Firewall of China and Iranian Internet censors are known to use TCP reset attacks to interfere with and block connections,[1] as a major method of carrying out Internet censorship.

https://en.wikipedia.org/wiki/TCP_reset_attack

https://en.wikipedia.org/wiki/Category:Computer_security_exploits

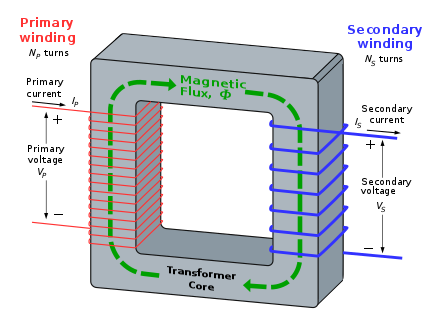

A transformer is a passive component that transfers electrical energy from one electrical circuit to another circuit, or multiple circuits. A varying current in any coil of the transformer produces a varying magnetic flux in the transformer's core, which induces a varying electromotive force (EMF) across any other coils wound around the same core. Electrical energy can be transferred between separate coils without a metallic (conductive) connection between the two circuits. Faraday's law of induction, discovered in 1831, describes the induced voltage effect in any coil due to a changing magnetic flux encircled by the coil.

Transformers are used to change AC voltage levels, such transformers being termed step-up or step-down type to increase or decrease voltage level, respectively. Transformers can also be used to provide galvanic isolation between circuits as well as to couple stages of signal-processing circuits. Since the invention of the first constant-potential transformer in 1885, transformers have become essential for the transmission, distribution, and utilization of alternating current electric power.[1] A wide range of transformer designs is encountered in electronic and electric power applications. Transformers range in size from RF transformers less than a cubic centimeter in volume, to units weighing hundreds of tons used to interconnect the power grid.

https://en.wikipedia.org/wiki/Transformer

Principles

Ideal transformer equations

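By Faraday's law of induction:

$$V_\text{P} = -N_\text{P} \frac{\mathrm{d}\Phi}{\mathrm{d}t} \qquad \text{(Eq. 1)}$$

$$V_\text{S} = -N_\text{S} \frac{\mathrm{d}\Phi}{\mathrm{d}t} \qquad \text{(Eq. 2)}$$

where $V$ is the instantaneous voltage, $N$ is the number of turns in a winding, $\mathrm{d}\Phi/\mathrm{d}t$ is the derivative of the magnetic flux $\Phi$ through one turn of the winding over time ($t$), and subscripts P and S denote primary and secondary.

Combining the ratio of Eq. 1 and Eq. 2:

$$\text{Turns ratio} = \frac{V_\text{P}}{V_\text{S}} = \frac{N_\text{P}}{N_\text{S}} = a \qquad \text{(Eq. 3)}$$

where for a step-up transformer $a < 1$ and for a step-down transformer $a > 1$.[3]

By the law of conservation of energy, apparent, real and reactive power are each conserved in the input and output:

$$S = I_\text{P} V_\text{P} = I_\text{S} V_\text{S} \qquad \text{(Eq. 4)}$$

where $S$ is apparent power and $I$ is current.

Combining Eq. 3 and Eq. 4 with this endnote[b][4] gives the ideal transformer identity:

$$\frac{V_\text{P}}{V_\text{S}} = \frac{I_\text{S}}{I_\text{P}} = \frac{N_\text{P}}{N_\text{S}} = \sqrt{\frac{L_\text{P}}{L_\text{S}}} = a \qquad \text{(Eq. 5)}$$

where $L$ is winding self-inductance.

By Ohm's law and the ideal transformer identity:

$$Z_\text{L} = \frac{V_\text{S}}{I_\text{S}} \qquad \text{(Eq. 6)}$$

$$Z'_\text{L} = \frac{V_\text{P}}{I_\text{P}} = \frac{a V_\text{S}}{I_\text{S}/a} = a^2 \frac{V_\text{S}}{I_\text{S}} = a^2 Z_\text{L} \qquad \text{(Eq. 7)}$$

where $Z_\text{L}$ is the load impedance of the secondary circuit and $Z'_\text{L}$ is the apparent load or driving point impedance of the primary circuit, the superscript $'$ denoting referred to the primary.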

Ideal transformer

An ideal transformer is linear, lossless and perfectly coupled. Perfect coupling implies infinitely high core magnetic permeability and winding inductance and zero net magnetomotive force (i.e. $i_\text{p} n_\text{p} - i_\text{s} n_\text{s} = 0$).[3][c]

A varying current in the transformer's primary winding creates a varying magnetic flux in the transformer core, which is also encircled by the secondary winding. This varying flux at the secondary winding induces a varying electromotive force or voltage in the secondary winding. This electromagnetic induction phenomenon is the basis of transformer action and, in accordance with Lenz's law, the secondary current so produced creates a flux equal and opposite to that produced by the primary winding.

The windings are wound around a core of infinitely high magnetic permeability so that all of the magnetic flux passes through both the primary and secondary windings. With a voltage source connected to the primary winding and a load connected to the secondary winding, the transformer currents flow in the indicated directions and the core magnetomotive force cancels to zero.

According to Faraday's law, since the same magnetic flux passes through both the primary and secondary windings in an ideal transformer, a voltage is induced in each winding proportional to its number of turns. The transformer winding voltage ratio is equal to the winding turns ratio.[6]

An ideal transformer is a reasonable approximation for a typical commercial transformer, with voltage ratio and winding turns ratio both being inversely proportional to the corresponding current ratio.

The load impedance referred to the primary circuit is equal to the turns ratio squared times the secondary circuit load impedance.[7]
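The ideal-transformer relations above (voltage ratio equal to the turns ratio, current ratio equal to its inverse, and load impedance referred to the primary as the turns ratio squared times the load) can be checked numerically with a short sketch. The class name `IdealTransformer`, its method names, and the example figures are illustrative assumptions; the model deliberately ignores every loss and leakage effect.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class IdealTransformer:
    """Lossless, perfectly coupled transformer described only by its turns."""

    n_primary: int
    n_secondary: int

    def __post_init__(self):
        if self.n_primary <= 0 or self.n_secondary <= 0:
            raise ValueError("turn counts must be positive")

    @property
    def turns_ratio(self) -> float:
        """a = N_P / N_S (a > 1 for step-down, a < 1 for step-up)."""
        return self.n_primary / self.n_secondary

    def secondary_voltage(self, v_primary: float) -> float:
        """V_S = V_P / a."""
        return v_primary / self.turns_ratio

    def primary_current(self, i_secondary: float) -> float:
        """I_P = I_S / a, so that V_P * I_P equals V_S * I_S."""
        return i_secondary / self.turns_ratio

    def referred_load_impedance(self, z_load: complex) -> complex:
        """Load impedance seen from the primary: a**2 * Z_L."""
        return self.turns_ratio ** 2 * z_load


if __name__ == "__main__":
    xfmr = IdealTransformer(n_primary=1000, n_secondary=100)
    v_s = xfmr.secondary_voltage(240.0)
    i_s = v_s / 8.0  # 8-ohm resistive load on the secondary (example value)
    i_p = xfmr.primary_current(i_s)
    print(v_s, i_s, i_p, xfmr.referred_load_impedance(8.0))
```

In this example the primary source sees the 8-ohm load as if it were a much larger impedance connected directly across it, which is why transformers are also used for impedance matching.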

Real transformer

Deviations from ideal transformer

The ideal transformer model neglects the following basic linear aspects of real transformers:

(a) Core losses, collectively called magnetizing current losses, consisting of[8]

- Hysteresis losses due to nonlinear magnetic effects in the transformer core, and

- Eddy current losses due to joule heating in the core that are proportional to the square of the transformer's applied voltage.

(b) Unlike the ideal model, the windings in a real transformer have non-zero resistances and inductances associated with:

- Joule losses due to resistance in the primary and secondary windings[8]

- Leakage flux that escapes from the core and passes through one winding only resulting in primary and secondary reactive impedance.

(c) Similar to an inductor, parasitic capacitance and self-resonance phenomena due to the electric field distribution. Three kinds of parasitic capacitance are usually considered and the closed-loop equations are provided:[9]

- Capacitance between adjacent turns in any one layer;

- Capacitance between adjacent layers;

- Capacitance between the core and the layer(s) adjacent to the core.

Inclusion of capacitance into the transformer model is complicated, and is rarely attempted; the ‘real’ transformer model's equivalent circuit shown below does not include parasitic capacitance. However, the capacitance effect can be measured by comparing open-circuit inductance, i.e. the inductance of a primary winding when the secondary circuit is open, to a short-circuit inductance when the secondary winding is shorted.

Leakage flux

The ideal transformer model assumes that all flux generated by the primary winding links all the turns of every winding, including itself. In practice, some flux traverses paths that take it outside the windings.[10] Such flux is termed leakage flux, and results in leakage inductance in series with the mutually coupled transformer windings.[11] Leakage flux results in energy being alternately stored in and discharged from the magnetic fields with each cycle of the power supply. It is not directly a power loss, but results in inferior voltage regulation, causing the secondary voltage not to be directly proportional to the primary voltage, particularly under heavy load.[10] Transformers are therefore normally designed to have very low leakage inductance.

In some applications increased leakage is desired, and long magnetic paths, air gaps, or magnetic bypass shunts may deliberately be introduced in a transformer design to limit the short-circuit current it will supply.[11] Leaky transformers may be used to supply loads that exhibit negative resistance, such as electric arcs, mercury- and sodium- vapor lamps and neon signs or for safely handling loads that become periodically short-circuited such as electric arc welders.[8]: 485

Air gaps are also used to keep a transformer from saturating, especially audio-frequency transformers in circuits that have a DC component flowing in the windings.[12] A saturable reactor exploits saturation of the core to control alternating current.

Knowledge of leakage inductance is also useful when transformers are operated in parallel. It can be shown that if the percent impedance [e] and associated winding leakage reactance-to-resistance (X/R) ratio of two transformers were the same, the transformers would share the load power in proportion to their respective ratings. However, the impedance tolerances of commercial transformers are significant. Also, the impedance and X/R ratio of transformers of different capacity tend to vary.[14]

Equivalent circuit

Referring to the diagram, a practical transformer's physical behavior may be represented by an equivalent circuit model, which can incorporate an ideal transformer.[15]

Winding joule losses and leakage reactances are represented by the following series loop impedances of the model:

- Primary winding: RP, XP

- Secondary winding: RS, XS.

In the normal course of circuit equivalence transformation, RS and XS are in practice usually referred to the primary side by multiplying these impedances by the turns ratio squared, $(N_\text{P}/N_\text{S})^2 = a^2$.

Core loss and reactance are represented by the following shunt leg impedances of the model:

- Core or iron losses: RC

- Magnetizing reactance: XM.

RC and XM are collectively termed the magnetizing branch of the model.

Core losses are caused mostly by hysteresis and eddy current effects in the core and are proportional to the square of the core flux for operation at a given frequency.[8] : 142–143 The finite permeability core requires a magnetizing current IM to maintain mutual flux in the core. Magnetizing current is in phase with the flux, the relationship between the two being non-linear due to saturation effects. However, all impedances of the equivalent circuit shown are by definition linear and such non-linearity effects are not typically reflected in transformer equivalent circuits.[8]: 142 With sinusoidal supply, core flux lags the induced EMF by 90°. With open-circuited secondary winding, magnetizing branch current I0 equals transformer no-load current.[15]

The resulting model, though sometimes termed 'exact' equivalent circuit based on linearity assumptions, retains a number of approximations.[15] Analysis may be simplified by assuming that magnetizing branch impedance is relatively high and relocating the branch to the left of the primary impedances. This introduces error but allows combination of primary and referred secondary resistances and reactances by simple summation as two series impedances.
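The simplification described above, in which the magnetizing branch is moved aside and the primary and referred secondary impedances are summed, can be sketched as follows. The function names and the numeric values are assumptions for illustration only, and the magnetizing branch (RC, XM) is ignored entirely in this sketch.

```python
def equivalent_series_impedance(r_p: float, x_p: float,
                                r_s: float, x_s: float,
                                turns_ratio: float) -> complex:
    """Series impedance seen from the primary in the simplified model.

    The secondary resistance and leakage reactance are referred to the
    primary by multiplying them by the turns ratio squared, then added to
    the primary values.
    """
    a_squared = turns_ratio ** 2
    r_eq = r_p + a_squared * r_s
    x_eq = x_p + a_squared * x_s
    return complex(r_eq, x_eq)


def short_circuit_current(v_primary: float, z_eq: complex) -> float:
    """Primary current magnitude with the secondary shorted (magnetizing branch neglected)."""
    return v_primary / abs(z_eq)


if __name__ == "__main__":
    # Hypothetical winding values in ohms, with a = 10.
    z_eq = equivalent_series_impedance(1.0, 2.0, 0.01, 0.02, 10.0)
    print(z_eq, short_circuit_current(240.0, z_eq))
```

Because the referred secondary values scale with the square of the turns ratio, even small secondary resistances can contribute as much to the total as the primary winding does.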

Transformer equivalent circuit impedance and transformer ratio parameters can be derived from the following tests: open-circuit test, short-circuit test, winding resistance test, and transformer ratio test.

Transformer EMF equation

If the flux in the core is purely sinusoidal, the relationship for either winding between its rms voltage $E_\text{rms}$ and the supply frequency $f$, number of turns $N$, core cross-sectional area $A$ in m² and peak magnetic flux density $B_\text{peak}$ in Wb/m² or T (tesla) is given by the universal EMF equation:[8]

$$E_\text{rms} = \frac{2 \pi f N A B_\text{peak}}{\sqrt{2}} \approx 4.44 f N A B_\text{peak}$$
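A small numerical sketch may help here. It assumes the standard sinusoidal form of the universal EMF equation, E_rms = 2π f N A B_peak / √2 (about 4.44 f N A B_peak); the function names and the example core (10 cm² cross-section, 1 T peak flux density) are hypothetical.

```python
import math


def rms_emf(frequency_hz: float, turns: int,
            core_area_m2: float, b_peak_tesla: float) -> float:
    """RMS winding voltage for sinusoidal flux: 2*pi*f*N*A*B_peak / sqrt(2)."""
    return (2 * math.pi * frequency_hz * turns
            * core_area_m2 * b_peak_tesla / math.sqrt(2))


def turns_for_voltage(v_rms: float, frequency_hz: float,
                      core_area_m2: float, b_peak_tesla: float) -> int:
    """Smallest whole number of turns that keeps flux density at or below b_peak."""
    volts_per_turn = rms_emf(frequency_hz, 1, core_area_m2, b_peak_tesla)
    return math.ceil(v_rms / volts_per_turn)


if __name__ == "__main__":
    # Same hypothetical core at 50 Hz and at 400 Hz.
    for f in (50.0, 400.0):
        print(f, turns_for_voltage(230.0, f, 1e-3, 1.0))
```

For the same core and flux density, the number of turns needed for a given voltage falls in proportion to the frequency.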

Polarity

A dot convention is often used in transformer circuit diagrams, nameplates or terminal markings to define the relative polarity of transformer windings. Positively increasing instantaneous current entering the primary winding's ‘dot’ end induces positive polarity voltage exiting the secondary winding's ‘dot’ end. Three-phase transformers used in electric power systems will have a nameplate that indicates the phase relationships between their terminals. This may be in the form of a phasor diagram, or an alpha-numeric code that shows the type of internal connection (wye or delta) for each winding.

Effect of frequency

The EMF of a transformer at a given flux increases with frequency.[8] By operating at higher frequencies, transformers can be physically more compact because a given core is able to transfer more power without reaching saturation and fewer turns are needed to achieve the same impedance. However, properties such as core loss and conductor skin effect also increase with frequency. Aircraft and military equipment employ 400 Hz power supplies which reduce core and winding weight.[16] Conversely, frequencies used for some railway electrification systems were much lower (e.g. 16.7 Hz and 25 Hz) than normal utility frequencies (50–60 Hz) for historical reasons concerned mainly with the limitations of early electric traction motors. Consequently, the transformers used to step down the high overhead line voltages were much larger and heavier for the same power rating than those required for the higher frequencies.

Operation of a transformer at its designed voltage but at a higher frequency than intended will lead to reduced magnetizing current. At a lower frequency, the magnetizing current will increase. Operation of a large transformer at other than its design frequency may require assessment of voltages, losses, and cooling to establish if safe operation is practical. Transformers may require protective relays to protect the transformer from overvoltage at higher than rated frequency.
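The frequency dependence described above can be illustrated by solving the sinusoidal EMF relation for peak flux density, B_peak = √2 · V_rms / (2π f N A). This is a sketch under that assumption only: the function name, the winding data and the idea of comparing against a saturation level are illustrative, and real magnetizing current depends on the nonlinear core characteristic, which is not modelled here.

```python
import math


def peak_flux_density(v_rms: float, frequency_hz: float,
                      turns: int, core_area_m2: float) -> float:
    """Peak core flux density (tesla) for a sinusoidal supply."""
    return (math.sqrt(2) * v_rms
            / (2 * math.pi * frequency_hz * turns * core_area_m2))


if __name__ == "__main__":
    # Hypothetical winding designed for 230 V at 50 Hz on a 10 cm^2 core.
    turns, area = 1036, 1e-3
    for f in (60.0, 50.0, 25.0, 16.7):
        print(f, round(peak_flux_density(230.0, f, turns, area), 3))
```

At constant voltage the required flux density is inversely proportional to frequency, so running this hypothetical 50 Hz design at 16.7 Hz would demand roughly three times the flux, which is far beyond what a steel core can carry without saturating and is why the magnetizing current rises sharply.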

One example is in traction transformers used for electric multiple unit and high-speed train service operating across regions with different electrical standards. The converter equipment and traction transformers have to accommodate different input frequencies and voltage (ranging from as high as 50 Hz down to 16.7 Hz and rated up to 25 kV).

At much higher frequencies the transformer core size required drops dramatically: a physically small transformer can handle power levels that would require a massive iron core at mains frequency. The development of switching power semiconductor devices made switch-mode power supplies viable, to generate a high frequency, then change the voltage level with a small transformer.

Transformers for higher frequency applications such as SMPS typically use core materials with much lower hysteresis and eddy-current losses than those for 50/60 Hz. Primary examples are iron-powder and ferrite cores. The lower frequency-dependent losses of these cores often come at the expense of flux density at saturation. For instance, ferrite saturation occurs at a substantially lower flux density than laminated iron.

Large power transformers are vulnerable to insulation failure due to transient voltages with high-frequency components, such as those caused by switching or by lightning.

Energy losses

Transformer energy losses are dominated by winding and core losses. Transformers' efficiency tends to improve with increasing transformer capacity.[17] The efficiency of typical distribution transformers is between about 98 and 99 percent.[17][18]

As transformer losses vary with load, it is often useful to tabulate no-load loss, full-load loss, half-load loss, and so on. Hysteresis and eddy current losses are constant at all load levels and dominate at no load, while winding loss increases as load increases. The no-load loss can be significant, so that even an idle transformer constitutes a drain on the electrical supply. Designing energy efficient transformers for lower loss requires a larger core, good-quality silicon steel, or even amorphous steel for the core and thicker wire, increasing initial cost. The choice of construction represents a trade-off between initial cost and operating cost.[19]
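The trade-off between constant no-load loss and load-dependent winding loss can be tabulated with a short sketch. It assumes the usual simplification that winding loss scales with the square of the load fraction (I²R) while core loss stays fixed; the function name and the loss figures for a 100 kVA unit are hypothetical, not taken from any datasheet.

```python
def transformer_efficiency(rated_va: float, load_fraction: float,
                           no_load_loss_w: float,
                           full_load_winding_loss_w: float,
                           power_factor: float = 1.0) -> float:
    """Efficiency at a given fraction of rated load.

    Core (no-load) loss is treated as constant; winding loss is assumed to
    scale with the square of the load fraction.
    """
    output_w = load_fraction * rated_va * power_factor
    winding_loss_w = load_fraction ** 2 * full_load_winding_loss_w
    input_w = output_w + no_load_loss_w + winding_loss_w
    return output_w / input_w if input_w > 0 else 0.0


if __name__ == "__main__":
    # Hypothetical 100 kVA unit: 200 W no-load loss, 1500 W winding loss at full load.
    for x in (0.0, 0.25, 0.5, 0.75, 1.0):
        eff = transformer_efficiency(100e3, x, 200.0, 1500.0)
        print(f"load {x:4.2f}  efficiency {eff:.4f}")
```

Under these assumptions efficiency is zero at no load, since the core loss is still drawn, and it peaks at the load fraction where winding loss equals core loss.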

Transformer losses arise from:

- Winding joule losses

- Current flowing through a winding's conductor causes joule heating due to the resistance of the wire. As frequency increases, skin effect and proximity effect cause the winding's resistance and, hence, losses to increase.

- Core losses

- Hysteresis losses

- Each time the magnetic field is reversed, a small amount of energy is lost due to hysteresis within the core, caused by motion of the magnetic domains within the steel. According to Steinmetz's formula, the heat energy due to hysteresis is given by $W_\text{h} \approx \eta \beta_\text{max}^{1.6}$, and the hysteresis loss is thus given by $P_\text{h} \approx W_\text{h} f \approx \eta f \beta_\text{max}^{1.6}$, where $f$ is the frequency, $\eta$ is the hysteresis coefficient and $\beta_\text{max}$ is the maximum flux density, the empirical exponent of which varies from about 1.4 to 1.8 but is often given as 1.6 for iron.[19] For more detailed analysis, see Magnetic core and Steinmetz's equation.

- Eddy current losses

- Eddy currents are induced in the conductive metal transformer core by the changing magnetic field, and this current flowing through the resistance of the iron dissipates energy as heat in the core. The eddy current loss is a complex function of the square of the supply frequency and the square of the material thickness.[19] Eddy current losses can be reduced by making the core of a stack of laminations (thin plates) electrically insulated from each other, rather than a solid block; all transformers operating at low frequencies use laminated or similar cores.

- Magnetostriction related transformer hum

- Magnetic flux in a ferromagnetic material, such as the core, causes it to physically expand and contract slightly with each cycle of the magnetic field, an effect known as magnetostriction, the frictional energy of which produces an audible noise known as mains hum or "transformer hum".[20] This transformer hum is especially objectionable in transformers supplied at power frequencies and in high-frequency flyback transformers associated with television CRTs.

- Stray losses

- Leakage inductance is by itself largely lossless, since energy supplied to its magnetic fields is returned to the supply with the next half-cycle. However, any leakage flux that intercepts nearby conductive materials such as the transformer's support structure will give rise to eddy currents and be converted to heat.[21]

- Radiative

- There are also radiative losses due to the oscillating magnetic field but these are usually small.

- Mechanical vibration and audible noise transmission

- In addition to magnetostriction, the alternating magnetic field causes fluctuating forces between the primary and secondary windings. This energy incites vibration transmission in interconnected metalwork, thus amplifying audible transformer hum.[22]
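The hysteresis term in the list above can be explored numerically. The sketch assumes the common form of Steinmetz's formula, P_h ≈ η f β_max^n with n often taken as 1.6 for iron; the function name, the coefficient value and its units are placeholders, since real coefficients come from measurements on a specific core material.

```python
def hysteresis_loss(eta: float, frequency_hz: float,
                    b_max_tesla: float, exponent: float = 1.6) -> float:
    """Hysteresis loss per Steinmetz's empirical formula: eta * f * B_max**n.

    `eta` is a material-dependent coefficient (placeholder value in the demo);
    the result is in whatever power unit `eta` is expressed in.
    """
    if eta < 0 or frequency_hz < 0 or b_max_tesla < 0:
        raise ValueError("inputs must be non-negative")
    return eta * frequency_hz * b_max_tesla ** exponent


if __name__ == "__main__":
    eta = 2.0  # hypothetical coefficient
    # Compare the range of exponents reported for the empirical fit.
    for n in (1.4, 1.6, 1.8):
        print(n, hysteresis_loss(eta, 50.0, 1.5, exponent=n))
```

The loss grows linearly with frequency but faster than linearly with flux density, so lowering the peak flux density is an effective way to cut hysteresis loss at a fixed frequency.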

Construction

Cores

- Core form = core type; shell form = shell type

Closed-core transformers are constructed in 'core form' or 'shell form'. When windings surround the core, the transformer is core form; when windings are surrounded by the core, the transformer is shell form.[23] Shell form design may be more prevalent than core form design for distribution transformer applications due to the relative ease in stacking the core around winding coils.[23] As a general rule, core form design tends to be more economical, and therefore more prevalent, than shell form design for high voltage power transformer applications at the lower end of their voltage and power rating ranges (less than or equal to, nominally, 230 kV or 75 MVA). At higher voltage and power ratings, shell form transformers tend to be more prevalent.[23][24][25] Shell form design tends to be preferred for extra-high voltage and higher MVA applications because, though more labor-intensive to manufacture, shell form transformers are characterized as having inherently better kVA-to-weight ratio, better short-circuit strength characteristics and higher immunity to transit damage.[25]

Laminated steel cores

Transformers for use at power or audio frequencies typically have cores made of high permeability silicon steel.[26] The steel has a permeability many times that of free space and the core thus serves to greatly reduce the magnetizing current and confine the flux to a path which closely couples the windings.[27] Early transformer developers soon realized that cores constructed from solid iron resulted in prohibitive eddy current losses, and their designs mitigated this effect with cores consisting of bundles of insulated iron wires.[28] Later designs constructed the core by stacking layers of thin steel laminations, a principle that has remained in use. Each lamination is insulated from its neighbors by a thin non-conducting layer of insulation.[29] The transformer universal EMF equation can be used to calculate the core cross-sectional area for a preferred level of magnetic flux.[8]

The effect of laminations is to confine eddy currents to highly elliptical paths that enclose little flux, and so reduce their magnitude. Thinner laminations reduce losses,[26] but are more laborious and expensive to construct.[30] Thin laminations are generally used on high-frequency transformers, with some very thin steel laminations able to operate up to 10 kHz.

One common design of laminated core is made from interleaved stacks of E-shaped steel sheets capped with I-shaped pieces, leading to its name of 'E-I transformer'.[30] Such a design tends to exhibit more losses, but is very economical to manufacture. The cut-core or C-core type is made by winding a steel strip around a rectangular form and then bonding the layers together. It is then cut in two, forming two C shapes, and the core assembled by binding the two C halves together with a steel strap.[30] They have the advantage that the flux is always oriented parallel to the metal grains, reducing reluctance.

A steel core's remanence means that it retains a static magnetic field when power is removed. When power is then reapplied, the residual field will cause a high inrush current until the effect of the remaining magnetism is reduced, usually after a few cycles of the applied AC waveform.[31] Overcurrent protection devices such as fuses must be selected to allow this harmless inrush to pass.

On transformers connected to long, overhead power transmission lines, induced currents due to geomagnetic disturbances during solar storms can cause saturation of the core and operation of transformer protection devices.[32]

Distribution transformers can achieve low no-load losses by using cores made with low-loss high-permeability silicon steel or amorphous (non-crystalline) metal alloy. The higher initial cost of the core material is offset over the life of the transformer by its lower losses at light load.[33]

Solid cores

Powdered iron cores are used in circuits such as switch-mode power supplies that operate above mains frequencies and up to a few tens of kilohertz. These materials combine high magnetic permeability with high bulk electrical resistivity. For frequencies extending beyond the VHF band, cores made from non-conductive magnetic ceramic materials called ferrites are common.[30] Some radio-frequency transformers also have movable cores (sometimes called 'slugs') which allow adjustment of the coupling coefficient (and bandwidth) of tuned radio-frequency circuits.

Toroidal cores

Toroidal transformers are built around a ring-shaped core, which, depending on operating frequency, is made from a long strip of silicon steel or permalloy wound into a coil, powdered iron, or ferrite.[34] A strip construction ensures that the grain boundaries are optimally aligned, improving the transformer's efficiency by reducing the core's reluctance. The closed ring shape eliminates air gaps inherent in the construction of an E-I core.[8] : 485 The cross-section of the ring is usually square or rectangular, but more expensive cores with circular cross-sections are also available. The primary and secondary coils are often wound concentrically to cover the entire surface of the core. This minimizes the length of wire needed and provides screening that keeps the core's magnetic field from generating electromagnetic interference.

Toroidal transformers are more efficient than the cheaper laminated E-I types for a similar power level. Other advantages compared to E-I types include smaller size (about half), lower weight (about half), less mechanical hum (making them superior in audio amplifiers), lower exterior magnetic field (about one tenth), low off-load losses (making them more efficient in standby circuits), single-bolt mounting, and greater choice of shapes. The main disadvantages are higher cost and limited power capacity (see Classification parameters below). Because of the lack of a residual gap in the magnetic path, toroidal transformers also tend to exhibit higher inrush current compared to laminated E-I types.

Ferrite toroidal cores are used at higher frequencies, typically from a few tens of kilohertz to hundreds of megahertz, to reduce losses, physical size, and weight of inductive components. A drawback of toroidal transformer construction is the higher labor cost of winding. This is because it is necessary to pass the entire length of a coil winding through the core aperture each time a single turn is added to the coil. As a consequence, toroidal transformers rated more than a few kVA are uncommon. Relatively few toroids are offered with power ratings above 10 kVA, and practically none above 25 kVA. Small distribution transformers may achieve some of the benefits of a toroidal core by splitting it and forcing it open, then inserting a bobbin containing primary and secondary windings.[35]

Air cores

A transformer can be produced by placing the windings near each other, an arrangement termed an "air-core" transformer. An air-core transformer eliminates loss due to hysteresis in the core material.[11] The magnetizing inductance is drastically reduced by the lack of a magnetic core, resulting in large magnetizing currents and losses if used at low frequencies. Air-core transformers are unsuitable for use in power distribution,[11] but are frequently employed in radio-frequency applications.[36] Air cores are also used for resonant transformers such as Tesla coils, where they can achieve reasonably low loss despite the low magnetizing inductance.

Windings

Figure legend: White: air, liquid or other insulating medium. Green spiral: grain-oriented silicon steel. Black: primary winding. Red: secondary winding.

The electrical conductor used for the windings depends upon the application, but in all cases the individual turns must be electrically insulated from each other to ensure that the current travels throughout every turn. For small transformers, in which currents are low and the potential difference between adjacent turns is small, the coils are often wound from enamelled magnet wire. Larger power transformers may be wound with copper rectangular strip conductors insulated by oil-impregnated paper and blocks of pressboard.[37]

High-frequency transformers operating in the tens to hundreds of kilohertz often have windings made of braided Litz wire to minimize skin-effect and proximity-effect losses.[38] Large power transformers use multiple-stranded conductors as well, since even at low power frequencies a non-uniform distribution of current would otherwise exist in high-current windings.[37] Each strand is individually insulated, and the strands are arranged so that at certain points in the winding, or throughout the whole winding, each portion occupies different relative positions in the complete conductor. The transposition equalizes the current flowing in each strand of the conductor, and reduces eddy current losses in the winding itself. The stranded conductor is also more flexible than a solid conductor of similar size, aiding manufacture.[37]
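To see why stranding matters more as frequency rises, the skin depth of a conductor can be estimated with the standard good-conductor approximation δ = √(ρ / (π f μ)). The sketch below is illustrative only: the function name is hypothetical, and it assumes copper at room temperature with a resistivity of about 1.68 × 10⁻⁸ Ω·m and a relative permeability of 1.

```python
import math

MU_0 = 4 * math.pi * 1e-7     # permeability of free space, H/m
RHO_COPPER = 1.68e-8          # assumed resistivity of copper near 20 degC, ohm*m


def skin_depth(freq_hz: float, resistivity: float = RHO_COPPER,
               mu_r: float = 1.0) -> float:
    """Skin depth in metres for a good conductor: sqrt(rho / (pi * f * mu))."""
    return math.sqrt(resistivity / (math.pi * freq_hz * MU_0 * mu_r))


for freq in (50.0, 100e3):
    print(f"{freq:>8.0f} Hz: skin depth = {skin_depth(freq) * 1e3:.3f} mm")
```

Under these assumptions the skin depth is roughly 9 mm at 50 Hz and roughly 0.2 mm at 100 kHz. Conductors thicker than a couple of skin depths carry current mainly near the surface, which is one way to read the use of fine insulated strands at high frequency and of transposed strands in very large power-frequency conductors.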

The windings of signal transformers minimize leakage inductance and stray capacitance to improve high-frequency response. Coils are split into sections, and those sections interleaved between the sections of the other winding.

Power-frequency transformers may have taps at intermediate points on the winding, usually on the higher voltage winding side, for voltage adjustment. Taps may be manually reconnected, or a manual or automatic switch may be provided for changing taps. Automatic on-load tap changers are used in electric power transmission or distribution, on equipment such as arc furnace transformers, or for automatic voltage regulators for sensitive loads. Audio-frequency transformers, used for the distribution of audio to public address loudspeakers, have taps to allow adjustment of impedance to each speaker. A center-tapped transformer is often used in the output stage of an audio power amplifier in a push-pull circuit. Modulation transformers in AM transmitters are very similar.
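The effect of a tap on the higher-voltage winding can be sketched with the ideal-transformer turns relation Vs = Vp · Ns / Np. The example is a simplified illustration, not a description of any real tap changer: the function name, the turns counts, and the tap step are hypothetical, and losses and regulation are ignored.

```python
def secondary_voltage(v_primary: float, n_primary_nominal: float,
                      n_secondary: float, tap_percent: float = 0.0) -> float:
    """Ideal secondary voltage when a tap changes the active primary turns.

    tap_percent is the change in active primary turns relative to nominal,
    for example -5.0 for a tap that removes 5% of the primary turns.
    """
    n_primary = n_primary_nominal * (1 + tap_percent / 100)
    return v_primary * n_secondary / n_primary


# Hypothetical transformer: 1100 primary turns, 40 secondary turns
print(secondary_voltage(11_000, 1100, 40))          # nominal supply, nominal tap
print(secondary_voltage(10_450, 1100, 40))          # supply sags by 5%, tap unchanged
print(secondary_voltage(10_450, 1100, 40, -5.0))    # same sag, -5% tap selected
```

In this idealized model, removing 5% of the active primary turns compensates for a 5% drop in supply voltage and restores the nominal secondary voltage.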

Cooling

It is a rule of thumb that the life expectancy of electrical insulation is halved for about every 7 °C to 10 °C increase in operating temperature (an instance of the application of the Arrhenius equation).[39]
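The rule of thumb above can be expressed as a simple halving relation. The following sketch is only an illustration of that rule, not a full Arrhenius model; the function name and the choice of a reference temperature are assumptions.

```python
def relative_insulation_life(temp_rise_c: float, halving_interval_c: float = 10.0) -> float:
    """Fraction of reference insulation life remaining at a higher temperature.

    temp_rise_c: increase in operating temperature above the reference, in degC.
    halving_interval_c: temperature rise that halves the life (about 7 to 10 degC
    according to the rule of thumb).
    """
    return 0.5 ** (temp_rise_c / halving_interval_c)


for rise in (0, 10, 20, 30):
    pessimistic = relative_insulation_life(rise, 7.0)
    optimistic = relative_insulation_life(rise, 10.0)
    print(f"+{rise:>2} degC: {pessimistic:.3f} to {optimistic:.3f} of reference life")
```

For example, with a 10 °C halving interval, running 20 °C above the reference temperature leaves one quarter of the reference life.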

Small dry-type and liquid-immersed transformers are often self-cooled by natural convection and radiation heat dissipation. As power ratings increase, transformers are often cooled by forced-air cooling, forced-oil cooling, water-cooling, or combinations of these.[40] Large transformers are filled with transformer oil that both cools and insulates the windings.[41] Transformer oil is often a highly refined mineral oil that cools the windings and insulation by circulating within the transformer tank. The mineral oil and paper insulation system has been extensively studied and used for more than 100 years. It is estimated that 50% of power transformers will survive 50 years of use, that the average age of failure of power transformers is about 10 to 15 years, and that about 30% of power transformer failures are due to insulation and overloading failures.[42][43] Prolonged operation at elevated temperature degrades insulating properties of winding insulation and dielectric coolant, which not only shortens transformer life but can ultimately lead to catastrophic transformer failure.[39] With a great body of empirical study as a guide, transformer oil testing including dissolved gas analysis provides valuable maintenance information.

Building regulations in many jurisdictions require indoor liquid-filled transformers to either use dielectric fluids that are less flammable than oil, or be installed in fire-resistant rooms.[17] Air-cooled dry transformers can be more economical where they eliminate the cost of a fire-resistant transformer room.

The tank of a liquid-filled transformer often has fins or radiators through which the liquid coolant circulates by natural convection. Some large transformers employ electric fans for forced-air cooling, pumps for forced-liquid cooling, or have heat exchangers for water-cooling.[41] An oil-immersed transformer may be equipped with a Buchholz relay, which, depending on the severity of gas accumulation due to internal arcing, is used to either raise an alarm or de-energize the transformer.[31] Oil-immersed transformer installations usually include fire protection measures such as walls, oil containment, and fire-suppression sprinkler systems.

Polychlorinated biphenyls (PCBs) have properties that once favored their use as a dielectric coolant, though concerns over their environmental persistence led to a widespread ban on their use.[44] However, the long life span of transformers can mean that the potential for exposure remains high long after the ban.[46] Today, non-toxic, stable silicone-based oils or fluorinated hydrocarbons may be used where the expense of a fire-resistant liquid offsets the additional building cost of a transformer vault.[17][45]

Some transformers are gas-insulated. Their windings are enclosed in sealed, pressurized tanks and often cooled by nitrogen or sulfur hexafluoride gas.[45]

Experimental power transformers in the 500 to 1,000 kVA range have been built with superconducting windings cooled by liquid nitrogen or helium, which eliminate winding losses without affecting core losses.[47][48]

Insulation

Insulation must be provided between the individual turns of the windings, between the windings, between windings and core, and at the terminals of the winding.

Inter-turn insulation of small transformers may be a layer of insulating varnish on the wire. Layers of paper or polymer film may be inserted between layers of windings, and between primary and secondary windings. A transformer may be coated or dipped in a polymer resin to improve the strength of windings and protect them from moisture or corrosion. The resin may be impregnated into the winding insulation using combinations of vacuum and pressure during the coating process, eliminating all air voids in the winding. In the limit, the entire coil may be placed in a mold, and resin cast around it as a solid block, encapsulating the windings.[49]

Large oil-filled power transformers use windings wrapped with insulating paper, which is impregnated with oil during assembly of the transformer. Oil-filled transformers use highly refined mineral oil to insulate and cool the windings and core. Construction of oil-filled transformers requires that the insulation covering the windings be thoroughly dried of residual moisture before the oil is introduced. Drying may be done by circulating hot air around the core, by circulating externally heated transformer oil, or by vapor-phase drying (VPD) where an evaporated solvent transfers heat by condensation on the coil and core. For small transformers, resistance heating by injection of current into the windings is used.

Bushings

Larger transformers are provided with high-voltage insulated bushings made of polymers or porcelain. A large bushing can be a complex structure since it must provide careful control of the electric field gradient without letting the transformer leak oil.[50]

Classification parameters

Transformers can be classified in many ways, such as the following:

- Power rating: From a fraction of a volt-ampere (VA) to over a thousand MVA.

- Duty of a transformer: Continuous, short-time, intermittent, periodic, varying.

- Frequency range: Power-frequency, audio-frequency, or radio-frequency.

- Voltage class: From a few volts to hundreds of kilovolts.

- Cooling type: Dry or liquid-immersed; self-cooled, forced air-cooled; forced oil-cooled, water-cooled.

- Application: Power supply, impedance matching, output voltage and current stabilizer, pulse, circuit isolation, power distribution, rectifier, arc furnace, amplifier output, etc.

- Basic magnetic form: Core form, shell form, concentric, sandwich.

- Constant-potential transformer descriptor: Step-up, step-down, isolation.

- General winding configuration: By IEC vector group, two-winding combinations of the phase designations delta, wye or star, and zigzag; autotransformer, Scott-T.

- Rectifier phase-shift winding configuration: 2-winding, 6-pulse; 3-winding, 12-pulse; ..., n-winding, [n − 1]·6-pulse; polygon; etc.

Applications

Various specific electrical application designs require a variety of transformer types. Although they all share the basic characteristic transformer principles, they are customized in construction or electrical properties for certain installation requirements or circuit conditions.

In electric power transmission, transformers allow transmission of electric power at high voltages, which reduces the loss due to heating of the wires. This allows generating plants to be located economically at a distance from electrical consumers.[51] All but a tiny fraction of the world's electrical power has passed through a series of transformers by the time it reaches the consumer.[21]
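The benefit of high-voltage transmission follows from the resistive loss I²R: for a fixed delivered power, raising the voltage lowers the current, and the loss falls with the square of the current. The sketch below uses a deliberately simplified single-conductor model at unity power factor; the function name, the 10 MW load, and the 5 Ω line resistance are hypothetical values chosen for illustration.

```python
def line_loss_watts(power_w: float, voltage_v: float, line_resistance_ohm: float) -> float:
    """Resistive loss in a line carrying power_w at voltage_v (unity power factor)."""
    current_a = power_w / voltage_v
    return current_a ** 2 * line_resistance_ohm


POWER = 10e6        # assumed transmitted power, watts
RESISTANCE = 5.0    # assumed total line resistance, ohms

for voltage in (10e3, 100e3):
    loss = line_loss_watts(POWER, voltage, RESISTANCE)
    print(f"{voltage / 1e3:>5.0f} kV: loss = {loss / 1e3:,.0f} kW "
          f"({100 * loss / POWER:.1f}% of transmitted power)")
```

In this model, raising the transmission voltage tenfold reduces the heating loss by a factor of one hundred, from 5 MW to 50 kW for the assumed values.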

In many electronic devices, a transformer is used to convert voltage from the distribution wiring to convenient values for the circuit requirements, either directly at the power line frequency or through a switch mode power supply.

Signal and audio transformers are used to couple stages of amplifiers and to match devices such as microphones and record players to the input of amplifiers. Audio transformers allowed telephone circuits to carry on a two-way conversation over a single pair of wires. A balun transformer converts a signal that is referenced to ground to a signal that has balanced voltages to ground, such as between external cables and internal circuits. Isolation transformers prevent leakage of current into the secondary circuit and are used in medical equipment and at construction sites. Resonant transformers are used for coupling between stages of radio receivers, or in high-voltage Tesla coils.

History

Discovery of induction

Electromagnetic induction, the principle of the operation of the transformer, was discovered independently by Michael Faraday in 1831 and Joseph Henry in 1832.[53][54][55][56] Only Faraday furthered his experiments to the point of working out the equation describing the relationship between EMF and magnetic flux now known as Faraday's law of induction:

$$|\mathcal{E}| = \left|\frac{d\Phi_B}{dt}\right|$$

where $|\mathcal{E}|$ is the magnitude of the EMF in volts and $\Phi_B$ is the magnetic flux through the circuit in webers.[57]

Faraday performed early experiments on induction between coils of wire, including winding a pair of coils around an iron ring, thus creating the first toroidal closed-core transformer.[56][58] However, he only applied individual pulses of current to his transformer, and never discovered the relation between the turns ratio and EMF in the windings.

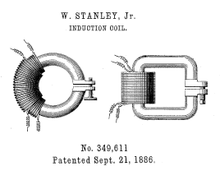

Induction coils

The first type of transformer to see wide use was the induction coil, invented by Rev. Nicholas Callan of Maynooth College, Ireland, in 1836.[56] He was one of the first researchers to realize that the more turns the secondary winding has in relation to the primary winding, the larger the induced secondary EMF will be. Induction coils evolved from scientists' and inventors' efforts to get higher voltages from batteries. Since batteries produce direct current (DC) rather than AC, induction coils relied upon vibrating electrical contacts that regularly interrupted the current in the primary to create the flux changes necessary for induction. Between the 1830s and the 1870s, efforts to build better induction coils, mostly by trial and error, slowly revealed the basic principles of transformers.

First alternating current transformers

By the 1870s, efficient generators producing alternating current (AC) were available, and it was found that AC could power an induction coil directly, without an interrupter.