An attic (sometimes referred to as a loft) is a space found directly below the pitched roof of a house or other building; an attic may also be called a sky parlor[1] or a garret. Because attics fill the space between the ceiling of the top floor of a building and the slanted roof, they are known for being awkwardly shaped spaces with exposed rafters and difficult-to-reach corners.

While some attics are converted into bedrooms, home offices, or attic apartments complete with windows and staircases, most remain difficult to access and are usually entered through a loft hatch and ladder. Attics help control temperatures in a house by providing a large mass of slowly moving air, and are often used for storage. The hot air rising from the lower floors of a building is often retained in attics, further compounding their reputation as inhospitable environments. In recent years, however, attics have been insulated to help decrease heating costs, since uninsulated attics account for, on average, 15 percent of a house's total energy loss.[2]

A loft is also the uppermost space in a building but is distinguished from an attic in that an attic typically constitutes an entire floor of the building, while a loft covers only a few rooms, leaving one or more sides open to the lower floor.[citation needed]

https://en.wikipedia.org/wiki/Attic

Pyroxferroite (Fe2+,Ca)SiO3 is a single chain inosilicate. It is mostly composed of iron, silicon and oxygen, with smaller fractions of calcium and several other metals.[1] Together with armalcolite and tranquillityite, it is one of the three minerals which were discovered on the Moon. It was then found in Lunar and Martian meteorites as well as a mineral in the Earth's crust. Pyroxferroite can also be produced by annealing synthetic clinopyroxene at high pressures and temperatures. The mineral is metastable and gradually decomposes at ambient conditions, but this process can take billions of years.

https://en.wikipedia.org/wiki/Pyroxferroite

A fusion energy gain factor, usually expressed with the symbol Q, is the ratio of fusion power produced in a nuclear fusion reactor to the power required to maintain the plasma in steady state. The condition of Q = 1, when the power being released by the fusion reactions is equal to the required heating power, is referred to as breakeven, or in some sources, scientific breakeven.

The energy given off by the fusion reactions may be captured within the fuel, leading to self-heating. Most fusion reactions release at least some of their energy in a form that cannot be captured within the plasma, so a system at Q = 1 will cool without external heating. With typical fuels, self-heating in fusion reactors is not expected to match the external sources until at least Q = 5. If Q increases past this point, increasing self-heating eventually removes the need for external heating. At this point the reaction becomes self-sustaining, a condition called ignition. Ignition corresponds to infinite Q, and is generally regarded as highly desirable for practical reactor designs.

Over time, several related terms have entered the fusion lexicon. Energy that is not captured within the fuel can be captured externally to produce electricity. That electricity can be used to heat the plasma to operational temperatures. A system that is self-powered in this way is referred to as running at engineering breakeven. Operating above engineering breakeven, a machine would produce more electricity than it uses and could sell that excess. A machine that sells enough electricity to cover its operating costs is sometimes said to be at economic breakeven. Additionally, fusion fuels, especially tritium, are very expensive, so many experiments run on various test gases like hydrogen or deuterium. A reactor running on these fuels that reaches the conditions for breakeven if tritium were introduced is said to be operating at extrapolated breakeven.

As of 2021, the record for Q is held by the JET tokamak in the UK, at Q = (16 MW)/(24 MW) ≈ 0.67, first attained in 1997. The highest record for extrapolated breakeven was posted by the JT-60 device, with Qext = 1.25, slightly besting JET's earlier 1.14. ITER was originally designed to reach ignition, but is currently designed to reach Q = 10, producing 500 MW of fusion power from 50 MW of injected thermal power.
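
The figures quoted above follow directly from the definition of Q as fusion power divided by heating power. A minimal Python sketch of that arithmetic (the function name `fusion_gain` is illustrative, not taken from any source):

```python
def fusion_gain(p_fusion_mw: float, p_heating_mw: float) -> float:
    """Return Q, the ratio of fusion power to heating power (both in MW)."""
    if p_heating_mw <= 0:
        # Ignition corresponds to infinite Q and is not representable here.
        raise ValueError("heating power must be positive")
    return p_fusion_mw / p_heating_mw


jet_q = fusion_gain(16.0, 24.0)     # JET record quoted in the text
iter_q = fusion_gain(500.0, 50.0)   # ITER design target quoted in the text
print(f"JET Q = {jet_q:.2f}, ITER design Q = {iter_q:.0f}")
```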

In the case of neutrons carrying most of the practical energy, as is the case in the D-T fuel, this neutron energy is normally captured in a "blanket" of lithium that produces more tritium that is used to fuel the reactor. Due to various exothermic and endothermic reactions, the blanket may have a power gain factor MR. MR is typically on the order of 1.1 to 1.3, meaning it produces a small amount of energy as well. The net result, the total amount of energy released to the environment and thus available for energy production, is referred to as PR, the net power output of the reactor.[9]

The blanket is then cooled, and the cooling fluid is used in a heat exchanger driving conventional steam turbines and generators. That electricity is then fed back into the heating system.[9] Each of these steps in the generation chain has an efficiency to consider. The plasma heating systems have an efficiency on the order of 60 to 70%, while modern generator systems based on the Rankine cycle have efficiencies of around 35 to 40%. Combining these gives a net efficiency for the power conversion loop as a whole of around 0.20 to 0.25. That is, about 20 to 25% of PR can be recirculated.[9]

Thus, the fusion energy gain factor required to reach engineering breakeven is defined as:[10]

To understand how QE is used, consider a reactor operating at 20 MW and Q = 2. Q = 2 at 20 MW implies that Pheat is 10 MW. Of that original 20 MW about 20% is alphas, so assuming complete capture, 4 MW of Pheat is self-supplied. We need a total of 10 MW of heating and get 4 of that through alphas, so we need another 6 MW of power. Of the original 20 MW of output, 4 MW are left in the fuel, so we have 16 MW of net output. Using MR of 1.15 for the blanket, we get PR of about 18.4 MW. Assuming a good power conversion loop efficiency of 0.25, supplying that 6 MW requires 24 MW of PR, so a reactor at Q = 2 cannot reach engineering breakeven. At Q = 4 one needs 5 MW of heating, 4 of which come from the fusion, leaving 1 MW of external power required, which can easily be generated by the 18.4 MW net output. Thus for this theoretical design the QE is between 2 and 4.
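
The worked example above can be reproduced in a few lines of Python. This is only a sketch of the arithmetic in the paragraph: the constant and function names are illustrative, and the alpha fraction, blanket gain and loop efficiency are the values assumed in the text, not properties of any real design.

```python
ALPHA_FRACTION = 0.20    # share of D-T fusion power carried by alphas (from the text)
BLANKET_GAIN = 1.15      # MR, the blanket gain assumed in the text
LOOP_EFFICIENCY = 0.25   # net power conversion loop efficiency assumed in the text


def breakeven_check(p_fusion_mw, q):
    """Return (external heating, PR available, PR required, reaches breakeven)."""
    p_heat = p_fusion_mw / q                    # total heating the plasma needs
    p_alpha = ALPHA_FRACTION * p_fusion_mw      # self-heating, assuming full capture
    p_external = max(p_heat - p_alpha, 0.0)     # heating still to be supplied
    p_available = (p_fusion_mw - p_alpha) * BLANKET_GAIN   # PR leaving the blanket
    p_required = p_external / LOOP_EFFICIENCY   # PR needed to recirculate p_external
    return p_external, p_available, p_required, p_available >= p_required


for q in (2, 4):
    ext, avail, req, ok = breakeven_check(20.0, q)
    print(f"Q={q}: external={ext:.1f} MW, PR={avail:.1f} MW, "
          f"PR required={req:.1f} MW, breakeven={ok}")
```

Under these assumptions the check fails at Q = 2 and passes at Q = 4, matching the conclusion that QE lies between the two.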

Considering real-world losses and efficiencies, Q values between 5 and 8 are typically listed for magnetic confinement devices,[9] while inertial devices have dramatically lower values for heating efficiency and thus require much higher QE values, on the order of 50 to 100.[11]

Ignition

As the temperature of the plasma increases, the rate of fusion reactions grows rapidly, and with it, the rate of self-heating. In contrast, non-capturable energy losses like x-rays do not grow at the same rate. Thus, in overall terms, the self-heating process becomes more efficient as the temperature increases, and less energy is needed from external sources to keep it hot.

Eventually Pheat reaches zero, that is, all of the energy needed to keep the plasma at the operational temperature is being supplied by self-heating, and the amount of external energy that needs to be added drops to zero. This point is known as ignition. In the case of D-T fuel, where only 20% of the energy is released as alphas that give rise to self-heating, this cannot occur until the plasma is releasing at least five times the power needed to keep it at its working temperature.
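
The factor of five follows from the 20% alpha fraction. As a rough sketch, take `q` to be the fusion power divided by the total power needed to hold the plasma at its working temperature, and assume all alpha energy is captured; the function name is illustrative.

```python
def external_heating_fraction(q, alpha_fraction=0.20):
    """Fraction of the required heating that must still come from outside.

    Self-heating supplies alpha_fraction * q of the requirement, so the
    remainder is 1 - alpha_fraction * q, floored at zero (ignition).
    """
    return max(0.0, 1.0 - alpha_fraction * q)


for q in (1, 2, 4, 5, 8):
    print(q, round(external_heating_fraction(q), 2))
```

With a 20% alpha fraction the external share reaches zero at q = 5, which is the threshold described above.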

Ignition, by definition, corresponds to an infinite Q, but it does not mean that frecirc drops to zero as the other power sinks in the system, like the magnets and cooling systems, still need to be powered. Generally, however, these are much smaller than the energy in the heaters, and require a much smaller frecirc. More importantly, this number is more likely to be near-constant, meaning that further improvements in plasma performance will result in more energy that can be directly used for commercial generation, as opposed to recirculation.

https://en.wikipedia.org/wiki/Fusion_energy_gain_factor

A field-reversed configuration (FRC) is a type of plasma device studied as a means of producing nuclear fusion. It confines a plasma on closed magnetic field lines without a central penetration.[1] In an FRC, the plasma has the form of a self-stable torus, similar to a smoke ring.

FRCs are closely related to another self-stable magnetic confinement fusion device, the spheromak. Both are considered part of the compact toroid class of fusion devices. FRCs normally have a plasma that is more elongated than spheromaks, having the overall shape of a hollowed out sausage rather than the roughly spherical spheromak.

FRCs were a major area of research in the 1960s and into the 1970s, but had problems scaling up into practical fusion triple products. Interest returned in the 1990s and as of 2019, FRCs were an active research area.

https://en.wikipedia.org/wiki/Field-reversed_configuration

Fusion power, processes and devices

Core topics: Nuclear fusion, Timeline, List of experiments, Nuclear power, Nuclear reactor, Atomic nucleus, Fusion energy gain factor, Lawson criterion, Magnetohydrodynamics, Neutron, Plasma

Processes and methods, by confinement type:

- Gravitational: Alpha process, Triple-alpha process, CNO cycle, Fusor, Helium flash, Nova remnants, Proton-proton chain, Carbon-burning, Lithium burning, Neon-burning, Oxygen-burning, Silicon-burning, R-process, S-process

- Magnetic: Dense plasma focus, Field-reversed configuration, Levitated dipole, Magnetic mirror, Bumpy torus, Reversed field pinch, Spheromak, Stellarator, Tokamak (Spherical), Z-pinch

- Inertial: Bubble (acoustic), Laser-driven, Ion-driven, Magnetized Liner Inertial Fusion

- Electrostatic: Fusor, Polywell

- Other forms: Colliding beam, Magnetized target, Migma, Muon-catalyzed, Pyroelectric

https://en.wikipedia.org/wiki/nuclear_cascade

https://en.wikipedia.org/wiki/Proton–proton_chain

https://en.wikipedia.org/wiki/CNO_cycle

https://en.wikipedia.org/wiki/Fusor_(astronomy)

https://en.wikipedia.org/wiki/Helium_flash

https://en.wikipedia.org/wiki/Fusion_energy_gain_factor

https://en.wikipedia.org/wiki/Aneutronic_fusion

Candidate reactions

Several nuclear reactions produce no neutrons on any of their branches. Those with the largest cross sections are these:

| Isotopes | Reaction |
|---|---|
| Deuterium – Helium-3 | 2D + 3He → 4He + 1p + 18.3 MeV |
| Deuterium – Lithium-6 | 2D + 6Li → 2 4He + 22.4 MeV |
| Proton – Lithium-6 | 1p + 6Li → 4He + 3He + 4.0 MeV |
| Helium-3 – Lithium-6 | 3He + 6Li → 2 4He + 1p + 16.9 MeV |
| Helium-3 – Helium-3 | 3He + 3He → 4He + 2 1p + 12.86 MeV |
| Proton – Lithium-7 | 1p + 7Li → 2 4He + 17.2 MeV |
| Proton – Boron-11 | 1p + 11B → 3 4He + 8.7 MeV |
| Proton – Nitrogen-15 | 1p + 15N → 12C + 4He + 5.0 MeV |
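
The energies in the table can be checked approximately from the mass difference between reactants and products. The sketch below does this for two rows; the atomic masses are rounded reference values supplied here as assumptions (they are not part of the table), and the helper name is illustrative.

```python
U_TO_MEV = 931.494  # energy equivalent of one atomic mass unit, in MeV

# Rounded atomic masses in u (assumed reference values, not from the table).
# Atomic masses are used on both sides, so the electron masses cancel.
MASS_U = {
    "1H": 1.007825,
    "2H": 2.014102,
    "3He": 3.016029,
    "4He": 4.002603,
    "11B": 11.009305,
}


def energy_release_mev(reactants, products):
    """Energy released, in MeV, from the reactant/product mass difference."""
    m_in = sum(MASS_U[s] for s in reactants)
    m_out = sum(MASS_U[s] for s in products)
    return (m_in - m_out) * U_TO_MEV


d_he3 = energy_release_mev(["2H", "3He"], ["4He", "1H"])
p_b11 = energy_release_mev(["1H", "11B"], ["4He", "4He", "4He"])
print(f"D + 3He: {d_he3:.1f} MeV, p + 11B: {p_b11:.1f} MeV")
```

Both results land within about 0.1 MeV of the tabulated 18.3 MeV and 8.7 MeV.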

Energy capture

Aneutronic fusion produces energy in the form of charged particles instead of neutrons. This means that energy from aneutronic fusion could be captured using direct conversion instead of the steam cycle. Direct conversion techniques can be inductive, based on changes in magnetic fields; electrostatic, based on pitting charged particles against an electric field; or photoelectric, in which light energy is captured. In a pulsed mode, inductive techniques could be used.[50]

Electrostatic direct conversion uses the motion of charged particles to create a voltage, which drives a current in a wire and so delivers electrical power. This is the reverse of most phenomena, which use a voltage to put a particle in motion. It has been described as a linear accelerator running backwards.[51] An early supporter of this method was Richard F. Post at Lawrence Livermore. He proposed to capture the kinetic energy of charged particles as they were exhausted from a fusion reactor and convert this into voltage to drive current.[52] Post helped develop the theoretical underpinnings of direct conversion, later demonstrated by Barr and Moir. They demonstrated a 48 percent energy capture efficiency on the Tandem Mirror Experiment in 1981.[53]

Aneutronic fusion loses much of its energy as light. This energy results from the acceleration and deceleration of charged particles, and is emitted as bremsstrahlung, cyclotron radiation, or synchrotron radiation, or through electric field interactions. The radiation can be estimated using the Larmor formula and comes in the X-ray, IR, UV and visible spectra. Some of the energy radiated as X-rays may be converted directly to electricity. Because of the photoelectric effect, X-rays passing through an array of conducting foils transfer some of their energy to electrons, which can then be captured electrostatically. Since X-rays can go through far greater material thickness than electrons, many hundreds or thousands of layers are needed to absorb them.[54]
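
For reference, the non-relativistic Larmor formula gives the power radiated by a charge q under acceleration a as P = q²a² / (6π ε0 c³). The sketch below evaluates it for a single electron; the acceleration value is an arbitrary illustration, and the function name is not from any source.

```python
import math

E_CHARGE = 1.602176634e-19    # elementary charge, C
EPSILON_0 = 8.8541878128e-12  # vacuum permittivity, F/m
C_LIGHT = 299_792_458.0       # speed of light, m/s


def larmor_power(charge_c, accel_m_s2):
    """Radiated power in watts from the non-relativistic Larmor formula."""
    return charge_c**2 * accel_m_s2**2 / (6 * math.pi * EPSILON_0 * C_LIGHT**3)


a = 1.0e20  # m/s^2, arbitrary illustrative acceleration
p1 = larmor_power(E_CHARGE, a)
p2 = larmor_power(E_CHARGE, 2 * a)
print(f"P(a) = {p1:.3e} W, P(2a)/P(a) = {p2 / p1:.1f}")
```

The quadratic dependence on acceleration is the point of interest: doubling the acceleration quadruples the radiated power.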

https://en.wikipedia.org/wiki/Aneutronic_fusion

Direct energy conversion (DEC) or simply direct conversion converts a charged particle's kinetic energy into a voltage. It is a scheme for power extraction from nuclear fusion.

https://en.wikipedia.org/wiki/Direct_energy_conversion

A magnetic mirror, known as a magnetic trap (магнитный захват) in Russia and briefly as a pyrotron in the US, is a type of magnetic confinement device used in fusion power to trap high temperature plasma using magnetic fields. The mirror was one of the earliest major approaches to fusion power, along with the stellarator and z-pinch machines.

https://en.wikipedia.org/wiki/Magnetic_mirror

The bumpy torus is a class of magnetic fusion energy devices that consist of a series of magnetic mirrors connected end-to-end to form a closed torus. It is based on a discovery made by a team headed by Dr. Ray Dandl at Oak Ridge National Laboratory in the 1960s.[1]

https://en.wikipedia.org/wiki/Bumpy_torus

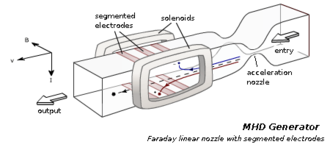

A magnetohydrodynamic generator (MHD generator) is a magnetohydrodynamic converter that utilizes a Brayton cycle to transform thermal energy and kinetic energy directly into electricity. MHD generators differ from traditional electric generators in that they have no moving parts (e.g. no turbine) that would limit the upper operating temperature. They therefore have the highest known theoretical thermodynamic efficiency of any electrical generation method. MHD has been extensively developed as a topping cycle to increase the efficiency of electric generation, especially when burning coal or natural gas. The hot exhaust gas from an MHD generator can heat the boilers of a steam power plant, increasing overall efficiency.

An MHD generator, like a conventional generator, relies on moving a conductor through a magnetic field to generate electric current. The MHD generator uses hot conductive ionized gas (a plasma) as the moving conductor. The mechanical dynamo, in contrast, uses the motion of mechanical devices to accomplish this.
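
As a rough illustration of the moving-conductor principle, the sketch below uses the idealized textbook relations for a uniform Faraday channel: open-circuit voltage V = u·B·d and power density p = σ·u²·B²·K·(1 − K), where K is the load factor (load voltage over open-circuit voltage). These relations and all numerical values are assumptions for illustration; they are not taken from the text above and ignore Hall effects and losses.

```python
def faraday_open_circuit_voltage(u_m_s, b_tesla, gap_m):
    """Open-circuit voltage across electrodes a distance gap_m apart."""
    return u_m_s * b_tesla * gap_m


def faraday_power_density(sigma_s_m, u_m_s, b_tesla, load_factor):
    """Ideal electrical power density in W/m^3 for a uniform Faraday channel."""
    if not 0.0 <= load_factor <= 1.0:
        raise ValueError("load factor must lie between 0 and 1")
    return sigma_s_m * u_m_s**2 * b_tesla**2 * load_factor * (1.0 - load_factor)


# Hypothetical operating point: 10 S/m plasma, 1000 m/s flow, 5 T field.
voltage = faraday_open_circuit_voltage(1000.0, 5.0, 0.5)
density = faraday_power_density(10.0, 1000.0, 5.0, 0.5)
print(f"V_oc = {voltage:.0f} V, power density = {density / 1e6:.1f} MW/m^3")
```

In this idealization the power density grows with conductivity and with the square of the field strength, and peaks at a load factor of 0.5.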

Practical MHD generators have been developed for fossil fuels, but these were overtaken by less expensive combined cycles in which the exhaust of a gas turbine or molten carbonate fuel cell heats steam to power a steam turbine.

MHD dynamos are the complement of MHD accelerators, which have been applied to pump liquid metals, seawater and plasmas.

Natural MHD dynamos are an active area of research in plasma physics and are of great interest to the geophysics and astrophysics communities, since the magnetic fields of the earth and sun are produced by these natural dynamos.

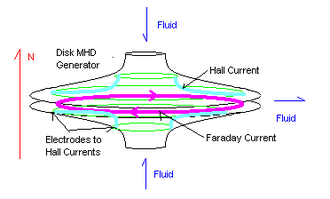

Disc generator

The third and, currently, the most efficient design is the Hall effect disc generator. This design currently holds the efficiency and energy density records for MHD generation. A disc generator has fluid flowing between the center of a disc, and a duct wrapped around the edge. (The ducts are not shown.) The magnetic excitation field is made by a pair of circular Helmholtz coils above and below the disk. (The coils are not shown.)

The Faraday currents flow in a perfect dead short around the periphery of the disk.

The Hall effect currents flow between ring electrodes near the center duct and ring electrodes near the periphery duct.

The wide, flat gas flow reduces the distance, and hence the resistance, of the moving fluid. This increases efficiency.

Another significant advantage of this design is that the magnets are more efficient. First, they produce simple parallel field lines. Second, because the fluid is processed in a disk, the magnet can be closer to the fluid, and in this magnetic geometry, magnetic field strength scales as the inverse 7th power of distance. Finally, the generator is compact for its power, so the magnet is also smaller. The resulting magnet uses a much smaller percentage of the generated power.

Generator efficiency

The efficiency of the direct energy conversion in MHD power generation increases with the magnetic field strength and the plasma conductivity, which depends directly on the plasma temperature, and more precisely on the electron temperature. As very hot plasmas can only be used in pulsed MHD generators (for example using shock tubes) due to the fast thermal erosion of materials, it was envisaged to use nonthermal plasmas as working fluids in steady MHD generators, where only the free electrons are strongly heated (10,000–20,000 kelvins) while the main gas (neutral atoms and ions) remains at a much lower temperature, typically 2500 kelvins. The goal was to preserve the materials of the generator (walls and electrodes) while improving the limited conductivity of such poor conductors to the same level as a plasma in thermodynamic equilibrium, i.e. completely heated to more than 10,000 kelvins, a temperature that no material could stand.[1][2][3][4]

However, Evgeny Velikhov discovered, theoretically in 1962 and experimentally in 1963, that an ionization instability, later called the Velikhov instability or electrothermal instability, quickly arises in any MHD converter using magnetized nonthermal plasmas with hot electrons once a critical Hall parameter is reached; its onset therefore depends on the degree of ionization and the magnetic field.[5][6][7] Such an instability greatly degrades the performance of nonequilibrium MHD generators. Because the technology had initially promised very high efficiencies, this setback crippled MHD programs all over the world, as no solution to mitigate the instability was found at that time.[8][9][10][11]

Also, MHD generators work better with stronger magnetic fields. The most successful magnets have been superconducting, and very close to the channel. A major difficulty was refrigerating these magnets while insulating them from the channel. The problem is worse because the magnets work better when they are closer to the channel. There are also severe risks of damage to the hot, brittle ceramics from differential thermal cracking. The magnets are usually near absolute zero, while the channel is at several thousand degrees.

A magnetohydrodynamic generator might also be the first stage of a gas-cooled nuclear reactor.[12]

Toxic byproducts

MHD reduces overall production of hazardous fossil fuel wastes because it increases plant efficiency. In MHD coal plants, the patented commercial "Econoseed" process developed by the U.S. (see below) recycles potassium ionization seed from the fly ash captured by the stack-gas scrubber. However, this equipment is an additional expense. If molten metal is the armature fluid of an MHD generator, care must be taken with the coolant of the electromagnetics and channel. The alkali metals commonly used as MHD fluids react violently with water. Also, the chemical byproducts of heated, electrified alkali metals and channel ceramics may be poisonous and environmentally persistent.

History

The first practical MHD power research was funded in 1938 in the U.S. by Westinghouse in its Pittsburgh, Pennsylvania laboratories, headed by Hungarian Bela Karlovitz. The initial patent on MHD is by B. Karlovitz, U.S. Patent No. 2,210,918, "Process for the Conversion of Energy", August 13, 1940.

Former Yugoslavia development

Over a span of more than ten years, engineers at the former Yugoslavian Institute of Thermal and Nuclear Technology (ITEN), Energoinvest Co., Sarajevo, built the first experimental magnetohydrodynamic facility power generator, completing it in 1989. It was first patented here.[16][17]

U.S. development

In the 1980s, the U.S. Department of Energy began a vigorous multiyear program, culminating in a 1992 50 MW demonstration coal combustor at the Component Development and Integration Facility (CDIF) in Butte, Montana. This program also had significant work at the Coal-Fired-In-Flow-Facility (CFIFF) at University of Tennessee Space Institute.

This program combined four parts:

- An integrated MHD topping cycle, with channel, electrodes and current control units developed by AVCO, later known as Textron Defence of Boston. This system was a Hall effect duct generator heated by pulverized coal, with a potassium ionisation seed. AVCO had developed the famous Mk. V generator, and had significant experience.

- An integrated bottoming cycle, developed at the CDIF.

- A facility to regenerate the ionization seed was developed by TRW. Potassium carbonate is separated from the sulphate in the fly ash from the scrubbers. The carbonate is removed, to regain the potassium.

- A method to integrate MHD into preexisting coal plants. The Department of Energy commissioned two studies. Westinghouse Electric performed a study based on the Scholtz Plant of Gulf Power in Sneads, Florida. The MHD Development Corporation also produced a study based on the J.E. Corrette Plant of the Montana Power Company of Billings, Montana.

Initial prototypes at the CDIF were operated for short durations, with various coals: Montana Rosebud, and a high-sulphur corrosive coal, Illinois No. 6. A great deal of engineering, chemistry and material science was completed. After final components were developed, operational testing completed with 4,000 hours of continuous operation, 2,000 on Montana Rosebud, 2,000 on Illinois No. 6. The testing ended in 1993.[citation needed]

Japanese development

The Japanese program in the late 1980s concentrated on closed-cycle MHD. The belief was that it would have higher efficiencies, and smaller equipment, especially in the clean, small, economical plant capacities near 100 megawatts (electrical) which are suited to Japanese conditions. Open-cycle coal-powered plants are generally thought to become economical above 200 megawatts.

The first major series of experiments was FUJI-1, a blow-down system powered from a shock tube at the Tokyo Institute of Technology. These experiments extracted up to 30.2% of enthalpy, and achieved power densities near 100 megawatts per cubic meter. This facility was funded by Tokyo Electric Power, other Japanese utilities, and the Department of Education. Some authorities believe this system was a disc generator with a helium and argon carrier gas and potassium ionization seed.

In 1994, there were detailed plans for FUJI-2, a 5 MWe continuous closed-cycle facility, powered by natural gas, to be built using the experience of FUJI-1. The basic MHD design was to be a system with inert gases using a disk generator. The aim was an enthalpy extraction of 30% and an MHD thermal efficiency of 60%. FUJI-2 was to be followed by a retrofit to a 300 MWe natural gas plant.

Australian development

In 1986, Professor Hugo Karl Messerle at The University of Sydney researched coal-fueled MHD. This resulted in a 28 MWe topping facility that was operated outside Sydney. Messerle also wrote one of the most recent reference works (see below), as part of a UNESCO education program.

A detailed obituary for Messerle is available on the Australian Academy of Technological Sciences and Engineering (ATSE) website.[18]

Italian development

The Italian program began in 1989 with a budget of about US$20 million, and had three main development areas:

- MHD Modelling.

- Superconducting magnet development. The goal in 1994 was a prototype 2 m long, storing 66 MJ, for an MHD demonstration 8 m long. The field was to be 5 teslas, with a taper of 0.15 T/m. The geometry was to resemble a saddle shape, with cylindrical and rectangular windings of niobium-titanium copper.

- Retrofits to natural gas power plants. One was to be at the Enichem-Anic factory in Ravenna. In this plant, the combustion gases from the MHD would pass to the boiler. The other was a 230 MW (thermal) installation for a power station in Brindisi, which would pass steam to the main power plant.

Chinese development

A joint U.S.-China national programme ended in 1992 by retrofitting the coal-fired No. 3 plant in Asbach.[citation needed] A further eleven-year program was approved in March 1994. This established centres of research in:

- The Institute of Electrical Engineering in the Chinese Academy of Sciences, Beijing, concerned with MHD generator design.

- The Shanghai Power Research Institute, concerned with overall system and superconducting magnet research.

- The Thermoenergy Research Engineering Institute at Nanjing's Southeast University, concerned with later developments.

The 1994 study proposed a 10 MW (electrical, 108 MW thermal) generator with the MHD and bottoming cycle plants connected by steam piping, so either could operate independently.

Russian developments

In 1971 the natural-gas fired U-25 plant was completed near Moscow, with a designed capacity of 25 megawatts. By 1974 it delivered 6 megawatts of power.[19] By 1994, Russia had developed and operated the coal-operated facility U-25, at the High-Temperature Institute of the Russian Academy of Science in Moscow. U-25's bottoming plant was actually operated under contract with the Moscow utility, and fed power into Moscow's grid. There was substantial interest in Russia in developing a coal-powered disc generator. In 1986 the first industrial power plant with an MHD generator was built, but in 1989 the project was cancelled before the MHD launch, and the plant was later joined to Ryazan Power Station as its 7th unit, of conventional construction.

https://en.wikipedia.org/wiki/Magnetohydrodynamic_generator

Shocks and discontinuities are transition layers where the plasma properties change from one equilibrium state to another. The relation between the plasma properties on both sides of a shock or a discontinuity can be obtained from the conservative form of the magnetohydrodynamic (MHD) equations, assuming conservation of mass, momentum, energy and of ∇·B.

https://en.wikipedia.org/wiki/Shocks_and_discontinuities_(magnetohydrodynamics)

https://en.wikipedia.org/wiki/Helion_Energy

https://en.wikipedia.org/wiki/Magnetic_pressure

https://en.wikipedia.org/wiki/Tangential_and_normal_components

https://en.wikipedia.org/wiki/Density

https://en.wikipedia.org/wiki/thermodynamics

https://en.wikipedia.org/wiki/Speed_of_sound

https://en.wikipedia.org/wiki/Bow_shock

https://en.wikipedia.org/wiki/Category:Space_plasmas

https://en.wikipedia.org/wiki/Magnetic_confinement_fusion

https://en.wikipedia.org/wiki/Plasma_(physics)

https://en.wikipedia.org/wiki/Neutron

https://en.wikipedia.org/wiki/Levitated_dipole

https://en.wikipedia.org/wiki/Stellarator

https://en.wikipedia.org/wiki/Z-pinch

https://en.wikipedia.org/wiki/Thermonuclear_fusion#Confinement

https://en.wikipedia.org/wiki/Vacuum

https://en.wikipedia.org/wiki/Binding_energy#Nuclear_binding_energy_curve

https://en.wikipedia.org/wiki/R-process

https://en.wikipedia.org/wiki/Voitenko_compressor

https://en.wikipedia.org/wiki/Shear

https://en.wikipedia.org/wiki/conduction

https://en.wikipedia.org/wiki/compression

https://en.wikipedia.org/wiki/Spinor

https://en.wikipedia.org/wiki/Spin_(physics)

https://en.wikipedia.org/wiki/intrinsic_angular_momentum

https://en.wikipedia.org/wiki/scalar

https://en.wikipedia.org/wiki/eigen

https://en.wikipedia.org/wiki/zero

https://en.wikipedia.org/wiki/linear

https://en.wikipedia.org/wiki/Spin–statistics_theorem

https://en.wikipedia.org/wiki/Intrinsically_disordered_proteins

https://en.wikipedia.org/wiki/supramolecular_chemistry

https://en.wikipedia.org/wiki/Folding_(chemistry)

https://en.wikipedia.org/wiki/Category:Self-organization

https://en.wikipedia.org/wiki/Globular_protein

https://en.wikipedia.org/wiki/Scleroprotein

Spinors were introduced in geometry by Élie Cartan in 1913.[1][d] In the 1920s physicists discovered that spinors are essential to describe the intrinsic angular momentum, or "spin", of the electron and other subatomic particles.[e]

https://en.wikipedia.org/wiki/Spinor

https://en.wikipedia.org/wiki/Möbius_strip

https://en.wikipedia.org/wiki/Killing_spinor

https://en.wikipedia.org/wiki/Spinor_condensate

https://en.wikipedia.org/wiki/Spin–spin_relaxation

https://en.wikipedia.org/wiki/Spinor_spherical_harmonics

https://en.wikipedia.org/wiki/Orthogonal_group

https://en.wikipedia.org/wiki/Dirac_operator

https://en.wikipedia.org/wiki/Spin-1/2

https://en.wikipedia.org/wiki/Metal_spinning

https://en.wikipedia.org/wiki/Symplectic_spinor_bundle

https://en.wikipedia.org/wiki/Vorticity#Vortex_lines_and_vortex_tubes

https://en.wikipedia.org/wiki/Wingtip_vortices

https://en.wikipedia.org/wiki/Dust_devil

https://en.wikipedia.org/wiki/Negative_temperature#Two-dimensional_vortex_motion

https://en.wikipedia.org/wiki/Strake_(aeronautics)#Anti-spin_strakes

https://en.wikipedia.org/wiki/negative_matter

https://en.wikipedia.org/wiki/negative_resistance

https://en.wikipedia.org/wiki/negative_energy

https://en.wikipedia.org/wiki/Atomic_Age

https://en.wikipedia.org/wiki/Stimulated_emission#Small_signal_gain_equation

https://en.wikipedia.org/wiki/Ion

https://en.wikipedia.org/wiki/Second

https://en.wikipedia.org/wiki/Atomic_layer_deposition

https://en.wikipedia.org/wiki/Gas

https://en.wikipedia.org/wiki/Neon

https://en.wikipedia.org/wiki/Accretion_disk

https://en.wikipedia.org/wiki/astrophysical_jet

Triple-alpha process in stars

Helium accumulates in the cores of stars as a result of the proton–proton chain reaction and the carbon–nitrogen–oxygen cycle.

https://en.wikipedia.org/wiki/Magnetohydrodynamics

https://en.wikipedia.org/wiki/Fusion_energy_gain_factor

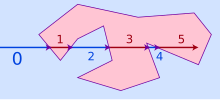

In linguistics, and more precisely in traditional grammar, a cardinal numeral (or cardinal number word) is a part of speech used to count. Examples in English are the words one, two, three, and the compounds three hundred and forty-two and nine hundred and sixty. Cardinal numerals are classified as definite, and are related to ordinal numbers, such as the English first, second, and third.[1][2][3]

See also

- Arity

- Cardinal number for the related usage in mathematics

- English numerals (in particular the Cardinal numbers section)

- Distributive number

- Multiplier

- Numeral for examples of number systems

- Ordinal number

- Valency

In linguistics, ordinal numerals or ordinal number words are words representing position or rank in a sequential order; the order may be of size, importance, chronology, and so on (e.g., "third", "tertiary"). They differ from cardinal numerals, which represent quantity (e.g., "three") and other types of numerals.

In traditional grammar, all numerals, including ordinal numerals, are grouped into a separate part of speech (Latin: nomen numerale, hence, "noun numeral" in older English grammar books). However, in modern interpretations of English grammar, ordinal numerals are usually conflated with adjectives.

Ordinal numbers may be written in English with numerals and letter suffixes: 1st, 2nd or 2d, 3rd or 3d, 4th, 11th, 21st, 101st, 477th, etc., with the suffix acting as an ordinal indicator. Written dates often omit the suffix, although it is nevertheless pronounced. For example: 5 November 1605 (pronounced "the fifth of November ... "); November 5, 1605, ("November (the) Fifth ..."). When written out in full with "of", however, the suffix is retained: the 5th of November. In other languages, different ordinal indicators are used to write ordinal numbers.
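The suffix rule described above can be sketched in a few lines of Python. This is only an illustration: the function name `ordinal` is hypothetical, and the sketch covers the common "st", "nd", "rd", "th" suffixes but not the shorter "2d" and "3d" variants.

```python
def ordinal(n: int) -> str:
    """Return n followed by its English ordinal suffix, e.g. 1 -> '1st'."""
    if n < 0:
        raise ValueError("expected a non-negative integer")
    # Numbers ending in 11, 12 or 13 take 'th' even though they end in 1, 2, 3.
    if 11 <= n % 100 <= 13:
        suffix = "th"
    else:
        suffix = {1: "st", 2: "nd", 3: "rd"}.get(n % 10, "th")
    return f"{n}{suffix}"


# The examples listed in the paragraph above.
print([ordinal(n) for n in (1, 2, 3, 4, 11, 21, 101, 477)])
```

The special case for 11 to 13 is checked first because those numbers would otherwise pick up the suffix of their last digit.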

In American Sign Language, the ordinal numbers first through ninth are formed with handshapes similar to those for the corresponding cardinal numbers with the addition of a small twist of the wrist.[1]

https://en.wikipedia.org/wiki/Ordinal_numeral

0 (zero) is a number,[1] and the numerical digit used to represent that number in numerals. It fulfills a central role in mathematics as the additive identity[2] of the integers, real numbers, and many other algebraic structures. As a digit, 0 is used as a placeholder in place value systems. Names for the number 0 in English include zero, nought (UK), naught (US; /nɔːt/), nil, or—in contexts where at least one adjacent digit distinguishes it from the letter "O"—oh or o (/oʊ/). Informal or slang terms for zero include zilch and zip.[3] Ought and aught (/ɔːt/),[4] as well as cipher,[5] have also been used historically.[6][7]

https://en.wikipedia.org/wiki/0

Zero-point energy (ZPE) is the lowest possible energy that a quantum mechanical system may have. Unlike in classical mechanics, quantum systems constantly fluctuate in their lowest energy state as described by the Heisenberg uncertainty principle.[1] As well as atoms and molecules, the empty space of the vacuum has these properties. According to quantum field theory, the universe can be thought of not as isolated particles but continuous fluctuating fields: matter fields, whose quanta are fermions (i.e., leptons and quarks), and force fields, whose quanta are bosons (e.g., photons and gluons). All these fields have zero-point energy.[2] These fluctuating zero-point fields lead to a kind of reintroduction of an aether in physics[1][3] since some systems can detect the existence of this energy. However, this aether cannot be thought of as a physical medium if it is to be Lorentz invariant such that there is no contradiction with Einstein's theory of special relativity.[1]

Physics currently lacks a full theoretical model for understanding zero-point energy; in particular, the discrepancy between theorized and observed vacuum energy is a source of major contention.[4] Physicists Richard Feynman and John Wheeler calculated the zero-point radiation of the vacuum to be an order of magnitude greater than nuclear energy, with a single light bulb containing enough energy to boil all the world's oceans.[5] Yet according to Einstein's theory of general relativity, any such energy would gravitate and the experimental evidence from both the expansion of the universe, dark energy and the Casimir effect shows any such energy to be exceptionally weak. A popular proposal that attempts to address this issue is to say that the fermion field has a negative zero-point energy, while the boson field has positive zero-point energy and thus these energies somehow cancel each other out.[6][7] This idea would be true if supersymmetry were an exact symmetry of nature; however, the LHC at CERN has so far found no evidence to support it. Moreover, it is known that if supersymmetry is valid at all, it is at most a broken symmetry, only true at very high energies, and no one has been able to show a theory where zero-point cancellations occur in the low energy universe we observe today.[7] This discrepancy is known as the cosmological constant problem and it is one of the greatest unsolved mysteries in physics. Many physicists believe that "the vacuum holds the key to a full understanding of nature".[8]

https://en.wikipedia.org/wiki/Zero-point_energy

Necessity of the vacuum field in QED

The vacuum state of the "free" electromagnetic field (that with no sources) is defined as the ground state in which nkλ = 0 for all modes (k, λ). The vacuum state, like all stationary states of the field, is an eigenstate of the Hamiltonian but not the electric and magnetic field operators. In the vacuum state, therefore, the electric and magnetic fields do not have definite values. We can imagine them to be fluctuating about their mean value of zero.

In a process in which a photon is annihilated (absorbed), we can think of the photon as making a transition into the vacuum state. Similarly, when a photon is created (emitted), it is occasionally useful to imagine that the photon has made a transition out of the vacuum state.[55] An atom, for instance, can be considered to be "dressed" by emission and reabsorption of "virtual photons" from the vacuum. The vacuum state energy described by $\sum_{k\lambda} \hbar\omega_k/2$ is infinite. We can make the replacement:

the zero-point energy density is:

or in other words the spectral energy density of the vacuum field:

The zero-point energy density in the frequency range from ω1 to ω2 is therefore:

This can be large even in relatively narrow "low frequency" regions of the spectrum. In the optical region from 400 to 700 nm, for instance, the above equation yields around 220 erg/cm3.
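The figure of about 220 erg/cm3 can be checked numerically. The sketch below assumes the standard spectral energy density of the vacuum field, ρ0(ω) = ħω³/(2π²c³), whose integral from ω1 to ω2 is ħ(ω2⁴ − ω1⁴)/(8π²c³); that formula is not printed in the text above and is supplied here as an assumption. The function name is hypothetical.

```python
import math

HBAR = 1.054571817e-34  # reduced Planck constant, J*s
C = 2.99792458e8        # speed of light in vacuum, m/s


def zero_point_energy_density(lambda_short_m: float, lambda_long_m: float) -> float:
    """Zero-point energy density (J/m^3) between two vacuum wavelengths.

    Assumes rho_0(omega) = hbar * omega**3 / (2 * pi**2 * c**3),
    integrated analytically over the angular-frequency band.
    """
    omega_hi = 2 * math.pi * C / lambda_short_m  # shorter wavelength, higher frequency
    omega_lo = 2 * math.pi * C / lambda_long_m
    return HBAR * (omega_hi**4 - omega_lo**4) / (8 * math.pi**2 * C**3)


density_si = zero_point_energy_density(400e-9, 700e-9)
density_cgs = density_si * 10.0  # 1 J/m^3 equals 10 erg/cm^3
print(f"{density_cgs:.0f} erg/cm^3")
```

With these constants the result comes out close to the quoted value of around 220 erg/cm3.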

We showed in the above section that the zero-point energy can be eliminated from the Hamiltonian by the normal ordering prescription. However, this elimination does not mean that the vacuum field has been rendered unimportant or without physical consequences. To illustrate this point we consider a linear dipole oscillator in the vacuum. The Hamiltonian for the oscillator plus the field with which it interacts is:

This has the same form as the corresponding classical Hamiltonian and the Heisenberg equations of motion for the oscillator and the field are formally the same as their classical counterparts. For instance the Heisenberg equations for the coordinate x and the canonical momentum $p = m\dot{x} + eA/c$ of the oscillator are:

or:

since the rate of change of the vector potential in the frame of the moving charge is given by the convective derivative

For nonrelativistic motion we may neglect the magnetic force and replace the expression for mẍ by:

Above we have made the electric dipole approximation in which the spatial dependence of the field is neglected. The Heisenberg equation for akλ is found similarly from the Hamiltonian to be:

In the electric dipole approximation.

In deriving these equations for x, p, and akλ we have used the fact that equal-time particle and field operators commute. This follows from the assumption that particle and field operators commute at some time (say, t = 0) when the matter-field interaction is presumed to begin, together with the fact that a Heisenberg-picture operator A(t) evolves in time as A(t) = U†(t)A(0)U(t), where U(t) is the time evolution operator satisfying

Alternatively, we can argue that these operators must commute if we are to obtain the correct equations of motion from the Hamiltonian, just as the corresponding Poisson brackets in classical theory must vanish in order to generate the correct Hamilton equations. The formal solution of the field equation is:

and therefore the equation for ȧkλ may be written:

where:

and:

It can be shown that in the radiation reaction field, if the mass m is regarded as the "observed" mass then we can take:

The total field acting on the dipole has two parts, E0(t) and ERR(t). E0(t) is the free or zero-point field acting on the dipole. It is the homogeneous solution of the Maxwell equation for the field acting on the dipole, i.e., the solution, at the position of the dipole, of the wave equation

satisfied by the field in the (source free) vacuum. For this reason E0(t) is often referred to as the "vacuum field", although it is of course a Heisenberg-picture operator acting on whatever state of the field happens to be appropriate at t = 0. ERR(t) is the source field, the field generated by the dipole and acting on the dipole.

Using the above equation for ERR(t) we obtain an equation for the Heisenberg-picture operator that is formally the same as the classical equation for a linear dipole oscillator:

where $\tau = 2e^2/(3mc^3)$. In this instance we have considered a dipole in the vacuum, without any "external" field acting on it. The role of the external field in the above equation is played by the vacuum electric field acting on the dipole.

Classically, a dipole in the vacuum is not acted upon by any "external" field: if there are no sources other than the dipole itself, then the only field acting on the dipole is its own radiation reaction field. In quantum theory however there is always an "external" field, namely the source-free or vacuum field E0(t).

According to our earlier equation for akλ(t) the free field is the only field in existence at t = 0 as the time at which the interaction between the dipole and the field is "switched on". The state vector of the dipole-field system at t = 0 is therefore of the form

where |vac⟩ is the vacuum state of the field and |ψD⟩ is the initial state of the dipole oscillator. The expectation value of the free field is therefore at all times equal to zero:

since akλ(0)|vac⟩ = 0. However, the energy density associated with the free field is infinite:

The important point of this is that the zero-point field energy HF does not affect the Heisenberg equation for akλ since it is a c-number or constant (i.e. an ordinary number rather than an operator) and commutes with akλ. We can therefore drop the zero-point field energy from the Hamiltonian, as is usually done. But the zero-point field re-emerges as the homogeneous solution for the field equation. A charged particle in the vacuum will therefore always see a zero-point field of infinite density. This is the origin of one of the infinities of quantum electrodynamics, and it cannot be eliminated by the trivial expedient of dropping the term $\sum_{k\lambda} \hbar\omega_k/2$ in the field Hamiltonian.

The free field is in fact necessary for the formal consistency of the theory. In particular, it is necessary for the preservation of the commutation relations, which is required by the unitarity of time evolution in quantum theory:

We can calculate [z(t),pz(t)] from the formal solution of the operator equation of motion

Using the fact that

and that equal-time particle and field operators commute, we obtain:

For the dipole oscillator under consideration it can be assumed that the radiative damping rate is small compared with the natural oscillation frequency, i.e., τω0 ≪ 1. Then the integrand above is sharply peaked at ω = ω0 and:

The necessity of the vacuum field can also be appreciated by making the small damping approximation in

and

Without the free field E0(t) in this equation the operator x(t) would be exponentially damped, and commutators like [z(t),pz(t)] would approach zero for $t \gg 1/(\tau\omega_0^2)$. With the vacuum field included, however, the commutator is iħ at all times, as required by unitarity, and as we have just shown. A similar result is easily worked out for the case of a free particle instead of a dipole oscillator.[98]

What we have here is an example of a "fluctuation-dissipation relation". Generally speaking, if a system is coupled to a bath that can take energy from the system in an effectively irreversible way, then the bath must also cause fluctuations. The fluctuations and the dissipation go hand in hand; we cannot have one without the other. In the current example the coupling of a dipole oscillator to the electromagnetic field has a dissipative component, in the form of radiation reaction, and a fluctuation component, in the form of the zero-point (vacuum) field; given the existence of radiation reaction, the vacuum field must also exist in order to preserve the canonical commutation rule and all it entails.

The spectral density of the vacuum field is fixed by the form of the radiation reaction field, or vice versa: because the radiation reaction field varies with the third derivative of x, the spectral energy density of the vacuum field must be proportional to the third power of ω in order for [z(t),pz(t)] = iħ to hold. In the case of a dissipative force proportional to ẋ, by contrast, the fluctuation force must be proportional to ω in order to maintain the canonical commutation relation.[98] This relation between the form of the dissipation and the spectral density of the fluctuation is the essence of the fluctuation-dissipation theorem.[77]

The fact that the canonical commutation relation for a harmonic oscillator coupled to the vacuum field is preserved implies that the zero-point energy of the oscillator is preserved. It is easy to show that after a few damping times the zero-point motion of the oscillator is in fact sustained by the driving zero-point field.[99]

https://en.wikipedia.org/wiki/Zero-point_energy#Necessity_of_the_vacuum_field_in_QED

The cosmic microwave background (CMB, CMBR), in Big Bang cosmology, is electromagnetic radiation which is a remnant from an early stage of the universe, also known as "relic radiation".[1] The CMB is faint cosmic background radiation filling all space. It is an important source of data on the early universe because it is the oldest electromagnetic radiation in the universe, dating to the epoch of recombination. With a traditional optical telescope, the space between stars and galaxies (the background) is completely dark. However, a sufficiently sensitive radio telescope shows a faint background noise, or glow, almost isotropic, that is not associated with any star, galaxy, or other object. This glow is strongest in the microwave region of the radio spectrum. The accidental discovery of the CMB in 1965 by American radio astronomers Arno Penzias and Robert Wilson[2][3] was the culmination of work initiated in the 1940s, and earned the discoverers the 1978 Nobel Prize in Physics.

https://en.wikipedia.org/wiki/Cosmic_microwave_background

In quantum physics, a quantum fluctuation (or vacuum state fluctuation or vacuum fluctuation) is the temporary random change in the amount of energy in a point in space,[2] as prescribed by Werner Heisenberg's uncertainty principle. They are tiny random fluctuations in the values of the fields which represent elementary particles, such as electric and magnetic fields which represent the electromagnetic force carried by photons, W and Z fields which carry the weak force, and gluon fields which carry the strong force.[3] Vacuum fluctuations appear as virtual particles, which are always created in particle-antiparticle pairs.[4] Since they are created spontaneously without a source of energy, vacuum fluctuations and virtual particles are said to violate the conservation of energy. This is theoretically allowable because the particles annihilate each other within a time limit determined by the uncertainty principle so they are not directly observable.[4][3] The uncertainty principle states that the uncertainties in energy and time are related by[5] $\Delta E \, \Delta t \geq \tfrac{1}{2}\hbar$, where $\tfrac{1}{2}\hbar \approx 5.27286 \times 10^{-35}$ J·s. This means that pairs of virtual particles with energy $\Delta E$ and lifetime shorter than $\Delta t$ are continually created and annihilated in empty space. Although the particles are not directly detectable, the cumulative effects of these particles are measurable. For example, without quantum fluctuations the "bare" mass and charge of elementary particles would be infinite; from renormalization theory the shielding effect of the cloud of virtual particles is responsible for the finite mass and charge of elementary particles. Another consequence is the Casimir effect. One of the first observations which was evidence for vacuum fluctuations was the Lamb shift in hydrogen.

In July 2020, scientists reported that quantum vacuum fluctuations can influence the motion of macroscopic, human-scale objects by measuring correlations below the standard quantum limit between the position/momentum uncertainty of the mirrors of LIGO and the photon number/phase uncertainty of light that they reflect.[6][7][8]
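As a rough numerical illustration of the energy-time relation, the sketch below evaluates ħ/2 and the corresponding lifetime bound Δt ≈ ħ/(2ΔE) for a virtual electron-positron pair. The choice of an electron-positron pair, the function name `max_lifetime`, and the reading of the relation as a lifetime bound are assumptions made for this example; the estimate is heuristic only.

```python
HBAR = 1.054571817e-34                      # reduced Planck constant, J*s
ELECTRON_REST_ENERGY_J = 8.1871057769e-14   # m_e * c^2 in joules


def max_lifetime(delta_e_joules: float) -> float:
    """Heuristic lifetime bound (s) for a fluctuation of energy delta_e: hbar / (2 * delta_e)."""
    if delta_e_joules <= 0:
        raise ValueError("energy must be positive")
    return HBAR / (2.0 * delta_e_joules)


half_hbar = HBAR / 2.0
pair_energy = 2.0 * ELECTRON_REST_ENERGY_J  # rest energy of an electron-positron pair
print(f"hbar/2 = {half_hbar:.5e} J*s")
print(f"pair lifetime bound = {max_lifetime(pair_energy):.2e} s")
```

The larger the energy of the fluctuation, the shorter the time it can persist under this estimate.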

https://en.wikipedia.org/wiki/Quantum_fluctuation

https://en.wikipedia.org/wiki/Quantum_annealing

https://en.wikipedia.org/wiki/Quantum_foam

Vacuum energy is an underlying background energy that exists in space throughout the entire Universe.[1] The vacuum energy is a special case of zero-point energy that relates to the quantum vacuum.[2]

Why does the zero-point energy of the vacuum not cause a large cosmological constant? What cancels it out?

Summing over all possible oscillators at all points in space gives an infinite quantity. To remove this infinity, one may argue that only differences in energy are physically measurable, much as the concept of potential energy has been treated in classical mechanics for centuries. This argument is the underpinning of the theory of renormalization. In all practical calculations, this is how the infinity is handled.

Vacuum energy can also be thought of in terms of virtual particles (also known as vacuum fluctuations) which are created and destroyed out of the vacuum. These particles are always created out of the vacuum in particle–antiparticle pairs, which in most cases shortly annihilate each other and disappear. However, these particles and antiparticles may interact with others before disappearing, a process which can be mapped using Feynman diagrams. Note that this method of computing vacuum energy is mathematically equivalent to having a quantum harmonic oscillator at each point and, therefore, suffers the same renormalization problems.

Additional contributions to the vacuum energy come from spontaneous symmetry breaking in quantum field theory.

https://en.wikipedia.org/wiki/Vacuum_energy

Above. Property of material, origins, scale, hydrogen emission spectra, consideration of the possibility of fine state (no two states match without signal and with proper measure); consider approximate vacuum model and limit of math; actual vs. model; zero: absolute zero, idea of zero, number-line zero, force zero, concept of zero, type of zero; heterogeneous or control at homogeneity; supersession; etc.

Description

The defining criterion of a shock wave is that the bulk velocity of the plasma drops from "supersonic" to "subsonic", where the speed of sound $c_s$ is defined by $c_s^2 = \gamma p / \rho$, where $\gamma$ is the ratio of specific heats, $p$ is the pressure, and $\rho$ is the density of the plasma.
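The shock criterion can be expressed as a short calculation. The sketch below assumes the standard relation c_s² = γp/ρ for the speed of sound and classifies a flow as supersonic when its Mach number exceeds 1. The function names are hypothetical, and the sample values are ordinary sea-level air, used only as a sanity check rather than as plasma data.

```python
import math


def sound_speed(gamma: float, pressure: float, density: float) -> float:
    """Speed of sound (m/s) from c_s^2 = gamma * p / rho, with SI inputs."""
    return math.sqrt(gamma * pressure / density)


def mach_number(bulk_speed: float, gamma: float, pressure: float, density: float) -> float:
    """Ratio of the bulk flow speed to the local speed of sound."""
    return bulk_speed / sound_speed(gamma, pressure, density)


def is_supersonic(bulk_speed: float, gamma: float, pressure: float, density: float) -> bool:
    """True when the bulk flow is faster than the local speed of sound."""
    return mach_number(bulk_speed, gamma, pressure, density) > 1.0


# Sanity check with sea-level air: gamma = 1.4, p = 101325 Pa, rho = 1.225 kg/m^3.
c_air = sound_speed(1.4, 101325.0, 1.225)
print(f"c_s in air: {c_air:.1f} m/s")
print(is_supersonic(500.0, 1.4, 101325.0, 1.225), is_supersonic(100.0, 1.4, 101325.0, 1.225))
```

Across a shock, the upstream flow would satisfy `is_supersonic` and the downstream flow would not.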

A common complication in astrophysics is the presence of a magnetic field. For instance, the charged particles making up the solar wind follow spiral paths along magnetic field lines. The velocity of each particle as it gyrates around a field line can be treated similarly to a thermal velocity in an ordinary gas, and in an ordinary gas the mean thermal velocity is roughly the speed of sound. At the bow shock, the bulk forward velocity of the wind (which is the component of the velocity parallel to the field lines about which the particles gyrate) drops below the speed at which the particles are gyrating.

https://en.wikipedia.org/wiki/Bow_shock

Comet Grigg–Skjellerup (formally designated 26P/Grigg–Skjellerup) is a periodic comet. It was visited by the Giotto probe in July 1992.[5]

https://en.wikipedia.org/wiki/26P/Grigg–Skjellerup

| Brownleeite | |
|---|---|
| General | |
| Category | Native element class, Fersilicite group |
| Formula (repeating unit) | MnSi |
| Strunz classification | 1.XX.00 |
| Dana classification | 01.01.23.07 |
| Crystal system | Isometric |
| Crystal class | Tetartoidal (23) H-M symbol: (23) |
| Space group | P2₁3 |
| Identification | |
| Crystal habit | Cubic grain in microscopic dust particle (< 2.5 μm) |
| References | [1][2] |

A mirror galvanometer is an ammeter that indicates it has sensed an electric current by deflecting a light beam with a mirror. The beam of light projected on a scale acts as a long massless pointer. In 1826, Johann Christian Poggendorff developed the mirror galvanometer for detecting electric currents. The apparatus is also known as a spot galvanometer after the spot of light produced in some models.

Mirror galvanometers were used extensively in scientific instruments before reliable, stable electronic amplifiers were available. The most common uses were as recording equipment for seismometers and submarine cables used for telegraphy.

In modern times, the term mirror galvanometer is also used for devices that move laser beams by rotating a mirror through a galvanometer set-up, often with a servo-like control loop. The name is often abbreviated as galvo.

Kelvin's galvanometer

The mirror galvanometer was improved significantly by William Thomson, later to become Lord Kelvin. He coined the term mirror galvanometer and patented the device in 1858. Thomson intended the instrument to read weak signal currents on very long submarine telegraph cables.[1] This instrument was far more sensitive than any which preceded it, enabling the detection of the slightest defect in the core of a cable during its manufacture and submersion.

Thomson decided that he needed an extremely sensitive instrument after he took part in the failed attempt to lay a transatlantic telegraph cable in 1857. He worked on the device while waiting for a new expedition the following year. He first looked at improving a galvanometer used by Hermann von Helmholtz to measure the speed of nerve signals in 1849. Helmholtz's galvanometer had a mirror fixed to the moving needle, which was used to project a beam of light onto the opposite wall, thus greatly amplifying the signal. Thomson intended to make this more sensitive by reducing the mass of the moving parts, but in a flash of inspiration while watching the light reflected from his monocle suspended around his neck, he realised that he could dispense with the needle and its mounting altogether. He instead used a small piece of mirrored glass with a small piece of magnetised steel glued on the back. This was suspended by a thread in the magnetic field of the fixed sensing coil. In a hurry to try the idea, Thomson first used a hair from his dog, but later used a silk thread from the dress of his niece Agnes.[1]

The following is adapted from a contemporary account of Thomson's instrument:[2]

Moving coil galvanometer

The moving coil galvanometer was developed independently by Marcel Deprez and Jacques-Arsène d'Arsonval about 1880. Deprez's galvanometer was developed for high currents, while d'Arsonval designed his to measure weak currents. Unlike in Kelvin's galvanometer, in this type of galvanometer the magnet is stationary and the coil is suspended in the magnet gap. The mirror attached to the coil frame rotates together with it. This form of instrument can be more sensitive and accurate, and it replaced Kelvin's galvanometer in most applications. The moving coil galvanometer is practically immune to ambient magnetic fields. Another important feature is self-damping generated by the electromagnetic forces due to the currents induced in the coil by its movement in the magnetic field. These are proportional to the angular velocity of the coil.

In modern times, high-speed mirror galvanometers are employed in laser light shows to move the laser beams and produce colorful geometric patterns in fog around the audience. Such high speed mirror galvanometers have proved to be indispensable in industry for laser marking systems for everything from laser etching hand tools, containers, and parts to batch-coding semiconductor wafers in semiconductor device fabrication. They typically control X and Y directions on Nd:YAG and CO2 laser markers to control the position of the infrared power laser spot. Laser ablation, laser beam machining and wafer dicing are all industrial areas where high-speed mirror galvanometers can be found.

The moving coil galvanometer is mainly used to measure very feeble currents, of the order of 10⁻⁹ A.

To linearise the magnetic field across the coil throughout the galvanometer's range of movement, the d'Arsonval design places a soft iron cylinder inside the coil without touching it. This gives a consistent radial field, rather than a parallel linear field.

See also

https://en.wikipedia.org/wiki/Mirror_galvanometer

https://en.wikipedia.org/wiki/String_galvanometer

https://en.wikipedia.org/wiki/Current_mirror

https://en.wikipedia.org/wiki/Cold_mirror

https://en.wikipedia.org/wiki/Index_case

https://en.wikipedia.org/wiki/Disk_mirroring

https://en.wikipedia.org/wiki/Retroreflector

https://en.wikipedia.org/wiki/Retroreflector#Phase-conjugate_mirror

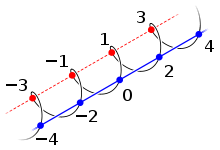

Parity of Zero

Zero is an even number. In other words, its parity—the quality of an integer being even or odd—is even. This can be easily verified based on the definition of "even": it is an integer multiple of 2, specifically 0 × 2. As a result, zero shares all the properties that characterize even numbers: for example, 0 is neighbored on both sides by odd numbers, any decimal integer has the same parity as its last digit—so, since 10 is even, 0 will be even, and if y is even then y + x has the same parity as x—and x and 0 + x always have the same parity.

Zero also fits into the patterns formed by other even numbers. The parity rules of arithmetic, such as even − even = even, require 0 to be even. Zero is the additive identity element of the group of even integers, and it is the starting case from which other even natural numbers are recursively defined. Applications of this recursion from graph theory to computational geometry rely on zero being even. Not only is 0 divisible by 2, it is divisible by every power of 2, which is relevant to the binary numeral system used by computers. In this sense, 0 is the "most even" number of all.[1]
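Several of the properties listed above can be checked mechanically. The following sketch uses a hypothetical helper, `parity`, and a handful of arbitrarily chosen sample integers; it illustrates the rules but proves nothing.

```python
def parity(n: int) -> str:
    """Return 'even' if n is an integer multiple of 2, otherwise 'odd'."""
    return "even" if n % 2 == 0 else "odd"


# Zero equals 0 * 2, so it is even by definition.
assert parity(0) == "even"

# Zero is neighbored on both sides by odd numbers.
assert parity(-1) == "odd" and parity(1) == "odd"

# A decimal integer has the same parity as its last digit.
for n in (10, 37, 120, 4051):
    assert parity(n) == parity(n % 10)

# even - even = even; with equal operands this forces 0 to be even.
for a in (2, 8, 14):
    assert parity(a - a) == "even"

# x and 0 + x always have the same parity.
for x in range(-5, 6):
    assert parity(0 + x) == parity(x)

print("all parity checks passed")
```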

Among the general public, the parity of zero can be a source of confusion. In reaction time experiments, most people are slower to identify 0 as even than 2, 4, 6, or 8. Some students of mathematics—and some teachers—think that zero is odd, or both even and odd, or neither. Researchers in mathematics education propose that these misconceptions can become learning opportunities. Studying equalities like 0 × 2 = 0 can address students' doubts about calling 0 a number and using it in arithmetic. Class discussions can lead students to appreciate the basic principles of mathematical reasoning, such as the importance of definitions. Evaluating the parity of this exceptional number is an early example of a pervasive theme in mathematics: the abstraction of a familiar concept to an unfamiliar setting.

Mathematical contexts

Countless results in number theory invoke the fundamental theorem of arithmetic and the algebraic properties of even numbers, so the above choices have far-reaching consequences. For example, the fact that positive numbers have unique factorizations means that one can determine whether a number has an even or odd number of distinct prime factors. Since 1 is not prime, nor does it have prime factors, it is a product of 0 distinct primes; since 0 is an even number, 1 has an even number of distinct prime factors. This implies that the Möbius function takes the value μ(1) = 1, which is necessary for it to be a multiplicative function and for the Möbius inversion formula to work.[14]
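The role of μ(1) = 1 can be seen in a small implementation of the Möbius function. This is a minimal sketch using trial division; the function name `mobius` is an assumption of the example, and the method is only practical for small inputs.

```python
def mobius(n: int) -> int:
    """Moebius function: 0 if n has a squared prime factor,
    otherwise +1 or -1 according to whether the number of distinct
    prime factors is even or odd."""
    if n < 1:
        raise ValueError("mobius is defined for positive integers")
    prime_count = 0
    p = 2
    while p * p <= n:
        if n % p == 0:
            n //= p
            if n % p == 0:      # p divides the original number twice
                return 0
            prime_count += 1
        p += 1
    if n > 1:                   # one remaining prime factor
        prime_count += 1
    # For n = 1 the count is 0, which is even, so the result is +1.
    return 1 if prime_count % 2 == 0 else -1


print([mobius(n) for n in range(1, 11)])
```

Because 1 has zero prime factors and zero is even, `mobius(1)` returns 1, which is what multiplicativity requires: μ(1)·μ(n) must equal μ(n).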

Not being odd

A number n is odd if there is an integer k such that n = 2k + 1. One way to prove that zero is not odd is by contradiction: if 0 = 2k + 1 then k = −1/2, which is not an integer.[15] Since zero is not odd, if an unknown number is proven to be odd, then it cannot be zero. This apparently trivial observation can provide a convenient and revealing proof explaining why an odd number is nonzero.

A classic result of graph theory states that a graph of odd order (having an odd number of vertices) always has at least one vertex of even degree. (The statement itself requires zero to be even: the empty graph has an even order, and an isolated vertex has an even degree.)[16] In order to prove the statement, it is actually easier to prove a stronger result: any odd-order graph has an odd number of even degree vertices. The appearance of this odd number is explained by a still more general result, known as the handshaking lemma: any graph has an even number of vertices of odd degree.[17] Finally, the even number of odd vertices is naturally explained by the degree sum formula.
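The handshaking lemma and its consequence for odd-order graphs can be illustrated on small examples. The sketch below assumes a simple undirected graph given as a vertex list and an edge list; the function names are hypothetical.

```python
def degrees(vertices, edges):
    """Map each vertex to its degree in an undirected graph."""
    degree = {v: 0 for v in vertices}
    for u, v in edges:
        degree[u] += 1
        degree[v] += 1
    return degree


def count_by_parity(vertices, edges):
    """Return (number of even-degree vertices, number of odd-degree vertices)."""
    degree = degrees(vertices, edges)
    odd = sum(1 for d in degree.values() if d % 2 == 1)
    return len(degree) - odd, odd


# Path a-b-c: odd order (3 vertices), one even-degree vertex, two odd-degree vertices.
print(count_by_parity(["a", "b", "c"], [("a", "b"), ("b", "c")]))

# A single isolated vertex has degree 0, which counts as even.
print(count_by_parity(["x"], []))

# The empty graph has 0 vertices: an even order and an even number of odd vertices.
print(count_by_parity([], []))
```

In every case the second number is even, and when the order is odd the first number is odd, as the stronger statement predicts.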

Sperner's lemma is a more advanced application of the same strategy. The lemma states that a certain kind of coloring on a triangulation of a simplex has a subsimplex that contains every color. Rather than directly construct such a subsimplex, it is more convenient to prove that there exists an odd number of such subsimplices through an induction argument.[18] A stronger statement of the lemma then explains why this number is odd: it naturally breaks down as (n + 1) + n when one considers the two possible orientations of a simplex.[19]

Even-odd alternation

The fact that zero is even, together with the fact that even and odd numbers alternate, is enough to determine the parity of every other natural number. This idea can be formalized into a recursive definition of the set of even natural numbers:

- 0 is even.

- (n + 1) is even if and only if n is not even.

This definition has the conceptual advantage of relying only on the minimal foundations of the natural numbers: the existence of 0 and of successors. As such, it is useful for computer logic systems such as LF and the Isabelle theorem prover.[20] With this definition, the evenness of zero is not a theorem but an axiom. Indeed, "zero is an even number" may be interpreted as one of the Peano axioms, of which the even natural numbers are a model.[21] A similar construction extends the definition of parity to transfinite ordinal numbers: every limit ordinal is even, including zero, and successors of even ordinals are odd.[22]
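The two-clause recursive definition translates directly into code. This is a minimal sketch in Python, not the encoding used by LF or Isabelle; the function name is hypothetical, and the recursion is only suitable for small n because of Python's recursion limit.

```python
def is_even(n: int) -> bool:
    """Evenness of a natural number, defined only from 0 and successors."""
    if n < 0:
        raise ValueError("defined for natural numbers only")
    if n == 0:
        return True              # clause 1: 0 is even (taken as given)
    return not is_even(n - 1)    # clause 2: n + 1 is even iff n is not even


print([n for n in range(11) if is_even(n)])
```

The base case is never derived from anything else, which mirrors the remark that under this definition the evenness of zero is an axiom rather than a theorem.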

The classic point in polygon test from computational geometry applies the above ideas. To determine if a point lies within a polygon, one casts a ray from infinity to the point and counts the number of times the ray crosses the edge of the polygon. The crossing number is even if and only if the point is outside the polygon. This algorithm works because if the ray never crosses the polygon, then its crossing number is zero, which is even, and the point is outside. Every time the ray does cross the polygon, the crossing number alternates between even and odd, and the point at its tip alternates between outside and inside.[23]
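A common way to implement the crossing-number test is sketched below. It assumes a simple polygon given as a list of (x, y) vertices and follows a horizontal ray between the point and infinity in the +x direction, which yields the same count as a ray arriving from infinity. The function names are hypothetical, and points lying exactly on the boundary are not handled specially.

```python
def crossing_number(point, polygon):
    """Count how many polygon edges a horizontal ray from `point` toward +x crosses."""
    x, y = point
    crossings = 0
    n = len(polygon)
    for i in range(n):
        x1, y1 = polygon[i]
        x2, y2 = polygon[(i + 1) % n]
        # The edge counts only if its endpoints lie on opposite sides of the ray's line.
        if (y1 > y) != (y2 > y):
            x_cross = x1 + (y - y1) * (x2 - x1) / (y2 - y1)
            if x_cross > x:
                crossings += 1
    return crossings


def is_inside(point, polygon):
    """A point is inside exactly when its crossing number is odd."""
    return crossing_number(point, polygon) % 2 == 1


square = [(0, 0), (4, 0), (4, 4), (0, 4)]
print(crossing_number((2, 2), square), is_inside((2, 2), square))
print(crossing_number((5, 2), square), is_inside((5, 2), square))
print(crossing_number((-1, 2), square), is_inside((-1, 2), square))
```

A ray that misses the polygon entirely has crossing number 0, and because 0 is even the point is correctly reported as outside.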

In graph theory, a bipartite graph is a graph whose vertices are split into two colors, such that neighboring vertices have different colors. If a connected graph has no odd cycles, then a bipartition can be constructed by choosing a base vertex v and coloring every vertex black or white, depending on whether its distance from v is even or odd. Since the distance between v and itself is 0, and 0 is even, the base vertex is colored differently from its neighbors, which lie at a distance of 1.[24]
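The distance-parity construction can be sketched with a breadth-first search. The example assumes a connected graph stored as an adjacency dictionary; the function names and the sample graphs are hypothetical.

```python
from collections import deque


def two_color(adjacency, base):
    """Color each vertex by the parity of its BFS distance from `base`."""
    distance = {base: 0}          # the base vertex is at distance 0, which is even
    queue = deque([base])
    while queue:
        u = queue.popleft()
        for w in adjacency[u]:
            if w not in distance:
                distance[w] = distance[u] + 1
                queue.append(w)
    return {v: ("black" if d % 2 == 0 else "white") for v, d in distance.items()}


def is_proper(adjacency, coloring):
    """True if every edge joins vertices of different colors."""
    return all(coloring[u] != coloring[w] for u in adjacency for w in adjacency[u])


# A 4-cycle has no odd cycles, so the coloring is a valid bipartition.
cycle4 = {"a": ["b", "d"], "b": ["a", "c"], "c": ["b", "d"], "d": ["c", "a"]}
coloring = two_color(cycle4, "a")
print(coloring, is_proper(cycle4, coloring))

# A triangle is an odd cycle, so the same construction fails.
triangle = {"a": ["b", "c"], "b": ["a", "c"], "c": ["a", "b"]}
print(is_proper(triangle, two_color(triangle, "a")))
```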

Algebraic patterns

In abstract algebra, the even integers form various algebraic structures that require the inclusion of zero. The fact that the additive identity (zero) is even, together with the evenness of sums and additive inverses of even numbers and the associativity of addition, means that the even integers form a group. Moreover, the group of even integers under addition is a subgroup of the group of all integers; this is an elementary example of the subgroup concept.[16] The earlier observation that the rule "even − even = even" forces 0 to be even is part of a general pattern: any nonempty subset of an additive group that is closed under subtraction must be a subgroup, and in particular, must contain the identity.[25]

Since the even integers form a subgroup of the integers, they partition the integers into cosets. These cosets may be described as the equivalence classes of the following equivalence relation: x ~ y if (x − y) is even. Here, the evenness of zero is directly manifested as the reflexivity of the binary relation ~.[26] There are only two cosets of this subgroup—the even and odd numbers—so it has index 2.

Analogously, the alternating group is a subgroup of index 2 in the symmetric group on n letters. The elements of the alternating group, called even permutations, are the products of even numbers of transpositions. The identity map, an empty product of no transpositions, is an even permutation since zero is even; it is the identity element of the group.[27]
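The parity of a permutation can be computed from its cycle decomposition, since a cycle of length k is a product of k − 1 transpositions. The sketch below assumes a permutation of 0..n−1 stored as a list where `perm[i]` is the image of `i`; the function name is illustrative.

```python
def permutation_parity(perm):
    """Return 0 for an even permutation and 1 for an odd one."""
    seen = [False] * len(perm)
    transpositions = 0
    for start in range(len(perm)):
        if seen[start]:
            continue
        # Walk the cycle containing start and measure its length.
        length = 0
        j = start
        while not seen[j]:
            seen[j] = True
            j = perm[j]
            length += 1
        # A cycle of length k decomposes into k - 1 transpositions.
        transpositions += length - 1
    return transpositions % 2


identity = [0, 1, 2, 3]
swap = [1, 0, 2, 3]
three_cycle = [1, 2, 0, 3]
```

For the identity every cycle has length 1, so the transposition count is zero and the permutation is reported as even.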

The rule "even × integer = even" means that the even numbers form an ideal in the ring of integers, and the above equivalence relation can be described as equivalence modulo this ideal. In particular, even integers are exactly those integers k where k ≡ 0 (mod 2). This formulation is useful for investigating integer zeroes of polynomials.[28]

2-adic order

There is a sense in which some multiples of 2 are "more even" than others. Multiples of 4 are called doubly even, since they can be divided by 2 twice. Not only is zero divisible by 4, zero has the unique property of being divisible by every power of 2, so it surpasses all other numbers in "evenness".[1]

One consequence of this fact appears in the bit-reversed ordering of integer data types used by some computer algorithms, such as the Cooley–Tukey fast Fourier transform. This ordering has the property that the farther to the left the first 1 occurs in a number's binary expansion, or the more times it is divisible by 2, the sooner it appears. Zero's bit reversal is still zero; it can be divided by 2 any number of times, and its binary expansion does not contain any 1s, so it always comes first.[29]
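The ordering can be generated directly for a fixed word width. This is a minimal sketch: `bit_reverse` and `bit_reversed_order` are names chosen for the example, and the width of 3 bits is arbitrary.

```python
def bit_reverse(n, width):
    """Reverse the lowest `width` bits of n."""
    result = 0
    for _ in range(width):
        result = (result << 1) | (n & 1)  # move the lowest bit of n into result
        n >>= 1
    return result


def bit_reversed_order(width):
    """List 0 .. 2**width - 1 in bit-reversed order."""
    return [bit_reverse(i, width) for i in range(2 ** width)]


order = bit_reversed_order(3)
# order == [0, 4, 2, 6, 1, 5, 3, 7]
```

Zero comes first, followed by the multiple of 4, then the remaining even numbers, then the odd numbers.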

Although 0 is divisible by 2 more times than any other number, it is not straightforward to quantify exactly how many times that is. For any nonzero integer n, one may define the 2-adic order of n to be the number of times n is divisible by 2. This description does not work for 0; no matter how many times it is divided by 2, it can always be divided by 2 again. Rather, the usual convention is to set the 2-order of 0 to be infinity as a special case.[30] This convention is not peculiar to the 2-order; it is one of the axioms of an additive valuation in higher algebra.[31]
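A direct implementation shows why zero needs a special case: the counting loop would never terminate for 0. The sketch below uses `math.inf` to represent the conventional value; the function name `two_adic_order` is an assumption of this example.

```python
import math


def two_adic_order(n):
    """Number of times the integer n is divisible by 2 (infinity for 0)."""
    if n == 0:
        # 0 can be halved forever, so by convention its order is infinite.
        return math.inf
    count = 0
    while n % 2 == 0:
        n //= 2
        count += 1
    return count


orders = [two_adic_order(n) for n in (1, 2, 12, 96, 0)]
# orders == [0, 1, 2, 5, inf]
```

With this convention the valuation rule v(ab) = v(a) + v(b) continues to hold when one factor is zero, because infinity plus any finite order is still infinity.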

The powers of two (1, 2, 4, 8, ...) form a simple sequence of numbers of increasing 2-order. In the 2-adic numbers, such sequences actually converge to zero.[32]
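Convergence here is measured by the 2-adic absolute value, which is standardly defined as 2 raised to minus the 2-order, with the absolute value of 0 set to 0. A short sketch, with the hypothetical helper `two_adic_abs`, makes the shrinking sizes visible:

```python
from fractions import Fraction


def two_adic_abs(n):
    """2-adic absolute value of an integer n: 2 ** (-order), and 0 for n == 0."""
    if n == 0:
        return Fraction(0)
    order = 0
    while n % 2 == 0:
        n //= 2
        order += 1
    return Fraction(1, 2 ** order)


sizes = [two_adic_abs(2 ** k) for k in range(6)]
# sizes are 1, 1/2, 1/4, 1/8, 1/16, 1/32: the powers of two shrink toward 0
```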

https://en.wikipedia.org/wiki/Parity_of_zero

In mathematics, the classic Möbius inversion formula is a relation between pairs of arithmetic functions, each defined from the other by sums over divisors. It was introduced into number theory in 1832 by August Ferdinand Möbius.[1]

A large generalization of this formula applies to summation over an arbitrary locally finite partially ordered set, with Möbius' classical formula applying to the set of the natural numbers ordered by divisibility: see incidence algebra.
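In its classical form the formula states that if g(n) is the sum of f(d) over all divisors d of n, then f(n) is the sum of μ(d) g(n/d) over the same divisors, where μ is the Möbius function. The following sketch checks this numerically for an arbitrary test function; all function names are illustrative, and the trial-division approach is only suitable for small n.

```python
def mobius(n):
    """Möbius function: 0 if n has a squared prime factor, else (-1)**(number of primes)."""
    result = 1
    p = 2
    while p * p <= n:
        if n % p == 0:
            n //= p
            if n % p == 0:
                return 0  # p**2 divides the original n
            result = -result
        p += 1
    if n > 1:
        result = -result  # one remaining prime factor
    return result


def divisors(n):
    return [d for d in range(1, n + 1) if n % d == 0]


def divisor_sum(f, n):
    """g(n) = sum of f(d) over the divisors d of n."""
    return sum(f(d) for d in divisors(n))


def mobius_invert(g, n):
    """Recover f(n) from g via f(n) = sum of mobius(d) * g(n // d)."""
    return sum(mobius(d) * g(n // d) for d in divisors(n))


f = lambda n: n * n            # arbitrary arithmetic function for the check
g = lambda n: divisor_sum(f, n)
recovered = [mobius_invert(g, n) for n in range(1, 31)]
```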

https://en.wikipedia.org/wiki/Möbius_inversion_formula

In mathematics, the Möbius energy of a knot is a particular knot energy, i.e., a functional on the space of knots. It was discovered by Jun O'Hara, who demonstrated that the energy blows up as the knot's strands get close to one another.[1] This is a useful property because it prevents self-intersection and ensures the result under gradient descent is of the same knot type.

https://en.wikipedia.org/wiki/Möbius_energy

Signed zero is zero with an associated sign. In ordinary arithmetic, the number 0 does not have a sign, so that −0, +0 and 0 are identical. However, in computing, some number representations allow for the existence of two zeros, often denoted by −0 (negative zero) and +0 (positive zero), regarded as equal by the numerical comparison operations but with possible different behaviors in particular operations. This occurs in the sign and magnitude and ones' complement signed number representations for integers, and in most floating-point number representations. The number 0 is usually encoded as +0, but can be represented by either +0 or −0.

The IEEE 754 standard for floating-point arithmetic (presently used by most computers and programming languages that support floating-point numbers) requires both +0 and −0. Real arithmetic with signed zeros can be considered a variant of the extended real number line such that 1/−0 = −∞ and 1/+0 = +∞; division is only undefined for ±0/±0 and ±∞/±∞.

Negatively signed zero echoes the mathematical analysis concept of approaching 0 from below as a one-sided limit, which may be denoted by x → 0⁻, x → 0₋, or x → ↑0. The notation "−0" may be used informally to denote a negative number that has been rounded to zero. The concept of negative zero also has some theoretical applications in statistical mechanics and other disciplines.

It is claimed that the inclusion of signed zero in IEEE 754 makes it much easier to achieve numerical accuracy in some critical problems,[1] in particular when computing with complex elementary functions.[2] On the other hand, the concept of signed zero runs contrary to the general assumption made in most mathematical fields that negative zero is the same thing as zero. Representations that allow negative zero can be a source of errors in programs, if software developers do not take into account that while the two zero representations behave as equal under numeric comparisons, they yield different results in some operations.
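The following Python sketch illustrates the pitfall: the two zeros compare equal, yet operations that inspect the sign distinguish them. Python floats are IEEE 754 doubles on common platforms, but note that Python raises `ZeroDivisionError` for float division by zero rather than returning an infinity, so the example uses `math.copysign` and `math.atan2` instead. The helper `is_negative_zero` is a name invented for this example.

```python
import math

pos_zero = 0.0
neg_zero = -0.0


def is_negative_zero(x):
    """True only for -0.0; an == comparison alone cannot tell the zeros apart."""
    return x == 0 and math.copysign(1.0, x) < 0


equal = pos_zero == neg_zero                   # True: the comparison ignores the sign
sign_of_neg = math.copysign(1.0, neg_zero)     # -1.0: the sign bit is still present
angle_pos = math.atan2(0.0, pos_zero)          # 0.0
angle_neg = math.atan2(0.0, neg_zero)          # pi: the sign of zero selects the branch
```

The `atan2` results differ by π even though the inputs compare equal, which is the kind of behavior that matters for complex elementary functions near branch cuts.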

https://en.wikipedia.org/wiki/Signed_zero

The zero-energy universe hypothesis proposes that the total amount of energy in the universe is exactly zero: its amount of positive energy in the form of matter is exactly canceled out by its negative energy in the form of gravity.[1] Some physicists, such as Lawrence Krauss, Stephen Hawking and Alexander Vilenkin, have called this state "a universe from nothingness", although the zero-energy universe model requires both a matter field with positive energy and a gravitational field with negative energy to exist.[2] The hypothesis is broadly discussed in popular sources.[3][4][5]

https://en.wikipedia.org/wiki/Zero-energy_universe

Gravitational energy or gravitational potential energy is the potential energy a massive object has in relation to another massive object due to gravity. It is the potential energy associated with the gravitational field, which is released (converted into kinetic energy) when the objects fall towards each other. Gravitational potential energy increases when two objects are brought further apart.

For two pairwise interacting point particles, the gravitational potential energy is given by

$$U = -\frac{G m_1 m_2}{r}$$

where $m_1$ and $m_2$ are the masses of the two particles, $r$ is the distance between them, and $G$ is the gravitational constant.[1]

Close to the Earth's surface, the gravitational field is approximately constant, and the gravitational potential energy of an object reduces to

$$U = mgh$$

where $m$ is the object's mass, $g$ is the gravity of Earth, and $h$ is the height of the object's center of mass above a chosen reference level.[1]
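The relationship between the two expressions can be checked numerically. The sketch below assumes the standard forms U = −G·m1·m2/r for point masses and U = m·g·h near the surface, and uses approximate values for the Earth's mass and radius that are assumptions of this example rather than figures from the text.

```python
import math

G = 6.67430e-11          # gravitational constant, m^3 kg^-1 s^-2 (CODATA 2018)
EARTH_MASS = 5.972e24    # kg, approximate (assumed for illustration)
EARTH_RADIUS = 6.371e6   # m, approximate mean radius (assumed for illustration)


def potential_energy(m1, m2, r):
    """Point-particle gravitational potential energy, U = -G m1 m2 / r."""
    return -G * m1 * m2 / r


def near_surface_energy(m, h, g):
    """Near-surface approximation, U = m g h, relative to the level h = 0."""
    return m * g * h


mass, height = 1.0, 10.0
# Local field strength implied by the assumed Earth values.
g_local = G * EARTH_MASS / EARTH_RADIUS ** 2

# Energy gained by raising the mass from the surface to the given height.
exact_change = (potential_energy(EARTH_MASS, mass, EARTH_RADIUS + height)
                - potential_energy(EARTH_MASS, mass, EARTH_RADIUS))
approx_change = near_surface_energy(mass, height, g_local)
```

The change is positive, consistent with potential energy increasing as the objects move apart, and for heights small compared with the Earth's radius the two values agree closely.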

https://en.wikipedia.org/wiki/Gravitational_energy


https://en.wikipedia.org/wiki/Outline_of_energy

https://en.wikipedia.org/wiki/Retroreflector

In politics, “poodle” is an insult used to describe a politician who obediently or passively follows the lead of others.[1] It is considered to be equivalent to lackey.[2] Usage of the term is thought to relate to the passive and obedient nature of the type of dog. Colette Avital unsuccessfully tried to have the term’s use banned from the Knesset in June 2001.[3]

During the 2000s, it was used against Tony Blair with regard to his close relationship with George W. Bush and the involvement of the United Kingdom in the Iraq War. The singer George Michael infamously used it in his song “Shoot the Dog” in July 2002, the video of which showed Blair as the “poodle” on the lawn of the White House.[4] However, it has a somewhat longer history as a label to criticise British Prime Ministers who are perceived to be too close to the United States.[5]

See also[edit]

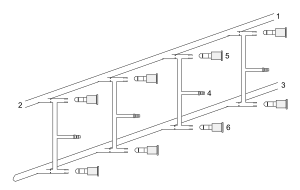

The Schlenk line (also vacuum gas manifold) is a commonly used chemistry apparatus developed by Wilhelm Schlenk. It consists of a dual manifold with several ports.[1] One manifold is connected to a source of purified inert gas, while the other is connected to a vacuum pump. The inert-gas line is vented through an oil bubbler, while solvent vapors and gaseous reaction products are prevented from contaminating the vacuum pump by a liquid-nitrogen or dry-ice/acetone cold trap. Special stopcocks or Teflon taps allow vacuum or inert gas to be selected without the need for placing the sample on a separate line.

Schlenk lines are useful for safely and successfully manipulating moisture- and air-sensitive compounds. The vacuum is also often used to remove the last traces of solvent from a sample. Vacuum and gas manifolds often have many ports and lines, and with care, it is possible for several reactions or operations to be run simultaneously.

When the reagents are highly susceptible to oxidation, traces of oxygen may pose a problem. Then, for the removal of oxygen below the ppm level, the inert gas needs to be purified by passing it through a deoxygenation catalyst.[2] This is usually a column of copper(I) or manganese(II) oxide, which reacts with oxygen traces present in the inert gas.

Techniques

The main techniques associated with the use of a Schlenk line include:

- counterflow additions, where air-stable reagents are added to the reaction vessel against a flow of inert gas;

- the use of syringes and rubber septa to transfer liquids and solutions;[3]

- cannula transfer, where liquids or solutions of air-sensitive reagents are transferred between different vessels stoppered with septa using a long thin tube known as a cannula. Liquid flow is supported by vacuum or inert-gas pressure.[4]

Glassware is usually connected by tightly fitting and greased ground glass joints. Round bends of glass tubing with ground glass joints may be used to adjust the orientation of various vessels. Glassware is necessarily purged of outside air by alternating application of vacuum and inert gas. The solvents and reagents that are used are also purged of air and water using various methods.

Filtration under inert conditions poses a special challenge that is usually tackled with specialized glassware. A Schlenk filter consists of a sintered glass funnel fitted with joints and stopcocks. By fitting the pre-dried funnel and receiving flask to the reaction flask against a flow of nitrogen, carefully inverting the set-up, and turning on the vacuum appropriately, the filtration may be accomplished with minimal exposure to air.

Dangers

The main dangers associated with the use of a Schlenk line are the risks of an implosion or explosion. An implosion can occur due to the use of vacuum and flaws in the glass apparatus.

An explosion can occur due to the common use of liquid nitrogen in the cold trap, used to protect the vacuum pump from solvents. If a significant amount of air is allowed to enter the Schlenk line, liquid oxygen can condense in the cold trap as a pale blue liquid. An explosion may then occur due to reaction of the liquid oxygen with any organic compounds also in the trap.

See also

- Air-free technique gives a broad overview of methods including:

- Glovebox – used to manipulate air-sensitive (oxygen- or moisture-sensitive) chemicals.

- Schlenk flask – reaction vessel for handling air-sensitive compounds.