Chronozone

A chronozone or chron is a unit in chronostratigraphy, defined by events such as geomagnetic reversals (magnetozones), or based on the presence of specific fossils (biozone or biochronozone). According to the International Commission on Stratigraphy, the term "chronozone" refers to the rocks formed during a particular time period, while "chron" refers to that time period.[1]

Although non-hierarchical, chronozones have been recognized as useful markers or benchmarks of time in the rock record. Chronozones are non-hierarchical in that they need not correspond across geographic or geologic boundaries, nor be equal in length. An early constraint, however, required that a chronozone be defined as smaller than a geological stage. Another early usage was hierarchical: Harland et al. (1989) used "chronozone" for a slice of time smaller than a faunal stage defined in biostratigraphy.[2] The ICS superseded these earlier usages in 1994.[3]

The key factor in designating an internationally acceptable chronozone is whether the overall fossil column is clear, unambiguous, and widespread. Some accepted chronozones contain others, and certain larger chronozones have been designated which span whole defined geological time units, both large and small. For example, the chronozone Pliocene is a subset of the chronozone Neogene, and the chronozone Pleistocene is a subset of the chronozone Quaternary.

| Segments of rock (strata) in chronostratigraphy | Time spans in geochronology | Notes to geochronological units |
|---|---|---|
| Eonothem | Eon | 4 total, half a billion years or more |
| Erathem | Era | 10 defined, several hundred million years |
| System | Period | 22 defined, tens to ~one hundred million years |
| Series | Epoch | 34 defined, tens of millions of years |
| Stage | Age | 99 defined, millions of years |
| Chronozone | Chron | subdivision of an age, not used by the ICS timescale |

See also

- Body form

- Chronology (geology)

- European Mammal Neogene

- Geologic time scale

- North American Land Mammal Age

- Type locality (geology)

- List of GSSPs

References

- Cohen, K.M.; Finney, S.; Gibbard, P.L. (2015). International Chronostratigraphic Chart (PDF). International Commission on Stratigraphy.

- Gehling, James; Jensen, Sören; Droser, Mary; Myrow, Paul; Narbonne, Guy (March 2001). "Burrowing below the basal Cambrian GSSP, Fortune Head, Newfoundland". Geological Magazine. 138 (2): 213–218. Bibcode:2001GeoM..138..213G. doi:10.1017/S001675680100509X. S2CID 131211543.

- Hedberg, H.D., ed. (1976). International stratigraphic guide: A guide to stratigraphic classification, terminology, and procedure. New York: John Wiley and Sons.

External links

- International Stratigraphic Chart from the International Commission on Stratigraphy

- USA National Park Service

- Washington State University Archived 2011-07-26 at the Wayback Machine

- Web Geological Time Machine

- Eon or Aeon, Math Words - An alphabetical index

- The Global Boundary Stratotype Section and Point (GSSP): overview

- Chart of The Global Boundary Stratotype Sections and Points (GSSP): chart

- Geotime chart displaying geologic time periods compared to the fossil record

https://en.wikipedia.org/wiki/Chronozone

Magnetic orientations of all samples from a site are then compared and their average magnetic polarity is determined with directional statistics, most commonly Fisher statistics or bootstrapping.[5] The statistical significance of each average is evaluated. The latitudes of the Virtual Geomagnetic Poles from those sites determined to be statistically significant are plotted against the stratigraphic level at which they were collected. These data are then abstracted to the standard black and white magnetostratigraphic columns in which black indicates normal polarity and white is reversed polarity.
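
The averaging and polarity steps described above can be sketched in Python. This is a minimal illustration, not a production paleomagnetic package, and it rests on several assumptions:

- Each sample direction is given as declination and inclination in degrees.
- The site mean is the Fisher (1953) mean, reported with its precision parameter k and 95% confidence cone α95.
- The site mean is converted to a virtual geomagnetic pole (VGP) latitude under a geocentric axial dipole assumption.
- Polarity is labelled by the sign of that latitude.

The function names and example directions are hypothetical.

```python
import math


def to_unit_vector(dec_deg, inc_deg):
    """Declination/inclination in degrees -> unit vector (north, east, down)."""
    d, i = math.radians(dec_deg), math.radians(inc_deg)
    return (math.cos(i) * math.cos(d), math.cos(i) * math.sin(d), math.sin(i))


def fisher_mean(directions, p=0.05):
    """Fisher mean of (dec, inc) pairs; returns (dec, inc, R, k, alpha95)."""
    n = len(directions)
    if n < 2:
        raise ValueError("need at least two directions")
    vectors = [to_unit_vector(d, i) for d, i in directions]
    sx = sum(v[0] for v in vectors)
    sy = sum(v[1] for v in vectors)
    sz = sum(v[2] for v in vectors)
    r = math.sqrt(sx * sx + sy * sy + sz * sz)  # resultant length, 0 <= R <= N
    if r == 0.0:
        raise ValueError("directions cancel out; mean is undefined")
    dec = math.degrees(math.atan2(sy, sx)) % 360.0
    inc = math.degrees(math.asin(max(-1.0, min(1.0, sz / r))))
    spread = max(n - r, 1e-12)  # guard against round-off when all directions agree
    k = (n - 1) / spread  # estimate of the Fisher precision parameter
    cos_a95 = 1.0 - spread / r * ((1.0 / p) ** (1.0 / (n - 1)) - 1.0)
    a95 = math.degrees(math.acos(max(-1.0, min(1.0, cos_a95))))
    return dec, inc, r, k, a95


def vgp_latitude(site_lat_deg, dec_deg, inc_deg):
    """VGP latitude (degrees) for a site-mean direction, assuming a geocentric axial dipole."""
    lat_s = math.radians(site_lat_deg)
    dec, inc = math.radians(dec_deg), math.radians(inc_deg)
    # Dipole formula tan(I) = 2 cot(p) gives the magnetic colatitude p in [0, 180] degrees.
    p = math.atan2(2.0 * math.cos(inc), math.sin(inc))
    sin_lat_p = math.sin(lat_s) * math.cos(p) + math.cos(lat_s) * math.sin(p) * math.cos(dec)
    return math.degrees(math.asin(max(-1.0, min(1.0, sin_lat_p))))


def polarity(vgp_lat_deg):
    """'N' (normal, black in the column) for northern VGPs, 'R' (reversed, white) otherwise."""
    return "N" if vgp_lat_deg > 0 else "R"


if __name__ == "__main__":
    site = [(352.0, 58.0), (5.0, 64.0), (359.0, 61.0), (8.0, 55.0)]  # hypothetical samples
    dec, inc, r, k, a95 = fisher_mean(site)
    lat = vgp_latitude(45.0, dec, inc)  # hypothetical site at 45 degrees N
    print(f"D={dec:.1f} I={inc:.1f} k={k:.0f} a95={a95:.1f} VGP lat={lat:.1f} -> {polarity(lat)}")
```

Deciding which sites count as statistically significant (for example, keeping only sites whose α95 falls below some cutoff) is a methodological choice the sketch leaves to the user. Plotting the resulting VGP latitudes against stratigraphic level gives the curve that is abstracted into the black and white column.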

https://en.wikipedia.org/wiki/Magnetostratigraphy#Chron

Analytical procedures

Samples are first analyzed in their natural state to obtain their natural remanent magnetization (NRM). The NRM is then stripped away in a stepwise manner using thermal or alternating field demagnetization techniques to reveal the stable magnetic component.
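
A simplified sketch shows how stepwise demagnetization exposes components. Subtracting each step's remaining moment from the previous one gives the vector removed at that step. When successive removed vectors share a direction, a single component is being stripped; this is the idea behind vector (Zijderveld) diagrams. In practice, the stable component is usually fitted by principal component analysis over several steps. The sketch assumes moments in Cartesian (north, east, down) coordinates, and the step labels and values are hypothetical.

```python
import math


def direction(vec):
    """Declination and inclination (degrees) of a (north, east, down) vector."""
    x, y, z = vec
    return (math.degrees(math.atan2(y, x)) % 360.0,
            math.degrees(math.atan2(z, math.hypot(x, y))))


def magnitude(vec):
    return math.sqrt(sum(c * c for c in vec))


def removed_components(steps):
    """steps: [(label, (x, y, z)), ...] starting with the NRM.
    Returns (step pair, dec, inc, fraction of NRM intensity) for the vector removed at each step."""
    nrm = magnitude(steps[0][1])
    removed = []
    for (label_a, a), (label_b, b) in zip(steps, steps[1:]):
        diff = tuple(ai - bi for ai, bi in zip(a, b))  # moment stripped between the two steps
        dec, inc = direction(diff)
        removed.append((f"{label_a}->{label_b}", dec, inc, magnitude(diff) / nrm))
    return removed


if __name__ == "__main__":
    c = math.sqrt(0.5)  # hypothetical stable component along dec 0, inc 45
    steps = [
        ("NRM", (2 * c, 0.0, 2 * c + 1.0)),    # stable component plus a steep overprint
        ("10 mT", (2 * c, 0.0, 2 * c + 0.5)),
        ("20 mT", (2 * c, 0.0, 2 * c)),        # overprint fully removed
        ("40 mT", (c, 0.0, c)),
        ("60 mT", (0.5 * c, 0.0, 0.5 * c)),
    ]
    for pair, dec, inc, frac in removed_components(steps):
        print(f"{pair:>14}: D={dec:5.1f} I={inc:5.1f} ({frac:.0%} of NRM)")
```

In this synthetic example, the early steps remove a vertical overprint. The later steps remove vectors that all point along the stable direction, which is the "stable magnetic component" the analysis aims to isolate.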

Because the age of each reversal shown on the GMPTS is relatively well known, the correlation establishes numerous time lines through the stratigraphic section. These ages provide relatively precise dates for features in the rocks such as fossils, changes in sedimentary rock composition, changes in depositional environment, etc. They also constrain the ages of cross-cutting features such as faults, dikes, and unconformities.
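
Once reversal boundaries in a section are correlated with the GMPTS, ages between them are commonly estimated by linear interpolation. This assumes a constant accumulation rate between adjacent tie points. The Python sketch below shows the idea; the tie-point heights and ages are hypothetical, not values from a published timescale.

```python
from bisect import bisect_right


def interpolate_age(height_m, tie_points):
    """Age (Ma) at a stratigraphic height, interpolated linearly between
    (height_m, age_Ma) tie points from correlated polarity reversals."""
    pts = sorted(tie_points)
    heights = [h for h, _ in pts]
    if not heights[0] <= height_m <= heights[-1]:
        raise ValueError("height lies outside the correlated interval")
    i = bisect_right(heights, height_m)
    if i == len(pts):  # exactly at the uppermost tie point
        return pts[-1][1]
    (h0, a0), (h1, a1) = pts[i - 1], pts[i]
    return a0 + (a1 - a0) * (height_m - h0) / (h1 - h0)


def accumulation_rates(tie_points):
    """Mean accumulation rate (m/Myr) between successive tie points (heights measured upward)."""
    pts = sorted(tie_points)
    return [(h1 - h0) / (a0 - a1) for (h0, a0), (h1, a1) in zip(pts, pts[1:])]


if __name__ == "__main__":
    reversals = [(0.0, 3.6), (40.0, 3.0), (95.0, 2.6)]  # hypothetical (height m, age Ma)
    print(interpolate_age(20.0, reversals))  # e.g. a fossil horizon 20 m above the base
    print(accumulation_rates(reversals))
```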

These data are also used to model basin subsidence rates. Knowing the depth of a hydrocarbon source rock beneath the basin-filling strata allows calculation of the age at which the source rock passed through the generation window and hydrocarbon migration began. Because the ages of cross-cutting trapping structures can usually be determined from magnetostratigraphic data, a comparison of these ages will assist reservoir geologists in their determination of whether or not a play is likely in a given trap.[6]
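
The reasoning in the paragraph above can be sketched under strong simplifying assumptions: no compaction or erosion, and a constant surface temperature and geothermal gradient through time. Suppose the source rock had to reach a burial depth D to enter the generation window. It did so when the horizon now lying D above it was at the surface. The age of that horizon, read from a magnetostratigraphic age-depth model, therefore dates the entry. The 60 °C window top, 15 °C surface temperature, 30 °C/km gradient, and the age-depth points are all hypothetical placeholders.

```python
from bisect import bisect_right


def interpolate(x, xs, ys):
    """Linear interpolation of y at x over increasing xs."""
    if not xs[0] <= x <= xs[-1]:
        raise ValueError("x outside the age-depth model")
    i = bisect_right(xs, x)
    if i == len(xs):
        return ys[-1]
    return ys[i - 1] + (ys[i] - ys[i - 1]) * (x - xs[i - 1]) / (xs[i] - xs[i - 1])


def window_entry_age(source_depth_m, age_depth, surface_temp_c=15.0,
                     gradient_c_per_km=30.0, window_top_c=60.0):
    """Age (Ma) at which a source rock now at source_depth_m first reached the window top.
    age_depth: (present depth m, age Ma) points for the basin fill. Returns None if the
    source rock has not yet been buried deeply enough."""
    window_depth_m = (window_top_c - surface_temp_c) / gradient_c_per_km * 1000.0
    horizon_depth_m = source_depth_m - window_depth_m  # horizon that was then at the surface
    if horizon_depth_m < 0:
        return None
    depths, ages = zip(*sorted(age_depth))
    return interpolate(horizon_depth_m, depths, ages)


if __name__ == "__main__":
    model = [(0.0, 0.0), (1000.0, 5.0), (2000.0, 12.0), (3000.0, 20.0)]  # hypothetical
    print(window_entry_age(3500.0, model))
```

A real basin model would also correct for compaction and erosion and allow heat flow to change over time.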

See also

- Biostratigraphy

- Chemostratigraphy

- Chronostratigraphy

- Cyclostratigraphy

- Lithostratigraphy

- Tectonostratigraphy

- Paleomagnetism

Cyclostratigraphy is a subdiscipline of stratigraphy that studies astronomically forced climate cycles within sedimentary successions.[1]

https://en.wikipedia.org/wiki/Cyclostratigraphy

Orbital changes

Astronomical cycles (also known as Milankovitch cycles) are variations of the Earth's orbit around the Sun due to gravitational interaction with other masses within the solar system.[1] Because of this cyclicity, solar irradiation varies through time in each hemisphere, and seasonality is affected. These insolation variations influence Earth's climate and the deposition of sedimentary rocks.

The main orbital cycles are precession, with main periods of 19 and 23 kyr; obliquity, with main periods of 41 kyr and 1.2 Myr; and eccentricity, with main periods of around 100 kyr, 405 kyr, and 2.4 Myr.[2] Precession influences how much insolation each hemisphere receives. Obliquity controls the intensity of the seasons. Eccentricity influences how much insolation the Earth receives altogether. Varied insolation directly influences Earth's climate, and changes in precipitation and weathering are revealed in the sedimentary record. The 405 kyr eccentricity cycle helps correct chronologies in rocks or sediment cores when variable sedimentation makes them difficult to assign.[1] Indicators of these cycles in sediments include rock magnetism, geochemistry, biological composition, and physical features like color and facies changes.[1][3]
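
Spectral analysis is a common way to test a record for the periods listed above. The sketch below computes a plain discrete Fourier periodogram in pure Python and reports the strongest periods. It assumes the record is already evenly sampled in time (kyr). A real section is sampled in depth, so converting it to time requires an assumed sedimentation rate or tuning. Published studies also typically use more robust estimators such as the multitaper method. The synthetic record mixing 41 and 23 kyr sinusoids is hypothetical.

```python
import math


def periodogram(series, dt):
    """(period, power) pairs for an evenly sampled series with spacing dt.
    Uses a direct discrete Fourier transform after removing the mean."""
    n = len(series)
    mean = sum(series) / n
    x = [v - mean for v in series]
    spectrum = []
    for k in range(1, n // 2 + 1):  # skip k = 0 (the mean)
        re = sum(v * math.cos(2 * math.pi * k * j / n) for j, v in enumerate(x))
        im = sum(v * math.sin(2 * math.pi * k * j / n) for j, v in enumerate(x))
        spectrum.append((n * dt / k, (re * re + im * im) / n))
    return spectrum


def dominant_periods(series, dt, count=2):
    """The `count` periods with the highest periodogram power, strongest first."""
    ranked = sorted(periodogram(series, dt), key=lambda pair: pair[1], reverse=True)
    return [period for period, _ in ranked[:count]]


if __name__ == "__main__":
    dt = 1.0  # kyr between samples (hypothetical)
    record = [math.sin(2 * math.pi * t / 41.0) + 0.5 * math.sin(2 * math.pi * t / 23.0)
              for t in range(820)]  # synthetic obliquity + precession signal
    print(dominant_periods(record, dt))
```

Because the periodogram only resolves periods of the form n·dt/k, a signal whose period does not divide the record length, such as the 23 kyr term here, shows up at the nearest available period.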

Dating methods and applications

To determine the time range of a cyclostratigraphic study, rocks are dated using radiometric dating and other stratigraphic methods. Once the time range is calibrated, the rocks are examined for Milankovitch signals. From there, ages can be assigned to the sediment layers based on the astronomical signals they contain.[3]
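
The calibration step can be sketched as counting cycles away from a radiometrically dated horizon. The sketch assumes the boundaries of successive 405 kyr eccentricity cycles have already been picked in a section and are listed from youngest to oldest. One boundary carries a radiometric age. Each other boundary then differs by one period per cycle, and samples in between can be interpolated. The boundary depths and anchor age are hypothetical, and a real study would also propagate the anchor's analytical uncertainty.

```python
from bisect import bisect_right

PERIOD_KYR = 405.0  # long eccentricity cycle


def boundary_ages(boundary_depths_m, anchor_index, anchor_age_ka, period_kyr=PERIOD_KYR):
    """(depth, age) for cycle boundaries ordered top (young) to bottom (old)."""
    return [(depth, anchor_age_ka + (i - anchor_index) * period_kyr)
            for i, depth in enumerate(boundary_depths_m)]


def sample_age(depth_m, tuned):
    """Interpolate a sample age between tuned boundaries (constant rate within a cycle)."""
    depths = [d for d, _ in tuned]
    if not depths[0] <= depth_m <= depths[-1]:
        raise ValueError("sample lies outside the tuned interval")
    i = bisect_right(depths, depth_m)
    if i == len(tuned):
        return tuned[-1][1]
    (d0, a0), (d1, a1) = tuned[i - 1], tuned[i]
    return a0 + (a1 - a0) * (depth_m - d0) / (d1 - d0)


if __name__ == "__main__":
    tuned = boundary_ages([10.0, 22.0, 31.0, 45.0], anchor_index=1, anchor_age_ka=1200.0)
    print(tuned)
    print(sample_age(26.5, tuned))
```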

Cyclostratigraphic studies of rock records can lead to accurate dating of events in the geological past, a better understanding of the causes and consequences of Earth's (climate) history, and better control on the depositional mechanisms of sediments and the behavior of sedimentary systems. Cyclostratigraphy also aids the study of planetary physics, because it provides information on astronomical cycles extending beyond 50 Ma, where astronomical models are no longer accurate.[1] Assigning time ranges to these astronomical cycles can be used to calibrate ⁴⁰Ar/³⁹Ar dating.[3]

Limitations

Uncertainties also arise when using cyclostratigraphy. Using radioisotope dating to set the parameters of time scales introduces a degree of uncertainty. There are also stratigraphic uncertainties, uncertainties due to climate forcing, and uncertainty about the effect of Earth's rotation on its precession. Records extending beyond 50 Ma carry further uncertainty, because chaos and uncertain initial conditions make astronomical models inaccurate beyond that age.[1]

Cyclic sediments (also called rhythmic sediments[1]) are sequences of sedimentary rocks that are characterised by repetitive patterns of different rock types (strata) or facies within the sequence. Processes that generate sedimentary cyclicity can be either autocyclic or allocyclic, and can result in piles of sedimentary cycles hundreds or even thousands of metres thick. The study of sequence stratigraphy was developed from controversies over the causes of cyclic sedimentation.[2]

https://en.wikipedia.org/wiki/Cyclic_sediments

In geology, depositional environment or sedimentary environment describes the combination of physical, chemical, and biological processes associated with the deposition of a particular type of sediment and, therefore, the rock types that will be formed after lithification, if the sediment is preserved in the rock record. In most cases, the environments associated with particular rock types or associations of rock types can be matched to existing analogues. However, the further back in geological time sediments were deposited, the more likely that direct modern analogues are not available (e.g. banded iron formations).

https://en.wikipedia.org/wiki/Depositional_environment

Banded iron formation, Karijini National Park, Western Australia

| Sedimentary rock | |
|---|---|
| Composition (primary) | iron oxides, cherts |
| Composition (secondary) | Other |

Banded iron formations (BIFs; also called banded ironstone formations) are distinctive units of sedimentary rock consisting of alternating layers of iron oxides and iron-poor chert. They can be up to several hundred meters in thickness and extend laterally for several hundred kilometers. Almost all of these formations are of Precambrian age and are thought to record the oxygenation of the Earth's oceans. Some of the Earth's oldest rock formations, which formed about 3,700 million years ago (Ma), are associated with banded iron formations.

Banded iron formations are thought to have formed in sea water as the result of oxygen production by photosynthetic cyanobacteria. The oxygen combined with dissolved iron in Earth's oceans to form insoluble iron oxides, which precipitated out, forming a thin layer on the ocean floor. Each band is similar to a varve, resulting from cyclic variations in oxygen production.

Banded iron formations were first discovered in northern Michigan in 1844. Banded iron formations account for more than 60% of global iron reserves and provide most of the iron ore presently mined. Most formations can be found in Australia, Brazil, Canada, India, Russia, South Africa, Ukraine, and the United States.

https://en.wikipedia.org/wiki/Banded_iron_formation

Description

A typical banded iron formation consists of repeated, thin layers (a few millimeters to a few centimeters in thickness) of silver to black iron oxides, either magnetite (Fe3O4) or hematite (Fe2O3), alternating with bands of iron-poor chert, often red in color, of similar thickness.[1][2][3][4] A single banded iron formation can be up to several hundred meters in thickness and extend laterally for several hundred kilometers.[5]

Banded iron formation is more precisely defined as chemically precipitated sedimentary rock containing greater than 15% iron. However, most BIFs have a higher content of iron, typically around 30% by mass, so that roughly half the rock is iron oxides and the other half is silica.[5][6] The iron in BIFs is divided roughly equally between the more oxidized ferric form, Fe(III), and the more reduced ferrous form, Fe(II), so that the ratio Fe(III)/Fe(II+III) typically varies from 0.3 to 0.6. This indicates a predominance of magnetite, in which the ratio is 0.67, over hematite, for which the ratio is 1.[4] In addition to the iron oxides (hematite and magnetite), the iron sediment may contain the iron-rich carbonates siderite and ankerite, or the iron-rich silicates minnesotaite and greenalite. Most BIFs are chemically simple, containing little but iron oxides, silica, and minor carbonate,[5] though some contain significant calcium and magnesium, up to 9% and 6.7% as oxides respectively.[7][8]
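
The Fe(III)/Fe(II+III) values quoted above follow from a simple mass balance over the iron-bearing minerals. The sketch assumes the share of total iron held by each mineral is known. It is limited to three end members named in the text, and the shares in the example are hypothetical. Because magnetite alone gives 2/3, a bulk ratio below that value in this model requires some iron in a ferrous phase such as siderite.

```python
# Fraction of each mineral's iron that is ferric, from its formula.
FERRIC_FRACTION = {
    "magnetite": 2.0 / 3.0,  # Fe3O4: one Fe(II) and two Fe(III)
    "hematite": 1.0,         # Fe2O3: all Fe(III)
    "siderite": 0.0,         # FeCO3: all Fe(II)
}


def ferric_ratio(iron_share):
    """Bulk Fe(III)/Fe(II+III) given {mineral: share of total iron} (shares need not sum to 1)."""
    total = sum(iron_share.values())
    if total <= 0:
        raise ValueError("iron shares must be positive")
    return sum(FERRIC_FRACTION[m] * s for m, s in iron_share.items()) / total


if __name__ == "__main__":
    print(ferric_ratio({"magnetite": 1.0}))                   # pure magnetite
    print(ferric_ratio({"magnetite": 0.6, "siderite": 0.4}))  # hypothetical mixture
```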

When used in the singular, the term banded iron formation refers to the sedimentary lithology just described.[1] The plural form, banded iron formations, is used informally to refer to stratigraphic units that consist primarily of banded iron formation.[9]

A well-preserved banded iron formation typically consists of macrobands several meters thick that are separated by thin shale beds. The macrobands in turn are composed of characteristic alternating layers of chert and iron oxides, called mesobands, that are several millimeters to a few centimeters thick. Many of the chert mesobands contain microbands of iron oxides that are less than a millimeter thick, while the iron mesobands are relatively featureless. BIFs tend to be extremely hard, tough, and dense, making them highly resistant to erosion, and they show fine details of stratification over great distances, suggesting they were deposited in a very low-energy environment; that is, in relatively deep water, undisturbed by wave motion or currents.[2] BIFs only rarely interfinger with other rock types, tending to form sharply bounded discrete units that never grade laterally into other rock types.[5]

Banded iron formations of the Great Lakes region and the Frere Formation of Western Australia are somewhat different in character and are sometimes described as granular iron formations or GIFs.[7][5] Their iron sediments are granular to oolitic in character, forming discrete grains about a millimeter in diameter, and they lack microbanding in their chert mesobands. They also show more irregular mesobanding, with indications of ripples and other sedimentary structures, and their mesobands cannot be traced for any great distance. Though they form well-defined, discrete units, these are commonly interbedded with coarse- to medium-grained epiclastic sediments (sediments formed by weathering of rock). These features suggest a higher-energy depositional environment, in shallower water disturbed by wave motion. However, they otherwise resemble other banded iron formations.[7]

The great majority of banded iron formations are Archean or Paleoproterozoic in age. However, a small number of BIFs are Neoproterozoic in age, and are frequently,[8][10][11] if not universally,[12] associated with glacial deposits, often containing glacial dropstones.[8] They also tend to show a higher level of oxidation, with hematite prevailing over magnetite,[10] and they typically contain a small amount of phosphate, about 1% by mass.[10] Mesobanding is often poor to nonexistent[13] and soft-sediment deformation structures are common. This suggests very rapid deposition.[14] However, like the granular iron formations of the Great Lakes, the Neoproterozoic occurrences are widely described as banded iron formations.[8][10][14][4][15][16]

Banded iron formations are distinct from most Phanerozoic ironstones. Ironstones are relatively rare and are thought to have been deposited in marine anoxic events, in which the depositional basin became depleted in free oxygen. They are composed of iron silicates and oxides without appreciable chert but with significant phosphorus content, which is lacking in BIFs.[11]

No classification scheme for banded iron formations has gained complete acceptance.[5] In 1954, Harold Lloyd James advocated a classification based on four lithological facies (oxide, carbonate, silicate, and sulfide) assumed to represent different depths of deposition,[1] but this speculative model did not hold up.[5] In 1980, Gordon A. Gross advocated a twofold division of BIFs into an Algoma type and a Lake Superior type, based on the character of the depositional basin. Algoma BIFs are found in relatively small basins in association with greywackes and other volcanic rocks and are assumed to be associated with volcanic centers. Lake Superior BIFs are found in larger basins in association with black shales, quartzites, and dolomites, with relatively minor tuffs or other volcanic rocks, and are assumed to have formed on a continental shelf.[17] This classification has been more widely accepted, but the failure to appreciate that it is strictly based on the characteristics of the depositional basin and not the lithology of the BIF itself has led to confusion, and some geologists have advocated for its abandonment.[2][18] However, the classification into Algoma versus Lake Superior types continues to be used.[19][20]

A shield is a large area of exposed Precambrian crystalline igneous and high-grade metamorphic rocks that form tectonically stable areas.[1] These rocks are older than 570 million years and sometimes date back to around 2 to 3.5 billion years.[citation needed] They have been little affected by tectonic events following the end of the Precambrian, and are relatively flat regions where mountain building, faulting, and other tectonic processes are minor, compared with the activity at their margins and between tectonic plates. Shields occur on all continents.

https://en.wikipedia.org/wiki/Shield_(geology)

Terminology

The term shield cannot be used interchangeably with the term craton, although it can be used interchangeably with the term basement. The difference is that a craton describes a basement overlain by a sedimentary platform, while a shield describes only the basement.

The term shield, used to describe this type of geographic region, appears in the 1901 English translation of Eduard Suess's The Face of the Earth by H. B. C. Sollas. It comes from the shape, "not unlike a flat shield",[2] of the Canadian Shield, whose outline "suggests the shape of the shields carried by soldiers in the days of hand-to-hand combat."[3]

Lithology

A shield is that part of the continental crust in which these usually Precambrian basement rocks crop out extensively at the surface. Shields can be very complex: they consist of vast areas of granitic or granodioritic gneisses, usually of tonalitic composition, and they also contain belts of sedimentary rocks, often surrounded by low-grade volcano-sedimentary sequences, or greenstone belts. These rocks are frequently metamorphosed to greenschist, amphibolite, and granulite facies.[citation needed] It is estimated that over 50% of the surface of Earth's shields is made up of gneiss.[4]

Erosion and landforms

Because shields are relatively stable regions, their relief is rather old, with elements such as peneplains having been shaped in Precambrian times. The oldest peneplain identifiable in a shield is called a "primary peneplain";[5] in the case of the Fennoscandian Shield this is the Sub-Cambrian peneplain.[6]

The landforms and shallow deposits of northern shields that have been subject to Quaternary glaciation and periglaciation are distinct from those found closer to the equator.[5] Shield relief, including peneplains, can be protected from erosion by various means.[5][7] Shield surfaces exposed to a subtropical or tropical climate for long enough can become silicified, making them hard and extremely difficult to erode.[7] Erosion of peneplains by glaciers in shield regions is limited.[7][8] In the Fennoscandian Shield, average glacial erosion during the Quaternary amounts to tens of meters, although it was not evenly distributed.[8] For glacial erosion to be effective in shields, a long "preparation period" of weathering under non-glacial conditions may be required.[7]

In weathered and eroded shields, inselbergs are common sights.[9]

List of shields

- The Canadian Shield forms the nucleus of North America and extends from Lake Superior on the south to the Arctic Islands on the north, and from western Canada eastward across to include most of Greenland.[10]

- The Atlantic Shield

- The Amazonian (Brazilian) Shield on the eastern bulge portion of South America. Bordering this is the Guiana Shield to the north, and the Platian Shield to the south.

- The Uruguayan Shield

- The Baltic (Fennoscandian) Shield is located in eastern Norway, Finland and Sweden.

- The African (Ethiopian) Shield is located in Africa.

- The Australian Shield occupies most of the western half of Australia.

- The Arabian-Nubian Shield on the western edge of Arabia.

- The Antarctic Shield.

- In Asia, an area in China and North Korea is sometimes referred to as the China-Korean Shield.

- The Angaran Shield, as it is sometimes called, is bounded by the Yenisey River on the west, the Lena River on the east, the Arctic Ocean on the north, and Lake Baikal on the south.

- The Indian Shield occupies two-thirds of the southern Indian peninsula.

Notes

- Merriam, D. F. (2005). In Selley, Richard C.; Cocks, L. R. M.; Plimer, I. R. (eds.), Encyclopedia of Geology. Amsterdam: Elsevier Academic. p. 21. ISBN 9781601193674. OCLC 183883048.

In geology, basement and crystalline basement are crystalline rocks lying above the mantle and beneath all other rocks and sediments. They are sometimes exposed at the surface, but often they are buried under miles of rock and sediment.[1] The basement rocks lie below a sedimentary platform or cover; more generally, basement is any rock of metamorphic or igneous origin lying below sedimentary rocks or sedimentary basins. In the same way, the sediments or sedimentary rocks on top of the basement can be called a "cover" or "sedimentary cover".

Crustal rocks are modified several times before they become basement, and these transitions alter their composition.[1]

https://en.wikipedia.org/wiki/Basement_(geology)

Continental crust

Basement rock is the thick foundation of ancient metamorphic and igneous rock, often granite, that forms the crust of continents.[2] Basement rock is contrasted with the overlying sedimentary rocks, such as sandstone and limestone, that were laid down on top of it after the continent formed. The sedimentary rocks deposited on top of the basement usually form a relatively thin veneer, but can be more than 5 kilometres (3 mi) thick. The basement rock of the crust can be 32–48 kilometres (20–30 mi) thick or more. The basement rock can be located under layers of sedimentary rock, or be visible at the surface.

Basement rock is visible, for example, at the bottom of the Grand Canyon, where it consists of 1.7- to 2-billion-year-old granite (Zoroaster Granite) and schist (Vishnu Schist). The Vishnu Schist is believed to be highly metamorphosed igneous rock and shale derived from basalt, mud, and clay deposited by volcanic eruptions, and the granite is the result of magma intrusions into the Vishnu Schist. An extensive cross section of the sedimentary rocks laid down on top of the basement through the ages is visible as well.

Age

The basement rocks of the continental crust tend to be much older than the oceanic crust.[3] Oceanic crust ranges from 0 to 340 million years in age, with an average age of 64 million years.[4] Continental crust is older because it is light and thick enough that it is not subducted, while oceanic crust is periodically subducted and replaced at subduction zones and oceanic rifts.

Complexity

The basement rocks are often highly metamorphosed and complex, and are usually crystalline.[5] They may consist of many different types of rock – volcanic, intrusive igneous and metamorphic. They may also contain ophiolites, which are fragments of oceanic crust that became wedged between plates when a terrane was accreted to the edge of the continent. Any of this material may be folded, refolded and metamorphosed. New igneous rock may freshly intrude into the crust from underneath, or may form underplating, where the new igneous rock forms a layer on the underside of the crust. The majority of continental crust on the planet is around 1 to 3 billion years old, and it is theorised that there was at least one period of rapid expansion and accretion to the continents during the Precambrian.

Much of the basement rock may originally have been oceanic crust that was highly metamorphosed and converted into continental crust. Oceanic crust can also be subducted into the Earth's mantle at subduction fronts, where it is pushed down by an overriding plate of oceanic or continental crust.

Magmatic underplating occurs when basaltic magmas are trapped during their rise to the surface at the Mohorovičić discontinuity or within the crust.[1] Entrapment (or 'stalling out') of magmas within the crust occurs due to the difference in relative densities between the rising magma and the surrounding rock. Magmatic underplating can be responsible for thickening of the crust when the magma cools.[1] Geophysical seismic studies (as well as igneous petrology and geochemistry) utilize the differences in densities to identify underplating that occurs at depth.[1]
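
The density argument can be illustrated with a neutral-buoyancy sketch. A magma keeps rising while it is less dense than the rock around it and stalls at the base of the first layer that is not denser than it. Stalling at the base of the crust corresponds to underplating at the Mohorovičić discontinuity. The layer depths and densities are hypothetical, and the model considers density alone, ignoring rheology, volatiles, and cooling.

```python
def stalling_depth(magma_density, layers):
    """Depth (km) at which a rising magma stops, judged by density alone.

    layers: [(top_depth_km, density_kg_m3), ...] ordered from the surface downward;
    the magma is assumed to originate in the deepest layer.
    Returns 0.0 if it reaches the surface, or None if it is not buoyant at its source.
    """
    if layers[-1][1] <= magma_density:
        return None
    # Walk upward through the overlying layers until one is no denser than the magma.
    for i in range(len(layers) - 2, -1, -1):
        if layers[i][1] <= magma_density:
            return layers[i + 1][0]  # trapped at the base of layer i
    return 0.0


if __name__ == "__main__":
    column = [(0.0, 2700.0), (15.0, 2900.0), (35.0, 3300.0)]  # upper crust, lower crust, mantle
    print(stalling_depth(2950.0, column))  # trapped at the base of the crust
    print(stalling_depth(2800.0, column))  # trapped within the crust
```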

https://en.wikipedia.org/wiki/Magmatic_underplating

Cratons

Many continents consist of several continental cratons, blocks of crust built around an original core, that gradually grew and expanded as newly created terranes were added to their edges. Pangea, for instance, formed when most of the Earth's continents were accreted into one giant supercontinent. Most continents, such as Asia, Africa and Europe, include several continental cratons because they were formed by the accretion of many smaller continents.

Usage

In European geology, the basement generally refers to rocks older than the Variscan orogeny. On top of this older basement Permian evaporites and Mesozoic limestones were deposited. The evaporites formed a weak zone on which the harder (stronger) limestone cover was able to move over the hard basement, making the distinction between basement and cover even more pronounced.[citation needed]

In Andean geology the basement refers to the Proterozoic, Paleozoic and early Mesozoic (Triassic to Jurassic) rock units as the basement to the late Mesozoic and Cenozoic Andean sequences developed following the onset of subduction along the western margin of the South American Plate.[7]

When discussing the Trans-Mexican Volcanic Belt of Mexico, the basement includes Proterozoic, Paleozoic and Mesozoic rocks for the Oaxaquia, Mixteco and Guerrero terranes, respectively.[8]

The term basement is used mostly in disciplines of geology like basin geology, sedimentology and petroleum geology in which the (typically Precambrian) crystalline basement is not of interest as it rarely contains petroleum or natural gas.[9] The term economic basement is also used to describe the deeper parts of a cover sequence that are of no economic interest.[10]

https://en.wikipedia.org/wiki/Basement_(geology)

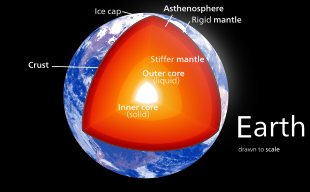

The internal structure of Earth is the solid portion of the Earth,[clarification needed] excluding its atmosphere and hydrosphere. The structure consists of an outer silicate solid crust, a highly viscous asthenosphere and solid mantle, a liquid outer core whose flow generates the Earth's magnetic field, and a solid inner core.

Scientific understanding of the internal structure of Earth is based on observations of topography and bathymetry, observations of rock in outcrop, samples brought to the surface from greater depths by volcanoes or volcanic activity, analysis of the seismic waves that pass through Earth, measurements of the gravitational and magnetic fields of Earth, and experiments with crystalline solids at pressures and temperatures characteristic of Earth's deep interior.

https://en.wikipedia.org/wiki/Internal_structure_of_Earth

Lodestones are naturally magnetized pieces of the mineral magnetite.[1][2] They are naturally occurring magnets, which can attract iron. The property of magnetism was first discovered in antiquity through lodestones.[3] Pieces of lodestone, suspended so they could turn, were the first magnetic compasses,[3][4][5][6] and their importance to early navigation is indicated by the name lodestone, which in Middle English means "course stone" or "leading stone",[7] from the now-obsolete meaning of lode as "journey, way".[8]

Lodestone is one of only a very few minerals that are found naturally magnetized.[1] Magnetite is black or brownish-black, with a metallic luster, a Mohs hardness of 5.5–6.5 and a black streak.

https://en.wikipedia.org/wiki/Lodestone

Geophysics

Geophysics (/ˌdʒiːoʊˈfɪzɪks/) is a subject of natural science concerned with the physical processes and physical properties of the Earth and its surrounding space environment, and the use of quantitative methods for their analysis. Geophysicists, who usually study geophysics, physics, or one of the earth sciences at the graduate level, complete investigations across a wide range of scientific disciplines. The term geophysics classically refers to solid earth applications: Earth's shape; its gravitational, magnetic, and electromagnetic fields; its internal structure and composition; its dynamics and their surface expression in plate tectonics, the generation of magmas, volcanism and rock formation.[3] However, modern geophysics organizations and pure scientists use a broader definition that includes the water cycle, including snow and ice; fluid dynamics of the oceans and the atmosphere; electricity and magnetism in the ionosphere and magnetosphere and solar-terrestrial physics; and analogous problems associated with the Moon and other planets.[3][4][5][6][7][8]

Although geophysics was only recognized as a separate discipline in the 19th century, its origins date back to ancient times. The first magnetic compasses were made from lodestones, while more modern magnetic compasses played an important role in the history of navigation. The first seismic instrument was built in 132 AD. Isaac Newton applied his theory of mechanics to the tides and the precession of the equinox; and instruments were developed to measure the Earth's shape, density and gravity field, as well as the components of the water cycle. In the 20th century, geophysical methods were developed for remote exploration of the solid Earth and the ocean, and geophysics played an essential role in the development of the theory of plate tectonics.

Geophysics is applied to societal needs, such as mineral resources, mitigation of natural hazards and environmental protection.[4] In exploration geophysics, geophysical survey data are used to analyze potential petroleum reservoirs and mineral deposits, locate groundwater, find archaeological relics, determine the thickness of glaciers and soils, and assess sites for environmental remediation.

Physical phenomena

Geophysics is a highly interdisciplinary subject, and geophysicists contribute to every area of the Earth sciences and some geophysicists conduct research in planetary sciences. To provide a clearer idea of what constitutes geophysics, this section describes phenomena that are studied in physics and how they relate to the Earth and its surroundings. Geophysicists also investigate the physical processes and properties of the Earth, its fluid layers, and magnetic field along with the near-Earth environment in the Solar System, which includes other planetary bodies.

Gravity

The gravitational pull of the Moon and Sun gives rise to two high tides and two low tides every lunar day, or every 24 hours and 50 minutes. Therefore, there is a gap of 12 hours and 25 minutes between every high tide and between every low tide.[9]

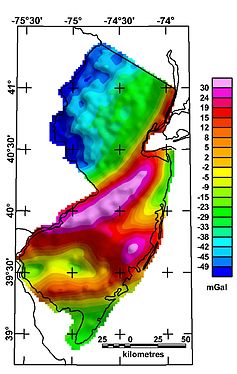

Gravitational forces make rocks press down on deeper rocks, increasing their density as the depth increases.[10] Measurements of gravitational acceleration and gravitational potential at the Earth's surface and above it can be used to look for mineral deposits (see gravity anomaly and gravimetry).[11] The surface gravitational field provides information on the dynamics of tectonic plates. The geopotential surface called the geoid is one definition of the shape of the Earth. The geoid would be the global mean sea level if the oceans were in equilibrium and could be extended through the continents (such as with very narrow canals).[12]
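To illustrate how a gravity anomaly can reveal a dense buried body, the sketch below uses the standard textbook model of a buried sphere, whose excess mass pulls down on a gravimeter most strongly directly above it. The ore-body radius, depth and density contrast are hypothetical values chosen for illustration, not taken from this article.

```python
import math

G = 6.674e-11  # gravitational constant, m^3 kg^-1 s^-2

def sphere_anomaly_mgal(x, depth, radius, delta_rho):
    """Vertical gravity anomaly (mGal) at horizontal offset x (m) above a buried sphere.

    depth: depth to the sphere's centre (m); radius: sphere radius (m);
    delta_rho: density contrast with the host rock (kg/m^3).
    """
    excess_mass = (4.0 / 3.0) * math.pi * radius ** 3 * delta_rho
    gz = G * excess_mass * depth / (x ** 2 + depth ** 2) ** 1.5  # m/s^2
    return gz * 1e5  # 1 mGal = 1e-5 m/s^2

# Hypothetical body: 100 m radius, centre 300 m deep, 500 kg/m^3 denser than its host
for x in range(-1000, 1001, 250):
    print(f"x = {x:6d} m   dg = {sphere_anomaly_mgal(x, 300.0, 100.0, 500.0):.3f} mGal")
```

The anomaly is symmetric and peaks over the body; its width grows with depth, which is one reason surveys use the shape of a profile and not only its amplitude.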

Heat flow

The Earth is cooling, and the resulting heat flow generates the Earth's magnetic field through the geodynamo and plate tectonics through mantle convection.[13] The main sources of heat are the primordial heat and radioactivity, although there are also contributions from phase transitions. Heat is mostly carried to the surface by thermal convection, although there are two thermal boundary layers – the core–mantle boundary and the lithosphere – in which heat is transported by conduction.[14] Some heat is carried up from the bottom of the mantle by mantle plumes. The heat flow at the Earth's surface is about 4.2 × 10¹³ W, and it is a potential source of geothermal energy.[15]
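Dividing the total heat flow by the Earth's surface area gives a global-average heat flux, and Fourier's law of conduction (q = k dT/dz) then converts that flux into a temperature gradient across a conducting boundary layer. The sketch below does this arithmetic; the thermal conductivity is a hypothetical round value, and a single global average hides large differences between oceanic and continental regions.

```python
import math

EARTH_RADIUS_M = 6.371e6     # mean Earth radius, m (standard value, assumed here)
TOTAL_HEAT_FLOW_W = 4.2e13   # global surface heat flow quoted in the text, W
CONDUCTIVITY = 2.5           # hypothetical rock thermal conductivity, W/(m K)

surface_area = 4.0 * math.pi * EARTH_RADIUS_M ** 2   # about 5.1e14 m^2
mean_flux = TOTAL_HEAT_FLOW_W / surface_area          # W/m^2

# Fourier's law for steady conduction, q = k dT/dz, gives the implied gradient
gradient_k_per_km = mean_flux / CONDUCTIVITY * 1000.0

print(f"mean heat flux ~ {mean_flux * 1000:.0f} mW/m^2")
print(f"conductive gradient ~ {gradient_k_per_km:.0f} K/km")
```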

Vibrations

Seismic waves are vibrations that travel through the Earth's interior or along its surface. The entire Earth can also oscillate in forms that are called normal modes or free oscillations of the Earth. Ground motions from waves or normal modes are measured using seismographs. If the waves come from a localized source such as an earthquake or explosion, measurements at more than one location can be used to locate the source. The locations of earthquakes provide information on plate tectonics and mantle convection.[16][17]
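One simple way to use measurements at several locations is the S-minus-P method: S waves travel more slowly than P waves, so the delay between their arrivals at a station grows with distance from the source. The sketch below converts S-P delays into distances and grid-searches for the epicentre that best fits them. It assumes a uniform medium with hypothetical wave speeds, a flat two-dimensional map, and made-up station coordinates; practical location codes use layered velocity models and also solve for depth and origin time.

```python
import math

VP_KM_S = 6.0  # assumed P-wave speed, km/s
VS_KM_S = 3.5  # assumed S-wave speed, km/s

def distance_from_sp(sp_seconds):
    """Source distance (km) implied by an S-minus-P delay in a uniform medium."""
    return sp_seconds / (1.0 / VS_KM_S - 1.0 / VP_KM_S)

def locate(stations, sp_times, extent_km=100, step_km=1.0):
    """Grid-search the epicentre whose station distances best fit the S-P distances."""
    radii = [distance_from_sp(t) for t in sp_times]
    best, best_misfit = None, math.inf
    n = int(extent_km / step_km)
    for i in range(n + 1):
        for j in range(n + 1):
            x, y = i * step_km, j * step_km
            # sum of squared differences between predicted and observed distances
            misfit = sum((math.hypot(x - sx, y - sy) - r) ** 2
                         for (sx, sy), r in zip(stations, radii))
            if misfit < best_misfit:
                best, best_misfit = (x, y), misfit
    return best

# Synthetic check: three hypothetical stations and a source at (30, 40) km
stations = [(0.0, 0.0), (100.0, 0.0), (0.0, 100.0)]
true_source = (30.0, 40.0)
sp_times = [math.hypot(true_source[0] - sx, true_source[1] - sy) * (1 / VS_KM_S - 1 / VP_KM_S)
            for sx, sy in stations]
print(locate(stations, sp_times))
```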

Recording of seismic waves from controlled sources provides information on the region that the waves travel through. If the density or composition of the rock changes, waves are reflected. Reflections recorded using reflection seismology can provide a wealth of information on the structure of the Earth up to several kilometers deep and are used to increase our understanding of the geology as well as to explore for oil and gas.[11] Changes in the travel direction, called refraction, can be used to infer the deep structure of the Earth.[17]
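For a wave striking a flat interface head-on, the strength of the reflection depends on the contrast in acoustic impedance (density times velocity) across it, and the depth of the reflector follows from the two-way travel time. The sketch below shows both relations; the layer properties and travel time are hypothetical, and real processing must also handle oblique incidence and velocities that vary with depth.

```python
def reflection_coefficient(rho1, v1, rho2, v2):
    """Normal-incidence reflection coefficient between an upper and a lower layer.

    Acoustic impedance Z = density * velocity; R = (Z2 - Z1) / (Z2 + Z1).
    """
    z1, z2 = rho1 * v1, rho2 * v2
    return (z2 - z1) / (z2 + z1)

def reflector_depth(two_way_time_s, velocity_m_s):
    """Depth (m) of a flat reflector beneath a uniform layer, from two-way travel time."""
    return velocity_m_s * two_way_time_s / 2.0

# Hypothetical layer with 2400 kg/m^3 and 2800 m/s over one with 2300 kg/m^3 and 3200 m/s
r = reflection_coefficient(2400, 2800, 2300, 3200)
print(f"R = {r:.3f}")
print(f"depth = {reflector_depth(1.2, 2800):.0f} m")
```

A positive coefficient means the reflected pulse keeps the polarity of the incident one; identical impedances give no reflection at all.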

Earthquakes pose a risk to humans. Understanding their mechanisms, which depend on the type of earthquake (e.g., intraplate or deep focus), can lead to better estimates of earthquake risk and improvements in earthquake engineering.[18]

Electricity

Although we mainly notice electricity during thunderstorms, there is always a downward electric field near the surface that averages 120 volts per meter.[19] Relative to the solid Earth, the atmosphere has a net positive charge due to bombardment by cosmic rays. A current of about 1800 amperes flows in the global circuit.[19] It flows downward from the ionosphere over most of the Earth and back upwards through thunderstorms. The flow is manifested by lightning below the clouds and sprites above.

A variety of electric methods are used in geophysical survey. Some measure spontaneous potential, a potential that arises in the ground because of man-made or natural disturbances. Telluric currents flow in Earth and the oceans. They have two causes: electromagnetic induction by the time-varying, external-origin geomagnetic field and motion of conducting bodies (such as seawater) across the Earth's permanent magnetic field.[20] The distribution of telluric current density can be used to detect variations in electrical resistivity of underground structures. Geophysicists can also provide the electric current themselves (see induced polarization and electrical resistivity tomography).
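When geophysicists inject the current themselves, a common layout is a line of four electrodes: current flows between the outer pair and the voltage is read across the inner pair. The sketch below assumes the equally spaced Wenner arrangement over a uniform half-space, forward-models the voltage by superposing point-source potentials, and then inverts it with the Wenner formula ρₐ = 2πaΔV/I. The resistivity, current and spacing are hypothetical; over layered ground the same formula returns an "apparent" resistivity that mixes several layers.

```python
import math

def point_source_potential(rho, current, r):
    """Potential (V) at distance r (m) from a surface electrode on a uniform half-space."""
    return rho * current / (2.0 * math.pi * r)

def wenner_voltage(rho, current, a):
    """Voltage between P1 and P2 for a Wenner array C1-P1-P2-C2 with spacing a (m).

    Current +I enters at C1 (x = 0) and leaves at C2 (x = 3a); potentials superpose.
    """
    def v(x):
        return (point_source_potential(rho, current, abs(x))
                - point_source_potential(rho, current, abs(x - 3 * a)))
    return v(a) - v(2 * a)

def wenner_apparent_resistivity(delta_v, current, a):
    """Apparent resistivity (ohm m) from a Wenner reading: rho_a = 2 pi a dV / I."""
    return 2.0 * math.pi * a * delta_v / current

# Forward-model a hypothetical 150 ohm m half-space, then invert the reading
dv = wenner_voltage(rho=150.0, current=0.5, a=10.0)
rho_a = wenner_apparent_resistivity(dv, 0.5, 10.0)
print(f"dV = {dv:.4f} V, rho_a = {rho_a:.1f} ohm m")
```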

Electromagnetic waves

Electromagnetic waves occur in the ionosphere and magnetosphere as well as in Earth's outer core. Dawn chorus is believed to be caused by high-energy electrons that get caught in the Van Allen radiation belt. Whistlers are produced by lightning strikes. Hiss may be generated by both. Electromagnetic waves may also be generated by earthquakes (see seismo-electromagnetics).

In the highly conductive liquid iron of the outer core, magnetic fields are generated by electric currents through electromagnetic induction. Alfvén waves are magnetohydrodynamic waves in the magnetosphere or the Earth's core. In the core, they probably have little observable effect on the Earth's magnetic field, but slower waves such as magnetic Rossby waves may be one source of geomagnetic secular variation.[21]
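Alfvén waves travel along magnetic field lines at the Alfvén speed v_A = B / √(μ₀ρ), a standard magnetohydrodynamic result. The sketch below evaluates it; the field strength and density are assumed order-of-magnitude values for the outer core, not figures from this article, and they suggest speeds of only centimetres per second.

```python
import math

MU0 = 4e-7 * math.pi  # vacuum permeability, H/m (classical value)

def alfven_speed(b_tesla, density_kg_m3):
    """Alfven speed v_A = B / sqrt(mu0 * rho), in m/s."""
    return b_tesla / math.sqrt(MU0 * density_kg_m3)

# Assumed outer-core values: a field of a few millitesla, liquid-iron density ~1.1e4 kg/m^3
print(f"v_A ~ {alfven_speed(2.5e-3, 1.1e4) * 100:.1f} cm/s")
```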

Electromagnetic methods that are used for geophysical survey include transient electromagnetics, magnetotellurics, surface nuclear magnetic resonance and electromagnetic seabed logging.[22]

Magnetism

The Earth's magnetic field protects the Earth from the deadly solar wind and has long been used for navigation. It originates in the fluid motions of the outer core.[21] The magnetic field in the upper atmosphere gives rise to the auroras.[23]

The Earth's field is roughly like a tilted dipole, but it changes over time (a phenomenon called geomagnetic secular variation). Mostly the geomagnetic pole stays near the geographic pole, but at random intervals averaging 440,000 to a million years or so, the polarity of the Earth's field reverses. The Geomagnetic Polarity Time Scale records 184 polarity intervals in the last 83 million years, with the reversal frequency changing over time; the most recent brief but complete reversal, the Laschamp event, occurred 41,000 years ago during the last glacial period. Geologists have observed geomagnetic reversals recorded in volcanic rocks and correlated them through magnetostratigraphy (see natural remanent magnetization), and their signature can be seen as parallel linear magnetic anomaly stripes on the seafloor. These stripes provide quantitative information on seafloor spreading, a part of plate tectonics. They are the basis of magnetostratigraphy, which correlates magnetic reversals with other stratigraphies to construct geologic time scales.[24] In addition, the magnetization in rocks can be used to measure the motion of continents.[21]
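The quantitative link between the stripes and seafloor spreading is a distance-over-time ratio: if a stripe boundary lies a known distance from the ridge axis and its reversal has a known age, the ratio is the half-spreading rate on that flank. The sketch below fits that rate by least squares through the origin; the ages and distances are illustrative numbers, not data from this article. A handy unit fact is that 1 km/Myr equals 1 mm/yr.

```python
def half_spreading_rate(distances_km, ages_myr):
    """Least-squares half-spreading rate through the origin, in km/Myr (= mm/yr).

    Each pair is the distance of a dated reversal boundary from the ridge axis
    and its age taken from a polarity time scale.
    """
    num = sum(d * t for d, t in zip(distances_km, ages_myr))
    den = sum(t * t for t in ages_myr)
    return num / den

# Illustrative picks of stripe boundaries on one ridge flank
ages = [0.78, 2.58, 3.6, 5.2]          # Myr
distances = [19.5, 64.5, 90.0, 130.0]  # km from the ridge axis
rate = half_spreading_rate(distances, ages)
print(f"half rate ~ {rate:.1f} mm/yr, full rate ~ {2 * rate:.1f} mm/yr")
```

Fitting through the origin reflects the assumption that crust at the ridge axis has zero age; doubling the half rate assumes symmetric spreading on both flanks.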

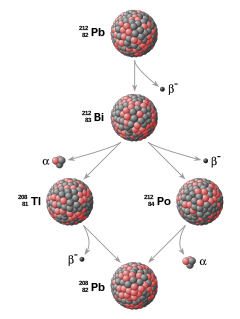

Radioactivity

Radioactive decay accounts for about 80% of the Earth's internal heat, powering the geodynamo and plate tectonics.[25] The main heat-producing isotopes are potassium-40, uranium-238, uranium-235, and thorium-232.[26] Radioactive elements are used for radiometric dating, the primary method for establishing an absolute time scale in geochronology.

Unstable isotopes decay at predictable rates, and the decay rates of different isotopes cover several orders of magnitude, so radioactive decay can be used to accurately date both recent events and events in past geologic eras.[27] Radiometric mapping using ground and airborne gamma spectrometry can be used to map the concentration and distribution of radioisotopes near the Earth's surface, which is useful for mapping lithology and alteration.[28][29]
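Because decay is exponential, the age of a sample follows from the ratio of radiogenic daughter atoms to remaining parent atoms and the parent's half-life. The sketch below uses the simplest closed-system age equation, t = ln(1 + D/P)/λ, assuming no initial daughter and no gain or loss of either isotope; real dating methods such as isochrons correct for those complications. The daughter/parent ratio in the usage line is hypothetical.

```python
import math

def decay_constant(half_life_years):
    """Decay constant lambda = ln 2 / half-life, per year."""
    return math.log(2.0) / half_life_years

def radiometric_age(daughter_to_parent, half_life_years):
    """Age (years) from the radiogenic daughter/parent ratio D/P.

    Assumes a closed system with no initial daughter: t = ln(1 + D/P) / lambda.
    """
    return math.log1p(daughter_to_parent) / decay_constant(half_life_years)

U238_HALF_LIFE = 4.468e9  # years

# Hypothetical sample with half as much radiogenic daughter as remaining uranium-238
print(f"{radiometric_age(0.5, U238_HALF_LIFE) / 1e9:.2f} billion years")
```

A ratio of D/P = 1 returns exactly one half-life, a quick sanity check on the formula.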

Fluid dynamics

Fluid motions occur in the magnetosphere, atmosphere, ocean, mantle and core. Even the mantle, though it has an enormous viscosity, flows like a fluid over long time intervals. This flow is reflected in phenomena such as isostasy, post-glacial rebound and mantle plumes. The mantle flow drives plate tectonics and the flow in the Earth's core drives the geodynamo.[21]

Geophysical fluid dynamics is a primary tool in physical oceanography and meteorology. The rotation of the Earth has profound effects on the Earth's fluid dynamics, often due to the Coriolis effect. In the atmosphere, it gives rise to large-scale patterns like Rossby waves and determines the basic circulation patterns of storms. In the ocean, it drives large-scale circulation patterns as well as Kelvin waves and Ekman spirals at the ocean surface.[30] In the Earth's core, the circulation of the molten iron is structured by Taylor columns.[21]
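The strength of the Coriolis effect on horizontal motion is usually expressed by the Coriolis parameter f = 2Ω sin φ, where Ω is the Earth's rotation rate and φ the latitude. The sketch below evaluates f and the related inertial period 2π/|f|; it is a standard textbook relation rather than a method described in this article, and the chosen latitudes are arbitrary.

```python
import math

OMEGA = 7.2921e-5  # Earth's rotation rate, rad/s

def coriolis_parameter(latitude_deg):
    """Coriolis parameter f = 2 * Omega * sin(latitude), in 1/s."""
    return 2.0 * OMEGA * math.sin(math.radians(latitude_deg))

def inertial_period_hours(latitude_deg):
    """Period of inertial oscillations, 2 pi / |f|, in hours (undefined at the equator)."""
    f = coriolis_parameter(latitude_deg)
    if f == 0.0:
        raise ValueError("f vanishes at the equator")
    return 2.0 * math.pi / abs(f) / 3600.0

for lat in (10, 30, 45, 60, 90):
    print(f"{lat:2d} deg: f = {coriolis_parameter(lat):.3e} 1/s, "
          f"inertial period = {inertial_period_hours(lat):.1f} h")
```

Because f vanishes at the equator and changes sign across it, rotational effects such as Ekman transport behave very differently near the equator than at mid-latitudes.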

Waves and other phenomena in the magnetosphere can be modeled using magnetohydrodynamics.

Mineral physics

The physical properties of minerals must be understood to infer the composition of the Earth's interior from seismology, the geothermal gradient and other sources of information. Mineral physicists study the elastic properties of minerals; their high-pressure phase diagrams, melting points and equations of state at high pressure; and the rheological properties of rocks, or their ability to flow. Deformation of rocks by creep makes flow possible, although over short times the rocks are brittle. The viscosity of rocks is affected by temperature and pressure and, in turn, determines the rates at which tectonic plates move.[10]

Water is a very complex substance and its unique properties are essential for life.[31] Its physical properties shape the hydrosphere and are an essential part of the water cycle and climate. Its thermodynamic properties determine evaporation and the thermal gradient in the atmosphere. The many types of precipitation involve a complex mixture of processes such as coalescence, supercooling and supersaturation.[32] Some precipitated water becomes groundwater, and groundwater flow includes phenomena such as percolation, while the conductivity of water makes electrical and electromagnetic methods useful for tracking groundwater flow. Physical properties of water such as salinity have a large effect on its motion in the oceans.[30]

The many phases of ice form the cryosphere and come in forms like ice sheets, glaciers, sea ice, freshwater ice, snow, and frozen ground (or permafrost).[33]

Regions of the Earth

Size and form of the Earth

The Earth is roughly spherical, but it bulges towards the Equator, so it is roughly in the shape of an ellipsoid (see Earth ellipsoid). This bulge is due to its rotation and is nearly consistent with an Earth in hydrostatic equilibrium. The detailed shape of the Earth, however, is also affected by the distribution of continents and ocean basins, and to some extent by the dynamics of the plates.[12]
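The equatorial bulge can be quantified with a reference ellipsoid. The sketch below uses the defining semi-major axis and flattening of the Geodetic Reference System 1980 (GRS 80), which is also mentioned under Geodesy, to compute the polar semi-axis and the distance from the Earth's centre to the ellipsoid surface at a given geodetic latitude. It describes the smooth ellipsoid only, not the geoid or topography.

```python
import math

# GRS 80 defining parameters
A = 6378137.0                 # equatorial semi-major axis, m
INV_F = 298.257222101         # inverse flattening
B = A * (1.0 - 1.0 / INV_F)   # polar semi-minor axis, m

def geocentric_radius(lat_deg):
    """Distance (m) from Earth's centre to the ellipsoid surface at geodetic latitude lat_deg."""
    phi = math.radians(lat_deg)
    c, s = math.cos(phi), math.sin(phi)
    num = (A * A * c) ** 2 + (B * B * s) ** 2
    den = (A * c) ** 2 + (B * s) ** 2
    return math.sqrt(num / den)

print(f"equatorial minus polar radius = {(A - B) / 1000:.2f} km")
for lat in (0, 45, 90):
    print(f"{lat:2d} deg: r = {geocentric_radius(lat) / 1000:.3f} km")
```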

Structure of the interior

Evidence from seismology, heat flow at the surface, and mineral physics is combined with the Earth's mass and moment of inertia to infer models of the Earth's interior – its composition, density, temperature, pressure. For example, the Earth's mean specific gravity (5.515) is far higher than the typical specific gravity of rocks at the surface (2.7–3.3), implying that the deeper material is denser. This is also implied by its low moment of inertia (0.33 MR², compared to 0.4 MR² for a sphere of constant density). However, some of the density increase is compression under the enormous pressures inside the Earth. The effect of pressure can be calculated using the Adams–Williamson equation. The conclusion is that pressure alone cannot account for the increase in density. Instead, we know that the Earth's core is composed of an alloy of iron and other minerals.[10]
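The reasoning from the moment of inertia can be checked with a simple two-layer planet: concentrating mass near the centre lowers the moment-of-inertia factor C/(MR²) below the uniform-sphere value of 0.4. The sketch below computes that factor for a uniform core and a uniform mantle; the radii and densities in the usage lines are hypothetical round numbers, and a two-layer model is only a crude stand-in for models such as PREM.

```python
import math

def moi_factor(radius, core_radius, core_density, mantle_density):
    """Moment-of-inertia factor C / (M R^2) for a planet of two uniform layers."""
    def mass(r, rho):      # mass of a uniform sphere of radius r
        return 4.0 / 3.0 * math.pi * rho * r ** 3

    def inertia(r, rho):   # moment of inertia of that sphere about a diameter
        return 0.4 * mass(r, rho) * r ** 2

    # Planet = mantle-density sphere of full radius + extra density in the core
    m = mass(radius, mantle_density) + mass(core_radius, core_density - mantle_density)
    c = inertia(radius, mantle_density) + inertia(core_radius, core_density - mantle_density)
    return c / (m * radius ** 2)

# Uniform planet recovers 0.4; a denser core (hypothetical values) gives a smaller factor
print(moi_factor(6371, 3480, 5515, 5515))
print(round(moi_factor(6371, 3480, 11000, 4400), 3))
```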

Reconstructions of seismic waves in the deep interior of the Earth show that there are no S-waves in the outer core. This indicates that the outer core is liquid, because liquids cannot support shear. The motion of this highly conductive fluid generates the Earth's field. Earth's inner core, however, is solid because of the enormous pressure.[12]
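The absence of S-waves follows directly from the elastic wave speeds of an isotropic medium: the S-wave speed depends only on the shear modulus, which is zero in a liquid, while P-waves still propagate through the bulk modulus. The sketch below evaluates both speeds; the moduli and densities are illustrative values assumed for this example.

```python
import math

def seismic_velocities(bulk_modulus, shear_modulus, density):
    """P- and S-wave speeds (m/s) of an isotropic elastic medium.

    Vp = sqrt((K + 4/3 mu) / rho), Vs = sqrt(mu / rho).
    """
    vp = math.sqrt((bulk_modulus + 4.0 / 3.0 * shear_modulus) / density)
    vs = math.sqrt(shear_modulus / density)
    return vp, vs

# Illustrative moduli (Pa) and densities (kg/m^3)
print(seismic_velocities(650e9, 290e9, 5500))   # solid, roughly lower-mantle-like
print(seismic_velocities(1100e9, 0.0, 11000))   # liquid: mu = 0, so Vs = 0
```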

Reconstruction of seismic reflections in the deep interior indicates some major discontinuities in seismic velocities that demarcate the major zones of the Earth: inner core, outer core, mantle, lithosphere and crust. The mantle itself is divided into the upper mantle, transition zone, lower mantle and D′′ layer. Between the crust and the mantle is the Mohorovičić discontinuity.[12]

The seismic model of the Earth does not by itself determine the composition of the layers. For a complete model of the Earth, mineral physics is needed to interpret seismic velocities in terms of composition. The mineral properties are temperature-dependent, so the geotherm must also be determined. This requires physical theory for thermal conduction and convection and the heat contribution of radioactive elements. The main model for the radial structure of the interior of the Earth is the preliminary reference Earth model (PREM). Some parts of this model have been updated by recent findings in mineral physics (see post-perovskite) and supplemented by seismic tomography. The mantle is mainly composed of silicates, and the boundaries between layers of the mantle are consistent with phase transitions.[10]

The mantle acts as a solid for seismic waves, but under high pressures and temperatures, it deforms so that over millions of years it acts like a liquid. This makes plate tectonics possible.

Magnetosphere

If a planet's magnetic field is strong enough, its interaction with the solar wind forms a magnetosphere. Early space probes mapped out the gross dimensions of the Earth's magnetic field, which extends about 10 Earth radii towards the Sun. The solar wind, a stream of charged particles, flows out and around the terrestrial magnetic field and continues behind the magnetic tail, hundreds of Earth radii downstream. Inside the magnetosphere, there are relatively dense regions of solar wind particles called the Van Allen radiation belts.[23]
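The figure of about 10 Earth radii can be reproduced with a standard pressure-balance estimate: the magnetopause sits where the solar wind's dynamic pressure ρv² equals the magnetic pressure of the compressed dipole field. The sketch below assumes a dipole that falls off as (R/r)³, doubled by compression at the boundary, and a proton-only solar wind; the input values are typical-looking assumptions, not data from this article.

```python
import math

MU0 = 4e-7 * math.pi       # vacuum permeability, H/m
PROTON_MASS = 1.6726e-27   # kg

def standoff_distance(b_equator_t, n_per_cm3, v_km_s):
    """Magnetopause stand-off distance in planetary radii from pressure balance.

    (2 B0 (R/r)^3)^2 / (2 mu0) = rho v^2  =>  r/R = (2 B0^2 / (mu0 rho v^2))^(1/6)
    """
    rho = n_per_cm3 * 1e6 * PROTON_MASS   # solar-wind mass density, kg/m^3
    v = v_km_s * 1e3                      # speed, m/s
    return (2.0 * b_equator_t ** 2 / (MU0 * rho * v ** 2)) ** (1.0 / 6.0)

# Assumed inputs: equatorial surface field 3.1e-5 T, 5 protons/cm^3 at 400 km/s
print(f"stand-off ~ {standoff_distance(3.1e-5, 5.0, 400.0):.1f} Earth radii")
```

The one-sixth power makes the boundary insensitive to the solar wind: a 64-fold jump in dynamic pressure only halves the distance.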

Methods

Geodesy

Geophysical measurements are generally made at a particular time and place. Accurate measurements of position, along with earth deformation and gravity, are the province of geodesy. While geodesy and geophysics are separate fields, the two are so closely connected that many scientific organizations such as the American Geophysical Union, the Canadian Geophysical Union and the International Union of Geodesy and Geophysics encompass both.[34]

Absolute positions are most frequently determined using the global positioning system (GPS). A three-dimensional position is calculated using messages from four or more visible satellites and referred to the 1980 Geodetic Reference System. An alternative, optical astronomy, combines astronomical coordinates and the local gravity vector to get geodetic coordinates. This method only provides the position in two coordinates and is more difficult to use than GPS. However, it is useful for measuring motions of the Earth such as nutation and Chandler wobble. Relative positions of two or more points can be determined using very-long-baseline interferometry.[34][35][36]
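Why four satellites: the receiver's three coordinates and its clock error are all unknown, so at least four range measurements are needed. The sketch below fits an idealized model, in which each pseudorange equals the satellite-receiver distance plus one common clock bias expressed in metres, by Gauss-Newton least squares in an Earth-centred Cartesian frame. The satellite positions, receiver position and bias are made-up numbers, and real receivers also correct for satellite clock errors, atmospheric delays and Earth rotation, which this sketch ignores.

```python
import math

def solve_linear(a, b):
    """Solve the square system a x = b by Gaussian elimination with partial pivoting."""
    n = len(b)
    m = [list(row) + [rhs] for row, rhs in zip(a, b)]  # augmented matrix
    for col in range(n):
        pivot = max(range(col, n), key=lambda r: abs(m[r][col]))
        m[col], m[pivot] = m[pivot], m[col]
        for r in range(col + 1, n):
            factor = m[r][col] / m[col][col]
            for c in range(col, n + 1):
                m[r][c] -= factor * m[col][c]
    x = [0.0] * n
    for r in range(n - 1, -1, -1):  # back substitution
        x[r] = (m[r][n] - sum(m[r][c] * x[c] for c in range(r + 1, n))) / m[r][r]
    return x

def gps_fix(sats, pseudoranges, iterations=10):
    """Receiver position (m) and clock bias (m) from >= 4 pseudoranges.

    Model: pseudorange_i = |sat_i - x| + b, solved by Gauss-Newton steps
    on the normal equations (J^T J) d = J^T r.
    """
    x, y, z, b = 0.0, 0.0, 0.0, 0.0  # start at Earth's centre with zero bias
    for _ in range(iterations):
        jac, res = [], []
        for (sx, sy, sz), pr in zip(sats, pseudoranges):
            rng = math.dist((sx, sy, sz), (x, y, z))
            res.append(pr - (rng + b))  # observed minus predicted
            jac.append([(x - sx) / rng, (y - sy) / rng, (z - sz) / rng, 1.0])
        jtj = [[sum(row[i] * row[j] for row in jac) for j in range(4)] for i in range(4)]
        jtr = [sum(row[i] * r for row, r in zip(jac, res)) for i in range(4)]
        dx, dy, dz, db = solve_linear(jtj, jtr)
        x, y, z, b = x + dx, y + dy, z + dz, b + db
    return (x, y, z), b

# Hypothetical satellites (m) above a receiver near the North Pole
sats = [(0.0, 0.0, 26560e3), (15000e3, 0.0, 21900e3), (-7500e3, 13000e3, 21900e3),
        (-7500e3, -13000e3, 21900e3), (10000e3, 10000e3, 22400e3)]
truth, bias = (100e3, -200e3, 6360e3), 150.0
pseudoranges = [math.dist(s, truth) + bias for s in sats]
position, clock_bias = gps_fix(sats, pseudoranges)
print([round(c, 3) for c in position], round(clock_bias, 3))
```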

Gravity measurements became part of geodesy because they were needed to relate measurements at the surface of the Earth to the reference coordinate system. Gravity measurements on land can be made using gravimeters deployed either on the surface or in helicopter flyovers. Since the 1960s, the Earth's gravity field has been measured by analyzing the motion of satellites. Sea level can also be measured by satellites using radar altimetry, contributing to a more accurate geoid.[34] In 2002, NASA launched the Gravity Recovery and Climate Experiment (GRACE), in which twin satellites map variations in Earth's gravity field by measuring the distance between them using GPS and a microwave ranging system. Gravity variations detected by GRACE include those caused by changes in ocean currents, runoff and groundwater depletion, and melting ice sheets and glaciers.[37]

Satellites and space probes

Satellites in space have made it possible to collect data not only in the visible light region but also in other parts of the electromagnetic spectrum. The planets can be characterized by their force fields, gravity and magnetism, which are studied through geophysics and space physics.

Measuring the changes in acceleration experienced by spacecraft as they orbit has allowed fine details of the gravity fields of the planets to be mapped. For example, in the 1970s, the gravity field disturbances above lunar maria were measured through lunar orbiters, which led to the discovery of concentrations of mass, mascons, beneath the Imbrium, Serenitatis, Crisium, Nectaris and Humorum basins.[38]

Global positioning systems (GPS) and geographical information systems (GIS)

Since geophysics is concerned with the shape of the Earth, and by extension the mapping of features around and in the planet, geophysical measurements include high-accuracy GPS measurements. These measurements are processed to increase their accuracy through differential GPS processing. Once the geophysical measurements have been processed and inverted, the interpreted results are plotted using GIS. Programs such as ArcGIS and Geosoft were built to meet these needs and include many built-in geophysical functions, such as upward continuation and the calculation of measurement derivatives like the first vertical derivative.[11][39] Many geophysics companies have designed in-house geophysics programs that predate ArcGIS and Geosoft in order to meet the visualization requirements of a geophysical dataset.
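Upward continuation estimates what a potential field (gravity or magnetic) would look like if measured at a greater height. In the wavenumber domain it reduces to multiplying each Fourier component by exp(−|k|h), which damps short-wavelength (shallow) features more than long-wavelength ones. The sketch below applies this to a regularly sampled profile using a naive discrete Fourier transform so that it needs no third-party libraries; it is not how ArcGIS or Geosoft implement the function, and production code uses an FFT with edge padding and tapering. The test profile is synthetic.

```python
import cmath
import math

def upward_continue(profile, dx, height):
    """Upward-continue a regularly sampled potential-field profile by `height`.

    Each Fourier component is multiplied by exp(-|k| * height). A naive O(n^2)
    DFT keeps the sketch dependency-free; the profile is treated as periodic.
    """
    n = len(profile)
    spectrum = [sum(profile[j] * cmath.exp(-2j * math.pi * m * j / n) for j in range(n))
                for m in range(n)]
    for m in range(n):
        signed = m if m <= n // 2 else m - n     # map DFT index to signed wavenumber
        k = 2.0 * math.pi * signed / (n * dx)     # angular wavenumber, rad per unit length
        spectrum[m] *= math.exp(-abs(k) * height)
    return [(sum(spectrum[m] * cmath.exp(2j * math.pi * m * j / n) for m in range(n)) / n).real
            for j in range(n)]

# Synthetic profile: four wavelengths over 64 samples spaced 10 m apart
profile = [math.cos(2 * math.pi * 4 * j / 64) for j in range(64)]
continued = upward_continue(profile, dx=10.0, height=50.0)
print(f"peak before: {max(profile):.3f}, after 50 m: {max(continued):.3f}")
```

In the same framework, the first vertical derivative multiplies each component by |k| instead, which sharpens short-wavelength features rather than suppressing them.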

Remote sensing

Exploration geophysics is applied geophysics that often uses remote sensing platforms such as satellites, aircraft, ships, boats, rovers, drones, borehole sensing equipment, and seismic receivers.[11] For data gathered on these platforms with methods such as magnetic, gravimetric, electromagnetic, radiometric, radar, laser altimetry, barometric and lidar surveys, most corrections remove the effects that the platform itself has on the measurements.[11] For instance, aeromagnetic data (magnetic data gathered from aircraft) collected on conventional fixed-wing aircraft must be corrected for the electromagnetic eddy currents created as the aircraft moves through Earth's magnetic field.[11] There are also corrections for changes in measured potential-field intensity as the Earth rotates, as the Earth orbits the Sun, and as the Moon orbits the Earth.[11][39]

Signal processing

Geophysical measurements are often recorded as time series with GPS locations. Signal processing involves correcting time-series data for unwanted noise or errors introduced by the measurement platform, such as aircraft vibrations in gravity data. It also involves reducing other sources of noise, for example through diurnal corrections in magnetic data.[11][39] In seismic, electromagnetic, and gravity data, processing continues after error correction with computational geophysics, which turns the corrected measurements into a final geological interpretation.[11][39]
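A diurnal correction can be sketched as follows: a fixed base station records the magnetic field throughout the survey, and the change at the base relative to a chosen datum is subtracted from each survey reading taken at the same moment. The sketch below interpolates the base record linearly to the survey times; the readings, times and datum are hypothetical, and practical workflows may also filter the base record or use several base stations.

```python
from bisect import bisect_left

def interpolate(times, values, t):
    """Linear interpolation of a base-station record at time t (clamped at the ends)."""
    if t <= times[0]:
        return values[0]
    if t >= times[-1]:
        return values[-1]
    i = bisect_left(times, t)
    t0, t1 = times[i - 1], times[i]
    w = (t - t0) / (t1 - t0)
    return values[i - 1] * (1 - w) + values[i] * w

def diurnal_correct(survey_times, survey_nt, base_times, base_nt, base_datum_nt):
    """Remove the time-varying field seen at a fixed base station.

    corrected = survey reading - (base reading at that time - base datum)
    """
    return [reading - (interpolate(base_times, base_nt, t) - base_datum_nt)
            for t, reading in zip(survey_times, survey_nt)]

# Hypothetical record: the base drifts 20 nT over two hours (times in minutes)
base_times, base_nt = [0.0, 60.0, 120.0], [50000.0, 50010.0, 50020.0]
survey_times, survey_nt = [30.0, 90.0], [50105.0, 49965.0]
corrected = diurnal_correct(survey_times, survey_nt, base_times, base_nt, base_datum_nt=50000.0)
print(corrected)
```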

History

Geophysics emerged as a separate discipline only in the 19th century, from the intersection of physical geography, geology, astronomy, meteorology, and physics.[40][41] The first known use of the word geophysics was in German („Geophysik“) by Julius Fröbel in 1834.[42] However, many geophysical phenomena – such as the Earth's magnetic field and earthquakes – have been investigated since the ancient era.

Ancient and classical eras

The magnetic compass existed in China back as far as the fourth century BC. It was used as much for feng shui as for navigation on land. It was not until good steel needles could be forged that compasses were used for navigation at sea; before that, they could not retain their magnetism long enough to be useful. The first mention of a compass in Europe was in 1190 AD.[43]

Around 240 BC, Eratosthenes of Cyrene deduced that the Earth was round and measured the circumference of Earth with great precision.[44] He developed a system of latitude and longitude.[45]

Perhaps the earliest contribution to seismology was the invention of a seismoscope by the prolific inventor Zhang Heng in 132 AD.[46] This instrument was designed to drop a bronze ball from the mouth of a dragon into the mouth of a toad. By looking at which of eight toads had the ball, one could determine the direction of the earthquake. It was 1571 years before the first design for a seismoscope was published in Europe, by Jean de la Hautefeuille. It was never built.[47]

Beginnings of modern science

One of the publications that marked the beginning of modern science was William Gilbert's De Magnete (1600), a report of a series of meticulous experiments in magnetism. Gilbert deduced that compasses point north because the Earth itself is magnetic.[21]

In 1687 Isaac Newton published his Principia, which not only laid the foundations for classical mechanics and gravitation but also explained a variety of geophysical phenomena such as the tides and the precession of the equinoxes.[48]

The first seismometer, an instrument capable of keeping a continuous record of seismic activity, was built by James Forbes in 1844.[47]

See also

- International Union of Geodesy and Geophysics (IUGG)

- Sociedade Brasileira de Geofísica

- Earth system science – Scientific study of the Earth's spheres and their natural integrated systems

- List of geophysicists – Famous geophysicists

- Outline of geophysics – Topics in the physics of the Earth and its vicinity

- Geodynamics – Study of dynamics of the Earth

- Planetary science – Science of planets and planetary systems

- Geological Engineering

- Physics

- Space physics

- Geosciences

- Geodesy

Notes

- Newton 1999 Section 3

References

- American Geophysical Union (2011). "Our Science". About AGU. Retrieved 30 September 2011.

- "About IUGG". 2011. Retrieved 30 September 2011.

- "AGU's Cryosphere Focus Group". 2011. Archived from the original on 16 November 2011. Retrieved 30 September 2011.

- Bozorgnia, Yousef; Bertero, Vitelmo V. (2004). Earthquake Engineering: From Engineering Seismology to Performance-Based Engineering. CRC Press. ISBN 978-0-8493-1439-1.

- Chemin, Jean-Yves; Desjardins, Benoit; Gallagher, Isabelle; Grenier, Emmanuel (2006). Mathematical geophysics: an introduction to rotating fluids and the Navier-Stokes equations. Oxford lecture series in mathematics and its applications. Oxford University Press. ISBN 0-19-857133-X.

- Davies, Geoffrey F. (2001). Dynamic Earth: Plates, Plumes and Mantle Convection. Cambridge University Press. ISBN 0-521-59067-1.

- Dewey, James; Byerly, Perry (1969). "The Early History of Seismometry (to 1900)". Bulletin of the Seismological Society of America. 59 (1): 183–227. Archived from the original on 23 November 2011.

- Defense Mapping Agency (1984) [1959]. Geodesy for the Layman (Technical report). National Geospatial-Intelligence Agency. TR 80-003. Retrieved 30 September 2011.

- Eratosthenes (2010). Eratosthenes' "Geography". Fragments collected and translated, with commentary and additional material by Duane W. Roller. Princeton University Press. ISBN 978-0-691-14267-8.

- Fowler, C.M.R. (2005). The Solid Earth: An Introduction to Global Geophysics (2 ed.). Cambridge University Press. ISBN 0-521-89307-0.

- "GRACE: Gravity Recovery and Climate Experiment". University of Texas at Austin Center for Space Research. 2011. Retrieved 30 September 2011.

- Hardy, Shaun J.; Goodman, Roy E. (2005). "Web resources in the history of geophysics". American Geophysical Union. Archived from the original on 27 April 2013. Retrieved 30 September 2011.

- Harrison, R. G.; Carslaw, K. S. (2003). "Ion-aerosol-cloud processes in the lower atmosphere". Reviews of Geophysics. 41 (3): 1012. Bibcode:2003RvGeo..41.1012H. doi:10.1029/2002RG000114. S2CID 123305218.

- Kivelson, Margaret G.; Russell, Christopher T. (1995). Introduction to Space Physics. Cambridge University Press. ISBN 978-0-521-45714-9.

- Lanzerotti, Louis J.; Gregori, Giovanni P. (1986). "Telluric currents: the natural environment and interactions with man-made systems". In Geophysics Study Committee; Geophysics Research Forum; Commission on Physical Sciences, Mathematics and Resources; National Research Council (eds.). The Earth's Electrical Environment. National Academy Press. pp. 232–258. ISBN 0-309-03680-1.

- Lowrie, William (2004). Fundamentals of Geophysics. Cambridge University Press. ISBN 0-521-46164-2.

- Merrill, Ronald T.; McElhinny, Michael W.; McFadden, Phillip L. (1998). The Magnetic Field of the Earth: Paleomagnetism, the Core, and the Deep Mantle. International Geophysics Series. Vol. 63. Academic Press. ISBN 978-0124912458.

- Muller, Paul; Sjogren, William (1968). "Mascons: lunar mass concentrations". Science. 161 (3842): 680–684. Bibcode:1968Sci...161..680M. doi:10.1126/science.161.3842.680. PMID 17801458. S2CID 40110502.

- National Research Council (U.S.). Committee on Geodesy (1985). Geodesy: a look to the future (PDF) (Report). National Academies.

- Newton, Isaac (1999). The Principia, Mathematical principles of natural philosophy. A new translation by I Bernard Cohen and Anne Whitman, preceded by "A Guide to Newton's Principia" by I Bernard Cohen. University of California Press. ISBN 978-0-520-08816-0.

- Opdyke, Neil D.; Channell, James T. (1996). Magnetic Stratigraphy. Academic Press. ISBN 0-12-527470-X.

- Pedlosky, Joseph (1987). Geophysical Fluid Dynamics (Second ed.). Springer-Verlag. ISBN 0-387-96387-1.

- Poirier, Jean-Paul (2000). Introduction to the Physics of the Earth's Interior. Cambridge Topics in Mineral Physics & Chemistry. Cambridge University Press. ISBN 0-521-66313-X.

- Pollack, Henry N.; Hurter, Suzanne J.; Johnson, Jeffrey R. (1993). "Heat flow from the Earth's interior: Analysis of the global data set". Reviews of Geophysics. 31 (3): 267–280. Bibcode:1993RvGeo..31..267P. doi:10.1029/93RG01249.

- Renne, P.R.; Ludwig, K.R.; Karner, D.B. (2000). "Progress and challenges in geochronology". Science Progress. 83: 107–121. PMID 10800377.

- Reynolds, John M. (2011). An Introduction to Applied and Environmental Geophysics. Wiley-Blackwell. ISBN 978-0-471-48535-3.

- Richards, M. A.; Duncan, R. A.; Courtillot, V. E. (1989). "Flood Basalts and Hot-Spot Tracks: Plume Heads and Tails". Science. 246 (4926): 103–107. Bibcode:1989Sci...246..103R. doi:10.1126/science.246.4926.103. PMID 17837768. S2CID 9147772.

- Ross, D.A. (1995). Introduction to Oceanography. HarperCollins. ISBN 0-13-491408-2.

- Sadava, David; Heller, H. Craig; Hillis, David M.; Berenbaum, May (2009). Life: The Science of Biology. Macmillan. ISBN 978-1-4292-1962-4.

- Sanders, Robert (10 December 2003). "Radioactive potassium may be major heat source in Earth's core". UC Berkeley News. Retrieved 28 February 2007.

- Sirvatka, Paul (2003). "Cloud Physics: Collision/Coalescence; The Bergeron Process". College of DuPage. Retrieved 31 August 2011.

- Sheriff, Robert E. (1991). "Geophysics". Encyclopedic Dictionary of Exploration Geophysics (3rd ed.). Society of Exploration Geophysicists. ISBN 978-1-56080-018-7.

- Stein, Seth; Wysession, Michael (2003). An introduction to seismology, earthquakes, and earth structure. Wiley-Blackwell. ISBN 0-86542-078-5.

- Telford, William Murray; Geldart, L. P.; Sheriff, Robert E. (1990). Applied geophysics. Cambridge University Press. ISBN 978-0-521-33938-4.

- Temple, Robert (2006). The Genius of China. Andre Deutsch. ISBN 0-671-62028-2.

- Torge, W. (2001). Geodesy (3rd ed.). Walter de Gruyter. ISBN 0-89925-680-5.

- Turcotte, Donald Lawson; Schubert, Gerald (2002). Geodynamics (2nd ed.). Cambridge University Press. ISBN 0-521-66624-4.

- Verhoogen, John (1980). Energetics of the Earth. National Academy Press. ISBN 978-0-309-03076-2.

External links

- A reference manual for near-surface geophysics techniques and applications

- Commission on Geophysical Risk and Sustainability (GeoRisk), International Union of Geodesy and Geophysics (IUGG)

- Study of the Earth's Deep Interior, a Committee of IUGG

- Union Commissions (IUGG)

- USGS Geomagnetism Program

- Career crate: Seismic processor

- Society of Exploration Geophysicists

https://en.wikipedia.org/wiki/Geophysics

Surface nuclear magnetic resonance (SNMR), also known as magnetic resonance sounding (MRS), is a geophysical technique specially designed for hydrogeology. It is based on the principle of nuclear magnetic resonance (NMR), and its measurements can be used to indirectly estimate the water content of saturated and unsaturated zones in the earth's subsurface.[1] SNMR is used to estimate aquifer properties, including the quantity of water contained in the aquifer, porosity, and hydraulic conductivity.
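In NMR, hydrogen nuclei (protons) precess at the Larmor frequency, which is proportional to the magnetic field they sit in. Surface NMR relies on the Earth's own field, so the relevant frequency is low. The sketch below evaluates f = (γ/2π)B for the proton over a typical range of geomagnetic field strengths; it illustrates the physical principle only and is not a model of an SNMR instrument or inversion.

```python
PROTON_GAMMA_BAR_HZ_PER_T = 42.577e6  # proton gyromagnetic ratio divided by 2 pi, Hz/T

def larmor_frequency_hz(field_tesla):
    """Proton precession (Larmor) frequency f = (gamma / 2 pi) * B, in Hz."""
    return PROTON_GAMMA_BAR_HZ_PER_T * field_tesla

# The geomagnetic field at the surface is roughly 25-65 microtesla
for b_ut in (25, 50, 65):
    print(f"B = {b_ut} uT -> f = {larmor_frequency_hz(b_ut * 1e-6):.0f} Hz")
```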

https://en.wikipedia.org/wiki/Surface_nuclear_magnetic_resonance

Magnetotellurics (MT) is an electromagnetic geophysical method for inferring the earth's subsurface electrical conductivity from measurements of natural geomagnetic and geoelectric field variation at the Earth's surface.

Investigation depth ranges from 300 m below ground, using higher frequencies, down to 10,000 m or deeper with long-period soundings. Proposed in Japan in the 1940s and in France and the USSR during the early 1950s, MT is now an international academic discipline and is used in exploration surveys around the world.
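The link between recording frequency and investigation depth comes from the electromagnetic skin depth, δ = √(2ρ/(ωμ₀)) ≈ 503√(ρ/f) metres, over which a field varying at frequency f decays by a factor of e in ground of resistivity ρ. The sketch below evaluates it for an assumed uniform 100 Ω·m half-space; real ground is layered, so the skin depth is only a rough guide to the depth a given period senses.

```python
import math

MU0 = 4e-7 * math.pi  # vacuum permeability, H/m

def skin_depth_m(resistivity_ohm_m, frequency_hz):
    """Electromagnetic skin depth delta = sqrt(2 rho / (omega mu0)), in metres."""
    omega = 2.0 * math.pi * frequency_hz
    return math.sqrt(2.0 * resistivity_ohm_m / (omega * MU0))

# Assumed 100 ohm m half-space: lower frequencies (longer periods) reach deeper
for f in (1000.0, 10.0, 0.1, 0.001):
    print(f"f = {f:g} Hz -> skin depth ~ {skin_depth_m(100.0, f) / 1000:.1f} km")
```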

Commercial uses include hydrocarbon (oil and gas) exploration, geothermal exploration, carbon sequestration, mining exploration, as well as hydrocarbon and groundwater monitoring. Research applications include experimentation to further develop the MT technique, long-period deep crustal exploration, deep mantle probing, sub-glacial water flow mapping, and earthquake precursor research.

https://en.wikipedia.org/wiki/Magnetotellurics

Transient electromagnetics (TEM, also time-domain electromagnetics or TDEM) is a geophysical exploration technique in which electric and magnetic fields are induced by transient pulses of electric current and the subsequent decay response is measured. TEM/TDEM methods are generally able to determine subsurface electrical properties, but are also sensitive to subsurface magnetic properties in applications like UXO (unexploded ordnance) detection and characterization. TEM/TDEM surveys are a very common surface EM technique for mineral exploration, groundwater exploration, and environmental mapping, used throughout the world in both onshore and offshore applications.

https://en.wikipedia.org/wiki/Transient_electromagnetics

Geomagnetic secular variation refers to changes in the Earth's magnetic field on time scales of about a year or more. These changes mostly reflect changes in the Earth's interior, while more rapid changes mostly originate in the ionosphere or magnetosphere.[1]

The geomagnetic field changes on time scales from milliseconds to millions of years. Shorter time scales mostly arise from currents in the ionosphere and magnetosphere, and some changes can be traced to geomagnetic storms or daily variations in currents. Changes over time scales of a year or more mostly reflect changes in the Earth's interior, particularly the iron-rich core. These changes are referred to as secular variation.[1] In most models, the secular variation is the amortized time derivative of the magnetic field $\mathbf{B}$, $\dot{\mathbf{B}} = \partial \mathbf{B}/\partial t$. The second derivative, $\ddot{\mathbf{B}} = \partial^2 \mathbf{B}/\partial t^2$, is the secular acceleration.[2]
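From a series of annual mean values, the secular variation and secular acceleration can be estimated with finite differences, as in the sketch below. The observatory values are hypothetical, the derivative is taken one field component at a time, and models built from satellite data use smoother fitting methods than these simple differences.

```python
def time_derivative(times, values):
    """Finite-difference derivative: central in the interior, one-sided at the ends."""
    n = len(values)
    result = []
    for i in range(n):
        lo, hi = max(i - 1, 0), min(i + 1, n - 1)
        result.append((values[hi] - values[lo]) / (times[hi] - times[lo]))
    return result

# Hypothetical annual means of one field component (nT) at an observatory
years = [2015.5 + i for i in range(8)]
field_nt = [44000.0 + 25.0 * i + 1.5 * i * i for i in range(8)]

secular_variation = time_derivative(years, field_nt)              # nT/yr
secular_acceleration = time_derivative(years, secular_variation)  # nT/yr^2
for y, sv, sa in zip(years, secular_variation, secular_acceleration):
    print(f"{y:.1f}: SV = {sv:6.2f} nT/yr, SA = {sa:5.2f} nT/yr^2")
```

Values at the first and last years use one-sided differences and are less accurate than the interior ones.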

https://en.wikipedia.org/wiki/Geomagnetic_secular_variation

In astronomy and planetary science, a magnetosphere is a region of space surrounding an astronomical object in which charged particles are affected by that object's magnetic field.[1][2] It is created by a celestial body with an active interior dynamo.

In the space environment close to a planetary body, the magnetic field resembles a magnetic dipole. Farther out, field lines can be significantly distorted by the flow of electrically conducting plasma, as emitted from the Sun (i.e., the solar wind) or a nearby star.[3][4] Planets with active magnetospheres, like the Earth, can mitigate or block the effects of solar and cosmic radiation, which helps protect living organisms from potentially harmful consequences. This is studied in the specialized fields of plasma physics, space physics, and aeronomy.

https://en.wikipedia.org/wiki/Magnetosphere

Magnetohydrodynamics (MHD; also called magneto-fluid dynamics or hydromagnetics) is the study of the magnetic properties and behaviour of electrically conducting fluids. Examples of such magnetofluids include plasmas, liquid metals, salt water, and electrolytes.[1] The word magnetohydrodynamics is derived from magneto- meaning magnetic field, hydro- meaning water, and dynamics meaning movement. The field of MHD was initiated by Hannes Alfvén,[2] for which he received the Nobel Prize in Physics in 1970.

The fundamental concept behind MHD is that magnetic fields can induce currents in a moving conductive fluid, which in turn polarizes the fluid and reciprocally changes the magnetic field itself. The set of equations that describe MHD is a combination of the Navier–Stokes equations of fluid dynamics and Maxwell’s equations of electromagnetism. These differential equations must be solved simultaneously, either analytically or numerically.
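One common way to gauge how strongly a moving conducting fluid and a magnetic field are coupled is the magnetic Reynolds number $R_m = \mu_0 \sigma U L$, the ratio of field advection by the flow to magnetic diffusion (σ is electrical conductivity, U a flow speed, L a length scale). When $R_m \gg 1$ the flow can carry and stretch field lines; when $R_m \ll 1$ the field largely diffuses through the fluid. The sketch below evaluates it; the inputs for Earth's outer core are order-of-magnitude assumptions, not values taken from this text.

```python
import math

MU_0 = 4e-7 * math.pi  # vacuum permeability, approximately 4*pi*1e-7 H/m

def magnetic_reynolds(sigma, velocity, length):
    """R_m = mu_0 * sigma * U * L: advection of field by the flow vs. magnetic diffusion."""
    return MU_0 * sigma * velocity * length

# Order-of-magnitude values assumed for Earth's outer core (illustrative only):
# conductivity ~1e6 S/m, flow speed ~0.5 mm/s, length scale ~ outer-core thickness
rm = magnetic_reynolds(sigma=1e6, velocity=5e-4, length=2.26e6)
print(f"R_m ~ {rm:.0f}")
```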

https://en.wikipedia.org/wiki/Magnetohydrodynamics

Seismo-electromagnetics are various electromagnetic phenomena believed to be generated by tectonic forces acting on the Earth's crust, and possibly associated with seismic activity such as earthquakes and volcanoes. Study of these has been prompted by the prospect that they might be generated by the increased stress leading up to an earthquake, and might thereby provide a basis for short-term earthquake prediction. However, despite many studies, no form of seismo-electromagnetics has been shown to be effective for earthquake prediction. A key problem is that earthquakes themselves produce relatively weak electromagnetic phenomena, and the effects from any precursory phenomena are likely to be too weak to measure. Close monitoring of the Parkfield earthquake revealed no significant pre-seismic electromagnetic effects. However, some researchers remain optimistic, and searches for seismo-electromagnetic earthquake precursors continue.[citation needed]

https://en.wikipedia.org/wiki/Seismo-electromagnetics

Electromagnetic or magnetic induction is the production of an electromotive force (emf) across an electrical conductor in a changing magnetic field.

Michael Faraday is generally credited with the discovery of induction in 1831, and James Clerk Maxwell mathematically described it as Faraday's law of induction. Lenz's law describes the direction of the induced field. Faraday's law was later generalized to become the Maxwell–Faraday equation, one of the four Maxwell equations in his theory of electromagnetism.

Electromagnetic induction has found many applications, including electrical components such as inductors and transformers, and devices such as electric motors and generators.
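Faraday's law gives the induced emf in an N-turn coil as $\mathcal{E} = -N\,d\Phi_B/dt$, with the minus sign expressing Lenz's law. For a uniform field $B(t) = B_{peak}\sin(\omega t)$ normal to a coil of area A, this gives $\mathcal{E}(t) = -N A B_{peak}\,\omega\cos(\omega t)$. The sketch below compares that analytic result with a numerical derivative of the flux; the coil parameters are hypothetical.

```python
import math

def flux(t, area, b_peak, omega):
    """Magnetic flux through one turn for a uniform sinusoidal field normal to the coil."""
    return area * b_peak * math.sin(omega * t)

def emf_numeric(t, turns, area, b_peak, omega, dt=1e-7):
    """Faraday's law, emf = -N dPhi/dt, with dPhi/dt from a central difference."""
    dphi = flux(t + dt, area, b_peak, omega) - flux(t - dt, area, b_peak, omega)
    return -turns * dphi / (2 * dt)

def emf_analytic(t, turns, area, b_peak, omega):
    """Closed-form emf for the sinusoidal field."""
    return -turns * area * b_peak * omega * math.cos(omega * t)

# Hypothetical coil: 100 turns, 0.01 m^2, in a 50 Hz field of 1 mT peak
N, A, B, w = 100, 0.01, 1e-3, 2 * math.pi * 50
for t in (0.0, 0.002, 0.005):
    print(f"t={t:.3f} s  numeric={emf_numeric(t, N, A, B, w):+.5f} V  "
          f"analytic={emf_analytic(t, N, A, B, w):+.5f} V")
```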

https://en.wikipedia.org/wiki/Electromagnetic_induction

Sprites or red sprites are large-scale electric discharges that occur in the mesosphere, high above thunderstorm clouds, or cumulonimbus, giving rise to a varied range of visual shapes flickering in the night sky. They are usually triggered by the discharges of positive lightning between an underlying thundercloud and the ground.

Sprites appear as luminous red-orange flashes. They often occur in clusters above the troposphere at an altitude range of 50–90 km (31–56 mi). Sporadic visual reports of sprites go back at least to 1886,[1] but they were first photographed on July 4, 1989,[2] by scientists from the University of Minnesota and have subsequently been captured in video recordings many thousands of times.

Sprites are sometimes inaccurately called upper-atmospheric lightning. However, sprites are cold plasma phenomena that lack the hot channel temperatures of tropospheric lightning, so they are more akin to fluorescent tube discharges than to lightning discharges. Sprites are associated with various other upper-atmospheric optical phenomena including blue jets and ELVES.[1]

https://en.wikipedia.org/wiki/Sprite_(lightning)

Spontaneous potentials are often measured down boreholes for formation evaluation in the oil and gas industry, and they can also be measured along the Earth's surface for mineral exploration or groundwater investigation. The phenomenon and its application to geology were first recognized by Conrad Schlumberger, Marcel Schlumberger, and E.G. Leonardon in 1931, and the first published examples were from Romanian oil fields.

https://en.wikipedia.org/wiki/Spontaneous_potential

Induced polarization (IP) is a geophysical imaging technique used to identify the electrical chargeability of subsurface materials, such as ore.[1][2]

The polarization effect was originally discovered by Conrad Schlumberger when measuring the resistivity of rock.[3]

The survey method is similar to electrical resistivity tomography (ERT), in that an electric current is transmitted into the subsurface through two electrodes, and voltage is monitored through two other electrodes.

Induced polarization is a geophysical method used extensively in mineral exploration and mine operations. Resistivity and IP methods are often applied on the ground surface using multiple four-electrode sites. In an IP survey, in addition to resistivity measurement, capacitive properties of the subsurface materials are determined as well. As a result, IP surveys provide additional information about the spatial variation in lithology and grain-surface chemistry.
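For a four-electrode measurement on the surface of a uniform half-space, the apparent resistivity is $\rho_a = K\,\Delta V / I$, with geometric factor $K = 2\pi / (1/AM - 1/BM - 1/AN + 1/BN)$, where A and B are the current electrodes, M and N the potential electrodes, and AM, BM, AN, BN the distances between them. The sketch below assumes collinear electrodes; the positions, voltage, and current are hypothetical. For a Wenner array with spacing a, K reduces to 2πa.

```python
import math

def geometric_factor(a, b, m, n):
    """Geometric factor K (m) for collinear surface electrodes at positions a, b, m, n (m).

    A and B inject current; M and N measure potential. Assumes a homogeneous half-space.
    """
    am, bm = abs(m - a), abs(m - b)
    an, bn = abs(n - a), abs(n - b)
    return 2 * math.pi / (1 / am - 1 / bm - 1 / an + 1 / bn)

def apparent_resistivity(delta_v, current, k):
    """Apparent resistivity (ohm m) from measured voltage (V) and injected current (A)."""
    return k * delta_v / current

# Hypothetical Wenner array, spacing 10 m: A=0, M=10, N=20, B=30
k = geometric_factor(a=0.0, b=30.0, m=10.0, n=20.0)
print(f"K = {k:.2f} m (2*pi*a = {2 * math.pi * 10:.2f} m)")
print(f"rho_a = {apparent_resistivity(0.5, 0.1, k):.1f} ohm m")
```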

An IP survey can be carried out in time-domain or frequency-domain mode:

In the time-domain induced polarization method, the voltage response is observed as a function of time after the injected current is switched off or on.[4]

In the frequency-domain induced polarization mode, an alternating current is injected into the ground with variable frequencies. Voltage phase-shifts are measured to evaluate the impedance spectrum at different injection frequencies, which is commonly referred to as spectral IP.
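Both modes can be reduced to simple numbers. In time-domain IP, one common measure is the integral chargeability $M = \frac{1}{V_p}\int_{t_1}^{t_2} V_s(t)\,dt$, where $V_p$ is the primary voltage while current flows and $V_s$ the decaying secondary voltage after switch-off; in frequency-domain IP, the phase shift between injected current and measured voltage is the argument of the complex transfer impedance, $\varphi = \arg Z$. The sketch below computes both from hypothetical samples, using the trapezoidal rule for the integral; the function names and data are illustrative, not a standard instrument output.

```python
import cmath
import math

def integral_chargeability(times_ms, vs, vp):
    """Time-domain IP: M = (1/Vp) * integral of Vs(t) dt by the trapezoidal rule; result in ms."""
    area = 0.0
    for i in range(1, len(times_ms)):
        area += 0.5 * (vs[i] + vs[i - 1]) * (times_ms[i] - times_ms[i - 1])
    return area / vp

def phase_mrad(impedance):
    """Frequency-domain IP: phase of the complex transfer impedance, in milliradians."""
    return 1000.0 * cmath.phase(impedance)

# Hypothetical decay after switch-off: primary voltage 100 mV, secondary sampled in a window
t = [100, 200, 400, 800]   # ms
vs = [2.0, 1.5, 1.0, 0.5]  # mV
m_ip = integral_chargeability(t, vs, vp=100.0)
print(f"M = {m_ip:.2f} ms")

# Hypothetical impedance at one injection frequency (ohm); the small negative
# imaginary part represents the voltage lagging the current
z = complex(50.0, -0.5)
print(f"phase = {phase_mrad(z):.2f} mrad")
```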

The IP method is one of the most widely used techniques in mineral exploration and the mining industry, and it also has applications in hydrogeophysical surveys, environmental investigations, and geotechnical engineering projects.[5]

https://en.wikipedia.org/wiki/Induced_polarization

A telluric current (from Latin tellūs, "earth"), or Earth current,[1] is an electric current which moves underground or through the sea. Telluric currents result from both natural causes and human activity, and the discrete currents interact in a complex pattern. The currents are extremely low frequency and travel over large areas at or near the surface of the Earth.

https://en.wikipedia.org/wiki/Telluric_current

The term intraplate earthquake refers to a variety of earthquake that occurs within the interior of a tectonic plate; this stands in contrast to an interplate earthquake, which occurs at the boundary of a tectonic plate. Intraplate earthquakes are often called "intraslab earthquakes," especially when occurring in microplates.[1][2]

Intraplate earthquakes are relatively rare compared to the more familiar boundary-located interplate earthquakes. Structures far from plate boundaries tend to lack seismic retrofitting, so large intraplate earthquakes can inflict heavy damage. Examples of damaging intraplate earthquakes are the devastating Gujarat earthquake in 2001, the 2012 Indian Ocean earthquakes, the 2017 Puebla earthquake, the 1811–1812 earthquakes in New Madrid, Missouri, and the 1886 earthquake in Charleston, South Carolina.[3]

https://en.wikipedia.org/wiki/Intraplate_earthquake

The internal structure of Earth is the layered structure of the solid portion of the Earth, excluding its atmosphere and hydrosphere. The structure consists of an outer silicate solid crust, a highly viscous asthenosphere and solid mantle, a liquid outer core whose flow generates the Earth's magnetic field, and a solid inner core.

Scientific understanding of the internal structure of Earth is based on observations of topography and bathymetry, observations of rock in outcrop, samples brought to the surface from greater depths by volcanoes or volcanic activity, analysis of the seismic waves that pass through Earth, measurements of the gravitational and magnetic fields of Earth, and experiments with crystalline solids at pressures and temperatures characteristic of Earth's deep interior.

https://en.wikipedia.org/wiki/Internal_structure_of_Earth

Reflection seismology (or seismic reflection) is a method of exploration geophysics that uses the principles of seismology to estimate the properties of the Earth's subsurface from reflected seismic waves. The method requires a controlled seismic source of energy, such as a dynamite or Tovex blast, a specialized air gun, or a seismic vibrator. Reflection seismology is similar to sonar and echolocation.
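Two relations underlie the most basic interpretation of reflected arrivals. At normal incidence, the amplitude reflection coefficient at an interface is $R = (Z_2 - Z_1)/(Z_2 + Z_1)$, with acoustic impedance $Z = \rho v$; and for a constant-velocity overburden, a reflector's depth follows from its two-way travel time t as $z = v t / 2$. The sketch below applies both to a hypothetical two-layer model; real processing must also handle varying velocity, non-normal incidence, and noise.

```python
def acoustic_impedance(density, velocity):
    """Z = rho * v, with density in kg/m^3 and velocity in m/s."""
    return density * velocity

def reflection_coefficient(z1, z2):
    """Normal-incidence amplitude reflection coefficient for a wave going from layer 1 into layer 2."""
    return (z2 - z1) / (z2 + z1)

def depth_from_twt(twt_s, velocity):
    """Reflector depth for a constant-velocity overburden: the wave travels down and back up."""
    return velocity * twt_s / 2.0

# Hypothetical model: shale (2400 kg/m^3, 2800 m/s) over sandstone (2300 kg/m^3, 3500 m/s)
z1 = acoustic_impedance(2400.0, 2800.0)
z2 = acoustic_impedance(2300.0, 3500.0)
print(f"R = {reflection_coefficient(z1, z2):.3f}")
print(f"depth = {depth_from_twt(1.2, 2800.0):.0f} m")
```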

Seismic reflection data

https://en.wikipedia.org/wiki/Reflection_seismology

Normal modes

Free oscillations of the Earth are standing waves, the result of interference between two surface waves traveling in opposite directions. Interference of Rayleigh waves results in spheroidal oscillations S, while interference of Love waves gives toroidal oscillations T. The modes of oscillation are specified by three numbers, e.g., ${}_nS_l^m$, where l is the angular order number (or spherical harmonic degree; see Spherical harmonics for more details). The number m is the azimuthal order number; it may take on 2l+1 values from −l to +l. The number n is the radial order number; it indicates a wave with n zero crossings in radius. For a spherically symmetric Earth, the period for given n and l does not depend on m.
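The counting rule for the azimuthal order number can be made concrete: for a given n and l there are 2l+1 singlets, one for each m from −l to +l, and on a spherically symmetric Earth they share a single period. The sketch below lists the singlets of one multiplet; the plain-text label format `nSl^m` is a rendering chosen for illustration, not a standard notation.

```python
def multiplet(n, kind, l):
    """Return labels of the 2l+1 singlets of mode n-kind-l, kind 'S' (spheroidal) or 'T' (toroidal)."""
    if kind not in ("S", "T"):
        raise ValueError("kind must be 'S' or 'T'")
    if n < 0 or l < 0:
        raise ValueError("n and l must be non-negative")
    # One singlet per azimuthal order m = -l, ..., +l
    return [f"{n}{kind}{l}^{m}" for m in range(-l, l + 1)]

labels = multiplet(0, "S", 2)  # the "rugby" mode multiplet
print(len(labels), labels)
```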

Some examples of spheroidal oscillations are the "breathing" mode ${}_0S_0$, which involves an expansion and contraction of the whole Earth and has a period of about 20 minutes; and the "rugby" mode ${}_0S_2$, which involves expansions along two alternating directions and has a period of about 54 minutes. The mode ${}_0S_1$ does not exist because it would require a change in the center of gravity, which would require an external force.[3]

Of the fundamental toroidal modes, ${}_0T_1$ represents changes in Earth's rotation rate; although this occurs, it is much too slow to be useful in seismology. The mode ${}_0T_2$ describes a twisting of the northern and southern hemispheres relative to each other; it has a period of about 44 minutes.[3]

The first observations of free oscillations of the Earth were made during the great 1960 earthquake in Chile. The periods of thousands of modes are now known. These data are used to determine some large-scale structures of the Earth's interior.

P and S waves in Earth's mantle and core

When an earthquake occurs, seismographs near the epicenter are able to record both P and S waves, but those at a greater distance no longer detect the high frequencies of the first S wave. Since shear waves cannot pass through liquids, this phenomenon was the original evidence for the now well-established observation that the Earth has a liquid outer core, as demonstrated by Richard Dixon Oldham. This kind of observation has also been used to argue, by seismic testing, that the Moon has a solid core, although recent geodetic studies suggest the core is still molten[citation needed].
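The reason shear waves cannot cross a liquid follows from the elastic wave speeds $v_P = \sqrt{(K + \tfrac{4}{3}\mu)/\rho}$ and $v_S = \sqrt{\mu/\rho}$, where K is the bulk modulus, μ the shear modulus, and ρ the density: a liquid has μ = 0, so $v_S = 0$ while P waves still propagate. The sketch below evaluates both; the moduli and densities are rough illustrative values assumed here, not taken from a reference Earth model.

```python
import math

def wave_speeds(bulk_modulus, shear_modulus, density):
    """Return (v_P, v_S) in m/s for an isotropic elastic medium (moduli in Pa, density in kg/m^3)."""
    vp = math.sqrt((bulk_modulus + 4.0 / 3.0 * shear_modulus) / density)
    vs = math.sqrt(shear_modulus / density)
    return vp, vs

# Rough illustrative values (assumed): (bulk modulus, shear modulus, density)
layers = {
    "lowermost mantle (solid)": (6.5e11, 3.0e11, 5500.0),
    "outer core (liquid)": (6.4e11, 0.0, 9900.0),
}
for name, (k, mu, rho) in layers.items():
    vp, vs = wave_speeds(k, mu, rho)
    print(f"{name}: vP = {vp / 1000:.1f} km/s, vS = {vs / 1000:.1f} km/s")
```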

https://en.wikipedia.org/wiki/Seismic_wave#Normal_modes

A mantle plume is a proposed mechanism of convection within the Earth's mantle, hypothesized to explain anomalous volcanism.[2] Because the plume head partially melts on reaching shallow depths, a plume is often invoked as the cause of volcanic hotspots, such as Hawaii or Iceland, and large igneous provinces such as the Deccan and Siberian Traps. Some such volcanic regions lie far from tectonic plate boundaries, while others represent unusually large-volume volcanism near plate boundaries.

https://en.wikipedia.org/wiki/Mantle_plume

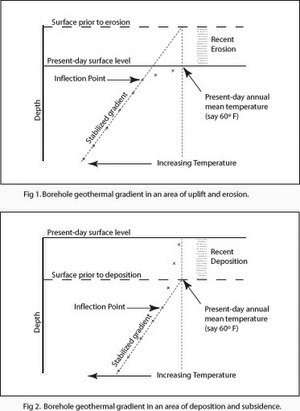

Geothermal gradient

Geothermal gradient is the rate of temperature change with respect to increasing depth in Earth's interior. As a general rule, the crust temperature rises with depth due to the heat flow from the much hotter mantle; away from tectonic plate boundaries, temperature rises by about 25–30 °C per km of depth (72–87 °F/mi) near the surface in most of the world.[1] However, in some cases the temperature may drop with increasing depth, especially near the surface, a phenomenon known as an inverse or negative geothermal gradient. The effects of weather, sun, and season only reach a depth of approximately 10–20 metres.

Strictly speaking, geo-thermal necessarily refers to Earth, but the concept may be applied to other planets. In SI units, the geothermal gradient is expressed as °C/km,[1] K/km,[2] or mK/m.[3] These are all equivalent.
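Where the gradient is roughly constant, temperature can be extrapolated linearly as $T(z) = T_0 + G z$. The sketch below does that and checks the imperial conversion quoted above; the 15 °C surface temperature and 25 °C/km gradient are assumed example values, and a linear profile is a poor approximation at depth or where the gradient is inverted. No conversion is needed between °C/km, K/km, and mK/m, since a kelvin and a degree Celsius are the same size interval and 1 km = 1000 m.

```python
KM_PER_MILE = 1.609344

def temperature_at_depth(depth_km, surface_temp_c=15.0, gradient_c_per_km=25.0):
    """Linear extrapolation T(z) = T0 + G*z; valid only where the gradient is roughly constant."""
    return surface_temp_c + gradient_c_per_km * depth_km

def c_per_km_to_f_per_mile(g):
    """A temperature difference of 1 degC equals 1.8 degF, and 1 mile = 1.609344 km."""
    return g * 1.8 * KM_PER_MILE

print(temperature_at_depth(3.0))  # 15 + 25 * 3
print(f"{c_per_km_to_f_per_mile(25):.0f}-{c_per_km_to_f_per_mile(30):.0f} degF/mi")
```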