In mathematics, Marden's theorem, named after Morris Marden but proved about 100 years earlier by Jörg Siebeck, gives a geometric relationship between the zeroes of a third-degree polynomial with complex coefficients and the zeroes of its derivative. See also geometrical properties of polynomial roots.

Statement

A cubic polynomial has three zeroes in the complex number plane, which in general form a triangle, and the Gauss–Lucas theorem states that the roots of its derivative lie within this triangle. Marden's theorem states their location within this triangle more precisely:

- Suppose the zeroes z1, z2, and z3 of a third-degree polynomial p(z) are non-collinear. There is a unique ellipse inscribed in the triangle with vertices z1, z2, z3 and tangent to the sides at their midpoints: the Steiner inellipse. The foci of that ellipse are the zeroes of the derivative p'(z).
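As a rough numerical illustration (a sketch, not taken from the cited sources), the Python below checks the statement for one triangle. It computes the roots of p'(z) from the expanded cubic, then tests that each side is tangent, at its midpoint, to one common ellipse with those roots as foci. The helper names and the reflection-based tangency test are assumptions of this sketch. The test uses the fact that, for two foci on the same side of a line, the smallest value of |P − f1| + |P − f2| along the line equals the distance from f2 to the mirror image of f1.

```python
import cmath


def derivative_roots(z1: complex, z2: complex, z3: complex) -> tuple[complex, complex]:
    """Roots of p'(z) where p(z) = (z - z1)(z - z2)(z - z3)."""
    s1 = z1 + z2 + z3                   # sum of the roots
    s2 = z1 * z2 + z1 * z3 + z2 * z3    # sum of pairwise products
    # p(z) = z^3 - s1 z^2 + s2 z - z1 z2 z3, so p'(z) = 3z^2 - 2 s1 z + s2
    disc = cmath.sqrt(s1 * s1 - 3 * s2)
    return (s1 + disc) / 3, (s1 - disc) / 3


def reflect(p: complex, a: complex, b: complex) -> complex:
    """Mirror image of p in the line through a and b."""
    u = (b - a) / abs(b - a)            # unit vector along the line
    return a + u * u * (p - a).conjugate()


def midpoints_tangent(z1: complex, z2: complex, z3: complex, tol: float = 1e-6) -> bool:
    """Check that the ellipse with foci at the roots of p' touches every side at its midpoint."""
    f1, f2 = derivative_roots(z1, z2, z3)
    sums = []
    for a, b in ((z1, z2), (z2, z3), (z3, z1)):
        m = (a + b) / 2
        s = abs(m - f1) + abs(m - f2)   # m lies on the ellipse |P - f1| + |P - f2| = s
        # Along line ab the minimum of |P - f1| + |P - f2| is |reflect(f1) - f2|
        # (the foci lie inside the triangle); the line is tangent at m iff m attains it.
        if abs(abs(reflect(f1, a, b) - f2) - s) > tol:
            return False
        sums.append(s)
    # All three midpoints must lie on the same ellipse (same major-axis length).
    return max(sums) - min(sums) < tol


print(midpoints_tangent(0, 4, 1 + 3j))   # True
```

The tolerance is loose because, for an equilateral triangle, p' has a double root and the square root in `derivative_roots` amplifies rounding error.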

Additional relations between root locations and the Steiner inellipse

Because p'(z) is a quadratic, the root of the second derivative p''(z) is the average of the two foci, which is the center point of the ellipse and the centroid of the triangle; this is consistent with the Gauss–Lucas theorem, which places that root on the segment joining the foci. In the special case that the triangle is equilateral (as happens, for instance, for the polynomial p(z) = z^3 − 1), the inscribed ellipse degenerates to a circle, and the derivative of p has a double root at the center of the circle. Conversely, if the derivative has a double root, then the triangle must be equilateral (Kalman 2008a).
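A short derivation makes the averaging claim explicit. It assumes p is monic (a constant factor changes none of the roots) and introduces, for this derivation only, f1 and f2 for the two roots of p' and s1, s2 for the elementary symmetric sums of the roots of p.

```latex
% s_1 = z_1 + z_2 + z_3,  s_2 = z_1 z_2 + z_1 z_3 + z_2 z_3,  f_1, f_2 = roots of p'
\begin{aligned}
p(z)   &= (z - z_1)(z - z_2)(z - z_3) = z^3 - s_1 z^2 + s_2 z - z_1 z_2 z_3,\\
p'(z)  &= 3z^2 - 2 s_1 z + s_2 = 3(z - f_1)(z - f_2)
          \;\Longrightarrow\; f_1 + f_2 = \tfrac{2}{3}\, s_1,\\
p''(z) &= 6z - 2 s_1 = 0
          \;\Longleftrightarrow\; z = \frac{s_1}{3} = \frac{z_1 + z_2 + z_3}{3} = \frac{f_1 + f_2}{2}.
\end{aligned}
```

For p(z) = z^3 − 1 this gives s1 = s2 = 0 and p'(z) = 3z^2, so both foci sit at the origin, the center of the circle.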

Generalizations

A more general version of the theorem, due to Linfield (1920), applies to polynomials p(z) = (z − a)^i (z − b)^j (z − c)^k whose degree i + j + k may be higher than three, but that have only three roots a, b, and c. For such polynomials, the roots of the derivative may be found at the multiple roots of the given polynomial (the roots whose exponent is greater than one) and at the foci of an ellipse whose points of tangency to the triangle divide its sides in the ratios i : j, j : k, and k : i.
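The derivative factors as p'(z) = (z − a)^(i−1) (z − b)^(j−1) (z − c)^(k−1) q(z) with q(z) = i(z − b)(z − c) + j(z − a)(z − c) + k(z − a)(z − b), so the foci are the two roots of q. The Python sketch below (helper names are hypothetical) computes them and checks tangency at the point of side ab with |T − a| : |b − T| = i : j, and likewise on the other sides. It reuses the reflection test: along a line, the minimum of |P − f1| + |P − f2| is the distance from f2 to the mirror image of f1.

```python
import cmath


def linfield_foci(a: complex, b: complex, c: complex,
                  i: int, j: int, k: int) -> tuple[complex, complex]:
    """Roots of q(z) = i(z-b)(z-c) + j(z-a)(z-c) + k(z-a)(z-b)."""
    qa = i + j + k                                     # coefficient of z^2
    qb = -(i * (b + c) + j * (a + c) + k * (a + b))    # coefficient of z
    qc = i * b * c + j * a * c + k * a * b             # constant term
    disc = cmath.sqrt(qb * qb - 4 * qa * qc)
    return (-qb + disc) / (2 * qa), (-qb - disc) / (2 * qa)


def reflect(p: complex, a: complex, b: complex) -> complex:
    """Mirror image of p in the line through a and b."""
    u = (b - a) / abs(b - a)
    return a + u * u * (p - a).conjugate()


def linfield_holds(a: complex, b: complex, c: complex,
                   i: int, j: int, k: int, tol: float = 1e-7) -> bool:
    """Check that one ellipse with foci linfield_foci(...) touches each side at its ratio point."""
    f1, f2 = linfield_foci(a, b, c, i, j, k)
    sums = []
    for (p, m), (q, n) in (((a, i), (b, j)), ((b, j), (c, k)), ((c, k), (a, i))):
        t = (n * p + m * q) / (m + n)     # point of side pq with |t - p| : |q - t| = m : n
        s = abs(t - f1) + abs(t - f2)
        # the line pq is tangent at t iff t minimises |P - f1| + |P - f2| along it
        if abs(abs(reflect(f1, p, q) - f2) - s) > tol:
            return False
        sums.append(s)
    return max(sums) - min(sums) < tol


print(linfield_holds(0, 2, 2j, 2, 1, 1))   # True
```

With i = j = k = 1 the ratio points become midpoints and the check reduces to Marden's theorem.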

Another generalization, due to Parish (2006), is to n-gons: some n-gons have an interior ellipse that is tangent to each side at the side's midpoint. Marden's theorem still applies: the foci of this midpoint-tangent inellipse are zeroes of the derivative of the polynomial whose zeroes are the vertices of the n-gon.

History

Jörg Siebeck discovered this theorem 81 years before Marden wrote about it. However, Dan Kalman titled his American Mathematical Monthly paper "Marden's theorem" because, as he writes, "I call this Marden’s Theorem because I first read it in M. Marden’s wonderful book".

Marden (1945, 1966) attributes what is now known as Marden's theorem to Siebeck (1864) and cites nine papers that included a version of the theorem. Dan Kalman won the 2009 Lester R. Ford Award of the Mathematical Association of America for his 2008 paper in the American Mathematical Monthly describing the theorem.

A short and elementary proof of Marden’s theorem is explained in the solution of an exercise in Fritz Carlson’s book “Geometri” (in Swedish, 1943).[1]

See also

- Bôcher's theorem for rational functions

https://en.wikipedia.org/wiki/Marden%27s_theorem

In mathematics, a function is said to vanish at infinity if its values approach 0 as the input grows without bound. There are two different ways to define this, one applying to functions defined on normed vector spaces and the other applying to functions defined on locally compact spaces. Aside from this difference, both notions correspond to the intuitive idea of adding a point at infinity and requiring the values of the function to get arbitrarily close to zero as one approaches it. This definition can be formalized in many cases by adding an (actual) point at infinity.
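On a normed space, the usual definition reads: for every ε > 0 there is a radius R such that |f(x)| < ε whenever ‖x‖ > R. A minimal sketch for one example function, f(x) = 1/(1 + ‖x‖) on R^n (chosen here only for illustration), where R can be solved for in closed form:

```python
import math


def f(x: list[float]) -> float:
    """Example function on R^n (an illustrative choice): 1 / (1 + ||x||)."""
    return 1.0 / (1.0 + math.hypot(*x))


def radius_for(eps: float) -> float:
    """A radius R such that f(x) < eps whenever ||x|| > R.

    1 / (1 + ||x||) < eps  <=>  ||x|| > 1/eps - 1; clamp at 0 when eps >= 1.
    """
    return max(0.0, 1.0 / eps - 1.0)


for eps in (0.5, 0.1, 0.01):
    r = radius_for(eps)
    # A point just outside the ball of radius r already has a value below eps.
    print(eps, r, f([r + 1e-6, 0.0]) < eps)
```

Shrinking ε forces R to grow, which is exactly the "arbitrarily close to zero far enough out" condition.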

https://en.wikipedia.org/wiki/Vanish_at_infinity

A zero-crossing is a point where the sign of a mathematical function changes (e.g. from positive to negative), represented by an intercept of the axis (zero value) in the graph of the function. It is a commonly used term in electronics, mathematics, acoustics, and image processing.
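For a sampled signal, zero crossings are commonly found by comparing the signs of consecutive samples. A minimal Python sketch, assuming that samples exactly equal to zero are skipped rather than counted as crossings (one of several possible conventions):

```python
def zero_crossings(samples: list[float]) -> list[int]:
    """Indices of the last nonzero sample before each sign change.

    Exact zeros are skipped, so [1, 0, -1] counts one crossing while
    [1, 0, 1] (touching zero without changing sign) counts none.
    """
    crossings = []
    prev_index = None
    for i, x in enumerate(samples):
        if x == 0:
            continue
        if prev_index is not None and (samples[prev_index] > 0) != (x > 0):
            crossings.append(prev_index)
        prev_index = i
    return crossings


print(zero_crossings([1.0, 2.0, -1.0, -3.0, 4.0]))   # [1, 3]
```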

See also

- Reconstruction from zero crossings

- Zero crossing control

- Zero-crossing rate

- Root of a function

- Sign function

https://en.wikipedia.org/wiki/Zero_crossing

In complex analysis (a branch of mathematics), a pole is a certain type of singularity of a function, near which the function behaves relatively regularly, in contrast to essential singularities, such as 0 for the function exp(1/z), and branch points, such as 0 for the logarithm and for the complex square root function.

A function f of a complex variable z is meromorphic in the neighbourhood of a point z0 if either f or its reciprocal function 1/f is holomorphic in some neighbourhood of z0 (that is, if f or 1/f is complex differentiable in a neighbourhood of z0).

A zero of a meromorphic function f is a complex number z such that f(z) = 0. A pole of f is a zero of 1/f.

This induces a duality between zeros and poles, that is obtained by replacing the function f by its reciprocal 1/f. This duality is fundamental for the study of meromorphic functions. For example, if a function is meromorphic on the whole complex plane, including the point at infinity, then the sum of the multiplicities of its poles equals the sum of the multiplicities of its zeros.
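Functions meromorphic on the whole Riemann sphere are exactly the rational functions, so the counting statement can be checked directly. For f = P/Q with no common factor, f behaves like z^(deg P − deg Q) for large |z|. The point at infinity is therefore a pole of order deg P − deg Q or a zero of order deg Q − deg P, whichever is positive. A small sketch, with a hypothetical helper that takes the roots of P and Q listed with multiplicity:

```python
def zero_pole_counts(zeros: list[complex], poles: list[complex]) -> tuple[int, int]:
    """Total multiplicities of zeros and poles on the Riemann sphere of
    f(z) = prod(z - zeros[i]) / prod(z - poles[j]).

    Repeated entries encode multiplicity; zeros and poles must not share a value.
    """
    if set(zeros) & set(poles):
        raise ValueError("common factor: cancel it first")
    total_zeros, total_poles = len(zeros), len(poles)
    # Near infinity f(z) ~ z^(deg P - deg Q).
    order_at_infinity = len(zeros) - len(poles)
    if order_at_infinity > 0:
        total_poles += order_at_infinity     # pole at infinity
    elif order_at_infinity < 0:
        total_zeros += -order_at_infinity    # zero at infinity
    return total_zeros, total_poles


print(zero_pole_counts([0, 0], [1]))     # z^2 / (z - 1): (2, 2)
print(zero_pole_counts([], [0, 0, 0]))   # 1 / z^3: (3, 3)
```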

https://en.wikipedia.org/wiki/Zeros_and_poles

Complex analysis, traditionally known as the theory of functions of a complex variable, is the branch of mathematical analysis that investigates functions of complex numbers. It is helpful in many branches of mathematics, including algebraic geometry, number theory, analytic combinatorics, and applied mathematics, as well as in physics, including hydrodynamics, thermodynamics, and particularly quantum mechanics. By extension, complex analysis also has applications in engineering fields such as nuclear, aerospace, mechanical and electrical engineering.

Because a complex-differentiable function is locally equal to its Taylor series (that is, it is analytic), complex analysis is particularly concerned with analytic functions of a complex variable (that is, holomorphic functions).

https://en.wikipedia.org/wiki/Complex_analysis

In mathematics, a holomorphic function is a complex-valued function of one or more complex variables that is complex differentiable in a neighbourhood of each point in a domain in complex coordinate space Cn. The existence of a complex derivative in a neighbourhood is a very strong condition: it implies that a holomorphic function is infinitely differentiable and locally equal to its own Taylor series (analytic). Holomorphic functions are the central objects of study in complex analysis.

Though the term analytic function is often used interchangeably with "holomorphic function", the word "analytic" is defined in a broader sense to denote any function (real, complex, or of more general type) that can be written as a convergent power series in a neighbourhood of each point in its domain. That all holomorphic functions are complex analytic functions, and vice versa, is a major theorem in complex analysis.[1]

Holomorphic functions are also sometimes referred to as regular functions.[2][3] A holomorphic function whose domain is the whole complex plane is called an entire function. The phrase "holomorphic at a point z0" means not just differentiable at z0, but differentiable everywhere within some neighbourhood of z0 in the complex plane.
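A numerical heuristic, not a proof: for a complex-differentiable function, the quotient (f(z0 + h) − f(z0))/h tends to the same value whichever direction the small complex step h comes from. The check below looks at a single point, whereas being holomorphic requires this throughout a neighbourhood. The Python sketch (helper names are made up for illustration) compares quotients along several directions.

```python
import cmath
from collections.abc import Callable


def difference_quotients(f: Callable[[complex], complex], z0: complex,
                         h: float = 1e-6, directions: int = 8) -> list[complex]:
    """Quotients (f(z0 + h*u) - f(z0)) / (h*u) for unit vectors u in several directions."""
    quotients = []
    for n in range(directions):
        u = cmath.exp(2j * cmath.pi * n / directions)   # unit complex number
        quotients.append((f(z0 + h * u) - f(z0)) / (h * u))
    return quotients


def agrees_in_all_directions(f: Callable[[complex], complex], z0: complex,
                             tol: float = 1e-4) -> bool:
    """Heuristic: do the directional quotients (nearly) coincide?"""
    q = difference_quotients(f, z0)
    return max(abs(x - q[0]) for x in q) < tol


print(agrees_in_all_directions(lambda z: z * z, 1 + 1j))           # True
print(agrees_in_all_directions(lambda z: z.conjugate(), 1 + 1j))   # False
```

For z ↦ z² every quotient is close to 2z0. For complex conjugation the quotient along direction u is conj(u)/u, which changes with u, so conjugation is nowhere complex differentiable.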

https://en.wikipedia.org/wiki/Holomorphic_function

An isoquant (derived from quantity and the Greek word iso, meaning equal), in microeconomics, is a contour line drawn through the set of points at which the same quantity of output is produced while changing the quantities of two or more inputs.[1][2] The x and y axes of an isoquant diagram represent two relevant inputs, each usually a factor of production such as labour, capital, land, or organisation. An isoquant may also be known as an “Iso-Product Curve” or an “Equal Product Curve”.
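The text does not fix a production function. Purely as an assumption, the sketch below uses a Cobb–Douglas technology Q = A·L^α·K^β (the parameter names `a`, `alpha`, `beta` are illustrative). It solves for the capital K that keeps output at a fixed level as labour L varies, which traces points along one isoquant.

```python
def output(labour: float, capital: float, a: float = 1.0,
           alpha: float = 0.5, beta: float = 0.5) -> float:
    """Assumed Cobb-Douglas production function Q = a * L**alpha * K**beta."""
    return a * labour ** alpha * capital ** beta


def isoquant_capital(labour: float, q: float, a: float = 1.0,
                     alpha: float = 0.5, beta: float = 0.5) -> float:
    """Capital K that, combined with `labour`, yields output q (Q solved for K)."""
    return (q / (a * labour ** alpha)) ** (1.0 / beta)


# Points on the isoquant for q = 10: more labour, less capital, same output.
for labour in (1.0, 4.0, 25.0, 100.0):
    capital = isoquant_capital(labour, 10.0)
    print(labour, capital, output(labour, capital))
```

Each printed (L, K) pair gives the same output, which is what makes the curve an isoquant. Its downward slope reflects substituting one input for the other.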

https://en.wikipedia.org/wiki/Isoquant

In computer science, a set is an abstract data type that can store unique values, without any particular order. It is a computer implementation of the mathematical concept of a finite set. Unlike most other collection types, rather than retrieving a specific element from a set, one typically tests a value for membership in a set.

Some set data structures are designed for static or frozen sets that do not change after they are constructed. Static sets allow only query operations on their elements, such as checking whether a given value is in the set or enumerating the values in some arbitrary order. Other variants, called dynamic or mutable sets, also allow the insertion and deletion of elements from the set.

A multiset is a special kind of set in which an element can appear several times.
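In Python, for instance, the built-in `set` and `frozenset` types correspond roughly to dynamic and static sets, and `collections.Counter` is a common way to model a multiset. A brief illustration (one possible mapping, not the only one):

```python
from collections import Counter

# Dynamic (mutable) set: supports insertion and deletion.
tags = {"red", "green"}
tags.add("blue")
tags.add("red")            # already present: values stay unique
tags.discard("green")
print("blue" in tags)      # membership test: True

# Static (frozen) set: only queries after construction.
primes = frozenset({2, 3, 5, 7})
print(5 in primes)         # True
# primes.add(11) would fail: frozenset has no add method.

# Multiset: Counter records how many times each element appears.
letters = Counter("banana")
print(letters["a"])        # 3
```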

https://en.wikipedia.org/wiki/Set_(abstract_data_type)

A parameter (from the Ancient Greek παρά, para: "beside", "subsidiary"; and μέτρον, metron: "measure"), generally, is any characteristic that can help in defining or classifying a particular system (meaning an event, project, object, situation, etc.). That is, a parameter is an element of a system that is useful, or critical, when identifying the system, or when evaluating its performance, status, condition, etc.

Parameter has more specific meanings within various disciplines, including mathematics, computer programming, engineering, statistics, logic, linguistics, and electronic musical composition.

In addition to its technical uses, there are also extended uses, especially in non-scientific contexts, where it is used to mean defining characteristics or boundaries, as in the phrases 'test parameters' or 'game play parameters'.[1]

https://en.wikipedia.org/wiki/Parameter

In computer programming, a variable or scalar is an abstract storage location paired with an associated symbolic name, which contains some known or unknown quantity of information referred to as a value; in simpler terms, a variable is a container for a particular type of data (such as an integer, a float, or a string). A variable can eventually be associated with or identified by a memory address. The variable name is the usual way to reference the stored value, in addition to referring to the variable itself, depending on the context. This separation of name and content allows the name to be used independently of the exact information it represents. The identifier in computer source code can be bound to a value during run time, and the value of the variable may thus change during the course of program execution.[1][2][3][4]

Variables in programming may not directly correspond to the concept of variables in mathematics. The latter is abstract, having no reference to a physical object such as storage location. The value of a computing variable is not necessarily part of an equation or formula as in mathematics. Variables in computer programming are frequently given long names to make them relatively descriptive of their use, whereas variables in mathematics often have terse, one- or two-character names for brevity in transcription and manipulation.

A variable's storage location may be referenced by several different identifiers, a situation known as aliasing. Assigning a value to the variable using one of the identifiers will change the value that can be accessed through the other identifiers.
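A small Python illustration. Python names refer to objects rather than to fixed storage locations, so "storage location" maps only loosely onto "object" here. Two names bound to the same mutable list behave as aliases:

```python
# Two names bound to the same list object: an alias.
scores = [90, 85]
same_scores = scores
same_scores.append(70)          # modify through one name...
print(scores)                   # ...and the change is visible through the other: [90, 85, 70]
print(scores is same_scores)    # True: one object, two identifiers

# Rebinding a name is different from modifying the shared object.
same_scores = [0]               # same_scores now refers to a new list
print(scores)                   # still [90, 85, 70]
```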

Compilers have to replace variables' symbolic names with the actual locations of the data. While a variable's name, type, and location often remain fixed, the data stored in the location may be changed during program execution.

https://en.wikipedia.org/wiki/Variable_(computer_science)

In computer programming, string interpolation (or variable interpolation, variable substitution, or variable expansion) is the process of evaluating a string literal containing one or more placeholders, yielding a result in which the placeholders are replaced with their corresponding values. It is a form of simple template processing[1] or, in formal terms, a form of quasi-quotation (or logic substitution interpretation). String interpolation allows easier and more intuitive string formatting and content-specification compared with string concatenation.[2]

String interpolation is common in many programming languages which make heavy use of string representations of data, such as Apache Groovy, Julia, Kotlin, Perl, PHP, Python, Ruby, Scala, Swift, Tcl and most Unix shells. Two modes of literal expression are usually offered: one with interpolation enabled, the other without (termed raw string). Placeholders are usually represented by a bare or a named sigil (typically $ or %), e.g. $placeholder or %123. Expansion of the string usually occurs at run time.
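Python's f-strings are one example. Their placeholders use braces rather than a $ or % sigil, and they are evaluated at run time. The sketch compares interpolation with concatenation and with an ordinary literal in which the braces are not interpolated:

```python
name = "Ada"
count = 3

# Interpolation: placeholders in an f-string are replaced by their values at run time.
greeting = f"Hello, {name}! You have {count} new messages."

# The same text by concatenation needs explicit conversion of non-strings.
concatenated = "Hello, " + name + "! You have " + str(count) + " new messages."

# Without the f prefix, the braces are ordinary characters.
literal = "Hello, {name}!"

print(greeting == concatenated)  # True
print(literal)                   # Hello, {name}!
```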

https://en.wikipedia.org/wiki/String_interpolation

In computing, aliasing describes a situation in which a data location in memory can be accessed through different symbolic names in the program. Thus, modifying the data through one name implicitly modifies the values associated with all aliased names, which may not be expected by the programmer. As a result, aliasing makes it particularly difficult to understand, analyze and optimize programs. Alias analysers aim to compute information that helps in understanding aliasing in programs.
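Aliasing between function arguments is a common source of such surprises. In the hypothetical Python function below, writing to `dst` silently changes `src` when both names refer to the same list. Reading all of `src` before writing avoids the problem:

```python
def store_doubled(dst: list[int], src: list[int]) -> None:
    """Overwrite dst with the doubled values of src (unsafe if dst is src)."""
    dst.clear()                      # if dst is src, this also empties src
    dst.extend(2 * x for x in src)


def store_doubled_safe(dst: list[int], src: list[int]) -> None:
    """Same intent, but reads src completely before modifying dst."""
    doubled = [2 * x for x in src]
    dst[:] = doubled


out = []
store_doubled(out, [1, 2, 3])
print(out)           # [2, 4, 6]

data = [1, 2, 3]
store_doubled(data, data)          # aliased arguments
print(data)          # []: clearing dst also emptied src

data = [1, 2, 3]
store_doubled_safe(data, data)
print(data)          # [2, 4, 6]
```

This is also why compilers must be conservative: unless they can prove two references do not alias, they cannot reorder the read of `src` and the write to `dst`.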

https://en.wikipedia.org/wiki/Aliasing_(computing)

In probability theory and statistics, variance is the expectation of the squared deviation of a random variable from its population mean or sample mean. Variance is a measure of dispersion, meaning it is a measure of how far a set of numbers is spread out from their average value. Variance has a central role in statistics, where some ideas that use it include descriptive statistics, statistical inference, hypothesis testing, goodness of fit, and Monte Carlo sampling. Variance is an important tool in the sciences, where statistical analysis of data is common. The variance is the square of the standard deviation, the second central moment of a distribution, and the covariance of the random variable with itself, and it is often represented by σ², s², Var(X), V(X), or 𝕍(X).[1][2]
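A short Python illustration on a small made-up data set. It computes the population variance (divide by n) and the sample variance (divide by n − 1) directly from the squared deviations, compares them with the standard-library `statistics` functions, and checks that variance is the square of the standard deviation:

```python
import statistics

data = [2, 4, 4, 4, 5, 5, 7, 9]
mean = statistics.fmean(data)                      # 5.0

squared_devs = [(x - mean) ** 2 for x in data]
pop_var = sum(squared_devs) / len(data)            # population variance
samp_var = sum(squared_devs) / (len(data) - 1)     # sample variance (Bessel's correction)

print(pop_var, statistics.pvariance(data))
print(samp_var, statistics.variance(data))
print(statistics.pstdev(data) ** 2)                # square of the standard deviation
```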

https://en.wikipedia.org/wiki/Variance

In statistics, deviance is a goodness-of-fit statistic for a statistical model; it is often used for statistical hypothesis testing. It is a generalization of the idea of using the sum of squares of residuals (RSS) in ordinary least squares to cases where model-fitting is achieved by maximum likelihood. It plays an important role in exponential dispersion models and generalized linear models.
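Deviance is twice the difference between the log-likelihood of the saturated model (one that fits every observation exactly) and that of the fitted model. The sketch below assumes independent observations, a normal model with known variance 1, and a Poisson model for counts; the fitted means are made-up numbers. Under those assumptions the normal deviance reduces to the residual sum of squares, which is the sense in which deviance generalizes RSS.

```python
import math


def gaussian_loglik(y: list[float], mu: list[float], sigma2: float = 1.0) -> float:
    """Log-likelihood of independent normal observations with known variance sigma2."""
    return sum(-0.5 * math.log(2 * math.pi * sigma2) - (yi - mi) ** 2 / (2 * sigma2)
               for yi, mi in zip(y, mu))


def poisson_loglik(y: list[float], mu: list[float]) -> float:
    """Log-likelihood of independent Poisson counts y with means mu."""
    total = 0.0
    for yi, mi in zip(y, mu):
        # y * log(mu) is taken as 0 when y == 0, so the saturated mu = 0 is allowed
        total += (yi * math.log(mi) if yi > 0 else 0.0) - mi - math.lgamma(yi + 1)
    return total


def deviance(loglik, y: list[float], mu: list[float]) -> float:
    """D = 2 * (loglik of saturated model (mu = y) - loglik of fitted model)."""
    return 2.0 * (loglik(y, y) - loglik(y, mu))


y = [1.0, 2.0, 4.0]
mu = [1.5, 2.0, 3.0]
rss = sum((yi - mi) ** 2 for yi, mi in zip(y, mu))
print(deviance(gaussian_loglik, y, mu), rss)   # equal up to floating-point rounding
```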

https://en.wikipedia.org/wiki/Deviance_(statistics)

In computer science, an instruction set architecture (ISA), also called computer architecture, is an abstract model of a computer. A device that executes instructions described by that ISA, such as a central processing unit (CPU), is called an implementation.

In general, an ISA defines the supported instructions, data types, registers, the hardware support for managing main memory, fundamental features (such as the memory consistency, addressing modes, virtual memory), and the input/output model of a family of implementations of the ISA.

An ISA specifies the behavior of machine code running on implementations of that ISA in a fashion that does not depend on the characteristics of that implementation, providing binary compatibility between implementations. This enables multiple implementations of an ISA that differ in characteristics such as performance, physical size, and monetary cost (among other things), but that are capable of running the same machine code, so that a lower-performance, lower-cost machine can be replaced with a higher-cost, higher-performance machine without having to replace software. It also enables the evolution of the microarchitectures of the implementations of that ISA, so that a newer, higher-performance implementation of an ISA can run software that runs on previous generations of implementations.

If an operating system maintains a standard and compatible application binary interface (ABI) for a particular ISA, machine code will run on future implementations of that ISA and operating system. However, if an ISA supports running multiple operating systems, it does not guarantee that machine code for one operating system will run on another operating system, unless the first operating system supports running machine code built for the other operating system.

An ISA can be extended by adding instructions or other capabilities, or adding support for larger addresses and data values; an implementation of the extended ISA will still be able to execute machine code for versions of the ISA without those extensions. Machine code using those extensions will only run on implementations that support those extensions.
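To make the separation between an ISA and its implementations concrete, the Python sketch below defines a hypothetical toy stack-machine ISA (not any real architecture). A program is a list of (opcode, operand) pairs. The base ISA has PUSH, ADD and MUL, and SUB is an extension. Two differently built interpreters play the role of implementations: both run base programs identically, while only the extended one accepts SUB.

```python
def run_simple(program):
    """Implementation 1: a straightforward interpreter of the base toy ISA."""
    stack = []
    for op, arg in program:
        if op == "PUSH":
            stack.append(arg)
        elif op == "ADD":
            b, a = stack.pop(), stack.pop()
            stack.append(a + b)
        elif op == "MUL":
            b, a = stack.pop(), stack.pop()
            stack.append(a * b)
        else:
            raise ValueError(f"unsupported instruction {op}")
    return stack[-1]


def run_extended(program):
    """Implementation 2: table-driven, and also supports the SUB extension."""
    binary = {"ADD": lambda a, b: a + b,
              "MUL": lambda a, b: a * b,
              "SUB": lambda a, b: a - b}
    stack = []
    for op, arg in program:
        if op == "PUSH":
            stack.append(arg)
        elif op in binary:
            b, a = stack.pop(), stack.pop()
            stack.append(binary[op](a, b))
        else:
            raise ValueError(f"unsupported instruction {op}")
    return stack[-1]


base_program = [("PUSH", 2), ("PUSH", 3), ("ADD", None), ("PUSH", 4), ("MUL", None)]
ext_program = [("PUSH", 10), ("PUSH", 4), ("SUB", None)]

print(run_simple(base_program), run_extended(base_program))   # 20 20
print(run_extended(ext_program))                               # 6
# run_simple(ext_program) raises ValueError: SUB is not part of the base ISA.
```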

The binary compatibility that they provide makes ISAs one of the most fundamental abstractions in computing.

https://en.wikipedia.org/wiki/Instruction_set_architecture

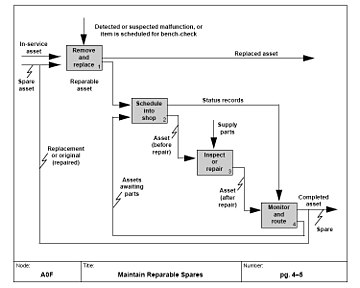

In systems engineering, software engineering, and computer science, a function model or functional model is a structured representation of the functions (activities, actions, processes, operations) within the modeled system or subject area.[1]

A function model, similar to the activity model or process model, is a graphical representation of an enterprise's function within a defined scope. The purposes of the function model are to describe the functions and processes, assist with discovery of information needs, help identify opportunities, and establish a basis for determining product and service costs.[2]

https://en.wikipedia.org/wiki/Function_model

https://en.wikipedia.org/wiki/N2_chart

https://en.wikipedia.org/wiki/Marden%27s_theorem

https://en.wikipedia.org/wiki/vocabulary

https://en.wikipedia.org/wiki/keywords

https://en.wikipedia.org/wiki/academic_discipline

https://en.wikipedia.org/wiki/education

https://en.wikipedia.org/wiki/interdisciplinary_study

https://en.wikipedia.org/wiki/encyclopedia

https://en.wikipedia.org/wiki/dictionary

https://en.wikipedia.org/wiki/input

https://en.wikipedia.org/wiki/output

https://en.wikipedia.org/wiki/hierarchy

https://en.wikipedia.org/wiki/order

https://en.wikipedia.org/wiki/array

https://en.wikipedia.org/wiki/function

https://en.wikipedia.org/wiki/Value

https://en.wikipedia.org/wiki/Set

https://en.wikipedia.org/wiki/Define

https://en.wikipedia.org/wiki/Variable

https://en.wikipedia.org/wiki/computer_science

https://en.wikipedia.org/wiki/model

Vanish At Infinity

vector field

Instrumental and intrinsic value

In moral philosophy, the distinction between instrumental and intrinsic value is the distinction between what is a means to an end and what is an end in itself.[1] Things are deemed to have instrumental value if they help one achieve a particular end; intrinsic values, by contrast, are understood to be desirable in and of themselves. A tool or appliance, such as a hammer or washing machine, has instrumental value because it helps you pound in a nail or clean your clothes. Happiness and pleasure are typically considered to have intrinsic value insofar as asking why someone would want them makes little sense: they are desirable for their own sake irrespective of their possible instrumental value. The classic names instrumental and intrinsic were coined by sociologist Max Weber, who spent years studying the good meanings that people assigned to their actions and beliefs.

The Oxford Handbook of Value Theory provides three modern definitions of intrinsic and instrumental value:

They are "the distinction between what is good 'in itself' and what is good 'as a means'."[1]: 14

"The concept of intrinsic value has been glossed variously as what is valuable for its own sake, in itself, on its own, in its own right, as an end, or as such. By contrast, extrinsic value has been characterized mainly as what is valuable as a means, or for something else's sake."[1]: 29

"Among nonfinal values, instrumental value—intuitively, the value attaching a means to what is finally valuable—stands out as a bona fide example of what is not valuable for its own sake."[1]: 34

When people judge efficient means and legitimate ends at the same time, both can be considered as good. However, when ends are judged separately from means, it may result in a conflict: what works may not be right; what is right may not work. Separating the criteria contaminates reasoning about the good. Philosopher John Dewey argued that separating criteria for good ends from those for good means necessarily contaminates recognition of efficient and legitimate patterns of behavior. Economist J. Fagg Foster explained why only instrumental value is capable of correlating good ends with good means. Philosopher Jacques Ellul argued that instrumental value has become completely contaminated by inhuman technological consequences, and must be subordinated to intrinsic supernatural value. Philosopher Anjan Chakravartty argued that instrumental value is only legitimate when it produces good scientific theories compatible with the intrinsic truth of mind-independent reality.

The word value is ambiguous in that it is both a verb and a noun, as well as denoting both a criterion of judgment itself and the result of applying a criterion.[2][3]: 37–44 To reduce ambiguity, throughout this article the noun value names a criterion of judgment, as opposed to valuation, which is an object that is judged valuable. The plural values identifies collections of valuations, without identifying the criterion applied.

https://en.wikipedia.org/wiki/Instrumental_and_intrinsic_value
