A hydrogen vehicle is a type of alternative fuel vehicle that uses hydrogen fuel for motive power. Hydrogen vehicles include hydrogen-fueled space rockets, as well as automobiles and other transportation vehicles. Power is generated by converting the chemical energy of hydrogen to mechanical energy, either by reacting hydrogen with oxygen in a fuel cell to power electric motors or, less commonly, by burning hydrogen in an internal combustion engine.[2]

As of 2021, there are two models of hydrogen cars publicly available in select markets: the Toyota Mirai (2014–), which is the world's first mass-produced dedicated fuel cell electric vehicle (FCEV), and the Hyundai Nexo (2018–). The Honda Clarity was produced from 2016 to 2021.[3] A few other companies, like BMW, are still exploring hydrogen cars, while Volkswagen has stated that the technology has no future in the automotive space, mainly because a fuel cell electric vehicle consumes about three times more energy than a battery electric car for each mile driven. As of December 2020, there were 31,225 passenger FCEVs powered with hydrogen on the world's roads.[4]

As of 2019, 98% of hydrogen is produced by steam methane reforming, which emits carbon dioxide.[5] It can be produced by thermochemical or pyrolytic means using renewable feedstocks, but the processes are currently expensive.[6] Various technologies are being developed that aim to deliver costs low enough, and quantities great enough, to compete with hydrogen production using natural gas.[7]

The benefits of hydrogen technology are fast refueling time (comparable to gasoline) and long driving range on a single tank. The drawbacks of hydrogen use are high carbon emissions when hydrogen is produced from natural gas, capital cost burden, low energy content per unit volume at ambient conditions, production and compression of hydrogen, the investment required in filling stations to dispense hydrogen, transportation of hydrogen to filling stations, and lack of ability to produce or dispense hydrogen at home.[8][9][10]

https://en.wikipedia.org/wiki/Hydrogen_vehicle

The abundance of the chemical elements is a measure of the occurrence of the chemical elements relative to all other elements in a given environment. Abundance is measured in one of three ways: by the mass-fraction (the same as weight fraction); by the mole-fraction (fraction of atoms by numerical count, or sometimes fraction of molecules in gases); or by the volume-fraction. Volume-fraction is a common abundance measure in mixed gases such as planetary atmospheres, and is similar in value to molecular mole-fraction for gas mixtures at relatively low densities and pressures, and ideal gas mixtures. Most abundance values in this article are given as mass-fractions.

For example, the abundance of oxygen in pure water can be measured in two ways: the mass fraction is about 89%, because that is the fraction of water's mass which is oxygen. However, the mole-fraction is about 33% because only 1 atom of 3 in water, H2O, is oxygen. As another example, looking at the mass-fraction abundance of hydrogen and helium in both the Universe as a whole and in the atmospheres of gas-giant planets such as Jupiter, it is 74% for hydrogen and 23–25% for helium; while the (atomic) mole-fraction for hydrogen is 92%, and for helium is 8%, in these environments. Changing the given environment to Jupiter's outer atmosphere, where hydrogen is diatomic while helium is not, changes the molecular mole-fraction (fraction of total gas molecules), as well as the fraction of atmosphere by volume, of hydrogen to about 86%, and of helium to 13%.[Note 1]
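The difference between mass-fraction and mole-fraction can be checked with a few lines of arithmetic. The following is a minimal sketch, not part of the source article: the atomic masses are rounded standard values supplied as an assumption, the function names are hypothetical, and the hydrogen/helium case ignores the 1–2% of heavier elements.

```python
# Standard atomic masses in g/mol (rounded; assumed values, not from the text).
ATOMIC_MASS = {"H": 1.008, "He": 4.0026, "O": 15.999}


def mass_fractions(atom_counts):
    """Mass fraction of each element, given atom counts per formula unit."""
    masses = {el: n * ATOMIC_MASS[el] for el, n in atom_counts.items()}
    total = sum(masses.values())
    return {el: m / total for el, m in masses.items()}


def mole_fractions(atom_counts):
    """Atomic mole fraction of each element, given atom counts."""
    total = sum(atom_counts.values())
    return {el: n / total for el, n in atom_counts.items()}


def mass_to_mole_fractions(mass_frac):
    """Convert elemental mass fractions to atomic mole fractions."""
    moles = {el: w / ATOMIC_MASS[el] for el, w in mass_frac.items()}
    total = sum(moles.values())
    return {el: n / total for el, n in moles.items()}


# Water, H2O: two hydrogen atoms and one oxygen atom per molecule.
water = {"H": 2, "O": 1}
water_mass = mass_fractions(water)
water_mole = mole_fractions(water)
print(f"O in water: mass {water_mass['O']:.1%}, mole {water_mole['O']:.1%}")

# Hydrogen/helium mix using mass fractions from the text (other elements ignored).
cosmic = mass_to_mole_fractions({"H": 0.74, "He": 0.24})
print(f"H atoms: {cosmic['H']:.0%}, He atoms: {cosmic['He']:.0%}")
```

Because helium atoms are roughly four times heavier than hydrogen atoms, a helium mass-fraction near one quarter corresponds to a much smaller share of the atom count, which is why the two measures diverge so strongly.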

The abundance of chemical elements in the universe is dominated by the large amounts of hydrogen and helium which were produced in the Big Bang. Remaining elements, making up only about 2% of the universe, were largely produced by supernovae and certain red giant stars. Lithium, beryllium and boron are rare because although they are produced by nuclear fusion, they are then destroyed by other reactions in the stars.[1][2] The elements from carbon to iron are relatively more abundant in the universe because of the ease of making them in supernova nucleosynthesis. Elements of higher atomic number than iron (element 26) become progressively rarer in the universe, because they increasingly absorb stellar energy in their production. Also, elements with even atomic numbers are generally more common than their neighbors in the periodic table, due to favorable energetics of formation.

The abundance of elements in the Sun and outer planets is similar to that in the universe. Due to solar heating, the elements of Earth and the inner rocky planets of the Solar System have undergone an additional depletion of volatile hydrogen, helium, neon, nitrogen, and carbon (which volatilizes as methane). The crust, mantle, and core of the Earth show evidence of chemical segregation plus some sequestration by density. Lighter silicates of aluminum are found in the crust, with more magnesium silicate in the mantle, while metallic iron and nickel compose the core. The abundance of elements in specialized environments, such as atmospheres, oceans, or the human body, is primarily a product of chemical interactions with the medium in which they reside.

| Z | Element | Mass fraction (ppm) |
|---|---|---|
| 1 | Hydrogen | 739,000 |
| 2 | Helium | 240,000 |
| 8 | Oxygen | 10,400 |
| 6 | Carbon | 4,600 |
| 10 | Neon | 1,340 |
| 26 | Iron | 1,090 |
| 7 | Nitrogen | 960 |
| 14 | Silicon | 650 |
| 12 | Magnesium | 580 |
| 16 | Sulfur | 440 |
| | Total | 999,500 |

| Nuclide | A | Mass fraction (ppm) | Atom fraction (ppm) |
|---|---|---|---|
| Hydrogen-1 | 1 | 705,700 | 909,964 |
| Helium-4 | 4 | 275,200 | 88,714 |
| Oxygen-16 | 16 | 9,592 | 477 |
| Carbon-12 | 12 | 3,032 | 326 |
| Nitrogen-14 | 14 | 1,105 | 102 |
| Neon-20 | 20 | 1,548 | 100 |
| Other nuclides: | | 3,879 | 149 |
| Silicon-28 | 28 | 653 | 30 |
| Magnesium-24 | 24 | 513 | 28 |
| Iron-56 | 56 | 1,169 | 27 |
| Sulfur-32 | 32 | 396 | 16 |
| Helium-3 | 3 | 35 | 15 |
| Hydrogen-2 | 2 | 23 | 15 |
| Neon-22 | 22 | 208 | 12 |
| Magnesium-26 | 26 | 79 | 4 |
| Carbon-13 | 13 | 37 | 4 |
| Magnesium-25 | 25 | 69 | 4 |
| Aluminium-27 | 27 | 58 | 3 |
| Argon-36 | 36 | 77 | 3 |
| Calcium-40 | 40 | 60 | 2 |
| Sodium-23 | 23 | 33 | 2 |
| Iron-54 | 54 | 72 | 2 |
| Silicon-29 | 29 | 34 | 2 |
| Nickel-58 | 58 | 49 | 1 |
| Silicon-30 | 30 | 23 | 1 |
| Iron-57 | 57 | 28 | 1 |

Earth

The Earth formed from the same cloud of matter that formed the Sun, but the planets acquired different compositions during the formation and evolution of the solar system. In turn, the natural history of the Earth caused parts of this planet to have differing concentrations of the elements.

The mass of the Earth is approximately 5.98×10²⁴ kg. In bulk, by mass, it is composed mostly of iron (32.1%), oxygen (30.1%), silicon (15.1%), magnesium (13.9%), sulfur (2.9%), nickel (1.8%), calcium (1.5%), and aluminium (1.4%); with the remaining 1.2% consisting of trace amounts of other elements.[12]
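As a quick arithmetic check on the figures above, the listed percentages can be summed to confirm the stated remainder and converted to absolute masses. This is a small sketch using only the numbers quoted in the paragraph; the variable names are illustrative.

```python
EARTH_MASS_KG = 5.98e24  # figure quoted in the text

# Bulk composition by mass, in percent, as listed in the text.
composition = {
    "iron": 32.1, "oxygen": 30.1, "silicon": 15.1, "magnesium": 13.9,
    "sulfur": 2.9, "nickel": 1.8, "calcium": 1.5, "aluminium": 1.4,
}

# Share left over for trace elements once the eight listed ones are summed.
listed_total = sum(composition.values())
remainder = 100.0 - listed_total

# Absolute mass of each listed element in kilograms.
element_mass_kg = {
    el: EARTH_MASS_KG * pct / 100.0 for el, pct in composition.items()
}

print(f"listed: {listed_total:.1f}%, remainder: {remainder:.1f}%")
print(f"iron: {element_mass_kg['iron']:.3e} kg")
```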

The bulk composition of the Earth by elemental-mass is roughly similar to the gross composition of the solar system, with the major differences being that Earth is missing a great deal of the volatile elements hydrogen, helium, neon, and nitrogen, as well as carbon which has been lost as volatile hydrocarbons. The remaining elemental composition is roughly typical of the "rocky" inner planets, which formed in the thermal zone where solar heat drove volatile compounds into space. The Earth retains oxygen as the second-largest component of its mass (and largest atomic-fraction), mainly from this element being retained in silicate minerals which have a very high melting point and low vapor pressure.

Crust

The mass-abundance of the nine most abundant elements in the Earth's crust is approximately: oxygen 46%, silicon 28%, aluminum 8.3%, iron 5.6%, calcium 4.2%, sodium 2.5%, magnesium 2.4%, potassium 2.0%, and titanium 0.61%. Other elements occur at less than 0.15%. For a complete list, see abundance of elements in Earth's crust.

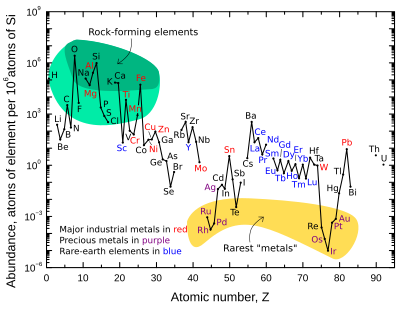

The graph at right illustrates the relative atomic-abundance of the chemical elements in Earth's upper continental crust—the part that is relatively accessible for measurements and estimation.

Many of the elements shown in the graph are classified into (partially overlapping) categories:

- rock-forming elements (major elements in green field, and minor elements in light green field);

- rare earth elements (lanthanides, La-Lu, Sc and Y; labeled in blue);

- major industrial metals (global production >~3×10⁷ kg/year; labeled in red);

- precious metals (labeled in purple);

- the nine rarest "metals" – the six platinum group elements plus Au, Re, and Te (a metalloid) – in the yellow field. These are rare in the crust from being soluble in iron and thus concentrated in the Earth's core. Tellurium is the single most depleted element in the silicate Earth relative to cosmic abundance, because in addition to being concentrated as dense chalcogenides in the core it was severely depleted by preaccretional sorting in the nebula as volatile hydrogen telluride.[14]

Note that there are two breaks where the unstable (radioactive) elements technetium (atomic number 43) and promethium (atomic number 61) would be. These elements are surrounded by stable elements, yet both have relatively short half-lives (~4 million years and ~18 years respectively). They are thus extremely rare, since any primordial fractions of them in pre-Solar System materials have long since decayed. These two elements are now only produced naturally through the spontaneous fission of very heavy radioactive elements (for example, uranium, thorium, or the trace amounts of plutonium that exist in uranium ores), or by the interaction of certain other elements with cosmic rays. Both technetium and promethium have been identified spectroscopically in the atmospheres of stars, where they are produced by ongoing nucleosynthetic processes.

There are also breaks in the abundance graph where the six noble gases would be, since they are not chemically bound in the Earth's crust, and they are only generated in the crust by decay chains from radioactive elements, and are therefore extremely rare there.

The eight naturally occurring very rare, highly radioactive elements (polonium, astatine, francium, radium, actinium, protactinium, neptunium, and plutonium) are not included, since any of these elements that were present at the formation of the Earth have decayed away eons ago, and their quantity today is negligible and is only produced from the radioactive decay of uranium and thorium.

Oxygen and silicon are notably the most common elements in the crust. On Earth and in rocky planets in general, silicon and oxygen are far more common than their cosmic abundance. The reason is that they combine with each other to form silicate minerals.[14] Other cosmically-common elements such as hydrogen, carbon and nitrogen form volatile compounds such as ammonia and methane that easily boil away into space from the heat of planetary formation and/or the Sun's light.

Rare-earth elements

"Rare" earth elements is a historical misnomer. The persistence of the term reflects unfamiliarity rather than true rarity. The more abundant rare earth elements are similarly concentrated in the crust compared to commonplace industrial metals such as chromium, nickel, copper, zinc, molybdenum, tin, tungsten, or lead. The two least abundant rare earth elements (thulium and lutetium) are nearly 200 times more common than gold. However, in contrast to the ordinary base and precious metals, rare earth elements have very little tendency to become concentrated in exploitable ore deposits. Consequently, most of the world's supply of rare earth elements comes from only a handful of sources. Furthermore, the rare earth metals are all quite chemically similar to each other, and they are thus quite difficult to separate into quantities of the pure elements.

Differences in abundances of individual rare earth elements in the upper continental crust of the Earth represent the superposition of two effects, one nuclear and one geochemical. First, the rare earth elements with even atomic numbers (₅₈Ce, ₆₀Nd, ...) have greater cosmic and terrestrial abundances than the adjacent rare earth elements with odd atomic numbers (₅₇La, ₅₉Pr, ...). Second, the lighter rare earth elements are more incompatible (because they have larger ionic radii) and therefore more strongly concentrated in the continental crust than the heavier rare earth elements. In most rare earth ore deposits, the first four rare earth elements – lanthanum, cerium, praseodymium, and neodymium – constitute 80% to 99% of the total amount of rare earth metal that can be found in the ore.

Mantle

The mass-abundance of the eight most abundant elements in the Earth's mantle (see main article above) is approximately: oxygen 45%, magnesium 23%, silicon 22%, iron 5.8%, calcium 2.3%, aluminum 2.2%, sodium 0.3%, potassium 0.3%.[citation needed]

Core

Due to mass segregation, the core of the Earth is believed to be primarily composed of iron (88.8%), with smaller amounts of nickel (5.8%), sulfur (4.5%), and less than 1% trace elements.[12]

Ocean

The most abundant elements in the ocean by proportion of mass in percent are oxygen (85.84%), hydrogen (10.82%), chlorine (1.94%), sodium (1.08%), magnesium (0.13%), sulfur (0.09%), calcium (0.04%), potassium (0.04%), bromine (0.007%), carbon (0.003%), and boron (0.0004%).

Atmosphere

The order of elements by volume-fraction (which is approximately molecular mole-fraction) in the atmosphere is nitrogen (78.1%), oxygen (20.9%),[15] argon (0.96%), followed by (in uncertain order) carbon and hydrogen because water vapor and carbon dioxide, which represent most of these two elements in the air, are variable components. Sulfur, phosphorus, and all other elements are present in significantly lower proportions.

According to the abundance curve graph (above right), argon, a significant if not major component of the atmosphere, does not appear in the crust at all. This is because the atmosphere has a far smaller mass than the crust, so argon remaining in the crust contributes little to mass-fraction there, while at the same time buildup of argon in the atmosphere has become large enough to be significant.

Urban soils

For a complete list of the abundance of elements in urban soils, see Abundances of the elements (data page)#Urban soils.

https://en.wikipedia.org/wiki/Abundance_of_the_chemical_elements

Earth is the third planet from the Sun and the only astronomical object known to harbour and support life. About 29.2% of Earth's surface is land consisting of continents and islands. The remaining 70.8% is covered with water, mostly by oceans, seas, gulfs, and other salt-water bodies, but also by lakes, rivers, and other freshwater, which together constitute the hydrosphere. Much of Earth's polar regions are covered in ice. Earth's outer layer is divided into several rigid tectonic plates that migrate across the surface over many millions of years, while its interior remains active with a solid iron inner core, a liquid outer core that generates Earth's magnetic field, and a convective mantle that drives plate tectonics.

Earth's atmosphere consists mostly of nitrogen and oxygen. More solar energy is received by tropical regions than polar regions and is redistributed by atmospheric and ocean circulation. Greenhouse gases also play an important role in regulating the surface temperature. A region's climate is not only determined by latitude, but also by elevation and proximity to moderating oceans, among other factors. Severe weather, such as tropical cyclones, thunderstorms, and heatwaves, occurs in most areas and greatly impacts life.

Earth's gravity interacts with other objects in space, especially the Moon, which is Earth's only natural satellite. Earth orbits around the Sun in about 365.25 days. Earth's axis of rotation is tilted with respect to its orbital plane, producing seasons on Earth. The gravitational interaction between Earth and the Moon causes tides, stabilizes Earth's orientation on its axis, and gradually slows its rotation. Earth is the densest planet in the Solar System and the largest and most massive of the four rocky planets.

According to radiometric dating estimation and other evidence, Earth formed over 4.5 billion years ago. Within the first billion years of Earth's history, life appeared in the oceans and began to affect Earth's atmosphere and surface, leading to the proliferation of anaerobic and, later, aerobic organisms. Some geological evidence indicates that life may have arisen as early as 4.1 billion years ago. Since then, the combination of Earth's distance from the Sun, physical properties, and geological history have allowed life to evolve and thrive. In the history of life on Earth, biodiversity has gone through long periods of expansion, occasionally punctuated by mass extinctions. More than 99% of all species that ever lived on Earth are extinct. Almost 8 billion humans live on Earth and depend on its biosphere and natural resources for their survival. Humans increasingly impact Earth's surface, hydrology, atmospheric processes, and other life.

The Blue Marble, the most widely used photograph of Earth,[1][2] taken by the Apollo 17 mission in 1972

| Property | Value |
|---|---|
| Designations | |
| Alternative names | Gaia, Terra, Tellus, the world, the globe |
| Adjectives | Earthly, terrestrial, terran, tellurian |
| Orbital characteristics | |
| Epoch | J2000[n 1] |
| Aphelion | 152100000 km (94500000 mi)[n 2] |
| Perihelion | 147095000 km (91401000 mi)[n 2] |
| Semi-major axis | 149598023 km (92955902 mi)[3] |
| Eccentricity | 0.0167086[3] |
| Orbital period | 365.256363004 d[4] (31558.1497635 ks) |
| Average orbital speed | 29.78 km/s[5] (107200 km/h; 66600 mph) |
| Mean anomaly | 358.617° |
| Longitude of ascending node | −11.26064°[5] to J2000 ecliptic |
| Time of perihelion | 2022-Jan-04[7] |
| Argument of perihelion | 114.20783°[5] |
| Physical characteristics | |
| Mean radius | 6371.0 km (3958.8 mi)[9] |
| Equatorial radius | 6378.137 km (3963.191 mi)[10][11] |
| Polar radius | 6356.752 km (3949.903 mi)[12] |
| Flattening | 1/298.257222101 (ETRS89)[13] |
| Volume | 1.08321×10¹² km³ (2.59876×10¹¹ cu mi)[5] |
| Mass | 5.97237×10²⁴ kg (1.31668×10²⁵ lb)[16] (3.0×10⁻⁶ M☉) |
| Mean density | 5.514 g/cm³ (0.1992 lb/cu in)[5] |
| Surface gravity | 9.80665 m/s² (1 g; 32.1740 ft/s²)[17] |
| Moment of inertia factor | 0.3307[18] |
| Escape velocity | 11.186 km/s[5] (40270 km/h; 25020 mph) |
| Equatorial rotation velocity | 0.4651 km/s[20] (1674.4 km/h; 1040.4 mph) |
| Axial tilt | 23.4392811°[4] |
| Atmosphere | |
| Surface pressure | 101.325 kPa (at MSL) |
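Several of the quantities listed above are linked by simple formulas, so they can be cross-checked against each other. The sketch below is illustrative only. It assumes the 149598023 km figure is the semi-major axis and 365.256363004 d is the orbital period, treats the orbit as circular, and uses a value of the gravitational constant G that is not given in the source.

```python
import math

G = 6.6743e-11  # gravitational constant, m^3 kg^-1 s^-2 (assumed, not in the text)

# Values taken from the table above.
SEMI_MAJOR_AXIS_KM = 149_598_023
ORBITAL_PERIOD_DAYS = 365.256363004
MASS_KG = 5.97237e24
VOLUME_KM3 = 1.08321e12
MEAN_RADIUS_KM = 6371.0

# Mean orbital speed, approximating the orbit as a circle of radius a.
orbital_speed_km_s = (
    2 * math.pi * SEMI_MAJOR_AXIS_KM / (ORBITAL_PERIOD_DAYS * 86_400)
)

# Mean density in g/cm^3: 1 kg = 1e3 g and 1 km^3 = 1e15 cm^3.
density_g_cm3 = (MASS_KG * 1e3) / (VOLUME_KM3 * 1e15)

# Escape velocity at the mean radius: v = sqrt(2GM/R), converted to km/s.
escape_velocity_km_s = (
    math.sqrt(2 * G * MASS_KG / (MEAN_RADIUS_KM * 1e3)) / 1e3
)

print(f"orbital speed   ~ {orbital_speed_km_s:.2f} km/s")
print(f"mean density    ~ {density_g_cm3:.3f} g/cm^3")
print(f"escape velocity ~ {escape_velocity_km_s:.3f} km/s")
```

Under these assumptions the derived values land close to the listed 29.78 km/s, 5.514 g/cm³ and 11.186 km/s, which suggests the tabulated figures are mutually consistent.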

https://en.wikipedia.org/wiki/Earth

https://en.wikipedia.org/wiki/Category:Astrochemistry

In chemistry and physics, a nucleon is either a proton or a neutron, considered in its role as a component of an atomic nucleus. The number of nucleons in a nucleus defines an isotope's mass number (nucleon number).

Until the 1960s, nucleons were thought to be elementary particles, not made up of smaller parts. Now they are known to be composite particles, made of three quarks bound together by the strong interaction. The interaction between two or more nucleons is called internucleon interaction or nuclear force, which is also ultimately caused by the strong interaction. (Before the discovery of quarks, the term "strong interaction" referred to just internucleon interactions.)

Nucleons sit at the boundary where particle physics and nuclear physics overlap. Particle physics, particularly quantum chromodynamics, provides the fundamental equations that describe the properties of quarks and of the strong interaction. These equations describe quantitatively how quarks can bind together into protons and neutrons (and all the other hadrons). However, when multiple nucleons are assembled into an atomic nucleus (nuclide), these fundamental equations become too difficult to solve directly (see lattice QCD). Instead, nuclides are studied within nuclear physics, which studies nucleons and their interactions by approximations and models, such as the nuclear shell model. These models can successfully describe nuclide properties, as for example, whether or not a particular nuclide undergoes radioactive decay.

The proton and neutron are at once fermions, hadrons and baryons. The proton carries a positive net charge, and the neutron carries a zero net charge; the proton's mass is only about 0.13% less than the neutron's. Thus, they can be viewed as two states of the same nucleon, and together form an isospin doublet (I = 1/2). In isospin space, neutrons can be transformed into protons and conversely by SU(2) symmetries. These nucleons are acted upon equally by the strong interaction, which is invariant under rotation in isospin space. According to the Noether theorem, isospin is conserved with respect to the strong interaction.[1]: 129–130

https://en.wikipedia.org/wiki/Nucleon

The Ford Nucleon is a concept car developed by Ford in 1957, designed as a future nuclear-powered car—one of a handful of such designs during the 1950s and '60s. The concept was only demonstrated as a scale model. The design did not include an internal-combustion engine; rather, the vehicle was to be powered by a small nuclear reactor in the rear of the vehicle, based on the assumption that this would one day be possible by reducing sizes. The car was to use a steam engine powered by uranium fission, similar to those found in nuclear submarines.[1]

The mock-up of the car can be viewed at the Henry Ford Museum in Dearborn, Michigan.[2]

https://en.wikipedia.org/wiki/Ford_Nucleon

Nuclear fission is a reaction in which the nucleus of an atom splits into two or more smaller nuclei. The fission process often produces gamma photons, and releases a very large amount of energy even by the energetic standards of radioactive decay.

Nuclear fission of heavy elements was discovered on 17 December 1938, by German chemist Otto Hahn and his assistant Fritz Strassmann in cooperation with Austrian-Swedish physicist Lise Meitner. Hahn understood that a "burst" of the atomic nuclei had occurred.[1][2] Meitner explained it theoretically in January 1939 along with her nephew Otto Robert Frisch. Frisch named the process by analogy with biological fission of living cells. For heavy nuclides, it is an exothermic reaction which can release large amounts of energy both as electromagnetic radiation and as kinetic energy of the fragments (heating the bulk material where fission takes place). Like nuclear fusion, in order for fission to produce energy, the total binding energy of the resulting elements must be greater than that of the starting element.

Fission is a form of nuclear transmutation because the resulting fragments (or daughter atoms) are not the same element as the original parent atom. The two (or more) nuclei produced are most often of comparable but slightly different sizes, typically with a mass ratio of products of about 3 to 2, for common fissile isotopes.[3][4] Most fissions are binary fissions (producing two charged fragments), but occasionally (2 to 4 times per 1000 events), three positively charged fragments are produced, in a ternary fission. The smallest of these fragments in ternary processes ranges in size from a proton to an argon nucleus.

Apart from fission induced by a neutron, harnessed and exploited by humans, a natural form of spontaneous radioactive decay (not requiring a neutron) is also referred to as fission, and occurs especially in very high-mass-number isotopes. Spontaneous fission was discovered in 1940 by Flyorov, Petrzhak, and Kurchatov[5] in Moscow, in an experiment intended to confirm that, without bombardment by neutrons, the fission rate of uranium was negligible, as predicted by Niels Bohr; it was not negligible.[5]

The unpredictable composition of the products (which vary in a broad probabilistic and somewhat chaotic manner) distinguishes fission from purely quantum tunneling processes such as proton emission, alpha decay, and cluster decay, which give the same products each time. Nuclear fission produces energy for nuclear power and drives the explosion of nuclear weapons. Both uses are possible because certain substances called nuclear fuels undergo fission when struck by fission neutrons, and in turn emit neutrons when they break apart. This makes a self-sustaining nuclear chain reaction possible, releasing energy at a controlled rate in a nuclear reactor or at a very rapid, uncontrolled rate in a nuclear weapon.

The amount of free energy contained in nuclear fuel is millions of times the amount of free energy contained in a similar mass of chemical fuel such as gasoline, making nuclear fission a very dense source of energy. The products of nuclear fission, however, are on average far more radioactive than the heavy elements which are normally fissioned as fuel, and remain so for significant amounts of time, giving rise to a nuclear waste problem. Concerns over nuclear waste accumulation and the destructive potential of nuclear weapons are a counterbalance to the peaceful desire to use fission as an energy source.
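The "millions of times" comparison can be reproduced with an order-of-magnitude estimate. The inputs below are assumptions for illustration and do not come from the source: roughly 200 MeV released per uranium-235 fission, complete fission of every atom in the sample, and about 46 MJ/kg for gasoline combustion.

```python
AVOGADRO = 6.02214076e23       # atoms per mole
EV_TO_JOULE = 1.602176634e-19  # joules per electronvolt

# Assumed illustrative inputs (not from the text):
ENERGY_PER_FISSION_MEV = 200.0  # typical energy release per U-235 fission
U235_MOLAR_MASS_KG = 0.235      # kilograms per mole of U-235
GASOLINE_J_PER_KG = 4.6e7       # approximate heat of combustion of gasoline

# Energy released if every atom in one kilogram of U-235 fissions.
atoms_per_kg = AVOGADRO / U235_MOLAR_MASS_KG
fission_j_per_kg = atoms_per_kg * ENERGY_PER_FISSION_MEV * 1e6 * EV_TO_JOULE

# How many times more energy per kilogram than burning gasoline.
ratio = fission_j_per_kg / GASOLINE_J_PER_KG

print(f"fission: {fission_j_per_kg:.2e} J/kg, ratio to gasoline: {ratio:.1e}")
```

Real reactors fission only a fraction of their fuel, so the practical ratio is lower than this idealized estimate, but it remains far above anything achievable with chemical fuels.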

https://en.wikipedia.org/wiki/Nuclear_fission

Population density (in agriculture: standing stock or plant density) is a measurement of population per unit area, or exceptionally unit volume; it is a quantity of type number density. It is frequently applied to living organisms, most of the time to humans. It is a key geographical term.[1] In simple terms, population density refers to the number of people living in an area per square kilometre.

https://en.wikipedia.org/wiki/Population_density

Reference. Sample Group Subjects (2020), on a global population scale with mass unfettered self-procreation and trafficking of birthright (exponential with technology and non-living data retention/recordkeeping) post intellectual property theft by USA/NAC (1900s et bef.). Cannot afford to build and permiss operation of nuclear powered jeep/nucleon/etc. by S.Wade, S.Petersen, Amcan, USA, NAC, etc.; for they cannot afford the price to buy. World population density evidences their support base/foundation and accomplice breadth (connections in crime). The builders, birthright and goths (viv) cannot supply to furnish demand of inferior genetic word or world of forced procreation-dissemination-indoctrination/shell swap/BTR/amnestic drug CTR meth/conspiracy/clandestine crime ops/trafficking/peonage/slavery/theft of gene-child-sequence-component-etc./destructive use by earth/theft of IP/theft/stolen child/trafficker family/etc., etc.. Until world population less than 1000/etc. with constraint/etc. without slavery/pupetting/crime/interact/etc. per race group five, cannot sustain advanced technology at CL1 due abuse propensity by inferior genetic line/group/etc. to fac certainty of outcome violant.

Extinction vortices are a class of models through which conservation biologists, geneticists and ecologists can understand the dynamics of extinctions and categorize them by cause. The model describes the events that make small populations increasingly vulnerable as they spiral toward extinction. It was developed by M. E. Gilpin and M. E. Soulé in 1986, and there are currently four classes of extinction vortices.[1] The first two (R and D) deal with environmental factors that act at the ecosystem or community level, such as disturbance, pollution and habitat loss, whereas the second two (F and A) deal with genetic factors such as inbreeding depression, outbreeding depression and genetic drift.

https://en.wikipedia.org/wiki/Extinction_vortex

In biology, outbreeding depression occurs when crosses between two genetically distant groups or populations result in a reduction of fitness.[1] The concept is in contrast to inbreeding depression, although the two effects can occur simultaneously.[2] Outbreeding depression is a risk that sometimes limits the potential for genetic rescue or augmentations. It is therefore important to consider the potential for outbreeding depression when crossing populations of a fragmented species.[1] It is considered a postzygotic response because outbreeding depression is usually observed in the performance of the progeny.[3] Some common cases of outbreeding depression have arisen from crosses between different species or populations that exhibit fixed chromosomal differences.[1]

Outbreeding depression manifests in two ways:

- Generating intermediate genotypes that are less fit than either parental form. For example, selection in one population might favor a large body size, whereas in another population small body size might be more advantageous, while individuals with intermediate body sizes are comparatively disadvantaged in both populations. As another example, in the Tatra Mountains, the introduction of ibex from the Middle East resulted in hybrids which produced calves at the coldest time of the year.[4]

- Breakdown of biochemical or physiological compatibility. Within isolated breeding populations, alleles are selected in the context of the local genetic background. Because the same alleles may have rather different effects in different genetic backgrounds, this can result in different locally coadapted gene complexes. Outcrossing between individuals with differently adapted gene complexes can result in disruption of this selective advantage, resulting in a loss of fitness.

Epistasis is a phenomenon in genetics in which the effect of a gene mutation is dependent on the presence or absence of mutations in one or more other genes, respectively termed modifier genes. In other words, the effect of the mutation is dependent on the genetic background in which it appears.[2] Epistatic mutations therefore have different effects on their own than when they occur together. Originally, the term epistasis specifically meant that the effect of a gene variant is masked by that of a different gene.[3]

The concept of epistasis originated in genetics in 1907[4] but is now used in biochemistry, computational biology and evolutionary biology. It arises due to interactions, either between genes (such as mutations also being needed in regulators of gene expression) or within them (multiple mutations being needed before the gene loses function), leading to non-linear effects. Epistasis has a great influence on the shape of evolutionary landscapes, which leads to profound consequences for evolution and for the evolvability of phenotypic traits.

Double mutant cycles

When assaying epistasis within a gene, site-directed mutagenesis can be used to generate the different genes, and their protein products can be assayed (e.g. for stability or catalytic activity). This is sometimes called a double mutant cycle and involves producing and assaying the wild type protein, the two single mutants and the double mutant. Epistasis is measured as the difference between the effects of the mutations together versus the sum of their individual effects.[52] This can be expressed as a free energy of interaction. The same methodology can be used to investigate the interactions between larger sets of mutations but all combinations have to be produced and assayed. For example, there are 120 different combinations of 5 mutations, some or all of which may show epistasis...
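The double mutant cycle described above can be sketched as a short calculation. The function name and the stability values are hypothetical and chosen only for illustration; the interaction energy is taken as the double mutant's effect minus the sum of the single-mutant effects, each measured relative to the wild type.

```python
def interaction_energy(dg_wt, dg_a, dg_b, dg_ab):
    """Epistasis as an interaction free energy from a double mutant cycle.

    Compares the double mutant's effect with the sum of the single-mutant
    effects, all measured relative to the wild type.
    """
    effect_a = dg_a - dg_wt
    effect_b = dg_b - dg_wt
    effect_ab = dg_ab - dg_wt
    return effect_ab - (effect_a + effect_b)


# Hypothetical folding stabilities in kcal/mol (made-up numbers).
# Case 1: the double mutant loses exactly the sum of the single losses.
additive = interaction_energy(dg_wt=-8.0, dg_a=-7.0, dg_b=-6.5, dg_ab=-5.5)

# Case 2: the double mutant is more stable than additivity predicts.
epistatic = interaction_energy(dg_wt=-8.0, dg_a=-7.0, dg_b=-6.5, dg_ab=-7.5)

print(f"additive case:  {additive:+.1f} kcal/mol")
print(f"epistatic case: {epistatic:+.1f} kcal/mol")
```

An interaction energy of zero means the two mutations act independently; any nonzero value indicates epistasis between them.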

https://en.wikipedia.org/wiki/Epistasis

https://en.wikipedia.org/w/index.php?title=Genetic_suppression&redirect=no

https://en.wikipedia.org/genetic_suppression

An F1 hybrid (also known as filial 1 hybrid) is the first filial generation of offspring of distinctly different parental types.[1] F1 hybrids are used in genetics, and in selective breeding, where the term F1 crossbreed may be used. The term also is sometimes written with a subscript, as F₁ hybrid.[2][3] Subsequent generations are called F2, F3, etc.

The offspring of distinctly different parental types produce a new, uniform phenotype[citation needed] with a combination of characteristics from the parents. In fish breeding, those parents frequently are two closely related fish species, while in plant and animal breeding, the parents often are two inbred lines.

Gregor Mendel focused on patterns of inheritance and the genetic basis for variation. In his cross-pollination experiments involving two true-breeding, or homozygous, parents, Mendel found that the resulting F1 generation was heterozygous and consistent. The offspring showed a combination of the phenotypes from each parent that were genetically dominant. Mendel's discoveries involving the F1 and F2 generations laid the foundation for modern genetics.

https://en.wikipedia.org/wiki/F1_hybrid

A population bottleneck or genetic bottleneck is a sharp reduction in the size of a population due to environmental events such as famines, earthquakes, floods, fires, disease, and droughts or human activities such as specicide, widespread violence or intentional culling, and human population planning. Such events can reduce the variation in the gene pool of a population; thereafter, a smaller population, with a smaller genetic diversity, remains to pass on genes to future generations of offspring through sexual reproduction. Genetic diversity remains lower, increasing only when gene flow from another population occurs or very slowly increasing with time as random mutations occur.[1][self-published source] This results in a reduction in the robustness of the population and in its ability to adapt to and survive selecting environmental changes, such as climate change or a shift in available resources.[2] Alternatively, if survivors of the bottleneck are the individuals with the greatest genetic fitness, the frequency of the fitter genes within the gene pool is increased, while the pool itself is reduced.

The genetic drift caused by a population bottleneck can change the proportional random distribution of alleles and even lead to loss of alleles. The chances of inbreeding and genetic homogeneity can increase, possibly leading to inbreeding depression. Smaller population size can also cause deleterious mutations to accumulate.[3]

Population bottlenecks play an important role in conservation biology (see minimum viable population size) and in the context of agriculture (biological and pest control).[4]

Scientists have witnessed population bottlenecks in American bison, greater prairie chickens, northern elephant seals, golden hamsters, and cheetahs. The New Zealand black robins experienced a bottleneck of five individuals, all descendants of a single female. Geneticists have found evidence for past bottlenecks in pandas, golden snub-nosed monkeys, and humans.

https://en.wikipedia.org/wiki/Population_bottleneck

Genetic drift (allelic drift or the Sewall Wright effect)[1] is the change in the frequency of an existing gene variant (allele) in a population due to random sampling of organisms.[2] The alleles in the offspring are a sample of those in the parents, and chance has a role in determining whether a given individual survives and reproduces. A population's allele frequency is the fraction of the copies of one gene that share a particular form.[3]

Genetic drift may cause gene variants to disappear completely and thereby reduce genetic variation.[4] It can also cause initially rare alleles to become much more frequent and even fixed.
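
The random-sampling process described above can be illustrated with a minimal simulation. This sketch assumes a Wright–Fisher-style model (a fixed number of gene copies, non-overlapping generations, no selection or mutation), which the text does not name; the function name `wright_fisher` and its parameters are hypothetical.

```python
import random


def wright_fisher(n_copies, initial_count, generations, seed=0):
    """Simulate drift of one allele; return its frequency in each generation.

    n_copies      -- total gene copies in the population (assumed constant)
    initial_count -- copies of the allele in generation 0
    """
    rng = random.Random(seed)          # seeded so runs are reproducible
    count = initial_count
    freqs = [count / n_copies]
    for _ in range(generations):
        p = count / n_copies
        # Each copy in the next generation is drawn at random from the parents.
        count = sum(1 for _ in range(n_copies) if rng.random() < p)
        freqs.append(count / n_copies)
    return freqs


trajectory = wright_fisher(n_copies=20, initial_count=10, generations=50, seed=1)
print(trajectory[-1])
```

Once the frequency reaches 0 (loss) or 1 (fixation) it stays there, because no copies of the other variant remain to be sampled.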

When there are few copies of an allele, the effect of genetic drift is larger, and when there are many copies the effect is smaller. In the middle of the 20th century, vigorous debates occurred over the relative importance of natural selection versus neutral processes, including genetic drift. Ronald Fisher, who explained natural selection using Mendelian genetics,[5] held the view that genetic drift plays at the most a minor role in evolution, and this remained the dominant view for several decades. In 1968, population geneticist Motoo Kimura rekindled the debate with his neutral theory of molecular evolution, which claims that most instances where a genetic change spreads across a population (although not necessarily changes in phenotypes) are caused by genetic drift acting on neutral mutations.[6][7]

https://en.wikipedia.org/wiki/Genetic_drift

Mutational meltdown (not to be confused with the concept of an error catastrophe[1]) is the accumulation of harmful mutations in a small population, which leads to loss of fitness and decline of the population size, which may lead to further accumulation of deleterious mutations due to fixation by genetic drift.

https://en.wikipedia.org/wiki/Mutational_meltdown

In evolutionary biology and population genetics, the error threshold (or critical mutation rate) is a limit on the number of base pairs a self-replicating molecule may have before mutation will destroy the information in subsequent generations of the molecule. The error threshold is crucial to understanding "Eigen's paradox".

https://en.wikipedia.org/wiki/Error_threshold_(evolution)

Error catastrophe is the extinction of an organism (often in the context of microorganisms such as viruses) as a result of excessive mutations. Error catastrophe is something predicted in mathematical models and has also been observed empirically.[1]

https://en.wikipedia.org/wiki/Error_catastrophe

Synthetic lethality arises when a combination of deficiencies in the expression of two or more genes leads to cell death, whereas a deficiency in only one of these genes does not. The deficiencies can arise through mutations, epigenetic alterations or inhibitors of one of the genes. In a synthetic lethal genetic screen, it is necessary to begin with a mutation that does not kill the cell, although may confer a phenotype (for example, slow growth), and then systematically test other mutations at additional loci to determine which confer lethality. Synthetic lethality has utility for purposes of molecular targeted cancer therapy, with the first example of a molecular targeted therapeutic exploiting a synthetic lethal exposed by an inactivated tumor suppressor gene (BRCA1 and 2) receiving FDA approval in 2016 (PARP inhibitor).[1] A sub-case of synthetic lethality, where vulnerabilities are exposed by the deletion of passenger genes rather than tumor suppressor is the so-called "collateral lethality".[2]

Synthetic lethality is a consequence of the tendency of organisms to maintain buffering schemes that allow phenotypic stability despite genetic variation, environmental changes and random events such as mutations. This genetic robustness is the result of parallel redundant pathways and "capacitor" proteins that camouflage the effects of mutations so that important cellular processes do not depend on any individual component.[6] Synthetic lethality can help identify these buffering relationships, and what type of disease or malfunction that may occur when these relationships break down, through the identification of gene interactions that function in either the same biochemical process or pathways that appear to be unrelated.[7]

DNA mismatch repair deficiency

Mutations in genes employed in DNA mismatch repair (MMR) cause a high mutation rate.[10][11] In tumors, such frequent subsequent mutations often generate "non-self" immunogenic antigens. A human Phase II clinical trial, with 41 patients, evaluated one synthetic lethal approach for tumors with or without MMR defects.[12] In the case of sporadic tumors evaluated, the majority would be deficient in MMR due to epigenetic repression of an MMR gene (see DNA mismatch repair). The product of gene PD-1 ordinarily represses cytotoxic immune responses. Inhibition of this gene allows a greater immune response. In this trial, when cancer patients with a defect in MMR in their tumors were exposed to an inhibitor of PD-1, 67% to 78% of patients experienced immune-related progression-free survival. In contrast, for patients without defective MMR, addition of PD-1 inhibitor generated only 11% of patients with immune-related progression-free survival. Thus inhibition of PD-1 is primarily synthetically lethal with MMR defects.

https://en.wikipedia.org/wiki/Synthetic_lethality

The ATP-binding cassette transporters (ABC transporters) are a transport system superfamily that is one of the largest and possibly one of the oldest gene families. It is represented in all extant phyla, from prokaryotes to humans.[1][2][3]

https://en.wikipedia.org/wiki/ATP-binding_cassette_transporter

Evolutionary capacitance is the storage and release of variation, just as electric capacitors store and release charge. Living systems are robust to mutations.

https://en.wikipedia.org/wiki/Evolutionary_capacitance

Habitat destruction (also termed habitat loss and habitat reduction) is the process by which a natural habitat becomes incapable of supporting its native species. The organisms that previously inhabited the site are displaced or dead, thereby reducing biodiversity and species abundance.[1][2] Habitat destruction is the leading cause of biodiversity loss.[3]

https://en.wikipedia.org/wiki/Habitat_destruction

A Roman lead pipe inscription is a Latin inscription on a Roman water pipe made of lead which provides brief information on its manufacturer and owner, often the reigning emperor himself as the supreme authority. The identification marks were created by full text stamps.[2]

https://en.wikipedia.org/wiki/Roman_lead_pipe_inscription

An ice age is a long period of reduction in the temperature of Earth's surface and atmosphere, resulting in the presence or expansion of continental and polar ice sheets and alpine glaciers. Earth's climate alternates between ice ages and greenhouse periods, during which there are no glaciers on the planet. Earth is currently in the Quaternary glaciation.[1] Individual pulses of cold climate within an ice age are termed glacial periods (or, alternatively, glacials, glaciations, glacial stages, stadials, stades, or colloquially, ice ages), and intermittent warm periods within an ice age are called interglacials or interstadials.[2]

In glaciology, ice age implies the presence of extensive ice sheets in both northern and southern hemispheres.[3] By this definition, Earth is currently in an interglacial period—the Holocene. The amount of anthropogenic greenhouse gases emitted into Earth's oceans and atmosphere is predicted to prevent the next glacial period for the next 500,000 years, which otherwise would begin in around 50,000 years, and likely more glacial cycles after.[4][5][6]

https://en.wikipedia.org/wiki/Ice_age

The Quaternary glaciation, also known as the Pleistocene glaciation, is an alternating series of glacial and interglacial periods during the Quaternary period that began 2.58 Ma (million years ago) and is ongoing.[1][2][3] Although geologists describe the entire time period up to the present as an "ice age", in popular culture the term "ice age" is usually associated with just the most recent glacial period during the Pleistocene or the Pleistocene epoch in general.[4] Since planet Earth still has ice sheets, geologists consider the Quaternary glaciation to be ongoing, with the Earth now experiencing an interglacial period.

During the Quaternary glaciation, ice sheets appeared. During glacial periods they expanded, and during interglacial periods they contracted. Since the end of the last glacial period, the only surviving ice sheets are the Antarctic and Greenland ice sheets. Other ice sheets, such as the Laurentide Ice Sheet, formed during glacial periods and completely melted and disappeared during interglacials. The major effects of the Quaternary glaciation have been the erosion of land and the deposition of material, both over large parts of the continents; the modification of river systems; the creation of millions of lakes, including the development of pluvial lakes far from the ice margins; changes in sea level; the isostatic adjustment of the Earth's crust; flooding; and abnormal winds. The ice sheets themselves, by raising the albedo (the extent to which the radiant energy of the Sun is reflected from Earth), created significant feedback to further cool the climate. These effects have shaped entire environments on land and in the oceans, and their associated biological communities.

Before the Quaternary glaciation, land-based ice appeared, and then disappeared, during at least four other ice ages.

https://en.wikipedia.org/wiki/Quaternary_glaciation

Plumbing is any system that conveys fluids for a wide range of applications. Plumbing uses pipes, valves, plumbing fixtures, tanks, and other apparatuses to convey fluids.[1] Heating and cooling (HVAC), waste removal, and potable water delivery are among the most common uses for plumbing, but it is not limited to these applications.[2] The word derives from the Latin for lead, plumbum, as the first effective pipes used in the Roman era were lead pipes.[3]

In the developed world, plumbing infrastructure is critical to public health and sanitation.[4][5]

Boilermakers and pipefitters are not plumbers although they work with piping as part of their trade and their work can include some plumbing.

https://en.wikipedia.org/wiki/Plumbing#Water_pipes

Examples

The first mechanism has the greatest effects on fitness for polyploids, an intermediate effect on translocations, and a modest effect on centric fusions and inversions.[1] Generally this mechanism will be more prevalent in the first generation (F1) after the initial outcrossing when most individuals are made up of the intermediate phenotype. An extreme case of this type of outbreeding depression is the sterility and other fitness-reducing effects often seen in interspecific hybrids (such as mules), which involves not only different alleles of the same gene but even different orthologous genes.

Examples of the second mechanism include stickleback fish, which developed benthic and limnetic forms when separated. When crosses occurred between the two forms, there were low spawning rates. However, when the same forms mated with each other and no crossing occurred between lakes, the spawning rates were normal. This pattern has also been studied in Drosophila and leaf beetles, where the F1 progeny and later progeny resulted in intermediate fitness between the two parents. This circumstance is more likely to happen and occurs more quickly with selection than genetic drift.[1]

For the third mechanism, examples include poison dart frogs, anole lizards, and cichlid fish. Selection over genetic drift seems to be the dominant mechanism for outbreeding depression.[1]

https://en.wikipedia.org/wiki/Outbreeding_depression

Sterility is the physiological inability to effect sexual reproduction in a living thing, members of whose kind have been produced sexually. Sterility has a wide range of causes. It may be an inherited trait, as in the mule; or it may be acquired from the environment, for example through physical injury or disease, or by exposure to radiation.

Sterility is defined as the inability to produce a biological child, while infertility is defined as the inability to conceive after a certain period.[1] Sterility is rarely discussed in clinical literature and is often used synonymously with infertility. Infertility affects about 12–15% of couples globally.[2] Still, the prevalence of sterility remains unknown. Sterility can be divided into three subtypes: natural, clinical, and hardship.[3] Natural sterility is the couple’s physiological inability to conceive a child by natural means. Clinical sterility is natural sterility for which treatment of the patient will not result in conception. Hardship sterility is the inability to take advantage of available treatments due to extraneous factors such as economic, psychological, or physical factors. Clinical sterility is a subtype of natural sterility, and hardship sterility is a subtype of clinical sterility.

Mechanisms of sterility

Hybrid sterility can be caused by different closely related species breeding and producing offspring. These animals are usually sterile due to different numbers of chromosomes from the two parents. The imbalance results in offspring that is viable but not fertile, as is the case with the mule.

Sterility can also be caused by selective breeding, where a selected trait is closely linked to genes involved in sex determination or fertility. For example, goats are bred to be polled (hornless). This results in a high number of intersex individuals among the offspring, which are typically sterile.[4]

Sterility can also be caused by chromosomal differences within an individual. These individuals tend to be known as genetic mosaics. Loss of part of a chromosome can also cause sterility due to nondisjunction.

XX male syndrome is another cause of sterility, wherein the sexual determining factor on the Y chromosome (SRY) is transferred to the X chromosome due to an unequal crossing over. This gene triggers the development of testes, causing the individual to be phenotypically male but genotypically female.

Economic uses of sterility

Economic uses of sterility include:

- the production of certain kinds of seedless fruit, such as seedless tomato[5] or watermelon (though sterility is not the only available route to fruit seedlessness);

- terminator technology, methods for restricting the use of genetically modified plants by causing second generation seeds to be sterile;

- biological control; for example, trap-neuter-return programs for cats; and the sterile insect technique, in which large numbers of sterile insects are released, which compete with fertile insects for food and mates, thus reducing the population size of subsequent generations, which can be used to fight diseases spread by insect vectors such as malaria in mosquitoes.

- the breeding of sterile hybrid animals, such as the mule, hinny, liger and tigon, by mating closely related species.

A mule is the offspring of a male donkey (jack) and a female horse (mare).[1][2] Horses and donkeys are different species, with different numbers of chromosomes. Of the two first-generation hybrids between these two species, a mule is easier to obtain than a hinny, which is the offspring of a female donkey (jenny) and a male horse (stallion).

The size of a mule and work to which it is put depend largely on the breeding of the mule's mother (dam). Mules can be lightweight, medium weight, or when produced from draft mares, of moderately heavy weight.[3]: 85–87 Mules are reputed to be more patient, hardy, and long-lived than horses, and are described as less obstinate and more intelligent than donkeys.[4]: 5

https://en.wikipedia.org/wiki/Mule

https://journals.plos.org/plosone/article?id=10.1371/journal.pone.0061746

https://genetics.thetech.org/ask/ask225

https://www.researchgate.net/publication/18736197_Meiosis_in_interspecific_equine_hybrids_I_The_male_mule_Equus_asinus_X_E_caballus_and_hinny_E_caballus_X_E_asinus

Hydrogen fuel is a zero-carbon fuel burned with oxygen, provided it is created in a zero-carbon way. It can be used in fuel cells or internal combustion engines (see HICEV). Regarding hydrogen vehicles, hydrogen has begun to be used in commercial fuel cell vehicles, such as passenger cars, and has been used in fuel cell buses for many years. It is also used as a fuel for spacecraft propulsion.

In the early 2020s, most hydrogen is produced by steam methane reforming of fossil gas. Only a small quantity is made by alternative routes such as biomass gasification or electrolysis of water[1][2] or solar thermochemistry,[3] a solar fuel with no carbon emissions.

Hydrogen is found in the first group and first period in the periodic table, i.e. it is the lightest and first element of all. Since hydrogen is lighter than air, it rises in the atmosphere and is therefore rarely found in its pure form, H2.[4] In a flame of pure hydrogen gas, burning in air, the hydrogen (H2) reacts with oxygen (O2) to form water (H2O) and releases energy.

- 2H2 (g) + O2 (g) → 2H2O (g) + energy

If carried out in atmospheric air instead of pure oxygen, as is usually the case, hydrogen combustion may yield small amounts of nitrogen oxides, along with the water vapor.

The energy released enables hydrogen to act as a fuel. In an electrochemical cell, that energy can be used with relatively high efficiency. If it is used simply for heat, the usual thermodynamics limits on the thermal efficiency apply.

Hydrogen is usually considered an energy carrier, like electricity, as it must be produced from a primary energy source such as solar energy, biomass, electricity (e.g. in the form of solar PV or via wind turbines), or hydrocarbons such as natural gas or coal.[5] Conventional hydrogen production using natural gas induces significant environmental impacts; as with the use of any hydrocarbon, carbon dioxide is emitted.[6] At the same time, the addition of 20% of hydrogen (an optimal share that does not affect gas pipes and appliances) to natural gas can reduce CO2 emissions caused by heating and cooking.[7]

https://en.wikipedia.org/wiki/Hydrogen_fuel

https://en.wikipedia.org/wiki/Trihydrogen_cation

https://en.wikipedia.org/wiki/Origin_of_water_on_Earth

https://en.wikipedia.org/wiki/Desalination

https://en.wikipedia.org/wiki/hydroelectricity

https://en.wikipedia.org/wiki/hydroelectric_power

https://en.wikipedia.org/wiki/thermodynamics

https://en.wikipedia.org/wiki/statics

https://en.wikipedia.org/wiki/linear

https://en.wikipedia.org/wiki/launch_loop

https://en.wikipedia.org/wiki/linear_induction_motor

https://en.wikipedia.org/wiki/maglev

https://en.wikipedia.org/wiki/magnetosphere

https://en.wikipedia.org/wiki/gravity

https://en.wikipedia.org/wiki/atmosphere

https://en.wikipedia.org/wiki/dark_matter

https://en.wikipedia.org/wiki/microwave

https://en.wikipedia.org/wiki/nucleon

https://en.wikipedia.org/wiki/neutrino

https://en.wikipedia.org/wiki/Lambda-CDM_model

https://en.wikipedia.org/wiki/mirror

https://en.wikipedia.org/wiki/neutron

particle collision, collision, compression, shear, thermodynamics

pressure, oscillation

plasma, gas, state of matter, starting material, ending material

https://en.wikipedia.org/wiki/Cosmological_constant

https://en.wikipedia.org/wiki/Big_Bang_nucleosynthesis

https://en.wikipedia.org/wiki/Quintessence_(physics)

https://en.wikipedia.org/wiki/Cosmic_microwave_background

https://en.wikipedia.org/wiki/Cosmological_principle

https://en.wikipedia.org/wiki/Homogeneity_(physics)

https://en.wikipedia.org/wiki/symmetry

https://en.wikipedia.org/wiki/supersymmetry

https://en.wikipedia.org/wiki/Expansion_of_the_universe

https://en.wikipedia.org/wiki/Vacuum

https://en.wikipedia.org/wiki/Decoupling_(cosmology)

https://en.wikipedia.org/wiki/Oscilloscope

https://en.wikipedia.org/wiki/Fluorescent_lamp#Cold-cathode_fluorescent_lamps

https://en.wikipedia.org/wiki/Nixie_tube

https://en.wikipedia.org/wiki/Vacuum_tube#Indirectly_heated_cathodes

https://en.wikipedia.org/wiki/Vacuum_tube

https://en.wikipedia.org/wiki/Fusion_power

https://en.wikipedia.org/wiki/J._J._Thomson

https://en.wikipedia.org/wiki/William_Crookes

- Sir William Crookes (June 17, 1832 – April 4, 1919) was an English chemist and physicist who attended the Royal College of Chemistry, in London, and worked

Underwater

The ambient pressure in water with a free surface is a combination of the hydrostatic pressure due to the weight of the water column and the atmospheric pressure on the free surface. This increases approximately linearly with depth. Since water is much denser than air, much greater changes in ambient pressure can be experienced under water. Each 10 metres (33 ft) of depth adds another bar to the ambient pressure.
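
The linear relationship described above can be sketched as surface pressure plus the hydrostatic term ρ·g·h. The seawater density (1025 kg/m³) and the function name `ambient_pressure_atm` are assumptions made for this illustration; real density varies with salinity and temperature.

```python
ATM_PA = 101_325.0          # one standard atmosphere in pascals
G = 9.80665                 # standard gravity, m/s^2
SEAWATER_DENSITY = 1025.0   # kg/m^3, an assumed typical value


def ambient_pressure_atm(depth_m, density=SEAWATER_DENSITY, surface_pa=ATM_PA):
    """Ambient pressure in standard atmospheres at a given depth in metres."""
    hydrostatic_pa = density * G * depth_m   # weight of the water column
    return (surface_pa + hydrostatic_pa) / ATM_PA


for depth in (0, 10, 20, 40, 100):
    print(depth, round(ambient_pressure_atm(depth), 2))
```

Under these assumptions each 10 m of seawater adds very nearly one atmosphere, which matches the rule of thumb in the text.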

https://en.wikipedia.org/wiki/Ambient_pressure

Other environments

The concept is not limited to environments frequented by people. Almost any place in the universe will have an ambient pressure, from the hard vacuum of deep space to the interior of an exploding supernova. At extremely small scales the concept of pressure becomes irrelevant, and it is undefined at a gravitational singularity.[citation needed]

Examples of ambient pressure in various environments

Pressures are given in terms of the normal ambient pressure experienced by humans — standard atmospheric pressure at sea level on earth.

| Environment | Typical ambient pressure in standard atmospheres |
|---|---|
| Hard vacuum of outer space | 0 atm |
| Surface of Mars, average | 0.006 atm[2] |
| Top of Mount Everest | 0.333 atm[3] |
| Pressurized passenger aircraft cabin altitude 8,000 ft (2,400 m) | 0.76 atm[4] |
| Sea level atmospheric pressure | 1 atm |
| Surface of Titan | 1.45 atm |
| 10 m depth in seawater | 2 atm |
| 20 m depth in seawater | 3 atm |
| Recreational diving depth limit (40 m)[5] | 5 atm |
| Common technical diving depth limit (100 m)[6][7] | 11 atm |
| Experimental ambient pressure dive maximum (maximum ambient pressure a human has survived)[8] | 54 atm |
| Surface of Venus | 92 atm[9] |
| 1 km depth in seawater | 101 atm |
| Deepest point in the Earth's oceans[10] | 1100 atm |
| Centre of the Earth | 3.3 to 3.6 million atm[11] |
| Centre of Jupiter | 30 to 45 million atm[12] |
| Centre of the Sun | 244 billion atm[13] |

https://en.wikipedia.org/wiki/Ambient_pressure

https://en.wikipedia.org/wiki/Galaxy_filament

Earth's inner core is the innermost geologic layer of the planet Earth. It is primarily a solid ball with a radius of about 1,220 km (760 mi), which is about 20% of Earth's radius or 70% of the Moon's radius.[1][2]

There are no samples of Earth's core accessible for direct measurement, as there are for Earth's mantle. Information about Earth's core mostly comes from analysis of seismic waves and Earth's magnetic field.[3] The inner core is believed to be composed of an iron–nickel alloy with some other elements. The temperature at the inner core's surface is estimated to be approximately 5,700 K (5,430 °C; 9,800 °F), which is about the temperature at the surface of the Sun.[4]

https://en.wikipedia.org/wiki/Earth%27s_inner_core

Dziewoński and Gilbert established that measurements of normal modes of vibration of Earth caused by large earthquakes were consistent with a liquid outer core.[14] In 2005, shear waves were detected passing through the inner core; these claims were initially controversial, but are now gaining acceptance.[15]

Seismic waves

Almost all direct measurements that scientists have about the physical properties of the inner core are the seismic waves that pass through it. The most informative waves are generated by deep earthquakes, 30 km or more below the surface of the Earth (where the mantle is relatively more homogeneous) and recorded by seismographs as they reach the surface, all over the globe.[citation needed]

Seismic waves include "P" (primary or pressure) waves, compressional waves that can travel through solid or liquid materials, and "S" (secondary or shear) waves, which can only propagate through rigid elastic solids. The two waves have different velocities and are damped at different rates as they travel through the same material.

Of particular interest are the so-called "PKiKP" waves—pressure waves (P) that start near the surface, cross the mantle-core boundary, travel through the core (K), are reflected at the inner core boundary (i), cross again the liquid core (K), cross back into the mantle, and are detected as pressure waves (P) at the surface. Also of interest are the "PKIKP" waves, that travel through the inner core (I) instead of being reflected at its surface (i). Those signals are easier to interpret when the path from source to detector is close to a straight line—namely, when the receiver is just above the source for the reflected PKiKP waves, and antipodal to it for the transmitted PKIKP waves.[16]

While S waves cannot reach or leave the inner core as such, P waves can be converted into S waves, and vice versa, as they hit the boundary between the inner and outer core at an oblique angle. The "PKJKP" waves are similar to the PKIKP waves, but are converted into S waves when they enter the inner core, travel through it as S waves (J), and are converted again into P waves when they exit the inner core. Thanks to this phenomenon, it is known that the inner core can propagate S waves, and therefore must be solid.

Other sources

Other sources of information about the inner core include:

- The magnetic field of the Earth. While it seems to be generated mostly by fluid and electric currents in the outer core, those currents are strongly affected by the presence of the solid inner core and by the heat that flows out of it. (Although made of iron, the core is apparently not ferromagnetic, due to its extremely high temperature.)[citation needed]

- The Earth's mass, its gravitational field, and its angular inertia. These are all affected by the density and dimensions of the inner layers.[17]

- The natural oscillation frequencies and modes of the whole Earth oscillations, when large earthquakes make the planet "ring" like a bell. These oscillations also depend strongly on the density, size, and shape of the inner layers.[18]

Physical properties

Seismic wave velocity

The velocity of the S waves in the inner core varies smoothly from about 3.7 km/s at the center to about 3.5 km/s at the surface. That is considerably less than the velocity of S waves in the lower crust (about 4.5 km/s) and less than half the velocity in the deep mantle, just above the outer core (about 7.3 km/s).[4]: fig.2

The velocity of the P waves also varies smoothly through the inner core, from about 11.4 km/s at the center to about 11.1 km/s at the surface. Then the speed drops abruptly at the inner-outer core boundary to about 10.4 km/s.[4]: fig.2

Size and shape

On the basis of the seismic data, the inner core is estimated to be about 1221 km in radius (2442 km in diameter),[4] which is about 19% of the radius of the Earth and 70% of the radius of the Moon.

Its volume is about 7.6 billion cubic km (7.6 × 10¹⁸ m³), which is about 1⁄140 (0.7%) of the volume of the whole Earth.
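
The volume figure can be checked from the quoted radius by treating the inner core as a sphere. In this sketch the Earth's mean radius of 6371 km is an assumed value that does not appear in the text, and the variable names are illustrative.

```python
import math

INNER_CORE_RADIUS_KM = 1221.0   # radius quoted in the text
EARTH_MEAN_RADIUS_KM = 6371.0   # assumed mean radius of the Earth


def sphere_volume(radius):
    """Volume of a sphere with the given radius (same length unit, cubed)."""
    return 4.0 / 3.0 * math.pi * radius ** 3


core_volume_km3 = sphere_volume(INNER_CORE_RADIUS_KM)
volume_fraction = core_volume_km3 / sphere_volume(EARTH_MEAN_RADIUS_KM)

print(f"{core_volume_km3:.3g} km^3, {volume_fraction:.2%} of Earth's volume")
```

Because the volume fraction scales with the cube of the radius ratio, a core with roughly 19% of the Earth's radius holds well under 1% of its volume.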

Its shape is believed to be close to an oblate ellipsoid of revolution, like the surface of the Earth, only more spherical: the flattening f is estimated to be between 1⁄400 and 1⁄416,[17]: f.2 meaning that the radius along the Earth's axis is estimated to be about 3 km shorter than the radius at the equator. In comparison, the flattening of the Earth as a whole is close to 1⁄300, and the polar radius is 21 km shorter than the equatorial one.
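
The radius differences follow from the definition of flattening, f = (a − b) / a, where a is the equatorial radius and b the polar radius. The sketch below uses 1221 km as the inner core's equatorial radius and 6378 km for the Earth's; both are approximations chosen for illustration, and the function name is hypothetical.

```python
def polar_shortfall_km(equatorial_radius_km, flattening):
    """Return a - b, the amount by which the polar radius is shorter."""
    return equatorial_radius_km * flattening


inner_low = polar_shortfall_km(1221.0, 1 / 416)    # least flattened estimate
inner_high = polar_shortfall_km(1221.0, 1 / 400)   # most flattened estimate
earth = polar_shortfall_km(6378.0, 1 / 300)        # whole Earth, f close to 1/300

print(round(inner_low, 2), round(inner_high, 2), round(earth, 1))
```

Both inner core estimates come out close to 3 km, and the whole-Earth value close to 21 km, in line with the figures quoted above.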

Pressure and gravity

The pressure in the Earth's inner core is slightly higher than it is at the boundary between the outer and inner cores: It ranges from about 330 to 360 gigapascals (3,300,000 to 3,600,000 atm).[4][19][20]

The acceleration of gravity at the surface of the inner core can be computed to be 4.3 m/s²,[21] which is less than half the value at the surface of the Earth (9.8 m/s²).

Density and mass

The density of the inner core is believed to vary smoothly from about 13.0 kg/L (= g/cm³ = t/m³) at the center to about 12.8 kg/L at the surface. As it happens with other material properties, the density drops suddenly at that surface: The liquid just above the inner core is believed to be significantly less dense, at about 12.1 kg/L.[4] For comparison, the average density in the upper 100 km of the Earth is about 3.4 kg/L.

That density implies a mass of about 10²³ kg for the inner core, which is 1⁄60 (1.7%) of the mass of the whole Earth.
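The mass estimate is simply density times volume. As a hedged sketch: the mean density of 12.9 kg/L is assumed here as the midpoint of the range quoted above, the volume is the rounded figure from the size discussion, and the Earth's mass of 5.972 × 10²⁴ kg is an assumed reference value not given in the text.

```python
MEAN_DENSITY_KG_M3 = 12.9e3     # assumed midpoint of 12.8 to 13.0 kg/L, in kg/m^3
INNER_CORE_VOLUME_M3 = 7.6e18   # rounded volume of the inner core, in m^3
EARTH_MASS_KG = 5.972e24        # assumed mass of the Earth (not from the text)

inner_core_mass_kg = MEAN_DENSITY_KG_M3 * INNER_CORE_VOLUME_M3
mass_fraction = inner_core_mass_kg / EARTH_MASS_KG

print(f"Inner core mass: {inner_core_mass_kg:.2e} kg")
print(f"Fraction of Earth's mass: {mass_fraction:.4f}")
```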

Temperature

The temperature of the inner core can be estimated from the melting temperature of impure iron at the pressure that prevails at the boundary of the inner core (about 330 GPa). From these considerations, in 2002 D. Alfè and others estimated its temperature as between 5,400 K (5,100 °C; 9,300 °F) and 5,700 K (5,400 °C; 9,800 °F).[4] However, in 2013 S. Anzellini and others experimentally obtained a substantially higher temperature for the melting point of iron, 6230 ± 500 K.[22]

Iron can be solid at such high temperatures only because its melting temperature increases dramatically at pressures of that magnitude (see the Clausius–Clapeyron relation).[23][24]

Magnetic field

In 2010, Bruce Buffett determined that the average magnetic field in the liquid outer core is about 2.5 milliteslas (25 gauss), which is about 40 times the maximum strength at the surface. He started from the known fact that the Moon and Sun cause tides in the liquid outer core, just as they do in the oceans on the surface. He observed that motion of the liquid through the local magnetic field creates electric currents that dissipate energy as heat according to Ohm's law. This dissipation, in turn, damps the tidal motions and explains previously detected anomalies in Earth's nutation. From the magnitude of the latter effect he could calculate the magnetic field.[25] The field inside the inner core presumably has a similar strength. While indirect, this measurement does not depend significantly on any assumptions about the evolution of the Earth or the composition of the core.

Viscosity

Although seismic waves propagate through the core as if it were solid, the measurements cannot distinguish a perfectly solid material from an extremely viscous one. Some scientists have therefore considered whether there may be slow convection in the inner core (as is believed to exist in the mantle). That could be an explanation for the anisotropy detected in seismic studies. In 2009, B. Buffett estimated the viscosity of the inner core at 10¹⁸ Pa·s,[26] which is a sextillion times the viscosity of water, and more than a billion times that of pitch.
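The two comparisons can be checked with simple ratios. In this sketch the reference viscosities are assumed round values, not taken from the text: about 10⁻³ Pa·s for water near room temperature and about 2.3 × 10⁸ Pa·s for pitch.

```python
INNER_CORE_VISCOSITY_PA_S = 1e18  # estimate quoted in the text
WATER_VISCOSITY_PA_S = 1e-3       # assumed, water near 20 degrees Celsius
PITCH_VISCOSITY_PA_S = 2.3e8      # assumed, order-of-magnitude value for pitch

ratio_to_water = INNER_CORE_VISCOSITY_PA_S / WATER_VISCOSITY_PA_S
ratio_to_pitch = INNER_CORE_VISCOSITY_PA_S / PITCH_VISCOSITY_PA_S

print(f"Inner core vs water: {ratio_to_water:.1e}")  # 1e21 is a sextillion (short scale)
print(f"Inner core vs pitch: {ratio_to_pitch:.1e}")
```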

Composition

There is still no direct evidence about the composition of the inner core. However, based on the relative prevalence of various chemical elements in the Solar System, the theory of planetary formation, and constraints imposed or implied by the chemistry of the rest of the Earth's volume, the inner core is believed to consist primarily of an iron–nickel alloy.

At the known pressures and estimated temperatures of the core, it is predicted that pure iron could be solid, but its density would exceed the known density of the core by approximately 3%. That result implies the presence of lighter elements in the core, such as silicon, oxygen, or sulfur, in addition to the probable presence of nickel.[27] Recent estimates (2007) allow for up to 10% nickel and 2–3% of unidentified lighter elements.[4]

According to computations by D. Alfè and others, the liquid outer core contains 8–13% of oxygen, but as the iron crystallizes out to form the inner core the oxygen is mostly left in the liquid.[4]

Laboratory experiments and analysis of seismic wave velocities seem to indicate that the inner core consists specifically of ε-iron, a crystalline form of the metal with the hexagonal close-packed (hcp) structure. That structure can still admit the inclusion of small amounts of nickel and other elements.[16][28]

Also, if the inner core grows by precipitation of frozen particles falling onto its surface, then some liquid can also be trapped in the pore spaces. In that case, some of this residual fluid may still persist to some small degree in much of its interior.[citation needed]

Structure

Many scientists had initially expected that the inner core would be found to be homogeneous, because the same solidification process should have proceeded uniformly during its entire formation. It was even suggested that Earth's inner core might be a single crystal of iron.[29]

Axis-aligned anisotropy

In 1983, G. Poupinet and others observed that the travel time of PKIKP waves (P waves that travel through the inner core) was about 2 seconds less for straight north–south paths than for straight paths in the equatorial plane.[30] Even taking into account the flattening of the Earth at the poles (about 0.33% for the whole Earth, 0.25% for the inner core) and crust and upper mantle heterogeneities, this difference implied that P waves (of a broad range of wavelengths) travel through the inner core about 1% faster in the north–south direction than along directions perpendicular to that.[31]
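The link between a 2-second difference and a speed anisotropy of about 1% can be illustrated with a back-of-the-envelope estimate. The sketch below is a simplification, not the analysis of the cited studies: it assumes a straight path across the full 2442 km diameter, a mean P wave speed of 11.25 km/s (the midpoint of the 11.1 to 11.4 km/s range given earlier in this article), and it ignores the corrections for flattening and mantle heterogeneity.

```python
INNER_CORE_DIAMETER_KM = 2442.0  # from the size figures earlier in the article
MEAN_P_SPEED_KM_S = 11.25        # assumed midpoint of 11.1 and 11.4 km/s
TRAVEL_TIME_DIFFERENCE_S = 2.0   # observed north-south vs equatorial difference

# Time for a P wave to cross the inner core along a diameter.
crossing_time_s = INNER_CORE_DIAMETER_KM / MEAN_P_SPEED_KM_S

# For a fixed path length, a small fractional change in travel time
# corresponds to roughly the same fractional change in speed.
fractional_anisotropy = TRAVEL_TIME_DIFFERENCE_S / crossing_time_s

print(f"Crossing time: {crossing_time_s:.0f} s")
print(f"Implied speed difference: {fractional_anisotropy:.1%}")
```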

This P wave speed anisotropy has been confirmed by later studies, including more seismic data[16] and study of the free oscillations of the whole Earth.[18] Some authors have claimed higher values for the difference, up to 4.8%; however, in 2017 D. Frost and B. Romanowicz confirmed that the value is between 0.5% and 1.5%.[32]

Non-axial anisotropy

Some authors have claimed that P wave speed is faster in directions that are oblique or perpendicular to the N−S axis, at least in some regions of the inner core.[33] However, these claims have been disputed by D. Frost and B. Romanowicz, who instead claim that the direction of maximum speed is as close to the Earth's rotation axis as can be determined.[34]

Causes of anisotropy

Laboratory data and theoretical computations indicate that the propagation of pressure waves in the hcp crystals of ε-iron is strongly anisotropic, too, with one "fast" axis and two equally "slow" ones. A preference for the crystals in the core to align in the north–south direction could account for the observed seismic anomaly.[16]

One phenomenon that could cause such partial alignment is slow flow ("creep") inside the inner core, from the equator towards the poles or vice versa. That flow would cause the crystals to partially reorient themselves according to the direction of the flow. In 1996, S. Yoshida and others proposed that such a flow could be caused by a higher rate of freezing at the equator than at polar latitudes. An equator-to-pole flow would then be set up in the inner core, tending to restore the isostatic equilibrium of its surface.[35][28]

Others suggested that the required flow could be caused by slow thermal convection inside the inner core. T. Yukutake claimed in 1998 that such convective motions were unlikely.[36] However, B. Buffett in 2009 estimated the viscosity of the inner core and found that such convection could have happened, especially when the core was smaller.[26]

On the other hand, M. Bergman in 1997 proposed that the anisotropy was due to an observed tendency of iron crystals to grow faster when their crystallographic axes are aligned with the direction of the cooling heat flow. He, therefore, proposed that the heat flow out of the inner core would be biased towards the radial direction.[37]

In 1998, S. Karato proposed that changes in the magnetic field might also deform the inner core slowly over time.[38]

Multiple layers

In 2002, M. Ishii and A. Dziewoński presented evidence that the solid inner core contained an "innermost inner core" (IMIC) with somewhat different properties than the shell around it. The nature of the differences and radius of the IMIC are still unresolved as of 2019, with proposals for the latter ranging from 300 km to 750 km.[39][40][41][34]

A. Wang and X. Song proposed, in 2018, a three-layer model, with an "inner inner core" (IIC) with about 500 km radius, an "outer inner core" (OIC) layer about 600 km thick, and an isotropic shell 100 km thick. In this model, the "faster P wave" direction would be parallel to the Earth's axis in the OIC, but perpendicular to that axis in the IIC.[33] However, this conclusion has been disputed by claims that there need not be sharp discontinuities in the inner core, only a gradual change of properties with depth.[34]

Lateral variation

In 1997, S. Tanaka and H. Hamaguchi claimed, on the basis of seismic data, that the anisotropy of the inner core material, while oriented N−S, was more pronounced in the "eastern" hemisphere of the inner core (at about 110 °E longitude, roughly under Borneo) than in the "western" hemisphere (at about 70 °W, roughly under Colombia).[42]: fg.9

Alboussière and others proposed that this asymmetry could be due to melting in the eastern hemisphere and re-crystallization in the western one.[43] C. Finlay conjectured that this process could explain the asymmetry in the Earth's magnetic field.[44]

However, in 2017 D. Frost and B. Romanowicz disputed those earlier inferences, claiming that the data shows only a weak anisotropy, with the speed in the N−S direction being only 0.5% to 1.5% faster than in equatorial directions, and no clear signs of E−W variation.[32]

Other structure

Other researchers claim that the properties of the inner core's surface vary from place to place across distances as small as 1 km. This variation is surprising since lateral temperature variations along the inner-core boundary are known to be extremely small (this conclusion is confidently constrained by magnetic field observations).[citation needed]

Growth

The Earth's inner core is thought to be slowly growing as the liquid outer core at the boundary with the inner core cools and solidifies due to the gradual cooling of the Earth's interior (about 100 degrees Celsius per billion years).[45]

According to calculations by Alfè and others, as the iron crystallizes onto the inner core, the liquid just above it becomes enriched in oxygen, and therefore less dense than the rest of the outer core. This process creates convection currents in the outer core, which are thought to be the prime driver for the currents that create the Earth's magnetic field.[4]

The existence of the inner core also affects the dynamic motions of liquid in the outer core, and thus may help fix the magnetic field.[citation needed]

Dynamics

Because the inner core is not rigidly connected to the Earth's solid mantle, the possibility that it rotates slightly more quickly or slowly than the rest of Earth has long been entertained.[46][47] In the 1990s, seismologists made various claims about detecting this kind of super-rotation by observing changes in the characteristics of seismic waves passing through the inner core over several decades, using the aforementioned property that it transmits waves more quickly in some directions. In 1996, X. Song and P. Richards estimated this "super-rotation" of the inner core relative to the mantle as about one degree per year.[48][49] In 2005, they and J. Zhang compared recordings of "seismic doublets" (recordings by the same station of earthquakes occurring in the same location on the opposite side of the Earth, years apart), and revised that estimate to 0.3 to 0.5 degree per year.[50]
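To give a sense of scale for these rates, the following sketch converts a differential rotation rate into the time the inner core would need to gain one full turn on the mantle. It assumes the rate stays constant, which is an illustrative simplification and not a claim made by the cited studies.

```python
def years_per_extra_revolution(rate_deg_per_year: float) -> float:
    """Years for the inner core to gain 360 degrees on the mantle at a constant rate."""
    return 360.0 / rate_deg_per_year

# Rates quoted in the text: the 1996 estimate and the revised 2005 range.
for rate in (1.0, 0.5, 0.3):
    years = years_per_extra_revolution(rate)
    print(f"{rate} deg/year -> about {years:.0f} years per extra revolution")
```

At one degree per year a full extra turn would take 360 years; at the revised 0.3 to 0.5 degree per year it would take roughly 720 to 1,200 years.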

In 1999, M. Greff-Lefftz and H. Legros noted that the gravitational fields of the Sun and Moon that are responsible for ocean tides also apply torques to the Earth, affecting its axis of rotation and slowing down its rotation rate. Those torques are felt mainly by the crust and mantle, so that their rotation axis and speed may differ from the overall rotation of the fluid in the outer core and the rotation of the inner core. The dynamics is complicated because of the currents and magnetic fields in the inner core. They find that the axis of the inner core wobbles (nutates) slightly with a period of about 1 day. With some assumptions on the evolution of the Earth, they conclude that the fluid motions in the outer core would have entered resonance with the tidal forces at several times in the past (3.0, 1.8, and 0.3 billion years ago). During those epochs, which lasted 200–300 million years each, the extra heat generated by stronger fluid motions might have stopped the growth of the inner core.[51]

Age

Theories about the age of the core are necessarily part of theories of the history of Earth as a whole. This has been a long-debated topic and is still under discussion at the present time. It is widely believed that the Earth's solid inner core formed out of an initially completely liquid core as the Earth cooled down. However, there is still no firm evidence about the time when this process started.[3]

Two main approaches have been used to infer the age of the inner core: thermodynamic modeling of the cooling of the Earth, and analysis of paleomagnetic evidence. The estimates yielded by these methods still vary over a large range, from 0.5 to 2 billion years old.

Thermodynamic evidence

One of the ways to estimate the age of the inner core is by modeling the cooling of the Earth, constrained by a minimum value for the heat flux at the core–mantle boundary (CMB). That estimate is based on the prevailing theory that the Earth's magnetic field is primarily triggered by convection currents in the liquid part of the core, and the fact that a minimum heat flux is required to sustain those currents. The heat flux at the CMB at the present time can be reliably estimated because it is related to the measured heat flux at Earth's surface and to the measured rate of mantle convection.[63][52]