Trans-Atlantic Pipeline 1800s-1900s

(N/R, 2021)

The Atlantic Bridge is a flight route from Gander, Newfoundland, Canada to Scotland, with a refueling stop in Iceland.

During the Second World War, new bombers flew this route. Today, it is seldom used for commercial aviation, since modern jet airliners can fly a direct route from Canada or the United States to Europe without the need for a fueling stop. However, smaller aircraft which do not have the necessary range to make a direct crossing of the ocean still routinely use this route, or may alternatively stop for refueling in Greenland (typically via Narsarsuaq and Kulusuk) or in the Azores. The most common users of this route are ferry pilots delivering light aeroplanes (often six seats or fewer) to new owners.

This route is longer overall than the direct route and involves an extra landing and takeoff, which is costly in fuel terms.
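The claim that the staged route is longer than a direct crossing can be checked with a great-circle calculation. The sketch below is illustrative only: the source names Gander, Iceland and Scotland but no specific Icelandic or Scottish airfields, so Keflavik and Prestwick (and all the rounded coordinates) are assumptions, as is the spherical-Earth radius.

```python
import math

EARTH_RADIUS_KM = 6371.0  # mean Earth radius; spherical-Earth assumption


def great_circle_km(a, b):
    """Haversine distance in km between two (lat, lon) points given in degrees."""
    lat1, lon1 = map(math.radians, a)
    lat2, lon2 = map(math.radians, b)
    dlat = lat2 - lat1
    dlon = lon2 - lon1
    h = math.sin(dlat / 2) ** 2 + math.cos(lat1) * math.cos(lat2) * math.sin(dlon / 2) ** 2
    return 2 * EARTH_RADIUS_KM * math.asin(math.sqrt(h))


# Approximate coordinates; Keflavik and Prestwick are assumed stand-ins
# for "Iceland" and "Scotland", which the text does not pin down.
GANDER = (48.94, -54.57)
KEFLAVIK = (63.99, -22.61)
PRESTWICK = (55.51, -4.59)

direct_km = great_circle_km(GANDER, PRESTWICK)
leg1_km = great_circle_km(GANDER, KEFLAVIK)
leg2_km = great_circle_km(KEFLAVIK, PRESTWICK)
via_iceland_km = leg1_km + leg2_km

print(f"direct: {direct_km:.0f} km")
print(f"via Iceland: {via_iceland_km:.0f} km (legs {leg1_km:.0f} + {leg2_km:.0f})")
```

The total via Iceland comes out longer than the direct distance, but each individual leg is shorter, which is the point of the route for aircraft with limited range.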

https://en.wikipedia.org/wiki/Atlantic_Bridge_(flight_route)

https://en.wikipedia.org/wiki/Transatlantic_flight

Other uses of the seabed

Proper planning of a pipeline route has to factor in a wide range of human activities that make use of the seabed along the proposed route, or that are likely to do so in the future. They include the following:[2][8][12]

- Other pipelines: If and where the proposed pipeline intersects an existing one, which is not uncommon, a bridging structure may be required at that juncture in order to cross it. This has to be done at a right angle. The juncture should be carefully designed so as to avoid interferences between the two structures either by direct physical contact or due to hydrodynamic effects.

In applied mechanics, bending (also known as flexure) characterizes the behavior of a slender structural element subjected to an external load applied perpendicularly to a longitudinal axis of the element.

The structural element is assumed to be such that at least one of its dimensions is a small fraction, typically 1/10 or less, of the other two.[1] When the length is considerably longer than the width and the thickness, the element is called a beam. For example, a closet rod sagging under the weight of clothes on clothes hangers is an example of a beam experiencing bending. On the other hand, a shell is a structure of any geometric form where the length and the width are of the same order of magnitude but the thickness of the structure (known as the 'wall') is considerably smaller. A large diameter, but thin-walled, short tube supported at its ends and loaded laterally is an example of a shell experiencing bending.

In the absence of a qualifier, the term bending is ambiguous because bending can occur locally in all objects. Therefore, to make the usage of the term more precise, engineers refer to a specific object, such as the bending of rods,[2] the bending of beams,[1] the bending of plates,[3] the bending of shells[2] and so on.

https://en.wikipedia.org/wiki/Bending

Electromagnetic or magnetic induction is the production of an electromotive force across an electrical conductor in a changing magnetic field.

Michael Faraday is generally credited with the discovery of induction in 1831, and James Clerk Maxwell mathematically described it as Faraday's law of induction. Lenz's law describes the direction of the induced field. Faraday's law was later generalized to become the Maxwell–Faraday equation, one of the four Maxwell equations in his theory of electromagnetism.
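For reference, the two laws named above are commonly written as follows. The symbols are not defined in the source and are introduced here as assumptions: $\mathcal{E}$ is the electromotive force, $\Phi_B$ the magnetic flux through the circuit, $\mathbf{E}$ the electric field and $\mathbf{B}$ the magnetic field.

$$
\mathcal{E} = -\frac{d\Phi_B}{dt} \quad \text{(Faraday's law of induction)}
$$

$$
\nabla \times \mathbf{E} = -\frac{\partial \mathbf{B}}{\partial t} \quad \text{(Maxwell–Faraday equation)}
$$

The minus sign expresses Lenz's law: the induced electromotive force opposes the change in flux that produces it.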

Electromagnetic induction has found many applications, including electrical components such as inductors and transformers, and devices such as electric motors and generators.

https://en.wikipedia.org/wiki/Electromagnetic_induction

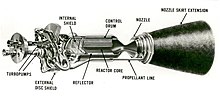

A hydrogen turboexpander-generator or generator loaded expander for hydrogen gas is an axial flow turbine or radial expander for energy recovery through which a high pressure hydrogen gas is expanded to produce work that is used to drive an electrical generator. It replaces the control valve or regulator where the pressure drops to the appropriate pressure for the low pressure network. A turboexpander-generator can help recover energy losses and offset electrical requirements and CO2 emissions.[1]
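As a rough illustration of the energy that such an expansion could make available, the sketch below treats hydrogen as an ideal gas undergoing an isentropic expansion, scaled by an isentropic efficiency. None of this comes from the source: the function name, the efficiency, and the property values (specific heat of about 14.3 kJ/(kg·K) and heat-capacity ratio of about 1.41, approximate figures for hydrogen near room temperature) are assumptions, and a real machine would need real-gas data.

```python
def expander_specific_work(t_in_k, p_in_bar, p_out_bar,
                           cp_j_per_kg_k=14300.0, gamma=1.41,
                           isentropic_efficiency=0.8):
    """Estimate work per kg of gas (J/kg) from an ideal-gas expansion.

    All default property values are illustrative assumptions.
    """
    if p_in_bar <= 0 or p_out_bar <= 0:
        raise ValueError("pressures must be positive")
    if p_out_bar >= p_in_bar:
        raise ValueError("outlet pressure must be below inlet pressure")
    pressure_ratio = p_out_bar / p_in_bar
    # Ideal (isentropic) temperature-drop term for an ideal gas.
    ideal_work = cp_j_per_kg_k * t_in_k * (1.0 - pressure_ratio ** ((gamma - 1.0) / gamma))
    # Scale by the assumed isentropic efficiency of the expander.
    return isentropic_efficiency * ideal_work


# Hypothetical example: 60 bar inlet at 300 K, let down to 20 bar.
work = expander_specific_work(300.0, 60.0, 20.0)
print(f"estimated specific work: {work / 1000:.0f} kJ/kg")
```

A control valve performing the same pressure reduction would recover none of this work, which is the motivation for replacing it with an expander.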

https://en.wikipedia.org/wiki/Hydrogen_turboexpander-generator

A transatlantic flight is the flight of an aircraft across the Atlantic Ocean from Europe, Africa, South Asia, or the Middle East to North America, Central America, or South America, or vice versa. Such flights have been made by fixed-wing aircraft, airships, balloons and other aircraft.

Early aircraft engines did not have the reliability needed for the crossing, nor the power to lift the required fuel. There are difficulties navigating over featureless expanses of water for thousands of miles, and the weather, especially in the North Atlantic, is unpredictable. Since the middle of the 20th century, however, transatlantic flight has become routine, for commercial, military, diplomatic, and other purposes. Experimental flights (in balloons, small aircraft, etc.) present challenges for transatlantic fliers.

History

The idea of transatlantic flight came about with the advent of the hot air balloon. The balloons of the period were inflated with coal gas, a moderate lifting medium compared to hydrogen or helium, but with enough lift to use the winds that would later be known as the Jet Stream. In 1859, John Wise built an enormous aerostat named the Atlantic, intending to cross the Atlantic. The flight lasted less than a day, crash-landing in Henderson, New York. Thaddeus S. C. Lowe prepared a massive balloon of 725,000 cubic feet (20,500 m³) called the City of New York to take off from Philadelphia in 1860, but the attempt was interrupted by the onset of the American Civil War in 1861. The first successful transatlantic flight in a balloon was the Double Eagle II from Presque Isle, Maine, to Miserey, near Paris, in 1978.

First transatlantic flights

In April 1913 the London newspaper The Daily Mail offered a prize of £10,000[1] (£470,000 in 2021[2]) to

The competition was suspended with the outbreak of World War I in 1914 but reopened after Armistice was declared in 1918.[3] The war saw tremendous advances in aerial capabilities, and a real possibility of transatlantic flight by aircraft emerged.

Between 8 and 31 May 1919, the Curtiss seaplane NC-4 made a crossing of the Atlantic flying from the U.S. to Newfoundland, then to the Azores, and on to mainland Portugal and finally the United Kingdom. The whole journey took 23 days, with six stops along the way. A trail of 53 "station ships" across the Atlantic gave the aircraft points to navigate by. This flight was not eligible for the Daily Mail prize since it took more than 72 consecutive hours and also because more than one aircraft was used in the attempt.[4]

There were four teams competing for the first non-stop flight across the Atlantic. They were Australian pilot Harry Hawker with observer Kenneth Mackenzie-Grieve in a single-engine Sopwith Atlantic; Frederick Raynham and C. W. F. Morgan in a Martinsyde; the Handley Page Group, led by Mark Kerr; and the Vickers entry of John Alcock and Arthur Whitten Brown. Each group had to ship its aircraft to Newfoundland and make a rough field for the takeoff.[5][6]

Hawker and Mackenzie-Grieve made the first attempt on 18 May, but engine failure brought them down in the ocean where they were rescued. Raynham and Morgan also made an attempt on 18 May but crashed on takeoff due to the high fuel load. The Handley Page team was in the final stages of testing its aircraft for the flight in June, but the Vickers group was ready earlier.[5][6]

The first transatlantic flight by rigid airship, and the first return transatlantic flight, was made just a couple of weeks after the transatlantic flight of Alcock and Brown, on 2 July 1919. Major George Herbert Scott of the Royal Air Force flew the airship R34 with his crew and passengers from RAF East Fortune, Scotland to Mineola, New York (on Long Island), covering a distance of about 3,000 miles (4,800 km) in about four and a half days.

Transatlantic routes

Unlike over land, transatlantic flights use standardized aircraft routes called North Atlantic Tracks (NATs). These change daily in position (although altitudes are standardized) to compensate for weather—particularly the jet stream tailwinds and headwinds, which may be substantial at cruising altitudes and have a strong influence on trip duration and fuel economy. Eastbound flights generally operate during night-time hours, while westbound flights generally operate during daytime hours, for passenger convenience. The eastbound flow, as it is called, generally makes European landfall from about 0600UT to 0900UT. The westbound flow generally operates within a 1200–1500UT time slot. Restrictions on how far a given aircraft may be from an airport also play a part in determining its route; in the past, airliners with three or more engines were not restricted, but a twin-engine airliner was required to stay within a certain distance of airports that could accommodate it (since a single engine failure in a four-engine aircraft is less crippling than a single engine failure in a twin). Modern aircraft with two engines flying transatlantic (the most common models used for transatlantic service being the Airbus A330, Boeing 767, Boeing 777 and Boeing 787) have to be ETOPS certified.

https://en.wikipedia.org/wiki/Transatlantic_flight

https://en.wikipedia.org/wiki/Max_Aitken,_1st_Baron_Beaverbrook

https://en.wikipedia.org/wiki/Edward_Wentworth_Beatty

Jet streams are fast-flowing, narrow, meandering air currents in the atmospheres of some planets, including Earth.[1] On Earth, the main jet streams are located near the altitude of the tropopause and are westerly winds (flowing west to east). Jet streams may start, stop, split into two or more parts, combine into one stream, or flow in various directions including opposite to the direction of the remainder of the jet.

Overview

The strongest jet streams are the polar jets, at 9–12 km (30,000–39,000 ft) above sea level, and the higher altitude and somewhat weaker subtropical jets at 10–16 km (33,000–52,000 ft). The Northern Hemisphere and the Southern Hemisphere each have a polar jet and a subtropical jet. The northern hemisphere polar jet flows over the middle to northern latitudes of North America, Europe, and Asia and their intervening oceans, while the southern hemisphere polar jet mostly circles Antarctica, both all year round.

Jet streams are the product of two factors: the atmospheric heating by solar radiation that produces the large-scale Polar, Ferrel, and Hadley circulation cells, and the action of the Coriolis force acting on those moving masses. The Coriolis force is caused by the planet's rotation on its axis. On other planets, internal heat rather than solar heating drives their jet streams. The Polar jet stream forms near the interface of the Polar and Ferrel circulation cells; the subtropical jet forms near the boundary of the Ferrel and Hadley circulation cells.[2]

Other jet streams also exist. During the Northern Hemisphere summer, easterly jets can form in tropical regions, typically where dry air encounters more humid air at high altitudes. Low-level jets also are typical of various regions such as the central United States. There are also jet streams in the thermosphere.

Meteorologists use the location of some of the jet streams as an aid in weather forecasting. The main commercial relevance of the jet streams is in air travel, as flight time can be dramatically affected by flying either with the flow or against it. Often, airlines work to fly 'with' the jet stream to obtain significant fuel cost and time savings. Dynamic North Atlantic Tracks are one example of how airlines and air traffic control work together to accommodate the jet stream and winds aloft in a way that gives the maximum benefit to airlines and other users. Clear-air turbulence, a potential hazard to aircraft passenger safety, is often found in a jet stream's vicinity, but it does not substantially alter flight times.

Discovery

The first indications of this phenomenon came from American professor Elias Loomis in the 1800s, when he proposed a powerful air current in the upper air blowing west to east across the United States as an explanation for the behaviour of major storms.[3] After the 1883 eruption of the Krakatoa volcano, weather watchers tracked and mapped the effects on the sky over several years. They labelled the phenomenon the "equatorial smoke stream".[4][5] In the 1920s, a Japanese meteorologist, Wasaburo Oishi, detected the jet stream from a site near Mount Fuji.[6][7] He tracked pilot balloons, also known as pibals (balloons used to determine upper level winds),[8] as they rose into the atmosphere. Oishi's work largely went unnoticed outside Japan because it was published in Esperanto. American pilot Wiley Post, the first man to fly around the world solo in 1933, is often given some credit for discovery of jet streams. Post invented a pressurized suit that let him fly above 6,200 metres (20,300 ft). In the year before his death, Post made several attempts at a high-altitude transcontinental flight, and noticed that at times his ground speed greatly exceeded his air speed.[9] German meteorologist Heinrich Seilkopf is credited with coining a special term, Strahlströmung (literally "jet current"), for the phenomenon in 1939.[10][11] Many sources credit real understanding of the nature of jet streams to regular and repeated flight-path traversals during World War II. Flyers consistently noticed westerly tailwinds in excess of 160 km/h (100 mph) in flights, for example, from the US to the UK.[12] Similarly in 1944 a team of American meteorologists in Guam, including Reid Bryson, had enough observations to forecast very high west winds that would slow World War II bombers travelling to Japan.[13]

Description

Polar jet streams are typically located near the 250 hPa (about 1/4 atmosphere) pressure level, or seven to twelve kilometres (23,000 to 39,000 ft) above sea level, while the weaker subtropical jet streams are much higher, between 10 and 16 kilometres (33,000 and 52,000 ft). Jet streams wander laterally dramatically and also change in altitude. The jet streams form near breaks in the tropopause, at the transitions between the Polar, Ferrel and Hadley circulation cells, whose circulation, with the Coriolis force acting on those masses, drives the jet streams. The Polar jets, at lower altitude, and often intruding into mid-latitudes, strongly affect weather and aviation.[14][15] The polar jet stream is most commonly found between latitudes 30° and 60° (closer to 60°), while the subtropical jet streams are located close to latitude 30°. These two jets merge at some locations and times, while at other times they are well separated. The northern Polar jet stream is said to "follow the sun" as it slowly migrates northward as that hemisphere warms, and southward again as it cools.[16][17]

The width of a jet stream is typically a few hundred kilometres or miles and its vertical thickness often less than five kilometres (16,000 feet).[18]

Jet streams are typically continuous over long distances, but discontinuities are common.[19] The path of the jet typically has a meandering shape, and these meanders themselves propagate eastward, at lower speeds than that of the actual wind within the flow. Each large meander, or wave, within the jet stream is known as a Rossby wave (planetary wave). Rossby waves are caused by changes in the Coriolis effect with latitude.[citation needed] Shortwave troughs are smaller-scale waves superimposed on the Rossby waves, with a scale of 1,000 to 4,000 kilometres (600–2,500 mi) long,[20] that move along through the flow pattern around large-scale, or longwave, "ridges" and "troughs" within Rossby waves.[21] Jet streams can split into two when they encounter an upper-level low, which diverts a portion of the jet stream under its base, while the remainder of the jet moves by to its north.

The wind speeds are greatest where temperature differences between air masses are greatest, and often exceed 92 km/h (50 kn; 57 mph).[19] Speeds of 400 km/h (220 kn; 250 mph) have been measured.[22]

The jet stream moves from west to east, bringing changes of weather.[23] Meteorologists now understand that the path of jet streams affects cyclonic storm systems at lower levels in the atmosphere, and so knowledge of their course has become an important part of weather forecasting. For example, in 2007 and 2012, Britain experienced severe flooding as a result of the polar jet staying south for the summer.[24][25][26]

Cause

In general, winds are strongest immediately under the tropopause (except locally, during tornadoes, tropical cyclones or other anomalous situations). If two air masses of different temperatures or densities meet, the resulting pressure difference caused by the density difference (which ultimately causes wind) is highest within the transition zone. The wind does not flow directly from the hot to the cold area, but is deflected by the Coriolis effect and flows along the boundary of the two air masses.[27]

All these facts are consequences of the thermal wind relation. The balance of forces acting on an atmospheric air parcel in the vertical direction is primarily between the gravitational force acting on the mass of the parcel and the buoyancy force, or the difference in pressure between the top and bottom surfaces of the parcel. Any imbalance between these forces results in the acceleration of the parcel in the imbalance direction: upward if the buoyant force exceeds the weight, and downward if the weight exceeds the buoyancy force. The balance in the vertical direction is referred to as hydrostatic. Beyond the tropics, the dominant forces act in the horizontal direction, and the primary struggle is between the Coriolis force and the pressure gradient force. Balance between these two forces is referred to as geostrophic. Given both hydrostatic and geostrophic balance, one can derive the thermal wind relation: the vertical gradient of the horizontal wind is proportional to the horizontal temperature gradient. If two air masses, one cold and dense to the north and the other hot and less dense to the south, are separated by a vertical boundary and that boundary is removed, the difference in densities will result in the cold air mass slipping under the hotter and less dense air mass. The Coriolis effect will then cause poleward-moving mass to deviate toward the east, while equatorward-moving mass will deviate toward the west. The general trend in the atmosphere is for temperatures to decrease in the poleward direction. As a result, winds develop an eastward component and that component grows with altitude. Therefore, the strong eastward-moving jet streams are in part a simple consequence of the fact that the Equator is warmer than the North and South Poles.[27]
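The derivation described in this paragraph can be written compactly. The following is a sketch in pressure coordinates, a common textbook form rather than one given in the source. The symbols are assumptions introduced here: $\Phi$ is the geopotential, $p$ the pressure, $T$ the temperature, $R$ the gas constant for dry air, $f$ the Coriolis parameter, $u_g$ and $v_g$ the eastward and northward geostrophic wind components, and $x$ and $y$ the eastward and northward coordinates.

$$
\frac{\partial \Phi}{\partial \ln p} = -R\,T \quad \text{(hydrostatic)}, \qquad
f\,u_g = -\frac{\partial \Phi}{\partial y}, \quad f\,v_g = \frac{\partial \Phi}{\partial x} \quad \text{(geostrophic)}
$$

Differentiating the geostrophic relations with respect to $\ln p$ and substituting the hydrostatic relation gives the thermal wind relation:

$$
\frac{\partial u_g}{\partial \ln p} = \frac{R}{f}\left(\frac{\partial T}{\partial y}\right)_p, \qquad
\frac{\partial v_g}{\partial \ln p} = -\frac{R}{f}\left(\frac{\partial T}{\partial x}\right)_p
$$

In the Northern Hemisphere ($f > 0$), temperature falling toward the pole means $\partial T/\partial y < 0$, so $u_g$ increases as pressure decreases: the eastward wind strengthens with height, as the paragraph concludes.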

Polar jet stream

The thermal wind relation does not explain why the winds are organized into tight jets, rather than distributed more broadly over the hemisphere. One factor that contributes to the creation of a concentrated polar jet is the undercutting of sub-tropical air masses by the more dense polar air masses at the polar front. This causes a sharp north-south pressure (south-north potential vorticity) gradient in the horizontal plane, an effect which is most significant during double Rossby wave breaking events.[28] At high altitudes, lack of friction allows air to respond freely to the steep pressure gradient with low pressure at high altitude over the pole. This results in the formation of planetary wind circulations that experience a strong Coriolis deflection and thus can be considered 'quasi-geostrophic'. The polar front jet stream is closely linked to the frontogenesis process in midlatitudes, as the acceleration/deceleration of the air flow induces areas of low/high pressure respectively, which link to the formation of cyclones and anticyclones along the polar front in a relatively narrow region.[19]

Subtropical jet

A second factor which contributes to a concentrated jet is more applicable to the subtropical jet which forms at the poleward limit of the tropical Hadley cell, and to first order this circulation is symmetric with respect to longitude. Tropical air rises to the tropopause, and moves poleward before sinking; this is the Hadley cell circulation. As it does so it tends to conserve angular momentum, since friction with the ground is slight. Air masses that begin moving poleward are deflected eastward by the Coriolis force (true for either hemisphere), which for poleward moving air implies an increased westerly component of the winds[29] (note that deflection is leftward in the southern hemisphere).
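The angular-momentum argument above can be turned into a number. The sketch below is an idealisation that is not in the source: it assumes a ring of air starts at rest relative to the ground at the Equator and moves poleward with no friction or mixing at all, so that its absolute angular momentum per unit mass, $(\Omega a \cos\varphi + u)\,a\cos\varphi$, stays equal to $\Omega a^2$. The function name and constants are illustrative.

```python
import math

OMEGA = 7.292e-5        # Earth's rotation rate in rad/s
EARTH_RADIUS_M = 6.371e6  # mean Earth radius in metres


def zonal_wind_from_equator(lat_deg):
    """Westerly wind (m/s) of air that left the Equator at rest and
    conserved angular momentum on its way to latitude lat_deg.

    Solving (OMEGA*a*cos(phi) + u) * a*cos(phi) = OMEGA*a**2 for u
    gives u = OMEGA * a * sin(phi)**2 / cos(phi).
    """
    phi = math.radians(lat_deg)
    return OMEGA * EARTH_RADIUS_M * math.sin(phi) ** 2 / math.cos(phi)


for lat in (0, 10, 20, 30):
    print(f"{lat:2d} deg: {zonal_wind_from_equator(lat):6.1f} m/s")
```

Because the result depends on the square of the sine of latitude, it is westerly in both hemispheres, in line with the text. Under these assumptions the wind at 30° exceeds 130 m/s; observed subtropical jets are generally weaker than this frictionless limit.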

Other planets

Jupiter's atmosphere has multiple jet streams, caused by the convection cells that form the familiar banded color structure; on Jupiter, these convection cells are driven by internal heating.[22] The factors that control the number of jet streams in a planetary atmosphere are an active area of research in dynamical meteorology. In models, as one increases the planetary radius, holding all other parameters fixed,[clarification needed] the number of jet streams decreases.[citation needed]

Some effects

Hurricane protection

The subtropical jet stream rounding the base of the mid-oceanic upper trough is thought[30] to be one of the reasons most of the Hawaiian Islands have been resistant to the long list of Hawaii hurricanes that have approached. For example, when Hurricane Flossie (2007) approached and dissipated just before reaching landfall, the U.S. National Oceanic and Atmospheric Administration (NOAA) cited vertical wind shear as evidenced in the photo.[30]

Uses

On Earth, the northern polar jet stream is the most important one for aviation and weather forecasting, as it is much stronger and at a much lower altitude than the subtropical jet streams and also covers many countries in the Northern Hemisphere, while the southern polar jet stream mostly circles Antarctica and sometimes the southern tip of South America. Thus, the term jet stream in these contexts usually implies the northern polar jet stream.

Aviation

The location of the jet stream is extremely important for aviation. Commercial use of the jet stream began on 18 November 1952, when Pan Am flew from Tokyo to Honolulu at an altitude of 7,600 metres (24,900 ft). It cut the trip time by over one-third, from 18 to 11.5 hours.[31] Not only does it cut time off the flight, it also nets fuel savings for the airline industry.[32][33] Within North America, the time needed to fly east across the continent can be decreased by about 30 minutes if an airplane can fly with the jet stream, or increased by more than that amount if it must fly west against it.
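The arithmetic behind these figures is simple enough to sketch. The first part checks the "over one-third" saving quoted for the Pan Am flight using the 18 and 11.5 hour figures from the text. The second part uses purely hypothetical numbers (a 4,000 km sector, 800 km/h airspeed and a 100 km/h wind along the track) to show why a headwind costs more time than an equal tailwind saves, which is the asymmetry the paragraph describes for flights across North America.

```python
def flight_time_hours(distance_km, airspeed_kmh, tailwind_kmh=0.0):
    """Time to cover distance_km when the wind along track is tailwind_kmh
    (negative values mean a headwind). Ignores climb, descent and routing."""
    ground_speed = airspeed_kmh + tailwind_kmh
    if ground_speed <= 0:
        raise ValueError("ground speed must be positive")
    return distance_km / ground_speed


# Figures from the text: 18 hours reduced to 11.5 hours.
pan_am_saving = (18.0 - 11.5) / 18.0
print(f"Pan Am saving: {pan_am_saving:.1%}")

# Hypothetical sector, chosen only for illustration.
still_air = flight_time_hours(4000, 800)
with_jet = flight_time_hours(4000, 800, tailwind_kmh=100)
against_jet = flight_time_hours(4000, 800, tailwind_kmh=-100)

time_saved = still_air - with_jet
time_lost = against_jet - still_air
print(f"saved with tailwind: {time_saved * 60:.0f} min")
print(f"lost with headwind:  {time_lost * 60:.0f} min")
```

The asymmetry arises because flight time varies with the inverse of ground speed, so subtracting a given wind speed changes the time more than adding it does.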

Associated with jet streams is a phenomenon known as clear-air turbulence (CAT), caused by the vertical and horizontal wind shear that jet streams produce.[34] The CAT is strongest on the cold air side of the jet,[35] next to and just under the axis of the jet.[36] Clear-air turbulence can cause aircraft to plunge and so presents a passenger safety hazard that has caused fatal accidents, such as the death of one passenger on United Airlines Flight 826.[37][38]

Possible future power generation

Scientists are investigating ways to harness the wind energy within the jet stream. According to one estimate of the potential wind energy in the jet stream, only one percent would be needed to meet the world's current energy needs. The required technology would reportedly take 10–20 years to develop.[39] There are two major but divergent scientific articles about jet stream power. Archer & Caldeira[40] claim that the Earth's jet streams could generate a total power of 1700 terawatts (TW) and that the climatic impact of harnessing this amount would be negligible. However, Miller, Gans, & Kleidon[41] claim that the jet streams could generate a total power of only 7.5 TW and that the climatic impact would be catastrophic.

Unpowered aerial attack

Near the end of World War II, from late 1944 until early 1945, the Japanese Fu-Go balloon bomb, a type of fire balloon, was designed as a cheap weapon intended to make use of the jet stream over the Pacific Ocean to reach the west coast of Canada and the United States. The balloons were relatively ineffective as weapons, but they were used in one of the few attacks on North America during World War II, causing six deaths and a small amount of damage.[42] However, the Japanese were world leaders in biological weapons research at this time. The Japanese Imperial Army's Noborito Institute cultivated anthrax and plague (Yersinia pestis); furthermore, it produced enough cowpox viruses to infect the entire United States.[43] The deployment of these biological weapons on fire balloons was planned in 1944.[44] Emperor Hirohito did not permit deployment of biological weapons on the basis of a report of President Staff Officer Umezu on 25 October 1944. Consequently, biological warfare using Fu-Go balloons was not implemented.[45]

Dust Bowl

Evidence suggests the jet stream was at least partly responsible for the widespread drought conditions during the 1930s Dust Bowl in the Midwest United States. Normally, the jet stream flows east over the Gulf of Mexico and turns northward pulling up moisture and dumping rain onto the Great Plains. During the Dust Bowl, the jet stream weakened and changed course traveling farther south than normal. This starved the Great Plains and other areas of the Midwest of rainfall, causing extraordinary drought conditions.[58]

https://en.wikipedia.org/wiki/Jet_stream

Notable transatlantic flights and attempts

1910s

- Airship America failure

- In October 1910, the American journalist Walter Wellman, who had in 1909 attempted to reach the North Pole by balloon, set out for Europe from Atlantic City in a dirigible, America. A storm off Cape Cod sent him off course, and then engine failure forced him to ditch halfway between New York and Bermuda. Wellman, his crew of five – and the balloon's cat – were rescued by RMS Trent, a passing British ship. The Atlantic bid failed, but the distance covered, about 1,000 statute miles (1,600 km), was at the time a record for a dirigible.[61]

- First transatlantic flight

- On 8–31 May 1919, the U.S. Navy Curtiss NC-4 flying boat, under the command of Albert Read, flew 4,526 statute miles (7,284 km) from Rockaway, New York, to Plymouth (England), via among other stops Trepassey (Newfoundland), Horta and Ponta Delgada (both Azores) and Lisbon (Portugal) in 53h 58m, spread over 23 days. The crossing from Newfoundland to the European mainland had taken 10 days 22 hours, with the total time in flight of 26h 46m. The longest non-stop leg of the journey, from Trepassey, Newfoundland, to Horta in the Azores, was 1,200 statute miles (1,900 km) and lasted 15h 18m.

- Sopwith Atlantic failure

- On 18 May 1919, the Australian Harry Hawker, together with navigator Kenneth Mackenzie Grieve, attempted to become the first to achieve a non-stop flight across the Atlantic Ocean. They set off from Mount Pearl, Newfoundland, in the Sopwith Atlantic biplane. After fourteen and a half hours of flight the engine overheated and they were forced to divert towards the shipping lanes: they found a passing freighter, the Danish Mary, established contact and crash-landed ahead of her. Mary's radio was out of order, so that it was not until six days later when the boat reached Scotland that word was received that they were safe. The wheels from the undercarriage, jettisoned soon after takeoff, were later recovered by local fishermen and are now in the Newfoundland Museum in St. John's.[62]

- First non-stop transatlantic flight

- On 14–15 June 1919, Capt. John Alcock and Lieut. Arthur Whitten Brown of the United Kingdom flew a Vickers Vimy bomber between islands, 1,960 nautical miles (3,630 km), from St. John's, Newfoundland, to Clifden, Ireland, in 16h 12m.

- First east-to-west transatlantic flight

- On 2 July 1919, Major George Herbert Scott of the Royal Air Force, with his crew and passengers, flew from RAF East Fortune, Scotland to Mineola, New York (on Long Island) in airship R34, covering a distance of about 3,000 statute miles (4,800 km) in about four and a half days. R34 then made the return trip to England, arriving at RNAS Pulham in 75 hours, thus also completing the first double crossing of the Atlantic (east-west-east).

1920s

- First flight across the South Atlantic

- On 30 March–17 June 1922, Lieutenant Commander Sacadura Cabral and Commander Gago Coutinho of Portugal flew from Lisbon, Portugal, to Rio de Janeiro, Brazil, using three Fairey IIID floatplanes (Lusitania, Portugal, and Santa Cruz) after two ditchings, and with only internal means of navigation (the Coutinho-invented sextant with artificial horizon).[63]

- First non-stop aircraft flight between European and American mainlands

- In October 1924, the Zeppelin LZ-126 (later known as ZR-3 USS Los Angeles), flew from Germany to New Jersey with a crew commanded by Dr. Hugo Eckener, covering a distance of about 4,000 statute miles (6,400 km).[64]

- First night-time flight across the Atlantic

- On the night of 16–17 April 1927, the Portuguese aviators Sarmento de Beires, Jorge de Castilho and Manuel Gouveia, flew from the Bijagós islands, Portuguese Guinea to Fernando de Noronha island, Brazil in the Dornier Wal flying boat Argos.

- First flight across the South Atlantic made by a non-European crew

- On 28 April 1927, Brazilian João Ribeiro de Barros, with the assistance of João Negrão (co-pilot), Newton Braga (navigator), and Vasco Cinquini (mechanic), crossed the Atlantic in the hydroplane Jahú. The four aviators flew from Genoa, in Italy, to Santo Amaro (São Paulo), making stops in Spain, Gibraltar, Cape Verde and Fernando de Noronha, in the Brazilian territory.

- Disappearance of L'Oiseau Blanc

- On 8–9 May 1927, Charles Nungesser and François Coli attempted to cross the Atlantic from Paris to the US in a Levasseur PL-8 biplane L'Oiseau Blanc ("The White Bird"), but were lost.

- First solo transatlantic flight and first non-stop fixed-wing aircraft flight between America and mainland Europe

- On 20–21 May 1927, Charles A. Lindbergh flew his Ryan monoplane (named Spirit of St. Louis), 3,600 nautical miles (6,700 km), from Roosevelt Field, New York to Paris–Le Bourget Airport, in 33½ hours.

- First transatlantic air passenger

- On 4–6 June 1927, the first transatlantic air passenger was Charles A. Levine. He was carried as a passenger by Clarence D. Chamberlin from Roosevelt Field, New York, to Eisleben, Germany, in a Wright-powered Bellanca.

- First non-stop air crossing of the South Atlantic

- On 14–15 October 1927, Dieudonne Costes and Joseph Le Brix, flying a Breguet 19, flew from Senegal to Brazil.

- First non-stop fixed-wing aircraft westbound flight over the North Atlantic

- On 12–13 April 1928, Ehrenfried Günther Freiherr von Hünefeld and Capt. Hermann Köhl of Germany and Comdr. James Fitzmaurice of Ireland, flew a Junkers W33 monoplane (named Bremen), 2,070 statute miles (3,330 km), from Baldonnell near Dublin, Ireland, to Labrador, in 36½ hours.[65]

- First crossing of the Atlantic by a woman

- On 17–18 June 1928, Amelia Earhart was a passenger on an aircraft piloted by Wilmer Stultz. Since most of the flight was on instruments for which Earhart had no training, she did not pilot the aircraft. Interviewed after landing, she said, "Stultz did all the flying – had to. I was just baggage, like a sack of potatoes. Maybe someday I'll try it alone."

- Notable flight (around the world)

- On 1–8 August 1929, in making the circumnavigation, Dr Hugo Eckener piloted the LZ 127 Graf Zeppelin across the Atlantic three times: from Germany 4,391 statute miles (7,067 km) east to west in four days from 1 August; return 4,391 statute miles (7,067 km) west to east in two days from 8 August; after completing the circumnavigation to Lakehurst, a final 4,391 statute miles (7,067 km) west to east landing 4 September, making three crossings in 34 days.[66]

1930s[edit]

- First scheduled transatlantic passenger flights

- From 1931 onwards, LZ 127 Graf Zeppelin operated the world's first scheduled transatlantic passenger flights, mainly between Germany and Brazil (64 such round trips overall) sometimes stopping in Spain, Miami, London, and Berlin.

- First nonstop east-to-west fixed-wing aircraft flight between European and American mainlands

- On 1–2 September 1930, Dieudonne Costes and Maurice Bellonte flew a Breguet 19 Super Bidon biplane (named Point d'Interrogation, Question Mark), 6,200 km from Paris to New York City.

- First non-stop flight to exceed 5,000 miles distance

- On 28–30 July 1931, Russell Norton Boardman and John Louis Polando flew a Bellanca Special J-300 high-wing monoplane named the Cape Cod from New York City's Floyd Bennett Field to Istanbul in 49 hours 20 minutes, crossing the entire North Atlantic and much of the Mediterranean Sea and establishing a straight-line distance record of 5,011.8 miles (8,065.7 km).[67][68]

- First solo crossing of the South Atlantic

- 27–28 November 1931. Bert Hinkler flew from Canada to New York, then via the West Indies, Venezuela, Guiana, Brazil and the South Atlantic to Great Britain in a de Havilland Puss Moth.[69]

- First solo crossing of the Atlantic by a woman

- On 20 May 1932, Amelia Earhart set off from Harbour Grace, Newfoundland, intending to fly to Paris in her single engine Lockheed Vega 5b to emulate Charles Lindbergh's solo flight. After encountering storms and a burnt exhaust pipe, Earhart landed in a pasture at Culmore, north of Derry, Northern Ireland, ending a flight lasting 14h 56m.

- First solo westbound crossing of the Atlantic

- On 18–19 August 1932, Jim Mollison, flying a de Havilland Puss Moth, flew from Dublin to New Brunswick.

- Lightest (empty weight) aircraft that crossed the Atlantic

- On 7–8 May 1933, Stanisław Skarżyński made a solo flight across the South Atlantic, covering 3,582 kilometres (2,226 mi), in a RWD-5bis with an empty weight below 450 kilograms (990 lb). If total takeoff weight is considered instead (as in FAI records), a longer Atlantic crossing exists: the distance world record holder in the 1000–1750 kg class, a Piper PA-24 Comanche.

- Mass flight

- Notable mass transatlantic flight: On 1–15 July 1933, Gen. Italo Balbo of Italy led 24 Savoia-Marchetti S.55X seaplanes 6,100 statute miles (9,800 km), in a flight from Orbetello, Italy, to the Century of Progress International Exposition Chicago, Illinois, in 47h 52m. The flight made six intermediate stops. Previously, Balbo had led a flight of 12 flying boats from Rome to Rio de Janeiro, Brazil, in December 1930 – January 1931, taking nearly a month.

- First solo westbound crossing of the Atlantic by a woman and first person to solo westbound from England

- On 4–5 September 1936, Beryl Markham, flying a Percival Vega Gull from Abingdon (then in Berkshire, now Oxfordshire), intended to fly to New York, but was forced down at Cape Breton Island, Nova Scotia, due to icing of fuel tank vents.

- First transatlantic passenger service on heavier-than-air aircraft

- On 24 June 1939, Pan American inaugurated transatlantic passenger service between New York and Marseilles, France, using Boeing 314 flying boats. On 8 July 1939, a service began between New York and Southampton as well. A single fare was US$375.00 (US$6,909.00 in 2019 dollars). Scheduled landplane flights started in October 1945.

1940s[edit]

- First transatlantic flight of non-rigid airships

- On 1 June 1944, two K class blimps from Blimp Squadron 14 of the United States Navy (USN) completed the first transatlantic crossing by non-rigid airships.[70] On 28 May 1944, the two K-ships (K-123 and K-130) left South Weymouth, Massachusetts, and flew approximately 16 hours to Naval Station Argentia, Newfoundland. From Argentia, the blimps flew approximately 22 hours to Lajes Field on Terceira Island in the Azores. The final leg of the first transatlantic crossing was about a 20-hour flight from the Azores to Craw Field in Port Lyautey (Kenitra), French Morocco.[71]

- First jet aircraft to cross the Atlantic Ocean

- On 14 July 1948, six de Havilland Vampire F3s of No. 54 Squadron RAF, commanded by Wing Commander D S Wilson-MacDonald, DSO, DFC, flew via Stornoway, Iceland, and Labrador to Montreal on the first leg of a goodwill tour of the U.S. and Canada.

1950s[edit]

- First jet aircraft to make a non-stop transatlantic flight

- On 21 February 1951, an RAF English Electric Canberra B Mk 2 (serial number WD932) flown by Squadron Leader A Callard of the Aeroplane & Armament Experimental Establishment, flew from Aldergrove, Northern Ireland, to Gander, Newfoundland. The flight covered almost 1,800 nautical miles (3,300 km) in 4h 37m. The aircraft was being flown to the U.S. to act as a pattern aircraft for the Martin B-57 Canberra.

- First jet aircraft transatlantic passenger service

- On 4 October 1958, British Overseas Airways Corporation (BOAC) flew the first jet airliner service using the de Havilland Comet, when G-APDC initiated the first transatlantic Comet 4 service and the first scheduled transatlantic passenger jet service in history, flying from London to New York with a stopover at Gander.

https://en.wikipedia.org/wiki/Transatlantic_flight

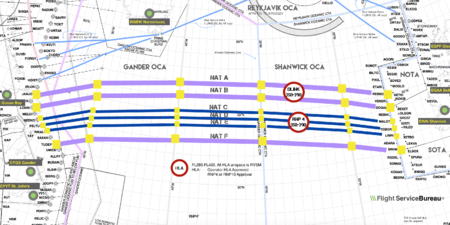

North Atlantic Tracks, officially titled the North Atlantic Organised Track System (NAT-OTS), is a structured set of transatlantic flight routes that stretch from the northeast of North America to western Europe across the Atlantic Ocean, within the North Atlantic airspace region. They ensure that aircraft are separated over the ocean, where there is little radar coverage. These heavily travelled routes are used by aircraft flying between North America and Europe, operating between the altitudes of 29,000 and 41,000 ft (8,800 and 12,500 m) inclusive. Entrance and movement along these tracks is controlled by special oceanic control centres to maintain separation between aircraft. The primary purpose of these routes is to allow air traffic control to separate aircraft effectively. Because of the volume of NAT traffic, allowing aircraft to choose their own co-ordinates would make the ATC task far more complex. The tracks are aligned so as to minimize headwinds and maximize tailwinds, which reduces fuel burn and flight time. To make such efficiencies possible, the routes are created twice daily to take account of the shifting winds aloft and the principal traffic flow: eastward in the North American evening and westward twelve hours later.

History[edit]

The first implementation of an organised track system across the North Atlantic was in fact for commercial shipping, dating back to 1898 when the North Atlantic Track Agreement was signed. After World War II, increasing commercial airline traffic across the North Atlantic led to difficulties for ATC in separating aircraft effectively, and so in 1961 the first occasional use of NAT Tracks was made. In 1965, the publication of NAT Tracks became a daily feature, allowing controllers to force traffic onto fixed track structures in order to effectively separate the aircraft by time, altitude, and latitude.[1] In 1966, the two agencies at Shannon and Prestwick merged to become Shanwick, with responsibility out to 30°W longitude; according to the official document "From 1st April, 1966, such a communications service between such aircraft and the said air traffic control centres as has before that date been provided by the radio stations at Ballygirreen in Ireland and Birdlip in the United Kingdom will be provided between such aircraft and the said air traffic control centre at Prestwick or such other air traffic control centre in the United Kingdom as may from time to time be nominated".[2]

Other historical dates include:

- 1977 – MNPS Introduced

- 1981 – Longitudinal separation reduced to 10 minutes

- 1996 – GPS approved for navigation on NAT; OMEGA withdrawn

- 1997 – RVSM introduced on the NAT

- 2006 – CPDLC overtakes HF as primary comms method

- 2011 – Longitudinal separation reduced to 5 minutes

- 2015 – RLAT introduced[1]

Route planning[edit]

The specific routing of the tracks is dictated based on a number of factors, the most important being the jetstream—aircraft going from North America to Europe experience tailwinds caused by the jetstream. Tracks to Europe use the jetstream to their advantage by routing along the strongest tailwinds. Because of the difference in ground speed caused by the jetstream, westbound flights tend to be longer in duration than their eastbound counterparts. North Atlantic Tracks are published by Shanwick Centre (EGGX) and Gander Centre (CZQX), in consultation with other adjacent air traffic control agencies and airlines.[citation needed]
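The effect of the jetstream on flight duration can be illustrated with simple ground-speed arithmetic. The sketch below is only illustrative: the distance, true airspeed, and wind component are assumed round numbers, not figures from the source, and it pessimistically applies the full jetstream as a headwind on the westbound leg, whereas real westbound tracks are routed to avoid the strongest winds.

```python
def flight_time_hours(distance_nmi, true_airspeed_kt, wind_component_kt):
    """Time en route; a positive wind component is a tailwind, negative a headwind."""
    ground_speed_kt = true_airspeed_kt + wind_component_kt
    if ground_speed_kt <= 0:
        raise ValueError("ground speed must be positive")
    return distance_nmi / ground_speed_kt


# Assumed, illustrative values (not taken from the source).
DISTANCE_NMI = 3000.0
TRUE_AIRSPEED_KT = 480.0
JETSTREAM_KT = 80.0

eastbound = flight_time_hours(DISTANCE_NMI, TRUE_AIRSPEED_KT, +JETSTREAM_KT)
westbound = flight_time_hours(DISTANCE_NMI, TRUE_AIRSPEED_KT, -JETSTREAM_KT)

print(f"eastbound: {eastbound:.2f} h, westbound: {westbound:.2f} h")
```

Because the wind adds to ground speed in one direction and subtracts in the other, the same airspeed over the same distance gives a noticeably longer westbound flight.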

The day prior to the tracks being published, airlines that fly the North Atlantic regularly send a preferred route message (PRM) to Gander and Shanwick. This allows the ATC agency to know what the route preferences are of the bulk of the North Atlantic traffic.

Provision of North Atlantic Track air traffic control[edit]

Air traffic controllers responsible for the Shanwick flight information region (FIR) are based at the Shanwick Oceanic Control Centre at Prestwick Centre in Ayrshire, Scotland. Air traffic controllers responsible for the Gander FIR are based at the Gander Oceanic Control Centre in Gander, Newfoundland and Labrador, Canada.[citation needed]

Flight planning[edit]

Using a NAT Track, even when the tracks are active in the direction an aircraft is flying, is not mandatory. However, an aircraft that does not use one is likely to receive a less than optimum altitude assignment or a reroute. Therefore, most operators choose to file a flight plan on a NAT Track. The correct method is to file a flight plan with an Oceanic Entry Point (OEP), then the name of the NAT Track, e.g. "NAT A" for NAT Track Alpha, and the Oceanic Exit Point (OXP).[citation needed]

A typical routing would be: DCT KONAN UL607 EVRIN DCT MALOT/M081F350 DCT 53N020W 52N030W NATA JOOPY/N0462F360 N276C TUSKY DCT PLYMM. Oceanic boundary points for the NAT Tracks are along the FIR boundary of Gander on the west side, and Shanwick on the east side.[citation needed]

While the routes change daily, they maintain a series of entrance and exit waypoints which link into the airspace system of North America and Europe. Each route is uniquely identified by a letter of the alphabet. Westbound tracks (valid from 11:30 UTC to 19:00 UTC at 30W) are indicated by the letters A,B,C,D etc. (as far as M if necessary, omitting I), where A is the northernmost track, and eastbound tracks (valid from 01:00 UTC to 08:00 UTC at 30W) are indicated by the letters Z,Y,X,W etc. (as far as N if necessary, omitting O), where Z is the southernmost track. Waypoints on the route are identified by named waypoints (or "fixes") and by the crossing of degrees of latitude and longitude (such as "54/40", indicating 54°N latitude, 40°W longitude).[citation needed]
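The lettering convention described above can be sketched as a small helper. The function name `track_letters` and its interface are hypothetical, introduced only for illustration; the letter ranges and the omitted letters follow the text.

```python
import string


def track_letters(direction, count):
    """Return the identifying letters for `count` tracks flowing in `direction`."""
    if direction == "westbound":
        # A..M without I; the first letter is the northernmost track.
        pool = [c for c in string.ascii_uppercase[:13] if c != "I"]
    elif direction == "eastbound":
        # Z..N without O; the first letter is the southernmost track.
        pool = [c for c in reversed(string.ascii_uppercase[13:]) if c != "O"]
    else:
        raise ValueError("direction must be 'westbound' or 'eastbound'")
    if not 0 <= count <= len(pool):
        raise ValueError(f"count must be between 0 and {len(pool)}")
    return pool[:count]


print(track_letters("westbound", 5))  # ['A', 'B', 'C', 'D', 'E']
print(track_letters("eastbound", 4))  # ['Z', 'Y', 'X', 'W']
```

Under this convention each direction has at most twelve letters available.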

A ‘random route’ must have a waypoint every 10 degrees of longitude. Aircraft can also join an outer track half way along.[citation needed]

Since 2017, aircraft can plan any flight level in the NAT HLA (high level airspace), with no need to follow ICAO standard cruising levels.[3]

Flying the routes[edit]

Prior to departure, airline flight dispatchers/flight operations officers will determine the best track based on destination, aircraft weight, aircraft type, prevailing winds and air traffic control route charges. The aircraft will then contact the Oceanic Center controller before entering oceanic airspace and request the track giving the estimated time of arrival at the entry point. The Oceanic Controllers then calculate the required separation distances between aircraft and issue clearances to the pilots. It may be that the track is not available at that altitude or time so an alternate track or altitude will be assigned. Aircraft cannot change assigned course or altitude without permission.

Contingency plans exist within the North Atlantic Track system to account for any operational issues that occur. For example, if an aircraft can no longer maintain the speed or altitude it was assigned, the aircraft can move off the track route and fly parallel to its track, but well away from other aircraft. Also, pilots on North Atlantic Tracks are required to inform air traffic control of any deviations in altitude or speed necessitated by avoiding weather, such as thunderstorms or turbulence.

Despite advances in navigation technology, such as GPS and LNAV, errors can and do occur. While such errors are typically not dangerous, they can cause two aircraft to violate separation requirements. On a busy day, aircraft are spaced approximately 10 minutes apart. With the introduction of TCAS, aircraft traveling along these tracks can monitor the relative position of other aircraft, thereby increasing the safety of all track users.

Since there is little radar coverage in the middle of the Atlantic, aircraft must report in as they cross various waypoints along each track, along with their anticipated crossing time of the next waypoint, and the waypoint after that. These reports enable the Oceanic Controllers to maintain separation between aircraft. These reports can be made to dispatchers via a satellite communications link (CPDLC) or via high frequency (HF) radios. In the case of HF reports, each aircraft operates using SELCAL (selective calling). The use of SELCAL allows an aircraft crew to be notified of incoming communications even when the aircraft's radio has been muted. Thus, crew members need not devote their attention to continuous radio listening. If the aircraft is equipped with automatic dependent surveillance (ADS-C and ADS-B), voice position reports on HF are no longer necessary, as automatic reports are downlinked to the Oceanic Control Centre. In this case, a SELCAL check only has to be performed when entering the oceanic area and with any change in radio frequency, to ensure a working backup system in the event of a datalink failure.

Maximizing traffic capacity[edit]

Increased aircraft density can be achieved by allowing closer vertical spacing of aircraft through participation in the RVSM program.[citation needed]

Additionally from 10 June 2004 the strategic lateral offset procedure (SLOP) was introduced to the North Atlantic airspace to reduce the risk of mid-air collision by spreading out aircraft laterally. It reduces the risk of collision for non-normal events such as operational altitude deviation errors and turbulence induced altitude deviations. In essence, the procedure demands that aircraft in North Atlantic airspace fly track centreline or one or two nautical mile offsets to the right of centreline only. However, the choice is left up to the pilot.[citation needed]

On 12 November 2015, a new procedure allowing for reduced lateral separation minima (RLAT) was introduced. RLAT reduces the standard distance between NAT tracks from 60 to 30 nautical miles (69 to 35 mi; 111 to 56 km), or from one whole degree of latitude to a half degree. This allows more traffic to operate on the most efficient routes, reducing fuel cost. The first RLAT tracks were published in December 2015.
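The equivalence between degrees of latitude and track spacing quoted above can be checked with a few lines of arithmetic. This is a minimal sketch that assumes the usual approximation of 60 nautical miles per degree of latitude; the function name `track_spacing` is hypothetical.

```python
NMI_PER_DEGREE_LATITUDE = 60.0  # one arc-minute of latitude is about 1 nmi
KM_PER_NMI = 1.852              # exact, by definition of the nautical mile


def track_spacing(degrees_latitude):
    """Return the spacing between adjacent tracks as (nmi, km)."""
    nmi = degrees_latitude * NMI_PER_DEGREE_LATITUDE
    return nmi, nmi * KM_PER_NMI


for label, degrees in (("pre-RLAT", 1.0), ("RLAT", 0.5)):
    nmi, km = track_spacing(degrees)
    print(f"{label}: {nmi:.0f} nmi = {km:.0f} km")
```

Halving the latitude spacing doubles the number of tracks that can fit in the same band of latitude, which is where the extra capacity on the most efficient routes comes from.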

The tracks reverse direction twice daily. In the daylight, all traffic on the tracks operates in a westbound flow. At night, the tracks flow eastbound towards Europe. This is done to accommodate traditional airline schedules, with departures from North America to Europe scheduled for departure in the evening thereby allowing passengers to arrive at their destination in the morning. Westbound departures typically leave Europe between early morning to late afternoon and arrive in North America from early afternoon to late evening. In this manner, a single aircraft can be efficiently utilized by flying to Europe at night and to North America in the day. The tracks are updated daily and their position may alternate on the basis of a variety of variable factors, but predominantly due to weather systems.[citation needed]

The FAA, Nav Canada, NATS and the JAA publish a NOTAM daily with the routes and flight levels to be used in each direction of travel.[citation needed] The current tracks are available online.

Space-based ADS-B[edit]

At the end of March 2019, Nav Canada and the UK’s National Air Traffic Services (NATS) activated the Aireon space-based ADS-B system, in which aircraft position reports are relayed every few seconds to air traffic control centers by Iridium satellites orbiting 450 nmi (830 km) high. Aircraft separation can be lowered from 40 nmi (74 km) longitudinally to 14–17 nmi (26–31 km), while lateral separations will be reduced from 23 to 19 nmi (43 to 35 km) in October and to 15 nmi (28 km) in November 2020. In the three following months, 31,700 flights could fly at their optimum speeds, saving up to 400–650 kg (880–1,430 lb) of fuel per crossing. Capacity is increased as NATS expects 16% more flights by 2025, while predicting that 10% of traffic will use the Organized Track System in the coming years, down from 38% today[when?].[4]

In 2018, 500,000 flights went through; annual fuel savings are expected around 38,800 t (85,500,000 lb), and may improve later.[5]

Concorde[edit]

Concorde did not travel on the North Atlantic Tracks as it flew between 45,000 and 60,000 ft (14,000 and 18,000 m), a much higher altitude than subsonic airliners. The weather variations at these altitudes were so minor that Concorde followed the same track each day. These fixed tracks were known as 'Track Sierra Mike' (SM) and 'Track Sierra Oscar' (SO) for westbound flights and 'Track Sierra November' (SN) for eastbounds. An additional route, 'Track Sierra Papa' (SP), was used for seasonal British Airways flights from London Heathrow to/from Barbados.[citation needed]

https://en.wikipedia.org/wiki/North_Atlantic_Tracks

Longer-term climatic changes[edit]

Climate scientists have hypothesized that the jet stream will gradually weaken as a result of global warming. Trends such as Arctic sea ice decline, reduced snow cover, evapotranspiration patterns, and other weather anomalies have caused the Arctic to heat up faster than other parts of the globe (polar amplification). This in turn reduces the temperature gradient that drives jet stream winds, which may eventually cause the jet stream to become weaker and more variable in its course.[59][60][61][62][63][64][65] As a consequence, extreme winter weather is expected to become more frequent. With a weaker jet stream, the polar vortex is more likely to leak out of the polar area and bring extremely cold weather to the middle latitude regions.

Since 2007, and particularly in 2012 and early 2013, the jet stream has been at an abnormally low latitude across the UK, lying closer to the English Channel, around 50°N rather than its more usual north of Scotland latitude of around 60°N.[failed verification] However, between 1979 and 2001, the average position of the jet stream moved northward at a rate of 2.01 kilometres (1.25 mi) per year across the Northern Hemisphere. Across North America, this type of change could lead to drier conditions across the southern tier of the United States and more frequent and more intense tropical cyclones in the tropics. A similar slow poleward drift was found when studying the Southern Hemisphere jet stream over the same time frame.[66]

Other upper-level jets[edit]

Polar night jet[edit]

The polar-night jet stream forms mainly during the winter months when the nights are much longer, hence polar nights, in their respective hemispheres at around 60° latitude. The polar night jet moves at a greater height (about 24,000 metres (80,000 ft)) than it does during the summer.[67] During these dark months the air high over the poles becomes much colder than the air over the Equator. This difference in temperature gives rise to extreme air pressure differences in the stratosphere, which, when combined with the Coriolis effect, create the polar night jets, that race eastward at an altitude of about 48 kilometres (30 mi).[68] The polar vortex is circled by the polar night jet. The warmer air can only move along the edge of the polar vortex, but not enter it. Within the vortex, the cold polar air becomes increasingly cold with neither warmer air from lower latitudes nor energy from the Sun entering during the polar night.[69]

Low-level jets[edit]

There are wind maxima at lower levels of the atmosphere that are also referred to as jets.

Barrier jet[edit]

A barrier jet in the low levels forms just upstream of mountain chains, with the mountains forcing the jet to be oriented parallel to the mountains. The mountain barrier increases the strength of the low level wind by 45 percent.[70] In the North American Great Plains a southerly low-level jet helps fuel overnight thunderstorm activity during the warm season, normally in the form of mesoscale convective systems which form during the overnight hours.[71] A similar phenomenon develops across Australia, which pulls moisture poleward from the Coral Sea towards cut-off lows which form mainly across southwestern portions of the continent.[72]

Coastal jet[edit]

Coastal low-level jets are related to a sharp contrast between high temperatures over land and lower temperatures over the sea and play an important role in coastal weather, giving rise to strong coast-parallel winds.[73][74][75] Most coastal jets are associated with the oceanic high-pressure systems and thermal low over land.[76][77] These jets are mainly located along cold eastern boundary marine currents, in upwelling regions offshore California, Peru-Chile, Benguela, Portugal, Canary and West Australia, and offshore Yemen–Oman.[78][79][80]

Valley exit jet[edit]

A valley exit jet is a strong, down-valley, elevated air current that emerges above the intersection of the valley and its adjacent plain. These winds frequently reach a maximum of 20 m/s (72 km/h; 45 mph) at a height of 40–200 m (130–660 ft) above the ground. Surface winds below the jet may sway vegetation, but are significantly weaker.

They are likely to be found in valley regions that exhibit diurnal mountain wind systems, such as those of the dry mountain ranges of the US. Deep valleys that terminate abruptly at a plain are more impacted by these factors than are those that gradually become shallower as downvalley distance increases.[81]

Africa[edit]

The mid-level African easterly jet occurs during the Northern Hemisphere summer between 10°N and 20°N above West Africa, and the nocturnal poleward low-level jet occurs in the Great Plains of east and South Africa.[82] The low-level easterly African jet stream is considered to play a crucial role in the southwest monsoon of Africa,[83] and helps form the tropical waves which move across the tropical Atlantic and eastern Pacific oceans during the warm season.[84] The formation of the thermal low over northern Africa leads to a low-level westerly jet stream from June into October.[85]

See also[edit]

- Atmospheric river

- Block (meteorology)

- Polar vortex

- Surface weather analysis

- Sting jet

- Tornado

- Wind shear

- Weather

https://en.wikipedia.org/wiki/Jet_stream

Evapotranspiration (ET) is the sum of water evaporation and transpiration from a surface area to the atmosphere. Evaporation accounts for the movement of water to the air from sources such as the soil, canopy interception, and water bodies. Transpiration accounts for the movement of water within a plant and the subsequent exit of water as vapor through stomata in its leaves in vascular plants and phyllids in non-vascular plants. A plant that contributes to evapotranspiration is called an evapotranspirator.[1] Evapotranspiration is an important part of the water cycle.

Potential evapotranspiration (PET) is a representation of the environmental demand for evapotranspiration and represents the evapotranspiration rate of a short green crop (grass), completely shading the ground, of uniform height and with adequate water status in the soil profile. It is a reflection of the energy available to evaporate water, and of the wind available to transport the water vapor from the ground up into the lower atmosphere. Often a value for the potential evapotranspiration is calculated at a nearby climatic station on a reference surface, conventionally short grass. This value is called the reference evapotranspiration (ET0). Actual evapotranspiration is said to equal potential evapotranspiration when there is ample water. Some US states utilize a full cover alfalfa reference crop that is 0.5 m in height, rather than the short green grass reference, due to the higher value of ET from the alfalfa reference.[2]

https://en.wikipedia.org/wiki/Evapotranspiration

A circumpolar vortex, or simply polar vortex, is a large region of cold, rotating air that encircles both of Earth's polar regions. Polar vortices also exist on other rotating, low-obliquity planetary bodies.[1] The term polar vortex can be used to describe two distinct phenomena; the stratospheric polar vortex, and the tropospheric polar vortex. The stratospheric and tropospheric polar vortices both rotate in the direction of the Earth's spin, but they are distinct phenomena that have different sizes, structures, seasonal cycles, and impacts on weather.

The stratospheric polar vortex is an area of high-speed, cyclonically rotating winds around 15 km to 50 km high, poleward of 50°, and is strongest in winter. It forms in Autumn when Arctic or Antarctic temperatures cool rapidly as the polar night begins. The increased temperature difference between the pole and the tropics causes strong winds and the Coriolis effect causes the vortex to spin up. The stratospheric polar vortex breaks down in Spring as the polar night ends. A sudden stratospheric warming (SSW) is an event that occurs when the stratospheric vortex breaks down during winter, and can have significant impacts on surface weather.[citation needed]

The tropospheric polar vortex is often defined as the area poleward of the tropospheric jet stream. The equatorward edge is around 40° to 50°, and it extends from the surface up to around 10 km to 15 km. Its yearly cycle differs from the stratospheric vortex because the tropospheric vortex exists all year, but is similar to the stratospheric vortex since it is also strongest in winter when the polar regions are coldest.

The tropospheric polar vortex was first described as early as 1853.[2] The stratospheric vortex's SSWs were discovered in 1952 with radiosonde observations at altitudes higher than 20 km.[3] The tropospheric polar vortex was mentioned frequently in the news and weather media in the cold North American winter of 2013–2014, popularizing the term as an explanation of very cold temperatures.[4] The tropospheric vortex increased in public visibility in 2021 as a result of extreme frigid temperatures in the central United States, with some sources linking its effects to climate change.[5]

Ozone depletion occurs within the polar vortices – particularly over the Southern Hemisphere – reaching a maximum depletion in the spring.

Identification[edit]

The bases of the two polar vortices are located in the middle and upper troposphere and extend into the stratosphere. Beneath that lies a large mass of cold, dense Arctic air. The interface between the cold dry air mass of the pole and the warm moist air mass farther south defines the location of the polar front. The polar front is centered roughly at 60° latitude. A polar vortex strengthens in the winter and weakens in the summer because of its dependence on the temperature difference between the equator and the poles.[14]

Polar cyclones are low-pressure zones embedded within the polar air masses, and exist year-round. The stratospheric polar vortex develops at latitudes above the subtropical jet stream.[15] Horizontally, most polar vortices have a radius of less than 1,000 kilometres (620 mi).[16] Since polar vortices exist from the stratosphere downward into the mid-troposphere,[6] a variety of heights/pressure levels are used to mark its position. The 50 hPa pressure surface is most often used to identify its stratospheric location.[17] At the level of the tropopause, the extent of closed contours of potential temperature can be used to determine its strength. Others have used levels down to the 500 hPa pressure level (about 5,460 metres (17,910 ft) above sea level during the winter) to identify the polar vortex.[18]

https://en.wikipedia.org/wiki/Polar_vortex

The Arctic oscillation (AO) or Northern Annular Mode/Northern Hemisphere Annular Mode (NAM) is a weather phenomenon at the Arctic pole north of 20 degrees latitude. It is an important mode of climate variability for the Northern Hemisphere. The southern hemisphere analogue is called the Antarctic oscillation or Southern Annular Mode (SAM). The index varies over time with no particular periodicity, and is characterized by non-seasonal sea-level pressure anomalies of one sign in the Arctic, balanced by anomalies of opposite sign centered at about 37–45° N.[1]

The North Atlantic oscillation (NAO) is a close relative of the Arctic oscillation. There is debate over whether one or the other is more fundamentally representative of the atmosphere's dynamics. The NAO may be identified in a more physically meaningful way, which may carry more impact on measurable effects of changes in the atmosphere.[2]

The Arctic oscillation index is defined using the daily or monthly 1000 hPa geopotential height anomalies from latitudes 20° N to 90° N. The anomalies are projected onto the Arctic oscillation loading pattern,[5] which is defined as the first empirical orthogonal function (EOF) of monthly mean 1000 hPa geopotential height during the 1979-2000 period. The time series is then normalized with the monthly mean index's standard deviation.
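The three steps in this definition (find the leading EOF of the anomalies, project the anomalies onto it, normalize by the standard deviation) can be sketched in plain Python. This is a toy illustration under loud assumptions: the data are synthetic, the same sample is used both to derive the loading pattern and to compute the index (the real index uses a fixed 1979–2000 base period), any grid weighting is omitted, and power iteration stands in for whatever eigen-solver an operational centre uses. The function names are hypothetical.

```python
import math
import random
import statistics


def leading_eof(anoms, iters=100, seed=0):
    """Leading EOF (unit vector) of a time-by-space anomaly matrix via power iteration."""
    rng = random.Random(seed)
    n_space = len(anoms[0])
    vec = [rng.uniform(-1.0, 1.0) for _ in range(n_space)]
    for _ in range(iters):
        # Apply X^T X to vec without forming the covariance matrix explicitly.
        proj = [sum(a * v for a, v in zip(row, vec)) for row in anoms]
        new = [sum(row[j] * p for row, p in zip(anoms, proj)) for j in range(n_space)]
        norm = math.sqrt(sum(x * x for x in new))
        vec = [x / norm for x in new]
    return vec


def normalized_index(anoms, pattern):
    """Project each time step onto the pattern, then divide by the standard deviation."""
    raw = [sum(a * p for a, p in zip(row, pattern)) for row in anoms]
    spread = statistics.pstdev(raw)
    return [x / spread for x in raw]


# Synthetic anomalies: one "Arctic" point opposing two mid-latitude points.
PATTERN = [-1.0, 0.5, 0.5]
AMPLITUDE = [1.0, -2.0, 0.5, 1.5, -1.0]
anoms = [[a * p for p in PATTERN] for a in AMPLITUDE]

eof = leading_eof(anoms)
index = normalized_index(anoms, eof)
print([round(x, 3) for x in index])
```

The sign of an EOF is arbitrary, so the resulting index may come out with the opposite sign to the synthetic amplitude series; conventions for fixing the sign are not covered here.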

https://en.wikipedia.org/wiki/Arctic_oscillation

Geopotential height or geopotential altitude is a vertical coordinate referenced to Earth's mean sea level, an adjustment to geometric height (altitude above mean sea level) that accounts for the variation of gravity with latitude and altitude. Thus, it can be considered a "gravity-adjusted height".

Definition[edit]

At an elevation of h, the geopotential is defined as:

$$\Phi(h) = \int_0^h g(\phi, z)\,dz$$

where $g(\phi, z)$ is the acceleration due to gravity, $\phi$ is latitude, and $z$ is the geometric elevation. Thus geopotential is the gravitational potential energy per unit mass at that elevation h.[1]

The geopotential height is:

$$Z_g(h) = \frac{\Phi(h)}{g_0}$$

which normalizes the geopotential to $g_0$ = 9.80665 m/s², the standard gravity at mean sea level.[citation needed][1]
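As a numerical illustration of this definition (geopotential as the integral of gravity from sea level to h, divided by standard gravity), the sketch below integrates an assumed gravity profile. The inverse-square fall-off on a spherical Earth of mean radius 6,371 km is an assumption made here for illustration, not something stated in the source, and the latitude dependence is left to the caller through the `g_surface` argument.

```python
G0 = 9.80665                  # standard gravity at mean sea level, m/s^2
EARTH_RADIUS_M = 6_371_000.0  # assumed mean radius of a spherical Earth


def gravity(z_m, g_surface=G0):
    """Assumed inverse-square decrease of gravity with geometric height."""
    return g_surface * (EARTH_RADIUS_M / (EARTH_RADIUS_M + z_m)) ** 2


def geopotential(h_m, g_surface=G0, steps=10_000):
    """Midpoint-rule integral of gravity from sea level to h, in m^2/s^2."""
    dz = h_m / steps
    return sum(gravity((i + 0.5) * dz, g_surface) for i in range(steps)) * dz


def geopotential_height(h_m, g_surface=G0, steps=10_000):
    """Geopotential divided by standard gravity, in metres."""
    return geopotential(h_m, g_surface, steps) / G0


height_m = geopotential_height(10_000.0)
print(f"geometric 10000 m -> geopotential {height_m:.1f} m")
```

Under these assumptions the geopotential height at 10 km comes out slightly below the geometric height, because gravity weakens with altitude.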

Usage[edit]

Geophysical sciences such as meteorology often prefer to express the horizontal pressure gradient force as the gradient of geopotential along a constant-pressure surface, because then it has the properties of a conservative force. For example, the primitive equations which weather forecast models solve use hydrostatic pressure as a vertical coordinate, and express the slopes of those pressure surfaces in terms of geopotential height.

A plot of geopotential height for a single pressure level in the atmosphere shows the troughs and ridges (highs and lows) which are typically seen on upper air charts. The geopotential thickness between pressure levels – difference of the 850 hPa and 1000 hPa geopotential heights for example – is proportional to mean virtual temperature in that layer. Geopotential height contours can be used to calculate the geostrophic wind, which is faster where the contours are more closely spaced and tangential to the geopotential height contours.[citation needed]
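The proportionality between thickness and mean virtual temperature is usually written as the hypsometric equation, which the source does not state explicitly:

$$\Delta Z = \frac{R_d \, \bar{T}_v}{g_0} \ln\frac{p_1}{p_2}$$

A minimal sketch, assuming the dry-air gas constant $R_d \approx 287.05$ J/(kg·K); the function name and the sample temperatures are illustrative only.

```python
import math

R_DRY_AIR = 287.05  # specific gas constant of dry air, J/(kg K); assumed value
G0 = 9.80665        # standard gravity, m/s^2


def thickness_m(p_bottom_hpa, p_top_hpa, mean_virtual_temp_k):
    """Geopotential thickness of the layer between two pressure levels, in metres."""
    scale = R_DRY_AIR * mean_virtual_temp_k / G0
    return scale * math.log(p_bottom_hpa / p_top_hpa)


cold = thickness_m(1000.0, 850.0, 263.15)
warm = thickness_m(1000.0, 850.0, 283.15)
print(f"cold layer: {cold:.0f} m, warm layer: {warm:.0f} m")
```

A warmer layer is thicker, which is why thickness charts are often read as a proxy for layer-mean temperature.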

The National Weather Service defines geopotential height as:


https://en.wikipedia.org/wiki/Geopotential_height

The primitive equations are a set of nonlinear partial differential equations that are used to approximate global atmospheric flow and are used in most atmospheric models. They consist of three main sets of balance equations:

- A continuity equation: Representing the conservation of mass.

- Conservation of momentum: Consisting of a form of the Navier–Stokes equations that describe hydrodynamical flow on the surface of a sphere under the assumption that vertical motion is much smaller than horizontal motion (hydrostasis) and that the fluid layer depth is small compared to the radius of the sphere.

- A thermal energy equation: Relating the overall temperature of the system to heat sources and sinks.

The primitive equations may be linearized to yield Laplace's tidal equations, an eigenvalue problem from which the analytical solution to the latitudinal structure of the flow may be determined.

In general, nearly all forms of the primitive equations relate the five variables u, v, ω, T, W, and their evolution over space and time.

The equations were first written down by Vilhelm Bjerknes.[1]

https://en.wikipedia.org/wiki/Primitive_equations

Hydrostatic pressure

In a fluid at rest, all frictional and inertial stresses vanish and the state of stress of the system is called hydrostatic. When this condition of V = 0 is applied to the Navier–Stokes equations, the gradient of pressure becomes a function of body forces only. For a barotropic fluid in a conservative force field like a gravitational force field, the pressure exerted by a fluid at equilibrium becomes a function of force exerted by gravity.

The hydrostatic pressure can be determined from a control volume analysis of an infinitesimally small cube of fluid. Since pressure is defined as the force exerted on a test area (p = F/A, with p: pressure, F: force normal to area A, A: area), and the only force acting on any such small cube of fluid is the weight of the fluid column above it, hydrostatic pressure can be calculated according to the following formula:

$$p(z) - p(z_0) = \frac{1}{A}\int_{z_0}^{z} dz' \iint_A dx'\,dy'\,\rho(z')\,g(z') = \int_{z_0}^{z} dz'\,\rho(z')\,g(z')$$

where:

- p is the hydrostatic pressure (Pa),

- ρ is the fluid density (kg/m³),

- g is gravitational acceleration (m/s²),

- A is the test area (m²),

- z is the height (parallel to the direction of gravity) of the test area (m),

- $z_0$ is the height of the zero reference point of the pressure (m).

For water and other liquids, this integral can be simplified significantly for many practical applications, based on the following two assumptions. Since many liquids can be considered incompressible, a reasonably good estimate can be made by assuming a constant density throughout the liquid. (The same assumption cannot be made within a gaseous environment.) Also, since the height h of the fluid column between z and $z_0$ is often reasonably small compared to the radius of the Earth, one can neglect the variation of g. Under these circumstances, the integral is simplified into the formula:

$$p - p_0 = \rho g h$$

where h is the height $z - z_0$ of the liquid column between the test volume and the zero reference point of the pressure. This formula is often called Stevin's law.[4][5] Note that this reference point should lie at or below the surface of the liquid. Otherwise, one has to split the integral into two (or more) terms with the constant $\rho_\text{liquid}$ and $\rho(z')_\text{above}$. For example, the absolute pressure compared to vacuum is:

$$p = \rho g H + p_\text{atm}$$

where H is the total height of the liquid column above the test area to the surface, and $p_\text{atm}$ is the atmospheric pressure, i.e., the pressure calculated from the remaining integral over the air column from the liquid surface to infinity. This can easily be visualized using a pressure prism.
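A short worked example of Stevin's law and of the absolute pressure may help. The sketch assumes a constant density of 1000 kg/m³ for water, standard gravity, and a standard atmosphere of 101,325 Pa; the function names are illustrative.

```python
G = 9.80665        # gravitational acceleration, m/s^2 (assumed constant)
P_ATM = 101_325.0  # atmospheric pressure at the liquid surface, Pa (assumed)


def gauge_pressure(density, depth, g=G):
    """Stevin's law: pressure relative to the liquid surface, rho * g * h (Pa)."""
    return density * g * depth


def absolute_pressure(density, depth, p_atm=P_ATM, g=G):
    """Pressure relative to vacuum: rho * g * H + p_atm (Pa)."""
    return gauge_pressure(density, depth, g) + p_atm


p_gauge = gauge_pressure(1000.0, 10.0)   # 10 m of water
p_abs = absolute_pressure(1000.0, 10.0)  # same point, measured against vacuum
```

Ten metres of water adds roughly one further atmosphere, so the absolute pressure at that depth is close to twice the surface value.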

Hydrostatic pressure has been used in the preservation of foods in a process called pascalization.[6]

https://en.wikipedia.org/wiki/Hydrostatics#Hydrostatic_pressure

Pascalization, bridgmanization, high pressure processing (HPP)[1] or high hydrostatic pressure (HHP) processing[2] is a method of preserving and sterilizing food, in which a product is processed under very high pressure, leading to the inactivation of certain microorganisms and enzymes in the food.[3] HPP has a limited effect on covalent bonds within the food product, thus maintaining both the sensory and nutritional aspects of the product.[4] The technique was named after Blaise Pascal, a French scientist of the 17th century whose work included detailing the effects of pressure on fluids. During pascalization, more than 50,000 pounds per square inch (340 MPa, 3.4 kbar) may be applied for around fifteen minutes, leading to the inactivation of yeast, mold, and bacteria.[5][6] Pascalization is also known as bridgmanization,[7] named for physicist Percy Williams Bridgman.[8]

https://en.wikipedia.org/wiki/Pascalization

Vertical pressure variation is the variation in pressure as a function of elevation. Depending on the fluid in question and the context, pressure may also vary significantly in directions perpendicular to elevation, and these variations are relevant to the pressure gradient force and its effects. However, the vertical variation is especially significant, as it results from the pull of gravity on the fluid; namely, for the same given fluid, a decrease in elevation within it corresponds to a taller column of fluid weighing down on that point.

Basic formula

A relatively simple version[1] of the vertical fluid pressure variation is that the pressure difference between two elevations is the product of elevation change, gravity, and density. The equation is as follows:

$$\frac{\Delta P}{\Delta h} = -\rho g$$

and

$$\Delta P = -\rho g\,\Delta h$$

where

- P is pressure,

- ρ is density,

- g is the magnitude of the acceleration of gravity, and

- h is height.

The delta symbol indicates a change in a given variable. Since $-\rho g$ is negative (with g taken as a positive magnitude), an increase in height will correspond to a decrease in pressure, which fits with the previously mentioned reasoning about the weight of a column of fluid.

When density and gravity are approximately constant (that is, for relatively small changes in height), simply multiplying height difference, gravity, and density will yield a good approximation of pressure difference. Where different fluids are layered on top of one another, the total pressure difference would be obtained by adding the two pressure differences; the first being from point 1 to the boundary, the second being from the boundary to point 2; which would just involve substituting the ρ and Δh values for each fluid and taking the sum of the results. If the density of the fluid varies with height, mathematical integration would be required.
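The layered-fluid case described above amounts to a sum of ρ g Δh terms, one per layer. A minimal sketch, assuming constant density within each layer and constant gravity; the oil and water densities are illustrative values, and the result is reported as the pressure increase from the top of the stack to the bottom:

```python
G = 9.80665  # gravitational acceleration, m/s^2 (assumed constant)


def layered_pressure_difference(layers, g=G):
    """Pressure increase (Pa) from top to bottom of a stack of fluid layers.

    `layers` is an iterable of (density in kg/m^3, thickness in m) pairs.
    """
    return sum(density * g * thickness for density, thickness in layers)


# 0.5 m of oil (assumed 800 kg/m^3) floating on 1.0 m of water (assumed 1000 kg/m^3)
layers = [(800.0, 0.5), (1000.0, 1.0)]
dp = layered_pressure_difference(layers)
```

If density varied continuously with height, the sum would be replaced by an integral, which could be approximated by splitting the column into many thin layers and reusing the same function.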

Whether or not density and gravity can be reasonably approximated as constant depends on the level of accuracy needed, but also on the length scale of height difference, as gravity and density also decrease with higher elevation. For density in particular, the fluid in question is also relevant; seawater, for example, is considered an incompressible fluid; its density can vary with height, but much less significantly than that of air. Thus water's density can be more reasonably approximated as constant than that of air, and given the same height difference, the pressure differences in water are approximately equal at any height.

Hydrostatic paradox

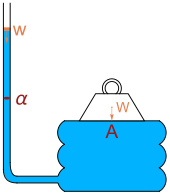

The barometric formula depends only on the height of the fluid chamber, and not on its width or length. Given a large enough height, any pressure may be attained. This feature of hydrostatics has been called the hydrostatic paradox. As expressed by W. H. Besant,[2]

- Any quantity of liquid, however small, may be made to support any weight, however large.

The Dutch scientist Simon Stevin was the first to explain the paradox mathematically.[3] In 1916 Richard Glazebrook mentioned the hydrostatic paradox as he described an arrangement he attributed to Pascal: a heavy weight W rests on a board with area A resting on a fluid bladder connected to a vertical tube with cross-sectional area α. Pouring water of weight w down the tube will eventually raise the heavy weight. Balance of forces leads to the equation

$$W = \frac{wA}{\alpha}$$

Glazebrook says, "By making the area of the board considerable and that of the tube small, a large weight W can be supported by a small weight w of water. This fact is sometimes described as the hydrostatic paradox."[4]
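A quick calculation shows how large the effect can be. The sketch below uses the balance W = wA/α, which follows from equating the pressure w/α under the water column with the pressure W/A under the board; the numbers are made up for illustration.

```python
def supported_weight(water_weight, board_area, tube_area):
    """Weight W (N) balanced by water of weight w (N) in a tube of area alpha (m^2)
    acting on a board of area A (m^2): W = w * A / alpha."""
    return water_weight * board_area / tube_area


# 1 N of water in a 1 cm^2 tube, acting on a 1 m^2 board
W = supported_weight(water_weight=1.0, board_area=1.0, tube_area=1e-4)
```

With these values the ratio of areas is 10,000, so 1 N of water balances about 10 kN on the board.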

Demonstrations of the hydrostatic paradox are used in teaching the phenomenon.[5][6]

In the context of Earth's atmosphere

If one is to analyze the vertical pressure variation of the atmosphere of Earth, the length scale is very significant (troposphere alone being several kilometres tall; thermosphere being several hundred kilometres) and the involved fluid (air) is compressible. Gravity can still be reasonably approximated as constant, because length scales on the order of kilometres are still small in comparison to Earth's radius, which is on average about 6371 km,[7] and gravity is a function of distance from Earth's core.[8]

Density, on the other hand, varies more significantly with height. It follows from the ideal gas law that

$$\rho = \frac{mP}{kT}$$

where

- m is average mass per air molecule,

- P is pressure at a given point,

- k is the Boltzmann constant,

- T is the temperature in kelvins.

Put more simply, air density depends on air pressure. Given that air pressure also depends on air density, it would be easy to get the impression that this is a circular definition, but it is simply an interdependency of different variables. This then yields a more accurate formula, of the form

$$P_h = P_0 e^{-\frac{mgh}{kT}}$$

where

- $P_h$ is the pressure at height h,

- $P_0$ is the pressure at reference point 0 (typically referring to sea level),

- m is the mass per air molecule,

- g is the acceleration due to gravity,

- h is height from reference point 0,

- k is the Boltzmann constant,

- T is the temperature in kelvins.

Therefore, instead of pressure being a linear function of height as one might expect from the simpler formula given in the "basic formula" section, it is more accurately represented as an exponential function of height.

Note that in this simplification, the temperature is treated as constant, even though temperature also varies with height. However, the temperature variation within the lower layers of the atmosphere (troposphere, stratosphere) is only in the dozens of degrees, whereas their thermodynamic temperature is in the hundreds of kelvins, so the temperature variation is reasonably small and is thus ignored. For smaller height differences, including those from top to bottom of even the tallest buildings (like the CN Tower) or for mountains of comparable size, the temperature variation will easily be within single digits. (See also lapse rate.)
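To compare the exponential form with the constant-density (linear) form numerically, a small sketch follows. It assumes a mean molar mass of dry air of 0.0289647 kg/mol to obtain the mass per molecule, a constant temperature of 288.15 K, and sea-level pressure of 101,325 Pa; none of these values is prescribed by the formula itself.

```python
import math

K_B = 1.380649e-23                 # Boltzmann constant, J/K
M_AIR = 0.0289647 / 6.02214076e23  # mean mass per air molecule, kg (assumed dry air)
G = 9.80665                        # acceleration due to gravity, m/s^2
P0 = 101_325.0                     # reference pressure at sea level, Pa


def pressure_isothermal(h, temperature=288.15):
    """Exponential formula: P_h = P0 * exp(-m g h / (k T))."""
    return P0 * math.exp(-M_AIR * G * h / (K_B * temperature))


def pressure_linear(h, temperature=288.15):
    """Constant-density formula: P0 - rho0 * g * h, with rho0 = m * P0 / (k T)."""
    rho0 = M_AIR * P0 / (K_B * temperature)
    return P0 - rho0 * G * h


def scale_height(temperature=288.15):
    """Height k T / (m g) over which the exponential formula drops by a factor of e."""
    return K_B * temperature / (M_AIR * G)
```

Under these assumptions the two forms agree closely over a few hundred metres, but at one scale height (roughly 8.4 km) the linear form has already fallen to zero while the exponential form still gives P0/e.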

An alternative derivation, shown by the Portland State Aerospace Society,[9] is used to give height as a function of pressure instead. This may seem counter-intuitive, as pressure results from height rather than vice versa, but such a formula can be useful in finding height based on pressure difference when one knows the latter and not the former. Different formulas are presented for different kinds of approximations. For comparison with the previous formula, the first one referenced here applies the same constant-temperature approximation, in which case:

$$z = -\frac{RT}{g}\ln\frac{P}{P_0}$$

where (with values used in the article)

- z is the elevation in meters,

- R is the specific gas constant = 287.053 J/(kg K)

- T is the absolute temperature in kelvins = 288.15 K at sea level,

- g is the acceleration due to gravity = 9.80665 m/s² at sea level,

- P is the pressure at a given point at elevation z in pascals, and

- $P_0$ is pressure at the reference point = 101,325 Pa at sea level.

A more general formula derived in the same article accounts for a linear change in temperature as a function of height (lapse rate), and reduces to the formula above in the limit of constant temperature (L → 0):

$$z = \frac{T_0}{L}\left(\left(\frac{P}{P_0}\right)^{-\frac{LR}{g}} - 1\right)$$

where

- L is the atmospheric lapse rate (change in temperature divided by distance) = −6.5×10⁻³ K/m, and

- $T_0$ is the temperature at the same reference point for which $P = P_0$

and the other quantities are the same as those above. This is the recommended formula to use.
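Both height-from-pressure relations can be evaluated directly with the constants listed above. The sketch below is one possible reading of them: the isothermal form is z = −(R T / g) ln(P / P0), and the lapse-rate form is z = (T0 / L)((P / P0)^(−L R / g) − 1). The function names are illustrative.

```python
import math

R = 287.053    # specific gas constant, J/(kg K)
G = 9.80665    # acceleration due to gravity, m/s^2
P0 = 101325.0  # reference pressure, Pa
T0 = 288.15    # reference temperature, K
L = -6.5e-3    # lapse rate, K/m


def height_isothermal(p, temperature=T0):
    """Constant-temperature form: z = -(R T / g) * ln(P / P0)."""
    return -(R * temperature / G) * math.log(p / P0)


def height_lapse(p, lapse=L, t0=T0):
    """Linear-temperature form: z = (T0 / L) * ((P / P0) ** (-L R / g) - 1)."""
    return (t0 / lapse) * ((p / P0) ** (-lapse * R / G) - 1.0)


z_iso = height_isothermal(90_000.0)
z_lapse = height_lapse(90_000.0)
```

For a very small lapse rate the second function approaches the first, which is a convenient numerical check of the claim that the general formula reduces to the constant-temperature one.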


https://en.wikipedia.org/wiki/Vertical_pressure_variation

The pressure-gradient force is the force that results when there is a difference in pressure across a surface. In general, a pressure is a force per unit area, across a surface. A difference in pressure across a surface then implies a difference in force, which can result in an acceleration according to Newton's second law of motion, if there is no additional force to balance it. The resulting force is always directed from the region of higher pressure to the region of lower pressure. When a fluid is in an equilibrium state (i.e. there are no net forces, and no acceleration), the system is referred to as being in hydrostatic equilibrium. In the case of atmospheres, the pressure-gradient force is balanced by the gravitational force, maintaining hydrostatic equilibrium. In Earth's atmosphere, for example, air pressure decreases with increasing altitude above Earth's surface, thus providing a pressure-gradient force which counteracts the force of gravity on the atmosphere.

Formalism

Consider a cubic parcel of fluid with a density $\rho$, a height $dz$, and a surface area $dA$. The mass of the parcel can be expressed as $m = \rho\,dA\,dz$. Using Newton's second law, $F = ma$, we can then examine a pressure difference $dP$ (assumed to be only in the $z$-direction) to find the resulting force, $F = -dP\,dA = \rho a\,dA\,dz$.

The acceleration resulting from the pressure gradient is then

$$a = -\frac{1}{\rho}\frac{dP}{dz}.$$

The effects of the pressure gradient are usually expressed in this way, in terms of an acceleration, instead of in terms of a force. We can express the acceleration more precisely, for a general pressure $P$, as

$$\vec{a} = -\frac{1}{\rho}\vec{\nabla}P.$$

The direction of the resulting force (acceleration) is thus in the opposite direction of the most rapid increase of pressure.
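The one-dimensional form of this acceleration, a = −(1/ρ) dP/dz, can be checked with a finite difference. The sketch assumes a sea-level air density of 1.225 kg/m³ and builds a pressure profile that is hydrostatic by construction, so the pressure-gradient acceleration should come out equal in magnitude to gravity and directed upward.

```python
def pressure_gradient_acceleration(p_lower, p_upper, dz, density):
    """Finite-difference estimate of a = -(1/rho) * dP/dz (m/s^2, positive upward)."""
    return -(p_upper - p_lower) / (density * dz)


RHO = 1.225  # air density near sea level, kg/m^3 (assumed)
G = 9.80665  # acceleration due to gravity, m/s^2

p_surface = 101_325.0
p_100m = p_surface - RHO * G * 100.0  # hydrostatic pressure 100 m higher

a = pressure_gradient_acceleration(p_surface, p_100m, 100.0, RHO)
```

Pressure decreases upward, so the resulting acceleration is positive (upward), opposite to the direction in which pressure increases most rapidly.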

References[edit]