In algebraic topology, a branch of mathematics, Moore space is the name given to a particular type of topological space that is the homology analogue of the Eilenberg–MacLane spaces of homotopy theory, in the sense that it has only one nonzero homology (rather than homotopy) group.

https://en.wikipedia.org/wiki/Moore_space_(algebraic_topology)

https://en.wikipedia.org/wiki/Homology_sphere#Cosmology

In algebraic topology, the Betti numbers are used to distinguish topological spaces based on the connectivity of n-dimensional simplicial complexes. For the most reasonable finite-dimensional spaces (such as compact manifolds, finite simplicial complexes or CW complexes), the sequence of Betti numbers is 0 from some point onward (Betti numbers vanish above the dimension of a space), and they are all finite.

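The nth Betti number represents the rank of the nth homology group, denoted $H_n$, which tells us the maximum number of cuts that can be made before separating a surface into two pieces or 0-cycles, 1-cycles, etc.[1] For example, if $H_n(X) \cong 0$ then $b_n(X) = 0$, if $H_n(X) \cong \mathbb{Z}$ then $b_n(X) = 1$, if $H_n(X) \cong \mathbb{Z} \oplus \mathbb{Z}$ then $b_n(X) = 2$, if $H_n(X) \cong \mathbb{Z} \oplus \mathbb{Z} \oplus \mathbb{Z}$ then $b_n(X) = 3$, etc. Note that only the ranks of infinite groups are considered, so for example if $H_n(X) \cong \mathbb{Z}^k \oplus \mathbb{Z}/(2)$, where $\mathbb{Z}/(2)$ is the finite cyclic group of order 2, then $b_n(X) = k$. These finite components of the homology groups are their torsion subgroups, and they are denoted by torsion coefficients.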
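As a rough illustration (a sketch, not taken from the sources above), the Betti numbers of a small simplicial complex can be computed from the ranks of its boundary matrices, using $b_k = \dim C_k - \operatorname{rank}\partial_k - \operatorname{rank}\partial_{k+1}$. The helper names and the list-of-tuples encoding of the complex are assumptions made for this example. Ranks are taken over the rationals with `numpy.linalg.matrix_rank`, so torsion such as a $\mathbb{Z}/(2)$ summand is ignored, matching the convention that only the rank counts.

```python
import numpy as np

def boundary_matrix(k_simplices, faces):
    """Matrix of the boundary map from k-chains to (k-1)-chains (hypothetical helper).

    Simplices are sorted vertex tuples; deleting vertex i contributes sign (-1)**i.
    """
    row = {face: r for r, face in enumerate(faces)}
    D = np.zeros((len(faces), len(k_simplices)), dtype=int)
    for col, simplex in enumerate(k_simplices):
        for i in range(len(simplex)):
            face = simplex[:i] + simplex[i + 1:]
            D[row[face], col] = (-1) ** i
    return D

def boundary_rank(complex_, k):
    # The boundary map out of 0-chains, and out of the top dimension + 1, is zero.
    if k <= 0 or k >= len(complex_):
        return 0
    D = boundary_matrix(complex_[k], complex_[k - 1])
    return int(np.linalg.matrix_rank(D)) if D.size else 0

def betti_numbers(complex_):
    """complex_[k] lists the k-simplices; returns ranks over the rationals."""
    return [
        len(complex_[k]) - boundary_rank(complex_, k) - boundary_rank(complex_, k + 1)
        for k in range(len(complex_))
    ]

# Hollow triangle (a circle): one component and one 1-dimensional hole.
circle = [[(0,), (1,), (2,)], [(0, 1), (0, 2), (1, 2)]]
# Filled triangle (a disk): the 2-simplex fills the hole.
disk = circle + [[(0, 1, 2)]]
print(betti_numbers(circle))  # [1, 1]
print(betti_numbers(disk))    # [1, 0, 0]
```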

The term "Betti numbers" was coined by Henri Poincaré after Enrico Betti. The modern formulation is due to Emmy Noether. Betti numbers are used today in fields such as simplicial homology, computer science, digital images, etc.

https://en.wikipedia.org/wiki/Betti_number

In homotopy theory, a branch of algebraic topology, a Postnikov system (or Postnikov tower) is a way of decomposing a topological space's homotopy groups using an inverse system of topological spaces whose homotopy type at degree $k$ agrees with the truncated homotopy type of the original space $X$. Postnikov systems were introduced by, and are named after, Mikhail Postnikov.

https://en.wikipedia.org/wiki/Postnikov_system

Andrei Andreevich Bolibrukh (Russian: Андрей Андреевич Болибрух) (30 January 1950 – 11 November 2003) was a Soviet and Russian mathematician. He was known for his work on ordinary differential equations, especially Hilbert's twenty-first problem (the Riemann–Hilbert problem).[1] Bolibrukh was the author of about a hundred research articles on the theory of ordinary differential equations, including the Riemann–Hilbert problem and Fuchsian systems.[2]

https://en.wikipedia.org/wiki/Andrei_Bolibrukh

In mathematics, the equations governing the isomonodromic deformation of meromorphic linear systems of ordinary differential equations are, in a fairly precise sense, the most fundamental exact nonlinear differential equations. As a result, their solutions and properties lie at the heart of the field of exact nonlinearity and integrable systems.

Isomonodromic deformations were first studied by Richard Fuchs, with early pioneering contributions from Lazarus Fuchs, Paul Painlevé, René Garnier, and Ludwig Schlesinger. Inspired by results in statistical mechanics, a seminal contribution to the theory was made by Michio Jimbo, Tetsuji Miwa, and Kimio Ueno, who studied cases with arbitrary singularity structure.

https://en.wikipedia.org/wiki/Isomonodromic_deformation#Fuchsian_systems_and_Schlesinger's_equations

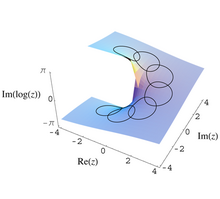

In mathematics, monodromy is the study of how objects from mathematical analysis, algebraic topology, algebraic geometry and differential geometry behave as they "run round" a singularity. As the name implies, the fundamental meaning of monodromy comes from "running round singly". It is closely associated with covering maps and their degeneration into ramification; the aspect giving rise to monodromy phenomena is that certain functions we may wish to define fail to be single-valued as we "run round" a path encircling a singularity. The failure of monodromy can be measured by defining a monodromy group: a group of transformations acting on the data that encodes what does happen as we "run round" in one dimension. Lack of monodromy is sometimes called polydromy.[1]
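A small numerical sketch of the "running round" idea, using $\sqrt{z}$ as an assumed example: following a continuous choice of square root once around the origin lands on the other branch, and a second loop brings it back. The function name and step count are illustrative choices, not from the source.

```python
import cmath
import math

def continue_sqrt_around_origin(turns=1, steps_per_turn=1000, radius=1.0):
    """Follow a continuous choice of sqrt(z) as z runs round z = 0 (hypothetical helper).

    At each small step we keep whichever of the two square roots lies closest
    to the previous value, which is a discrete analytic continuation.
    """
    w = complex(math.sqrt(radius))  # start on the positive branch at z = radius
    for n in range(1, turns * steps_per_turn + 1):
        z = radius * cmath.exp(2j * math.pi * n / steps_per_turn)
        root = cmath.sqrt(z)
        w = root if abs(root - w) <= abs(-root - w) else -root
    return w

print(continue_sqrt_around_origin(turns=1))  # approximately -1: the sign has flipped
print(continue_sqrt_around_origin(turns=2))  # approximately +1: back where we started
```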

https://en.wikipedia.org/wiki/Monodromy

In geometry and physics, spinors /spɪnər/ are elements of a complex vector space that can be associated with Euclidean space.[b] Like geometric vectors and more general tensors, spinors transform linearly when the Euclidean space is subjected to a slight (infinitesimal) rotation.[c] However, when a sequence of such small rotations is composed (integrated) to form an overall final rotation, the resulting spinor transformation depends on which sequence of small rotations was used. Unlike vectors and tensors, a spinor transforms to its negative when the space is continuously rotated through a complete turn from 0° to 360° (see picture). This property characterizes spinors: spinors can be viewed as the "square roots" of vectors (although this is inaccurate and may be misleading; they are better viewed as "square roots" of sections of vector bundles – in the case of the exterior algebra bundle of the cotangent bundle, they thus become "square roots" of differential forms).
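A minimal sketch of the sign flip, assuming the standard spin-1/2 representation $U(\theta) = \exp(-i\theta\sigma_z/2)$ for a rotation about the $z$ axis (with $\hbar = 1$): a full $2\pi$ turn returns a vector to itself but multiplies the spinor by $-1$, and only a $4\pi$ turn restores it. The helper names are hypothetical.

```python
import numpy as np

def spinor_rotation_z(theta):
    """Spin-1/2 rotation about z: exp(-i * theta * sigma_z / 2) (hypothetical helper)."""
    return np.diag([np.exp(-0.5j * theta), np.exp(0.5j * theta)])

def vector_rotation_z(theta):
    """Ordinary rotation of a 3D vector about the z axis (hypothetical helper)."""
    c, s = np.cos(theta), np.sin(theta)
    return np.array([[c, -s, 0.0], [s, c, 0.0], [0.0, 0.0, 1.0]])

full_turn = 2 * np.pi
print(np.allclose(vector_rotation_z(full_turn), np.eye(3)))      # True
print(np.allclose(spinor_rotation_z(full_turn), -np.eye(2)))     # True: spinor picks up -1
print(np.allclose(spinor_rotation_z(2 * full_turn), np.eye(2)))  # True: two turns restore it
```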

https://en.wikipedia.org/wiki/Spinor

The helicoid, after the plane and the catenoid, is the third minimal surface to be known.

https://en.wikipedia.org/wiki/Helicoid

https://en.wikipedia.org/wiki/Catenoid#Helicoid_transformation

In topology, a branch of mathematics, a fibration is a generalization of the notion of a fiber bundle. A fiber bundle makes precise the idea of one topological space (called a fiber) being "parameterized" by another topological space (called a base). A fibration is like a fiber bundle, except that the fibers need not be the same space, nor even homeomorphic; rather, they are just homotopy equivalent. Weak fibrations discard even this equivalence for a more technical property.

Fibrations do not necessarily have the local Cartesian product structure that defines the more restricted fiber bundle case, but something weaker that still allows "sideways" movement from fiber to fiber. Fiber bundles have a particularly simple homotopy theory that allows topological information about the bundle to be inferred from information about one or both of these constituent spaces. A fibration satisfies an additional condition (the homotopy lifting property) guaranteeing that it will behave like a fiber bundle from the point of view of homotopy theory.

Fibrations are dual to cofibrations, with a correspondingly dual notion of the homotopy extension property; this is loosely known as Eckmann–Hilton duality.

https://en.wikipedia.org/wiki/Fibration

In mathematics, homotopy theory is a systematic study of situations in which maps come with homotopies between them. It originated as a topic in algebraic topology but nowadays is studied as an independent discipline. Besides algebraic topology, the theory has also been used in other areas of mathematics such as algebraic geometry (e.g., A¹ homotopy theory) and category theory (specifically the study of higher categories).

https://en.wikipedia.org/wiki/Homotopy_theory

In mathematics, and more specifically in linear algebra, a linear map (also called a linear mapping, linear transformation, vector space homomorphism, or in some contexts linear function) is a mapping between two vector spaces that preserves the operations of vector addition and scalar multiplication. The same names and the same definition are also used for the more general case of modules over a ring; see Module homomorphism.

If a linear map is a bijection then it is called a linear isomorphism. In the case where $V = W$, a linear map is called a (linear) endomorphism. Sometimes the term linear operator refers to this case,[1] but the term "linear operator" can have different meanings for different conventions: for example, it can be used to emphasize that $V$ and $W$ are real vector spaces (not necessarily with $V = W$),[citation needed] or it can be used to emphasize that $V$ is a function space, which is a common convention in functional analysis.[2] Sometimes the term linear function has the same meaning as linear map, while in analysis it does not.

A linear map from V to W always maps the origin of V to the origin of W. Moreover, it maps linear subspaces in V onto linear subspaces in W (possibly of a lower dimension);[3] for example, it maps a plane through the origin in V to either a plane through the origin in W, a line through the origin in W, or just the origin in W. Linear maps can often be represented as matrices, and simple examples include rotation and reflection linear transformations.
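The defining properties can be spot-checked numerically. The sketch below (the helper `looks_linear` is hypothetical) tests $f(au + bv) = af(u) + bf(v)$ and $f(0) = 0$ on random inputs; a rotation and a reflection pass, while a translation $x \mapsto x + 1$ fails. Random sampling can only refute linearity, never prove it.

```python
import numpy as np

def looks_linear(f, dim, trials=100, seed=0):
    """Spot-check f(0) = 0, additivity and homogeneity on random vectors (hypothetical helper).

    Passing is evidence, not proof; a single failure shows f is not linear.
    """
    rng = np.random.default_rng(seed)
    if not np.allclose(f(np.zeros(dim)), 0.0):
        return False  # a linear map must send the origin to the origin
    for _ in range(trials):
        u, v = rng.normal(size=(2, dim))
        a, b = rng.normal(size=2)
        if not np.allclose(f(a * u + b * v), a * f(u) + b * f(v)):
            return False
    return True

theta = np.pi / 6
rotation = np.array([[np.cos(theta), -np.sin(theta)],
                     [np.sin(theta),  np.cos(theta)]])
reflection = np.array([[1.0, 0.0],
                       [0.0, -1.0]])  # reflection across the x-axis

print(looks_linear(lambda x: rotation @ x, 2))    # True
print(looks_linear(lambda x: reflection @ x, 2))  # True
print(looks_linear(lambda x: x + 1.0, 2))         # False: moves the origin
```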

In the language of category theory, linear maps are the morphisms of vector spaces.

https://en.wikipedia.org/wiki/Linear_map

A scalar is an element of a field which is used to define a vector space. A quantity described by multiple scalars, such as having both direction and magnitude, is called a vector.[1]

In linear algebra, real numbers or generally elements of a field are called scalars and relate to vectors in an associated vector space through the operation of scalar multiplication (defined in the vector space), in which a vector can be multiplied by a scalar in the defined way to produce another vector.[2][3][4] Generally speaking, a vector space may be defined by using any field instead of real numbers (such as complex numbers). Then scalars of that vector space will be elements of the associated field (such as complex numbers).

A scalar product operation – not to be confused with scalar multiplication – may be defined on a vector space, allowing two vectors to be multiplied in the defined way to produce a scalar. A vector space equipped with a scalar product is called an inner product space.
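To make the contrast concrete, here is a short sketch with arbitrary example vectors: scalar multiplication takes a scalar and a vector and returns a vector, while the standard dot product on $\mathbb{R}^n$ (one choice of scalar product) takes two vectors and returns a scalar.

```python
import numpy as np

u = np.array([1.0, 2.0, 3.0])
v = np.array([4.0, -1.0, 0.5])
k = 2.5

scaled = k * u      # scalar multiplication: scalar and vector -> vector
dot = np.dot(u, v)  # scalar (inner) product: vector and vector -> scalar

print(scaled.shape)  # (3,): still a vector
print(dot)           # 3.5

# Two inner-product properties on real vectors: symmetry and positivity.
print(np.isclose(np.dot(u, v), np.dot(v, u)))  # True
print(np.dot(u, u) > 0)                        # True for any nonzero u
```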

The real component of a quaternion is also called its scalar part.

The term scalar is also sometimes used informally to mean a vector, matrix, tensor, or other, usually, "compound" value that is actually reduced to a single component. Thus, for example, the product of a 1 × n matrix and an n × 1 matrix, which is formally a 1 × 1 matrix, is often said to be a scalar.

The term scalar matrix is used to denote a matrix of the form kI where k is a scalar and I is the identity matrix.
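A quick check of both remarks with small example matrices (values chosen arbitrarily): the product of a $1 \times 3$ and a $3 \times 1$ matrix has shape $(1, 1)$ and is usually read as the scalar it contains, and multiplying by the scalar matrix $kI$ has the same effect as multiplying by $k$.

```python
import numpy as np

row = np.array([[1, 2, 3]])           # a 1 x 3 matrix
col = np.array([[4], [5], [6]])       # a 3 x 1 matrix
product = row @ col                   # formally a 1 x 1 matrix
print(product.shape, product.item())  # (1, 1) 32

k = 3
A = np.array([[1, 2], [3, 4]])
scalar_matrix = k * np.eye(2, dtype=int)         # the scalar matrix kI
print(np.array_equal(scalar_matrix @ A, k * A))  # True
print(np.array_equal(A @ scalar_matrix, k * A))  # True
```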

https://en.wikipedia.org/wiki/Scalar_(mathematics)

In mathematics, an inner product space (or, rarely, a Hausdorff pre-Hilbert space[1][2]) is a vector space with a binary operation called an inner product.

https://en.wikipedia.org/wiki/Inner_product_space

In mathematics, a space is a set (sometimes called a universe) with some added structure.

https://en.wikipedia.org/wiki/Space_(mathematics)

In probability theory, a probability space or a probability triple is a mathematical construct that provides a formal model of a random process or "experiment". For example, one can define a probability space which models the throwing of a die.
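A probability space is a triple $(\Omega, \mathcal{F}, P)$: sample space, event space, and probability measure. The sketch below models the die example under the assumption that the die is fair, takes every subset of $\Omega$ as an event, and checks the measure's normalisation and additivity on disjoint events. The names are illustrative.

```python
from fractions import Fraction
from itertools import chain, combinations

omega = frozenset(range(1, 7))  # sample space: the faces of a die

# Event space: the power set of omega (all 64 subsets).
events = [frozenset(c) for c in chain.from_iterable(
    combinations(sorted(omega), r) for r in range(len(omega) + 1))]

def P(event):
    """Uniform measure for a fair die (fairness is an assumption)."""
    return Fraction(len(event), len(omega))

def satisfies_axioms():
    if P(omega) != 1 or P(frozenset()) != 0:
        return False
    # Additivity: P(A or B) = P(A) + P(B) whenever A and B are disjoint.
    return all(P(a | b) == P(a) + P(b)
               for a in events for b in events if not a & b)

print(len(events))              # 64
print(P(frozenset({2, 4, 6})))  # 1/2, the event "roll an even number"
print(satisfies_axioms())       # True
```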

https://en.wikipedia.org/wiki/Probability_space

In linear algebra, eigendecomposition is the factorization of a matrix into a canonical form, whereby the matrix is represented in terms of its eigenvalues and eigenvectors. Only diagonalizable matrices can be factorized in this way. When the matrix being factorized is a normal or real symmetric matrix, the decomposition is called "spectral decomposition", derived from the spectral theorem.
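A short NumPy sketch of both cases, with arbitrary example matrices: a diagonalizable matrix is rebuilt as $A = Q\Lambda Q^{-1}$ from `numpy.linalg.eig`, and for a real symmetric matrix `numpy.linalg.eigh` returns orthonormal eigenvectors, so the spectral decomposition reads $A = Q\Lambda Q^{\mathsf{T}}$.

```python
import numpy as np

# Diagonalizable (non-symmetric) example with distinct eigenvalues 5 and 2.
A = np.array([[4.0, 1.0],
              [2.0, 3.0]])
eigenvalues, Q = np.linalg.eig(A)  # columns of Q are eigenvectors
A_rebuilt = Q @ np.diag(eigenvalues) @ np.linalg.inv(Q)
print(np.allclose(A, A_rebuilt))   # True

# Real symmetric example: eigh gives orthonormal eigenvectors, so Q^{-1} = Q^T.
S = np.array([[2.0, 1.0],
              [1.0, 2.0]])
w, V = np.linalg.eigh(S)                     # eigenvalues in ascending order
print(w)                                     # [1. 3.]
print(np.allclose(S, V @ np.diag(w) @ V.T))  # True
```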

https://en.wikipedia.org/wiki/Eigendecomposition_of_a_matrix

In linear algebra, a symmetric matrix is a square matrix that is equal to its transpose. Formally,

$$A \text{ is symmetric} \iff A = A^{\mathsf{T}}.$$

Because equal matrices have equal dimensions, only square matrices can be symmetric.

The entries of a symmetric matrix are symmetric with respect to the main diagonal. So if $a_{ij}$ denotes the entry in the $i$th row and $j$th column, then

$$A \text{ is symmetric} \iff a_{ji} = a_{ij}$$

for all indices $i$ and $j$.

Every square diagonal matrix is symmetric, since all off-diagonal elements are zero. Similarly, in characteristic different from 2, each diagonal element of a skew-symmetric matrix must be zero, since each is its own negative.

In linear algebra, a real symmetric matrix represents a self-adjoint operator[1] over a real inner product space. The corresponding object for a complex inner product space is a Hermitian matrix with complex-valued entries, which is equal to its conjugate transpose. Therefore, in linear algebra over the complex numbers, it is often assumed that a symmetric matrix refers to one which has real-valued entries. Symmetric matrices appear naturally in a variety of applications, and typical numerical linear algebra software makes special accommodations for them.
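The definitions above translate directly into checks. In this sketch (helper names hypothetical, example matrices arbitrary) a diagonal matrix passes the symmetry test, a skew-symmetric matrix has a zero diagonal, and a Hermitian matrix, like a real symmetric one, has real eigenvalues, consistent with its being self-adjoint.

```python
import numpy as np

def is_symmetric(A):
    """Square and equal to its transpose (hypothetical helper)."""
    A = np.asarray(A)
    return A.ndim == 2 and A.shape[0] == A.shape[1] and np.allclose(A, A.T)

def is_hermitian(A):
    """Square and equal to its conjugate transpose (hypothetical helper)."""
    A = np.asarray(A)
    return A.ndim == 2 and A.shape[0] == A.shape[1] and np.allclose(A, A.conj().T)

D = np.diag([1.0, 5.0, -2.0])             # diagonal, hence symmetric
K = np.array([[0.0, 2.0], [-2.0, 0.0]])   # skew-symmetric: K.T == -K
H = np.array([[2.0, 1 - 1j], [1 + 1j, 3.0]])

print(is_symmetric(D))                    # True
print(np.allclose(K.T, -K), np.diag(K))   # True [0. 0.]
print(is_hermitian(H), is_symmetric(H))   # True False
print(np.linalg.eigvals(H))               # imaginary parts are (numerically) zero
```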

https://en.wikipedia.org/wiki/Symmetric_matrix

https://en.wikipedia.org/wiki/Symmetry

https://en.wikipedia.org/wiki/Supersymmetry

In linear algebra, an eigenvector (/ˈaɪɡənˌvɛktər/) or characteristic vector of a linear transformation is a nonzero vector that changes at most by a scalar factor when that linear transformation is applied to it. The corresponding eigenvalue, often denoted by $\lambda$,[1] is the factor by which the eigenvector is scaled.

Geometrically, an eigenvector, corresponding to a real nonzero eigenvalue, points in a direction in which it is stretched by the transformation and the eigenvalue is the factor by which it is stretched. If the eigenvalue is negative, the direction is reversed.[2] Loosely speaking, in a multidimensional vector space, the eigenvector is not rotated.
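One standard way to find an eigenvector numerically is power iteration (a technique not discussed in the source): repeatedly applying the transformation stretches the component along the dominant eigenvector more than the others, so the normalised vector converges to that direction. The sketch assumes a single eigenvalue of largest magnitude; the matrix is an arbitrary example with eigenvalues 3 and 1.

```python
import numpy as np

def power_iteration(A, iterations=200, seed=0):
    """Estimate the dominant eigenpair of A by repeated multiplication.

    Assumes one eigenvalue of strictly largest magnitude, so A^k x lines up
    with that eigenvector's direction.
    """
    rng = np.random.default_rng(seed)
    v = rng.normal(size=A.shape[0])
    for _ in range(iterations):
        v = A @ v
        v /= np.linalg.norm(v)
    eigenvalue = v @ A @ v  # Rayleigh quotient, since v has unit length
    return eigenvalue, v

A = np.array([[2.0, 1.0], [1.0, 2.0]])
lam, v = power_iteration(A)
print(round(lam, 6))                # 3.0
print(np.allclose(A @ v, lam * v))  # True: A only rescales v, it does not rotate it
```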

https://en.wikipedia.org/wiki/Eigenvalues_and_eigenvectors

The eigenstate thermalization hypothesis (or ETH) is a set of ideas which purports to explain when and why an isolated quantum mechanical system can be accurately described using equilibrium statistical mechanics. In particular, it is devoted to understanding how systems which are initially prepared in far-from-equilibrium states can evolve in time to a state which appears to be in thermal equilibrium. The phrase "eigenstate thermalization" was first coined by Mark Srednicki in 1994,[1] after similar ideas had been introduced by Josh Deutsch in 1991.[2] The principal philosophy underlying the eigenstate thermalization hypothesis is that instead of explaining the ergodicity of a thermodynamic system through the mechanism of dynamical chaos, as is done in classical mechanics, one should instead examine the properties of matrix elements of observable quantities in individual energy eigenstates of the system.

https://en.wikipedia.org/wiki/Eigenstate_thermalization_hypothesis

Catabolism (/kəˈtæbəlɪsm/) is the set of metabolic pathways that break down molecules into smaller units that are either oxidized to release energy or used in other anabolic reactions.[1] Catabolism breaks down large molecules (such as polysaccharides, lipids, nucleic acids, and proteins) into smaller units (such as monosaccharides, fatty acids, nucleotides, and amino acids, respectively). Catabolism is the breaking-down aspect of metabolism, whereas anabolism is the building-up aspect.

https://en.wikipedia.org/wiki/Catabolism

In physics, specifically statistical mechanics, an ensemble (also statistical ensemble) is an idealization consisting of a large number of virtual copies (sometimes infinitely many) of a system, considered all at once, each of which represents a possible state that the real system might be in. In other words, a statistical ensemble is a probability distribution for the state of the system. The concept of an ensemble was introduced by J. Willard Gibbs in 1902.[1]

A thermodynamic ensemble is a specific variety of statistical ensemble that, among other properties, is in statistical equilibrium (defined below), and is used to derive the properties of thermodynamic systems from the laws of classical or quantum mechanics.[2][3]
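As one concrete thermodynamic ensemble, the canonical ensemble assigns each state a probability proportional to $e^{-E_i/k_BT}$. The sketch below (units with $k_B = 1$; the two-level system and function names are hypothetical) computes those probabilities and the mean energy: at low temperature almost all copies sit in the ground state, and at high temperature the two states become nearly equally likely.

```python
import math

def canonical_probabilities(energies, temperature):
    """Boltzmann probabilities p_i = exp(-E_i / T) / Z, in units with k_B = 1."""
    e_min = min(energies)  # shifting all energies cancels in the ratio and avoids overflow
    weights = [math.exp(-(e - e_min) / temperature) for e in energies]
    z = sum(weights)
    return [w / z for w in weights]

def mean_energy(energies, temperature):
    probs = canonical_probabilities(energies, temperature)
    return sum(p * e for p, e in zip(probs, energies))

levels = [0.0, 1.0]  # hypothetical two-level system
for T in (0.1, 1.0, 100.0):
    p = canonical_probabilities(levels, T)
    print(f"T = {T}: p = [{p[0]:.4f}, {p[1]:.4f}], <E> = {mean_energy(levels, T):.4f}")
```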

https://en.wikipedia.org/wiki/Statistical_ensemble_(mathematical_physics)

The internal energy of a thermodynamic system is the energy contained within it. It is the energy necessary to create or prepare the system in any given internal state. It does not include the kinetic energy of motion of the system as a whole, nor the potential energy of the system as a whole due to external force fields, including the energy of displacement of the surroundings of the system. It keeps account of the gains and losses of energy of the system that are due to changes in its internal state.[1][2] The internal energy is measured as a difference from a reference zero defined by a standard state. The difference is determined by thermodynamic processes that carry the system between the reference state and the current state of interest.

https://en.wikipedia.org/wiki/Internal_energy

https://en.wikipedia.org/wiki/potential_energy

https://en.wikipedia.org/wiki/kinetic_energy

https://en.wikipedia.org/wiki/non-ideal_gas

https://en.wikipedia.org/wiki/conservation_of_momentum

https://en.wikipedia.org/wiki/chemical_property

https://en.wikipedia.org/wiki/physical_property

https://en.wikipedia.org/wiki/nuclear_transmutation

https://en.wikipedia.org/wiki/plasma

https://en.wikipedia.org/wiki/Thermodynamic_process

https://en.wikipedia.org/wiki/Thermodynamics

https://en.wikipedia.org/wiki/Thermodynamic_equilibrium

https://en.wikipedia.org/wiki/Observable_universe

https://en.wikipedia.org/wiki/Cosmic_microwave_background

https://en.wikipedia.org/wiki/Lambda-CDM_model

https://en.wikipedia.org/wiki/Atmosphere_of_Earth

https://en.wikipedia.org/wiki/Earth

https://en.wikipedia.org/wiki/Nucleon

https://en.wikipedia.org/wiki/Pressuron

https://en.wikipedia.org/wiki/Preon

https://en.wikipedia.org/wiki/Oscillation

https://en.wikipedia.org/wiki/Hooke

https://en.wikipedia.org/wiki/Boyle

https://en.wikipedia.org/wiki/Hyugens

https://en.wikipedia.org/wiki/Christiaan_Huygens

https://en.wikipedia.org/wiki/Robert_Boyle

https://en.wikipedia.org/wiki/Antoine_Lavoisier

https://en.wikipedia.org/wiki/Robert_Hooke

https://en.wikipedia.org/wiki/Electromagnetic_radiation

https://en.wikipedia.org/wiki/Graviton

https://en.wikipedia.org/wiki/Spindle

https://en.wikipedia.org/wiki/Tapestry

https://en.wikipedia.org/wiki/Filament

https://en.wikipedia.org/wiki/String

https://en.wikipedia.org/wiki/Bundle

https://en.wikipedia.org/wiki/Transform

https://en.wikipedia.org/wiki/Linear

https://en.wikipedia.org/wiki/Vacuum

https://en.wikipedia.org/wiki/Galaxy_filament

https://en.wikipedia.org/wiki/Accretion_disk

https://en.wikipedia.org/wiki/Black_hole

https://en.wikipedia.org/wiki/Astrophysical_jet

https://en.wikipedia.org/wiki/Explosive

https://en.wikipedia.org/wiki/compression

https://en.wikipedia.org/wiki/matrix

https://en.wikipedia.org/wiki/collapse

https://en.wikipedia.org/wiki/implosion

https://en.wikipedia.org/wiki/cascade

https://en.wikipedia.org/wiki/expansion

https://en.wikipedia.org/wiki/refraction

https://en.wikipedia.org/wiki/mirror

https://en.wikipedia.org/wiki/Psychological_statistics

https://psycnet.apa.org/fulltext/1988-18958-001.html

https://psychology.wikia.org/wiki/MANOVA

ANOVA - Psychology Stai

A grain elevator is an agrarian facility complex designed to stockpile or store grain. In the grain trade, the term "grain elevator" also describes a tower containing a bucket elevator or a pneumatic conveyor, which scoops up grain from a lower level and deposits it in a silo or other storage facility.

In most cases, grain elevator also describes the entire elevator complex, including receiving and testing offices, weighbridges, and storage facilities. It may also mean organizations that operate or control several individual elevators, in different locations. In Australia, the term describes only the lifting mechanism.

Before the advent of the grain elevator, grain was usually handled in bags rather than in bulk (large quantities of loose grain). Dart's Elevator was a major innovation. It was invented by Joseph Dart, a merchant, and Robert Dunbar, an engineer, in 1842 and 1843, in Buffalo, New York. Using the steam-powered flour mills of Oliver Evans as their model, they invented the marine leg, which scooped loose grain out of the hulls of ships and elevated it to the top of a marine tower.[1]

Early grain elevators and bins were often built of framed or cribbed wood, and were prone to fire. Grain-elevator bins, tanks, and silos are now usually made of steel or reinforced concrete. Bucket elevators are used to lift grain to a distributor or consignor, from which it falls through spouts and/or conveyors and into one or more bins, silos, or tanks in a facility. When desired, silos, bins, and tanks are emptied by gravity flow, sweep augers, and conveyors. As grain is emptied from bins, tanks, and silos, it is conveyed, blended, and weighed into trucks, railroad cars, or barges for shipment.

Elevator explosions

Given a large enough suspension of combustible flour or grain dust in the air, a significant explosion can occur. A historical example of the destructive power of grain explosions is the 1878 explosion of the Washburn "A" Mill in Minneapolis, Minnesota, which killed 18, leveled two nearby mills, damaged many others, and caused a destructive fire that gutted much of the nearby milling district. (The Washburn "A" mill was later rebuilt and continued to be used until 1965.) Another example occurred in 1998, when the DeBruce grain elevator in Wichita, Kansas, exploded and killed seven people.[38] A recent example is an explosion on October 29, 2011 at the Bartlett Grain Company in Atchison, Kansas. The death toll was six people. Two more men received severe burns, but the remaining four were not hurt.[39]

Almost any finely divided organic substance becomes an explosive material when dispersed as an air suspension; hence, a very fine flour is dangerously explosive in air suspension. This poses a significant risk when milling grain to produce flour, so mills go to great lengths to remove sources of sparks. These measures include carefully sifting the grain before it is milled or ground to remove stones, which could strike sparks from the millstones, and the use of magnets to remove metallic debris able to strike sparks.

The earliest recorded flour explosion took place in an Italian mill in 1785, but many have occurred since. Two references give the numbers of recorded flour and dust explosions in the United States in 1994[40] and 1997.[41] In the ten-year period up to and including 1997, there were 129 explosions.

https://en.wikipedia.org/wiki/Grain_elevator#Elevator_explosions

An explosive (or explosive material) is a reactive substance that contains a great amount of potential energy that can produce an explosion if released suddenly, usually accompanied by the production of light, heat, sound, and pressure. An explosive charge is a measured quantity of explosive material, which may either be composed solely of one ingredient or be a mixture containing at least two substances.

The potential energy stored in an explosive material may, for example, be

- chemical energy, such as nitroglycerin or grain dust

- pressurized gas, such as a gas cylinder, aerosol can, or BLEVE

- nuclear energy, such as in the fissile isotopes uranium-235 and plutonium-239

Explosive materials may be categorized by the speed at which they expand. Materials that detonate (the front of the chemical reaction moves faster through the material than the speed of sound) are said to be "high explosives" and materials that deflagrate are said to be "low explosives". Explosives may also be categorized by their sensitivity. Sensitive materials that can be initiated by a relatively small amount of heat or pressure are primary explosives and materials that are relatively insensitive are secondary or tertiary explosives.

A wide variety of chemicals can explode; a smaller number are manufactured specifically for the purpose of being used as explosives. The remainder are too dangerous, sensitive, toxic, expensive, unstable, or prone to decomposition or degradation over short time spans.

In contrast, some materials are merely combustible or flammable if they burn without exploding.

The distinction, however, is not razor-sharp. Certain materials—dusts, powders, gases, or volatile organic liquids—may be simply combustible or flammable under ordinary conditions, but become explosive in specific situations or forms, such as dispersed airborne clouds, or confinement or sudden release.

https://en.wikipedia.org/wiki/Explosive

Thermionic emission is the liberation of electrons from an electrode by virtue of its temperature (that is, through energy supplied by heat). This occurs because the thermal energy given to the charge carrier overcomes the work function of the material. The charge carriers can be electrons or ions, and in older literature are sometimes referred to as thermions. After emission, a charge that is equal in magnitude and opposite in sign to the total charge emitted is initially left behind in the emitting region. But if the emitter is connected to a battery, the charge left behind is neutralized by charge supplied by the battery as the emitted charge carriers move away from the emitter, and finally the emitter will be in the same state as it was before emission.

The classical example of thermionic emission is that of electrons from a hot cathode into a vacuum (also known as thermal electron emission or the Edison effect) in a vacuum tube. The hot cathode can be a metal filament, a coated metal filament, or a separate structure of metal or carbides or borides of transition metals. Vacuum emission from metals tends to become significant only for temperatures over 1,000 K (730 °C; 1,340 °F).

This process is crucially important in the operation of a variety of electronic devices and can be used for electricity generation (such as thermionic converters and electrodynamic tethers) or cooling. The magnitude of the charge flow increases dramatically with increasing temperature.
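The steep temperature dependence is commonly modelled by the Richardson–Dushman law, $J = \lambda_R A_0 T^2 e^{-W/k_BT}$, which the source does not state. The sketch below uses the universal constant $A_0 \approx 1.20173 \times 10^6\ \mathrm{A\,m^{-2}\,K^{-2}}$, treats the material factor $\lambda_R$ as 1, and assumes an illustrative work function of 4.5 eV (roughly that of tungsten); real emitters deviate from these values.

```python
import math

BOLTZMANN_EV = 8.617333262e-5  # k_B in eV/K
RICHARDSON_A0 = 1.20173e6      # universal Richardson constant, A m^-2 K^-2

def richardson_current_density(temperature_k, work_function_ev, lambda_r=1.0):
    """Richardson-Dushman estimate J = lambda_R * A0 * T^2 * exp(-W / (k_B T)) in A/m^2.

    lambda_r is a material-dependent correction; 1.0 here is a placeholder assumption.
    """
    return lambda_r * RICHARDSON_A0 * temperature_k**2 * math.exp(
        -work_function_ev / (BOLTZMANN_EV * temperature_k))

W = 4.5  # eV, illustrative value close to tungsten
for T in (1000, 1500, 2000, 2500):
    print(f"{T} K: J ~ {richardson_current_density(T, W):.3e} A/m^2")
```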

The term 'thermionic emission' is now also used to refer to any thermally-excited charge emission process, even when the charge is emitted from one solid-state region into another.

https://en.wikipedia.org/wiki/Thermionic_emission

https://en.wikipedia.org/wiki/Electrical_grid

https://en.wikipedia.org/wiki/Electric_power_conversion

https://en.wikipedia.org/wiki/Grid-tie_inverter

https://en.wikipedia.org/wiki/Nuclear_reactor

https://en.wikipedia.org/wiki/Sewerage

https://en.wikipedia.org/wiki/Hydroelectricity

https://en.wikipedia.org/wiki/Synchronverter

https://en.wikipedia.org/wiki/Water_supply_network

https://en.wikipedia.org/wiki/Pressure_vessel

https://en.wikipedia.org/wiki/Diving_chamber

https://en.wikipedia.org/wiki/Tether

https://en.wikipedia.org/wiki/Waveguide

https://en.wikipedia.org/wiki/pipe

https://en.wikipedia.org/wiki/F1_cathode

https://en.wikipedia.org/wiki/hollow_cathode

https://en.wikipedia.org/wiki/cold_cathode

https://en.wikipedia.org/wiki/linear_motor

https://en.wikipedia.org/wiki/linear_induction_motor

https://en.wikipedia.org/wiki/magnet

Vortex Plane

Tuesday, September 21, 2021

09-21-2021-1506 - fluid thread breakup

Fluid thread breakup is the process by which a single mass of fluid breaks into several smaller fluid masses. The process is characterized by the elongation of the fluid mass forming thin, thread-like regions between larger nodules of fluid. The thread-like regions continue to thin until they break, forming individual droplets of fluid.

Thread breakup occurs where two fluids or a fluid in a vacuum form a free surface with surface energy. If more surface area is present than the minimum required to contain the volume of fluid, the system has an excess of surface energy. A system not at the minimum energy state will attempt to rearrange so as to move toward the lower energy state, leading to the breakup of the fluid into smaller masses to minimize the system surface energy by reducing the surface area. The exact outcome of the thread breakup process is dependent on the surface tension, viscosity, density, and diameter of the thread undergoing breakup.
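The surface-energy argument can be checked with a rough geometric comparison, an illustration under simplifying assumptions rather than the full stability analysis: take a segment of thread of radius $R$ and length $L$, ignore its end caps, and compare its side area with that of a single sphere of the same volume. Since surface energy is surface tension times area, the drop is favoured once its area is smaller, which happens for $L > 4.5R$. (The Rayleigh–Plateau linear stability analysis gives a different threshold: perturbation wavelengths longer than $2\pi R$.) The function names are hypothetical.

```python
import math

def cylinder_side_area(radius, length):
    """Lateral area of a cylindrical thread segment (end caps ignored)."""
    return 2 * math.pi * radius * length

def sphere_area_same_volume(radius, length):
    """Area of one sphere holding the segment's volume pi * R^2 * L."""
    volume = math.pi * radius**2 * length
    r_sphere = (3 * volume / (4 * math.pi)) ** (1 / 3)
    return 4 * math.pi * r_sphere**2

R = 1.0
for L in (3.0, 4.0, 5.0, 6.0):
    thread = cylinder_side_area(R, L)
    drop = sphere_area_same_volume(R, L)
    verdict = "drop has less area" if drop < thread else "thread has less area"
    print(f"L = {L}R: thread {thread:.3f}, drop {drop:.3f} -> {verdict}")
```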

https://en.wikipedia.org/wiki/Fluid_thread_breakup

https://en.wikipedia.org/wiki/Category:Fluid_dynamic_instability

https://en.wikipedia.org/wiki/Category:Plasma_instabilities

https://en.wikipedia.org/wiki/Category:Fluid_dynamics

Tuesday, September 21, 2021

09-21-2021-1507 - vortex sheet

A vortex sheet is a term used in fluid mechanics for a surface across which there is a discontinuity in fluid velocity, such as in slippage of one layer of fluid over another.[1] While the tangential components of the flow velocity are discontinuous across the vortex sheet, the normal component of the flow velocity is continuous. The discontinuity in the tangential velocity means the flow has infinite vorticity on a vortex sheet.

At high Reynolds numbers, vortex sheets tend to be unstable. In particular, they may exhibit Kelvin–Helmholtz instability.

The formulation of the vortex sheet equation of motion is given in terms of a complex coordinate $z = x + iy$. The sheet is described parametrically by $z(s,t)$, where $s$ is the arclength between coordinate $z$ and a reference point, and $t$ is time. Let $\gamma(s,t)$ denote the strength of the sheet, that is, the jump in the tangential discontinuity. Then the velocity field induced by the sheet is

$$\frac{\partial z^*}{\partial t}(s,t) = \frac{1}{2\pi i}\,\mathrm{P}\!\int \frac{\gamma(s',t)\,ds'}{z(s,t) - z(s',t)}.$$

The integral in the above equation is a Cauchy principal value integral. We now define $\Gamma$ as the integrated sheet strength or circulation between a point with arc length $s$ and the reference material point $s = 0$ in the sheet:

$$\Gamma(s,t) = \int_0^s \gamma(s',t)\,ds', \qquad \frac{\partial \Gamma}{\partial s} = \gamma(s,t).$$

As a consequence of Kelvin's circulation theorem, in the absence of external forces on the sheet, the circulation between any two material points in the sheet remains conserved, so $d\Gamma/dt = 0$. The equation of motion of the sheet can be rewritten in terms of $\Gamma$ and $t$ by a change of variable: the parameter $s$ is replaced by $\Gamma$. That is,

$$\frac{\partial z^*}{\partial t}(\Gamma,t) = \frac{1}{2\pi i}\,\mathrm{P}\!\int \frac{d\Gamma'}{z(\Gamma,t) - z(\Gamma',t)}.$$

This nonlinear integro-differential equation is called the Birkhoff–Rott equation. It describes the evolution of the vortex sheet given initial conditions. Greater details on vortex sheets can be found in the textbook by Saffman (1977).
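A common way to compute with the Birkhoff–Rott equation is to replace the sheet by $N$ point vortices carrying circulations $\Delta\Gamma_j$, turning the principal-value integral into a sum that skips the self-term. The sketch below is that discretisation, not a method given in the source; the optional smoothing length `delta` follows the vortex-blob idea often used to tame the sheet's singular behaviour, and all names are hypothetical. It returns $dz/dt$, the complex conjugate of the right-hand side.

```python
import numpy as np

def sheet_velocity(z, d_gamma, delta=0.0):
    """Discretised Birkhoff-Rott right-hand side (point-vortex approximation).

    z       : complex array, positions of the N markers on the sheet
    d_gamma : real array, circulation carried by each marker
    delta   : smoothing length; 0.0 gives plain point vortices
    Returns dz/dt for every marker.
    """
    dz = z[:, None] - z[None, :]     # dz[k, j] = z_k - z_j
    denom = np.abs(dz) ** 2 + delta ** 2
    np.fill_diagonal(denom, np.inf)  # skip j == k, approximating the principal value
    # 1 / (z_k - z_j) = conj(z_k - z_j) / |z_k - z_j|^2, here smoothed by delta
    conj_velocity = (d_gamma[None, :] * np.conj(dz) / denom).sum(axis=1) / (2j * np.pi)
    return np.conj(conj_velocity)

z = np.array([1.0 + 0.0j, -1.0 + 0.0j])
d_gamma = np.array([1.0, 1.0])
print(sheet_velocity(z, d_gamma))  # equal and opposite, purely imaginary
```

For two equal vortices at $z = \pm 1$ the velocities come out equal and opposite and perpendicular to the line joining them, so the pair co-rotates, as expected for like-signed vortices. Time-stepping the markers (for example with a Runge–Kutta scheme) would then evolve the discretised sheet.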

https://en.wikipedia.org/wiki/Vortex_sheet

https://en.wikipedia.org/wiki/Category:Fluid_dynamic_instability

https://en.wikipedia.org/wiki/Category:Plasma_instabilities

https://en.wikipedia.org/wiki/Category:Fluid_dynamics

Oscillation is the repetitive variation, typically in time, of some measure about a central value (often a point of equilibrium) or between two or more different states. The term vibration is precisely used to describe mechanical oscillation. Familiar examples of oscillation include a swinging pendulum and alternating current.

Oscillations occur not only in mechanical systems but also in dynamic systems in virtually every area of science: for example the beating of the human heart (for circulation), business cycles in economics, predator–prey population cycles in ecology, geothermal geysers in geology, vibration of strings in guitar and other string instruments, periodic firing of nerve cells in the brain, and the periodic swelling of Cepheid variable stars in astronomy.

https://en.wikipedia.org/wiki/Oscillation

The Kelvin–Helmholtz instability (after Lord Kelvin and Hermann von Helmholtz) typically occurs when there is velocity shear in a single continuous fluid, or where there is a velocity difference across the interface between two fluids. A common example is seen when wind blows across a water surface; the instability manifests as waves. Kelvin–Helmholtz instabilities are also visible in the atmospheres of planets and moons, such as in cloud formations on Earth or the Great Red Spot on Jupiter, and in the atmospheres (coronas) of stars, including the Sun's.[1]
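For the simplest idealised case, a plane vortex sheet between two inviscid fluids with gravity and surface tension neglected, linear theory gives a growth rate $\sigma = k\,|\Delta U|\,\sqrt{\rho_1\rho_2}/(\rho_1 + \rho_2)$ for a disturbance of wavenumber $k$. The formula is standard but is not stated in the source, and the numbers in the sketch are illustrative. In this limit every wavelength grows and shorter waves grow faster; gravity and surface tension, left out here, stabilise part of the spectrum in real flows such as wind over water.

```python
import math

def kh_growth_rate(k, delta_u, rho1, rho2):
    """Linear growth rate (1/s) of a Kelvin-Helmholtz mode on a plane vortex sheet.

    Assumes inviscid fluids and neglects gravity and surface tension.
    k: wavenumber (1/m), delta_u: velocity jump (m/s), rho1, rho2: densities (kg/m^3).
    """
    return k * abs(delta_u) * math.sqrt(rho1 * rho2) / (rho1 + rho2)

# Equal densities: sigma reduces to k * |delta_u| / 2.
print(kh_growth_rate(k=1.0, delta_u=2.0, rho1=1.0, rho2=1.0))  # 1.0

# Illustrative air-over-water densities (gravity still neglected).
print(kh_growth_rate(k=1.0, delta_u=10.0, rho1=1.2, rho2=1000.0))
```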

https://en.wikipedia.org/wiki/Kelvin–Helmholtz_instability

https://en.wikipedia.org/wiki/Non-Hermitian_quantum_mechanics

Tuesday, September 21, 2021

09-21-2021-1501 - Gamow–Sommerfeld factor gamow factor Gamow Radium Curie George Gamow window

Tuesday, September 21, 2021

09-21-2021-1500 - Coulomb barrier

Monday, September 20, 2021

09-20-2021-1052 - mass–energy equivalence mass energy equivalence charge mass equivalence zero setting etc.

Monday, September 20, 2021

09-20-2021-0859 - Matrix Mechanics

https://en.wikipedia.org/wiki/Scalar_field

https://en.wikipedia.org/wiki/Ladder_operator

https://nikiyaantonbettey.blogspot.com/2021/09/09-18-2021-0742-electron-gun-also.html

https://nikiyaantonbettey.blogspot.com/2021/09/09-18-2021-0745-synchronous-electric.html

https://nikiyaantonbettey.blogspot.com/2021/09/09-18-2021-0738-magnetic-scalar.html

https://nikiyaantonbettey.blogspot.com/2021/09/09-18-2021-0739-pyrolytic-carbon.html

https://nikiyaantonbettey.blogspot.com/2021/09/09-18-2021-0356-fixed-stars.html

https://nikiyaantonbettey.blogspot.com/2021/09/09-19-2021-1447-bunsen-detected.html

The holographic principle is a tenet of string theories and a supposed property of quantum gravity that states that the description of a volume of space can be thought of as encoded on a lower-dimensional boundary to the region—such as a light-like boundary like a gravitational horizon. First proposed by Gerard 't Hooft, it was given a precise string-theory interpretation by Leonard Susskind,[1] who combined his ideas with previous ones of 't Hooft and Charles Thorn.[1][2] As pointed out by Raphael Bousso,[3] Thorn observed in 1978 that string theory admits a lower-dimensional description in which gravity emerges from it in what would now be called a holographic way. The prime example of holography is the AdS/CFT correspondence.

The holographic principle was inspired by black hole thermodynamics, which conjectures that the maximal entropy in any region scales with the radius squared, and not cubed as might be expected. In the case of a black hole, the insight was that the informational content of all the objects that have fallen into the hole might be entirely contained in surface fluctuations of the event horizon. The holographic principle resolves the black hole information paradox within the framework of string theory.[4] However, there exist classical solutions to the Einstein equations that allow values of the entropy larger than those allowed by an area law, hence in principle larger than those of a black hole. These are the so-called "Wheeler's bags of gold". The existence of such solutions conflicts with the holographic interpretation, and their effects in a quantum theory of gravity including the holographic principle are not fully understood yet.[5]
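The area scaling can be made explicit with the Bekenstein–Hawking formula $S = k_B A / (4\ell_P^2)$, with $\ell_P^2 = \hbar G / c^3$. The sketch assumes a non-rotating, uncharged (Schwarzschild) black hole and uses CODATA-style constants; it shows that doubling the mass doubles the horizon radius and quadruples the entropy, rather than multiplying it by eight as a volume law would. The function names are hypothetical.

```python
import math

G = 6.67430e-11          # gravitational constant, m^3 kg^-1 s^-2
C = 299_792_458.0        # speed of light, m/s
HBAR = 1.054571817e-34   # reduced Planck constant, J s
SOLAR_MASS = 1.98847e30  # kg

def schwarzschild_radius(mass):
    return 2 * G * mass / C**2

def bekenstein_hawking_entropy(mass):
    """Entropy in units of k_B: S / k_B = A / (4 * l_P^2), with l_P^2 = hbar * G / c^3."""
    area = 4 * math.pi * schwarzschild_radius(mass) ** 2
    planck_area = HBAR * G / C**3
    return area / (4 * planck_area)

s1 = bekenstein_hawking_entropy(SOLAR_MASS)
s2 = bekenstein_hawking_entropy(2 * SOLAR_MASS)
print(f"{s1:.2e}")  # of order 1e77 for one solar mass
print(s2 / s1)      # about 4: twice the radius, four times the entropy
```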

https://en.wikipedia.org/wiki/Holographic_principle

https://en.wikipedia.org/wiki/Stephen_Hawking

https://en.wikipedia.org/wiki/Alexander_Markovich_Polyakov

https://en.wikipedia.org/wiki/Edward_Witten

https://en.wikipedia.org/wiki/Gerard_%27t_Hooft

https://en.wikipedia.org/wiki/Black_hole_thermodynamics

https://en.wikipedia.org/wiki/Dihedral_angle
