PANs are secondary pollutants, which means they are not directly emitted as exhaust from power plants or internal combustion engines but are formed from other pollutants by chemical reactions in the atmosphere.

Free radical reactions catalyzed by ultraviolet light from the sun oxidize unburned hydrocarbons to aldehydes, ketones, and dicarbonyl compounds. Secondary reactions of these compounds create peroxyacyl radicals, which combine with nitrogen dioxide to form peroxyacyl nitrates.

The most common peroxyacyl radical is peroxyacetyl, which can be formed from the free radical oxidation of acetaldehyde, various ketones, or the photolysis of dicarbonyl compounds such as methylglyoxal or diacetyl.


Since they dissociate quite slowly in the atmosphere into radicals and NO2, PANs can transport these unstable compounds far from their urban and industrial sources.

Both PANs and their chlorinated derivatives are reported to be mutagenic and may be a factor in causing skin cancer.

https://en.wikipedia.org/wiki/Peroxyacyl_nitrates

Photodissociation, photolysis, or photodecomposition is a chemical reaction in which a chemical compound is broken down by photons. It is defined as the interaction of one or more photons with one target molecule. Photodissociation is not limited to visible light. Any photon with sufficient energy can affect the chemical bonds of a chemical compound. Since a photon's energy is inversely proportional to its wavelength, electromagnetic waves with the energy of visible light or higher, such as ultraviolet light, x-rays and gamma rays are usually involved in such reactions.
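The inverse relation between wavelength and photon energy is E = hc/λ. A minimal Python sketch (the function names are illustrative, not from any library) converts a wavelength into the energy carried by one mole of photons, in kilocalories per mole, the unit this article uses for reaction energies:

```python
# Exact SI constants (2019 redefinition) plus the thermochemical calorie.
PLANCK_H = 6.62607015e-34      # J*s
SPEED_OF_LIGHT = 2.99792458e8  # m/s
AVOGADRO = 6.02214076e23       # photons per mole (one einstein)
JOULES_PER_KCAL = 4184.0


def photon_energy_joules(wavelength_nm: float) -> float:
    """Energy of a single photon, E = h*c/lambda."""
    return PLANCK_H * SPEED_OF_LIGHT / (wavelength_nm * 1e-9)


def photon_energy_kcal_per_mol(wavelength_nm: float) -> float:
    """Energy carried by one mole of photons of the given wavelength."""
    return photon_energy_joules(wavelength_nm) * AVOGADRO / JOULES_PER_KCAL


# Shorter wavelength -> proportionally more energy per photon.
for label, nm in [("red", 700.0), ("violet", 400.0), ("UV", 300.0), ("X-ray", 1.0)]:
    print(f"{label:7s} {nm:6.0f} nm -> {photon_energy_kcal_per_mol(nm):10.1f} kcal/mol")
```

Halving the wavelength doubles the energy, which is why ultraviolet and shorter wavelengths are the usual drivers of bond cleavage.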

Photolysis in photosynthesis

Photolysis is part of the light-dependent reaction or light phase or photochemical phase or Hill reaction of photosynthesis. The general reaction of photosynthetic photolysis can be given as

- H2A + 2 photons (light) → 2 e− + 2 H+ + A

The chemical nature of "A" depends on the type of organism. In purple sulfur bacteria, hydrogen sulfide (H2S) is oxidized to sulfur (S). In oxygenic photosynthesis, water (H2O) serves as a substrate for photolysis resulting in the generation of diatomic oxygen (O2). This is the process which returns oxygen to Earth's atmosphere. Photolysis of water occurs in the thylakoids of cyanobacteria and the chloroplasts of green algae and plants.

Energy transfer models

The conventional, semi-classical, model describes the photosynthetic energy transfer process as one in which excitation energy hops from light-capturing pigment molecules to reaction center molecules step-by-step down the molecular energy ladder.

The effectiveness of photons of different wavelengths depends on the absorption spectra of the photosynthetic pigments in the organism. Chlorophylls absorb light in the violet-blue and red parts of the spectrum, while accessory pigments capture other wavelengths as well. The phycobilins of red algae absorb blue-green light which penetrates deeper into water than red light, enabling them to photosynthesize in deep waters. Each absorbed photon causes the formation of an exciton (an electron excited to a higher energy state) in the pigment molecule. The energy of the exciton is transferred to a chlorophyll molecule (P680, where P stands for pigment and 680 for its absorption maximum at 680 nm) in the reaction center of photosystem II via resonance energy transfer. P680 can also directly absorb a photon at a suitable wavelength.

Photolysis during photosynthesis occurs in a series of light-driven oxidation events. The energized electron (exciton) of P680 is captured by a primary electron acceptor of the photosynthetic electron transfer chain and thus exits photosystem II. In order to repeat the reaction, the electron in the reaction center needs to be replenished. This occurs by oxidation of water in the case of oxygenic photosynthesis. The electron-deficient reaction center of photosystem II (P680+) is the strongest biological oxidizing agent yet discovered, which allows it to break apart molecules as stable as water.[1]

The water-splitting reaction is catalyzed by the oxygen evolving complex of photosystem II. This protein-bound inorganic complex contains four manganese ions, plus calcium and chloride ions as cofactors. Two water molecules are complexed by the manganese cluster, which then undergoes a series of four electron removals (oxidations) to replenish the reaction center of photosystem II. At the end of this cycle, free oxygen (O2) is generated and the hydrogen of the water molecules has been converted to four protons released into the thylakoid lumen (the S-state, or Kok, cycle).[citation needed]
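The four-step cycle can be pictured as a small state machine: each photochemical turnover removes one electron from the manganese cluster, and the fourth removal releases O2 and resets the cluster. The sketch below is a deliberately simplified toy (class and field names are hypothetical); it starts in S0 for simplicity, although dark-adapted complexes rest mostly in S1, and it books the four protons in aggregate rather than modelling the real per-step release pattern.

```python
from dataclasses import dataclass


@dataclass
class OxygenEvolvingComplex:
    """Toy S-state cycle: s_state counts stored oxidizing equivalents (0..3)."""
    s_state: int = 0
    electrons_donated: int = 0   # electrons passed to the P680+ reaction center
    protons_released: int = 0    # protons released into the thylakoid lumen
    o2_released: int = 0
    water_consumed: int = 0

    def photon_driven_oxidation(self) -> None:
        # Each re-reduction of P680+ removes one electron from the Mn cluster.
        self.s_state += 1
        self.electrons_donated += 1
        if self.s_state == 4:
            # S4 is transient: two bound waters are oxidized to O2 and the
            # cluster returns to S0 (protons booked here in aggregate).
            self.water_consumed += 2
            self.protons_released += 4
            self.o2_released += 1
            self.s_state = 0


oec = OxygenEvolvingComplex()
for _ in range(8):  # eight photochemical turnovers of photosystem II
    oec.photon_driven_oxidation()
print(oec)
```

Eight turnovers consume four water molecules and release two O2, matching the stoichiometry of four electron removals per O2.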

These protons, as well as additional protons pumped across the thylakoid membrane coupled with the electron transfer chain, form a proton gradient across the membrane that drives photophosphorylation and thus the generation of chemical energy in the form of adenosine triphosphate (ATP). The electrons reach the P700 reaction center of photosystem I where they are energized again by light. They are passed down another electron transfer chain and finally combine with the coenzyme NADP+ and protons outside the thylakoids to form NADPH. Thus, the net oxidation reaction of water photolysis can be written as:

- 2 H2O + 2 NADP+ + 8 photons (light) → 2 NADPH + 2 H+ + O2

The free energy change (ΔG) for this reaction is 102 kilocalories per mole. Since the energy of light at 700 nm is about 40 kilocalories per mole of photons, the 8 photons in the equation supply approximately 320 kilocalories of light energy per mole of O2. Therefore, approximately one-third of the available light energy is captured as NADPH during photolysis and electron transfer. An equal amount of ATP is generated by the resulting proton gradient. Oxygen, as a byproduct, is of no further use to the reaction and is therefore released into the atmosphere.[2]
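The energy budget can be rechecked with exact constants. This sketch takes ΔG = 102 kcal/mol and the 8-photon stoichiometry from the text and, like the text, treats every photon as 700 nm light (an approximation, since photosystem II absorbs near 680 nm):

```python
PLANCK_H = 6.62607015e-34      # J*s
SPEED_OF_LIGHT = 2.99792458e8  # m/s
AVOGADRO = 6.02214076e23       # 1/mol
JOULES_PER_KCAL = 4184.0

DELTA_G_KCAL_PER_MOL_O2 = 102.0   # free energy change quoted in the text
PHOTONS_PER_O2 = 8                # from the net equation
WAVELENGTH_NM = 700.0             # text treats all photons as ~700 nm


def einstein_kcal(wavelength_nm: float) -> float:
    """Energy of one mole of photons, in kcal."""
    joules_per_photon = PLANCK_H * SPEED_OF_LIGHT / (wavelength_nm * 1e-9)
    return joules_per_photon * AVOGADRO / JOULES_PER_KCAL


light_supplied = PHOTONS_PER_O2 * einstein_kcal(WAVELENGTH_NM)
efficiency = DELTA_G_KCAL_PER_MOL_O2 / light_supplied
print(f"light supplied: {light_supplied:.0f} kcal per mol O2")
print(f"fraction stored as NADPH: {efficiency:.0%}")
```

With the unrounded photon energy (about 40.8 rather than 40 kcal/mol) the stored fraction comes out slightly below one-third, consistent with the approximate figure in the text.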

Quantum models

In 2007 a quantum model was proposed by Graham Fleming and his co-workers which includes the possibility that photosynthetic energy transfer might involve quantum oscillations, explaining its unusually high efficiency.[3]

According to Fleming[4] there is direct evidence that remarkably long-lived wavelike electronic quantum coherence plays an important part in energy transfer processes during photosynthesis, which can explain the extreme efficiency of the energy transfer because it enables the system to sample all the potential energy pathways, with low loss, and choose the most efficient one. This claim has, however, since been proven wrong in several publications.[5][6][7][8][9]

This approach has been further investigated by Gregory Scholes and his team at the University of Toronto, which in early 2010 published research results that indicate that some marine algae make use of quantum-coherent electronic energy transfer (EET) to enhance the efficiency of their energy harnessing.[10][11][12]

Photoinduced proton transfer

Photoacids are molecules that upon light absorption undergo a proton transfer to form the photobase.

In these reactions the dissociation occurs in the electronically excited state. After proton transfer and relaxation to the electronic ground state, the proton and the photobase recombine to form the photoacid again.

Photoacids are a convenient source to induce pH jumps in ultrafast laser spectroscopy experiments.

Photolysis in the atmosphere

Photolysis occurs in the atmosphere as part of a series of reactions by which primary pollutants such as hydrocarbons and nitrogen oxides react to form secondary pollutants such as peroxyacyl nitrates. See photochemical smog.

The two most important photodissociation reactions in the troposphere are firstly:

- O3 + hν → O2 + O(1D)  (λ < 320 nm)

which generates an excited oxygen atom which can react with water to give the hydroxyl radical:

- O(1D) + H2O → 2 •OH

The hydroxyl radical is central to atmospheric chemistry as it initiates the oxidation of hydrocarbons in the atmosphere and so acts as a detergent.

Secondly the reaction:

- NO2 + hν → NO + O

is a key reaction in the formation of tropospheric ozone.

The formation of the ozone layer is also caused by photodissociation. Ozone in the Earth's stratosphere is created by ultraviolet light striking oxygen molecules containing two oxygen atoms (O2), splitting them into individual oxygen atoms (atomic oxygen). The atomic oxygen then combines with unbroken O2 to create ozone, O3. In addition, photolysis is the process by which CFCs are broken down in the upper atmosphere to form ozone-destroying chlorine free radicals.

Astrophysics

In astrophysics, photodissociation is one of the major processes through which molecules are broken down (while new molecules are also being formed). Because of the vacuum of the interstellar medium, molecules and free radicals can exist for a long time, and photodissociation is the main path by which they are broken down. Photodissociation rates are important in the study of the composition of interstellar clouds in which stars are formed.

Examples of photodissociation in the interstellar medium are (hν is the energy of a single photon of frequency ν):

Atmospheric gamma-ray bursts

Currently orbiting satellites detect an average of about one gamma-ray burst per day. Because gamma-ray bursts are visible to distances encompassing most of the observable universe, a volume encompassing many billions of galaxies, this suggests that gamma-ray bursts must be exceedingly rare events per galaxy.

Measuring the exact rate of gamma-ray bursts is difficult, but for a galaxy of approximately the same size as the Milky Way, the expected rate (for long GRBs) is about one burst every 100,000 to 1,000,000 years.[13] Only a few percent of these would be beamed towards Earth. Estimates of rates of short GRBs are even more uncertain because of the unknown beaming fraction, but are probably comparable.[14]
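The quoted rates can be turned into rough counts over geological time. In this sketch the per-galaxy rate range comes from the text, while the 2% beaming fraction is an assumption standing in for "a few percent":

```python
RATE_RANGE_PER_YEAR = (1 / 1_000_000, 1 / 100_000)  # long GRBs per Milky-Way-sized galaxy
BEAMED_FRACTION = 0.02        # assumption: "a few percent" taken as 2 %
SPAN_YEARS = 1_000_000_000    # one billion years


def expected_bursts(rate_per_year: float, span_years: float = SPAN_YEARS,
                    beamed_fraction: float = BEAMED_FRACTION):
    """Return (all bursts in the galaxy, bursts beamed towards Earth) over span_years."""
    total = rate_per_year * span_years
    return total, total * beamed_fraction


for rate in RATE_RANGE_PER_YEAR:
    total, beamed = expected_bursts(rate)
    print(f"rate {rate:.0e}/yr: ~{total:,.0f} bursts, ~{beamed:,.0f} beamed at Earth per Gyr")
```

These counts include bursts anywhere in the galaxy, most of them far too distant to matter; only the small subset that is both beamed at Earth and nearby is relevant to the biosphere.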

A gamma-ray burst in the Milky Way, if close enough to Earth and beamed towards it, could have significant effects on the biosphere. The absorption of radiation in the atmosphere would cause photodissociation of nitrogen, generating nitric oxide that would act as a catalyst to destroy ozone.[15]

The atmospheric photodissociation would yield:

- NO2 (consumes up to 400 ozone molecules)

- CH2 (nominal)

- CH4 (nominal)

- CO2

(incomplete)

According to a 2004 study, a GRB at a distance of about a kiloparsec could destroy up to half of Earth's ozone layer; the direct UV irradiation from the burst, combined with additional solar UV radiation passing through the diminished ozone layer, could then significantly affect the food chain and potentially trigger a mass extinction.[16][17] The authors estimate that one such burst is expected per billion years, and hypothesize that the Ordovician-Silurian extinction event could have been the result of such a burst.

There are strong indications that long gamma-ray bursts preferentially or exclusively occur in regions of low metallicity. Because the Milky Way has been metal-rich since before the Earth formed, this effect may diminish or even eliminate the possibility that a long gamma-ray burst has occurred within the Milky Way within the past billion years.[18] No such metallicity biases are known for short gamma-ray bursts. Thus, depending on their local rate and beaming properties, the possibility for a nearby event to have had a large impact on Earth at some point in geological time may still be significant.[19]

Multiple photon dissociation

Single photons in the infrared spectral range are usually not energetic enough for direct photodissociation of molecules. However, after absorbing multiple infrared photons, a molecule may gain enough internal energy to overcome its dissociation barrier. Multiple photon dissociation (MPD, or IRMPD with infrared radiation) can be achieved by applying high-power lasers, e.g. a carbon dioxide laser or a free-electron laser, or by long interaction times of the molecule with the radiation field without the possibility of rapid cooling, e.g. by collisions. The latter method even allows MPD induced by black-body radiation, a technique called blackbody infrared radiative dissociation (BIRD).
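A quick estimate shows why many infrared photons are needed. Carbon dioxide lasers commonly emit near 10.6 µm; the 40 kcal/mol barrier below is a hypothetical value chosen for illustration, and the result is only a lower bound (it ignores anharmonicity, energy redistribution losses and the molecule's initial thermal energy):

```python
import math

# h*c*N_A expressed in kcal*m/mol, so dividing by a wavelength in metres
# gives the energy of one mole of photons in kcal/mol.
HC_NA_KCAL_M = 6.62607015e-34 * 2.99792458e8 * 6.02214076e23 / 4184.0


def photon_kcal_per_mol(wavelength_um: float) -> float:
    """Energy per mole of photons at the given wavelength (micrometres)."""
    return HC_NA_KCAL_M / (wavelength_um * 1e-6)


def min_photons_to_dissociate(barrier_kcal_per_mol: float, wavelength_um: float) -> int:
    """Lower bound on the number of photons that must be absorbed."""
    return math.ceil(barrier_kcal_per_mol / photon_kcal_per_mol(wavelength_um))


CO2_LASER_UM = 10.6   # typical CO2 laser line
BARRIER_KCAL = 40.0   # hypothetical dissociation barrier
print(f"{photon_kcal_per_mol(CO2_LASER_UM):.2f} kcal/mol per photon")
print(min_photons_to_dissociate(BARRIER_KCAL, CO2_LASER_UM), "photons needed at minimum")
```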


References

- ^ Campbell, Neil A.; Reece, Jane B. (2005). Biology (7th ed.). San Francisco: Pearson – Benjamin Cummings. pp. 186–191. ISBN 0-8053-7171-0.

- ^ Raven, Peter H.; Ray F. Evert; Susan E. Eichhorn (2005). Biology of Plants (7th ed.). New York: W.H. Freeman and Company Publishers. pp. 115–127. ISBN 0-7167-1007-2.

- ^ Engel Gregory S., Calhoun Tessa R., Read Elizabeth L., Ahn Tae-Kyu, Mančal Tomáš, Cheng Yuan-Chung, Blankenship Robert E., Fleming Graham R. (2007). "Evidence for wavelike energy transfer through quantum coherence in photosynthetic systems". Nature. 446: 782–786. Bibcode:2007Natur.446..782E. doi:10.1038/nature05678. PMID 17429397.

- ^ http://www.physorg.com/news95605211.html Quantum secrets of photosynthesis revealed

- ^ R. Tempelaar; T. L. C. Jansen; J. Knoester (2014). "Vibrational Beatings Conceal Evidence of Electronic Coherence in the FMO Light-Harvesting Complex". J. Phys. Chem. B. 118 (45): 12865–12872. doi:10.1021/jp510074q. PMID 25321492.

- ^ N. Christenson; H. F. Kauffmann; T. Pullerits; T. Mancal (2012). "Origin of Long-Lived Coherences in Light-Harvesting Complexes". J. Phys. Chem. B. 116: 7449–7454. arXiv:1201.6325. doi:10.1021/jp304649c. PMC 3789255. PMID 22642682.

- ^ E. Thyrhaug; K. Zidek; J. Dostal; D. Bina; D. Zigmantas (2016). "Exciton Structure and Energy Transfer in the Fenna–Matthews–Olson Complex". J. Phys. Chem. Lett. 7 (9): 1653–1660. doi:10.1021/acs.jpclett.6b00534. PMID 27082631.

- ^ A. G. Dijkstra; Y. Tanimura (2012). "The role of the environment time scale in light-harvesting efficiency and coherent oscillations". New J. Phys. 14 (7): 073027. Bibcode:2012NJPh...14g3027D. doi:10.1088/1367-2630/14/7/073027.

- ^ D. M. Monahan; L. Whaley-Mayda; A. Ishizaki; G. R. Fleming (2015). "Influence of weak vibrational-electronic couplings on 2D electronic spectra and inter-site coherence in weakly coupled photosynthetic complexes". J. Chem. Phys. 143 (6): 065101. Bibcode:2015JChPh.143f5101M. doi:10.1063/1.4928068. PMID 26277167.

- ^ "Scholes Group Research". Archived from the original on 2018-09-30. Retrieved 2010-03-23.

- ^ Gregory D. Scholes (7 January 2010), "Quantum-coherent electronic energy transfer: Did Nature think of it first?", Journal of Physical Chemistry Letters, 1 (1): 2–8, doi:10.1021/jz900062f

- ^ Elisabetta Collini; Cathy Y. Wong; Krystyna E. Wilk; Paul M. G. Curmi; Paul Brumer; Gregory D. Scholes (4 February 2010), "Coherently wired light-harvesting in photosynthetic marine algae at ambient temperature", Nature, 463 (7281): 644–7, Bibcode:2010Natur.463..644C, doi:10.1038/nature08811, PMID 20130647

- ^ Podsiadlowski 2004[citation not found]

- ^ Guetta 2006[citation not found]

- ^ Thorsett 1995[citation not found]

- ^ Melott 2004[citation not found]

- ^ Wanjek 2005[citation not found]

- ^ Stanek 2006[citation not found]

- ^ Ejzak 2007[citation not found]


https://en.wikipedia.org/wiki/Photodissociation

Photoacids are molecules which become more acidic upon absorption of light. Either the light causes a photodissociation to produce a strong acid or the light causes photoassociation (such as a ring forming reaction) that leads to an increased acidity and dissociation of a proton.

There are two main types of molecules that release protons upon illumination: photoacid generators (PAGs) and photoacids (PAHs). PAGs undergo proton photodissociation irreversibly, while PAHs undergo proton photodissociation followed by thermal reassociation.[1] In this latter case, the excited state is strongly acidic, but the proton release is reversible.

Photoacid generators

An example due to photodissociation is triphenylsulfonium triflate. This colourless salt consists of a sulfonium cation and the triflate anion. Many related salts are known including those with other noncoordinating anions and those with diverse substituents on the phenyl rings.

The triphenylsulfonium salts absorb at a wavelength of 233 nm, which induces a dissociation of one of the three phenyl rings. This dissociated phenyl radical then re-combines with remaining diphenylsulfonium to liberate an H+ ion.[2] The second reaction is irreversible, and therefore the entire process is irreversible, so triphenylsulfonium triflate is a photoacid generator. The ultimate products are thus a neutral organic sulfide and the strong acid triflic acid.

- [(C6H5)3S+][CF3SO3−] + hν → [(C6H5)2S+•][CF3SO3−] + C6H5•

- [(C6H5)2S+•][CF3SO3−] + C6H5• → (C6H5C6H4)(C6H5)S + [CF3SO3−][H+]

Applications of these photoacids include photolithography[3] and catalysis of the polymerization of epoxides.

Photoacids

An example of a photoacid which undergoes excited-state proton transfer without prior photolysis is the fluorescent dye pyranine (8-hydroxy-1,3,6-pyrenetrisulfonate or HPTS).[4]

The Förster cycle was proposed by Theodor Förster[5] and combines knowledge of the ground state acid dissociation constant (pKa), absorption, and fluorescence spectra to predict the pKa in the excited state of a photoacid.
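In its usual form the cycle gives pKa* = pKa − N_A·h·c·(ν̃_HA − ν̃_A−) / (2.303·R·T), where ν̃_HA and ν̃_A− are the 0–0 transition wavenumbers of the acid and its conjugate base, commonly estimated from the average of the absorption and fluorescence maxima. A minimal sketch, with a hypothetical photoacid whose spectral values are made up for illustration:

```python
import math

PLANCK_H = 6.62607015e-34      # J*s
SPEED_OF_LIGHT = 2.99792458e8  # m/s
AVOGADRO = 6.02214076e23       # 1/mol
GAS_CONSTANT = 8.314462618     # J/(mol*K)


def forster_pka_star(pka_ground: float, nu_acid_cm: float, nu_base_cm: float,
                     temperature_k: float = 298.15) -> float:
    """Förster cycle estimate of the excited-state pKa.

    nu_acid_cm / nu_base_cm: 0-0 transition wavenumbers (cm^-1) of HA and A-.
    A red-shifted anion (nu_base < nu_acid) makes the excited state more acidic.
    """
    delta_nu_per_m = (nu_acid_cm - nu_base_cm) * 100.0               # cm^-1 -> m^-1
    delta_e = AVOGADRO * PLANCK_H * SPEED_OF_LIGHT * delta_nu_per_m  # J/mol
    return pka_ground - delta_e / (math.log(10) * GAS_CONSTANT * temperature_k)


# Hypothetical photoacid: ground-state pKa 7.0, HA at 25,000 cm^-1, A- at 22,000 cm^-1.
print(f"pKa* ~ {forster_pka_star(7.0, 25_000.0, 22_000.0):.2f}")
```

At room temperature each 1,000 cm⁻¹ of red shift in the anion lowers the estimated pKa by roughly two units.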

References

- ^ V. K. Johns, P. K. Patel, S. Hassett, P. Calvo-Marzal, Y. Qin and K. Y. Chumbimuni-Torres, Visible Light Activated Ion Sensing Using a Photoacid Polymer for Calcium Detection, Anal. Chem. 2014, 86, 6184−6187. (Published online: 3 June 2014) doi:10.1021/ac500956j

- ^ W. D. Hinsberg, G. M. Wallraff, Lithographic Resists, Kirk-Othmer Encyclopedia of Chemical Technology, Wiley-VCH, Weinheim, 2005. (Published online: 17 June 2005) doi:10.1002/0471238961.1209200808091419.a01.pub2

- ^ J. V. Crivello The Discovery and Development of Onium Salt Cationic Photoinitiators, J. Polym. Sci., Part A: Polym. Chem., 1999, 37, 4241−4254. doi:10.1002/(SICI)1099-0518(19991201)37:23<4241::AID-POLA1>3.0.CO;2-R

- ^ N. Amdursky, R. Simkovitch and D. Huppert, Excited-state proton transfer of photoacids adsorbed on biomaterials, J. Phys. Chem. B., 2014, 118, 13859−13869. doi:10.1021/jp509153r

- ^ Kramer, Horst E. A.; Fischer, Peter (9 November 2010). "The Scientific Work of Theodor Förster: A Brief Sketch of his Life and Personality". ChemPhysChem. 12 (3): 555–558. doi:10.1002/cphc.201000733. PMID 21344592.

https://en.wikipedia.org/wiki/Photoacid

Pyranine is a hydrophilic, pH-sensitive fluorescent dye from the group of chemicals known as arylsulfonates.[1][2] Pyranine is soluble in water and has applications as a coloring agent, biological stain, optical detecting reagent, and a pH indicator.[3][4] One example is the measurement of intracellular pH.[5] Pyranine is also found in yellow highlighters, giving them their characteristic fluorescence and bright yellow-green colour. It is also found in some types of soap.[6]

It is synthesized from pyrenetetrasulfonic acid and a solution of sodium hydroxide in water under reflux.[7] The trisodium salt crystallizes as yellow needles when adding an aqueous solution of sodium chloride.

https://en.wikipedia.org/wiki/Pyranine

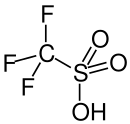

Triflic acid, the short name for trifluoromethanesulfonic acid, TFMS, TFSA, HOTf or TfOH, is a sulfonic acid with the chemical formula CF3SO3H. It is one of the strongest known acids. Triflic acid is mainly used in research as a catalyst for esterification.[2][3] It is a hygroscopic, colorless, slightly viscous liquid and is soluble in polar solvents.

Trifluoromethanesulfonic acid is produced industrially by electrochemical fluorination (ECF) of methanesulfonic acid:

- CH3SO3H + 4 HF → CF3SO2F + H2O + 3 H2

The resulting CF3SO2F is hydrolyzed, and the resulting triflate salt is reprotonated. Alternatively, trifluoromethanesulfonic acid arises by oxidation of trifluoromethylsulfenyl chloride:[4]

- CF3SCl + 2 Cl2 + 3 H2O → CF3SO3H + 5 HCl
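Atom balance for equations like these can be checked mechanically. The sketch below is a small hypothetical helper (not a standard library tool) that parses neutral formulas with nested () and [] groups, applies leading coefficients, and compares element counts on both sides; the same check covers the salt-forming equations given in the next section:

```python
import re
from collections import Counter

# Element symbols, brackets and multipliers; charges and other symbols are ignored.
TOKEN = re.compile(r"[A-Z][a-z]?|\(|\)|\[|\]|\d+")


def parse_formula(formula: str) -> Counter:
    """Count atoms in a neutral formula, supporting nested () and [] groups."""
    stack = [Counter()]
    tokens = TOKEN.findall(formula)
    i = 0
    while i < len(tokens):
        tok = tokens[i]
        i += 1
        if tok in ("(", "["):
            stack.append(Counter())  # open a new group
            continue
        group = stack.pop() if tok in (")", "]") else Counter({tok: 1})
        multiplier = 1
        if i < len(tokens) and tokens[i].isdigit():
            multiplier = int(tokens[i])
            i += 1
        for element, count in group.items():
            stack[-1][element] += count * multiplier
    return stack[0]


def side_counts(side: str) -> Counter:
    """Sum atom counts over the species on one side, honoring leading coefficients."""
    total = Counter()
    for term in side.split(" + "):
        match = re.match(r"(\d+)\s+(\S+)$", term.strip())
        coefficient, species = (int(match[1]), match[2]) if match else (1, term.strip())
        for element, count in parse_formula(species).items():
            total[element] += coefficient * count
    return total


def is_balanced(equation: str) -> bool:
    left, right = equation.split("→")
    return side_counts(left) == side_counts(right)


EQUATIONS = [
    "CH3SO3H + 4 HF → CF3SO2F + H2O + 3 H2",
    "CF3SCl + 2 Cl2 + 3 H2O → CF3SO3H + 5 HCl",
    "CuCO3 + 2 CF3SO3H → Cu(O3SCF3)2 + H2O + CO2",
    "3 CF3SO3H + [Co(NH3)5Cl]Cl2 → [Co(NH3)5O3SCF3](O3SCF3)2 + 3 HCl",
]
for eq in EQUATIONS:
    print(is_balanced(eq), eq)
```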

Triflic acid is purified by distillation from triflic anhydride.[3]

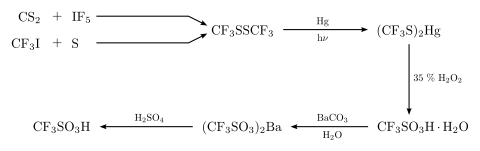

Trifluoromethanesulfonic acid was first synthesized in 1954 by Robert Haszeldine and Kidd by the following reaction:[5]

In the laboratory, triflic acid is useful in protonations because the conjugate base of triflic acid is nonnucleophilic. It is also used as an acidic titrant in nonaqueous acid-base titration because it behaves as a strong acid in many solvents (acetonitrile, acetic acid, etc.) where common mineral acids (such as HCl or H2SO4) are only moderately strong.

With Ka = 5×10¹⁴ (pKa −14.7 ± 2.0),[1] triflic acid qualifies as a superacid. It owes many of its useful properties to its great thermal and chemical stability. Both the acid and its conjugate base CF3SO3−, known as triflate, resist oxidation/reduction reactions, whereas many strong acids are oxidizing, e.g. perchloric or nitric acid. Further recommending its use, triflic acid does not sulfonate substrates, which can be a problem with sulfuric acid, fluorosulfuric acid, and chlorosulfonic acid. Below is a prototypical sulfonation, which HOTf does not undergo:

- C6H6 + H2SO4 → C6H5(SO3H) + H2O

Triflic acid fumes in moist air and forms a stable solid monohydrate, CF3SO3H·H2O, melting point 34 °C.

Salt and complex formation

The triflate ligand is labile, reflecting its low basicity. Trifluoromethanesulfonic acid exothermically reacts with metal carbonates, hydroxides, and oxides. Illustrative is the synthesis of Cu(OTf)2.[6]

- CuCO3 + 2 CF3SO3H → Cu(O3SCF3)2 + H2O + CO2

Chloride ligands can be converted to the corresponding triflates:

- 3 CF3SO3H + [Co(NH3)5Cl]Cl2 → [Co(NH3)5O3SCF3](O3SCF3)2 + 3 HCl

This conversion is conducted in neat HOTf at 100 °C, followed by precipitation of the salt upon the addition of ether.

Organic chemistry

Triflic acid reacts with acyl halides to give mixed triflate anhydrides, which are strong acylating agents, e.g. in Friedel–Crafts reactions.

- CH3C(O)Cl + CF3SO3H → CH3C(O)OSO2CF3 + HCl

- CH3C(O)OSO2CF3 + C6H6 → CH3C(O)C6H5 + CF3SO3H

Triflic acid catalyzes the reaction of aromatic compounds with sulfonyl chlorides, probably also through the intermediacy of a mixed anhydride of the sulfonic acid.

Triflic acid promotes other Friedel–Crafts-like reactions, including the cracking of alkanes and the alkylation of alkenes, which are very important to the petroleum industry. These triflic acid derivative catalysts are very effective in isomerizing straight-chain or slightly branched hydrocarbons, which can increase the octane rating of petroleum-based fuels.

Triflic acid reacts exothermically with alcohols to produce ethers and olefins.

Dehydration gives the acid anhydride, trifluoromethanesulfonic anhydride, (CF3SO2)2O.

Triflic acid is one of the strongest acids. Contact with skin causes severe burns with delayed tissue destruction. Inhalation can cause fatal spasms, inflammation and edema.[7]

Like sulfuric acid, triflic acid must be slowly added to polar solvents to prevent thermal runaway.

https://en.wikipedia.org/wiki/Triflic_acid

Thermal runaway describes a process that is accelerated by increased temperature, in turn releasing energy that further increases temperature. Thermal runaway occurs in situations where an increase in temperature changes the conditions in a way that causes a further increase in temperature, often leading to a destructive result. It is a kind of uncontrolled positive feedback.

In chemistry (and chemical engineering), thermal runaway is associated with strongly exothermic reactions that are accelerated by temperature rise. In electrical engineering, thermal runaway is typically associated with increased current flow and power dissipation. Thermal runaway can occur in civil engineering, notably when the heat released by large amounts of curing concrete is not controlled.[citation needed] In astrophysics, runaway nuclear fusion reactions in stars can lead to nova and several types of supernova explosions, and also occur as a less dramatic event in the normal evolution of solar-mass stars, the "helium flash".

Some climate researchers have postulated that a global average temperature increase of 3–4 degrees Celsius above the preindustrial baseline could lead to a further unchecked increase in surface temperatures. For example, releases of methane, a greenhouse gas more potent than CO2, from wetlands, melting permafrost and continental margin seabed clathrate deposits could be subject to positive feedback.[1][2]

Thermal runaway is also called thermal explosion in chemical engineering, or runaway reaction in organic chemistry. It is a process by which an exothermic reaction goes out of control: the reaction rate increases due to an increase in temperature, causing a further increase in temperature and hence a further rapid increase in the reaction rate. This has contributed to industrial chemical accidents, most notably the 1947 Texas City disaster from overheated ammonium nitrate in a ship's hold, and the 1976 explosion of zoalene in a dryer at King's Lynn.[3] Frank-Kamenetskii theory provides a simplified analytical model for thermal explosion. Chain branching is an additional positive feedback mechanism which may also cause temperature to skyrocket because of rapidly increasing reaction rate.
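The feedback loop can be illustrated with an even simpler picture than Frank-Kamenetskii's: a Semenov-style lumped model in which the whole reactor has one temperature, heat release follows an Arrhenius law and cooling is proportional to the excess over the coolant temperature. All parameter values below are hypothetical, chosen so that a stable steady state exists at 300 K but not at 320 K:

```python
import math


def simulate(ambient_k: float, gain_k_per_s: float = 3.7e12, theta_k: float = 10_000.0,
             loss_per_s: float = 0.01, dt_s: float = 0.1, t_max_s: float = 3600.0,
             limit_k: float = 500.0):
    """Lumped (Semenov-style) thermal balance integrated with explicit Euler steps.

    dT/dt = gain * exp(-theta / T) - loss * (T - T_ambient)
    (Arrhenius heat release minus Newtonian cooling; theta = Ea / R.)
    Returns (ran_away, elapsed_s, final_temperature_k).
    """
    temp = ambient_k
    elapsed = 0.0
    while elapsed < t_max_s:
        heating = gain_k_per_s * math.exp(-theta_k / temp)
        cooling = loss_per_s * (temp - ambient_k)
        temp += dt_s * (heating - cooling)
        elapsed += dt_s
        if temp > limit_k:  # treat crossing the limit as runaway
            return True, elapsed, temp
    return False, elapsed, temp


for ambient in (300.0, 320.0):
    ran_away, t, final = simulate(ambient)
    print(f"T_ambient={ambient:.0f} K: runaway={ran_away}, t={t:.0f} s, T={final:.1f} K")
```

At 300 K the mixture settles about 1.5 K above the coolant; at 320 K heat release outpaces cooling at every temperature, so the temperature climbs without bound.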

Chemical reactions are either endothermic or exothermic, as expressed by their change in enthalpy. Many reactions are highly exothermic, so many industrial-scale and oil refinery processes have some level of risk of thermal runaway. These include hydrocracking, hydrogenation, alkylation (SN2), oxidation, metalation and nucleophilic aromatic substitution. For example, oxidation of cyclohexane into cyclohexanol and cyclohexanone and ortho-xylene into phthalic anhydride have led to catastrophic explosions when reaction control failed.

Thermal runaway may result from unwanted exothermic side reaction(s) that begin at higher temperatures, following an initial accidental overheating of the reaction mixture. This scenario was behind the Seveso disaster, where thermal runaway heated a reaction to temperatures such that in addition to the intended 2,4,5-trichlorophenol, poisonous 2,3,7,8-tetrachlorodibenzo-p-dioxin was also produced, and was vented into the environment after the reactor's rupture disk burst.[4]

Thermal runaway is most often caused by failure of the reactor vessel's cooling system. Failure of the mixer can result in localized heating, which initiates thermal runaway. Similarly, in flow reactors, localized insufficient mixing causes hotspots to form, wherein thermal runaway conditions occur, which causes violent blowouts of reactor contents and catalysts. Incorrect equipment component installation is also a common cause. Many chemical production facilities are designed with high-volume emergency venting, a measure to limit the extent of injury and property damage when such accidents occur.

At large scale, it is unsafe to "charge all reagents and mix", as is done at laboratory scale. This is because the amount of reacting material, and hence the heat released, scales with the cube of the size of the vessel (V ∝ r³), but the heat transfer area scales with the square of the size (A ∝ r²), so the heat production-to-area ratio scales with the size (V/A ∝ r). Consequently, reactions that are easily cooled in the laboratory can dangerously self-heat at ton scale. In 2007, this kind of erroneous procedure caused the explosion of a 2,400 U.S. gallon (9,100 L) reactor used to metalate methylcyclopentadiene with metallic sodium, killing four people and flinging parts of the reactor 400 feet (120 m) away.[5][6] Thus, industrial-scale reactions prone to thermal runaway are preferably controlled by adding one reagent at a rate matched to the available cooling capacity.
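The scaling argument can be made concrete for a spherical vessel, where V/A = r/3. Assuming (hypothetically) the same heat release per unit volume and the same wall heat-transfer coefficient at both scales, the wall-limited temperature excess q·(V/A)/h grows linearly with vessel radius:

```python
import math


def volume_to_area(radius_m: float) -> float:
    """V/A for a spherical vessel: (4/3 pi r^3) / (4 pi r^2) = r/3."""
    volume = 4.0 / 3.0 * math.pi * radius_m ** 3
    area = 4.0 * math.pi * radius_m ** 2
    return volume / area


def steady_overtemperature(radius_m: float, heat_release_w_per_m3: float,
                           h_w_per_m2k: float) -> float:
    """Wall-limited steady state: q*V = h*A*dT  ->  dT = q*(V/A)/h."""
    return heat_release_w_per_m3 * volume_to_area(radius_m) / h_w_per_m2k


Q = 5_000.0   # W/m^3, hypothetical heat release rate
H = 200.0     # W/(m^2 K), hypothetical overall heat transfer coefficient
for name, r in [("lab flask", 0.05), ("plant reactor", 1.5)]:
    print(f"{name:14s} r={r:5.2f} m  dT={steady_overtemperature(r, Q, H):6.2f} K")
```

A 30-fold increase in radius gives a 30-fold larger temperature excess. Because the heat release itself grows with temperature, that larger excess is exactly what can tip a large batch into runaway.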

Some laboratory reactions must be run under extreme cooling, because they are very prone to hazardous thermal runaway. For example, in Swern oxidation, the formation of sulfonium chloride must be performed in a cooled system (−30 °C), because at room temperature the reaction undergoes explosive thermal runaway.[6]

Microwave heating

Microwaves are used for heating of various materials in cooking and various industrial processes. The rate of heating of the material depends on the energy absorption, which depends on the dielectric constant of the material. The dependence of dielectric constant on temperature varies for different materials; some materials display significant increase with increasing temperature. This behavior, when the material gets exposed to microwaves, leads to selective local overheating, as the warmer areas are better able to accept further energy than the colder areas—potentially dangerous especially for thermal insulators, where the heat exchange between the hot spots and the rest of the material is slow. These materials are called thermal runaway materials. This phenomenon occurs in some ceramics.

Electrical engineering

Some electronic components develop lower resistances or lower triggering voltages (for nonlinear resistances) as their internal temperature increases. If circuit conditions cause markedly increased current flow in these situations, increased power dissipation may raise the temperature further by Joule heating. A vicious circle or positive feedback effect of thermal runaway can cause failure, sometimes in a spectacular fashion (e.g. electrical explosion or fire). To prevent these hazards, well-designed electronic systems typically incorporate current limiting protection, such as thermal fuses, circuit breakers, or PTC current limiters.

To handle larger currents, circuit designers may connect multiple lower-capacity devices (e.g. transistors, diodes, or MOVs) in parallel. This technique can work well, but is susceptible to a phenomenon called current hogging, in which the current is not shared equally across all devices. Typically, one device may have a slightly lower resistance, and thus draws more current, heating it more than its sibling devices, causing its resistance to drop further. The electrical load ends up funneling into a single device, which then rapidly fails. Thus, an array of devices may end up no more robust than its weakest component.

The current-hogging effect can be reduced by carefully matching the characteristics of each paralleled device, or by using other design techniques to balance the electrical load. However, maintaining load balance under extreme conditions may not be straightforward. Devices with an intrinsic positive temperature coefficient (PTC) of electrical resistance are less prone to current hogging, but thermal runaway can still occur because of poor heat sinking or other problems.
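The effect of the temperature coefficient on current sharing can be sketched with two paralleled devices whose resistance drifts linearly with their own temperature rise. The 5% resistance mismatch, thermal resistance and ±0.4 %/K coefficients are hypothetical, and thermal coupling through a shared heat sink is ignored:

```python
def share_current(r0_ohm, alpha_per_k, i_total_a=10.0, rth_k_per_w=5.0, iterations=200):
    """Steady current split between parallel devices whose resistance depends on
    their own temperature rise: R_i = R0_i * (1 + alpha * dT_i), dT_i = Rth * I_i^2 * R_i.
    Solved by plain fixed-point iteration (assumes the loop gain is small)."""
    rises = [0.0] * len(r0_ohm)  # K above ambient
    currents = [0.0] * len(r0_ohm)
    for _ in range(iterations):
        resistances = [r0 * (1.0 + alpha_per_k * rise) for r0, rise in zip(r0_ohm, rises)]
        total_conductance = sum(1.0 / r for r in resistances)
        currents = [i_total_a / (r * total_conductance) for r in resistances]
        rises = [rth_k_per_w * i * i * r for i, r in zip(currents, resistances)]
    return currents


DEVICES_R0 = [0.100, 0.095]  # hypothetical 5 % mismatch, ohms
for label, alpha in [("NTC", -0.004), ("none", 0.0), ("PTC", +0.004)]:
    i1, i2 = share_current(DEVICES_R0, alpha)
    print(f"{label:4s}: device 2 carries {i2 / (i1 + i2):.2%} of the current")
```

With a negative coefficient the lower-resistance device takes an even larger share (the start of current hogging); with a positive coefficient the imbalance shrinks.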

Many electronic circuits contain special provisions to prevent thermal runaway. This is most often seen in transistor biasing arrangements for high-power output stages. However, when equipment is used above its designed ambient temperature, thermal runaway can still occur in some cases. This occasionally causes equipment failures in hot environments, or when air cooling vents are blocked.

Semiconductors

Silicon shows a peculiar profile, in that its electrical resistance increases with temperature up to about 160 °C, then starts decreasing, and drops further when the melting point is reached. This can lead to thermal runaway phenomena within internal regions of the semiconductor junction; the resistance decreases in the regions which become heated above this threshold, allowing more current to flow through the overheated regions, in turn causing yet more heating in comparison with the surrounding regions, which leads to further temperature increase and resistance decrease. This leads to the phenomenon of current crowding and formation of current filaments (similar to current hogging, but within a single device), and is one of the underlying causes of many semiconductor junction failures.

Bipolar junction transistors (BJTs)

Leakage current increases significantly in bipolar transistors (especially germanium-based bipolar transistors) as they increase in temperature. Depending on the design of the circuit, this increase in leakage current can increase the current flowing through a transistor and thus the power dissipation, causing a further increase in collector-to-emitter leakage current. This is frequently seen in a push–pull stage of a class AB amplifier. If the pull-up and pull-down transistors are biased to have minimal crossover distortion at room temperature, and the biasing is not temperature-compensated, then as the temperature rises both transistors will be increasingly biased on, causing current and power to further increase, and eventually destroying one or both devices.

One rule of thumb to avoid thermal runaway is to keep the operating point of a BJT such that Vce ≤ Vcc/2.
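One way to see where this rule comes from is to ask how the transistor's own dissipation P_D responds when the collector current I_C creeps up with temperature. The derivation below assumes a simple stage in which I_C flows through a collector resistor R_C from the supply V_CC, with no emitter resistor; real bias networks differ.

```latex
\begin{aligned}
V_{CE} &= V_{CC} - I_C R_C \\
P_D &= V_{CE} I_C = V_{CC} I_C - R_C I_C^2 \\
\frac{\mathrm{d}P_D}{\mathrm{d}I_C} &= V_{CC} - 2 R_C I_C = 2V_{CE} - V_{CC}
\end{aligned}
```

When V_CE < V_CC/2 the derivative is negative: a temperature-driven rise in I_C lowers V_CE enough that the transistor dissipates less power, which opposes further heating. Above V_CC/2 the same rise increases the dissipation and feeds the runaway loop. Under these assumptions the rule applies to resistively loaded stages of this kind; other arrangements need their own analysis.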

Another practice is to mount a thermal feedback sensing transistor or other device on the heat sink, to control the crossover bias voltage. As the output transistors heat up, so does the thermal feedback transistor. This in turn causes the thermal feedback transistor to turn on at a slightly lower voltage, reducing the crossover bias voltage, and so reducing the heat dissipated by the output transistors.

If multiple BJT transistors are connected in parallel (which is typical in high current applications), a current hogging problem can occur. Special measures must be taken to control this characteristic vulnerability of BJTs.

In power transistors (which effectively consist of many small transistors in parallel), current hogging can occur between different parts of the transistor itself, with one part of the transistor becoming hotter than the others. This is called second breakdown, and can result in destruction of the transistor even when the average junction temperature seems to be at a safe level.

Power MOSFETs

Power MOSFETs typically increase their on-resistance with temperature. Under some circumstances, power dissipated in this resistance heats the junction further, which raises the on-resistance and thus the dissipation again, in a positive feedback loop. As a consequence, power MOSFETs have stable and unstable regions of operation.[7] However, the increase of on-resistance with temperature helps balance current across multiple MOSFETs connected in parallel, so current hogging does not occur. If a MOSFET produces more heat than the heat sink can dissipate, thermal runaway can still destroy it. This problem can be alleviated to a degree by lowering the thermal resistance between the transistor die and the heat sink. See also Thermal Design Power.
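A simple linear model shows how a positive temperature coefficient can still produce a stable and an unstable region. It assumes the on-resistance rises linearly with junction temperature and that the junction sits R_TH kelvins per watt above ambient. Runaway then begins where the loop gain, the extra junction heating caused by each kelvin of heating, reaches 1. All device and cooling values below are hypothetical, and a real part's R_DS(on)-versus-temperature curve is not linear.

```python
import math

# Hypothetical device and cooling values, not taken from any real datasheet.
R_ON_25 = 0.010  # on-resistance at 25 deg C, ohms
K_TEMP = 0.007   # fractional rise in on-resistance per kelvin (linearised)
R_TH = 2.0       # junction-to-ambient thermal resistance incl. heat sink, K/W
T_AMB = 40.0     # ambient temperature, deg C


def loop_gain(i_drain):
    """Kelvins of extra junction rise caused by each kelvin of junction rise."""
    return R_TH * i_drain ** 2 * R_ON_25 * K_TEMP


def junction_temperature(i_drain):
    """Steady-state junction temperature in deg C, or None if none exists.

    Solves Tj = T_AMB + R_TH * I**2 * R_ON_25 * (1 + K_TEMP * (Tj - 25)).
    """
    gain = loop_gain(i_drain)
    if gain >= 1.0:
        return None  # feedback never settles: the model predicts runaway
    heating = R_TH * i_drain ** 2 * R_ON_25  # rise the 25 deg C resistance alone would cause
    return (T_AMB + heating * (1 - 25 * K_TEMP)) / (1 - gain)


def max_stable_current():
    """Drain current at which the loop gain reaches 1."""
    return 1 / math.sqrt(R_TH * R_ON_25 * K_TEMP)


for amps in (30.0, 60.0, 80.0, 90.0):
    tj = junction_temperature(amps)
    status = "runaway" if tj is None else f"Tj = {tj:.0f} deg C"
    print(f"{amps:4.0f} A: loop gain {loop_gain(amps):.2f}, {status}")
print(f"loop gain reaches 1 at about {max_stable_current():.1f} A")
```

Even inside the stable region the temperature climbs steeply as the loop gain approaches 1 (about 200 °C at 60 A with these numbers). A real design therefore reaches the device's maximum junction temperature well before the mathematical runaway point. Lowering R_TH raises the current at which the loop gain reaches 1.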

Metal oxide varistors (MOVs)

Metal oxide varistors typically develop lower resistance as they heat up. If connected directly across an AC or DC power bus (a common usage for protection against electrical transients), a MOV which has developed a lowered trigger voltage can slide into catastrophic thermal runaway, possibly culminating in a small explosion or fire.[8] To prevent this possibility, fault current is typically limited by a thermal fuse, circuit breaker, or other current limiting device.

Tantalum capacitors

Tantalum capacitors are, under some conditions, prone to self-destruction by thermal runaway. The capacitor typically consists of a sintered tantalum sponge acting as the anode, a manganese dioxide cathode, and a dielectric layer of tantalum pentoxide created on the tantalum sponge surface by anodizing. It may happen that the tantalum oxide layer has weak spots that undergo dielectric breakdown during a voltage spike. The tantalum sponge then comes into direct contact with the manganese dioxide, and increased leakage current causes localized heating; usually, this drives an endothermic chemical reaction that produces manganese(III) oxide and regenerates (self-heals) the tantalum oxide dielectric layer.

However, if the energy dissipated at the failure point is high enough, a self-sustaining exothermic reaction can start, similar to the thermite reaction, with metallic tantalum as fuel and manganese dioxide as oxidizer. This undesirable reaction will destroy the capacitor, producing smoke and possibly flame.[9]

Therefore, tantalum capacitors can be freely deployed in small-signal circuits, but application in high-power circuits must be carefully designed to avoid thermal runaway failures.

Digital logic

The leakage current of logic switching transistors increases with temperature. In rare instances, this may lead to thermal runaway in digital circuits. This is not a common problem, since leakage currents usually make up a small portion of overall power consumption, so the increase in power is fairly modest: for an Athlon 64, the power dissipation increases by about 10% for every 30 degrees Celsius.[10] For a device with a TDP of 100 W, thermal runaway would require a heat sink with a thermal resistance of over 3 K/W (kelvins per watt), which is nearly 9 times worse than a stock Athlon 64 heat sink. (A stock Athlon 64 heat sink is rated at 0.34 K/W, although the actual thermal resistance to the environment is somewhat higher, due to the thermal boundary between processor and heat sink, rising temperatures in the case, and other thermal resistances.[citation needed]) Regardless, an inadequate heat sink with a thermal resistance of over 0.5 to 1 K/W would result in the destruction of a 100 W device even without thermal runaway effects.
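The 3 K/W threshold follows from treating the quoted 10% per 30 °C as a constant slope. The die's power then rises by about 0.33 W per kelvin, and runaway begins when the heat sink's thermal resistance times that slope reaches 1, because each kelvin of heating then causes at least another kelvin. The sketch below assumes a linear leakage slope and that the 100 W figure applies at ambient temperature. `steady_rise` is a hypothetical helper.

```python
# Linearised check of the Athlon 64 figures quoted above.
P_NOMINAL = 100.0            # W, the TDP used in the text
LEAKAGE_SLOPE = 0.10 / 30.0  # fractional power increase per kelvin (10% per 30 K)
STOCK_R_TH = 0.34            # K/W, stock heat sink rating from the text

dp_dt = P_NOMINAL * LEAKAGE_SLOPE  # extra watts per kelvin of die heating
r_th_critical = 1.0 / dp_dt        # K/W at which the loop gain R_th * dP/dT reaches 1


def steady_rise(r_th):
    """Die temperature rise above ambient in K, or None if the loop gain is >= 1.

    Solves dT = r_th * (P_NOMINAL + dp_dt * dT), i.e. dT = r_th * P / (1 - r_th * dP/dT).
    """
    gain = r_th * dp_dt
    if gain >= 1.0:
        return None
    return r_th * P_NOMINAL / (1.0 - gain)


print(f"dP/dT = {dp_dt:.3f} W/K")
print(f"runaway threshold = {r_th_critical:.2f} K/W "
      f"({r_th_critical / STOCK_R_TH:.1f}x the stock heat sink)")
for r_th in (0.34, 1.0, 4.0):
    print(r_th, "K/W ->", steady_rise(r_th))
```

At 1 K/W the die would settle roughly 150 K above ambient even though the loop gain (about 0.33) is well below 1. This matches the point that such a heat sink destroys a 100 W part with or without runaway.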

Batteries

When handled improperly, or if manufactured defectively, some rechargeable batteries can experience thermal runaway resulting in overheating. Sealed cells will sometimes explode violently if safety vents are overwhelmed or nonfunctional.[11] Especially prone to thermal runaway are lithium-ion batteries, most markedly in the form of the lithium polymer battery.[citation needed] Reports of exploding cellphones occasionally appear in newspapers. In 2006, batteries from Apple, HP, Toshiba, Lenovo, Dell and other notebook manufacturers were recalled because of fire and explosions.[12][13][14][15] The Pipeline and Hazardous Materials Safety Administration (PHMSA) of the U.S. Department of Transportation has established regulations regarding the carrying of certain types of batteries on airplanes because of their instability in certain situations. This action was partially inspired by a cargo bay fire on a UPS airplane.[16] One possible solution is to use safer and less reactive anode (lithium titanates) and cathode (lithium iron phosphate) materials, thereby avoiding the cobalt electrodes used in many lithium rechargeable cells, together with non-flammable electrolytes based on ionic liquids.

Astrophysics

Runaway thermonuclear reactions can occur in stars when nuclear fusion is ignited in conditions under which the gravitational pressure exerted by overlying layers of the star greatly exceeds thermal pressure, a situation that makes possible rapid increases in temperature through gravitational compression. Such a scenario may arise in stars containing degenerate matter, in which electron degeneracy pressure rather than normal thermal pressure does most of the work of supporting the star against gravity, and in stars undergoing implosion. In all cases, the imbalance arises prior to fusion ignition; otherwise, the fusion reactions would be naturally regulated to counteract temperature changes and stabilize the star. When thermal pressure is in equilibrium with overlying pressure, a star will respond to the increase in temperature and thermal pressure due to initiation of a new exothermic reaction by expanding and cooling. A runaway reaction is only possible when this response is inhibited.

Helium flashes in red giant stars

When stars in the 0.8–2.0 solar mass range exhaust the hydrogen in their cores and become red giants, the helium accumulating in their cores reaches degeneracy before it ignites. When the degenerate core reaches a critical mass of about 0.45 solar masses, helium fusion is ignited and takes off in a runaway fashion, called the helium flash, briefly increasing the star's energy production to a rate 100 billion times normal. About 6% of the core is quickly converted into carbon.[17] While the release is sufficient to convert the core back into normal plasma after a few seconds, it does not disrupt the star,[18][19] nor immediately change its luminosity. The star then contracts, leaving the red giant phase and continuing its evolution into a stable helium-burning phase.

Novae

A nova results from runaway hydrogen fusion (via the CNO cycle) in the outer layer of a carbon-oxygen white dwarf star. If a white dwarf has a companion star from which it can accrete gas, the material will accumulate in a surface layer made degenerate by the dwarf's intense gravity. Under the right conditions, a sufficiently thick layer of hydrogen is eventually heated to a temperature of 20 million K, igniting runaway fusion. The surface layer is blasted off the white dwarf, increasing luminosity by a factor on the order of 50,000. The white dwarf and companion remain intact, however, so the process can repeat.[20] A much rarer type of nova may occur when the outer layer that ignites is composed of helium.[21]

X-ray bursts

Analogous to the process leading to novae, degenerate matter can also accumulate on the surface of a neutron star that is accreting gas from a close companion. If a sufficiently thick layer of hydrogen accumulates, ignition of runaway hydrogen fusion can then lead to an X-ray burst. As with novae, such bursts tend to repeat and may also be triggered by helium or even carbon fusion.[22][23] It has been proposed that in the case of "superbursts", runaway breakup of accumulated heavy nuclei into iron-group nuclei via photodissociation rather than nuclear fusion could contribute the majority of the energy of the burst.[23]

Type Ia supernovae

A type Ia supernova results from runaway carbon fusion in the core of a carbon-oxygen white dwarf star. If a white dwarf, which is composed almost entirely of degenerate matter, can gain mass from a companion, the increasing temperature and density of material in its core will ignite carbon fusion if the star's mass approaches the Chandrasekhar limit. This leads to an explosion that completely disrupts the star. Luminosity increases by a factor of more than 5 billion. One way to gain the additional mass would be by accreting gas from a giant (or even main-sequence) companion star.[24] A second and apparently more common mechanism to generate the same type of explosion is the merger of two white dwarfs.[24][25]

Pair-instability supernovae

A pair-instability supernova is believed to result from runaway oxygen fusion in the core of a massive, 130–250 solar mass, low to moderate metallicity star.[26] According to theory, in such a star, a large but relatively low density core of nonfusing oxygen builds up, with its weight supported by the pressure of gamma rays produced by the extreme temperature. As the core heats further, the gamma rays eventually begin to pass the energy threshold needed for collision-induced decay into electron-positron pairs, a process called pair production. This causes a drop in the pressure within the core, leading it to contract and heat further, causing more pair production, a further pressure drop, and so on. The core starts to undergo gravitational collapse. At some point this ignites runaway oxygen fusion, releasing enough energy to obliterate the star. These explosions are rare, perhaps about one per 100,000 supernovae.

Comparison to nonrunaway supernovae

Not all supernovae are triggered by runaway nuclear fusion. Type Ib, Ic and type II supernovae also undergo core collapse, but because they have exhausted their supply of atomic nuclei capable of undergoing exothermic fusion reactions, they collapse all the way into neutron stars, or in the higher-mass cases, stellar black holes, powering explosions by the release of gravitational potential energy (largely via release of neutrinos). It is the absence of runaway fusion reactions that allows such supernovae to leave behind compact stellar remnants.

See also

- Cascading failure

- Frank-Kamenetskii theory

- Boeing 787 Dreamliner battery problems (related to lithium-ion batteries)

- Lithium Ion Batteries and Safety

- Plug-in electric vehicle fire incidents (related to lithium-ion batteries)

- UPS Flight 6 (a 2010 jet crash related to lithium-ion batteries in the cargo)

https://en.wikipedia.org/wiki/Thermal_runaway

A cascading failure is a process in a system of interconnected parts in which the failure of one or few parts can trigger the failure of other parts and so on. Such a failure may happen in many types of systems, including power transmission, computer networking, finance, transportation systems, organisms, the human body, and ecosystems.

Cascading failures may occur when one part of the system fails. When this happens, other parts must then compensate for the failed component. This in turn overloads these nodes, causing them to fail as well, prompting additional nodes to fail one after another.

Cascading failure is common in power grids when one of the elements fails (completely or partially) and shifts its load to nearby elements in the system. Those nearby elements are then pushed beyond their capacity so they become overloaded and shift their load onto other elements. Cascading failure is a common effect seen in high voltage systems, where a single point of failure (SPF) on a fully loaded or slightly overloaded system results in a sudden spike across all nodes of the system. This surge current can induce the already overloaded nodes into failure, setting off more overloads and thereby taking down the entire system in a very short time.
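A toy illustration of this overload mechanism: nodes on a ring each carry some load up to a fixed capacity, and when one fails, its load is handed to its surviving neighbours. The equal-split redistribution rule and the ring topology are assumptions made for brevity. Real power grids redistribute flow according to network impedances, which requires a power-flow calculation.

```python
from collections import deque


def cascade(adjacency, load, capacity, first_failure):
    """Return the set of failed nodes once load redistribution settles.

    Hypothetical rule: a failed node's load is split equally among its
    surviving neighbours, and any neighbour pushed above its capacity fails next.
    """
    load = dict(load)  # work on a copy so the caller's dictionary is untouched
    failed = set()
    pending = deque([first_failure])
    while pending:
        node = pending.popleft()
        if node in failed:
            continue  # a node may be queued more than once
        failed.add(node)
        survivors = [n for n in adjacency[node] if n not in failed]
        if not survivors:
            continue  # nowhere to shift the load: it is simply dropped
        share = load[node] / len(survivors)
        load[node] = 0.0
        for n in survivors:
            load[n] += share
            if load[n] > capacity[n]:
                pending.append(n)
    return failed


N = 10
ring = {i: [(i - 1) % N, (i + 1) % N] for i in range(N)}
capacity = {i: 100.0 for i in range(N)}

sizes = {}
for per_node_load in (60.0, 80.0):
    initial = {i: per_node_load for i in range(N)}
    sizes[per_node_load] = len(cascade(ring, initial, capacity, first_failure=0))
    print(f"each node at {per_node_load:.0f}% of capacity: "
          f"{sizes[per_node_load]} of {N} nodes fail")
```

The same first failure is harmless at 60% loading but takes down every node at 80%, consistent with the point that a fully loaded system is the vulnerable one. Calling `cascade()` once for every possible first failure is a crude form of the simulation-based safety-margin analysis described below.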

This failure process cascades through the elements of the system like a ripple on a pond and continues until substantially all of the elements in the system are compromised and/or the system becomes functionally disconnected from the source of its load. For example, under certain conditions a large power grid can collapse after the failure of a single transformer.

Monitoring the operation of a system, in real-time, and judicious disconnection of parts can help stop a cascade. Another common technique is to calculate a safety margin for the system by computer simulation of possible failures, to establish safe operating levels below which none of the calculated scenarios is predicted to cause cascading failure, and to identify the parts of the network which are most likely to cause cascading failures.[1]

One of the primary problems with preventing electrical grid failures is that the control signal travels no faster than the propagating power overload. Because both move at essentially the same speed, a warning cannot be sent ahead of the disturbance to isolate the affected element.

Whether power grid failures are correlated has been studied by Daqing Li et al.[2] as well as by Paul DH Hines et al.[3]

Examples

Cascading failure caused the following power outages:

Cascading structural failure

Certain load-bearing structures with discrete structural components can be subject to the "zipper effect", where the failure of a single structural member increases the load on adjacent members. In the case of the Hyatt Regency walkway collapse, a suspended walkway (which was already overstressed due to an error in construction) failed when a single vertical suspension rod failed, overloading the neighboring rods which failed sequentially (i.e. like a zipper). A bridge that can have such a failure is called fracture critical, and numerous bridge collapses have been caused by the failure of a single part. Properly designed structures use an adequate factor of safety and/or alternate load paths to prevent this type of mechanical cascade failure.[5]

Biology

Biochemical cascades exist in biology, where a small reaction can have system-wide implications. One negative example is the ischemic cascade, in which a small ischemic attack releases toxins that kill off far more cells than the initial damage, resulting in more toxins being released. Current research aims to find a way to block this cascade in stroke patients to minimize the damage.

In the study of extinction, sometimes the extinction of one species will cause many other extinctions to happen. Such a species is known as a keystone species.

Electronics

One example is the Cockcroft–Walton generator, which can also experience cascade failures wherein one failed diode can result in all the diodes failing in a fraction of a second.

Another example of this effect in a scientific experiment was the implosion in 2001 of several thousand fragile glass photomultiplier tubes used in the Super-Kamiokande experiment, where the shock wave caused by the failure of a single detector appears to have triggered the implosion of the other detectors in a chain reaction.

Diverse infrastructures such as water supply, transportation, fuel and power stations are coupled together and depend on each other for functioning, see Fig. 1. Owing to this coupling, interdependent networks are extremely sensitive to random failures, and in particular to targeted attacks, such that a failure of a small fraction of nodes in one network can trigger an iterative cascade of failures in several interdependent networks.[12][13] Electrical blackouts frequently result from a cascade of failures between interdependent networks, and the problem has been dramatically exemplified by the several large-scale blackouts that have occurred in recent years. Blackouts are a striking demonstration of the important role played by the dependencies between networks. For example, the 2003 Italy blackout resulted in a widespread failure of the railway network, health care systems, and financial services and, in addition, severely influenced the telecommunication networks. The partial failure of the communication system in turn further impaired the electrical grid management system, thus producing a positive feedback on the power grid.[14] This example emphasizes how interdependence can significantly magnify the damage in an interacting network system. A framework based on percolation theory for studying cascading failures between coupled networks was developed recently.[15] Such cascades can lead to an abrupt collapse, in contrast to percolation in a single network, where the breakdown of the network is continuous; see Fig. 2. Cascading failures in spatially embedded systems have been shown to lead to extreme vulnerability.[16] For the dynamic process of cascading failures, see ref.[17] A model for repairing failures in order to avoid cascading failures was developed by Di Muro et al.[18]

Furthermore, it was shown that such interdependent systems, when embedded in space, are extremely vulnerable to localized attacks or failures. Above a critical radius of damage, the failure may spread to the entire system.[19]

The spreading of cascading failures from localized attacks on spatial multiplex networks with a community structure has been studied by Vaknin et al.[20] Universal features of cascading failures in interdependent networks have been reported by Duan et al.[21] A method for mitigating cascading failures in networks using localized information has been developed by Smolyak et al.[22]

For a comprehensive review on cascading failures in complex networks see Valdez et al.[23]

Model for overload cascading failures

A model for cascading failures due to overload propagation is the Motter–Lai model.[24] The spatio-temporal propagation of such failures has been studied by Jichang Zhao et al.[25]
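As the Motter–Lai model is commonly described, a node's load is the number of shortest paths passing through it (its betweenness). Its capacity is (1 + α) times its initial load for a tolerance parameter α ≥ 0. After an initial removal, every node whose recomputed load exceeds its capacity fails, and this repeats until no node is overloaded. The sketch below follows that description on an unweighted, undirected graph, computing betweenness with Brandes' algorithm. Two choices are simplifications made here: all overloaded nodes are removed simultaneously in each round, and a six-node ring serves as the test graph.

```python
from collections import deque


def betweenness(adjacency, alive):
    """Shortest-path betweenness (Brandes' algorithm) on the subgraph of `alive` nodes.

    Each unordered node pair is counted twice (once from each end), which only
    rescales the loads and does not affect the overload comparison below.
    """
    score = dict.fromkeys(alive, 0.0)
    for source in alive:
        order = []                       # nodes in non-decreasing distance from source
        preds = {v: [] for v in alive}   # predecessors on shortest paths
        sigma = dict.fromkeys(alive, 0)  # number of shortest paths from source
        dist = dict.fromkeys(alive, -1)
        sigma[source], dist[source] = 1, 0
        queue = deque([source])
        while queue:
            v = queue.popleft()
            order.append(v)
            for w in adjacency[v]:
                if w not in alive:
                    continue
                if dist[w] < 0:
                    dist[w] = dist[v] + 1
                    queue.append(w)
                if dist[w] == dist[v] + 1:
                    sigma[w] += sigma[v]
                    preds[w].append(v)
        delta = dict.fromkeys(alive, 0.0)
        for w in reversed(order):        # accumulate dependencies back toward the source
            for v in preds[w]:
                delta[v] += sigma[v] / sigma[w] * (1.0 + delta[w])
            if w != source:
                score[w] += delta[w]
    return score


def motter_lai(adjacency, alpha, attacked):
    """Return the nodes still working after removing `attacked` and letting overloads cascade."""
    alive = set(adjacency)
    capacity = {v: (1.0 + alpha) * load for v, load in betweenness(adjacency, alive).items()}
    alive.discard(attacked)
    while True:
        load = betweenness(adjacency, alive)
        overloaded = {v for v in alive if load[v] > capacity[v] + 1e-9}  # tolerance for round-off
        if not overloaded:
            return alive
        alive -= overloaded  # all overloaded nodes fail in the same round


ring = {i: [(i - 1) % 6, (i + 1) % 6] for i in range(6)}
for alpha in (1.5, 0.75, 0.25):
    survivors = motter_lai(ring, alpha, attacked=0)
    print(f"alpha = {alpha}: {len(survivors)} of 6 nodes survive")
```

On this ring, removing one node turns the rest into a path whose middle nodes suddenly carry more shortest paths. With α = 1.5 nothing else fails, with α = 0.75 the central node fails, and with α = 0.25 only the two end nodes survive.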

See also

- Blackouts

- Brittle system

- Butterfly effect

- Byzantine failure

- Cascading rollback

- Chain reaction

- Chaos theory

- Cache stampede

- Congestion collapse

- Domino effect

- For Want of a Nail (proverb)

- Network science

- Network theory

- Interdependent networks

- Kessler Syndrome

- Percolation theory

- Progressive collapse

- Virtuous circle and vicious circle

- Wicked problem

https://en.wikipedia.org/wiki/Cascading_failure


Cogeneration or combined heat and power (CHP) is the use of a heat engine[1] or power station to generate electricity and useful heat at the same time.

https://en.wikipedia.org/wiki/Cogeneration

Geothermal Power

Geothermal power is electrical power generated from geothermal energy. Technologies in use include dry steam power stations, flash steam power stations and binary cycle power stations. Geothermal electricity generation is currently used in 26 countries,[1][2] while geothermal heating is in use in 70 countries.[3]

As of 2019, worldwide geothermal power capacity amounts to 15.4 gigawatts (GW), of which 23.86 percent or 3.68 GW are installed in the United States.[4] International markets grew at an average annual rate of 5 percent over the three years to 2015, and global geothermal power capacity is expected to reach 14.5–17.6 GW by 2020.[5] Based on current geologic knowledge and technology that it publicly discloses, the Geothermal Energy Association (GEA) estimates that only 6.9 percent of total global potential has been tapped so far, while the IPCC reported geothermal power potential to be in the range of 35 GW to 2 TW.[3] Countries generating more than 15 percent of their electricity from geothermal sources include El Salvador, Kenya, the Philippines, Iceland, New Zealand,[6] and Costa Rica.

Geothermal power is considered to be a sustainable, renewable source of energy because the heat extraction is small compared with the Earth's heat content.[7] The greenhouse gas emissions of geothermal electric stations are on average 45 grams of carbon dioxide per kilowatt-hour of electricity, or less than 5 percent of that of conventional coal-fired plants.[8]

As a source of renewable energy for both power and heating, geothermal has the potential to meet 3-5% of global demand by 2050. With economic incentives, it is estimated that by 2100 it will be possible to meet 10% of global demand.[6]

The Earth's heat content is about 1×10¹⁹ TJ (2.8×10¹⁵ TWh).[3] This heat naturally flows to the surface by conduction at a rate of 44.2 TW[20] and is replenished by radioactive decay at a rate of 30 TW.[7] These power rates are more than double humanity's current energy consumption from primary sources, but most of this power is too diffuse (approximately 0.1 W/m² on average) to be recoverable. The Earth's crust effectively acts as a thick insulating blanket which must be pierced by fluid conduits (of magma, water or other) to release the heat underneath.
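The unit conversion and the "approximately 0.1 W/m²" figure above can be checked with a few lines of arithmetic. The only number not taken from the text is the Earth's mean radius (about 6,371 km), assumed here to obtain the surface area.

```python
import math

TJ_PER_TWH = 3600.0       # 1 TWh = 3.6e15 J = 3600 TJ
EARTH_RADIUS_M = 6.371e6  # mean Earth radius (assumed, not from the text)

heat_content_tj = 1e19
heat_content_twh = heat_content_tj / TJ_PER_TWH  # about 2.8e15 TWh

surface_area_m2 = 4 * math.pi * EARTH_RADIUS_M ** 2        # about 5.1e14 m^2
conductive_flow_w = 44.2e12                                # 44.2 TW
mean_flux_w_per_m2 = conductive_flow_w / surface_area_m2   # about 0.087 W/m^2

print(f"heat content: {heat_content_twh:.2e} TWh")
print(f"surface area: {surface_area_m2:.2e} m^2, mean flux: {mean_flux_w_per_m2:.3f} W/m^2")
```

Spread over the whole surface, the 44.2 TW of conductive heat flow amounts to a little under 0.1 W per square metre, which is why it is too diffuse to recover directly.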

Electricity generation requires high-temperature resources that can only come from deep underground. The heat must be carried to the surface by fluid circulation, either through magma conduits, hot springs, hydrothermal circulation, oil wells, drilled water wells, or a combination of these. This circulation sometimes exists naturally where the crust is thin: magma conduits bring heat close to the surface, and hot springs bring the heat to the surface. If no hot spring is available, a well must be drilled into a hot aquifer. Away from tectonic plate boundaries the geothermal gradient is 25–30 °C per kilometre (km) of depth in most of the world, so wells would have to be several kilometres deep to permit electricity generation.[3] The quantity and quality of recoverable resources improves with drilling depth and proximity to tectonic plate boundaries.
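Why "several kilometres" follows from a 25–30 °C/km gradient can be shown by simple division. The sketch assumes a uniform gradient and a mean surface temperature of 15 °C, both simplifications. The 150 °C and 180 °C targets are the dry steam and flash steam temperatures given later in this article. `depth_for_temperature` is a hypothetical helper.

```python
def depth_for_temperature(target_c, gradient_c_per_km, surface_c=15.0):
    """Depth in km at which a constant gradient reaches target_c (hypothetical helper)."""
    return (target_c - surface_c) / gradient_c_per_km


for target in (150.0, 180.0):  # dry steam and flash steam temperatures quoted later
    shallow = depth_for_temperature(target, 30.0)
    deep = depth_for_temperature(target, 25.0)
    print(f"{target:.0f} deg C: {shallow:.1f}-{deep:.1f} km")
```

Under these assumptions, both targets land at roughly 4.5 to 6.6 km, deeper than the 3 km that geothermal wells rarely exceed today. This is consistent with the text's point that proximity to plate boundaries, where gradients are steeper, improves the resource.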

In ground that is hot but dry, or where water pressure is inadequate, injected fluid can stimulate production. Developers bore two holes into a candidate site, and fracture the rock between them with explosives or high-pressure water. Then they pump water or liquefied carbon dioxide down one borehole, and it comes up the other borehole as a gas.[15] This approach is called hot dry rock geothermal energy in Europe, or enhanced geothermal systems in North America. Much greater potential may be available from this approach than from conventional tapping of natural aquifers.[15]

Estimates of the electricity generating potential of geothermal energy vary from 35 to 2000 GW depending on the scale of investments.[3] This does not include non-electric heat recovered by co-generation, geothermal heat pumps and other direct use. A 2006 report by the Massachusetts Institute of Technology (MIT) that included the potential of enhanced geothermal systems estimated that investing US$1 billion in research and development over 15 years would allow the creation of 100 GW of electrical generating capacity by 2050 in the United States alone.[15] The MIT report estimated that over 200×10⁹ TJ (200 ZJ; 5.6×10⁷ TWh) would be extractable, with the potential to increase this to over 2,000 ZJ with technology improvements – sufficient to provide all the world's present energy needs for several millennia.[15]

At present, geothermal wells are rarely more than 3 km (1.9 mi) deep.[3] Upper estimates of geothermal resources assume wells as deep as 10 km (6.2 mi). Drilling near this depth is now possible in the petroleum industry, although it is an expensive process. The deepest research well in the world, the Kola Superdeep Borehole (KSDB-3), is 12.261 km (7.619 mi) deep.[21] This record has recently been imitated by commercial oil wells, such as Exxon's Z-12 well in the Chayvo field, Sakhalin.[22] Wells drilled to depths greater than 4 km (2.5 mi) generally incur drilling costs in the tens of millions of dollars.[23] The technological challenges are to drill wide bores at low cost and to break larger volumes of rock.

Geothermal power is considered to be sustainable because the heat extraction is small compared to the Earth's heat content, but extraction must still be monitored to avoid local depletion.[7] Although geothermal sites are capable of providing heat for many decades, individual wells may cool down or run out of water. The three oldest sites, at Larderello, Wairakei, and the Geysers have all reduced production from their peaks. It is not clear whether these stations extracted energy faster than it was replenished from greater depths, or whether the aquifers supplying them are being depleted. If production is reduced, and water is reinjected, these wells could theoretically recover their full potential. Such mitigation strategies have already been implemented at some sites. The long-term sustainability of geothermal energy has been demonstrated at the Larderello field in Italy since 1913, at the Wairakei field in New Zealand since 1958,[24] and at the Geysers field in California since 1960.[25]

Power station types

Geothermal power stations are similar to other steam turbine thermal power stations in that heat from a fuel source (in geothermal's case, the Earth's core) is used to heat water or another working fluid. The working fluid is then used to turn a turbine of a generator, thereby producing electricity. The fluid is then cooled and returned to the heat source.

Dry steam power stations

Dry steam stations are the simplest and oldest design. This type of power station is not found very often, because it requires a resource that produces dry steam, but it is the most efficient, with the simplest facilities.[26] At these sites, there may be liquid water present in the reservoir, but no water is produced to the surface, only steam.[26] Dry steam power directly uses geothermal steam of 150 °C or greater to turn turbines.[3] As the turbine rotates, it powers a generator, which produces electricity and feeds it into the grid.[27] The steam is then exhausted to a condenser, where it turns back into liquid water, which is then cooled.[28] After the water is cooled, it flows down a pipe that conducts the condensate back into deep wells, where it can be reheated and produced again. At The Geysers in California, after the first 30 years of power production, the steam supply had depleted and generation was substantially reduced. To restore some of the former capacity, supplemental water injection was developed during the 1990s and 2000s, including utilization of effluent from nearby municipal sewage treatment facilities.[29]

Flash steam power stations

Flash steam stations pull deep, high-pressure hot water into lower-pressure tanks and use the resulting flashed steam to drive turbines. They use geothermal reservoirs of water with temperatures of at least 180 °C (about 360 °F), usually more. This is the most common type of station in operation today. The hot water flows up through wells in the ground under its own pressure. As it flows upward, the pressure decreases and some of the hot water is transformed into steam. The steam is then separated from the water and used to power a turbine/generator. Any leftover water and condensed steam may be injected back into the reservoir, making this a potentially sustainable resource.[30][31]

Binary cycle power stations

Binary cycle power stations are the most recent development, and can accept fluid temperatures as low as 57 °C.[14] The moderately hot geothermal water is passed by a secondary fluid with a much lower boiling point than water. This causes the secondary fluid to flash vaporize, which then drives the turbines. This is the most common type of geothermal electricity station being constructed today.[32] Both Organic Rankine and Kalina cycles are used. The thermal efficiency of this type of station is typically about 10–13%.[citation needed]

Fluids drawn from the deep earth carry a mixture of gases, notably carbon dioxide (CO₂), hydrogen sulfide (H₂S), methane (CH₄), ammonia (NH₃), and radon (Rn). If released, these pollutants contribute to global warming, acid rain, radiation, and noxious smells.[failed verification]

https://en.wikipedia.org/wiki/Geothermal_power

A combined cycle power plant is an assembly of heat engines that work in tandem from the same source of heat, converting it into mechanical energy. On land, when used to make electricity the most common type is called a combined cycle gas turbine (CCGT) plant. The same principle is also used for marine propulsion, where it is called a combined gas and steam (COGAS) plant. Combining two or more thermodynamic cycles improves overall efficiency, which reduces fuel costs.

The principle is that after completing its cycle in the first engine, the working fluid (the exhaust) is still hot enough that a second subsequent heat engine can extract energy from the heat in the exhaust. Usually the heat passes through a heat exchanger so that the two engines can use different working fluids.

By generating power from multiple streams of work, the overall efficiency of the system can be raised to around 50–60%: from an overall efficiency of, say, 34% for a simple cycle to as much as 64% for a combined cycle.[1] This is more than 84% of the theoretical efficiency of a Carnot cycle. Heat engines can only use part of the energy from their fuel (usually less than 50%), so in a non-combined cycle heat engine, the remaining heat (i.e., hot exhaust gas) from combustion is wasted.
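The gain from stacking cycles follows from a simple energy balance. If the first engine converts a fraction η₁ of the fuel heat and the second converts a fraction η₂ of the heat the first rejects, the overall efficiency is η₁ + η₂(1 − η₁). This idealisation assumes every joule rejected by the first engine reaches the second; real plants lose some heat in the exhaust stack and heat exchanger. The function names are illustrative.

```python
def combined_efficiency(eta_top, eta_bottom):
    """Overall efficiency if the bottoming cycle receives all heat the topping cycle rejects."""
    return eta_top + eta_bottom * (1.0 - eta_top)


def required_bottoming_efficiency(eta_top, eta_target):
    """Bottoming-cycle efficiency needed to reach eta_target overall (same idealisation)."""
    return (eta_target - eta_top) / (1.0 - eta_top)


eta_st = required_bottoming_efficiency(0.34, 0.64)
print(f"bottoming cycle must convert {eta_st:.1%} of the exhaust heat")
print(f"check: {combined_efficiency(0.34, eta_st):.2f}")
```

Under this idealisation, going from 34% to 64% requires the second cycle to recover a little under half (about 45.5%) of the first engine's rejected heat.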

https://en.wikipedia.org/wiki/Combined_cycle_power_plant

Instantaneous power in an electric circuit is the rate of flow of energy past a given point of the circuit. In alternating current circuits, energy storage elements such as inductors and capacitors may result in periodic reversals of the direction of energy flow.

The portion of instantaneous power that, averaged over a complete cycle of the AC waveform, results in net transfer of energy in one direction is known as instantaneous active power, and its time average is known as active power or real power.[1]:3 The portion of instantaneous power that results in no net transfer of energy but instead oscillates between the source and load in each cycle due to stored energy, is known as instantaneous reactive power, and its amplitude is the absolute value of reactive power.[2][1]:4

Active, reactive, apparent, and complex power in sinusoidal steady-state

In a simple alternating current (AC) circuit consisting of a source and a linear time-invariant load, both the current and voltage are sinusoidal at the same frequency.[3] If the load is purely resistive, the two quantities reverse their polarity at the same time. At every instant the product of voltage and current is positive or zero, the result being that the direction of energy flow does not reverse. In this case, only active power is transferred.

If the load is purely reactive, then the voltage and current are 90 degrees out of phase. For two quarters of each cycle, the product of voltage and current is positive, but for the other two quarters, the product is negative, indicating that on average, exactly as much energy flows into the load as flows back out. There is no net energy flow over each half cycle. In this case, only reactive power flows: there is no net transfer of energy to the load; however, electrical power does flow along the wires and returns by flowing in reverse along the same wires. The current required for this reactive power flow dissipates energy in the line resistance, even if the ideal load device consumes no energy itself. Practical loads have resistance as well as inductance or capacitance, so both active and reactive powers will flow to normal loads.
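The two limiting cases can be checked numerically by sampling one cycle of the instantaneous power $p(t) = v(t)\,i(t)$. This is a sketch with assumed values (325 V peak, roughly 230 V RMS, and 10 A peak). For the resistive case the average equals $V_{rms} I_{rms}$ and the power never goes negative. For the purely reactive case the power swings negative for part of each cycle and averages to zero.

```python
import math


def power_over_cycle(v_peak: float, i_peak: float, lag_deg: float,
                     samples: int = 10_000) -> tuple[float, float]:
    """Return (average, minimum) of p(t) = v(t) * i(t) over one full cycle.

    The current lags the voltage by lag_deg degrees: 0 models a purely
    resistive load, 90 a purely inductive one.
    """
    lag = math.radians(lag_deg)
    powers = []
    for k in range(samples):
        theta = 2 * math.pi * k / samples      # omega * t, sweeping exactly one period
        v = v_peak * math.sin(theta)
        i = i_peak * math.sin(theta - lag)
        powers.append(v * i)                   # instantaneous power at this instant
    return sum(powers) / samples, min(powers)


# Assumed example: 325 V peak (about 230 V RMS) and 10 A peak.
for lag in (0.0, 90.0):
    avg, lowest = power_over_cycle(325.0, 10.0, lag)
    print(f"lag {lag:4.0f} deg: average {avg:9.3f} W, minimum {lowest:9.3f} W")
```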

Apparent power is the product of the RMS values of voltage and current. Apparent power is taken into account when designing and operating power systems, because although the current associated with reactive power does no work at the load, it still must be supplied by the power source. Conductors, transformers and generators must be sized to carry the total current, not just the current that does useful work. Failure to provide for the supply of sufficient reactive power in electrical grids can lead to lowered voltage levels and, under certain operating conditions, to the complete collapse of the network or blackout. Another consequence is that adding the apparent powers of two loads will not accurately give the total apparent power unless they have the same phase difference between current and voltage (the same power factor).
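A small numeric case shows why apparent powers only add when the power factors match. The loads below are hypothetical, written as complex power $S = P + jQ$: one inductive and one capacitive, each drawing 1000 VA, and then two loads sharing the same 0.8 lagging power factor.

```python
# Two hypothetical loads with the same apparent power (1000 VA) but opposite
# reactive power, written as complex power S = P + jQ.
s_lagging = complex(800, 600)    # 800 W, +600 var (inductive)
s_leading = complex(800, -600)   # 800 W, -600 var (capacitive)

sum_of_apparent = abs(s_lagging) + abs(s_leading)   # adding magnitudes: 2000 VA
apparent_of_sum = abs(s_lagging + s_leading)        # magnitude of the total: 1600 VA
print(sum_of_apparent, apparent_of_sum)             # 2000.0 1600.0

# With the same power factor (0.8 lagging) the magnitudes do add.
s_small = complex(400, 300)
print(abs(s_lagging) + abs(s_small), abs(s_lagging + s_small))  # 1500.0 1500.0
```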

Conventionally, capacitors are treated as if they generate reactive power, and inductors are treated as if they consume it. If a capacitor and an inductor are placed in parallel, then the currents flowing through the capacitor and the inductor tend to cancel rather than add. This is the fundamental mechanism for controlling the power factor in electric power transmission; capacitors (or inductors) are inserted in a circuit to partially compensate for reactive power 'consumed' ('generated') by the load. Purely capacitive circuits supply reactive power with the current waveform leading the voltage waveform by 90 degrees, while purely inductive circuits absorb reactive power with the current waveform lagging the voltage waveform by 90 degrees. The result of this is that capacitive and inductive circuit elements tend to cancel each other out.[4]
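The cancellation of capacitor and inductor currents in parallel can be sketched with phasors. The component values (230 V RMS, 50 Hz, 0.1 H, 100 µF) are hypothetical and the elements are assumed ideal. The reactive power of each branch is computed as $\operatorname{Im}(V I^*)$, so the inductor shows positive Q ("consumed") and the capacitor negative Q ("generated"), matching the convention above.

```python
import cmath
import math

# Hypothetical values: 230 V RMS at 50 Hz across a 0.1 H inductor and a
# 100 uF capacitor connected in parallel (ideal, lossless elements).
v = complex(230, 0)                 # voltage phasor, taken as the 0-degree reference
omega = 2 * math.pi * 50.0
inductance = 0.1                    # H
capacitance = 100e-6                # F

i_inductor = v / (1j * omega * inductance)    # lags the voltage by 90 degrees
i_capacitor = v * (1j * omega * capacitance)  # leads the voltage by 90 degrees
i_source = i_inductor + i_capacitor           # current the source must supply

for name, i in (("inductor", i_inductor), ("capacitor", i_capacitor), ("source", i_source)):
    q = (v * i.conjugate()).imag              # reactive power, var (+ absorbed, - supplied)
    angle = math.degrees(cmath.phase(i))
    print(f"{name:9s} |I| = {abs(i):6.3f} A  angle = {angle:7.1f} deg  Q = {q:8.1f} var")
```

The branch currents are each above 7 A, yet the source supplies well under 1 A because the two nearly cancel.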

Engineers use the following terms to describe energy flow in a system (and assign each of them a different unit to differentiate between them):

- Active power,[5] P, or real power:[6] watt (W);

- Reactive power, Q: volt-ampere reactive (var);

- Complex power, S: volt-ampere (VA);

- Apparent power, |S|: the magnitude of complex power S: volt-ampere (VA);

- Phase of voltage relative to current, φ: the angle of difference (in degrees) between current and voltage; $\varphi = \arg(V) - \arg(I)$. Current lagging voltage (quadrant I vector), current leading voltage (quadrant IV vector).

These are all denoted in the adjacent diagram (called a Power Triangle).

In the diagram, P is the active power, Q is the reactive power (in this case positive), S is the complex power and the length of S is the apparent power. Reactive power does not do any work, so it is represented as the imaginary axis of the vector diagram. Active power does do work, so it is the real axis.

The unit for power is the watt (symbol: W). Apparent power is often expressed in volt-amperes (VA) since it is the product of RMS voltage and RMS current. The unit for reactive power is var, which stands for volt-ampere reactive. Since reactive power transfers no net energy to the load, it is sometimes called "wattless" power. It does, however, serve an important function in electrical grids and its lack has been cited as a significant factor in the Northeast Blackout of 2003.[7] Understanding the relationship among these three quantities lies at the heart of understanding power engineering. The mathematical relationship among them can be represented by vectors or expressed using complex numbers, S = P + j Q (where j is the imaginary unit).

Calculations and equations in sinusoidal steady-state

The formula for complex power (units: VA) in phasor form is:

- $S = VI^*$,

where V denotes voltage in phasor form, with the amplitude as RMS, and I denotes current in phasor form, with the amplitude as RMS. Also by convention, the complex conjugate of I is used, which is denoted $I^*$ (or $\overline{I}$), rather than I itself. This is done because using the product VI to define S would give a quantity that depends on the reference angle chosen for V or I, whereas defining S as VI* gives a quantity that does not depend on the reference angle and allows S to be related to P and Q.[8]
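The role of the conjugate can be checked by rotating both phasors by the same reference angle, which changes nothing physical. In this sketch the load (230 V RMS, 10 A RMS lagging by 30°) and the 40° shift are hypothetical, and `phasor` is a helper defined here, not a library function. The product $VI$ rotates with the reference, while $VI^*$ stays fixed and its real and imaginary parts give P and Q.

```python
import cmath
import math


def phasor(rms: float, angle_deg: float) -> complex:
    """Hypothetical helper: RMS phasor with the given angle in degrees."""
    return cmath.rect(rms, math.radians(angle_deg))


# Hypothetical load: 230 V RMS, with 10 A RMS lagging the voltage by 30 degrees.
V = phasor(230, 0)
I = phasor(10, -30)
S = V * I.conjugate()

# Shift the common reference angle by 40 degrees; the circuit is unchanged.
shift = phasor(1, 40)
V2, I2 = V * shift, I * shift

print("V*I      :", V * I, "->", V2 * I2)               # rotates with the reference
print("V*conj(I):", S, "->", V2 * I2.conjugate())       # unchanged
print(f"P = {S.real:.1f} W, Q = {S.imag:.1f} var")      # Q > 0: current lagging
```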

Other forms of complex power (units in volt-amps, VA) are derived from Z, the load impedance (units in ohms, Ω).

- $S = |I|^2 Z = \dfrac{|V|^2}{Z^*}$.

Consequently, with reference to the power triangle, real power (units in watts, W) is derived as:

- $P = |S| \cos\varphi = |I|^2 R = \dfrac{|V|^2}{|Z|^2} R$.

For a purely resistive load, real power can be simplified to:

- $P = \dfrac{|V|^2}{R}$.

R denotes resistance (units in ohms, Ω) of the load.

Reactive power (units in volt-amperes reactive, var) is derived as:

- $Q = |S| \sin\varphi = |I|^2 X = \dfrac{|V|^2}{|Z|^2} X$.

For a purely reactive load, reactive power can be simplified to:

- $Q = \dfrac{|V|^2}{X}$,

where X denotes reactance (units in ohms, Ω) of the load.

Combining, the complex power (units in volt-amps, VA) is back-derived as

- $S = P + jQ$,

and the apparent power (units in volt-amps, VA) as

- $|S| = \sqrt{P^2 + Q^2}$.

These are simplified diagrammatically by the power triangle.
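The impedance-based forms can be cross-checked against $S = VI^*$ for one load. This sketch assumes a hypothetical series load $Z = R + jX$ with $R = 8\ \Omega$ and $X = 6\ \Omega$ (inductive) on a 230 V RMS reference phasor, so $|Z| = 10\ \Omega$ and $|I| = 23$ A.

```python
import math

# Hypothetical values: 230 V RMS reference phasor and a series load Z = R + jX.
V = complex(230, 0)
R, X = 8.0, 6.0              # ohms; X > 0 means inductive
Z = complex(R, X)

I = V / Z                    # Ohm's law in phasor form
S = V * I.conjugate()        # S = V I*

# The alternative forms, evaluated independently.
S_from_current = abs(I) ** 2 * Z
S_from_voltage = abs(V) ** 2 / Z.conjugate()

P = abs(I) ** 2 * R          # real power, W
Q = abs(I) ** 2 * X          # reactive power, var
apparent = math.hypot(P, Q)  # |S| = sqrt(P^2 + Q^2), VA

print(S, S_from_current, S_from_voltage)
print(f"P = {P:.1f} W, Q = {Q:.1f} var, |S| = {apparent:.1f} VA")
```

Because $|I| = 23$ A, the expected values are P = 529 × 8 = 4232 W, Q = 529 × 6 = 3174 var and |S| = 230 × 23 = 5290 VA.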

Power factor

The ratio of active power to apparent power in a circuit is called the power factor. For two systems transmitting the same amount of active power, the system with the lower power factor will have higher circulating currents due to energy that returns to the source from energy storage in the load. These higher currents produce higher losses and reduce overall transmission efficiency. A lower power factor circuit will have a higher apparent power and higher losses for the same amount of active power. The power factor is 1.0 when the voltage and current are in phase. It is zero when the current leads or lags the voltage by 90 degrees. When the voltage and current are 180 degrees out of phase, the power factor is negative one, and the load is feeding energy into the source (an example would be a home with solar cells on the roof that feed power into the power grid when the sun is shining). Power factors are usually stated as "leading" or "lagging" to show the sign of the phase angle of current with respect to voltage. Voltage is designated as the base to which current angle is compared, meaning that current is thought of as either "leading" or "lagging" voltage. Where the waveforms are purely sinusoidal, the power factor is the cosine of the phase angle (φ) between the current and voltage sinusoidal waveforms. Equipment data sheets and nameplates will often abbreviate power factor as "cos φ" for this reason.
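The leading/lagging labels can be derived directly from complex power. This is a sketch that assumes the sign convention given earlier, φ = arg(V) − arg(I), so positive Q means the current lags. The function name `power_factor` is hypothetical.

```python
def power_factor(s: complex) -> tuple[float, str]:
    """Hypothetical helper: return (P / |S|, label) for complex power s = P + jQ.

    Sign convention assumed from the text: phi = arg(V) - arg(I), so Q > 0
    means current lags voltage ("lagging") and Q < 0 means it leads ("leading").
    """
    apparent = abs(s)
    if apparent == 0:
        raise ValueError("power factor is undefined when |S| = 0")
    pf = s.real / apparent
    if s.imag > 0:
        label = "lagging"
    elif s.imag < 0:
        label = "leading"
    else:
        label = "no reactive power"   # pf is exactly +1, or -1 for reverse flow
    return pf, label


print(power_factor(complex(800, 600)))    # (0.8, 'lagging')
print(power_factor(complex(800, -600)))   # (0.8, 'leading')
print(power_factor(complex(-1000, 0)))    # (-1.0, 'no reactive power')
```

The last call models the rooftop-solar case from the text: energy flowing back to the source gives a negative power factor.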

Example: The active power is 700 W and the phase angle between voltage and current is 45.6°. The power factor is cos(45.6°) = 0.700. The apparent power is then: 700 W / cos(45.6°) = 1000 VA. The concept of power dissipation in an AC circuit is explained and illustrated with this example.
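The arithmetic of the example can be reproduced in a few lines. The reactive power figure is not stated in the text. It is derived here from the same triangle as $Q = |S| \sin\varphi$.

```python
import math

phi_deg = 45.6          # phase angle between voltage and current, from the example
P = 700.0               # active power, W

pf = math.cos(math.radians(phi_deg))
S = P / pf                                   # apparent power, VA
Q = S * math.sin(math.radians(phi_deg))      # reactive power, var (derived, not in the text)

print(f"pf = {pf:.3f}, |S| = {S:.0f} VA, Q = {Q:.0f} var")  # pf = 0.700, |S| = 1000 VA, Q = 715 var
```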

For instance, a power factor of 0.68 means that only 68 percent of the total current supplied (in magnitude) is actually doing work; the remaining current does no work at the load.

Reactive power

In a direct current circuit, the power flowing to the load is proportional to the product of the current through the load and the potential drop across the load. Energy flows in one direction from the source to the load. In AC power, the voltage and current both vary approximately sinusoidally. When there is inductance or capacitance in the circuit, the voltage and current waveforms do not line up perfectly. The power flow has two components – one component flows from source to load and can perform work at the load; the other portion, known as "reactive power", is due to the delay between voltage and current, known as phase angle, and cannot do useful work at the load. It can be thought of as current that is arriving at the wrong time (too late or too early). To distinguish reactive power from active power, it is measured in units of "volt-amperes reactive", or var. These units can simplify to watts but are left as var to denote that they represent no actual work output.

Energy stored in capacitive or inductive elements of the network gives rise to reactive power flow. Reactive power flow strongly influences the voltage levels across the network. Voltage levels and reactive power flow must be carefully controlled to allow a power system to be operated within acceptable limits. A technique known as reactive compensation is used to reduce apparent power flow to a load by reducing reactive power supplied from transmission lines and providing it locally. For example, to compensate an inductive load, a shunt capacitor is installed close to the load itself. This allows all reactive power needed by the load to be supplied by the capacitor and not have to be transferred over the transmission lines. This practice saves energy because it reduces the amount of energy that is required to be produced by the utility to do the same amount of work. Additionally, it allows for more efficient transmission line designs using smaller conductors or fewer bundled conductors and optimizing the design of transmission towers.
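The capacitor needed for local compensation can be estimated from the power triangle. This sketch assumes a single-phase load whose active power stays constant, an ideal shunt capacitor, and hypothetical figures (10 kW at 0.70 lagging on 230 V, 50 Hz, corrected to 0.95). The capacitor must supply $Q_C = P(\tan\varphi_{old} - \tan\varphi_{new})$, and a capacitor at RMS voltage V supplies $Q_C = \omega C V^2$. The helper name is hypothetical.

```python
import math


def shunt_capacitor_for_pf(p_watts, pf_old, pf_new, v_rms, freq_hz):
    """Hypothetical helper: size a single-phase shunt capacitor for a lagging load.

    Assumes active power P is unchanged by the correction.
    Returns (Q_c in var, C in farads).
    """
    q_c = p_watts * (math.tan(math.acos(pf_old)) - math.tan(math.acos(pf_new)))
    c = q_c / (2 * math.pi * freq_hz * v_rms ** 2)    # from Q_c = omega * C * V**2
    return q_c, c


# Hypothetical load: 10 kW at 0.70 lagging on a 230 V, 50 Hz supply, corrected to 0.95.
P, V, f_hz = 10_000.0, 230.0, 50.0
q_c, c = shunt_capacitor_for_pf(P, 0.70, 0.95, V, f_hz)

q_old = P * math.tan(math.acos(0.70))   # reactive power drawn from the line before correction
q_new = q_old - q_c                      # reactive power still drawn from the line afterwards
for label, q in (("before", q_old), ("after", q_new)):
    s = math.hypot(P, q)
    print(f"{label}: |S| = {s:7.0f} VA, line current = {s / V:5.1f} A, pf = {P / s:.2f}")
print(f"capacitor: Q_c = {q_c:.0f} var, C = {c * 1e6:.0f} uF")
```

The drop in line current for the same 10 kW is the saving the paragraph describes.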

Capacitive vs. inductive loads

Stored energy in the magnetic or electric field of a load device, such as a motor or capacitor, causes an offset between the current and the voltage waveforms. A capacitor is an AC device that stores energy in the form of an electric field. As current is driven through the capacitor, charge build-up causes an opposing voltage to develop across the capacitor. This voltage increases until some maximum dictated by the capacitor structure. In an AC network, the voltage across a capacitor is constantly changing. The capacitor opposes this change, causing the current to lead the voltage in phase. Capacitors are said to "source" reactive power, and thus to cause a leading power factor.

Induction machines are some of the most common types of loads in the electric power system today. These machines use inductors, or large coils of wire, to store energy in the form of a magnetic field. When a voltage is initially placed across the coil, the inductor strongly resists the change in current and magnetic field, which causes a time delay for the current to reach its maximum value. This causes the current to lag behind the voltage in phase. Inductors are said to "sink" reactive power, and thus to cause a lagging power factor. Induction generators can source or sink reactive power, and provide a measure of control to system operators over reactive power flow and thus voltage.[9] Because these devices have opposite effects on the phase angle between voltage and current, they can be used to "cancel out" each other's effects. This usually takes the form of capacitor banks being used to counteract the lagging power factor caused by induction motors.
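The opposite phase shifts can be seen by computing the current angle for an inductive and a capacitive load. The impedances are hypothetical: a motor-like load crudely modeled as $R + j\omega L$ and a capacitor bank with a small series resistance, both on an assumed 230 V, 50 Hz supply. A real induction motor has a more detailed equivalent circuit.

```python
import cmath
import math


def current_angle_deg(z: complex, v: complex = complex(230, 0)) -> float:
    """Angle of I = V / Z in degrees, with V as the 0-degree reference."""
    return math.degrees(cmath.phase(v / z))


omega = 2 * math.pi * 50.0                          # assumed 50 Hz supply
motor_like = complex(5.0, omega * 0.02)             # hypothetical R + j*omega*L
cap_bank = complex(0.5, -1 / (omega * 400e-6))      # hypothetical small R in series with C

print("inductive load :", round(current_angle_deg(motor_like), 1), "deg (current lags)")
print("capacitive load:", round(current_angle_deg(cap_bank), 1), "deg (current leads)")
```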

Reactive power control

Transmission-connected generators are generally required to support reactive power flow. For example, on the United Kingdom transmission system, generators are required by the Grid Code Requirements to supply their rated power between the limits of 0.85 power factor lagging and 0.90 power factor leading at the designated terminals. The system operator will perform switching actions to maintain a secure and economical voltage profile while maintaining a reactive power balance equation:

- $\text{Generator}_{\text{MVARs}} + \text{System}_{\text{gain}} + \text{Shunt}_{\text{capacitors}} = \text{MVAR}_{\text{Demand}} + \text{Reactive}_{\text{losses}} + \text{Shunt}_{\text{reactors}}$

The ‘system gain’, which arises from the capacitive nature of the transmission network itself, is an important source of reactive power in the above power balance equation. By making decisive switching actions in the early morning before the demand increases, the system gain can be maximized early on, helping to secure the system for the whole day. To balance the equation, some pre-fault reactive generator use will be required. Other sources of reactive power that will also be used include shunt capacitors, shunt reactors, static VAR compensators and voltage control circuits.
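The UK power factor limits quoted above can be turned into a reactive power band using $Q = P\tan(\arccos \mathrm{pf})$. The 500 MW unit is hypothetical. The sign convention used here, where positive Q means the generator exports reactive power at a lagging power factor, is an assumption of this sketch and not the Grid Code's own wording.

```python
import math


def reactive_range(p_mw: float, pf_lagging_limit: float, pf_leading_limit: float):
    """Hypothetical helper: (Q_min, Q_max) in Mvar at active power p_mw.

    Assumed generator convention: Q > 0 (lagging pf) means the machine exports
    reactive power; Q < 0 (leading pf) means it absorbs reactive power.
    """
    q_export = p_mw * math.tan(math.acos(pf_lagging_limit))
    q_absorb = -p_mw * math.tan(math.acos(pf_leading_limit))
    return q_absorb, q_export


# Hypothetical 500 MW unit checked against the 0.85 lagging / 0.90 leading limits.
low, high = reactive_range(500.0, 0.85, 0.90)
print(f"Q from {low:.0f} Mvar (absorbing) to {high:.0f} Mvar (exporting)")
```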

Unbalanced sinusoidal polyphase systems

While active power and reactive power are well defined in any system, the definition of apparent power for unbalanced polyphase systems is considered to be one of the most controversial topics in power engineering. Originally, apparent power arose merely as a figure of merit. Major delineations of the concept are attributed to Stanley's Phenomena of Retardation in the Induction Coil (1888) and Steinmetz's Theoretical Elements of Engineering (1915). However, with the development of three-phase power distribution, it became clear that the definition of apparent power and the power factor could not be applied to unbalanced polyphase systems. In 1920, a "Special Joint Committee of the AIEE and the National Electric Light Association" met to resolve the issue. They considered two definitions.

- $|S|_{\text{arith}} = |S_a| + |S_b| + |S_c|$,

that is, the arithmetic sum of the phase apparent powers; and

- $|S|_{\text{vec}} = |S_a + S_b + S_c|$,

that is, the magnitude of total three-phase complex power.
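For an unbalanced load the two candidate definitions give different numbers, and so do the power factors computed from them. The per-phase complex powers below are hypothetical. By the triangle inequality, the arithmetic sum is never smaller than the magnitude of the vector sum. The two are equal only when all phases share the same angle.

```python
# Hypothetical per-phase complex powers S = P + jQ, in kVA, for an unbalanced load.
phase_powers = [complex(10, 5), complex(8, 0), complex(6, -4)]

arithmetic = sum(abs(s) for s in phase_powers)   # |S_a| + |S_b| + |S_c|
vector = abs(sum(phase_powers))                  # |S_a + S_b + S_c|
total_p = sum(s.real for s in phase_powers)      # active power is unambiguous: 24 kW

print(f"arithmetic: {arithmetic:.2f} kVA, power factor {total_p / arithmetic:.3f}")
print(f"vector:     {vector:.2f} kVA, power factor {total_p / vector:.3f}")
```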